Dynamic Programming Ring for Point Target Detection

Abstract

1. Introduction

2. Model of Point Target

3. MFF-DPR-Based Point Target Detection

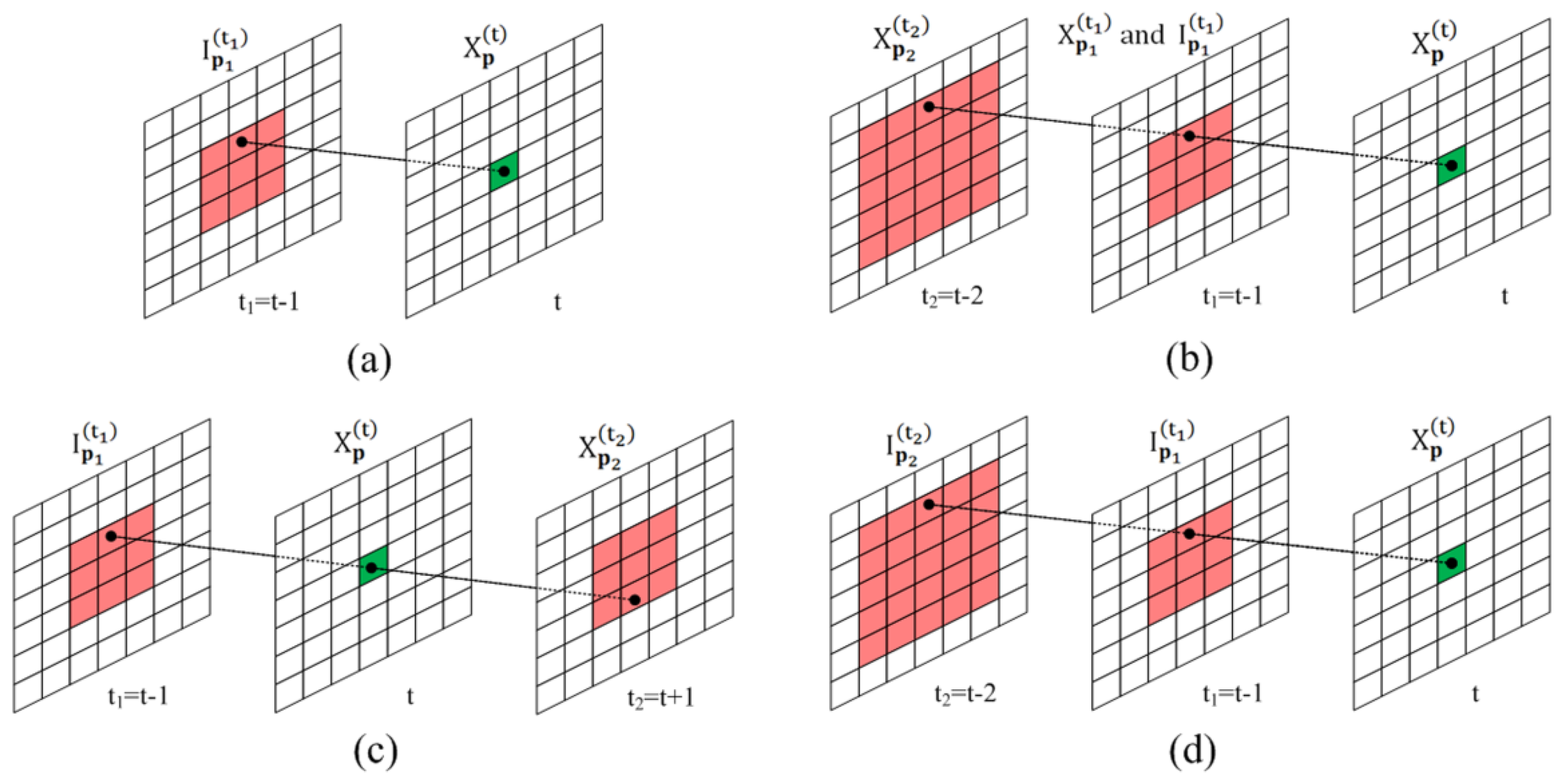

3.1. MFF-DP

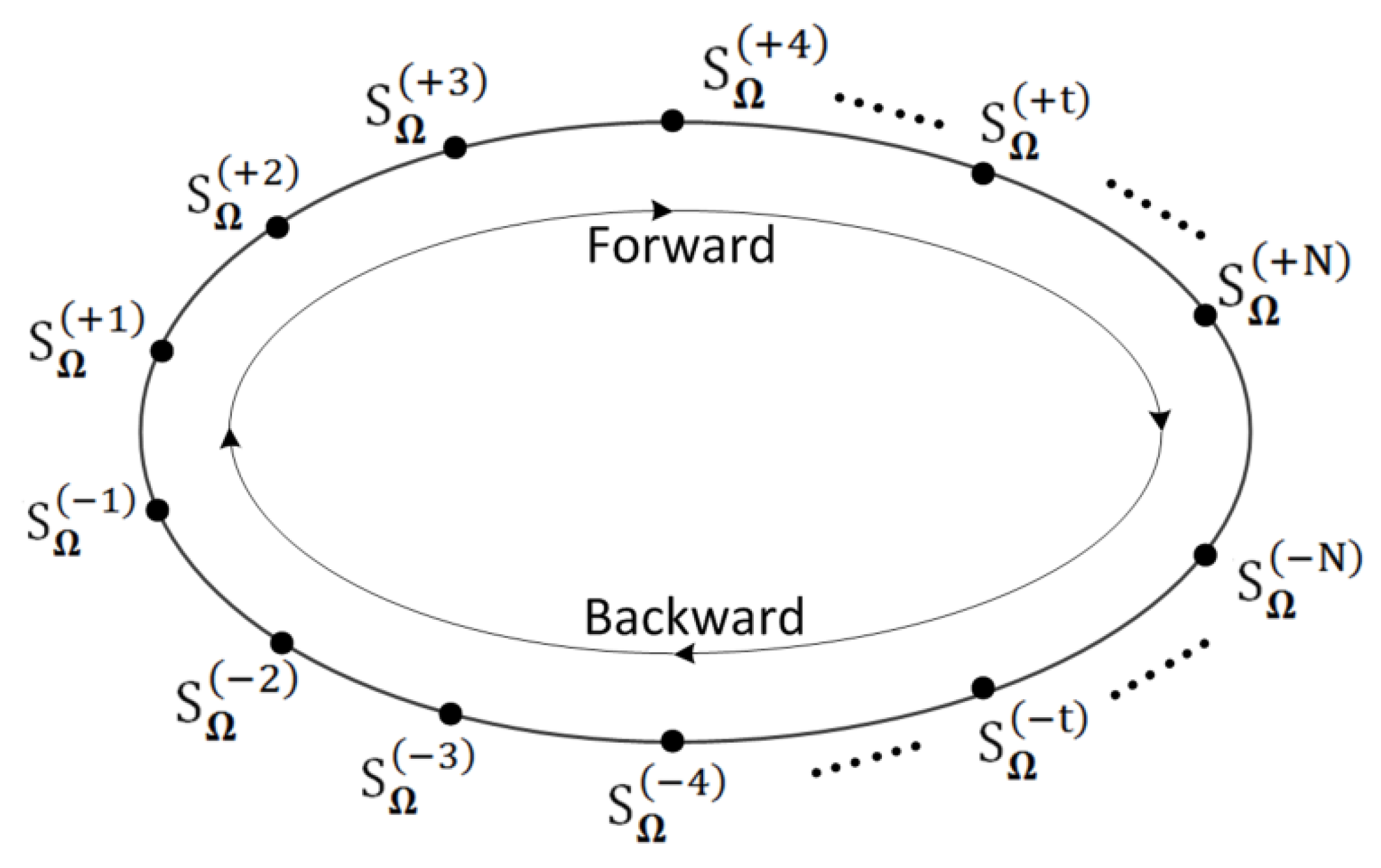

3.2. MFF-DPR

Algorithm 1 MFF-DPR

Input: the upper limit of the point target motion speed, the sequence length, the iteration counter, and the present time.

Output: The merit function of the MFF-DPR.

Step 1: Pixel state updating. Pixel states are updated frame by frame along the data ring, as shown in Figure 3. If … or …, no prior information on the velocity is available, so the state estimation is performed according to Equations (2), (3) and (5) as follows:

If …, let …, and initialize the first two states of the reverse MFF-DP as follows:

The iteration counter is increased by one. If …, the algorithm returns to Step 1 (pixel state updating); otherwise, it proceeds to Step 2 (MFF-DPR merit function derivation).

Step 2: MFF-DPR merit function derivation. The merit function of the MFF-DPR is obtained by averaging the merit functions of the sequential and reverse MFF-DPs at the same time instant, which can be expressed as follows:
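The averaging in Step 2 can be written out as below. The source equation did not survive extraction, so the notation here is assumed rather than the authors' own: $I_{\mathrm{s}}(x,y,k)$ and $I_{\mathrm{r}}(x,y,k)$ denote the merit functions of the sequential and reverse MFF-DPs at pixel $(x,y)$ and time $k$, and $I_{\mathrm{DPR}}(x,y,k)$ the resulting MFF-DPR merit function.

$$
I_{\mathrm{DPR}}(x,y,k) = \frac{1}{2}\left[ I_{\mathrm{s}}(x,y,k) + I_{\mathrm{r}}(x,y,k) \right]
$$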
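The ring idea behind Algorithm 1 can be illustrated with a deliberately simplified sketch. It is not the MFF-DPR itself: it assumes a basic first-order DP-TBD recursion (the merit at a pixel equals its intensity plus the best merit within a (2v+1) x (2v+1) neighbourhood of the previous frame) instead of the MFF state model, and it omits the special initialization of the first two reverse states. The functions `neighborhood_max`, `dp_pass`, and `dp_ring_merit` and the speed limit `v` (pixels per frame) are hypothetical names. The recursion runs forward and backward over the sequence, and the two merit maps are averaged frame by frame as in Step 2.

```python
import numpy as np


def neighborhood_max(merit: np.ndarray, v: int) -> np.ndarray:
    """Maximum of `merit` over the (2v+1) x (2v+1) window around every pixel."""
    h, w = merit.shape
    padded = np.pad(merit, v, mode="constant", constant_values=-np.inf)
    out = np.full((h, w), -np.inf)
    for dy in range(2 * v + 1):
        for dx in range(2 * v + 1):
            out = np.maximum(out, padded[dy:dy + h, dx:dx + w])
    return out


def dp_pass(frames: np.ndarray, v: int) -> np.ndarray:
    """One simplified DP-TBD pass: merit_k = frame_k + best merit_{k-1} within speed limit v."""
    merit = np.empty(frames.shape, dtype=float)
    merit[0] = frames[0]
    for k in range(1, len(frames)):
        merit[k] = frames[k] + neighborhood_max(merit[k - 1], v)
    return merit


def dp_ring_merit(frames: np.ndarray, v: int) -> np.ndarray:
    """Average the sequential and reverse merit functions frame by frame."""
    forward = dp_pass(frames, v)
    reverse = dp_pass(frames[::-1], v)[::-1]  # run backward, then restore frame order
    return 0.5 * (forward + reverse)


# Usage: a noiseless 5-frame, 8x8 sequence with a target moving one pixel per frame diagonally.
frames = np.zeros((5, 8, 8))
for k in range(5):
    frames[k, 1 + k, 1 + k] = 1.0
merit = dp_ring_merit(frames, v=1)
peaks = [tuple(int(i) for i in np.unravel_index(np.argmax(m), m.shape)) for m in merit]
print(peaks)  # [(1, 1), (2, 2), (3, 3), (4, 4), (5, 5)]
```

At frame k the forward map holds energy from frames 0 to k and the reverse map from frames k to K-1, so their average gives early frames as much accumulated energy as late ones. In this example every frame's maximum sits on the target with merit (K+1)/2 = 3, which is what a per-frame maximum-coordinate extraction would pick up.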

3.3. Multi-Target Detection

Algorithm 2 Multi-Target Detection

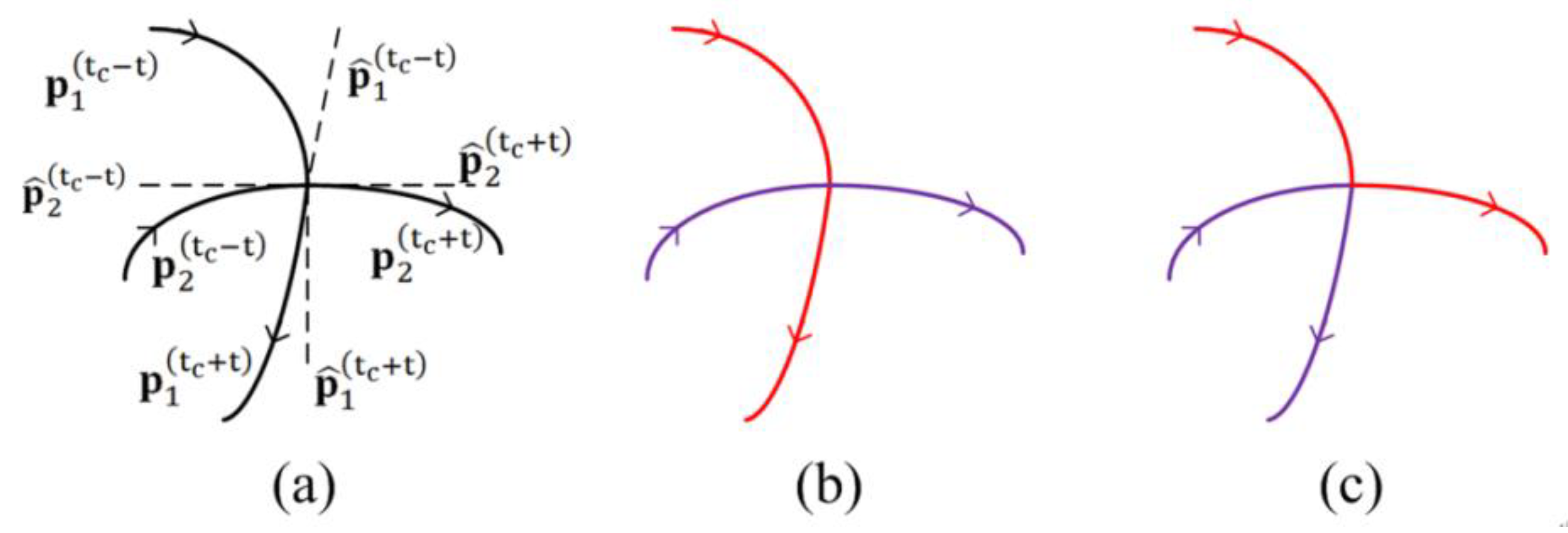

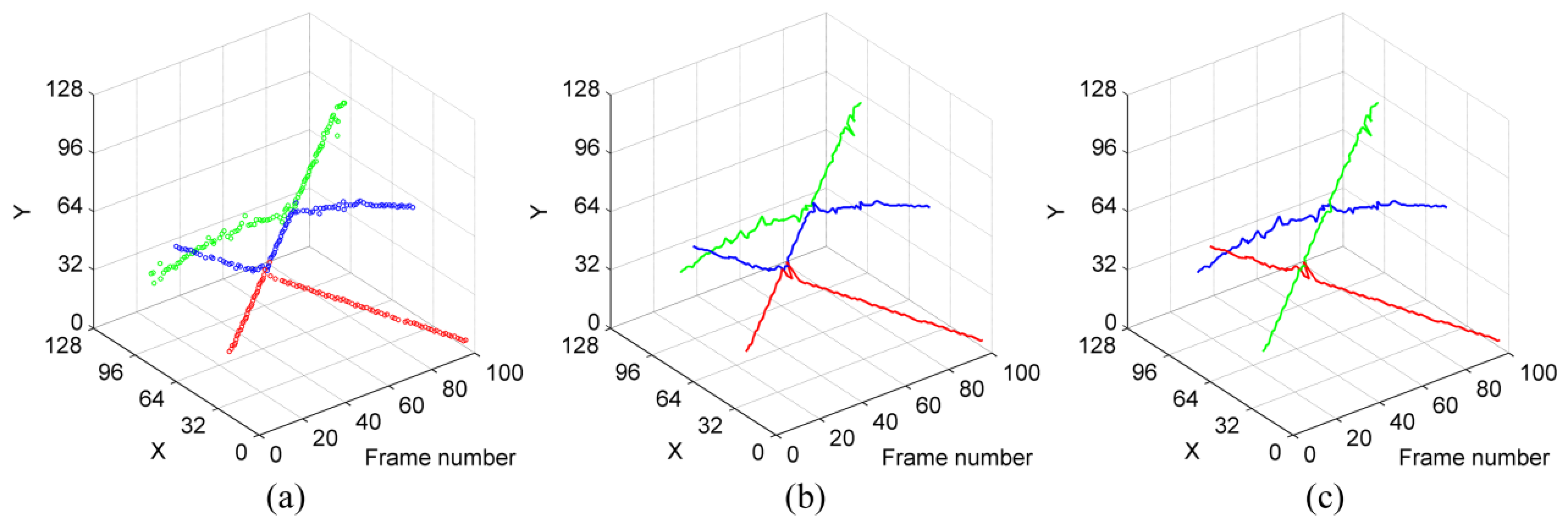

Input: the number of iterations, set equal to the number of targets; the sequence length; and the iteration counter.

Output: The multi-target trajectories.

Step 1: Energy accumulation. Run Algorithm 1 to obtain the MFF-DPR merit functions for the current iteration.

Step 2: Extraction of the maximum-value coordinates of the merit function. The coordinates corresponding to the maximum value of the MFF-DPR merit function at each time instant of the current iteration can be expressed as:

To prevent the same trajectory from being detected multiple times, target trajectories are detected one by one in this study. Step 3 outputs one trajectory at a time, generated by associating the coordinates in the maximum-value coordinate set. The specific steps are as follows:

Step 3.1: Trajectory initialization. For a maximum-value coordinate that is not matched to the previous frame, a search window of size … is centered on it. If a maximum-value coordinate exists within this window in the next frame, a new trajectory is established and the tracking counter is set to two; then, the search window of the next frame is centered on the predicted point, and the prediction counter is set to zero. Otherwise, the earlier maximum-value coordinate is considered a noise coordinate and is deleted.

Step 3.2: Trajectory generation. For an established trajectory, if a maximum-value coordinate falls within the search window, the tracking counter is increased by one, the trajectory is extended to this matching point, the search window of the next frame is centered on the predicted point, and the prediction counter is reset to zero. If no matching point is found, the trajectory is extended to the predicted point, the search window of the next frame is centered on the predicted point and enlarged by 2 pixels, and the prediction counter is increased by one.
If the prediction counter exceeds five, the point target corresponding to the trajectory is considered lost, and updating of the current trajectory is terminated. Throughout this process, coordinates are predicted by least-squares linear prediction over five consecutive frames.

Step 3.3: Highest-score trajectory selection. Trajectory coordinates are scored as follows: invalid coordinates score 0, initial coordinates 1, predicted coordinates 2, and matched coordinates 3. After batch processing by Steps 3.1 and 3.2, the coordinate scores of each trajectory are summed to give its trajectory score, and the coordinates and score of the highest-score trajectory are output. The iteration counter is then increased by one. If …, the algorithm goes to Step 4; otherwise, it goes to Step 5.

Step 4: Updating observation data. To prevent the same trajectory from being detected multiple times, once a trajectory is detected, the corresponding pixels in the observation data are replaced with adjacent background pixels. The updating area … is defined and filled by median filtering as follows:

Step 5: Trajectory regularization. In theory, complete target trajectories can be extracted from the observation data by performing Steps 1–4. However, trajectory intersections can cause target identification errors, as shown in Figure 4c. To mitigate these errors, this paper proposes the following procedure for any two trajectories. Assume that the coordinate sets of the two trajectories are denoted by … and …; then, if …: suppose that four local trajectories are defined at time …, and that four predicted trajectories are obtained by straight-line fitting of these local trajectories, respectively, as shown in Figure 4a, where ….
The fitting errors of the two types of trajectories can be calculated by:

If …, the trajectories are associated using the method shown in Figure 4b; otherwise, the method shown in Figure 4c is employed.
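Steps 2 and 3 of Algorithm 2 can be sketched for the simplified case of one maximum-value coordinate per frame. This is an illustration under stated assumptions, not the authors' implementation: the hypothetical functions `ls_predict`, `grow_trajectory`, and `best_trajectory` use a square search window of initial size `size0` (the actual size did not survive extraction), count both coordinates that open a trajectory as initial coordinates, reset the window size after a match, and simply try a trajectory start at every frame. The five-point least-squares prediction, the 2-pixel window growth on a miss, the loss criterion (prediction counter above five), and the 0/1/2/3 coordinate scores follow the text; frames not covered by a trajectory implicitly score 0.

```python
import numpy as np

# Coordinate scores from Step 3.3 (frames not covered by a trajectory score 0).
INITIAL, PREDICTED, MATCHED = 1, 2, 3


def ls_predict(points, n=5):
    """Extrapolate one frame ahead with a least-squares line fit over the last n points."""
    pts = np.asarray(points[-n:], dtype=float)
    t = np.arange(len(pts))
    slope, intercept = np.polyfit(t, pts, 1)  # fits row and column coordinates at once
    return slope * len(pts) + intercept


def in_window(p, center, size):
    """True if p lies inside a size x size square window centred on `center`."""
    return bool(np.max(np.abs(np.asarray(p, dtype=float) - center)) <= size // 2)


def grow_trajectory(peaks, start, size0=5, max_misses=5):
    """Try to start a trajectory at frame `start` and extend it; return (points, score)."""
    first = np.asarray(peaks[start], dtype=float)
    if not in_window(peaks[start + 1], first, size0):
        return None, 0  # Step 3.1: unmatched coordinate is treated as noise
    points, score = [first, np.asarray(peaks[start + 1], dtype=float)], 2 * INITIAL
    size, misses = size0, 0
    for k in range(start + 2, len(peaks)):
        pred = ls_predict(points)
        if in_window(peaks[k], pred, size):  # Step 3.2: match found
            points.append(np.asarray(peaks[k], dtype=float))
            score += MATCHED
            size, misses = size0, 0  # assumption: window shrinks back after a match
        else:  # no match: extend to the predicted point and widen the window
            points.append(pred)
            score += PREDICTED
            size, misses = size + 2, misses + 1
            if misses > max_misses:
                break  # target considered lost
    return points, score


def best_trajectory(peaks, **kwargs):
    """Step 3.3: return (start frame, points, score) of the highest-score trajectory."""
    best = (None, None, -1)
    for start in range(len(peaks) - 1):
        points, score = grow_trajectory(peaks, start, **kwargs)
        if points is not None and score > best[2]:
            best = (start, points, score)
    return best


# Usage: a target moving (+1, +2) pixels per frame, with a noise peak at frame 4.
peaks = [(k, 2 * k) for k in range(8)]
peaks[4] = (30, 0)
start, points, score = best_trajectory(peaks)
print(start, score, len(points))  # 0 19 8
```

The noise peak at frame 4 is bridged by a predicted point at (4, 8), so the winning trajectory scores 2 x 1 (initial) + 5 x 3 (matched) + 1 x 2 (predicted) = 19.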
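Step 4 can be sketched as follows. The definition of the updating area and the exact median-filling rule did not survive extraction, so this sketch assumes a hypothetical square area of half-width `a` around each trajectory point, filled with the median of the surrounding band of width `b` that stands in for the adjacent background. `fill_updating_area` and `remove_trajectory` are illustrative names only.

```python
import numpy as np


def fill_updating_area(frame, center, a=1, b=2):
    """Fill the (2a+1) x (2a+1) area around `center` with the median of the band of width b around it."""
    out = np.array(frame, dtype=float)
    h, w = out.shape
    r, c = int(round(center[0])), int(round(center[1]))
    # Updating area (clipped to the image border).
    r0, r1 = max(r - a, 0), min(r + a + 1, h)
    c0, c1 = max(c - a, 0), min(c + a + 1, w)
    # Surrounding background band: a larger square minus the updating area.
    band = np.zeros((h, w), dtype=bool)
    band[max(r - a - b, 0):min(r + a + b + 1, h), max(c - a - b, 0):min(c + a + b + 1, w)] = True
    band[r0:r1, c0:c1] = False
    out[r0:r1, c0:c1] = np.median(out[band])
    return out


def remove_trajectory(frames, start, points, **kwargs):
    """Apply the fill to every frame covered by a detected trajectory starting at frame `start`."""
    cleaned = np.array(frames, dtype=float)  # copy, so the input frames are left untouched
    for i, p in enumerate(points):
        cleaned[start + i] = fill_updating_area(cleaned[start + i], p, **kwargs)
    return cleaned


# Usage: flat background of 10 with a 2-frame target; after filling, the target is gone.
frames = np.full((2, 9, 9), 10.0)
frames[0, 4, 4] = frames[1, 5, 5] = 100.0
cleaned = remove_trajectory(frames, 0, [(4, 4), (5, 5)])
print(cleaned.max())  # 10.0
```

Using a median rather than a mean keeps a few residual bright pixels in the band from leaking back into the filled area.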
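The decision in Step 5 between the two candidate associations can be illustrated by comparing straight-line fitting errors. Because the exact error definition is lost, this sketch assumes that the fitting error is the sum of squared residuals of a least-squares line fitted to each coordinate over time, and that the two hypotheses are "keep the pairing across the crossing" and "swap the segments after the crossing". It does not encode which hypothesis corresponds to Figure 4b or Figure 4c; `line_fit_error` and `should_swap` are hypothetical names.

```python
import numpy as np


def line_fit_error(times, points):
    """Sum of squared residuals of least-squares lines fitted to each coordinate over time."""
    t = np.asarray(times, dtype=float)
    pts = np.asarray(points, dtype=float)
    coef = np.polyfit(t, pts, 1)              # one line per coordinate column
    fitted = np.outer(t, coef[0]) + coef[1]   # shape (len(t), n_coords)
    return float(np.sum((fitted - pts) ** 2))


def should_swap(t_before, a1, a2, t_after, b1, b2):
    """True if pairing (a1, b2) and (a2, b1) fits straight lines better than (a1, b1) and (a2, b2)."""
    t = np.concatenate([t_before, t_after])
    keep = line_fit_error(t, np.vstack([a1, b1])) + line_fit_error(t, np.vstack([a2, b2]))
    swap = line_fit_error(t, np.vstack([a1, b2])) + line_fit_error(t, np.vstack([a2, b1]))
    return swap < keep


# Usage: targets X (k, k) and Y (k, 10 - k) cross at frame 5; labels are swapped afterward.
tb, ta = np.arange(0, 4), np.arange(7, 11)
x_before, y_before = np.stack([tb, tb], axis=1), np.stack([tb, 10 - tb], axis=1)
x_after, y_after = np.stack([ta, ta], axis=1), np.stack([ta, 10 - ta], axis=1)
print(should_swap(tb, x_before, y_before, ta, y_after, x_after))  # True
```

A straight-line model is only reasonable when targets move roughly linearly around the crossing, which matches the straight-line fitting described in Step 5.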

4. Simulations and Analysis

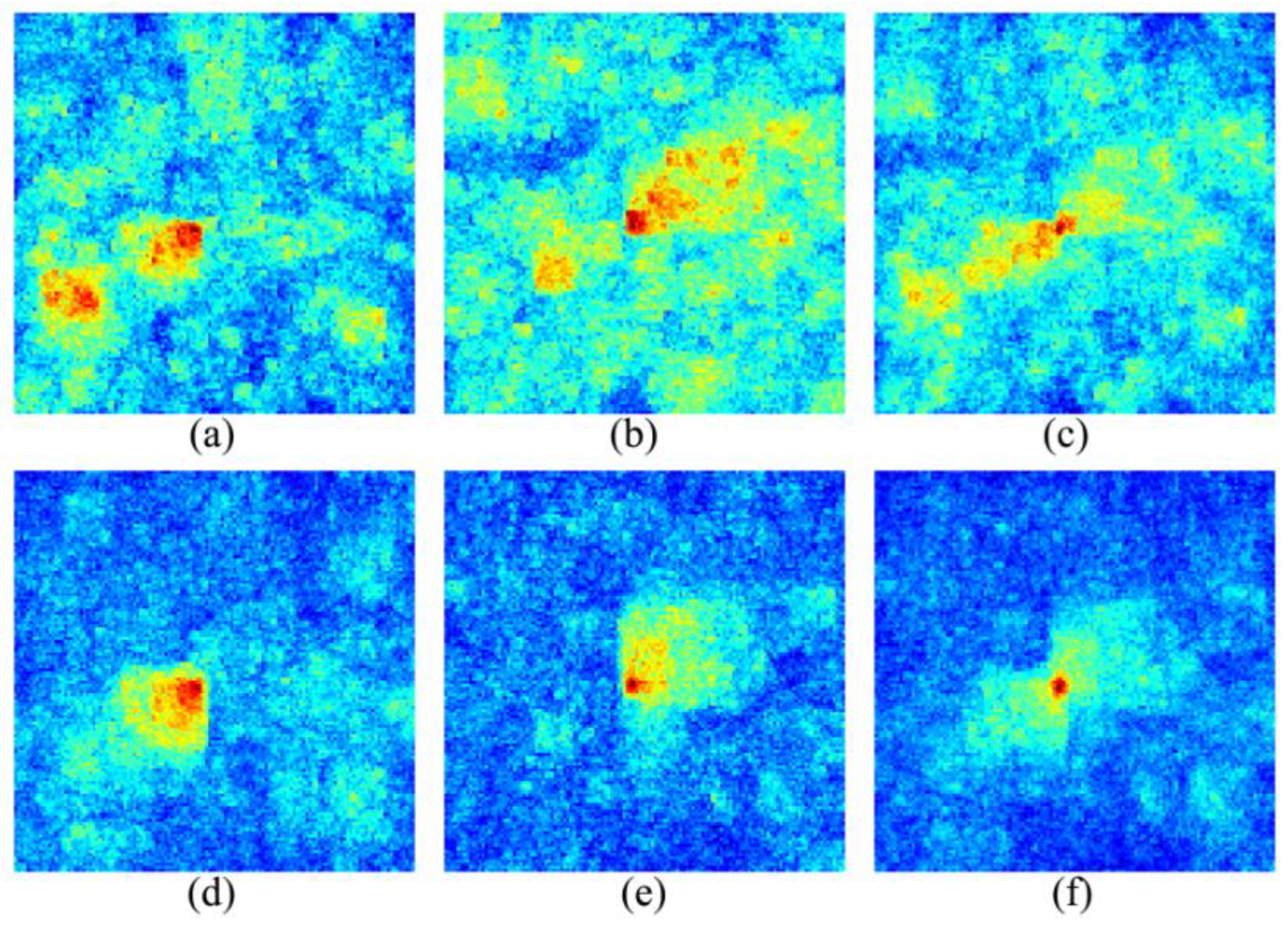

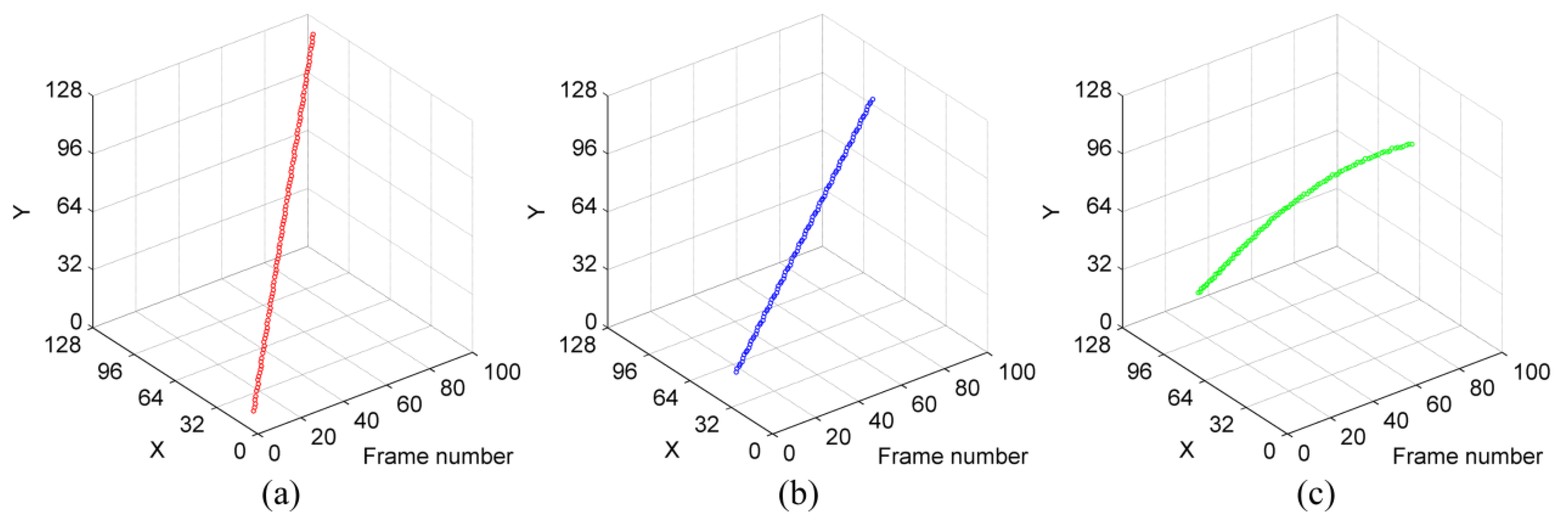

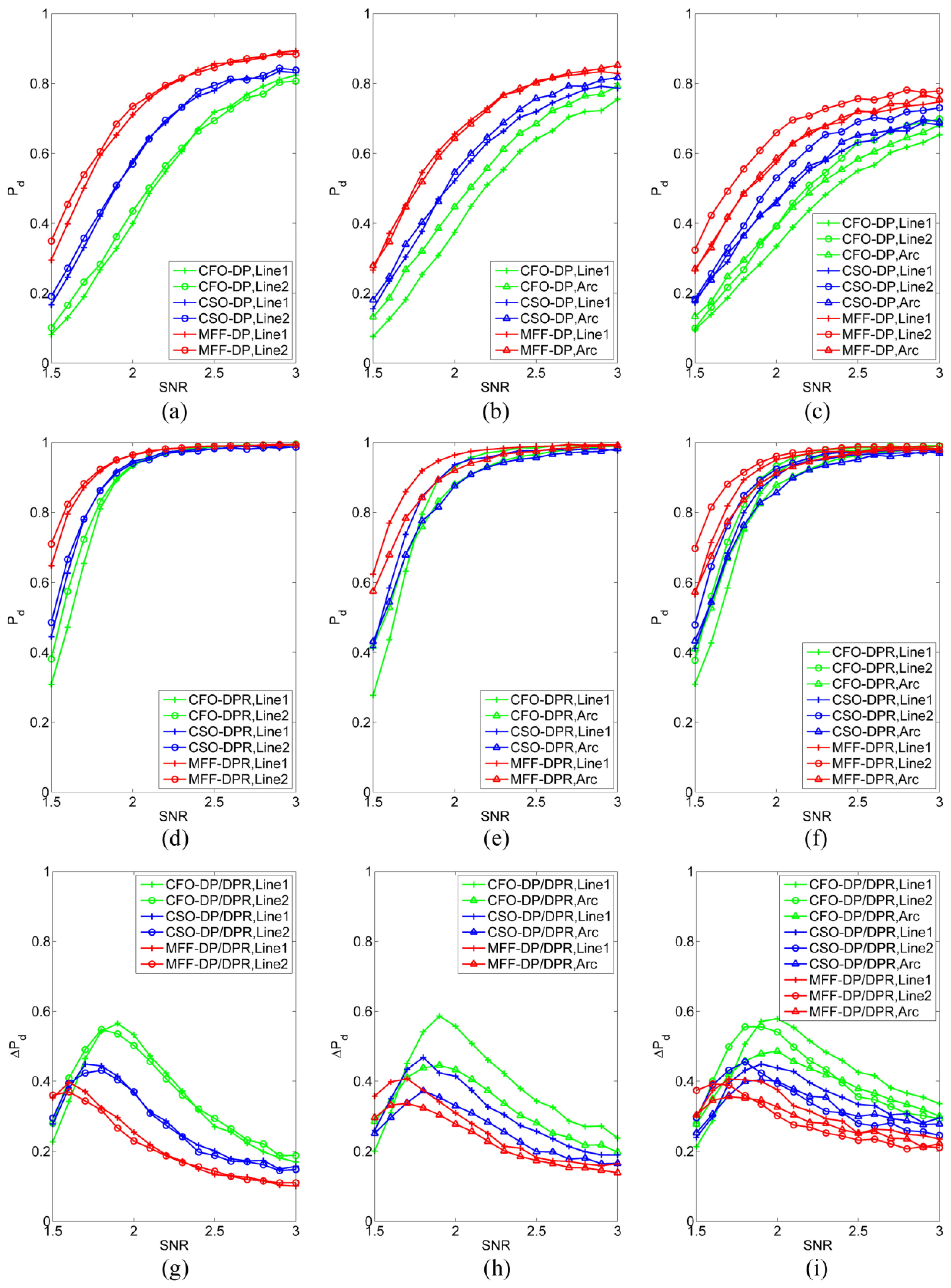

4.1. Single-Target Detection Test

4.2. Multi-Target Detection Test

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Metric | CFO-DP/DPR | CSO-DP/DPR | MFF-DP/DPR |
|---|---|---|---|
| Number of single-step state searches | 9 | 49 | 49 |
| | 25 | 165 | 165 |
| Single-frame processing time (ms) | 15/29 | 57/117 | 75/155 |
| | 32/63 | 165/343 | 227/449 |

| Metric | Test case | CFO-DP/DPR | CSO-DP/DPR | MFF-DP/DPR |
|---|---|---|---|---|
| Single-frame processing time (ms) | Test case 1 | 34/65 | 118/236 | 161/315 |
| | Test case 2 | 34/65 | 119/237 | 155/313 |
| | Test case 3 | 53/95 | 175/353 | 235/485 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fu, J.; Zhang, H.; Luo, W.; Gao, X. Dynamic Programming Ring for Point Target Detection. Appl. Sci. 2022, 12, 1151. https://doi.org/10.3390/app12031151
