Processing RGB Color Sensors for Measuring the Circadian Stimulus of Artificial and Daylight Light Sources

Abstract

Featured Application

1. Introduction

2. Materials and Methods

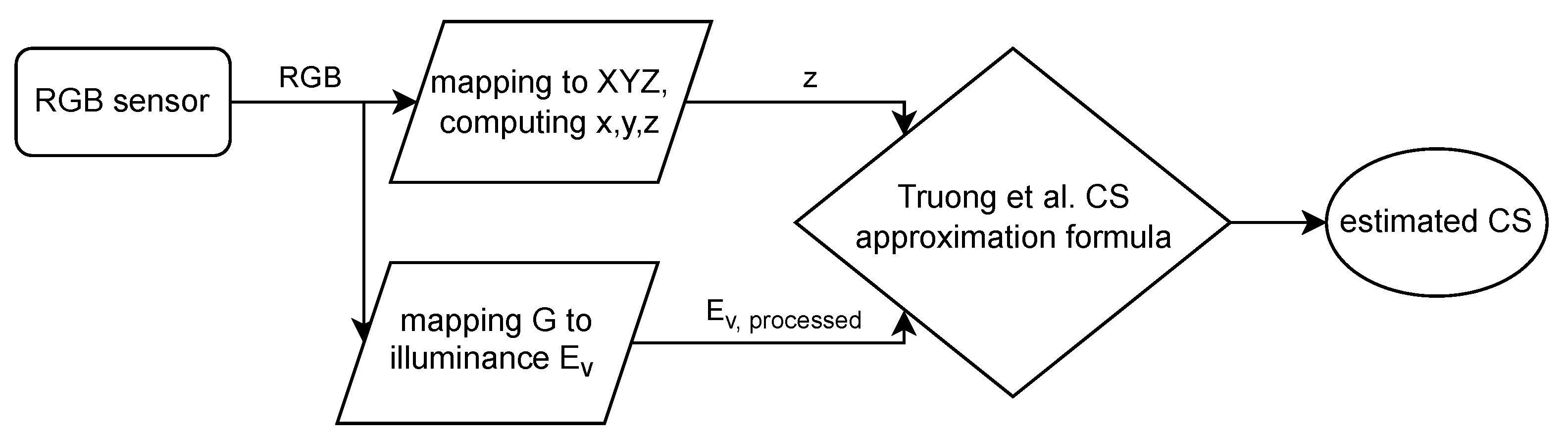

2.1. Circadian Stimulus and Its Approximation
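
As a rough illustration of the quantity named in this heading, the sketch below maps circadian light (CLA) to circadian stimulus (CS) with the logistic relation commonly published for the Rea et al. model. The constants 355.7 and 1.1026 and the function name `circadian_stimulus` are assumptions brought in from that model, not taken from the text here. The relation reproduces pairs such as 676.11 → 0.47 and 397.04 → 0.37, which appear in the unlabeled rows of the last table in this document. This is consistent with those rows holding CLA and CS, although the extracted labels are missing.

```python
def circadian_stimulus(cla: float) -> float:
    """Map circadian light CLA to circadian stimulus CS.

    Assumed relation (Rea et al. model):
        CS = 0.7 - 0.7 / (1 + (CLA / 355.7) ** 1.1026)
    CS runs from 0 (no stimulus) and saturates towards 0.7.
    """
    if cla < 0:
        raise ValueError("CLA must be non-negative")
    return 0.7 - 0.7 / (1.0 + (cla / 355.7) ** 1.1026)


if __name__ == "__main__":
    # Example CLA values taken from the tabulated data in this document.
    for cla in (676.11, 471.03, 397.04):
        print(f"CLA = {cla:7.2f} -> CS = {circadian_stimulus(cla):.2f}")
```

Because the curve saturates, equal steps in CLA produce smaller and smaller changes in CS at high light levels.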

2.2. Processing of RGB Color Sensors

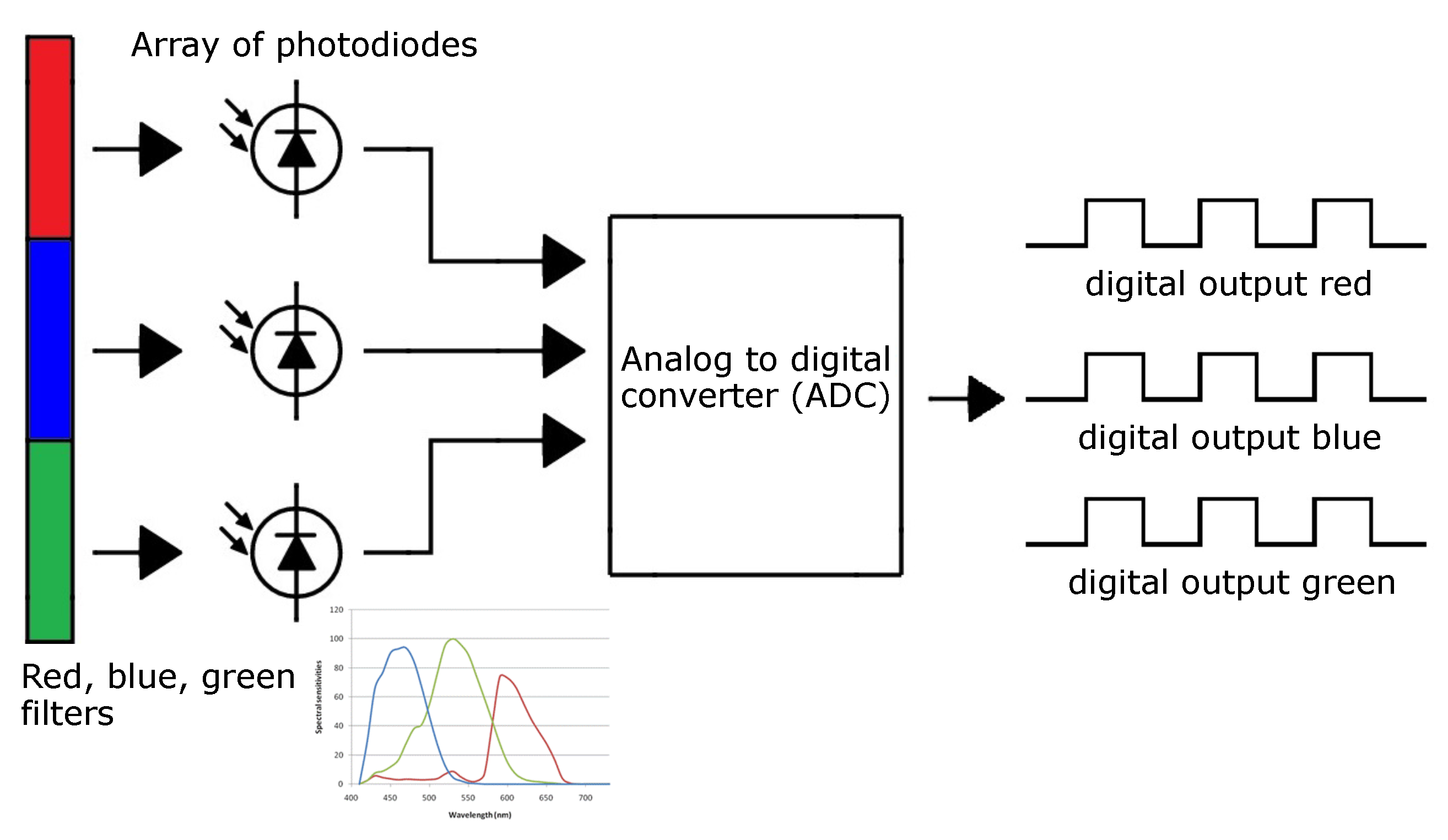

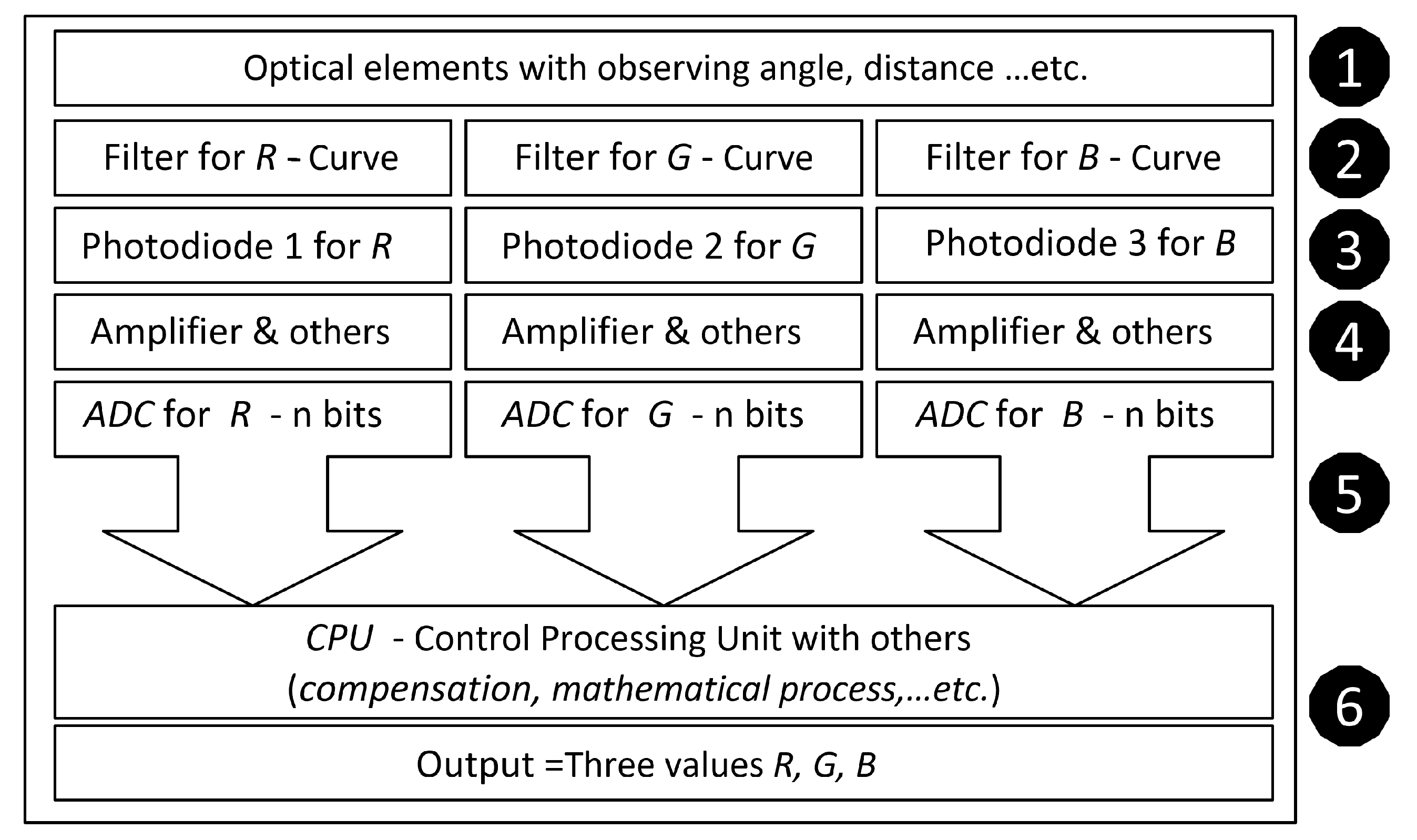

2.2.1. Physical Structure and Working Principle of RGB Color Sensors

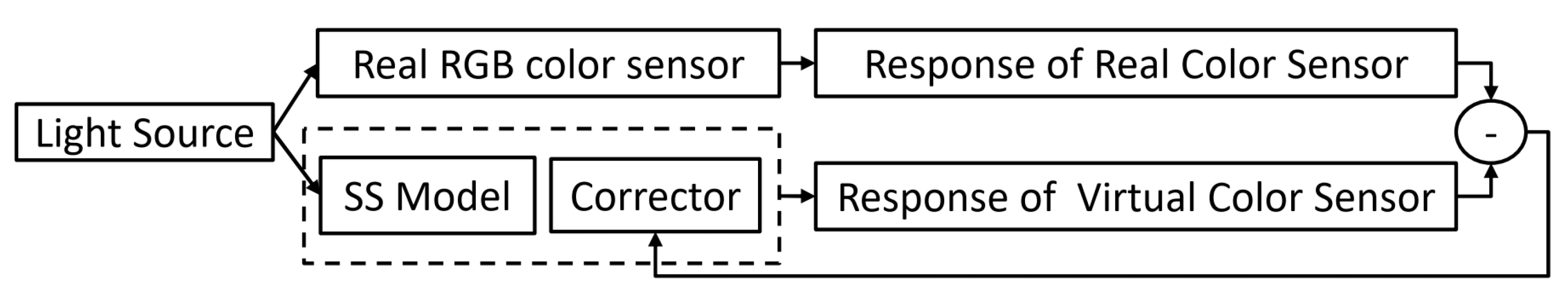

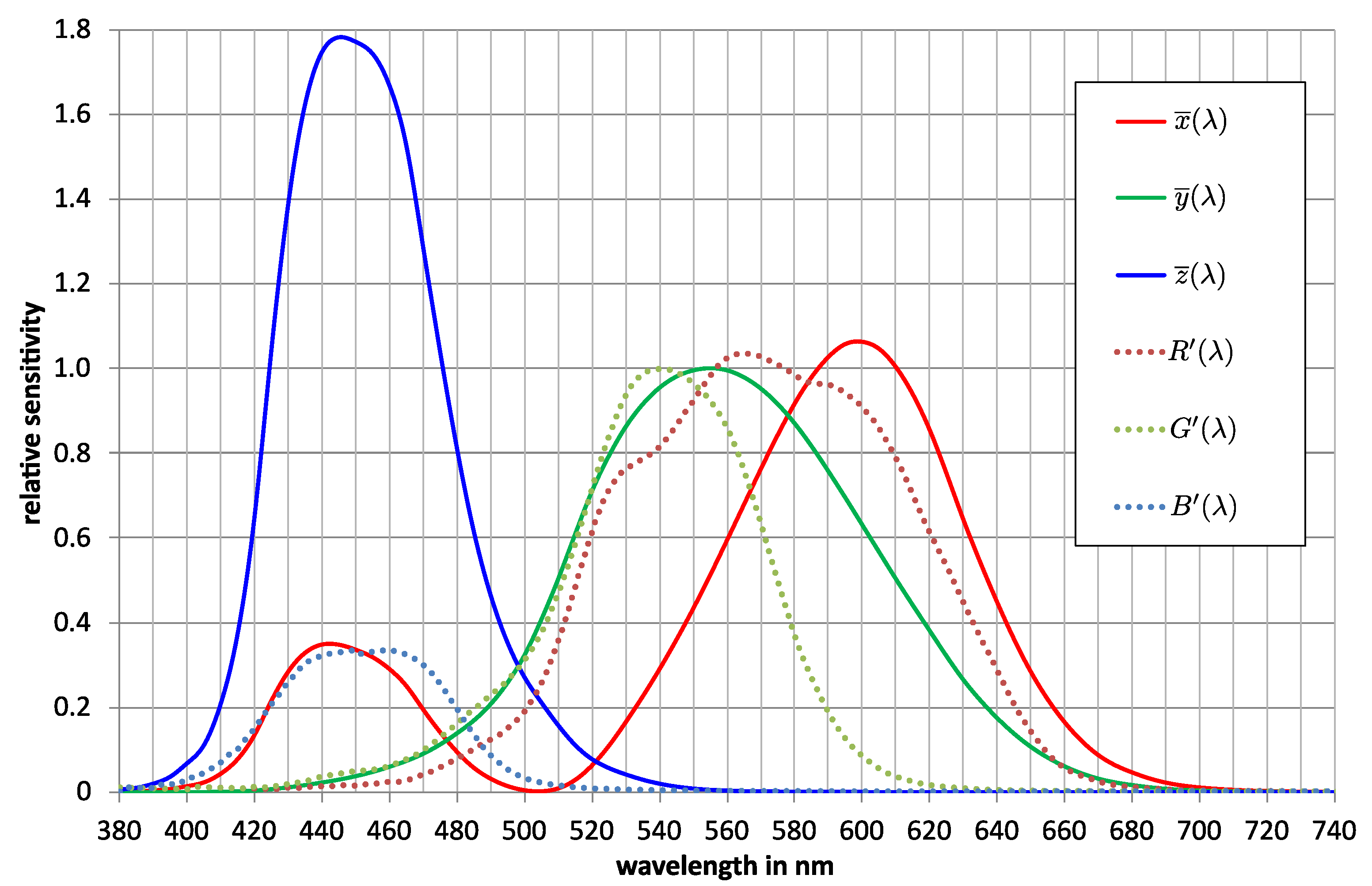

2.2.2. Determination of the Spectral Sensitivity Curves of an RGB Color Sensor

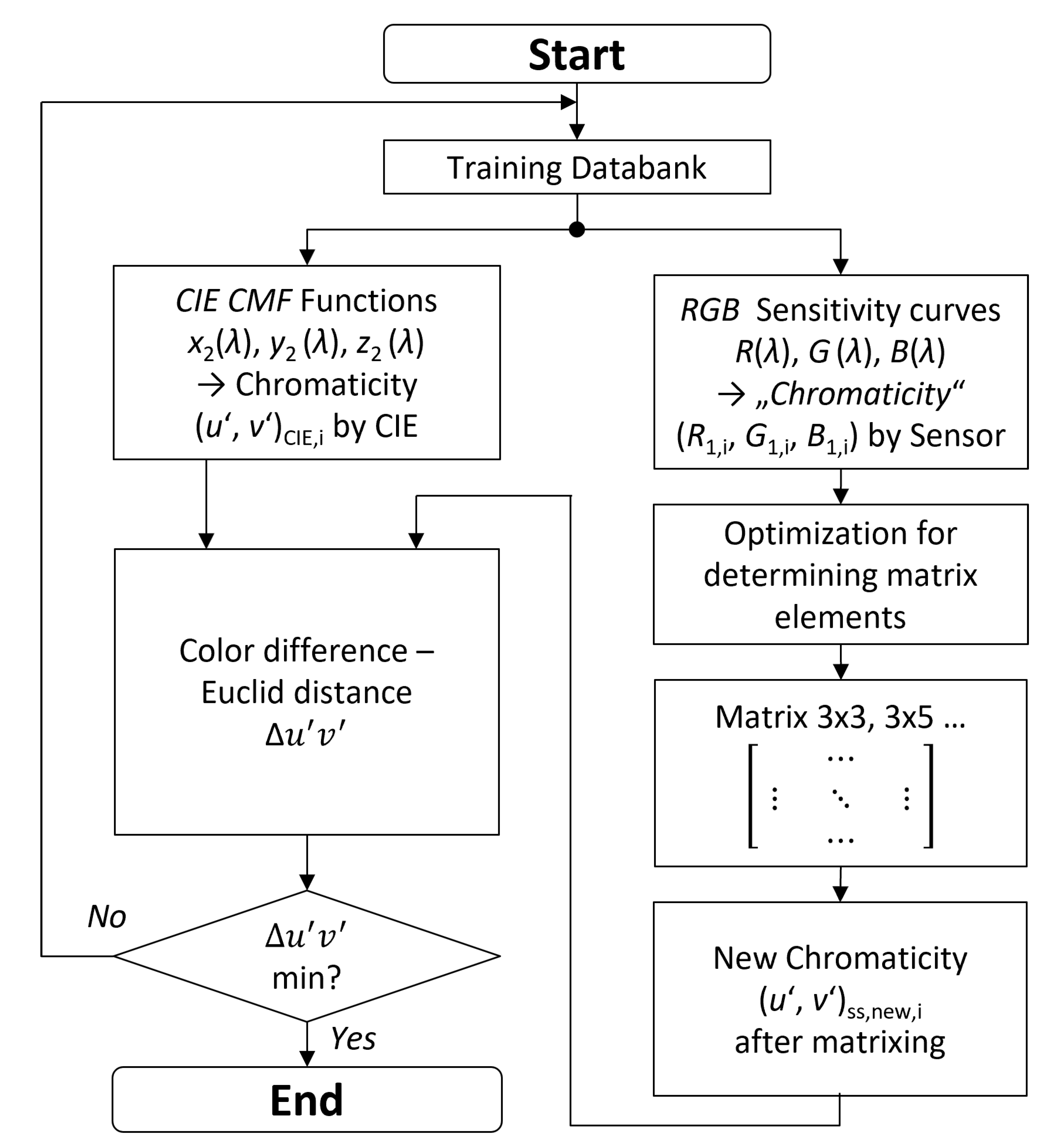

2.2.3. Colorimetric Mapping of Sensor Readouts

2.3. CIE Daylight Model and CCT Determination for a Daylight Spectral Reconstruction

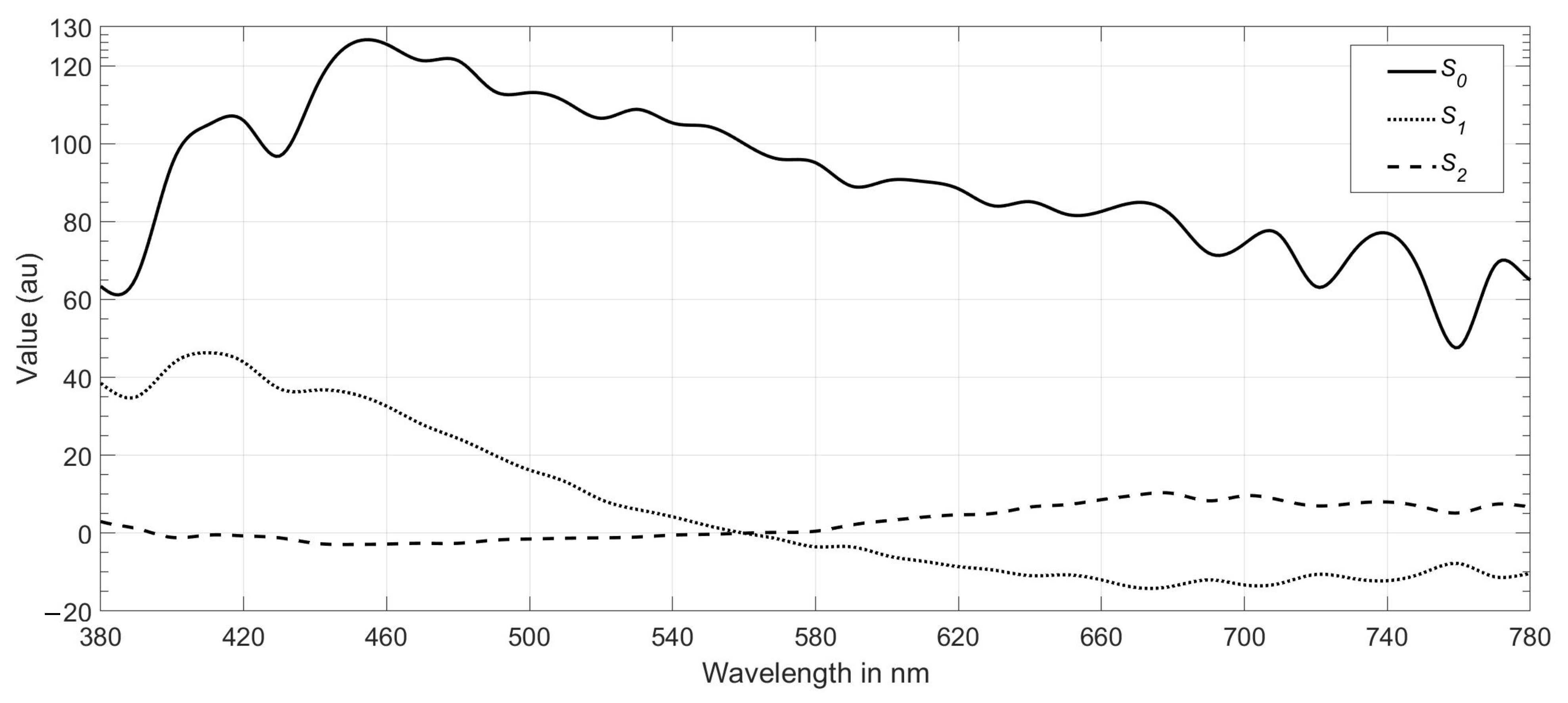

2.3.1. CIE Daylight Model
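
A minimal sketch of the first step of the CIE daylight model: computing the daylight-locus chromaticity (x_D, y_D) from a correlated color temperature. The coefficients are the standard CIE daylight-locus polynomials, valid from 4000 K to 25,000 K. The function name `daylight_chromaticity` is hypothetical. The later reconstruction of a full spectrum from the daylight basis functions S0, S1 and S2 is not shown, because it needs tabulated data that this document does not include.

```python
def daylight_chromaticity(cct: float) -> tuple[float, float]:
    """Return CIE 1931 (x_D, y_D) on the daylight locus for a CCT in kelvin."""
    if not 4000.0 <= cct <= 25000.0:
        raise ValueError("CIE daylight locus is defined for 4000 K to 25000 K")
    t = float(cct)
    if t <= 7000.0:
        # Lower branch of the CIE daylight locus (4000 K to 7000 K).
        x = -4.6070e9 / t**3 + 2.9678e6 / t**2 + 0.09911e3 / t + 0.244063
    else:
        # Upper branch (above 7000 K up to 25000 K).
        x = -2.0064e9 / t**3 + 1.9018e6 / t**2 + 0.24748e3 / t + 0.237040
    y = -3.000 * x * x + 2.870 * x - 0.275
    return x, y


if __name__ == "__main__":
    x_d, y_d = daylight_chromaticity(6504.0)
    print(f"x_D = {x_d:.4f}, y_D = {y_d:.4f}")
```

At 6504 K the result lies close to the D65 chromaticity, which is a convenient sanity check for the coefficients.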

2.3.2. Determination of the CCT from Sensor Readouts

3. Results

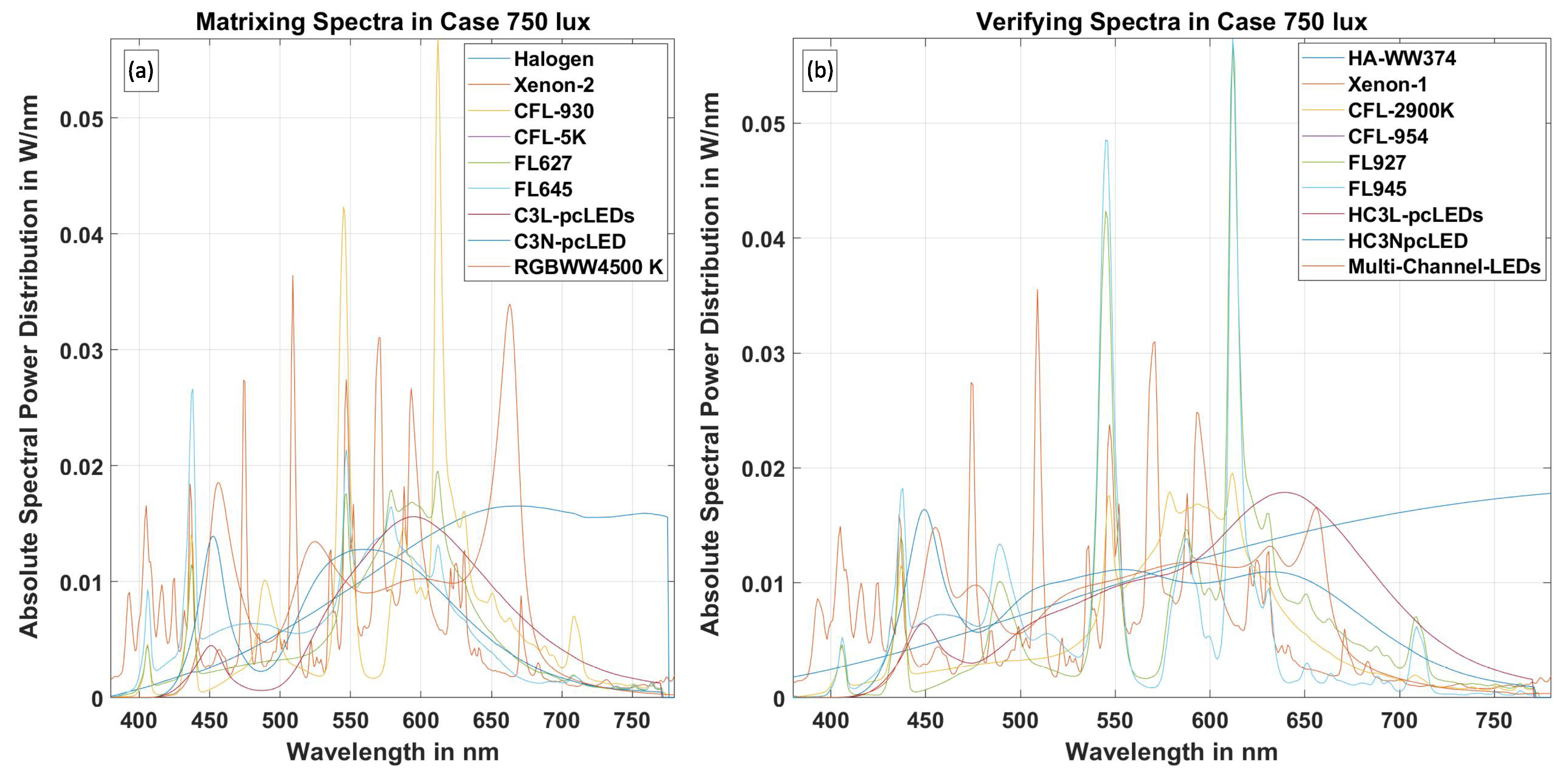

3.1. CS Estimation for Artificial Light Sources

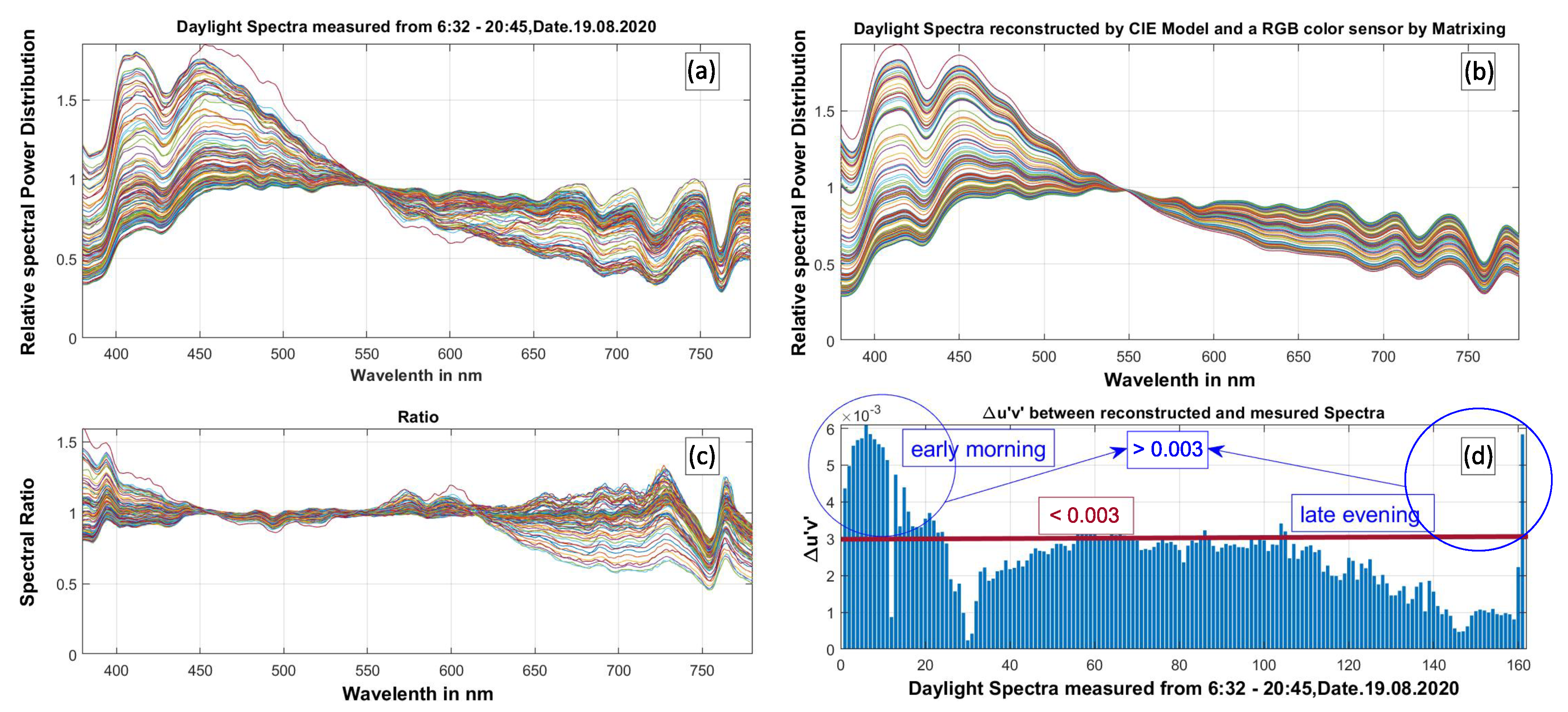

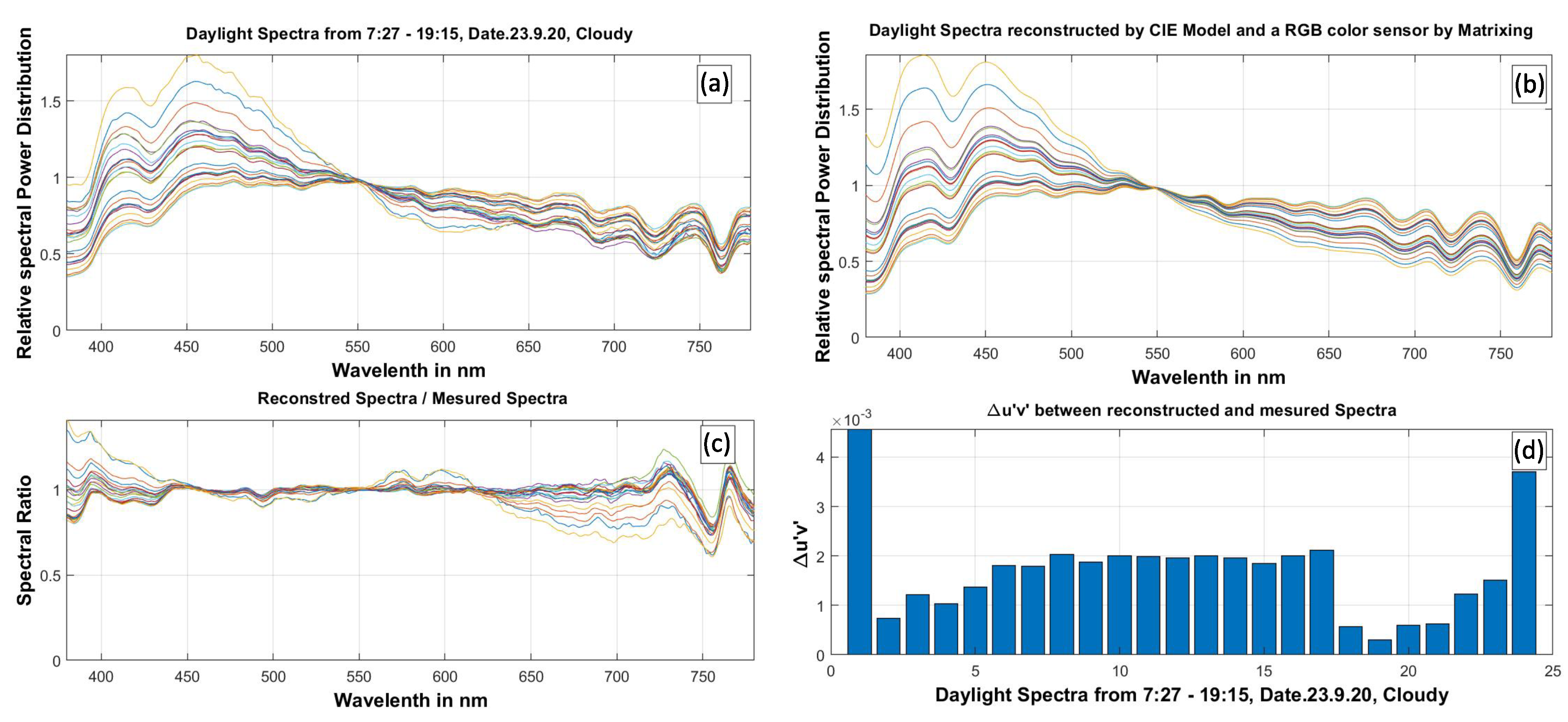

3.2. CS Estimation for Daylight Light Sources

4. Conclusions and Outlook

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| No. | Size | Content |
|---|---|---|
| 1 | 3 × 3 | [R G B] |
| 2 | 3 × 5 | [R G B RGB 1] |
| 3 | 3 × 7 | [R G B RG RB GB 1] |
| 4 | 3 × 8 | [R G B RG RB GB RGB 1] |
| 5 | 3 × 10 | [R G B RG RB GB 1] |
| 6 | 3 × 11 | [R G B RG RB GB RGB 1] |
| 7 | 3 × 14 | [R G B RG RB GB RGB 1] |
| 8 | 3 × 16 | [R G B RG RB GB RGB G B R] |
| 9 | 3 × 17 | [R G B RG RB GB RGB G B R 1] |
| 10 | 3 × 19 | [R G B RG RB GB RGB G B R R2B G2R B2G] |
| 11 | 3 × 20 | [R G B RG RB GB RGB G B R R2B G2R B2G 1] |
| 12 | 3 × 22 | [R G B RG RB GB RGB G B R B R G GB RB RG] |
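
The four lowest-order entries of the table above (3 × 3 to 3 × 8) can be illustrated with a minimal sketch. It assumes that each expansion turns one sensor readout (R, G, B) into a term vector in the order listed in the table, and that a 3 × N matrix then maps this vector to three output values. The names `EXPANSIONS`, `expand`, and `apply_matrix` are hypothetical, and the matrix entries in the usage example are placeholders, not fitted values from this work.

```python
from typing import Callable, Dict, List, Sequence

# Term lists for table rows 1 to 4; the order follows the "Content" column.
EXPANSIONS: Dict[str, Callable[[float, float, float], List[float]]] = {
    "3x3": lambda r, g, b: [r, g, b],
    "3x5": lambda r, g, b: [r, g, b, r * g * b, 1.0],
    "3x7": lambda r, g, b: [r, g, b, r * g, r * b, g * b, 1.0],
    "3x8": lambda r, g, b: [r, g, b, r * g, r * b, g * b, r * g * b, 1.0],
}


def expand(rgb: Sequence[float], size: str) -> List[float]:
    """Build the polynomial term vector for one (R, G, B) readout."""
    r, g, b = rgb
    return EXPANSIONS[size](r, g, b)


def apply_matrix(matrix: Sequence[Sequence[float]], terms: Sequence[float]) -> List[float]:
    """Multiply a 3 x N matrix with an N-element term vector."""
    if any(len(row) != len(terms) for row in matrix):
        raise ValueError("matrix width must match the number of terms")
    return [sum(m * t for m, t in zip(row, terms)) for row in matrix]


# Usage with a placeholder 3 x 5 matrix (illustrative numbers only).
placeholder = [
    [1.0, 0.0, 0.0, 0.0, 2.0],
    [0.0, 1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0, 0.0],
]
mapped = apply_matrix(placeholder, expand((1.0, 2.0, 3.0), "3x5"))
```

In practice the matrix would be fitted on a training database of readouts and reference values; that fitting step is not shown here.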

| Name/Par. | Halogen | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| Δu′v′(3 × 3) | 3.2 | 7.4 | 7.7 | 1.5 | 4.5 | 1.5 | 4.2 | 2.7 | 8.3 |
| Δu′v′(3 × 5) | 43 | 4.8 | 48 | 12 | 41 | 12 | 51 | 16 | 16 |
| Δu′v′(3 × 7) | 0.00 | 8.5 | 5.3 | 0.00 | 1.6 | 0.00 | 7.0 | 20 | 6.4 |
| Δu′v′(3 × 8) | 4.3 | 6.9 | 32 | 11 | 40 | 11 | 48 | 7 | 16 |
| Δu′v′(3 × 10) | 0.66 | 2.9 | 4.5 | 0.0 | 0.92 | 0.0 | 12 | 0.00 | 3.8 |
| Δu′v′(3 × 11) | 43 | 7.9 | 0.00 | 2.2 | 37 | 2.2 | 42 | 12 | 8.1 |
| Δu′v′(3 × 14) | 7.6 | 7.1 | 10 | 0.00 | 7.8 | 0.0 | 0.0 | 1.7 | 6.7 |
| Δu′v′(3 × 16) | 0.77 | 8.4 | 5.7 | 0.00 | 3.3 | 0.00 | 2.5 | 1.9 | 10 |
| Δu′v′(3 × 17) | 0.77 | 8.4 | 5.7 | 0.00 | 3.3 | 0.00 | 2.5 | 1.9 | 10 |
| Δu′v′(3 × 19) | 5.6 | 7.2 | 9.1 | 0.00 | 4.3 | 0.00 | 0.00 | 5.7 | 7.5 |
| Δu′v′(3 × 20) | 5.6 | 7.2 | 9.1 | 0.00 | 4.3 | 0.00 | 0.00 | 5.7 | 7.5 |
| Δu′v′(3 × 22) | 5.1 | 6.6 | 8.9 | 0.00 | 2.2 | 0.00 | 0.00 | 3.2 | 7.2 |
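
The Δu′v′ values in the table above are chromaticity differences in the CIE 1976 u′v′ diagram. As a minimal sketch, assuming both the reference and the estimated chromaticity are available as CIE 1931 (x, y) pairs, the difference can be computed as shown below. The function names are hypothetical. The scaling used for the tabulated values is not stated in this excerpt, so the sketch returns the unscaled Euclidean distance.

```python
import math
from typing import Tuple


def xy_to_upvp(x: float, y: float) -> Tuple[float, float]:
    """Convert CIE 1931 (x, y) to CIE 1976 (u', v') chromaticity coordinates."""
    denom = -2.0 * x + 12.0 * y + 3.0
    return 4.0 * x / denom, 9.0 * y / denom


def delta_upvp(xy_ref: Tuple[float, float], xy_est: Tuple[float, float]) -> float:
    """Euclidean distance between two chromaticities in the u'v' diagram."""
    u_ref, v_ref = xy_to_upvp(*xy_ref)
    u_est, v_est = xy_to_upvp(*xy_est)
    return math.hypot(u_est - u_ref, v_est - v_ref)


# Usage: the equal-energy white point (x = y = 1/3) against a nearby point.
example = delta_upvp((1.0 / 3.0, 1.0 / 3.0), (0.3400, 0.3400))
```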

| Name/Par. | Halogen | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| and other parameters directly calculated from the measured artificial light spectra | | | | | | | | | |
| CCT in K | 2762 | 4100 | 2640 | 4423 | 2785 | 4423 | 2640 | 4580 | 4500 |
| in | 750 | 750 | 750 | 750 | 750 | 750 | 750 | 750 | 750 |
| | 676.11 | 471.03 | 640.73 | 574.68 | 529.72 | 574.73 | 397.04 | 430.78 | 710.02 |
| | 0.47 | 0.40 | 0.46 | 0.44 | 0.43 | 0.44 | 0.37 | 0.39 | 0.48 |
| and other parameters estimated from the color sensor readouts | | | | | | | | | |
| in | 748 | 794 | 829 | 806 | 806 | 673 | 690 | 669 | 821 |
| | 0.44 | 0.40 | 0.45 | 0.45 | 0.45 | 0.42 | 0.38 | 0.40 | 0.49 |
| Compared parameters | | | | | | | | | |
| | 0.0271 | 0.0072 | 0.0062 | 0.0098 | 0.0234 | 0.0245 | 0.0042 | 0.0105 | 0.0079 |
| Optimized matrix transformation determined from the artificial light sources training database | | | | | | | | | |
| Functional relationship for calculating illuminance from sensor output | | | | | | | | | |
| ; from Equation (5) | | | | | | | | | |

| Name/Par. | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| and other parameters directly calculated from the measured artificial spectra | | | | | | | | | |
| CCT in K | 3300 | 4058 | 2785 | 4390 | 2640 | 4390 | 2797 | 4870 | 4000 |
| in | 750 | 750 | 750 | 750 | 750 | 750 | 750 | 750 | 750 |
| | 897 | 471 | 530 | 620 | 641 | 620 | 727 | 763 | 637 |
| | 0.51 | 0.40 | 0.43 | 0.45 | 0.46 | 0.45 | 0.48 | 0.49 | 0.46 |
| and other parameters estimated from the color sensor readouts | | | | | | | | | |
| in | 673 | 790 | 766 | 878 | 878 | 748 | 738 | 694 | 815 |
| | 0.49 | 0.39 | 0.44 | 0.47 | 0.46 | 0.45 | 0.46 | 0.47 | 0.44 |
| Compared parameters | | | | | | | | | |
| | 0.0208 | 0.0126 | 0.0143 | 0.0208 | 0.0038 | 0.0083 | 0.0186 | 0.0198 | 0.0203 |
| | 2.42 | 7.50 | 4.46 | 7.04 | 7.73 | 7.04 | 6.46 | 6.10 | 4.37 |

| Sampling Time | 06:32 | 08:03 | 10:04 | 12:01 | 14:02 | 16:11 | 18:23 | 19:04 | 20:35 |
|---|---|---|---|---|---|---|---|---|---|
| Δu′v′(3 × 3) | 0.5 | 0.02 | 0.094 | 0.053 | 0.092 | 0.16 | 0.15 | 0.094 | 1.2 |
| Δu′v′(3 × 5) | 38 | 49 | 6.6 | 5.4 | 4.5 | 3.1 | 9.5 | 9.0 | 33 |
| Δu′v′(3 × 7) | 1.4 | 1.5 | 0.018 | 0.55 | 0.2 | 0.6 | 0.038 | 1.1 | 0.79 |
| Δu′v′(3 × 8) | 35 | 48 | 6.6 | 5.4 | 4.5 | 3.0 | 9.6 | 8.7 | 29 |
| Δu′v′(3 × 10) | 0.71 | 0.054 | 0.13 | 0.057 | 0.065 | 0.17 | 0.57 | 0.061 | 0.78 |
| Δu′v′(3 × 11) | 31 | 48 | 6.5 | 5.3 | 4.4 | 3.0 | 9.6 | 8.4 | 23 |
| Δu′v′(3 × 14) | 1.1 | 0.16 | 0.27 | 0.11 | 0.077 | 0.14 | 0.068 | 0.12 | 2.2 |
| Δu′v′(3 × 16) | 0.66 | 0.21 | 0.3 | 0.1 | 0.039 | 0.21 | 0.61 | 0.11 | 0.8 |
| Δu′v′(3 × 17) | 0.66 | 0.21 | 0.3 | 0.1 | 0.039 | 0.021 | 0.61 | 0.11 | 0.8 |
| Δu′v′(3 × 19) | 0.23 | 0.069 | 0.093 | 0.039 | 0.047 | 0.05 | 0.094 | 0.13 | 0.64 |
| Δu′v′(3 × 20) | 0.23 | 0.069 | 0.93 | 0.039 | 0.047 | 0.05 | 0.094 | 0.13 | 0.64 |
| Δu′v′(3 × 22) | 0.48 | 0.019 | 0.064 | 0.083 | 0.051 | 0.11 | 0.26 | 0.17 | 0.74 |

| Sampling Time | 06:32 | 08:03 | 10:04 | 12:01 | 13:02 | 14:02 | 16:11 | 18:23 | 20:45 |
|---|---|---|---|---|---|---|---|---|---|
| and other parameters directly calculated from the measured daylight spectra | | | | | | | | | |
| CCT in K | 10,969 | 14,170 | 5557 | 5614 | 6324 | 5679 | 6240 | 5389 | 17,815 |
| in lux | 871 | 5046 | 57,919 | 80,580 | 29,407 | 87,612 | 33,370 | 36,472 | 203 |
| | 0.2714 | 0.2610 | 0.3312 | 0.3299 | 0.3162 | 0.3285 | 0.3175 | 0.3351 | 0.2523 |
| | 0.2903 | 0.2756 | 0.3428 | 0.3402 | 0.3278 | 0.3396 | 0.3304 | 0.3470 | 0.2685 |
| | 0.613 | 0.690 | 0.699 | 0.699 | 0.698 | 0.699 | 0.698 | 0.698 | 0.435 |
| and other parameters estimated from the color sensor readouts by applying the CIE daylight model | | | | | | | | | |
| in | 10,969 | 14,170 | 5557 | 5614 | 6324 | 5679 | 6240 | 5389 | 17,815 |
| in | 879 | 5015 | 57,811 | 80,315 | 29,447 | 87,491 | 33,519 | 36,431 | 200 |
| | 4.4 | 3.3 | 2.2 | 3.1 | 2.9 | 2.8 | 2.1 | 1.7 | 5.8 |
| | 0.614 | 0.690 | 0.699 | 0.699 | 0.698 | 0.699 | 0.698 | 0.698 | 0.433 |
| | 0.001 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.002 |
| and other parameters estimated from the color sensor readouts by applying Truong et al.’s model | | | | | | | | | |
| in | 879 | 5015 | 57,811 | 80,315 | 29,447 | 87,491 | 33,519 | 36,431 | 200 |
| | 0.607 | 0.688 | 0.698 | 0.699 | 0.697 | 0.699 | 0.697 | 0.697 | 0.414 |
| | 0.006 | 0.002 | 0.001 | 0.000 | 0.001 | 0.000 | 0.001 | 0.001 | 0.021 |
| Optimized matrix transformation determined from the daylight light sources training database | | | | | | | | | |
| Functional relationship for calculating illuminance from sensor output | | | | | | | | | |
| ; from Equation (5) | | | | | | | | | |

| Sampling Time | 07:27 | 10:03 | 11:06 | 12:03 | 13:05 | 14:07 | 15:10 | 16:12 | 19:14 |
|---|---|---|---|---|---|---|---|---|---|
| and other parameters directly calculated from the measured daylight spectra | | | | | | | | | |
| CCT in K | 12,464 | 8033 | 6313 | 5651 | 5914 | 5853 | 5470 | 8174 | 16,066 |
| in lux | 401 | 9397 | 23,940 | 58,212 | 39,463 | 43,150 | 71,006 | 15,058 | 4613 |
| | 0.2652 | 0.2942 | 0.3163 | 0.3291 | 0.3236 | 0.3248 | 0.3332 | 0.2919 | 0.2568 |
| | 0.2831 | 0.3066 | 0.3293 | 0.3413 | 0.3364 | 0.3376 | 0.3449 | 0.3081 | 0.2705 |
| | 0.528 | 0.693 | 0.697 | 0.699 | 0.698 | 0.699 | 0.699 | 0.696 | 0.561 |
| and other parameters estimated from the color sensor readouts by applying the CIE daylight model | | | | | | | | | |
| in | 12,561 | 8044 | 6306 | 5647 | 5907 | 5845 | 5470 | 8146 | 16,309 |
| in | 402 | 9389 | 24,051 | 58,213 | 39,593 | 43,239 | 70,871 | 15,180 | 454 |
| | 4.6 | 1.8 | 2.0 | 2.0 | 1.9 | 1.9 | 2.0 | 0.57 | 3.7 |
| | 0.530 | 0.693 | 0.697 | 0.699 | 0.698 | 0.699 | 0.699 | 0.696 | 0.559 |
| | 0.002 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.001 |
| and other parameters estimated from the color sensor readouts by applying Truong et al.’s model | | | | | | | | | |
| | 0.47 | 0.0 | 0.174 | 0.12 | 0.182 | 0.196 | 0.0596 | 0.403 | 0.85 |
| | 0.517 | 0.691 | 0.696 | 0.698 | 0.698 | 0.698 | 0.699 | 0.695 | 0.548 |
| | 0.011 | 0.002 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.013 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Trinh, V.Q.; Babilon, S.; Myland, P.; Khanh, T.Q. Processing RGB Color Sensors for Measuring the Circadian Stimulus of Artificial and Daylight Light Sources. Appl. Sci. 2022, 12, 1132. https://doi.org/10.3390/app12031132
