1. Introduction

Semantic segmentation is a fundamental task in computer vision and plays an important part in many scene understanding problems. A semantic segmentation model needs to predict the category of each pixel in an image. In the process of inference, two problems need to be solved: localization and classification.

Semantic segmentation is closely related to image classification, and, as a fundamental computer vision task, it has been the subject of many surveys [1]. Most recent semantic segmentation methods follow the fully convolutional network (FCN) [2] and equip it with an encoder-decoder architecture. By removing fully connected layers, the fully convolutional network can handle images of any size and produce pixel-wise predictions. The encoder in an encoder-decoder semantic segmentation model is usually borrowed from the image classification task [3,4,5,6,7]. It consists of stacked convolution layers that progressively reduce the spatial resolution of the feature maps and enlarge the receptive fields of the convolution kernels to capture more abstract, semantic information. The decoder recovers the spatial resolution of the feature representations yielded by the encoder for pixel-level classification. Feature representation learning is the most important component of the model and is usually carried out by a backbone pretrained on ImageNet. CNNs are known for extracting local features. Once the fully connected layers are removed, each element of the feature map output by the fully convolutional network encodes little long-range dependency information. This information is important for semantic segmentation, and its absence leads to coarse results.

To overcome the lack of multi-scale information, many works enlarged the receptive fields of convolution operations. DeepLab [8,9,10,11] introduced atrous convolution and developed the ASPP module, which applies atrous convolutions with different dilation rates. PSPNet [12] proposed the PPM module to obtain contextual information at different scales and built a feature pyramid. To balance the trade-off between semantic and spatial information, many works [13] aggregated the feature maps from different stages of the backbone, and multiple variants of the encoder-decoder architecture have been developed.

However, the aforementioned methods still do not identify which part of the context information is pivotal for segmentation. To address this question, some works integrate attention modules into FCN-based architectures. PSANet [14] proposed the point-wise spatial attention module for capturing long-range contextual information. DANet [15] used two attention modules to model long-range dependencies along the spatial and channel dimensions, respectively. CCNet [16] introduced an attention module with relatively low computational complexity. OCNet [17] attached attention modules to each branch of the PPM module or the ASPP module.

The aforementioned models are still FCN-based models decorated with attention modules. More recently, the success of transformers in natural language processing has inspired researchers to treat computer vision tasks as sequence-to-sequence tasks and to build pure transformers for them. These methods usually deploy a transformer [18] to encode an image as a sequence of patches. ViT [19] is the first work built with a pure transformer for the image classification task, and it outperforms CNN-based architectures. Following the approach used for image classification, the semantic segmentation task is also regarded as a sequence-to-sequence prediction task. The transformer is proficient in modeling long-range dependencies in the data. SETR [20], Swin transformer [21] and BEiT [22] took full advantage of this ability and significantly surpassed the FCN-based models.

State-of-the-art models are computationally expensive. Many works focus on speeding up the inference of their models. ERFNet [23] reduced the resolution of the input image. BiSeNet [24] introduced a fast downsampling context path to extract semantic information and a simple spatial path to preserve spatial information. FastFCN [25] proposed the joint pyramid upsampling module to extract high-resolution feature maps.

In this paper, we propose a post-processing method for the semantic segmentation task as an exploration of a new idea. First, we count how frequently pairs of adjacent connected components co-occur in the training set and build a relevancy matrix. The class of the central connected component is highly correlated with the categories of the surrounding area, so we obtain prior probabilities from the relevancy matrix. Then, we propose two post-processing methods for semantic image segmentation and choose between them based on the average confidence of the central connected component. Recent deep learning methods have focused on improving the prediction model itself. In contrast, our two post-processing methods improve the original results of a prediction model, which is the novelty of our approach. The proposed post-processing improves the mean IoU of UPerNet50 [26], OCRNet [27] and SETR-MLA on the ADE20K [28] validation set with single-scale inference from 40.7%, 43% and 48.64% to 42.07%, 44.09% and 49.09%, respectively, and the mean IoU of PSPNet, DeepLabV3 and OCRNet on the COCO-Stuff [29] validation set with single-scale inference from 37.26%, 37.3% and 39.5% to 38.93%, 38.95% and 40.63%, respectively.

In practical applications such as autonomous driving, most inputs are street-view images with few categories, and the proposed post-processing has little effect. In scenarios such as unmanned aerial vehicle navigation, objective factors such as lighting, shooting angle and weather cause the captured images to be partially blurred or to contain many categories. For such complex images, the post-processing method is expected to be very useful.

2. Method

In this section, two post-processing methods for deep learning-based semantic segmentation models are introduced.

Images in complex application scenarios usually contain many objects. The categories of objects that appear in images taken from similar scenes are highly correlated. For example, there are beds, pillows and bedside tables in a bedroom, and tables and cutlery in a dining room. On roads, we can see cars, buildings and traffic signs. Under normal circumstances, cars, traffic signs, grass, etc., do not appear around a bed, whereas pillows and quilts are very likely to appear on a bed. Therefore, the relationship between two classes may be close or distant. This is not significant for datasets such as Cityscapes [30] that focus on a single scene. However, for datasets such as ADE20K that are collected from multiple scenes, the relevancy between classes A and B may differ greatly from the relevancy between classes A and C.

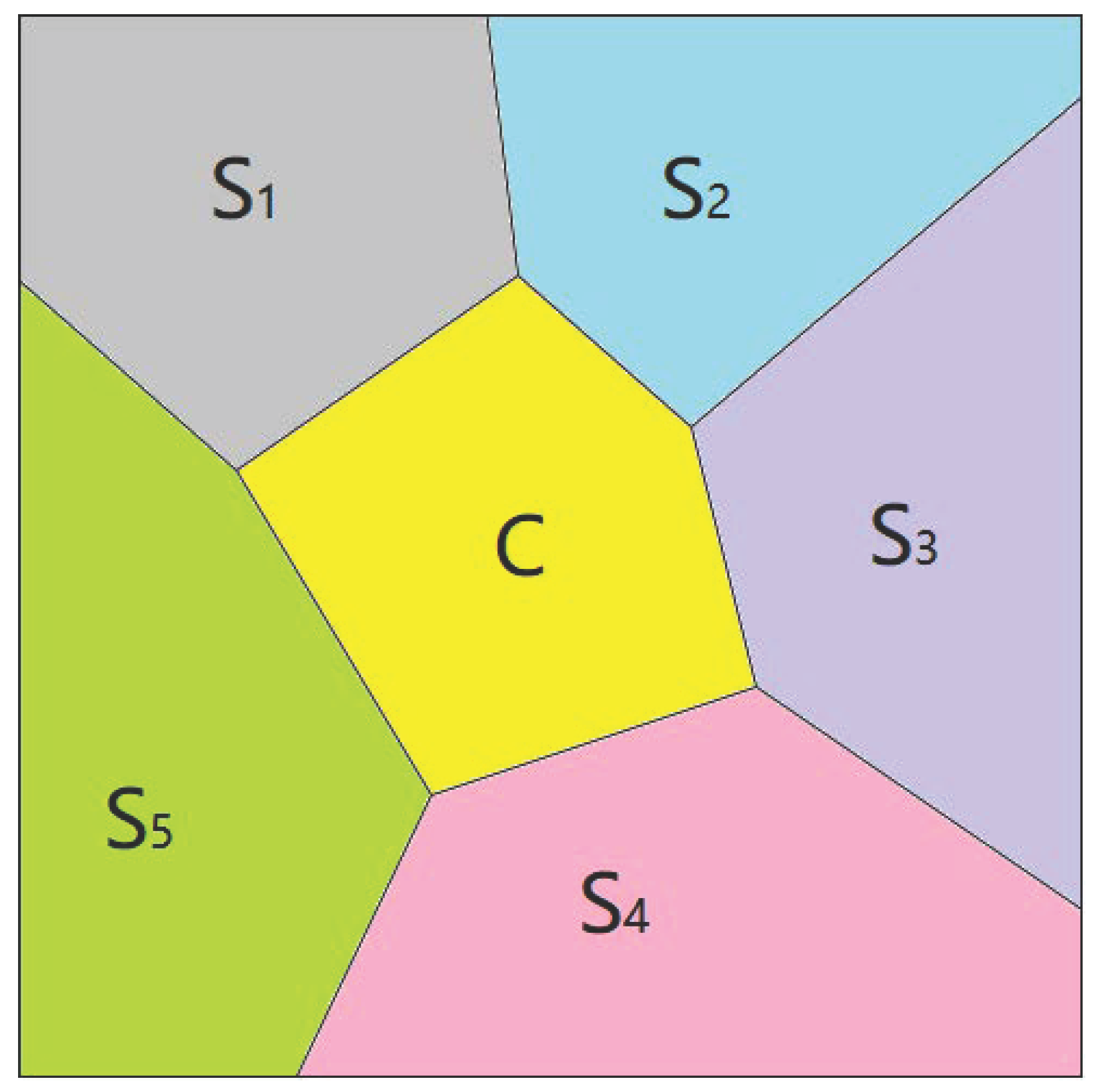

Each annotation in a semantic segmentation dataset is a matrix in which each element is a number representing a category. The interior of a thing or a patch of stuff is filled with the same number, which makes it a connected component. As shown in Figure 1, each thing or patch of stuff is surrounded by other connected components. As mentioned before, connected components of class B may often appear around connected components of class A, while connected components of class C do not. Therefore, we count how often any two adjacent connected components appear together in the annotations of the training dataset to measure the distance between any two classes. We denote the frequency of objects belonging to class $j$ appearing adjacent to objects belonging to class $i$ as $f_{ij}$. Frequencies between some categories are shown in Table 1. If region $S$ is adjacent to region $C$, then we approximate the probability that $C$ belongs to category $j$ under the condition that $S$ is known to belong to category $i$ as $P(C = j \mid S = i) \approx f_{ij}$.

In line with common sense, the class most closely related to wall is floor, and the class least closely related to wall is lake, which never appeared next to wall in the training set. Ceiling and wall share the closest connection. The closeness between many class pairs is 0, such as building and bed, or road and cabinet. Therefore, if a model correctly infers a region of an image to be road, then inferring a region surrounded by that road to be a cabinet is probably wrong. This paper is inspired by such common-sense observations.
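The counting step described above can be sketched in plain Python. This is a minimal, dependency-free illustration rather than the authors' implementation: the function names, the use of 4-connectivity, the choice to count each adjacent component pair once per image, and the row normalization are all assumptions.

```python
from collections import deque


def label_components(annotation):
    """Label 4-connected regions of equal class id.

    Returns (labels, classes), where labels[y][x] is a component id and
    classes[c] is the class id of component c.
    """
    h, w = len(annotation), len(annotation[0])
    labels = [[-1] * w for _ in range(h)]
    classes = []
    for y in range(h):
        for x in range(w):
            if labels[y][x] != -1:
                continue
            comp = len(classes)
            classes.append(annotation[y][x])
            labels[y][x] = comp
            queue = deque([(y, x)])
            while queue:  # breadth-first flood fill
                cy, cx = queue.popleft()
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if (0 <= ny < h and 0 <= nx < w and labels[ny][nx] == -1
                            and annotation[ny][nx] == annotation[cy][cx]):
                        labels[ny][nx] = comp
                        queue.append((ny, nx))
    return labels, classes


def adjacent_pairs(labels):
    """Unordered pairs (a, b), a < b, of component ids that share an edge."""
    h, w = len(labels), len(labels[0])
    pairs = set()
    for y in range(h):
        for x in range(w):
            for ny, nx in ((y + 1, x), (y, x + 1)):
                if ny < h and nx < w and labels[ny][nx] != labels[y][x]:
                    a, b = labels[y][x], labels[ny][nx]
                    pairs.add((min(a, b), max(a, b)))
    return pairs


def relevancy_matrix(annotations, num_classes):
    """Count adjacent component pairs per class pair, then row-normalize."""
    counts = [[0] * num_classes for _ in range(num_classes)]
    for ann in annotations:
        labels, classes = label_components(ann)
        for a, b in adjacent_pairs(labels):
            ci, cj = classes[a], classes[b]
            counts[ci][cj] += 1
            counts[cj][ci] += 1
    freq = []
    for row in counts:
        total = sum(row)
        # Assumption: frequencies are normalized per row; rows with no
        # neighbors stay all-zero.
        freq.append([c / total if total else 0.0 for c in row])
    return counts, freq


# Two toy annotations with three classes (0, 1, 2).
toy = [
    [[0, 0, 1],
     [0, 2, 1],
     [0, 2, 2]],
    [[0, 0],
     [1, 1]],
]
counts, freq = relevancy_matrix(toy, num_classes=3)
print(counts)  # [[0, 2, 1], [2, 0, 1], [1, 1, 0]]
```

With 4-connectivity, two adjacent components always belong to different classes, so the diagonal of the matrix stays at zero in this sketch.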

In the semantic segmentation task, a model needs to localize and classify. Popular deep learning methods design various network architectures to extract features. Regardless of their structural differences, all of these networks generate feature maps with as many channels as there are categories. The feature maps are then fed into a softmax layer, and the class with the highest confidence is chosen as the prediction for each pixel. The wrong inferences mentioned earlier result from misclassification by the model. In this paper, we address only classification, not localization, and propose two post-processing methods to correct such misclassifications. For each connected component, the categories of all surrounding connected components affect the classification of the region, so our proposed post-processing methods operate on connected components. As shown in Figure 2, we denote the central connected component as $C$ and the $i$th surrounding connected component as $S_i$.

We first calculate the average confidence for each class over all pixels in region $C$:

$$\bar{p}_k = \frac{1}{n} \sum_{i=1}^{n} p_{i,k},$$

where $p_{i,k}$ is the predicted confidence that pixel $i$ belongs to class $k$ and $n$ is the number of pixels in region $C$. The class with the highest average confidence is the class that the model predicted for region $C$:

$$\hat{c} = \underset{k \in \{1, \dots, K\}}{\arg\max}\; \bar{p}_k,$$

where $K$ is the number of categories in the dataset. If the highest average confidence of a connected component is below a predefined threshold, the model's prediction for this region is not confident enough. These are the connected components that we consider in need of processing.
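The averaging and thresholding step can be illustrated with a short Python sketch. The function names and the representation of a region as a list of per-pixel softmax vectors are assumptions made for illustration only.

```python
def average_confidence(pixel_probs):
    """Mean softmax confidence per class over the pixels of one region.

    pixel_probs: one list of class confidences per pixel in the region.
    """
    n = len(pixel_probs)
    num_classes = len(pixel_probs[0])
    return [sum(p[k] for p in pixel_probs) / n for k in range(num_classes)]


def predicted_class(avg_conf):
    """Index of the class with the highest average confidence."""
    return max(range(len(avg_conf)), key=lambda k: avg_conf[k])


def needs_processing(avg_conf, threshold):
    """True if the region's top average confidence is below the threshold."""
    return max(avg_conf) < threshold


# A two-pixel region with three classes.
region = [[0.5, 0.25, 0.25], [0.75, 0.125, 0.125]]
avg = average_confidence(region)
print(avg)                         # [0.625, 0.1875, 0.1875]
print(predicted_class(avg))        # 0
print(needs_processing(avg, 0.7))  # True
```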

The first post-processing method is as follows. We use the frequencies as the relevancy matrix of all classes. Each category in $S$ has a relevancy vector with the other classes. We sum the relevancy vectors of all adjacent connected components to obtain the reference $\mathbf{R}$ for the central connected component in which we are interested:

$$\mathbf{R} = \sum_{j \in S} \mathbf{f}_j,$$

where $\mathbf{f}_j$ is the frequency vector of the $j$th surrounding class with the other classes, and $S$ is the set of classes to which the connected components around the central component belong. The highest-scored class in the reference is the class to which the region most likely belongs, and the lowest-scored class is the least likely. If we denote the $k$th highest score in $\mathbf{R}$ as $r$, then the set of the top $k$ scored classes, denoted as $\mathcal{K}$, can be expressed as:

$$\mathcal{K} = \{\, c \mid R_c \ge r \,\},$$

where $R_c$ is the score of class $c$ in $\mathbf{R}$.

We assume that the right class for region $C$ is in $\mathcal{K}$, the set of the top $k$ scored classes. Therefore, we keep the top $k$ scored classes and discard the rest. If the class that the deep learning semantic segmentation model predicted for region $C$ is not in $\mathcal{K}$, then we change the classification result and choose the class with the highest average confidence among the top $k$ scored classes as the classification result for the region. To this end, we set the average confidence of classes not in $\mathcal{K}$ to 0:

$$\bar{p}'_c = \begin{cases} \bar{p}_c, & c \in \mathcal{K}, \\ 0, & \text{otherwise}, \end{cases}$$

where $\bar{p}_c$ is the average confidence of class $c$ over region $C$. At this point, the class with the highest average confidence in $\bar{p}'$ is the label that we assign to region $C$.

The model predicts the same category for every pixel in region C. However, once we discard the categories that are not in the top-k set, the highest-confidence category is no longer guaranteed to be the same for every pixel in region C. If we applied the post-processing method to each pixel in the central region independently, without considering the integrity of the region, it would split the connected component. Therefore, we select the category with the highest average confidence over all pixels in the region as the result.
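The first method can be sketched as follows. This is a hedged illustration, not the authors' code: `first_method`, its argument names and the toy relevancy values are hypothetical, and ties at the k-th score are kept, so the retained set may hold more than k classes.

```python
def first_method(avg_conf, relevancy, surrounding_classes, k):
    """Relabel a region using the top-k classes of the reference vector.

    avg_conf:            average confidence per class for the central region
    relevancy:           relevancy[j][c] = frequency of class c next to class j
    surrounding_classes: classes of the components around the central region
    k:                   number of top-scored classes to keep
    """
    num_classes = len(avg_conf)
    surrounding = set(surrounding_classes)  # S is a set of classes

    # Reference vector: sum of the relevancy rows of the surrounding classes.
    reference = [sum(relevancy[j][c] for j in surrounding)
                 for c in range(num_classes)]

    # r is the k-th highest score; keep every class scoring at least r.
    r = sorted(reference, reverse=True)[k - 1]
    top_k = {c for c in range(num_classes) if reference[c] >= r}

    # Zero the average confidence of discarded classes, then take the argmax.
    masked = [avg_conf[c] if c in top_k else 0.0 for c in range(num_classes)]
    return max(range(num_classes), key=lambda c: masked[c])


# Toy example with classes 0=wall, 1=floor, 2=bed, 3=road (made-up values).
relevancy = [
    [0.0, 0.6, 0.4, 0.0],
    [0.6, 0.0, 0.4, 0.0],
    [0.5, 0.5, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
avg_conf = [0.1, 0.2, 0.3, 0.4]  # the model's top class is road (3)
print(first_method(avg_conf, relevancy, surrounding_classes=[0, 1], k=2))  # 2
```

In this toy case the region is surrounded by wall and floor, road never co-occurs with either, and the label changes from road to bed, the most confident of the retained classes.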

The second post-processing method is as follows. Suppose the number of connected components around the central connected component is $n$. If objects of class A never appear next to objects of these $n$ categories in images from the training set, then the probability that the central connected component belongs to class A is approximately 0. However, this condition is too strict, as few connected components meet it. Therefore, we relax it. If, among the $n$ surrounding categories, more than $k$ have a relevancy of 0 with class A, then we conclude that the category of the central connected component cannot be class A. In addition, we treat frequencies close to 0 as 0. We set the average confidence of each class that satisfies the condition to 0, which means that we discard the class:

$$\bar{p}'_c = \begin{cases} 0, & \left|\{\, j \in S : f_{jc} = 0 \,\}\right| > k, \\ \bar{p}_c, & \text{otherwise}, \end{cases} \qquad c = 1, \dots, N,$$

where $\bar{p}_c$ is the average confidence of class $c$ over the central region, $f_{jc}$ is the relevancy between surrounding class $j$ and class $c$, $S$ is the set of surrounding classes, and $N$ is the number of categories annotated in the dataset. Similar to the first post-processing method, we select the category with the highest average confidence among the remaining classes as the classification result of the central region.

3. Experiments

In this section, we present the experimental results for the two post-processing methods. To make the evaluation more thorough, we selected two public datasets, and we introduce the first one here. The ADE20K semantic segmentation dataset contains 150 categories and is divided into 20,210, 2000 and 3000 images for training, validation and testing, respectively. ADE20K is one of the most widely used datasets in image semantic segmentation and one of the most difficult publicly available ones; it contains many categories and is very close to the scene distribution of the real world. We conduct several experiments on the logits output by three different deep learning models: UPerNet, OCRNet and SETR. UPerNet aims to parse visual concepts across scene categories, objects, parts, materials and textures from images. UPerNet50 achieved 40.7% mean IoU on the ADE20K validation set with single-scale inference. OCRNet added a cross-attention module to its encoder-decoder architecture and achieved 43% mean IoU on the ADE20K validation set with single-scale inference. SETR introduced a sequence-to-sequence prediction framework as an alternative perspective for semantic segmentation. SETR-MLA achieved 48.64% mean IoU on the ADE20K validation set with single-scale inference.

The results of the ablation study on OCRNet are listed in Table 2 to illustrate the effects of, and the difference between, the first and second methods. If the average confidence of a patch is below 0.35, the model is very unconfident in its classification result. In this case, the first candidate obtained from the reference vector has a very high probability of being the correct category, which is why the first method is effective. When we apply the first method to all connected components, however, the result decreases. When the threshold is set between 0.35 and 0.7, the second method is more effective than the first and improves the result to 43.86%. When the threshold is between 0.7 and 1, the second method has no influence on the result, whereas the first method degrades it because it imposes a stronger condition than the second. Overall, we first calculate the average confidence of each connected component and then use it to decide which post-processing method to apply, if any.

The experimental results on three representative models are listed in Table 3. The original results of the three models are all from the implementations by [31]. The experiments in Table 2 show that the first method is more effective than the second when the threshold is below 0.35 and is harmful when the threshold is above 0.35. Accordingly, when the first method is applied to all connected components with an average confidence below 0.35 and all connected components with an average confidence above 0.35 are left unprocessed, the results of the three models reach 41.11%, 43.23% and 48.83%, respectively. If we apply the first method to connected components with an average confidence below 0.35, apply the second method to those with an average confidence between 0.35 and 0.7, and leave those with an average confidence above 0.7 unprocessed, the results of the three models improve to 42.07%, 44.09% and 49.09%, respectively. The combination of the two methods is thus more effective than either method alone.
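The confidence-based choice between the two methods can be sketched as a small dispatcher. The function and parameter names are hypothetical, the two methods are passed in as callables, and the handling of values exactly at 0.35 or 0.7 is an assumption, since the text does not specify the boundaries.

```python
def postprocess_region(avg_conf, first_method, second_method, low=0.35, high=0.7):
    """Pick a post-processing method from the region's top average confidence.

    avg_conf:      average confidence per class for the region
    first_method:  callable(avg_conf) -> class index, used below `low`
    second_method: callable(avg_conf) -> class index, used in [low, high)
    Regions at or above `high` keep the model's original prediction.
    """
    top = max(avg_conf)
    if top < low:
        return first_method(avg_conf)
    if top < high:
        return second_method(avg_conf)
    return max(range(len(avg_conf)), key=lambda c: avg_conf[c])


# Stub methods that return fixed labels, only to show which branch runs.
use_first = lambda conf: 10
use_second = lambda conf: 20

print(postprocess_region([0.3, 0.2, 0.1], use_first, use_second))  # 10
print(postprocess_region([0.5, 0.3, 0.2], use_first, use_second))  # 20
print(postprocess_region([0.1, 0.8, 0.1], use_first, use_second))  # 1
```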

To further verify the effectiveness of the two post-processing methods, we ran experiments on an additional dataset with two further models. The COCO-Stuff dataset is a challenging scene-parsing dataset that contains 171 semantic classes. Its training set and test set consist of 9K and 1K images, respectively. OCRNet was used on this dataset as well, so that the difficulty of COCO-Stuff and ADE20K can be compared. In addition, PSPNet and DeepLabV3, two classical image semantic segmentation models, were selected for the experiment. The core contribution of PSPNet is the pyramid pooling module, which integrates context information at different scales, improves the ability to obtain global feature information and increases the expressiveness of the model. DeepLabV3 proposed the atrous spatial pyramid pooling module, which extracts convolutional features at different scales and encodes global image feature information to enhance semantic segmentation. Although both models were proposed in 2017, they remain appropriate for testing the effects of the post-processing methods.

The experimental results on the COCO-Stuff dataset are listed in Table 4. As the performance of OCRNet in Table 4 shows, the COCO-Stuff dataset is more challenging than the ADE20K dataset. After the two post-processing methods are combined, the results of the three models improve to 38.93%, 38.95% and 40.63%, respectively. Each improves by more than 1 percentage point, and the overall gains are higher than in the previous experiment. A comparison of Table 3 and Table 4 indicates that the post-processing method has a more significant effect on the more difficult dataset, which is in line with common sense.

The final result of the proposed post-processing method depends heavily on the original mIoU of the deep learning model. We selected several representative models for the experiments. Other models may be better suited to the post-processing method, that is, they may gain more from it. Because the experiments above already demonstrate the effectiveness of the post-processing method, we do not repeat them on further models. Based on the results of the two groups of experiments and on the principle behind the post-processing, we expect the method to yield larger gains on complex or blurry images in real application scenarios. Complex images generally include many categories with strong correlations between them, so post-processing should be more effective. Blurred images, which result from the shooting angle or other objective factors, contain unclear areas, and the gain from post-processing should be greater for such images.

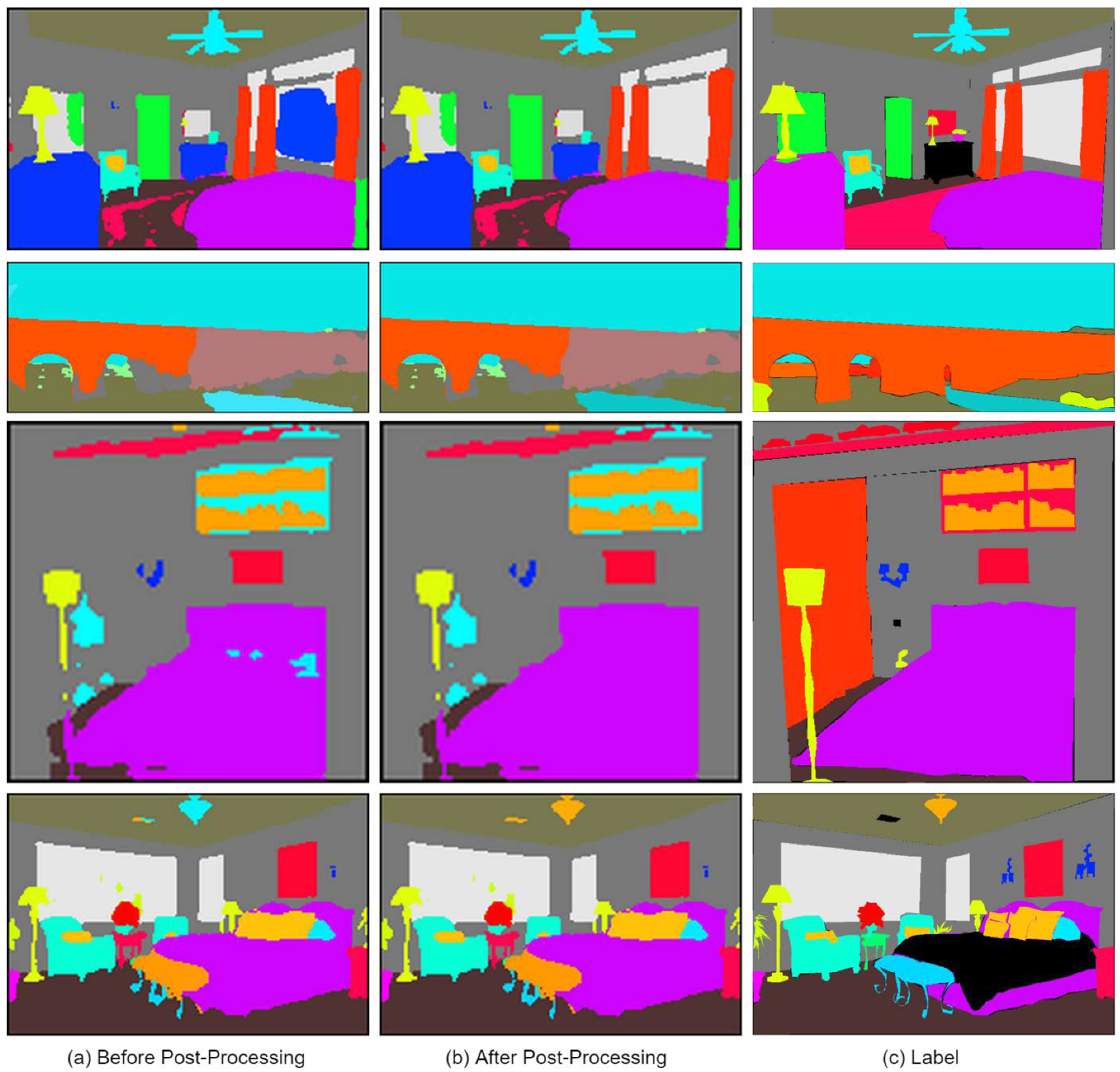

To visualize the effect of the post-processing method, Figure 3 compares some samples from the ADE20K dataset. In Figure 3, the first column shows the original prediction of the deep learning method, the second column shows the result after post-processing, and the third column shows the annotation. The window, river, bed and light in the examples are corrected by the post-processing method.

Specifically, in Figure 3, the large blue area on the window in the first image of the first row is caused by a wrong prediction of the deep learning model and is corrected in the second image. In the second row, the color of the river in the second image differs from that in the first image but is consistent with that in the third image, which requires careful observation. The three small connected areas on the bed in the first image of the third row and the light in the first image of the fourth row are clearly misclassified, and both are corrected by the proposed post-processing method.

4. Conclusions

This paper presents two new post-processing methods for the semantic segmentation task. In particular, we correct the wrong classification of a central connected component inferred by a deep learning-based semantic segmentation model according to the relationships of the central connected component with its surrounding connected components. We experiment with our proposed post-processing methods on the logits of several representative models. After post-processing, the results of these models have all been improved, which shows the effectiveness of our post-processing methods.

Deep learning methods rely on large amounts of labeled data, and the more data there is, the better the model tends to perform. However, labeling data is expensive, and these data-driven methods lack interpretability. The post-processing method proposed in this paper is based on common sense, so it is highly interpretable. Overall, our work not only improves the original prediction results of the deep learning model but also offers an interpretable explanation for the improvement. We hope that this new attempt will provide other researchers with new research perspectives.

Our methods also have limitations. On datasets with few categories, the post-processing method may not have an obvious effect: the fewer categories there are, the simpler the logical relationships between them, and the less useful the method becomes. As an extreme example, on a dataset with only two categories, the method will almost never help.