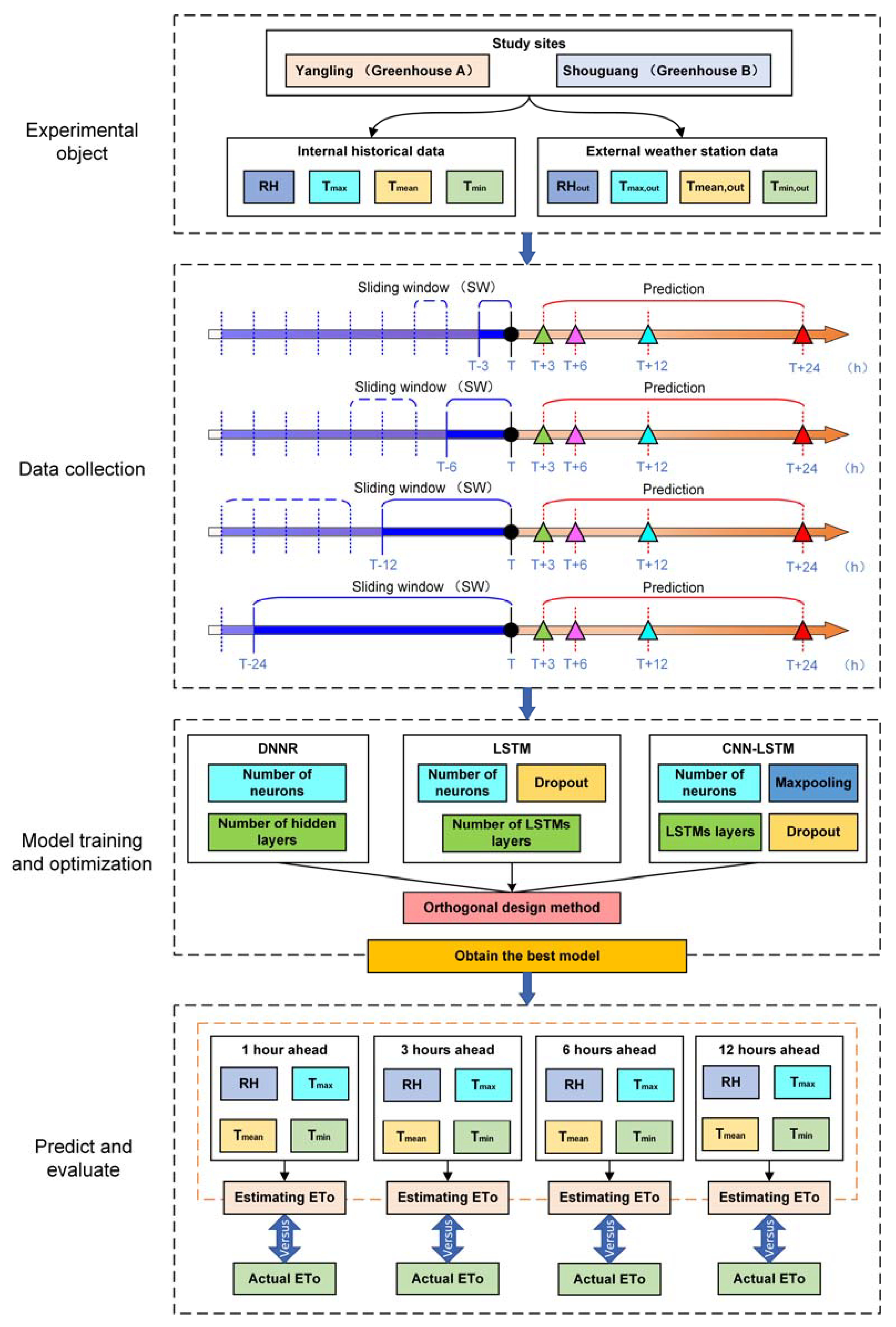

This section provides a comparison of the prediction performance for three models, optimal forecast results at four forecast time points, and daily ETo evaluated using the predicted environmental parameters.

4.1. Comparison of the Prediction Performance for Three Models

To verify the applicability of the three model types, nine DNNR models, nine LSTM models, and 18 CNN-LSTM models were each trained and tested using 16 training sets and their corresponding test sets. For each model type, the best-performing model for each training set and its corresponding test set is shown in Table 7. As the table shows, the best-performing DNNR model differs across the training and test sets, and no clear pattern emerges in the distribution of the best model over the 16 datasets. The same holds for the LSTM and CNN-LSTM models. For the same training and test set, the best-performing DNNR model also differs between humidity and temperature and between the two solar greenhouses; the same holds for the LSTM and CNN-LSTM models.

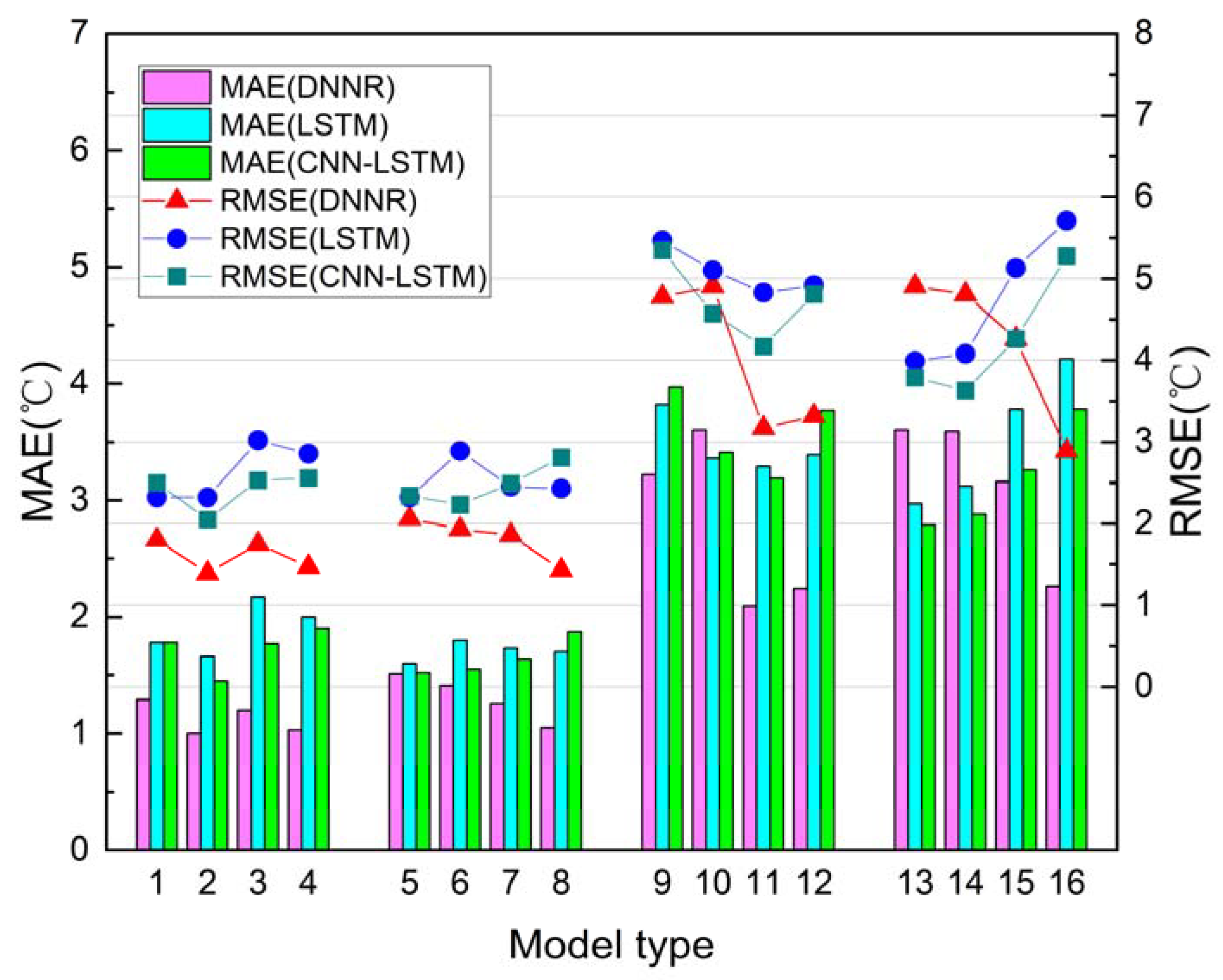

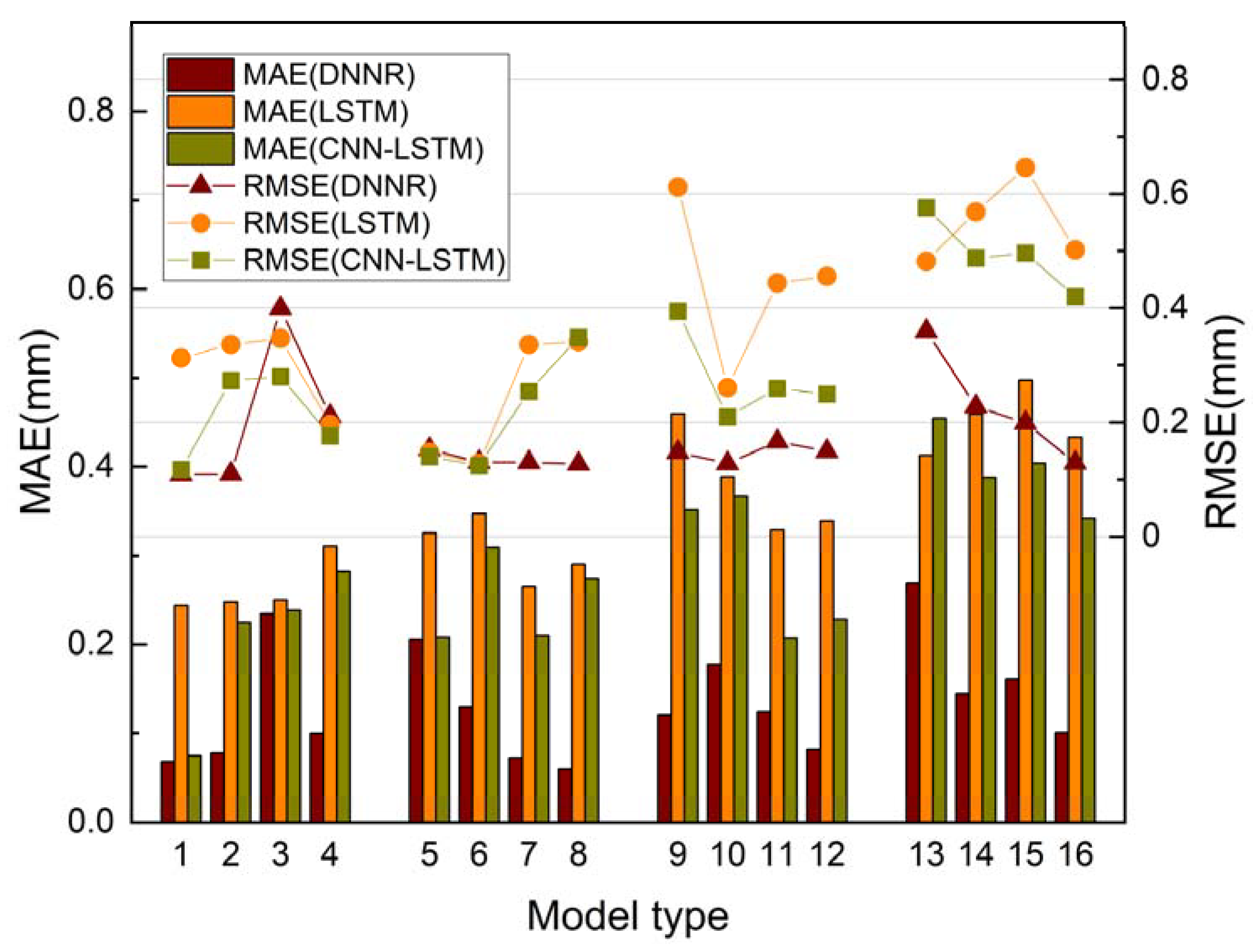

For the three types of prediction model for Greenhouse A humidity, the RMSE and MAE of the best experimental model on the 16 datasets are shown in Figure 9. For the T+3h, T+12h and T+24h test sets, the RMSE and MAE of the best LSTM and CNN-LSTM models increase with the training set time horizon, whereas those of the best DNNR models decrease. For the T+6h test set, the RMSE and MAE of the best LSTM and CNN-LSTM models decrease with the training set time horizon, whereas those of the best DNNR models increase. In general, the MAE and RMSE of the best models of all three types increase as the forecast time is extended, and DNNR performed best among the three models.
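For reference, the two error metrics compared above can be computed as in the following minimal sketch. It uses the standard definitions of RMSE and MAE; the function names and the sample values are illustrative assumptions and are not taken from the study's code or data.

```python
import math


def rmse(actual, predicted):
    """Root mean square error between two equal-length sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(p - a) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def mae(actual, predicted):
    """Mean absolute error between two equal-length sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - a) for a, p in zip(actual, predicted)) / len(actual)


# Illustrative humidity values in % (assumed, not data from this study).
actual_rh = [60.0, 62.0, 65.0, 70.0]
predicted_rh = [58.0, 63.0, 69.0, 70.0]

print("RMSE:", rmse(actual_rh, predicted_rh))
print("MAE:", mae(actual_rh, predicted_rh))
```

Because RMSE squares each error before averaging, it penalizes occasional large deviations more heavily than MAE, which is why the two metrics are reported side by side.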

For the three types of prediction model for Greenhouse A temperature, the RMSE and MAE of the best experimental model on the 16 datasets are shown in Figure 10. For the T+3h, T+6h and T+24h test sets, the RMSE and MAE of the best LSTM and CNN-LSTM models increase with the training set time horizon. For the T+12h test set, the RMSE and MAE of the best LSTM and CNN-LSTM models decrease with the training set time horizon. In contrast, for the T+3h, T+6h, T+12h and T+24h test sets, the RMSE and MAE of the best DNNR models decrease with the training set time horizon. In general, the MAE and RMSE of the best models of all three types again increase as the forecast time is extended, and DNNR again performed best among the three models.

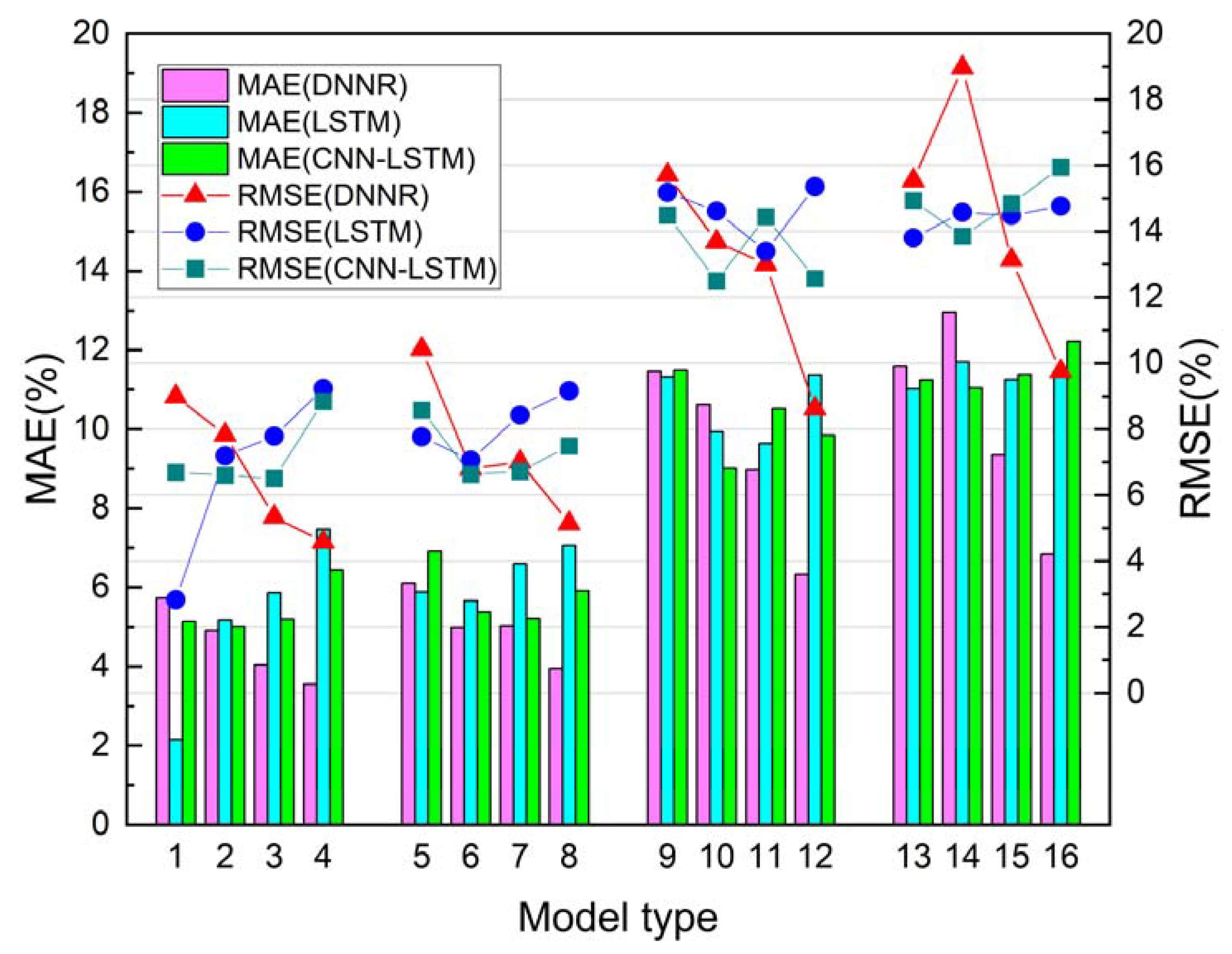

For the three types of prediction model for Greenhouse B humidity, the RMSE and MAE of the best experimental model on the 16 datasets are shown in Figure 11. For the T+3h, T+6h and T+24h test sets, the RMSE and MAE of the best LSTM and CNN-LSTM models increase with the training set time horizon. For the T+12h test set, the RMSE and MAE of the best LSTM and CNN-LSTM models decrease with the training set time horizon. In contrast, for the T+3h, T+6h, T+12h and T+24h test sets, the RMSE and MAE of the best DNNR models decrease with the training set time horizon. In general, the MAE and RMSE of the best models of all three types again increase as the forecast time is extended, and DNNR again performed best among the three models.

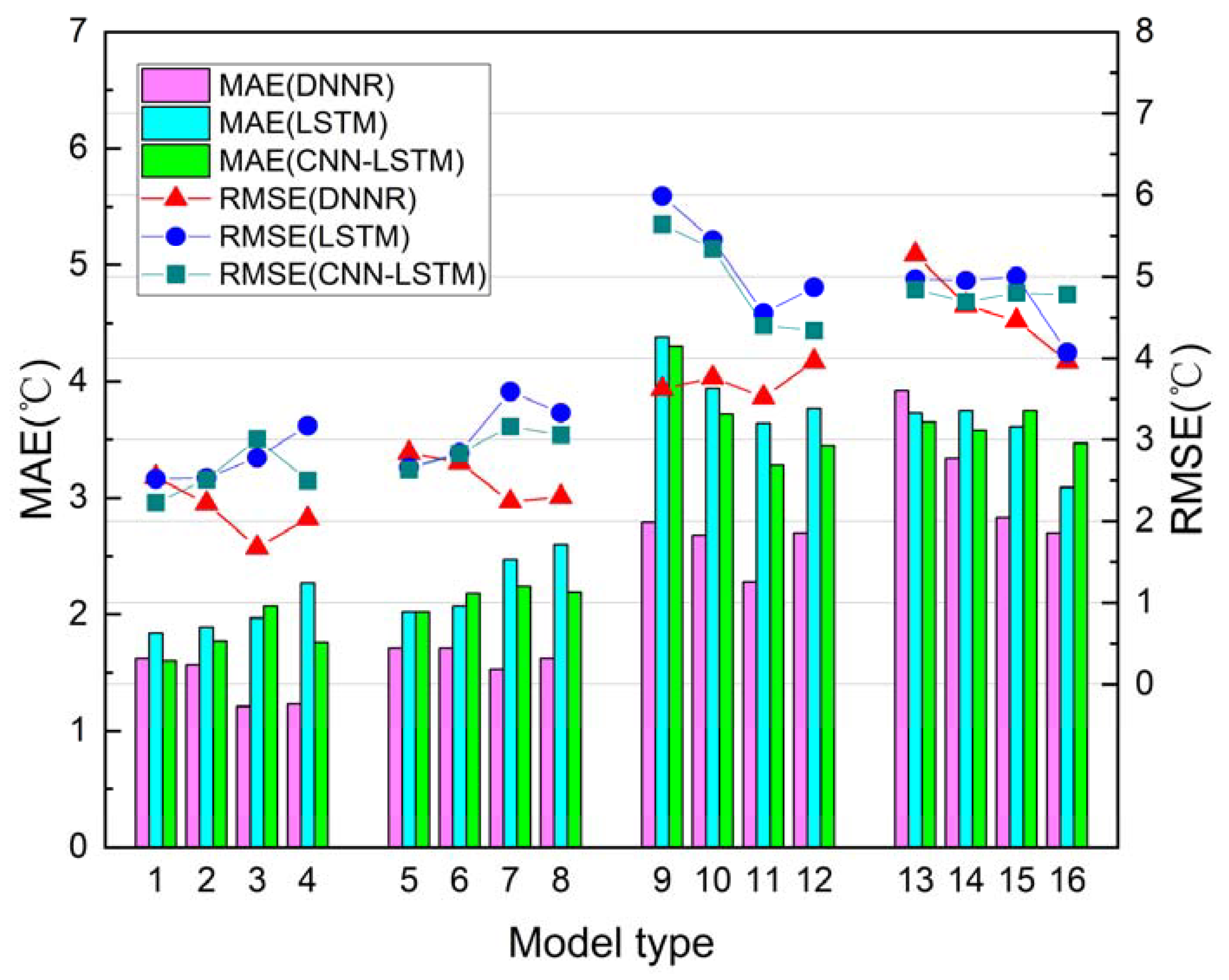

For the three types of prediction model for Greenhouse B temperature, the RMSE and MAE of the best experimental model on the 16 datasets are shown in Figure 12. For the T+3h and T+6h test sets, the RMSE and MAE of the best LSTM and CNN-LSTM models increase with the training set time horizon. For the T+12h and T+24h test sets, the RMSE and MAE of the best LSTM and CNN-LSTM models decrease with the training set time horizon. In contrast, for the T+3h, T+6h, T+12h and T+24h test sets, the RMSE and MAE of the best DNNR models decrease with the training set time horizon. For the T+12h test set, the RMSE and MAE of the best DNNR models increase with the training set time horizon. In general, the MAE and RMSE of the best models of all three types again increase as the forecast time is extended, and DNNR again performed best among the three models.

4.2. Optimal Forecast Results at Four Forecast Time Points

For each forecast time point (T+3h, T+6h, T+12h and T+24h), the predictive performance of all experimental models was compared, and the best model at each forecast time point is shown in Table 8. For Greenhouse A temperature prediction, the best models of the three model types are similar at each forecast time point. For the other parameters in the two greenhouses, the best models of the three model types are not exactly the same at each forecast time point.
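The selection of a best model at each forecast time point can be sketched as follows. This is a minimal illustration that assumes the model with the lowest RMSE is taken as the best; the text does not state the exact selection criterion, and the model labels and RMSE values below are hypothetical.

```python
def best_model_per_horizon(scores):
    """Map each forecast time point to its lowest-RMSE model.

    scores: {forecast_time: {model_name: rmse}}
    returns: {forecast_time: (model_name, rmse)}
    """
    best = {}
    for horizon, by_model in scores.items():
        name = min(by_model, key=by_model.get)  # assumed criterion: minimum RMSE
        best[horizon] = (name, by_model[name])
    return best


# Hypothetical model labels and RMSE values, for illustration only.
example_scores = {
    "T+3h": {"DNNR-1": 2.1, "LSTM-4": 3.0, "CNN-LSTM-7": 3.4},
    "T+12h": {"DNNR-1": 6.2, "LSTM-4": 5.8, "CNN-LSTM-7": 6.0},
}

best = best_model_per_horizon(example_scores)
for horizon, (name, score) in best.items():
    print(horizon, name, score)
```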

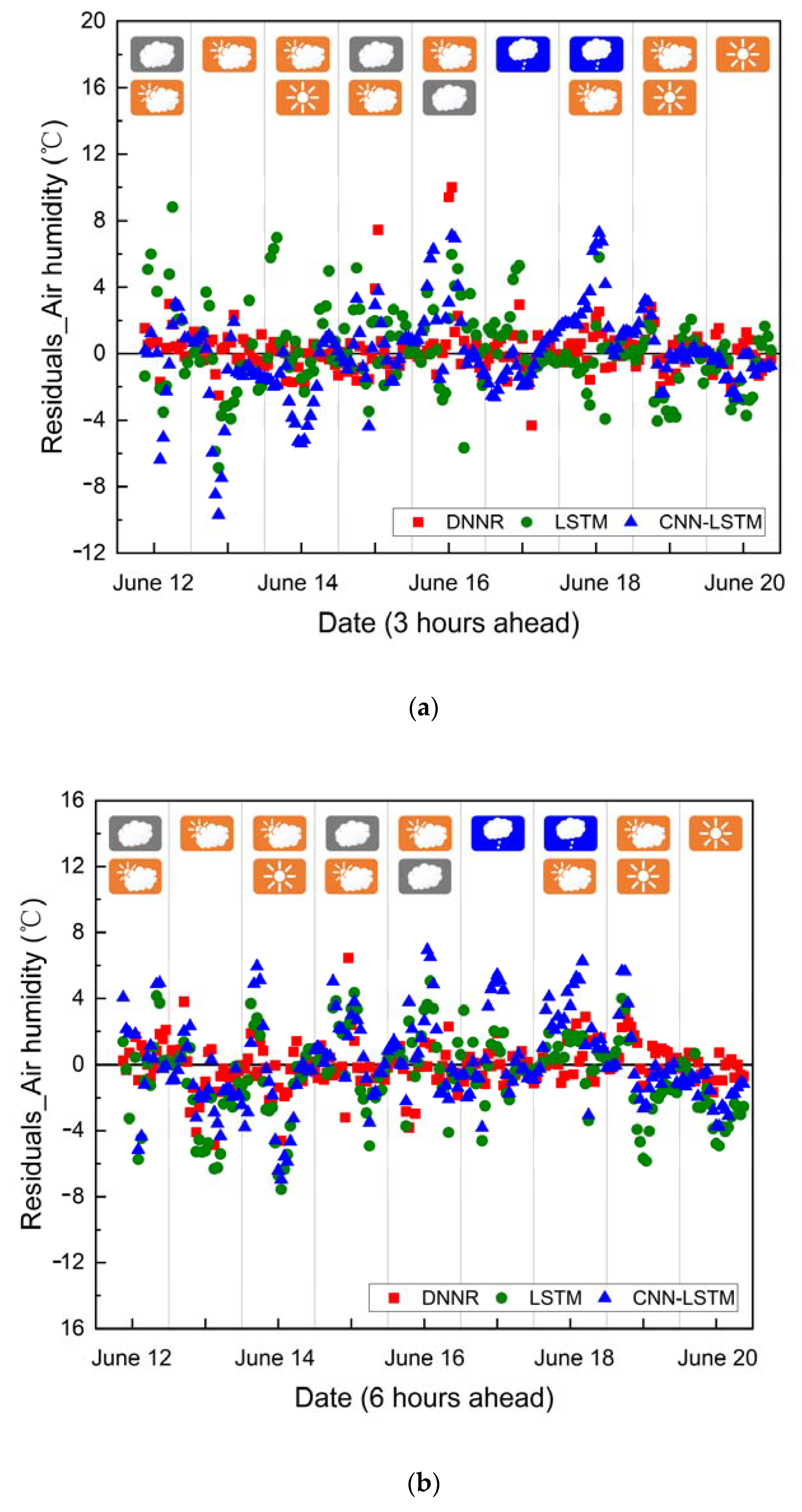

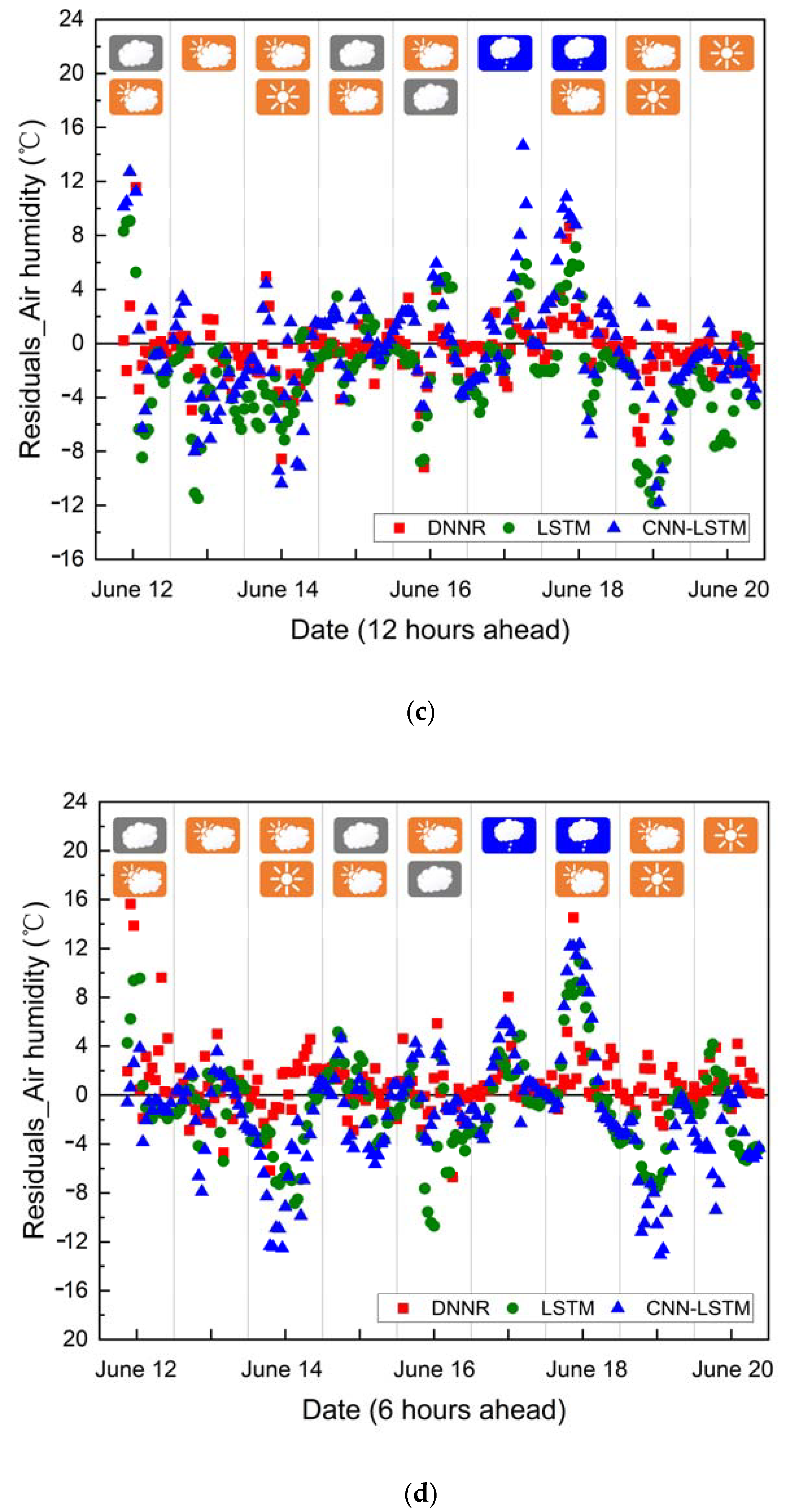

For Greenhouse A humidity, the statistics of the error distribution between the predicted value of the best model and the actual value at the four forecast time points are shown in Figure 13. The errors of the DNNR model predictions at the four forecast time points are within [−8, 10], [−12, 13], [−44, 49] and [−19, 26] %, respectively. The errors of the LSTM model predictions are within [−19, 8], [−22, 13], [−26, 45] and [−41, 34] %, respectively. The errors of the CNN-LSTM model predictions are within [−21, 16], [−25, 18], [−28, 41] and [−31, 34] %, respectively. The errors of the three models increase with the forecast time. Specifically, at T+12h the errors of the LSTM and CNN-LSTM models are smaller than those of the DNNR model, whereas at T+3h, T+6h and T+24h the errors of the DNNR model are smaller than those of the LSTM and CNN-LSTM models.
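Error intervals of the kind reported above can be derived from paired predicted and actual series as in this minimal sketch. It assumes the error is defined as predicted minus actual, which the text does not state explicitly; the bin width and sample values are likewise illustrative.

```python
import math
from collections import Counter


def error_summary(actual, predicted, bin_width=5.0):
    """Return (min_error, max_error, histogram) for predicted - actual.

    The histogram maps the lower edge of each bin to the number of errors
    falling in [edge, edge + bin_width).
    """
    errors = [p - a for a, p in zip(actual, predicted)]  # assumed sign convention
    bins = Counter(math.floor(e / bin_width) * bin_width for e in errors)
    return min(errors), max(errors), dict(sorted(bins.items()))


# Illustrative humidity values in % (assumed, not data from this study).
actual_rh = [60.0, 62.0, 65.0, 70.0]
predicted_rh = [58.0, 63.0, 69.0, 70.0]

low, high, histogram = error_summary(actual_rh, predicted_rh)
print(f"errors within [{low}, {high}]", histogram)
```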

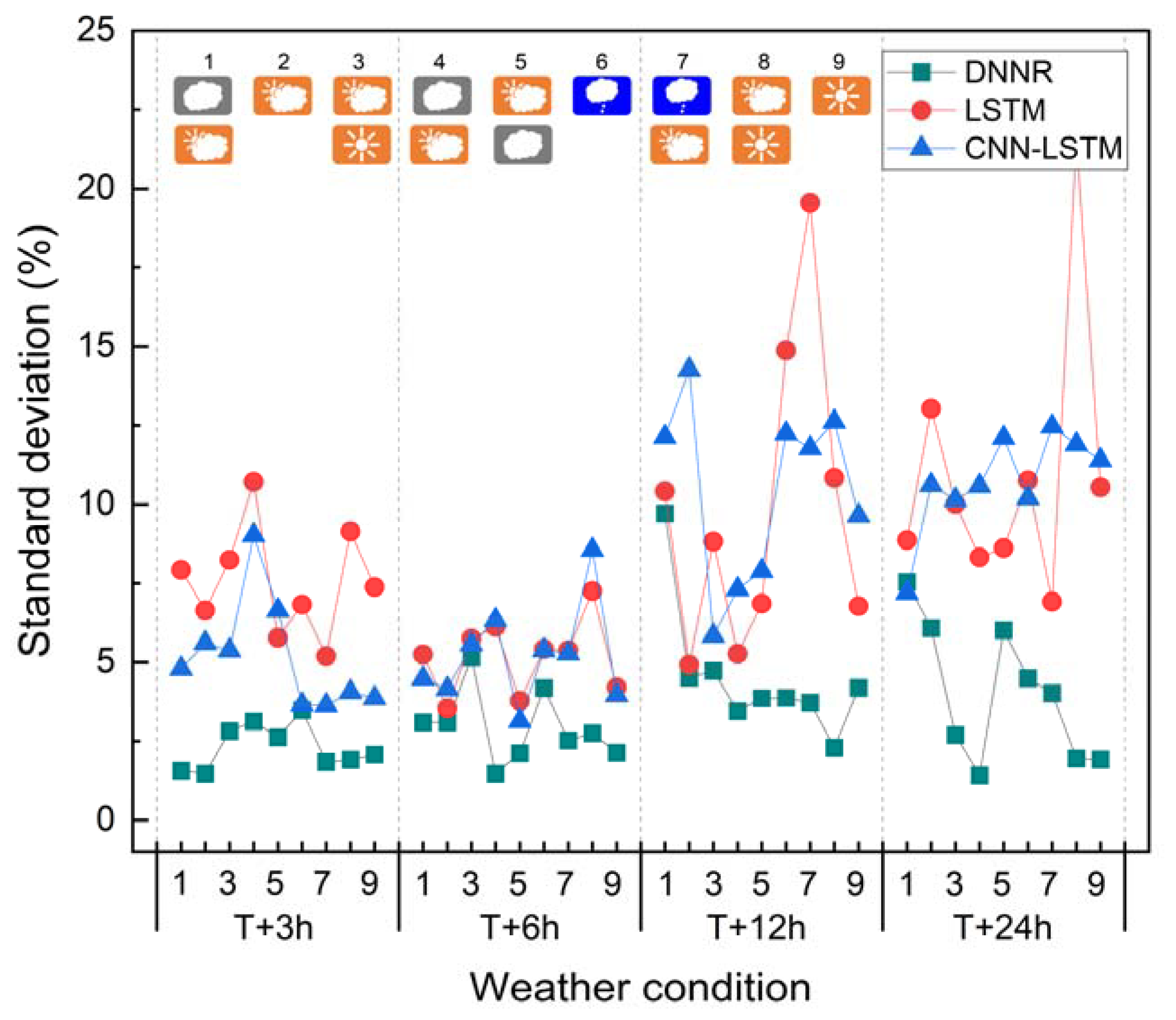

To analyze the correlation between the errors of the three forecasting models and the weather conditions, the ST of the errors of the three models for Greenhouse A humidity under different weather conditions is shown in Figure 14. Among the three models, the ST of the DNNR model is the smallest under different weather conditions. The ST of all three models increases with the training set time horizon and is largest at the T+12h time point under different weather conditions. This means that DNNR is the best predictive model among the three, but that the DNNR model is not suitable for predicting parameters 12 h ahead.
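A per-weather dispersion statistic of this kind can be sketched as below. The sketch assumes that ST denotes the standard deviation of the prediction errors and uses the population form; both are assumptions, as are the weather labels and sample values.

```python
import statistics
from collections import defaultdict


def error_std_by_weather(records):
    """Group prediction errors by weather label and return their spread.

    records: iterable of (weather_label, actual, predicted)
    returns: {weather_label: population standard deviation of errors}
    """
    grouped = defaultdict(list)
    for weather, actual, predicted in records:
        grouped[weather].append(predicted - actual)
    # Assumption: ST is a standard deviation; pstdev is the population form.
    return {w: statistics.pstdev(errs) for w, errs in grouped.items()}


# Hypothetical weather labels and values, for illustration only.
records = [
    ("sunny", 20.0, 21.0),
    ("sunny", 22.0, 21.0),
    ("sunny", 24.0, 25.0),
    ("sunny", 26.0, 25.0),
    ("cloudy", 18.0, 20.0),
    ("cloudy", 19.0, 17.0),
]

st_by_weather = error_std_by_weather(records)
print(st_by_weather)
```

Replacing `statistics.pstdev` with `statistics.stdev` would give the sample standard deviation instead, which is slightly larger for small groups.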

For Greenhouse A temperature, the statistics of the error distribution between the predicted value of the best model and the actual value at the four forecast time points are shown in Figure 15. The errors of the DNNR model predictions at the four forecast time points are within [−5, 8], [−6, 6], [−8, 12] and [−7, 7] °C, respectively. The errors of the LSTM model predictions are within [−6, 9], [−6, 10], [−18, 17] and [−13, 11] °C, respectively. The errors of the CNN-LSTM model predictions are within [−4, 7], [−3, 9], [−14, 12] and [−15, 8] °C, respectively. The errors of the three models increase with the forecast time. Specifically, at T+12h the errors of the LSTM and CNN-LSTM models are again smaller than those of the DNNR model, whereas at T+3h, T+6h and T+24h the errors of the DNNR model are smaller than those of the LSTM and CNN-LSTM models.

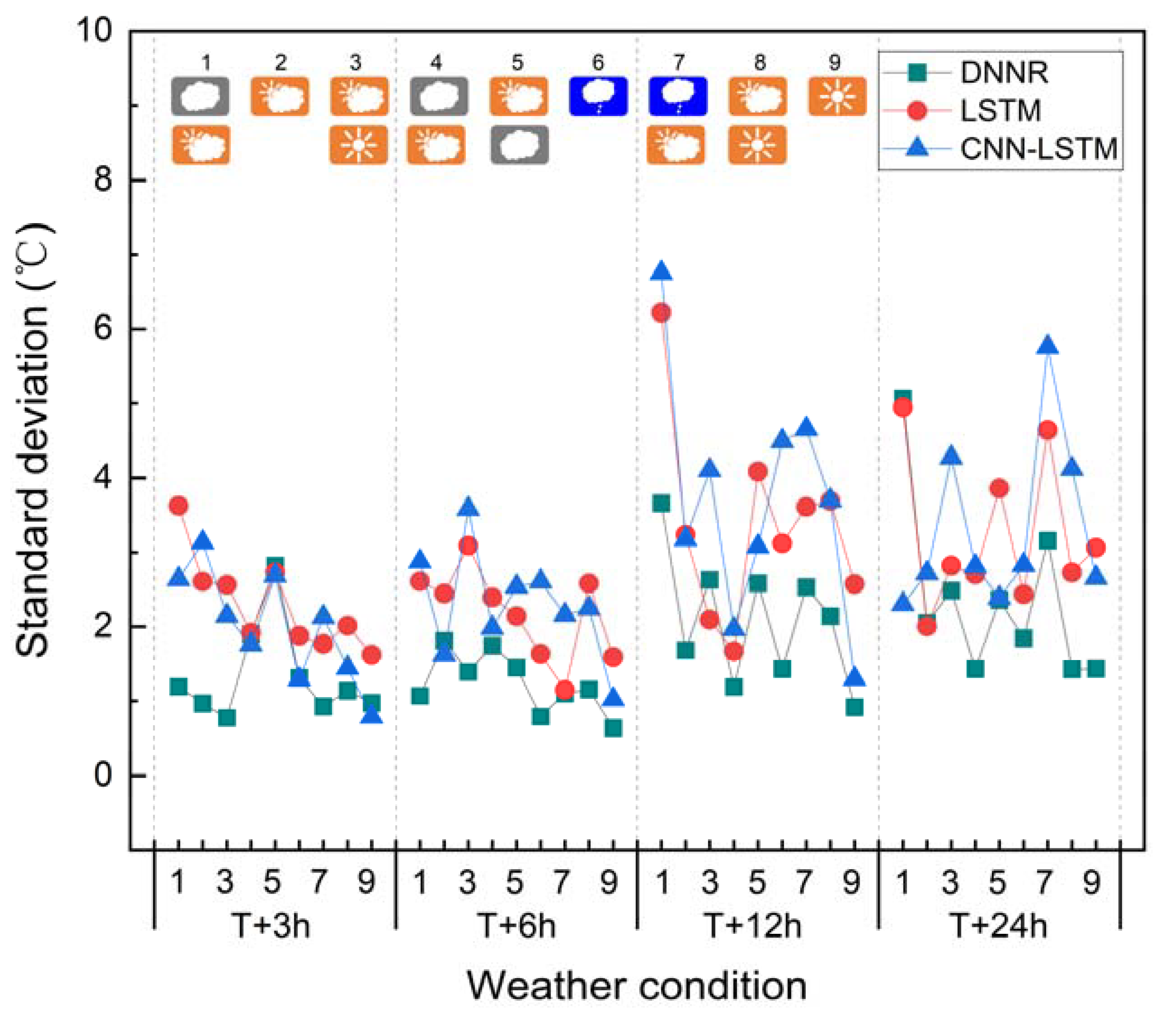

The ST of the errors of the three models under different weather conditions is shown in Figure 16. Among the three models, the ST of the DNNR model is again the smallest under different weather conditions. The ST of all three models increases with the training set time horizon and is largest at the T+12h time point under different weather conditions. This means that DNNR is the best predictive model among the three, but that the DNNR model is not suitable for predicting parameters 12 h ahead.

For Greenhouse B humidity, the statistics of the error distribution between the predicted value of the best model and the actual value at the four forecast time points are shown in Figure 17. The errors of the DNNR model predictions at the four forecast time points are within [−11, 6], [−13, 10], [−19, 23] and [−25, 27] %, respectively. The errors of the LSTM model predictions are within [−29, 17], [−21, 9], [−51, 23] and [−37, 41] %, respectively. The errors of the CNN-LSTM model predictions are within [−28, 21], [−26, 9], [−44, 24] and [−37, 39] %, respectively. The errors of the three models increase with the forecast time. Specifically, at T+12h the errors of the LSTM and CNN-LSTM models are smaller than those of the DNNR model, whereas at T+3h, T+6h and T+24h the errors of the DNNR model are smaller than those of the LSTM and CNN-LSTM models.

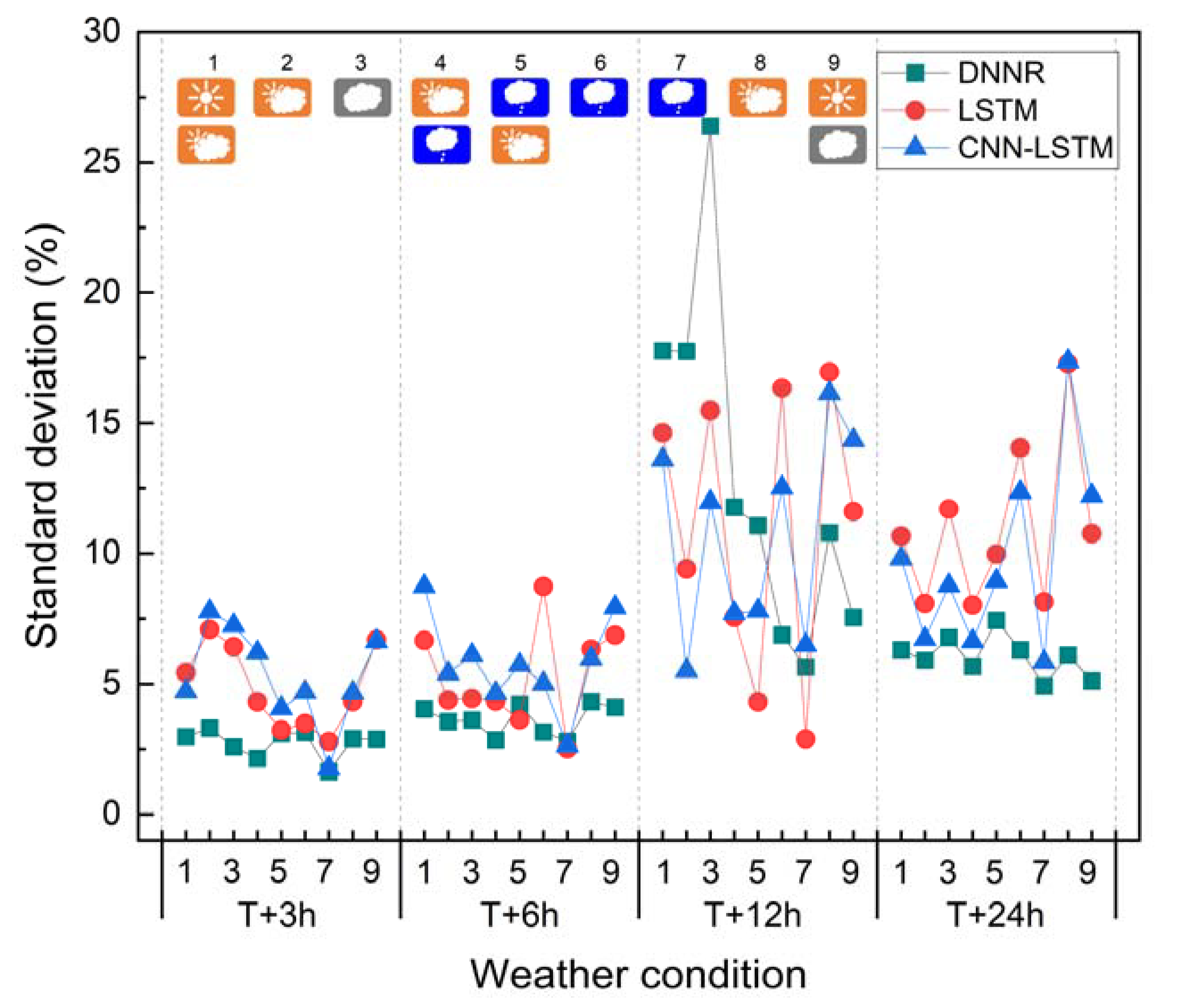

The ST of the errors of the three models under different weather conditions is shown in Figure 18. Among the three models, the ST of the DNNR model is the smallest under different weather conditions. The ST of all three models increases with the training set time horizon and is largest at the T+12h time point under different weather conditions. This means that DNNR is the best predictive model among the three, but that the DNNR model is not suitable for predicting parameters 12 h ahead.

For Greenhouse B temperature, the statistics of the error distribution between the predicted value of the best model and the actual value at the four forecast time points are shown in Figure 19. The errors of the DNNR model predictions at the four forecast time points are within [−5, 10], [−5, 7], [−10, 12] and [−7, 16] °C, respectively. The errors of the LSTM model predictions are within [−7, 9], [−8, 6], [−12, 10] and [−11, 13] °C, respectively. The errors of the CNN-LSTM model predictions are within [−10, 8], [−7, 7], [−12, 15] and [−14, 13] °C, respectively. The errors of the three models increase with the forecast time. Specifically, at T+12h the errors of the LSTM and CNN-LSTM models are again smaller than those of the DNNR model, whereas at T+3h, T+6h and T+24h the errors of the DNNR model are smaller than those of the LSTM and CNN-LSTM models.

The ST of the errors of the three models under different weather conditions is shown in Figure 20. Among the three models, the ST of the DNNR model is again the smallest under different weather conditions. The ST of all three models increases with the training set time horizon and is largest at the T+12h time point under different weather conditions. This means that DNNR is the best predictive model among the three, but that the DNNR model is not suitable for predicting parameters 12 h ahead.

In both greenhouses, the dispersion between the predicted and actual values was larger for air humidity than for air temperature, because the range of air humidity values in the greenhouses was greater than the range of air temperature values.

4.3. Daily ETo Evaluated Using the Predicted Environmental Parameters

For the two solar greenhouses, the maximum and minimum air temperatures were each predicted using the optimized temperature prediction model. Net radiation in the two greenhouses was predicted using the sliding mean filtering method. These data were then incorporated into the PM formula: the predicted hourly ETo was calculated from the predicted maximum, minimum, and average air temperature, net radiation and RH, while the actual hourly ETo was calculated from the monitored environmental parameters. Daily ETo was obtained by accumulating hourly ETo.
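Two of the steps described above, the sliding mean filter and the accumulation of hourly ETo into daily ETo, can be sketched as follows. This is an illustration under stated assumptions: a trailing window is used for the filter, the window length is arbitrary, and the hourly ETo values are placeholders. The PM formula itself is not reproduced here.

```python
def sliding_mean(values, window=3):
    """Trailing moving average (assumed form of the sliding mean filter).

    The first window - 1 outputs average only the points available so far.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    smoothed = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1):i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


def daily_eto(hourly_eto, hours_per_day=24):
    """Sum consecutive blocks of hourly ETo into daily totals."""
    if len(hourly_eto) % hours_per_day:
        raise ValueError("expected a whole number of days of hourly values")
    return [
        sum(hourly_eto[i:i + hours_per_day])
        for i in range(0, len(hourly_eto), hours_per_day)
    ]


# Placeholder values, for illustration only (not data from this study).
smoothed = sliding_mean([1.0, 2.0, 3.0, 4.0], window=2)
daily = daily_eto([0.1] * 24 + [0.2] * 24)
print(smoothed, daily)
```

A trailing window uses only past observations, which keeps the filter usable for forecasting; a centered window would require future values.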

For the three models, the RMSE and MAE between the predicted daily ETo and the actual daily ETo for Greenhouse A at the four forecast time points are shown in Figure 21. For the T+3h, T+12h and T+24h test sets, the RMSE and MAE of the best LSTM and CNN-LSTM models increase with the training set time horizon, and for the T+6h test set, the RMSE and MAE of the best LSTM and CNN-LSTM models decrease with the training set time horizon. In contrast, at all four forecast time points, the RMSE and MAE of the best DNNR models decrease with the training set time horizon. In general, DNNR performed best among the three models.

The RMSE and MAE between the predicted daily ETo and the actual daily ETo for Greenhouse B at the four forecast time points are shown in Figure 22. For the T+6h and T+24h test sets, the RMSE and MAE of the best LSTM and CNN-LSTM models increase with the training set time horizon, and for the T+6h and T+12h test sets, the RMSE and MAE of the best LSTM and CNN-LSTM models decrease with the training set time horizon. In contrast, at all four forecast time points, the RMSE and MAE of the best DNNR models decrease with the training set time horizon. In general, DNNR performed best among the three models.