1. Introduction

Emotions are an important factor in decision-making, interaction, and perception. Human emotions are diverse, and they have therefore been defined differently from different perspectives. Emotions are the expressed internal states of an individual that are evoked as a reaction to, and an interaction with, certain stimuli. The emotional states of an individual are affected by the person’s intentions, norms, background, cognitive capabilities, physical or psychological states, action tendencies, environmental conditions, appraisals, expressive behavior, and subjective feelings [1].

Tomkins conducted the first study demonstrating that facial expressions were reliably associated with certain emotional states [2]. Later, Tomkins recruited Paul Ekman and Carroll Izard to work on this phenomenon. They performed comprehensive studies of facial expressions and emotions, which are known as the “universality studies”. Ekman proposed universal facial expressions (happiness, sadness, disgust, anger, fear, and neutral) and claimed that these were present in every human, regardless of culture; these expressions were later accepted as universal expressions [3].

Facial expressions are powerful tools to analyze emotions due to the expressive behavior of the face, with the possibility of capturing rich content in multi-dimensional views. Humans can use the movement of facial features to express their desired inner feelings and mental states.

Emotions play a vital role in all human activities. Recent developments in neurology have shown that there is a connection between emotions, cognition, and audio functions [4]. Emotions have a strong impact on learning and thus play a vital role in education [5,6,7]. Emotions affect not only traditional classroom learning but also other types of learning, such as language learning, skills learning, and ethics learning.

Humans possess many different emotions, each of which plays a different role in different situations; it can therefore be said that there is no limit to the emotions affecting a classroom environment. An educator needs to analyze students’ facial expressions, with a deep understanding of the surrounding environment during learning, in order to improve the learning process [8,9].

Research studies have described the relationship between students’ emotions, as explicitly expressed through the face, and their academic performance, and have demonstrated the importance of considering the quadratic relationship between students’ positive emotions during learning and their academic grades [10,11,12,13,14,15,16]. Good learning can have a positive impact on the academic grades of the learner [17], while difficulty in learning can lower a student’s academic grades and can lead to dropout [18].

Automatic Emotion Recognition System

The most significant components of human emotions are provided as input to machines so that they can automatically detect the emotions a human expresses through facial features. Advances in automatic facial expression recognition (FER) systems can greatly increase the amount of data that can be processed, and real-time FER can benefit society. FER can be applied in various areas, such as the diagnosis of mental disease, the detection of human social/physiological interaction, criminology, security systems, customer satisfaction, and human–computer interaction. Many computing techniques are used to automate facial expression recognition [19]. The most widely implemented and successful technique is “deep learning” [20,21,22,23,24]. Deep learning is a subfield of machine learning that uses algorithms inspired by the structure and function of the human brain, known as artificial neural networks. Jeremy Howard, in his 2014 TEDx Brussels talk, stated that computers trained using deep learning techniques have been able to achieve some effective computing processes that are similar to human emotion recognition and that are necessary for machines to better serve their purpose [25].

Facial expression recognition systems use either static images or videos. Most applications of facial expression recognition systems use image datasets [26,27]. It has been observed that an image dataset requires a trained person to label the images with discrete emotions and that, moreover, these discrete emotions do not cover the micro-expressions of the individual [28]. That research argues that analyzing facial expressions using a huge image dataset with unnecessary input dimensions can decrease the efficiency of the FER system. Similarly, other studies conclude that images taken from different angles, at low resolution, or with noisy backgrounds can be problematic for automatic facial expression recognition [29,30,31,32,33]. Other research has argued that static images are not sufficient for automatic facial expression recognition, and the authors conducted studies using recorded video of the classroom to improve the accuracy of FER [34,35,36,37].

One study divided its proposed emotion detection system into positive and negative emotions [38]; the authors used two positive facial expressions (happiness and surprise) and five negative expressions (anger, contempt, disgust, fear, and sadness) to analyze the impact of negative and positive emotions on students’ learning. Another study analyzed students’ facial expressions in the classroom using deep learning, focusing on the concentration level and interest of learners as indicated by eye and head movement [39].

Many deep learning techniques are used in building automatic facial expression recognition systems. The convolutional neural network (CNN) is one of the most efficient and widely used models for developing an automatic emotion recognition system [40,41,42,43,44]. Table 1 below shows the existing facial expression recognition systems.

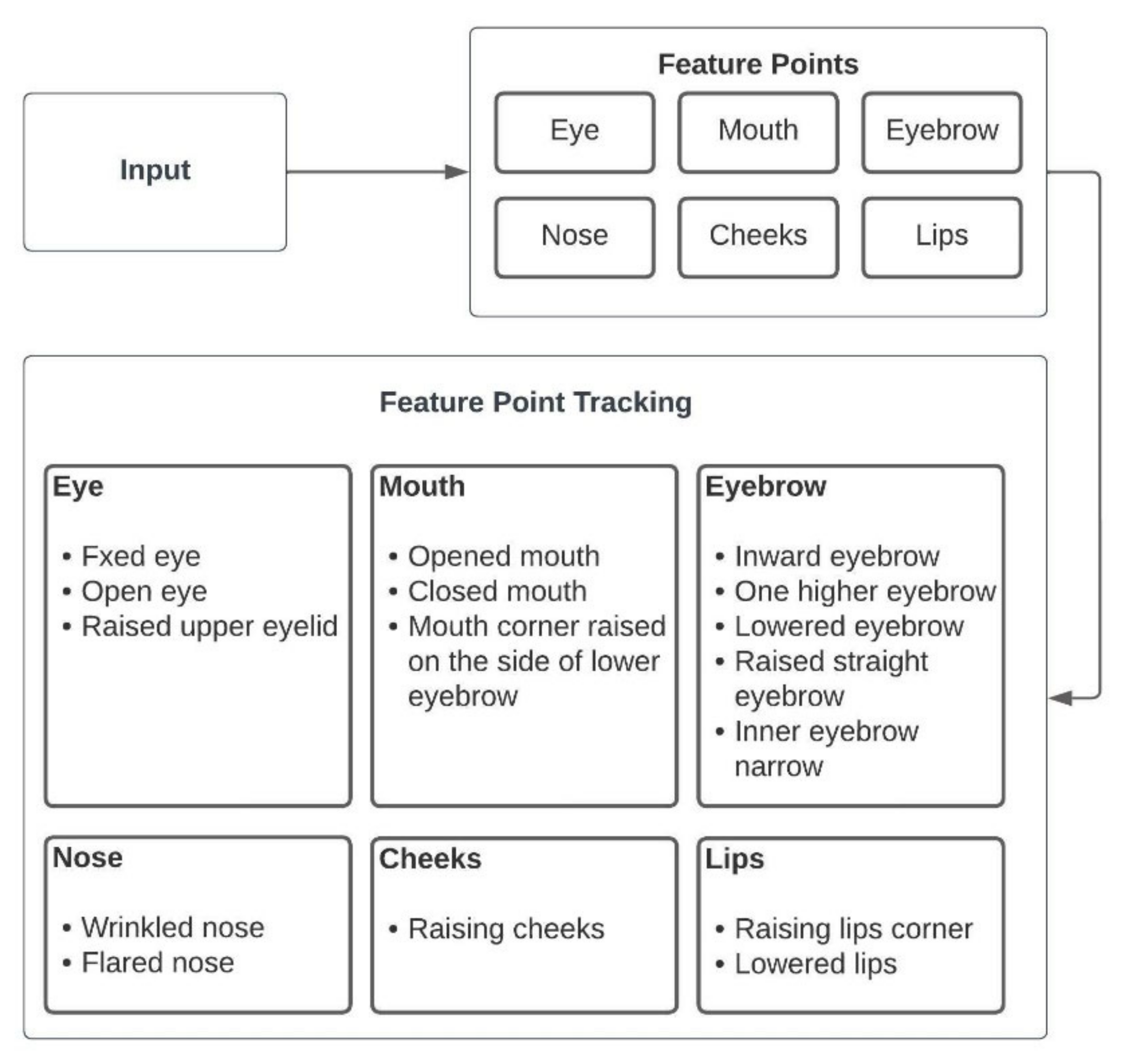

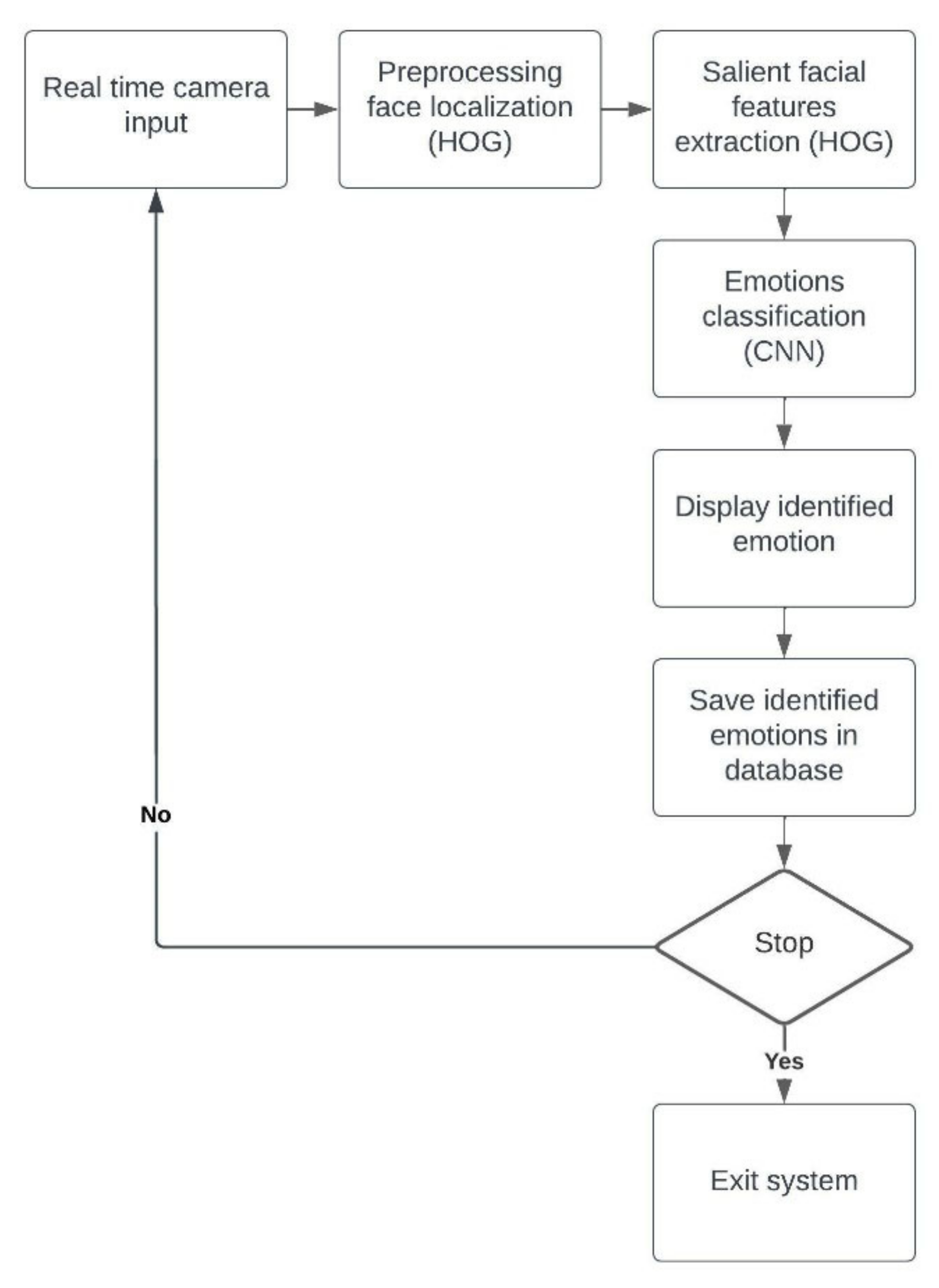

Figure 1 above shows the general composition of the existing FER systems. It has been observed that the performance of automatic facial expression recognition is determined by feature selection and by the movements of the facial features that are associated with particular expressions [65,66]. Research on the selection and extraction of facial features for automatic facial expression recognition systems has emphasized the selection of geometric facial features such as the eyebrows and eyes, and has used local binary patterns. A modified hidden Markov model has been used to mitigate changes in the distances between local facial landmarks [67,68,69,70,71,72,73].

It has been observed that even a very minor misalignment can displace the sub-regional location of a facial feature and lead to misclassification. Research has focused on the selection of patches of the facial features and has demonstrated high accuracy for automatic facial expression recognition. We have therefore selected salient facial features in our proposed automatic facial expression recognition system and have used the histogram of oriented gradients (HOG) technique to accurately identify the facial features.
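To make the HOG idea concrete, the following is a minimal sketch of the orientation histogram for a single image cell. It is not the implementation used in this study: the function name `hog_cell_histogram`, the 9 unsigned orientation bins, the clamped central differences and the L2 normalization are all illustrative assumptions, and a full HOG descriptor additionally groups cells into overlapping blocks.

```python
import math

def hog_cell_histogram(cell, n_bins=9):
    """Simplified HOG histogram for one grayscale cell (hypothetical helper).

    `cell` is a list of rows of pixel intensities. Each pixel votes for the
    bin of its unsigned gradient orientation (0-180 degrees), weighted by
    its gradient magnitude. The histogram is then L2-normalized.
    """
    rows, cols = len(cell), len(cell[0])
    bin_width = 180.0 / n_bins
    hist = [0.0] * n_bins
    for r in range(rows):
        for c in range(cols):
            # Central differences, clamped at the cell border.
            gx = cell[r][min(c + 1, cols - 1)] - cell[r][max(c - 1, 0)]
            gy = cell[min(r + 1, rows - 1)][c] - cell[max(r - 1, 0)][c]
            magnitude = math.hypot(gx, gy)
            if magnitude == 0:
                continue
            # Unsigned orientation: opposite directions share a bin.
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % n_bins] += magnitude
    norm = math.sqrt(sum(v * v for v in hist)) + 1e-9
    return [v / norm for v in hist]

# Toy 4x4 patch with a vertical edge: all gradient energy is horizontal.
vertical_edge = [[0, 0, 10, 10] for _ in range(4)]
print(hog_cell_histogram(vertical_edge))
```

For a patch such as an eyebrow or mouth corner, histograms of this kind computed over neighboring cells would be concatenated into the feature vector passed to the classifier.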

3. Results

The study focused on analyzing the relationship between facial expressions and learning. The data show that the facial expressions of students change frequently (within seconds) during a lecture, which indicates that learning and facial expressions are directly related to each other.

The six selected facial expressions (dissatisfied, sadness, happiness, fear, satisfied and concentration) of 100 students were analyzed against five selected variables (duration of lecture, difficulty of subject, gender, seating position in classroom and department).

The facial expressions were first analyzed against the variable “gender”. Box’s test of the homogeneity of the covariance matrices (Box’s M = 135, p > 0.05) and Levene’s test of the homogeneity (equality) of variance for the selected facial expressions (p < 0.05) were used to check the homogeneity of the data. A MANOVA was then carried out on the students’ facial expressions for the variable “gender”. There was a significant difference between the facial expressions of male and female students when the six selected facial expressions were considered collectively during classroom learning: Wilks’ Lambda (Λ) = 0.926, F(6,506) = 6.694, p < 0.001, partial η2 = 0.074. A separate ANOVA was conducted for each dependent variable, with each ANOVA evaluated at an alpha level of 0.0083 after the Bonferroni adjustment. The variables showed the following effects:

Happy had no significant effect, F(1,511) = 0.003, partial η2 < 0.001

Sad had no significant effect, F(1,511) = 4.483, partial η2 = 0.009

Satisfied had a significant effect, F(1,511) = 15.370, partial η2 = 0.029

Dissatisfied had a significant effect, F(1,511) = 23.026, partial η2 = 0.043

Concentration did not have a significant effect, F(1,511) = 4.176, partial η2 = 0.008

Fear did not have a significant effect, F(1,511) = 3.446, partial η2 = 0.007
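The effect sizes in this list can be cross-checked from the reported F statistics, because for a single effect partial η2 = (F × df_effect) / (F × df_effect + df_error). The sketch below applies this standard identity to the F values listed above. The function names are illustrative, and the family-wise alpha of 0.05 is an assumption inferred from the reported per-test level of 0.0083 for six tests.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

def bonferroni_alpha(family_alpha, n_tests):
    """Per-test significance level under the Bonferroni adjustment."""
    return family_alpha / n_tests

# F values as reported for the variable "gender", each with df = (1, 511).
reported_f = {
    "Happy": 0.003,
    "Sad": 4.483,
    "Satisfied": 15.370,
    "Dissatisfied": 23.026,
    "Concentration": 4.176,
    "Fear": 3.446,
}

# Assumed family-wise alpha of 0.05 spread over the six follow-up ANOVAs.
alpha_per_test = bonferroni_alpha(0.05, len(reported_f))
print(f"per-test alpha = {alpha_per_test:.4f}")

for expression, f_value in reported_f.items():
    eta = partial_eta_squared(f_value, df_effect=1, df_error=511)
    print(f"{expression}: partial eta squared = {eta:.3f}")
```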

Similarly, a MANOVA was used to test the effect of the variable “department” on the six selected facial expressions mentioned above. Box’s test of the homogeneity of the covariance matrices (Box’s M = 141, p < 0.05) and Levene’s test of the homogeneity (equality) of variance for the selected facial expressions of the students (p < 0.05) were used to check the homogeneity of the data. The result, Wilks’ Lambda (Λ) = 0.972, F(18,1426) = 0.792, p = 0.712, partial η2 = 0.009, shows that the variable “department” did not have a significant impact on the facial expressions of the students. The statistical analysis is shown in Table 2.
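The multivariate effect sizes reported with Wilks’ Lambda follow the relation partial η2 = 1 − Λ^(1/s), where s is the smaller of the number of dependent variables and the hypothesis degrees of freedom. The sketch below applies this relation to the values reported for “gender” and “department”. The function name is illustrative, and the hypothesis degrees of freedom (1 for gender, 3 for department) are assumptions inferred from the reported F degrees of freedom, not values stated in the text.

```python
def wilks_partial_eta_squared(wilks_lambda, n_dependent, df_hypothesis):
    """Multivariate partial eta squared from Wilks' Lambda.

    s = min(number of dependent variables, hypothesis degrees of freedom).
    """
    s = min(n_dependent, df_hypothesis)
    return 1.0 - wilks_lambda ** (1.0 / s)

# Gender: two groups, so the hypothesis df is assumed to be 1.
gender = wilks_partial_eta_squared(0.926, n_dependent=6, df_hypothesis=1)

# Department: F(18, 1426) with six expressions suggests a hypothesis df of 3
# (an assumption; the number of departments is not given in this section).
department = wilks_partial_eta_squared(0.972, n_dependent=6, df_hypothesis=3)

print(f"gender: {gender:.3f}, department: {department:.3f}")
```

Under these assumptions the sketch yields roughly 0.074 for “gender” and 0.009 for “department”.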

“Seating position” was another selected variable. It was analyzed against the selected facial expressions to determine its effect. The covariance matrix homogeneity (Box’s M = 240, p < 0.05), the variance homogeneity for the selected facial expressions (p < 0.05) and the MANOVA result (Wilks’ Lambda (Λ) = 0.877, F(6,506) = 11.741, p < 0.05) indicate an effect of seating position on the students’ facial expressions. Furthermore, the seating position was divided into three rows (first row, middle row and last row), and the facial expressions were analyzed by row with the MANOVA test in order to understand the effect more clearly. For the students in the first row, Wilks’ Lambda (Λ) = 0.969, F(6,506) = 2.681, p < 0.05, partial η2 = 0.031, and the ANOVA applied to each variable gave p < 0.05, indicating a strong impact. Furthermore, in order to identify the most frequent facial expressions among the students sitting in the front row, the mean and standard deviation were examined; they showed that those students displayed satisfaction, concentration and happiness. The results are given in Table 3.

The ANOVA conducted for the students sitting in the middle row shows that they were satisfied, as shown in Table 4.

Similarly, the students sitting in the last row were evaluated using ANOVA to determine the impact of each variable. The results in Table 5 show that the students in the last row seemed dissatisfied.

The variable “lecture duration” was considered and its effect on the students’ facial expressions was analyzed. The lecture duration was divided into three sections: 15, 30 and 45 min. The 15 min lecture included an overview of the topic, the 30 min lecture included the definitions and details of the topic, and the 45 min lecture included the background, definitions and comprehensive detail of the topic. The mean, standard deviation and chart given in Table 6 show the facial expression that each individual displayed for the longest time in each division of the “lecture duration”.

Satisfaction, concentration and happiness were high in the 15 min lecture, while satisfaction and concentration were high in the 30 min lecture. Dissatisfaction and sadness were found in the 45 min lecture.

Another selected variable, “difficulty of subject”, was analyzed against the selected facial expressions. The difficulty level of a subject was determined from the students’ grade performance: the more failures or the lower the grades that students attained, the more difficult the subject was considered to be. Theory of automata, multivariable calculus, general science and modern programming languages were considered the subjects with the higher difficulty level. The results show that the difficulty level of the subject has a large impact on the facial expressions of the students.

Table 7 below shows that the mean value of the dissatisfied expression (x = 0.78) was higher in the subjects selected as having a higher difficulty level. Similarly, the mean of the sad expression was x = 0.63, while the mean of the satisfied expression was lower (x = 0.19), indicating that the difficulty level of the subjects had a great impact on the facial expressions of the students.

Moreover, the selected subjects with a higher difficulty level were analyzed against the duration of the lecture. The values indicate that the dissatisfied facial expression was at its peak when the lecture duration was 15 min and was lower at 30 min and 45 min, as shown in Table 7.

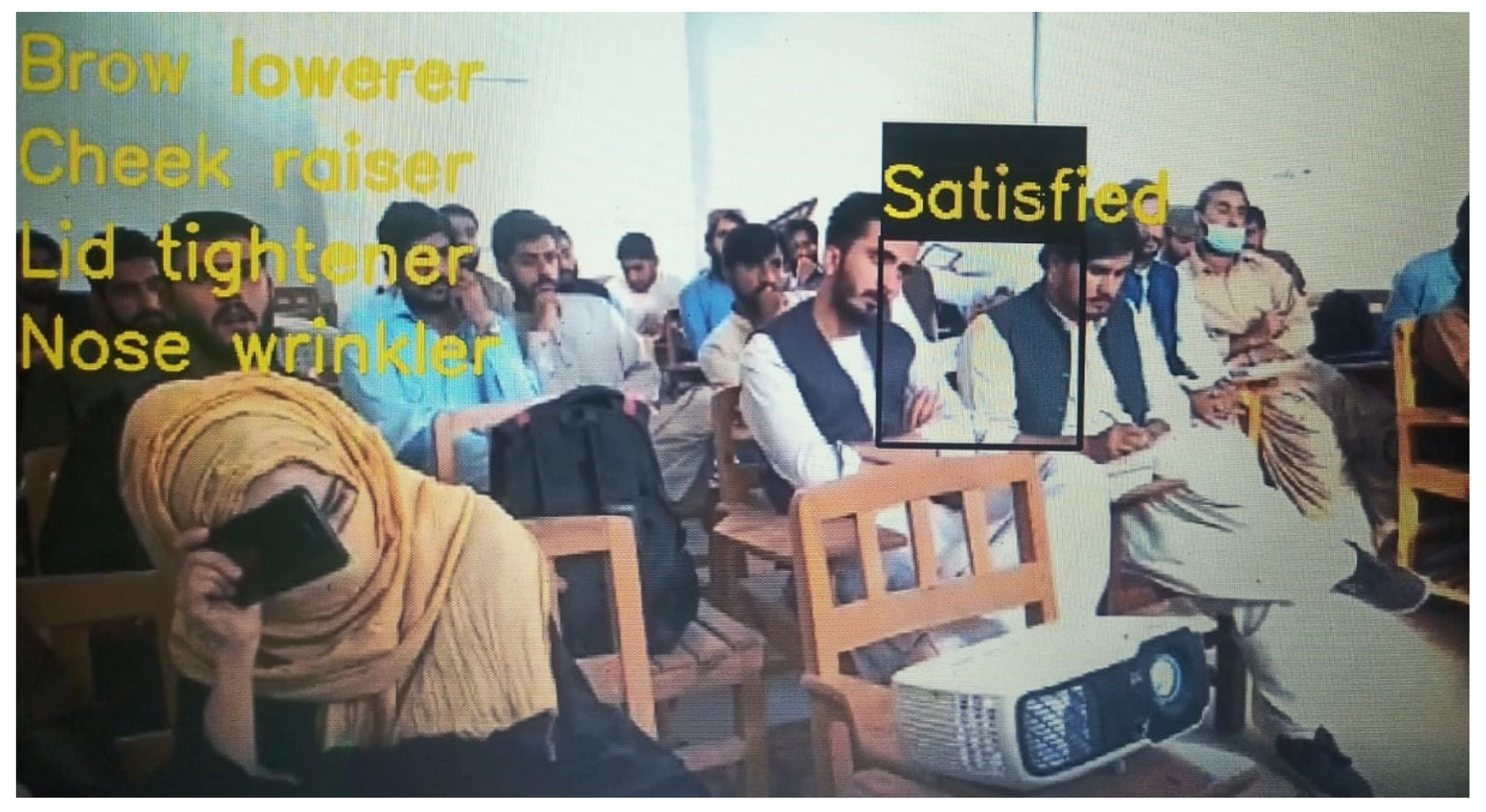

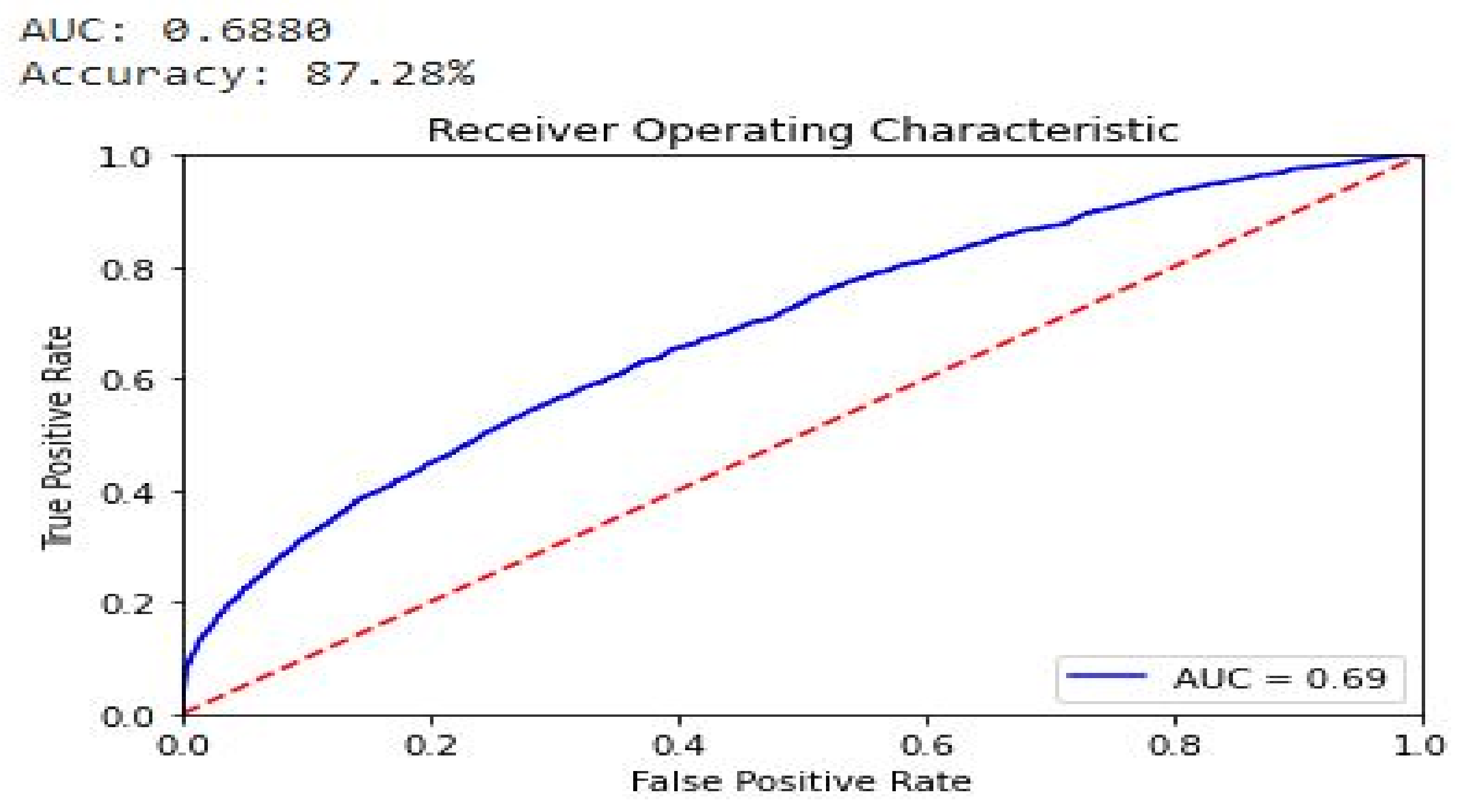

The research question “Does the use of salient facial patches improve the performance of the automatic facial expression recognition system?” can be answered in the affirmative: adding the salient facial patches increased the accuracy of the automatic facial expression detection system. The area under the curve (AUC) and accuracy both showed improved results when the proposed system was compared with well-known FER systems such as Azure, Face++, and FaceReader.

Figure 6 and Figure 7 show a comparative analysis of the proposed facial-expression-based automatic emotion recognition system and other well-known FER systems on the market.
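For readers unfamiliar with the AUC, it can be read as the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one. The sketch below computes it in that pairwise form for a single emotion treated as a one-vs-rest binary problem. The source does not state how the AUC was computed for the compared systems, so the one-vs-rest framing, the function name and the toy scores are all assumptions made for illustration.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    `labels` holds 1 for the target emotion and 0 for any other emotion;
    `scores` holds the classifier's confidence for the target emotion.
    Tied scores count as half a correctly ranked pair.
    """
    positives = [s for l, s in zip(labels, scores) if l == 1]
    negatives = [s for l, s in zip(labels, scores) if l == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))

# Toy data (not from the study): 1 = "happy", 0 = any other expression.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(labels, scores))
```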

The proposed system was developed to work in a real-time classroom environment, but it is also capable of processing images and videos. In order to compare the performance of the proposed system with the existing systems, the proposed system was provided with images as input, because the existing systems accept images only. Table 8 lists the F1 score of each emotion for the commercial FER systems on the FER-2013 dataset.
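As a reminder of how a per-emotion F1 score is obtained, the sketch below computes it from true and predicted labels using F1 = 2TP / (2TP + FP + FN). The function name and the toy labels are illustrative assumptions; they are not data from FER-2013 or from any of the compared systems.

```python
def per_class_f1(y_true, y_pred, labels):
    """F1 score for each label, computed one class at a time."""
    scores = {}
    for label in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
        denominator = 2 * tp + fp + fn
        # A class that is never present nor predicted gets 0.0 by convention here.
        scores[label] = 2 * tp / denominator if denominator else 0.0
    return scores

# Toy labels (not from the study).
y_true = ["happy", "happy", "sad", "sad"]
y_pred = ["happy", "sad", "sad", "sad"]
print(per_class_f1(y_true, y_pred, labels=["happy", "sad"]))
```

Reporting F1 per emotion, rather than a single accuracy figure, keeps a frequent expression from masking poor recognition of a rare one.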

In Table 9, the accuracy of the existing FER models is compared with that of the proposed system on the well-known datasets FER-2013, JAFFE, and CK+.

In Table 10, the accuracy of FER systems that can work in a real-time environment is compared with that of the proposed system.

The statistics above show that the proposed system has higher accuracy than the existing FER systems. Moreover, the proposed FER system is trained to analyze emotions with the help of the proposed novel facial feature movements. Each emotion addressed in the study is composed of multiple micro muscle movements of the facial features, which are analyzed to improve the accuracy of emotion recognition.

Moreover, the lectures analyzed by our system were recorded. The videos of the recorded lectures were presented to a senior psychologist, Dr. Inam Shabir, an employee of the federal government of Pakistan, who evaluated the facial expressions of the students during the lectures. The assessments by our proposed automatic emotion recognition system and by this senior psychologist were then compared. For the variable “gender”, he observed that the facial expressions of female students changed most frequently during the lecture. For the variable “seating position”, he concluded that the students sitting in the first row were mostly happy and concentrating on the lecture, that the students in the middle row seemed satisfied and showed concentration, and that the students in the last row seemed a little bored, concentrating and dissatisfied. He suggested that the behavior of the students in the last row was affected by factors such as poor visibility of the board, the voice of the instructor and the speed of teaching, and that improving the seating arrangement could change their facial expressions.

For the variable “difficulty of subject”, he observed that subject difficulty increased sadness, concentration and dissatisfaction among the students. For the variable “lecture time”, he observed that boredom and dissatisfaction occurred most frequently during the 45 min section of the lecture. He stated that the increase in lecture duration caused the students to lose their focus and become tired. It is therefore suggested that the lecture time should not be increased, or that students should be given breaks during the lecture to refresh their minds for better focus and good results. He suggested that this situation can be overcome if the instructor uses interactive, thought-provoking and engaging lecture techniques.

The second research question, “Do students’ facial expressions during a lecture change according to the variables “department”, “gender”, “difficulty of subject”, “lecture duration”, or “seating position”?”, was answered: our study explored the effect of each variable on students’ facial expressions and concluded that the external variables associated with the learning environment can affect the facial expressions of students during learning.

The third research question addressed was “Do students’ facial expressions during the lecture affect their performance?”.

The facial expressions of each student, as evaluated by the proposed system, were compared with the student’s grade performance, and it was observed that the students’ grade performance was directly connected to their facial expressions during learning. The fourth research question addressed was “Are students’ facial expressions related to the difficulty of the subject?”. The statistics from the research show that when the students were taught a subject with higher difficulty, they displayed higher dissatisfaction and sadness. Similarly, the study analyzed the difficulty of the subject against students’ grades, and the results show that the difficulty level of the subject also has a large impact on the students’ performance. The fifth research question addressed was “What is the impact of lecture duration on students’ facial expressions?”. It was observed in this study that, as the lecture duration increases, the facial expressions of the majority of students change from satisfied, concentrated and happy to dissatisfied and sad. We can therefore conclude that lectures should not be very long, or that breaks should be included to overcome this issue.