Abstract

Identifying and locating track areas in images through machine vision is the primary task of autonomous UAV inspection. Railway track images are strongly affected by illumination and perspective, their complex backgrounds are easily misidentified as track, and existing methods struggle to reason correctly about occluded track regions. To address these problems, this paper proposes RT-GAN, a generative adversarial network (GAN)-based framework for precise railway track segmentation. RT-GAN consists of an encoder–decoder generator (named RT-seg) and a patch-based track discriminator. In the generator, linear span units (LSUs) and a linear span pyramid (LSP) are used to concatenate network features at different resolutions. In addition, a loss function containing gradient information is designed, and the gradient image of the segmentation result is added to the input of the track discriminator; both guide the generator, RT-seg, to focus on the linear features of railway tracks faster and more accurately. Experiments on the railway track dataset proposed in this paper show that, with the improved loss function and adversarial training, RT-GAN segments rail tracks more accurately than state-of-the-art techniques and has stronger occlusion inference capabilities, achieving IoUs of 88.07% and 81.34% on the unaugmented and augmented datasets, respectively.

1. Introduction

Railways are an important lifeline for the country’s economy and trade and extend over long distances between regions, which makes monitoring and inspecting railway lines both expensive and laborious. Traditionally, railway monitoring and inspection have been carried out by deploying specialised personnel and machinery on the track [1,2]. However, this approach is limited by high labour costs and restricted inspection frequency and duration, and inspecting lines in uninhabited areas also poses safety risks to personnel.

As a result, unmanned aerial vehicles (UAVs) are becoming increasingly popular for improving the efficiency of the inspection process [3,4,5,6]. UAV inspections can be carried out more frequently and do not conflict spatially with traditional inspection methods (track inspection vehicles, manual patrols, etc.). Because UAVs do not obstruct the railway, normal train operations can continue during inspections. However, current UAV-based inspection methods require a dedicated pilot to control the UAV in real time or to set the route manually, which is cumbersome. Autonomous UAV inspection is therefore an important future direction, and among the technologies it relies on, identifying and localising railway areas in images through machine vision is the main task.

The main challenges of the railway segmentation problem in UAV images include the following:

First, a railway target contains a variety of structures, such as rail tracks, sleepers, ballast and turnouts. Among them, the rail track is the core structure of the railway; its surface is smooth, so its appearance varies considerably with the intensity and angle of illumination.

Second, the scenes around the railway may contain vegetation or terrain with different textures, colours and appearances, as well as other structures such as roads, bridges and power lines. Moreover, some background regions have textures similar to those of railway targets and are prone to misidentification.

Third, railway tracks are slender linear structures, and their appearance in images changes considerably with the camera angle.

Fourth, the UAVs used to capture the images may be at different heights at various times and locations along the railway. Therefore, the tracks may appear in the images at different scales.

To overcome these challenges, deep-learning-based image segmentation methods are gradually becoming mainstream. To obtain finer segmentation results, researchers have studied network structure design, objective functions and training methods. Encoder–decoder models such as SegNet [7], U-net [8] and DeeplabV3+ [9], and multi-scale feature fusion modules such as the Feature Pyramid Network (FPN) and ASPP, are widely used for semantic segmentation of fine targets such as utility poles, lane lines and blood vessels. CondLaneNet [10], LaneATT [11] and similar models directly use a line model (anchor point + angle + offset) as the optimisation target to achieve fast lane-line detection; however, such models adapt poorly to complex aerial images. Models such as HED [12], DeepFlux [13] and ALSN [14] obtain high-accuracy segmentation of line-shaped targets, such as edges and skeletons, through side-output supervision, i.e., by computing losses between intermediate features at different resolutions and the ground truth.

However, in practice, existing models and training methods suffer from two obvious problems: mis-segmentation of regions that resemble tracks and weak inference for occluded regions. For linear targets such as railway tracks, humans can easily discard misidentified regions using global information and fill in occluded track regions, which amounts to generating content for unknown regions. Based on this consideration, RT-GAN, a GAN-based framework for accurate railway track segmentation, is proposed; it improves segmentation accuracy for slender linear targets such as rail tracks by introducing gradient information and adversarial training. RT-GAN consists of two parts: a track segmentation network, termed RT-seg, which generates the track segmentation image, and a track discriminator, which judges whether a segmentation image is real or generated. Compared with training the track segmentation network alone, having the track discriminator and the segmentation network supervise each other through adversarial training yields higher accuracy. At inference time, only the track segmentation network is used, so the discriminator does not affect the efficiency of the segmentation method.

The main contributions of this study include the following:

- A framework for accurate segmentation of railroad tracks, RT-GAN, is proposed. The framework treats the segmentation of track targets as an image-to-image generation task and enables the generator RT-seg of RT-GAN to more accurately exclude misidentified similar targets and reason about the occluded track regions through adversarial training.

- A gradient loss function and a discriminator input containing gradient images are designed to exploit the continuous gradient features of track images, guiding the generator RT-seg to focus on the linear features of railroad tracks faster and more accurately.

- A UAV-based railway image dataset, named “iRailway”, is presented; it includes illumination changes, occlusions and various complex backgrounds, reflecting the challenges of real-world railway images.

The rest of this article is organised as follows. Section 2 outlines related work on the semantic segmentation of railway targets and on GAN-based image segmentation. Section 3 presents the proposed RT-GAN framework for track segmentation in UAV images, including the track segmentation network, the track discriminator and the training procedure. Section 4 discusses the experiments and analysis, and Section 5 presents the conclusions. The dataset and code for RT-GAN are available at github.com/ksws0499733/RTGAN.

2. Related Work

2.1. Vision-Based Railway Target Segmentation

Image detection and segmentation are widely used in railway safety monitoring. Some studies have used traditional image processing methods, such as morphological operators [15], visual saliency [16] and Markov random fields [2,17], to extract the location and shape of rail surface defects in close-range rail images captured from an inspection car.

In recent years, deep convolutional neural network (DCNN)-based methods for track target detection have become popular. Most of these studies use general-purpose image segmentation networks to classify track targets and then design dedicated evaluation metrics to determine whether the targets are defective. Mammeri et al. [4] used U-Net, a fully convolutional encoder–decoder segmentation network, to segment track regions in UAV images. Ikshwaku et al. [5] proposed a two-stage detection and classification system based on GoogLeNet: the first stage detects the presence of tracks, and the second stage further classifies objects such as lines, ballast, anchors, sleepers and fasteners in UAV images. Kang et al. [18] located track targets such as fasteners and insulators in images using Faster R-CNN. Tu et al. [19] finely segmented fastener regions using Yolact++ to detect looseness. Zhang et al. [20] designed a cascaded deep segmentation network (CDSNets) based on DeepLabv3 to extract insulators from catenary images. A GAN-based defective-sample generation network was also proposed to address the scarcity of defective samples.

Although vision-based rail target segmentation and recognition have been widely studied, systematic methods for rail detection on UAV platforms are still lacking. Most traditional methods are applicable only to fixed-view cameras and cannot handle railways in complex scenes (e.g., lighting changes and background noise), while existing deep neural networks ignore the intrinsic features of railway tracks (e.g., their line shape and unique structure). A dedicated, fast railway track segmentation network is therefore needed for UAV-based track inspection.

2.2. Image Segmentation Based on GAN

Pixel-level image segmentation can be viewed as an image-to-image translation task in which the input image has the same underlying structure as the output image [21], and the key is to learn the pixel mapping function from input to output. Traditional mapping functions are hand-designed, rely on specialised domain knowledge and heuristics, and are difficult to apply to complex scenes. With the development of adversarial training theory, GANs address this problem by adversarially training a generator G and a discriminator D to approximate the mapping function. In semantic segmentation, GANs are often used to reduce overfitting and improve generalisation. The characteristics of some state-of-the-art GAN-based semantic segmentation methods are shown in Table 1.

Table 1.

Characteristics of state-of-the-art GAN-based semantic segmentation methods.

Luc et al. [22] proposed a GAN-based semantic segmentation approach (SS-GAN), the first to apply GAN ideas to semantic segmentation. SS-GAN uses a conventional segmentation network as the generator to output a classification image and a discriminator network to judge whether this classification image is real; adversarial training improves both the segmentation accuracy of the segmentation network and the discriminative ability of the discriminator.

In order to improve the adaptability of image segmentation networks to different domain data, Hong et al. [23] proposed the cGANDA algorithm, which uses conditional GAN to reduce the feature differences between different domain datasets and improve the segmentation accuracy of semantic segmentation networks trained with generated data on real data.

Hoffman et al. [24] proposed the cycle-consistent adversarial domain adaptation (CyCADA) method, which uses multiple loss functions to learn the transformation between domains at both the pixel and feature levels, achieving state-of-the-art results in cross-domain digit recognition and cross-domain semantic segmentation of urban scenes.

Li et al. [25] proposed a bidirectional learning system for semantic segmentation that alternately trains an image translation model and a segmentation adaptation model; the two models reinforce each other and address domain adaptation with limited datasets.

Zhang et al. [26] proposed a GAN-based multiple sclerosis lesions segmentation network, MS-GAN, which solves the problem of adaptive segmentation of different multiple sclerosis lesions images by rational design of the adversarial loss function.

Liu et al. [27] proposed a two-stage image segmentation framework, FISS-GAN, for foggy images. The first stage of FISS-GAN generates edge images of foggy images by adversarial training. The second stage generates semantic segmentation results using both edge images and real images as inputs.

Among the different variants of GAN models, Pix2Pix [21] is one of the most successful for semantic segmentation; it treats semantic segmentation as an image-to-image translation problem and constructs a generic conditional GAN to solve it. Its objective can be formulated as follows:

$$G^{*} = \arg\min_{G}\max_{D}\ \mathcal{L}_{cGAN}(G, D) + \lambda\,\mathcal{L}_{L1}(G)$$

where $\mathcal{L}_{cGAN}(G, D)$ is the conditional GAN loss, and $\mathcal{L}_{L1}(G)$ is the L1 distance between the generated mask and the ground truth, which constrains the difference between the generated image and the true image. In optimising $\mathcal{L}_{cGAN}$, G aims to minimise it and D aims to maximise it: the generator tries to deceive the discriminator by generating a mask as close as possible to the ground truth, whereas the discriminator tries to distinguish the generated mask from the ground truth. The L1 distance is added to adversarial training as a regularisation term weighted by $\lambda$.
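To make the roles of the two terms concrete, the following minimal Python sketch evaluates the Pix2Pix objective for one batch. It assumes the discriminator already outputs probabilities in (0, 1) and uses the standard log-loss form $\mathcal{L}_{cGAN} = \mathbb{E}[\log D(x, y)] + \mathbb{E}[\log(1 - D(x, G(x)))]$; λ = 100 is taken as a default because it is the value used in the Pix2Pix paper. The function name `pix2pix_objective` is hypothetical, and flattened Python lists stand in for tensors.

```python
import math
from typing import Sequence, Tuple


def pix2pix_objective(d_real: Sequence[float], d_fake: Sequence[float],
                      y_true: Sequence[float], y_pred: Sequence[float],
                      lam: float = 100.0) -> Tuple[float, float, float]:
    """Return (L_cGAN, L_L1, L_cGAN + lam * L_L1) for one batch.

    d_real: discriminator probabilities D(x, y) on real pairs, in (0, 1).
    d_fake: discriminator probabilities D(x, G(x)) on generated pairs, in (0, 1).
    y_true, y_pred: flattened ground-truth and generated masks.
    """
    # E[log D(x, y)] + E[log(1 - D(x, G(x)))]: D maximises this term, G minimises it.
    l_cgan = (sum(math.log(p) for p in d_real) / len(d_real)
              + sum(math.log(1.0 - p) for p in d_fake) / len(d_fake))
    # L1 distance between the generated mask and the ground truth (the regulariser).
    l_l1 = sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)
    return l_cgan, l_l1, l_cgan + lam * l_l1
```

With a large λ, the L1 term dominates early training and keeps the generated mask close to the ground truth, while the adversarial term sharpens the details the L1 distance alone tends to blur.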

In this paper, the conditional GAN approach is used to train image segmentation networks. Most other studies have focused on the GAN training framework and paid less attention to the choice of generator. In practice, however, the better the stand-alone performance of the generator, the stronger the effect of adversarial training. For the specific problem of rail track segmentation, this paper therefore carefully designs the generator RT-seg to obtain better segmentation results.

3. Method

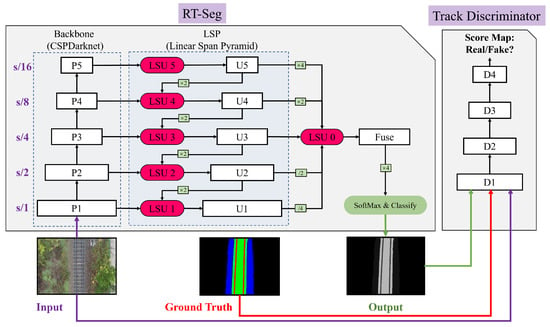

The framework of RT-GAN is shown in Figure 1. It consists of two parts, the rail track segmentation network RT-seg and the track discriminator, denoted by $G$ and $D$, respectively. The input of RT-seg is a three-channel RGB image of arbitrary size, and the output is a pixel-level semantic segmentation image of the same size. Denoting the input image by $I$ and the output result by $X$,

$$X = G(I)$$

Figure 1.

Network structure of RT-GAN.

The input of the track discriminator contains three parts: the input image, the ground truth image and the output of RT-seg; its output is an authenticity score for the generated image. In each training iteration, the discriminator is run twice, once with the output of RT-seg and once with the ground truth as input, producing two different scores. Denoting the ground truth image by $Y$ and the output score by $F$, the outputs of the track discriminator are as follows:

$$F_X = D(I, X), \qquad F_Y = D(I, Y)$$

The RT-seg and track discriminator are explained in detail next.

3.1. Rail Track Segmentation Network

As shown in Figure 1, an encoder–decoder network termed RT-seg is designed as the generator. RT-seg is inspired by [14] and uses a linear span unit (LSU)-based feature pyramid network, termed LSP, to concatenate feature maps with different resolutions.

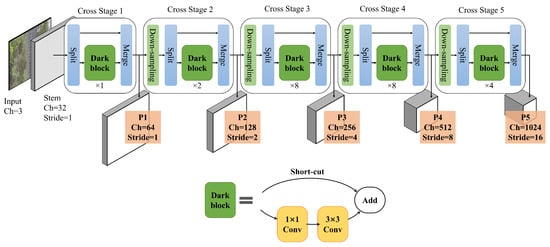

First, CSPDarknet53 [28] is selected as the backbone network; its structure is shown in Figure 2. It mainly consists of five cross stages. In each stage, the input features are downsampled (except in Stage 1) and then split into two parts along the channel dimension. One part passes through a set of “Dark blocks”, and the other part is left unchanged. Finally, the two parts are concatenated as the output of the stage. The outputs of the five stages are selected as the output feature maps of the backbone, with strides of 1, 2, 4, 8 and 16, respectively.

Figure 2.

Network structure of CSPDarknet53.

Then, these feature maps are passed through the LSP to obtain an optimised combination of features at different resolutions. Unlike a basic feature pyramid network, the LSP employs LSUs instead of simple convolution operations. Each LSU accepts and accumulates feature maps from the backbone network and from the LSUs at deeper levels; their channel counts and resolutions are aligned to those of the current stage by convolution and upsampling, respectively. An additional LSU accepts the outputs of the LSP and outputs the fused features, further extending the span of the feature space. To mitigate the noise caused by upsampling and downsampling, the stride of the fused features is set to 4, and features at other levels are aligned to this resolution by downsampling or upsampling.

Finally, the fused features are upsampled, convolved and passed through a softmax to output a pixel-level semantic segmentation image of the same size as the input.
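The resolution alignment described above amounts to a small lookup: every backbone level is resampled to the stride-4 fusion resolution before fusion. The sketch below is a hypothetical helper (`alignment_plan` and `fused_size` are not from the paper) that only computes which resampling operation and factor each level needs; it assumes all strides are powers of two, as listed for CSPDarknet53, and that the input size is divisible by the target stride.

```python
from typing import Dict, Sequence, Tuple


def alignment_plan(strides: Sequence[int],
                   target_stride: int = 4) -> Dict[int, Tuple[str, int]]:
    """Map each backbone stride to the resampling needed to reach target_stride."""
    plan: Dict[int, Tuple[str, int]] = {}
    for s in strides:
        if s < target_stride:
            # Finer than the fusion resolution: shrink it.
            plan[s] = ("downsample", target_stride // s)
        elif s > target_stride:
            # Coarser than the fusion resolution: enlarge it.
            plan[s] = ("upsample", s // target_stride)
        else:
            plan[s] = ("identity", 1)
    return plan


def fused_size(height: int, width: int, target_stride: int = 4) -> Tuple[int, int]:
    """Spatial size of the fused features for an input of height x width."""
    return height // target_stride, width // target_stride
```

For the five backbone strides, `alignment_plan([1, 2, 4, 8, 16])` gives downsampling by 4 and 2 for the two finest levels, identity at stride 4, and upsampling by 2 and 4 for the two coarsest levels; a 512 × 512 input would yield 128 × 128 fused features.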

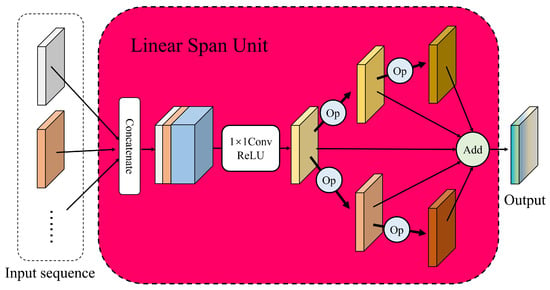

In the network, the LSU is a configurable subnetwork proposed by Liu et al. [14]; its structure is shown in Figure 3. If feature maps are treated as nodes and layer operations as edges, the LSU subnetwork can be viewed as a directed acyclic graph consisting of K input nodes, P intermediate nodes and one output node. For multiple input nodes, the LSU first merges all input feature maps through “concatenate”, “convolution” and “ReLU” operations. Then, a series of multi-layer feature transformations is applied, including “skip”, convolutions with different kernel sizes, “dilated convolution”, etc. Finally, all intermediate nodes are connected to the output node by a “summing” operation, which outputs an expanded feature map combining all the transformed features.

Figure 3.

Network structure of linear span unit (LSU).

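Suppose the input nodes are $x_1, \dots, x_K$, the intermediate nodes are $h_1, \dots, h_P$, and the output node is $y$.

The set of available transformation operations between nodes is denoted $\mathcal{O}$. For an ordered pair of intermediate nodes $(h_i, h_j)$, the transformation operation between them is $o_{(i,j)} \in \mathcal{O}$, i.e.,

$$h_j = o_{(i,j)}(h_i)$$

Then, the LSU can be represented as follows:

$$h_1 = \sigma\big(\mathrm{conv}(\mathrm{cat}(x_1, \dots, x_K))\big), \qquad h_i = o^{(i)}_{n_i} \circ \cdots \circ o^{(i)}_{1}(h_1), \qquad y = \sum_{i=1}^{P} h_i$$

where $\sigma$ denotes the ReLU activation function, $\mathrm{conv}$ denotes the convolution operation, $\mathrm{cat}$ denotes the concatenate operation, $n_i$ denotes the number of operations applied from the 1st intermediate node $h_1$ to the i-th intermediate node $h_i$, and $o^{(i)}_{1}, \dots, o^{(i)}_{n_i}$ denote all the transform operations applied from $h_1$ to $h_i$.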
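The DAG view of the LSU can be illustrated with a toy forward pass. In the sketch below, plain Python lists stand in for feature maps and arbitrary callables stand in for the convolution and transformation operations; the helper names (`lsu_forward`, `merge`, `edges`) are hypothetical. For simplicity it also assumes that each intermediate node has exactly one parent with a smaller index, so nodes can be evaluated in index order.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Vec = List[float]          # toy stand-in for a (flattened) feature map
Op = Callable[[Vec], Vec]  # toy stand-in for a transformation o_(i,j)


def relu(v: Vec) -> Vec:
    return [max(0.0, a) for a in v]


def lsu_forward(inputs: Sequence[Vec], merge: Op,
                edges: Dict[int, Tuple[int, Op]], num_nodes: int) -> Vec:
    """Toy LSU: merge inputs into h_1, propagate along edges, sum all h_i.

    edges maps node j (2..P) to (parent i, op o_(i,j)) with i < j, so visiting
    j in increasing order is a valid topological order of the DAG.
    """
    concatenated = [a for v in inputs for a in v]           # "concatenate"
    nodes: Dict[int, Vec] = {1: relu(merge(concatenated))}  # "convolution" + "ReLU" -> h_1
    for j in range(2, num_nodes + 1):
        parent, op = edges[j]
        nodes[j] = op(nodes[parent])                         # h_j = o_(i,j)(h_i)
    # Output node y: element-wise sum over all intermediate nodes.
    return [sum(vals) for vals in zip(*(nodes[i] for i in range(1, num_nodes + 1)))]
```

For example, with `merge = lambda v: [v[0] + v[2], v[1] + v[3]]` (a stand-in for a channel-mixing convolution), a skip edge from node 1 to node 2 and a doubling edge from node 2 to node 3, the inputs `[[1.0, -2.0], [3.0, 4.0]]` give h_1 = [4, 2], h_2 = [4, 2], h_3 = [8, 4] and y = [16, 8].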

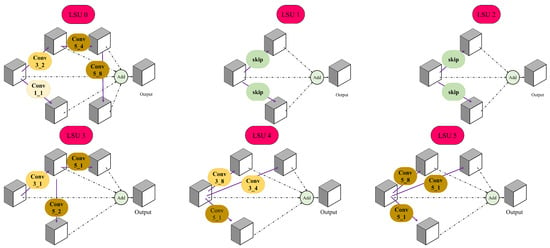

LSU encourages multiple feature-transformation paths and has no fixed network structure. Several efficient structures were obtained by heuristic search in [14], and the ALSN-Large structure was chosen after experimental validation. The structures of the LSUs in ALSN-Large are shown in Figure 4; the concatenate and channel-alignment operations are omitted in the figure. The symbol indicates a convolution with kernel size X and dilation factor Y. Among them, and are simple direct connections; , , and use large convolution kernels and shallow-wide structures; and , which fuses feature maps of all resolutions, uses a deep-narrow structure of multiple convolutional layers with increasing dilation factors.

Figure 4.

The LSUs in the ALSN-Large structure.

Image Segmentation Loss

The loss of RT-seg is divided into 3 parts: category loss, gradient loss, and side output loss.

Category loss. For a binary classification task, the usual approach is to measure the distance between the prediction and the true classification by summing the binary cross-entropy over all pixels. Given the diversity of targets in track images, a multi-class cross-entropy is a better choice. Because track pixels are far fewer than background pixels, the focal loss is used to balance the weight of each pixel, as in Equation (10):

$$\mathcal{L}_{cls} = \mathrm{FL}(X, Y) = -\frac{1}{N}\sum_{n=1}^{N} \alpha\,(1 - p_n)^{\gamma}\log p_n \tag{10}$$

where X is the predicted result $G(I)$, Y is the ground truth, $p_n$ is the predicted probability of the ground-truth class at pixel n, N is the number of pixels, $\mathrm{FL}$ denotes the focal loss function, and $\alpha$ and $\gamma$ are hyperparameters.
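A minimal sketch of the pixel-wise multi-class focal loss in Equation (10) follows, in plain Python. It uses a single scalar α for all classes and the defaults α = 0.25 and γ = 2 from the original focal-loss work; these defaults and the function name `focal_loss` are assumptions, not the settings used for RT-seg.

```python
import math
from typing import Sequence


def focal_loss(probs: Sequence[Sequence[float]], labels: Sequence[int],
               alpha: float = 0.25, gamma: float = 2.0, eps: float = 1e-12) -> float:
    """Mean multi-class focal loss over pixels.

    probs[n][c]: softmax probability of class c at pixel n.
    labels[n]:   ground-truth class index at pixel n.
    """
    total = 0.0
    for p, y in zip(probs, labels):
        p_t = max(p[y], eps)  # probability of the true class, clamped to avoid log(0)
        # (1 - p_t)^gamma down-weights well-classified (easy) pixels.
        total += -alpha * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(labels)
```

Setting γ = 0 and α = 1 recovers the ordinary multi-class cross-entropy, which makes the role of the modulating factor easy to check: with γ > 0, a confidently correct background pixel contributes far less than an uncertain track pixel.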

Gradient loss. Since railway tracks are linearly connected regions with distinct edges in the image, explicitly incorporating gradient variations into the loss helps guide the generation of segmentation results with clear boundaries. Therefore, a masked root-mean-square loss is used to calculate the gradient loss, as in Equation (11):

$$\mathcal{L}_{grad} = \sqrt{\operatorname{mean}\Big(M \odot \big[(\nabla_x X - \nabla_x Y)^2 + (\nabla_y X - \nabla_y Y)^2\big]\Big)} \tag{11}$$

where X is the predicted result $G(I)$, Y is the ground truth, and $\nabla_x$ and $\nabla_y$ are gradient operators. M is the mask of the region of interest, which removes the effects of image augmentation such as masking and cropping, $\odot$ denotes element-wise multiplication, and $\operatorname{mean}(\cdot)$ computes the arithmetic mean.
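Equation (11) can be sketched in plain Python as below. The gradient operators are not specified here, so forward differences (zero-padded at the last row and column) are an assumption, as are the helper names; a Sobel filter would be an equally plausible choice. The mean is taken over all pixels, with masked-out pixels contributing zero.

```python
import math
from typing import List

Grid = List[List[float]]


def grad_x(a: Grid) -> Grid:
    """Horizontal forward difference; the last column is padded with 0."""
    return [[row[j + 1] - row[j] if j + 1 < len(row) else 0.0 for j in range(len(row))]
            for row in a]


def grad_y(a: Grid) -> Grid:
    """Vertical forward difference; the last row is padded with 0."""
    return [[a[i + 1][j] - a[i][j] if i + 1 < len(a) else 0.0 for j in range(len(a[0]))]
            for i in range(len(a))]


def masked_gradient_rms(x: Grid, y: Grid, m: Grid) -> float:
    """sqrt(mean(M * ((dX/dx - dY/dx)^2 + (dX/dy - dY/dy)^2))) over all pixels."""
    gx_pred, gy_pred = grad_x(x), grad_y(x)
    gx_true, gy_true = grad_x(y), grad_y(y)
    terms = [
        m[i][j] * ((gx_pred[i][j] - gx_true[i][j]) ** 2
                   + (gy_pred[i][j] - gy_true[i][j]) ** 2)
        for i in range(len(x))
        for j in range(len(x[0]))
    ]
    return math.sqrt(sum(terms) / len(terms))
```

Because only differences of neighbouring pixels enter the loss, a prediction that is offset by a constant but has the same edges as the ground truth incurs no gradient penalty; the category loss remains responsible for the absolute class values.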

Side output loss. The side output loss is the difference between an intermediate feature layer of a DNN and the ground truth. It supervises the changes of intermediate feature layers, guides the DNN to converge faster, and is widely used in linear target segmentation tasks such as edge detection [12] and skeleton detection [13,14]. Different feature layers of the LSP are used as inputs, and the focal loss is used to calculate the loss, as in Equation (12):

$$\mathcal{L}_{side} = \sum_{k} \mathrm{FL}(S_k, Y), \qquad S_k = \mathrm{conv}_{1\times 1}(P_k) \tag{12}$$

where $S_k$ is the k-th side output, whose channels are aligned with the number of target categories through a 1 × 1 convolution, and $P_k$ is the k-th output of the LSP.

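In summary, the loss function of RT-seg is given by Equation (13):

$$\mathcal{L}_{seg} = \lambda_1\,\mathcal{L}_{cls} + \lambda_2\,\mathcal{L}_{grad} + \lambda_3\,\mathcal{L}_{side} \tag{13}$$

where $\lambda_1$, $\lambda_2$ and $\lambda_3$ are the weights of the individual loss terms selected in this paper.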

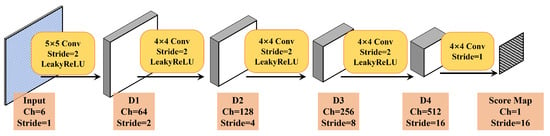

3.2. Track Discriminator

The track discriminator, shown in Figure 5, is a fully convolutional PatchGAN discriminator [29] consisting of five convolutional layers. Its input is a six-channel combined feature consisting of the input image (three channels), the segmentation result (one channel) and the gradient feature (two channels); after four downsamplings, its output is a single-channel score map F rather than a single score.

Figure 5.

Network structure of the track discriminator.

Unlike discriminators for natural images, if only the semantic segmentation results of the tracks are fed to the discriminator, the adversarial loss is difficult to optimise and training fails. This may be due to the sparsity of track regions in the image: natural images have learnable gradient features in every local region, but the sparse pixel distribution of a track-only map contains little gradient information, which makes training the discriminator difficult. To solve this problem, the input image and the gradient of the segmentation result are treated as additional conditions. By concatenating the input image I, the segmented image and its gradient as inputs, the track discriminator gains enough information to distinguish the generated distribution from the true distribution, and training becomes stable. Therefore, for the two discriminator inferences with the generated image X and the ground truth image Y, the corresponding score maps are as follows:

$$F_X = D\big(\mathrm{cat}(I,\ X,\ M \odot \nabla_x X,\ M \odot \nabla_y X)\big), \qquad F_Y = D\big(\mathrm{cat}(I,\ Y,\ M \odot \nabla_x Y,\ M \odot \nabla_y Y)\big)$$

where X is the predicted result $G(I)$, Y is the ground truth, $\mathrm{cat}$ denotes channel-wise concatenation, and $\nabla_x$, $\nabla_y$, $M$ and $\odot$ are the same as in Equation (11).
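The six-channel discriminator input can be assembled as in the following plain-Python sketch, which stacks the three RGB channels, the one-channel segmentation map and its two gradient channels. Forward differences stand in for the unspecified gradient operators, the optional mask follows the ⊙ term above, and all helper names are hypothetical. The last function assumes that each of the four downsamplings halves the resolution, which is typical for PatchGAN but is an assumption here.

```python
from typing import List, Optional, Sequence, Tuple

Grid = List[List[float]]


def grad_x(a: Grid) -> Grid:
    """Horizontal forward difference, zero in the last column."""
    return [[row[j + 1] - row[j] if j + 1 < len(row) else 0.0 for j in range(len(row))]
            for row in a]


def grad_y(a: Grid) -> Grid:
    """Vertical forward difference, zero in the last row."""
    return [[a[i + 1][j] - a[i][j] if i + 1 < len(a) else 0.0 for j in range(len(a[0]))]
            for i in range(len(a))]


def apply_mask(a: Grid, m: Optional[Grid]) -> Grid:
    """Element-wise product with the region-of-interest mask (identity if None)."""
    if m is None:
        return a
    return [[v * w for v, w in zip(ra, rm)] for ra, rm in zip(a, m)]


def discriminator_input(image_rgb: Sequence[Grid], seg: Grid,
                        mask: Optional[Grid] = None) -> List[Grid]:
    """Channel-wise concatenation: [R, G, B, seg, M*dseg/dx, M*dseg/dy]."""
    if len(image_rgb) != 3:
        raise ValueError("expected three RGB channels")
    return list(image_rgb) + [seg, apply_mask(grad_x(seg), mask),
                              apply_mask(grad_y(seg), mask)]


def score_map_size(height: int, width: int, num_downsamplings: int = 4) -> Tuple[int, int]:
    """Spatial size of the score map F if every downsampling halves the resolution."""
    factor = 2 ** num_downsamplings
    return height // factor, width // factor
```

Under these assumptions, a 512 × 512 input produces a 32 × 32 score map, so each score judges one patch of the segmentation rather than the whole image.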

Adversarial Loss

The task of the track discriminator is to distinguish the RT-seg output from the ground truth by judging $F_X$ as fake and $F_Y$ as real. The adversarial loss is therefore divided into two parts: $\mathcal{L}_D$, which updates the weights of the track discriminator, and $\mathcal{L}_G$, which updates the weights of RT-seg. The hinge loss [30] is used to judge whether the input is real or fake. The adversarial losses for training RT-GAN are as follows:

$$\mathcal{L}_D = \operatorname{mean}\big(\sigma(1 - F_Y)\big) + \operatorname{mean}\big(\sigma(1 + F_X)\big), \qquad \mathcal{L}_G = -\operatorname{mean}(F_X)$$

where $\sigma$ denotes the ReLU activation function, and $F_X$ and $F_Y$ are the two score maps output by the track discriminator for the predicted result and the ground truth, respectively.
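The hinge losses above reduce to a few lines. The sketch treats each score map as a flattened list and averages over its elements; it covers only the adversarial terms, and the function names are hypothetical.

```python
from typing import Sequence


def _mean(values: Sequence[float]) -> float:
    return sum(values) / len(values)


def _relu(v: float) -> float:
    return max(0.0, v)


def discriminator_hinge_loss(f_fake: Sequence[float], f_real: Sequence[float]) -> float:
    """L_D = mean(ReLU(1 - F_Y)) + mean(ReLU(1 + F_X)) over flattened score maps."""
    return (_mean([_relu(1.0 - s) for s in f_real])
            + _mean([_relu(1.0 + s) for s in f_fake]))


def generator_hinge_loss(f_fake: Sequence[float]) -> float:
    """L_G = -mean(F_X): RT-seg is rewarded when D scores its output as real."""
    return -_mean(list(f_fake))
```

The ReLU makes the discriminator loss saturate: once a real patch scores above +1 or a generated patch below −1, it no longer contributes, so the discriminator spends its capacity on the patches that are still ambiguous.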

3.3. Training

Training RT-GAN is challenging: training often fails if the image segmentation loss and the adversarial loss are applied together from the start, even when the weights between the two losses are carefully tuned. To avoid this problem, a two-stage training approach is used. In the first stage, only RT-seg is trained, to reach as high a segmentation accuracy as possible. In the second stage, the trained RT-seg is used as the generator and trained adversarially with the track discriminator. Experiments show that the higher the initial accuracy of RT-seg, the more efficient the adversarial training and the higher the final segmentation accuracy.
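The two-stage schedule, together with the 1:1 alternation used in the second stage (Section 4.1.2), can be outlined as a framework-agnostic loop. The step functions are hypothetical placeholders for one optimiser update each, and updating the discriminator before RT-seg within each batch is an assumed order.

```python
from typing import Callable, List

Step = Callable[[], None]


def two_stage_training(seg_step: Step, d_step: Step, g_adv_step: Step,
                       stage1_epochs: int, stage2_epochs: int,
                       batches_per_epoch: int) -> List[str]:
    """Run both stages and return the order of updates for inspection."""
    schedule: List[str] = []
    # Stage 1: train RT-seg alone with the segmentation loss.
    for _ in range(stage1_epochs):
        for _ in range(batches_per_epoch):
            seg_step()
            schedule.append("seg")
    # Stage 2: adversarial fine-tuning, one discriminator update per RT-seg update.
    for _ in range(stage2_epochs):
        for _ in range(batches_per_epoch):
            d_step()
            schedule.append("D")
            g_adv_step()
            schedule.append("G")
    return schedule
```

With the settings reported in Section 4.1.2, this would be called with `stage1_epochs=90` and `stage2_epochs=100`; the step functions would wrap the Adam updates with the learning rates given there.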

4. Experiment

In this section, the implementation details are first introduced. Then, RT-GAN is compared with the state-of-the-art segmentation networks. Finally, an ablation study is carried out on the improvements adopted in RT-GAN.

4.1. Implementation Details

4.1.1. Dataset

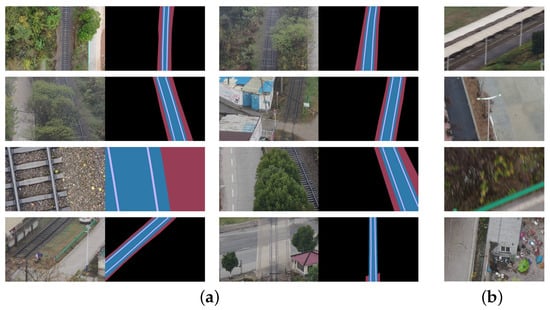

Few publicly available datasets are dedicated to railway segmentation. This article builds an image segmentation dataset for railway lines, “iRailway”, from more than 30 h of video captured by on-board cameras on trains and UAVs. The positive set of iRailway includes 21,379 images (3296 labelled) randomly sampled from four railway lines at a resolution of . The ballast, sleeper and track areas in the images are manually annotated as ground truth. Figure 6a shows some examples, covering railway lines in the field, in forests and among buildings, under glare, weak-light and backlit conditions. The negative set includes 552 images collected from the Web that contain linear objects prone to false positives, such as roads, sidewalks and grid floor tiles (Figure 6b).

Figure 6.

Railway line training samples from different scenes: (a) positive examples; (b) negative examples.

4.1.2. Implementation Details

RT-GAN is implemented in PyTorch and runs on two NVIDIA Tesla V100 GPUs (32 GB).

For the first stage of RT-seg training, an Adam optimiser with parameters , a learning rate of 0.0002 and a batch size of 16 was used, and 90 epochs were trained. The three weight ratios in image segmentation loss are , and .

For the second stage of adversarial training, the Adam optimizer with parameters was used, and the initial learning rates of RT-seg and the discriminator were 0.0001 and 0.00001, respectively. A total of 100 epochs were trained, alternating updates of RT-seg and the track discriminator at a 1:1 ratio.

These hyperparameter values were validated experimentally, and the same hyperparameters were used for training all networks.

Finally, the intersection over union (IoU) between the semantic segmentation result and the ground truth was used to evaluate the segmentation performance of all algorithms.
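For reference, the IoU of a single class can be computed from flattened label maps as follows. The text does not state how IoU is aggregated over classes or images, so this per-class helper (the name `iou` is hypothetical) and its convention of returning 1.0 when the class is absent from both maps are assumptions.

```python
from typing import Sequence


def iou(pred: Sequence[int], gt: Sequence[int], cls: int = 1) -> float:
    """Intersection over union of class `cls` between prediction and ground truth."""
    inter = sum(1 for p, g in zip(pred, gt) if p == cls and g == cls)
    union = sum(1 for p, g in zip(pred, gt) if p == cls or g == cls)
    # Assumed convention: a class absent from both maps counts as a perfect match.
    return inter / union if union else 1.0
```

For example, a prediction `[1, 1, 0, 0]` against ground truth `[1, 0, 1, 0]` shares one track pixel out of three pixels labelled track by either map, giving an IoU of 1/3.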

4.2. Comparison with the State-of-the-Art Segmentation Networks

RT-GAN was compared with widely used image segmentation networks: SegNet [7], PSPnet [31], DeeplabV3+ [9], ALSN [14], SETR [32] and SegFormer [33]. All networks were trained on iRailway. Each network was trained in two groups, one with the original images as input (cropping only) and the other with randomly augmented images as input (affine transformations, block masking, mosaic masking, etc.). Each group of training was repeated 10 times, and the results were averaged to reduce the randomness caused by random cropping and image augmentation. In each run, 80% of the samples were used for training and 20% for validation. The results of all experiments are shown in Table 2.
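The evaluation protocol just described (ten repetitions, each with a fresh random 80/20 split, averaged) can be sketched as follows. `train_and_eval` is a hypothetical callback that trains a network on the first list and returns its validation IoU on the second; the seeded random number generator is an assumption added for reproducibility.

```python
import random
from statistics import mean
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def repeated_holdout(samples: Sequence[T],
                     train_and_eval: Callable[[List[T], List[T]], float],
                     runs: int = 10, train_frac: float = 0.8, seed: int = 0) -> float:
    """Average a score over `runs` independent random train/validation splits."""
    rng = random.Random(seed)
    scores: List[float] = []
    for _ in range(runs):
        shuffled = list(samples)
        rng.shuffle(shuffled)
        cut = int(len(shuffled) * train_frac)
        # First 80% for training, remaining 20% for validation.
        scores.append(train_and_eval(shuffled[:cut], shuffled[cut:]))
    return mean(scores)
```

Averaging over independent splits smooths out the run-to-run variation that a single split would otherwise attribute to the network itself.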

Table 2.

Performance comparison of methods.

For all networks, the IoU of the unaugmented group was higher than that of the augmented group, because augmentation strategies such as masking, shading and mosaics considerably increase the recognition difficulty.

RT-GAN obtained IoUs exceeding those of all the other networks in both training groups, reaching 81.34% (augmentation) and 88.07% (no augmentation), at a computation speed of 35.0 fps. Even without adversarial training, i.e., using RT-seg alone, it achieves IoUs of 71.77% (augmentation) and 84.63% (no augmentation), which are also higher than those of the other networks.

Among the comparison networks, SegNet achieves the highest segmentation accuracy, with IoUs of 68.71% (augmentation) and 80.98% (no augmentation), which are substantially lower than those of RT-GAN. It is followed by DeepLabv3+, which has a lower computational cost but also lower IoUs than SegNet: 61.69% (augmentation) and 78.84% (no augmentation). PSPnet runs faster (43.0 fps), but its IoUs are only 55.98% (augmentation) and 69.37% (no augmentation).

SETR and SegFormer are transformer-based networks that have become popular in image segmentation in recent years. Two SETR models (SETR_MLA and SETR_PUP) and two SegFormer models (mit_b2 and mit_b5) were tested. The results show that the transformer-based models perform poorly on the rail track segmentation task. Among them, SETR_PUP achieved higher IoUs than the other transformer models, 56.32% (augmentation) and 76.52% (no augmentation), but had the slowest computation speed (11.0 fps); mit_b2 has the smallest model size and the fastest computation speed (46.0 fps), with IoUs of 50.86% (augmentation) and 70.17% (no augmentation). The poor performance of the transformer-based models may be due to the small dataset and insufficient training.

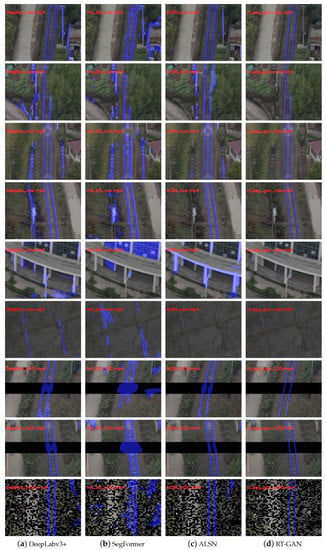

Segmentation Examples. Figure 7 visualises some segmentation results for railway lines, comparing DeepLabV3+, SegFormer (mit_b5) and ALSN with RT-GAN. RT-GAN considerably reduces false detections of non-track targets in the presence of interfering objects such as utility poles, streetlights, building edges and ground seams. For occluded and mosaic images, RT-GAN effectively infers the occluded tracks, whereas the other models produce large mis-segmented regions.

Figure 7.

Image segmentation results for different networks. From left to right, DeeplabV3+, Segformer (mit_b5), ALSN and RT-GAN (ours).

4.3. Ablation Study

The essence of RT-GAN is to improve the accuracy of image segmentation networks through adversarial training. Compared with the usual GAN model, RT-GAN uses a loss function and a discriminator input that both contain gradient information. To verify the effectiveness of these improvements, this subsection compares the effects of different generators, loss functions and discriminator inputs on RT-GAN.

Different generators. DeepLabV3+ and SegFormer are selected as generators for comparison with the proposed RT-seg. The results are shown in Table 3, where the numbers in parentheses are the IoUs without adversarial training. They show that (1) using RT-seg as the generator is superior to using the other generators, and (2) adversarial training substantially improves the segmentation accuracy of the image segmentation networks. For the mit_b5 network, the IoU on unaugmented images reaches 86.42% after adversarial training, close to that of RT-GAN (88.07%), suggesting that adversarial training can reduce the transformer model's demand for training samples and improve training efficiency.

Table 3.

Performance comparison of different generators.

Different image segmentation losses. The effects of different loss functions on the segmentation accuracy of RT-seg and on the adversarial training of RT-GAN are shown in Table 4. Compared with using only , adding improves the IoU of RT-seg on augmented images by 4.64 percentage points (from 64.98% to 69.62%), whereas the improvement after adversarial training is smaller: only 0.08 points (augmentation, from 78.97% to 79.05%) and 0.1 points (no augmentation, from 87.13% to 87.23%). Accuracy improves further when all three losses are used simultaneously: before adversarial training, the IoUs increase by 6.79 points (augmentation, from 64.98% to 71.77%) and 4.68 points (no augmentation, from 79.95% to 84.63%), and after adversarial training, by 2.37 points (augmentation, from 78.97% to 81.34%) and 0.94 points (no augmentation, from 87.13% to 88.07%).

Table 4. Performance comparison of different loss functions.
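As an illustration of what a segmentation loss "containing gradient information" can look like, the sketch below combines a pixel-wise binary cross-entropy with an L1 penalty on the difference between the gradient magnitudes of the predicted probability map and the ground-truth mask. This is an assumed form for illustration only: the Sobel operator, the weight `lambda_grad`, and the names `sobel_magnitude` and `gradient_aware_loss` are hypothetical and are not the RT-seg loss as defined by the authors.

```python
import math
from typing import List

Image = List[List[float]]  # H x W, values in [0, 1]

# 3x3 Sobel kernels (an assumed choice of gradient operator).
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(img: Image) -> Image:
    """Sobel gradient magnitude; out-of-range pixels are clamped to the border."""
    h, w = len(img), len(img[0])

    def px(r: int, c: int) -> float:
        return img[min(max(r, 0), h - 1)][min(max(c, 0), w - 1)]

    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            gx = sum(SOBEL_X[i][j] * px(r + i - 1, c + j - 1)
                     for i in range(3) for j in range(3))
            gy = sum(SOBEL_Y[i][j] * px(r + i - 1, c + j - 1)
                     for i in range(3) for j in range(3))
            out[r][c] = math.hypot(gx, gy)
    return out


def gradient_aware_loss(prob: Image, target: Image,
                        lambda_grad: float = 0.1, eps: float = 1e-7) -> float:
    """Hypothetical loss: mean pixel-wise BCE + lambda_grad * mean |G(prob) - G(target)|."""
    h, w = len(prob), len(prob[0])
    n = h * w
    bce = 0.0
    for r in range(h):
        for c in range(w):
            p = min(max(prob[r][c], eps), 1.0 - eps)  # clamp to avoid log(0)
            t = target[r][c]
            bce -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    g_pred = sobel_magnitude(prob)
    g_true = sobel_magnitude(target)
    grad_l1 = sum(abs(g_pred[r][c] - g_true[r][c]) for r in range(h) for c in range(w))
    return bce / n + lambda_grad * grad_l1 / n


# A vertical one-pixel "rail" and two predictions: exact and blurred.
target = [[0.0, 1.0, 0.0]] * 3
blurred = [[0.3, 0.5, 0.3]] * 3
print(gradient_aware_loss(target, target) < gradient_aware_loss(blurred, target))  # True
```

The gradient term is zero only when the predicted map has the same edge structure as the mask, which is one way to push a segmentation network toward the thin, linear boundaries of rail tracks.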

Different discriminator inputs. Table 5 shows the effect of different discriminator inputs on the segmentation accuracy of RT-GAN. When only the semantic segmentation maps are fed to the discriminator, the IoUs are 79.90% (augmentation) and 83.04% (no augmentation). Adding the input image as auxiliary information for the track discriminator raises the IoUs by 1.27 percentage points (augmentation, from 79.90% to 81.17%) and 4.70 points (no augmentation, from 83.04% to 87.74%). Further adding gradient information to the discriminator input raises the IoUs to 81.34% (augmentation) and 88.07% (no augmentation).

Table 5. Performance comparison of different discriminator inputs.
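The three discriminator-input variants in Table 5 differ only in which channels are stacked before they reach the track discriminator. A minimal sketch of that assembly follows, assuming channel-first nested lists, an RGB input image placed before the single-channel segmentation map, and a simple finite-difference gradient of the segmentation map as the gradient image. The channel order, the gradient operator, and the names `gradient_image` and `build_disc_input` are assumptions for illustration, not details confirmed by the paper.

```python
from typing import List, Optional

Channel = List[List[float]]  # H x W
Tensor = List[Channel]       # C x H x W, channel-first


def gradient_image(seg: Channel) -> Channel:
    """Simple L1 finite-difference gradient magnitude of a segmentation map.

    Forward differences are used; the last row and column get a zero difference.
    This operator is a stand-in, not necessarily the one used in RT-GAN.
    """
    h, w = len(seg), len(seg[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            dx = seg[r][c + 1] - seg[r][c] if c + 1 < w else 0.0
            dy = seg[r + 1][c] - seg[r][c] if r + 1 < h else 0.0
            out[r][c] = abs(dx) + abs(dy)
    return out


def build_disc_input(seg: Channel,
                     image: Optional[Tensor] = None,
                     with_gradient: bool = False) -> Tensor:
    """Stack the track-discriminator input along the channel axis.

    seg only                    -> 1 channel
    seg + RGB input image       -> 4 channels
    seg + RGB image + gradient  -> 5 channels
    """
    channels: Tensor = list(image) if image is not None else []  # copy, never mutate input
    channels.append(seg)
    if with_gradient:
        channels.append(gradient_image(seg))
    return channels


seg = [[0.0, 1.0, 0.0],
       [0.0, 1.0, 0.0]]
rgb = [[[0.5] * 3 for _ in range(2)] for _ in range(3)]  # 3 x 2 x 3 dummy image
for variant in (build_disc_input(seg),
                build_disc_input(seg, rgb),
                build_disc_input(seg, rgb, with_gradient=True)):
    print(len(variant))  # 1, then 4, then 5
```

Conditioning the discriminator on the input image lets it judge whether a mask is plausible for that particular scene, and the extra gradient channel exposes the edge structure of the mask directly instead of leaving the discriminator to learn it.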

4.4. Analysis

Experimental results show that RT-GAN achieves higher track segmentation accuracy than the other methods tested, on both unaugmented and augmented images. We attribute these gains to three main sources: (1) the generator model, (2) adversarial training, and (3) the explicit introduction of gradient information.

First, the generator model is the foundation. The LSU and LSP in RT-seg extract image features effectively at different scales, and RT-seg adopts the best network structure found by heuristic search. As Table 3 shows, RT-seg is a stronger generator than DeepLabV3+ and SegFormer.

Second, adversarial training optimizes the generator against a global criterion. As the segmentation results show, before adversarial training RT-seg misidentifies linear structures such as streetlights and eaves as rail tracks. This may be because RT-seg assigns smaller weights to its higher-level features (which have larger receptive fields) and larger weights to its lower-level features (which have smaller receptive fields). During adversarial training, the track discriminator scores the segmentation result as a whole, forcing RT-seg to pay more attention to the global features of the track image; this reduces mis-segmentation and improves the generator's ability to infer occluded track regions.

Finally, explicitly introducing the gradient information of track targets, through both the loss function and the discriminator input, into the training and adversarial training of the segmentation network enables RT-seg to attend to track regions with distinct gradient features earlier and improves the segmentation of track targets. The ablation experiments in Tables 4 and 5 support this.

5. Conclusions and Future Work

In this paper, we propose RT-GAN, an accurate segmentation framework for railway tracks. RT-GAN consists of an encoder–decoder generator, RT-seg, and a patch-based track discriminator. To exploit the linear features of railway tracks, RT-GAN explicitly introduces gradient information into both the loss function and the discriminator input, helping the generator capture track information faster and more accurately. RT-GAN was evaluated on iRailway, the track segmentation dataset proposed in this paper. The experimental results show that, through adversarial training, RT-GAN segments railway tracks more accurately than other popular semantic segmentation models and has stronger occlusion inference capability, on images both with and without augmentation. Because the discriminator is needed only during training, this gain comes without reducing inference speed.

However, this paper focuses mainly on building a GAN-based framework for accurate segmentation of railway images and on finding suitable generators, targeting accurate binary segmentation. In future work, we will investigate the interpretability of the segmentation gains brought by adversarial training and explore more refined multi-class segmentation models and hyperparameter selection strategies.

Author Contributions

Conceptualization, H.Y. and X.L.; methodology, H.Y.; software, H.Y.; validation, H.Y.; formal analysis, H.Y.; investigation, H.Y., X.L. and Y.G.; resources, X.L.; data curation, H.Y. and Y.G.; writing—original draft preparation, H.Y.; writing—review and editing, H.Y. and Y.G.; visualization, H.Y.; supervision, X.L. and L.J.; project administration, X.L. and L.J.; funding acquisition, X.L. and L.J. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the National Key Research and Development Program of China under Grant No. 2016YFB1200402.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to express their sincere appreciation to the editors and the reviewers for their constructive comments.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gibert, X.; Patel, V.M.; Chellappa, R. Deep Multitask Learning for Railway Track Inspection. IEEE Trans. Intell. Transp. Syst. 2017, 18, 153–164.

- Zhang, H.; Jin, X.; Wu, Q.M.J.; Wang, Y.; He, Z.; Yang, Y. Automatic Visual Detection System of Railway Surface Defects With Curvature Filter and Improved Gaussian Mixture Model. IEEE Trans. Instrum. Meas. 2018, 67, 1593–1608.

- Zhang, J.; Liu, L.; Wang, B.; Chen, X.; Wang, Q.; Zheng, T. High Speed Automatic Power Line Detection and Tracking for a UAV-Based Inspection. In Proceedings of the 2012 International Conference on Industrial Control and Electronics Engineering, Xi’an, China, 23–25 August 2012; pp. 266–269.

- Mammeri, A.; Siddiqui, A.J.; Zhao, Y. UAV-assisted Railway Track Segmentation based on Convolutional Neural Networks. In Proceedings of the 2021 IEEE 93rd Vehicular Technology Conference (VTC2021-Spring), Helsinki, Finland, 25–28 April 2021; pp. 1–7.

- Ikshwaku, S.; Srinivasan, A.; Varghese, A.; Gubbi, J. Railway corridor monitoring using deep drone vision. In Computational Intelligence: Theories, Applications and Future Directions-Volume II; Springer: Singapore, 2019; pp. 361–372.

- Tong, L.; Wang, Z.; Jia, L.; Qin, Y.; Wei, Y.; Yang, H.; Geng, Y. Fully Decoupled Residual ConvNet for Real-Time Railway Scene Parsing of UAV Aerial Images. IEEE Trans. Intell. Transp. Syst. 2022, 23, 14806–14819.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI 2015), Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Liu, L.; Chen, X.; Zhu, S.; Tan, P. Condlanenet: A top-to-down lane detection framework based on conditional convolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3773–3782.

- Tabelini, L.; Berriel, R.; Paixao, T.M.; Badue, C.; De Souza, A.F.; Oliveira-Santos, T. Keep your eyes on the lane: Real-time attention-guided lane detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 294–302.

- Xie, S.; Tu, Z. Holistically-nested edge detection. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1395–1403.

- Wang, Y.; Xu, Y.; Tsogkas, S.; Bai, X.; Dickinson, S.; Siddiqi, K. Deepflux for skeletons in the wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5287–5296.

- Liu, C.; Tian, Y.; Chen, Z.; Jiao, J.; Ye, Q. Adaptive linear span network for object skeleton detection. IEEE Trans. Image Process. 2021, 30, 5096–5108.

- Yu, H.; Li, Q.; Tan, Y.; Gan, J.; Wang, J.; Geng, Y.a.; Jia, L. A Coarse-to-Fine Model for Rail Surface Defect Detection. IEEE Trans. Instrum. Meas. 2019, 68, 656–666.

- Nieniewski, M. Morphological Detection and Extraction of Rail Surface Defects. IEEE Trans. Instrum. Meas. 2020, 69, 6870–6879.

- Jin, X.; Wang, Y.; Zhang, H.; Zhong, H.; Liu, L.; Wu, Q.M.J.; Yang, Y. DM-RIS: Deep Multimodel Rail Inspection System With Improved MRF-GMM and CNN. IEEE Trans. Instrum. Meas. 2020, 69, 1051–1065.

- Kang, G.; Gao, S.; Yu, L.; Zhang, D. Deep Architecture for High-Speed Railway Insulator Surface Defect Detection: Denoising Autoencoder With Multitask Learning. IEEE Trans. Instrum. Meas. 2019, 68, 2679–2690.

- Tu, Z.; Wu, S.; Kang, G.; Lin, J. Real-Time Defect Detection of Track Components: Considering Class Imbalance and Subtle Difference between Classes. IEEE Trans. Instrum. Meas. 2021, 70, 5017712.

- Zhang, D.; Gao, S.; Yu, L.; Kang, G.; Wei, X.; Zhan, D. DefGAN: Defect Detection GANs With Latent Space Pitting for High-Speed Railway Insulator. IEEE Trans. Instrum. Meas. 2021, 70, 5003810.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Luc, P.; Couprie, C.; Chintala, S.; Verbeek, J. Semantic segmentation using adversarial networks. arXiv 2016, arXiv:1611.08408.

- Hong, W.; Wang, Z.; Yang, M.; Yuan, J. Conditional generative adversarial network for structured domain adaptation. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1335–1344.

- Hoffman, J.; Tzeng, E.; Park, T.; Zhu, J.Y.; Isola, P.; Saenko, K.; Efros, A.; Darrell, T. Cycada: Cycle-consistent adversarial domain adaptation. In Proceedings of the International Conference on Machine Learning, PMLR 80, Stockholm, Sweden, 10–15 July 2018; pp. 1989–1998.

- Li, Y.; Yuan, L.; Vasconcelos, N. Bidirectional learning for domain adaptation of semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6936–6945.

- Zhang, C.; Song, Y.; Liu, S.; Lill, S.; Wang, C.; Tang, Z.; You, Y.; Gao, Y.; Klistorner, A.; Barnett, M.; et al. MS-GAN: GAN-based semantic segmentation of multiple sclerosis lesions in brain magnetic resonance imaging. In Proceedings of the 2018 Digital Image Computing: Techniques and Applications (DICTA), Canberra, ACT, Australia, 10–13 December 2018; pp. 1–8.

- Liu, K.; Ye, Z.; Guo, H.; Cao, D.; Chen, L.; Wang, F.Y. FISS GAN: A generative adversarial network for foggy image semantic segmentation. IEEE/CAA J. Autom. Sin. 2021, 8, 1428–1439.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Xiong, W.; Yu, J.; Lin, Z.; Yang, J.; Lu, X.; Barnes, C.; Luo, J. Foreground-aware image inpainting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5840–5848.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H.; et al. Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6881–6890.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).