Obtaining Infrared Thermal Camera Sensor Calibration Data for Implementation in FireBot Autonomous Fire Protection Robot System

Abstract

1. Introduction

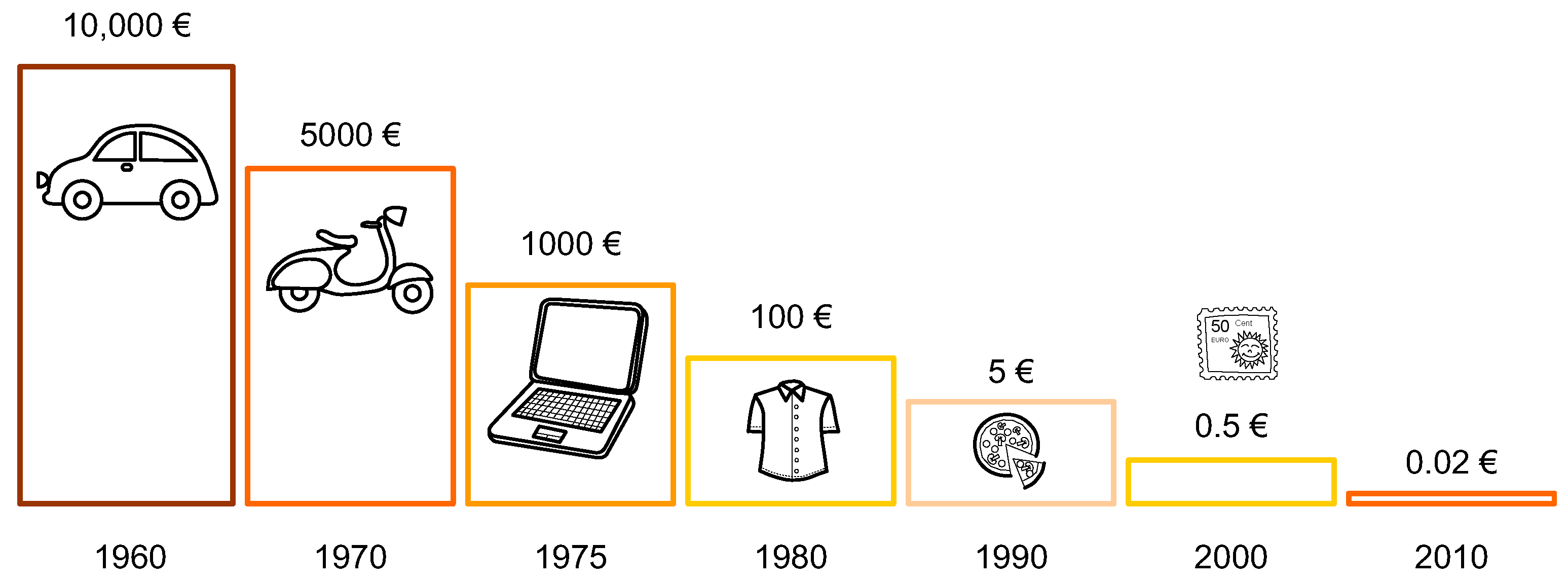

2. Materials and Methods

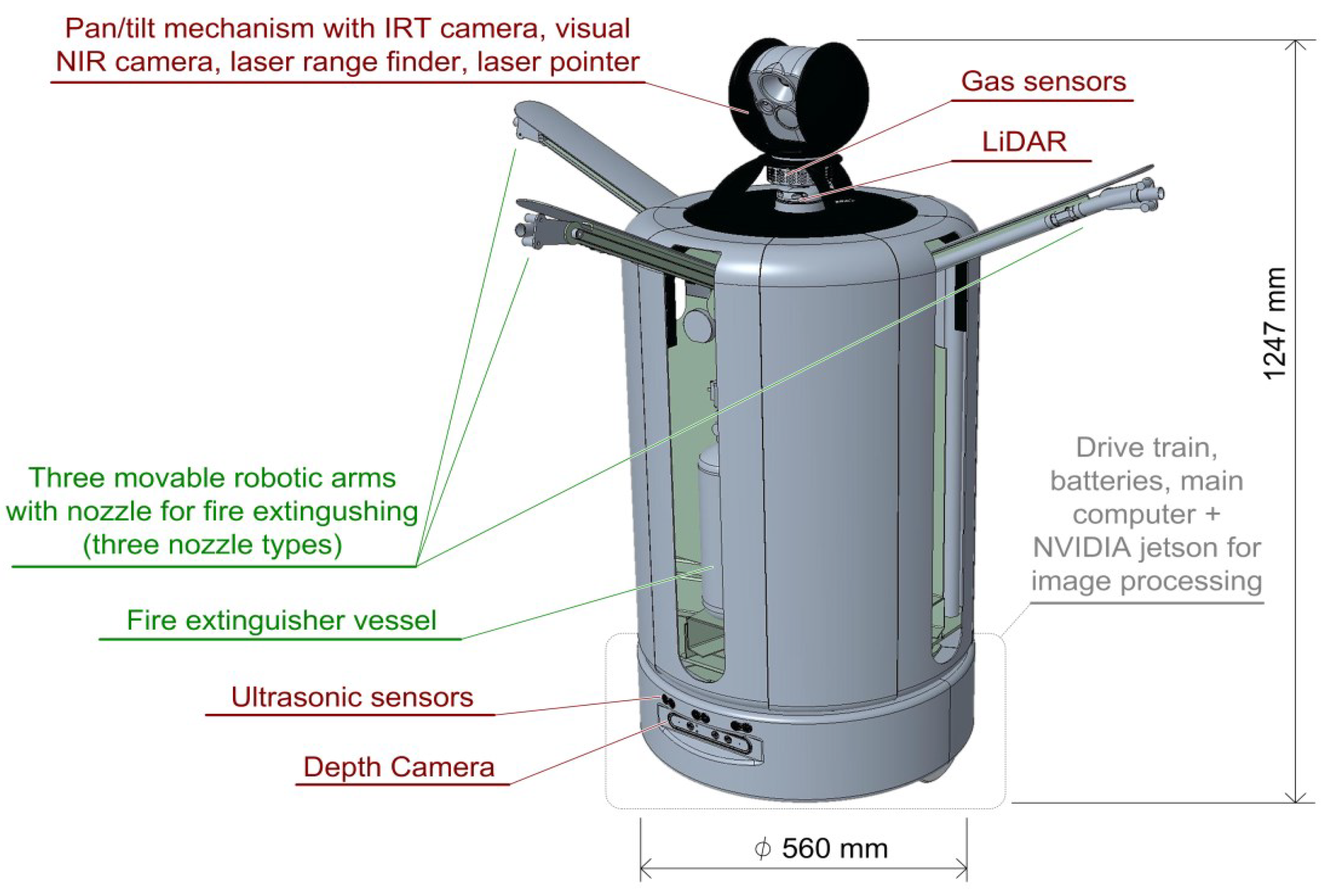

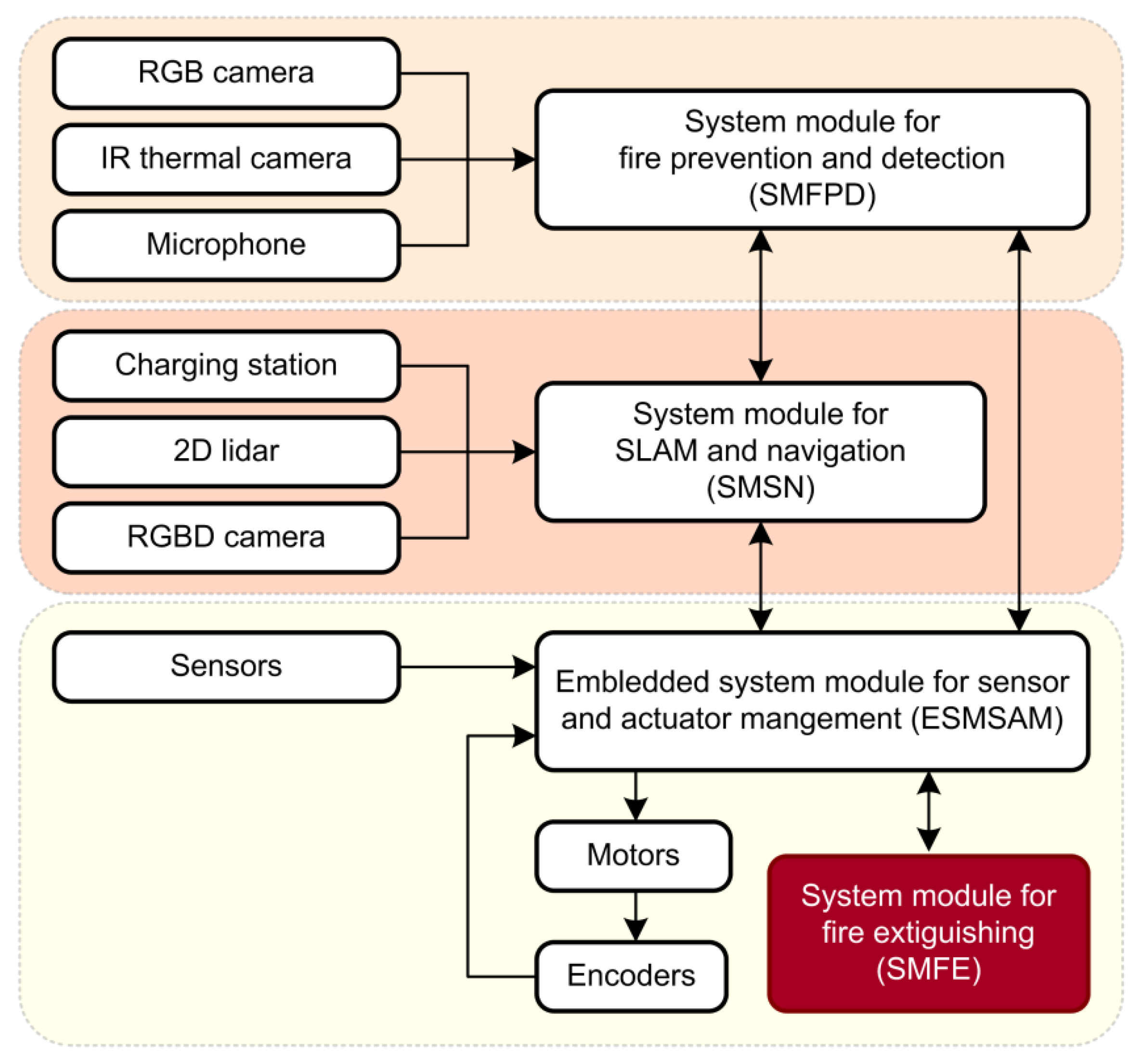

2.1. FireBot System Overview

2.1.1. FireBot Architecture

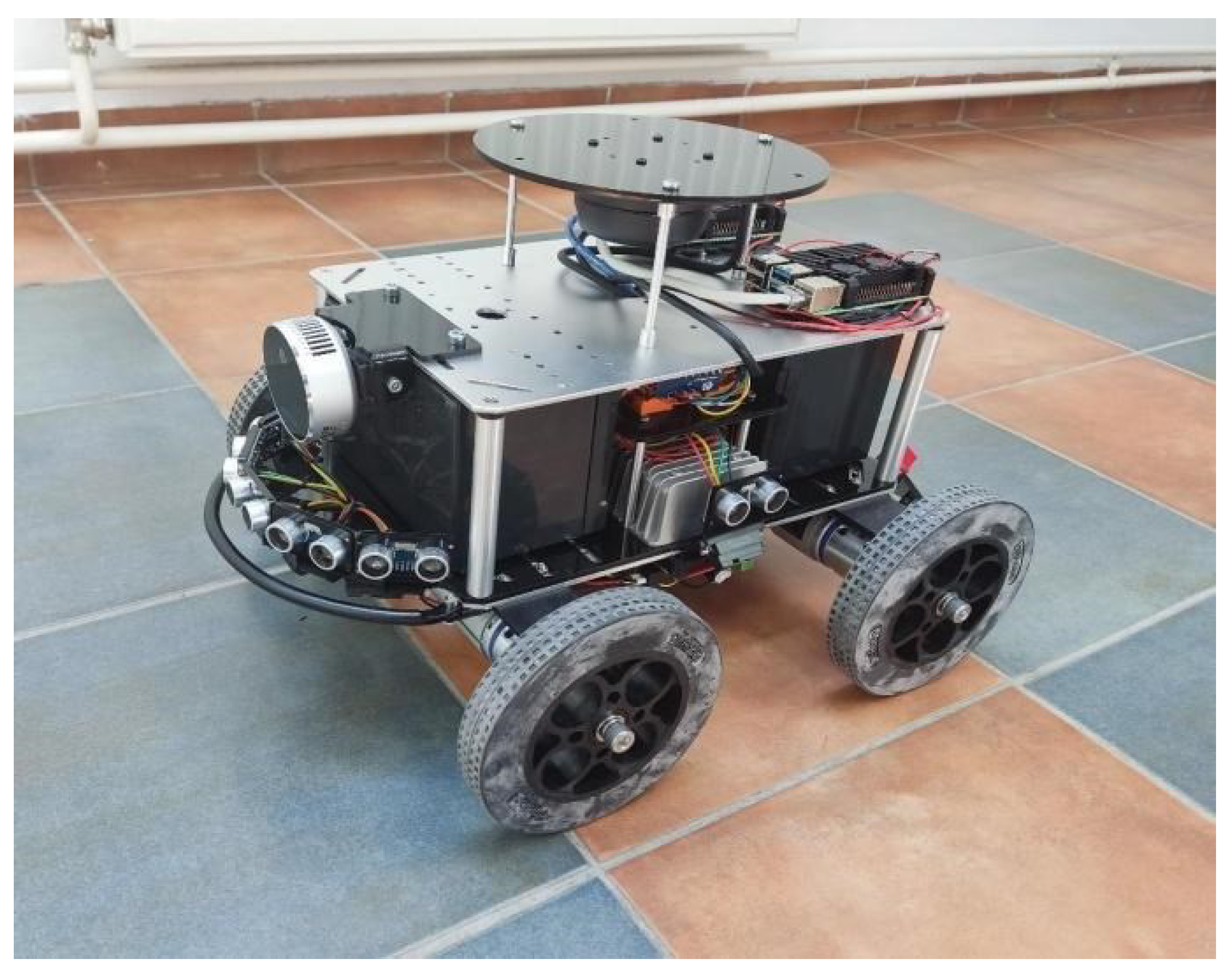

2.1.2. Hardware Specifications of an Experimental Platform

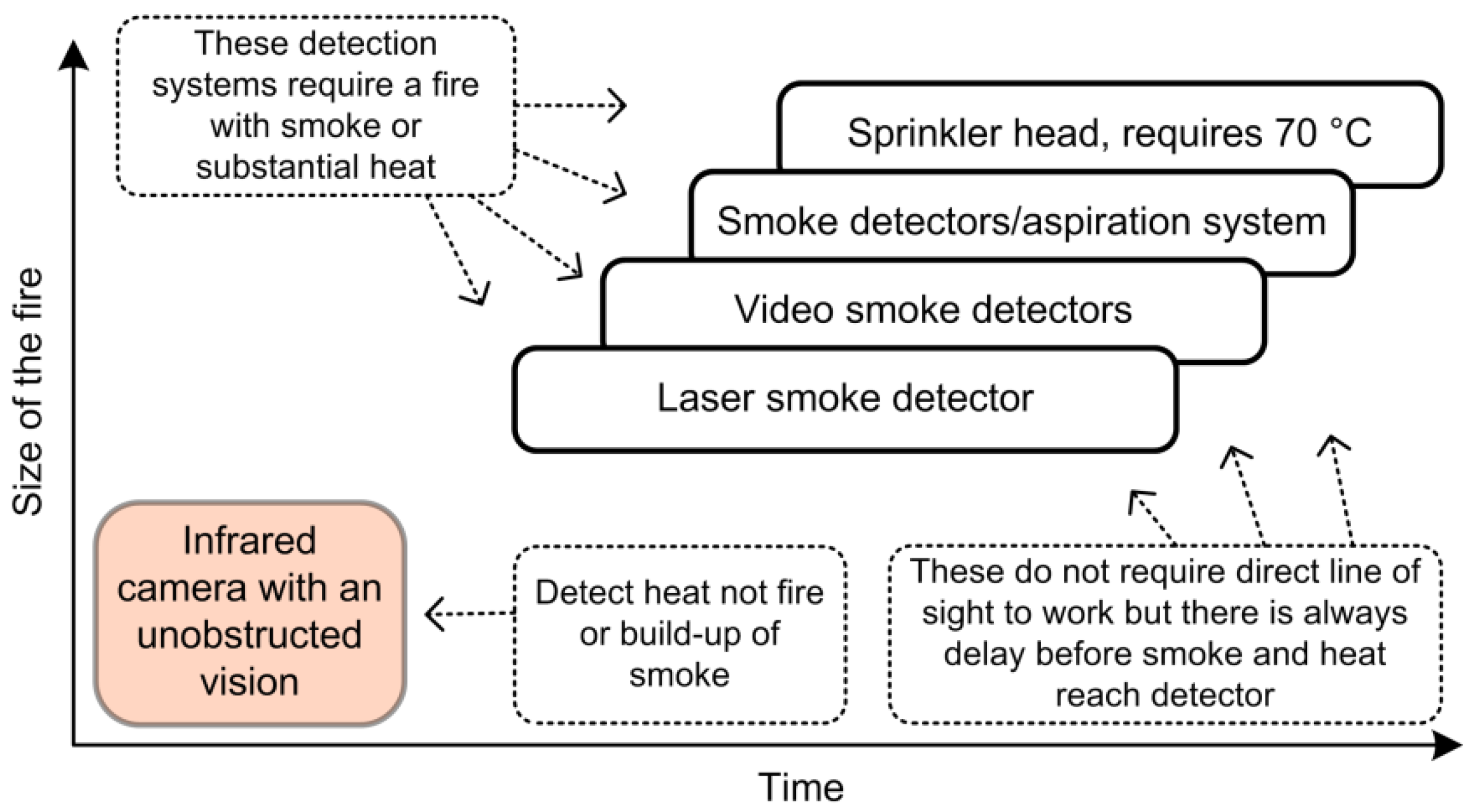

2.2. Fire Prevention and Fire Detection

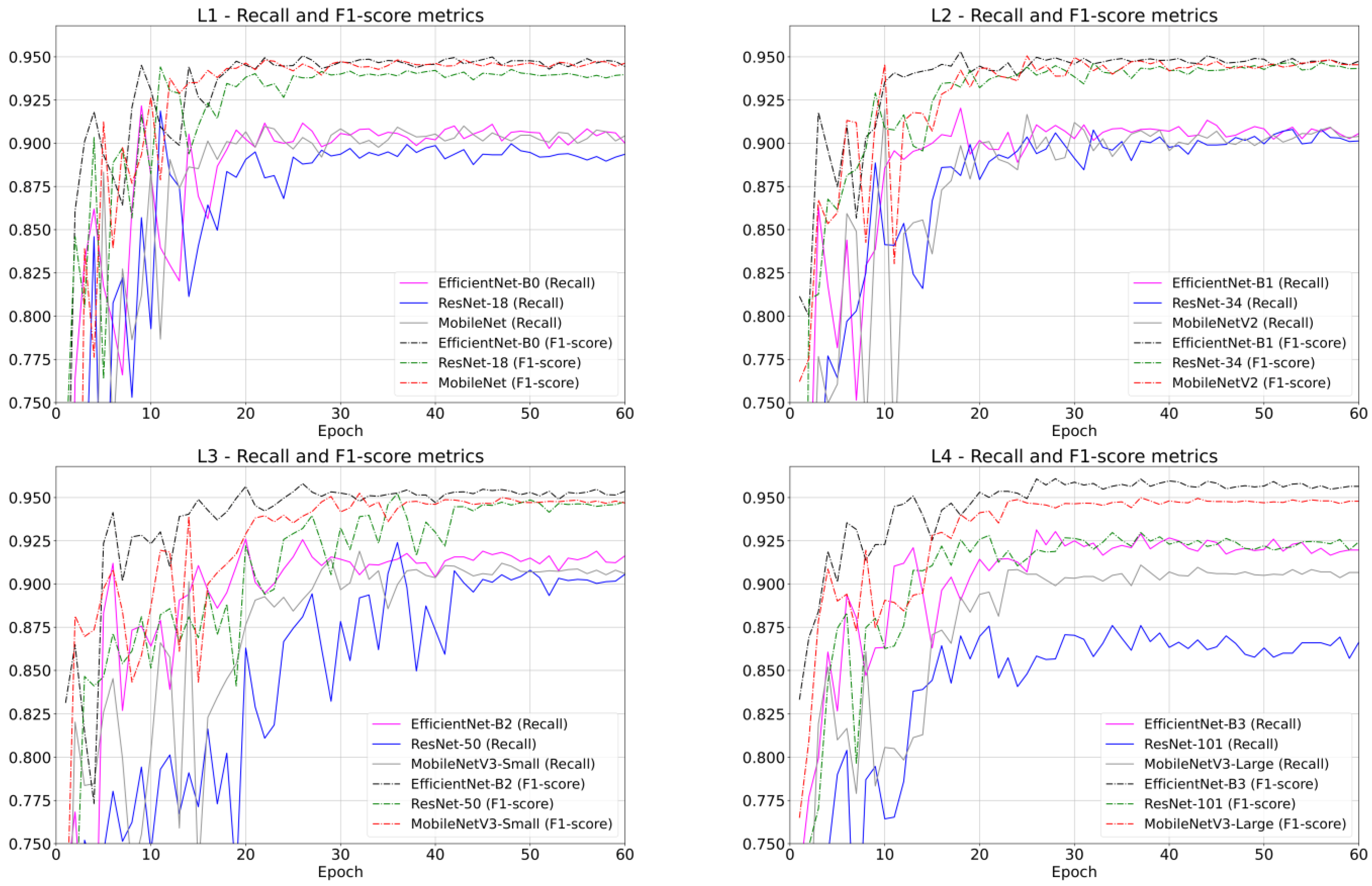

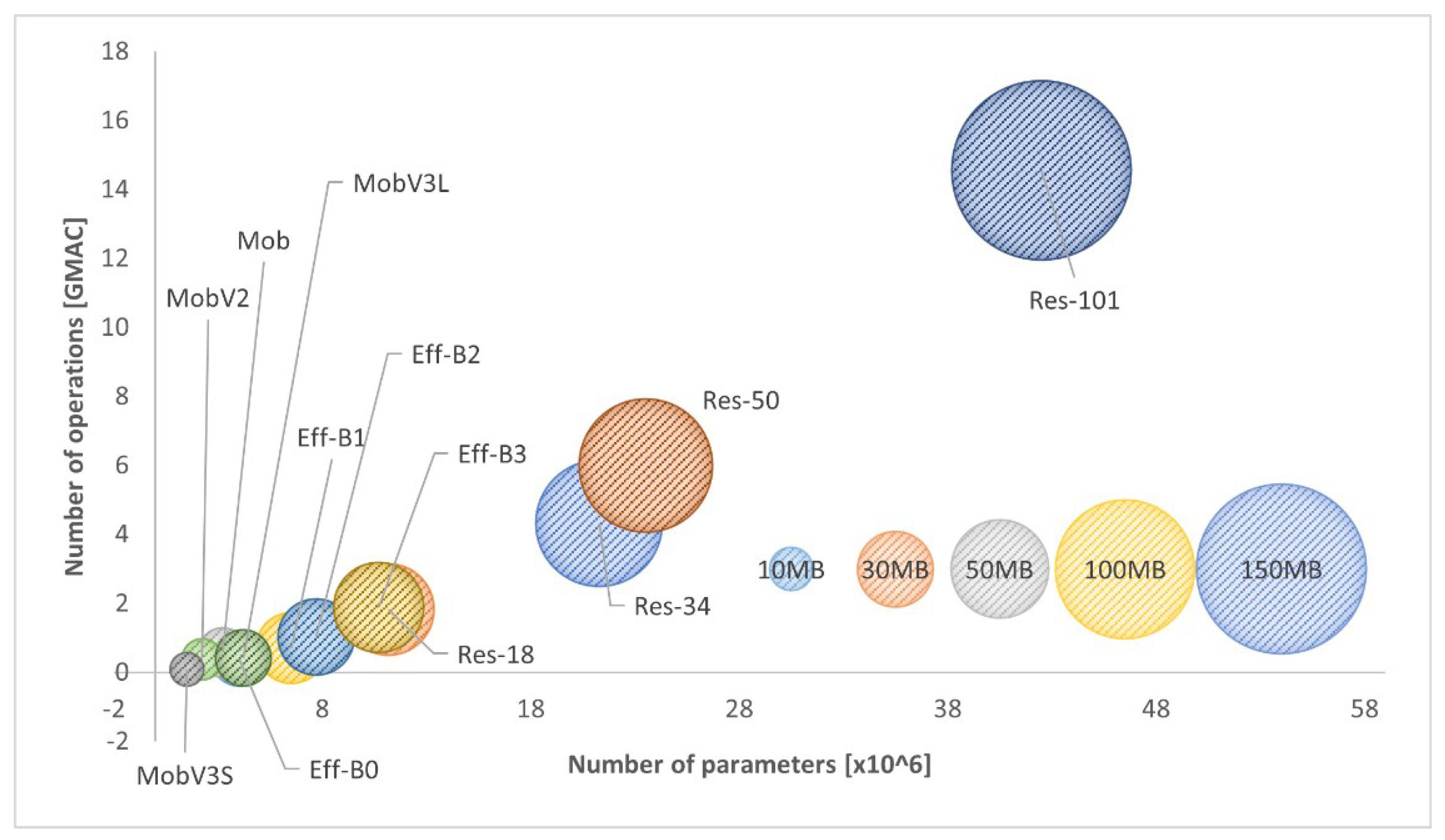

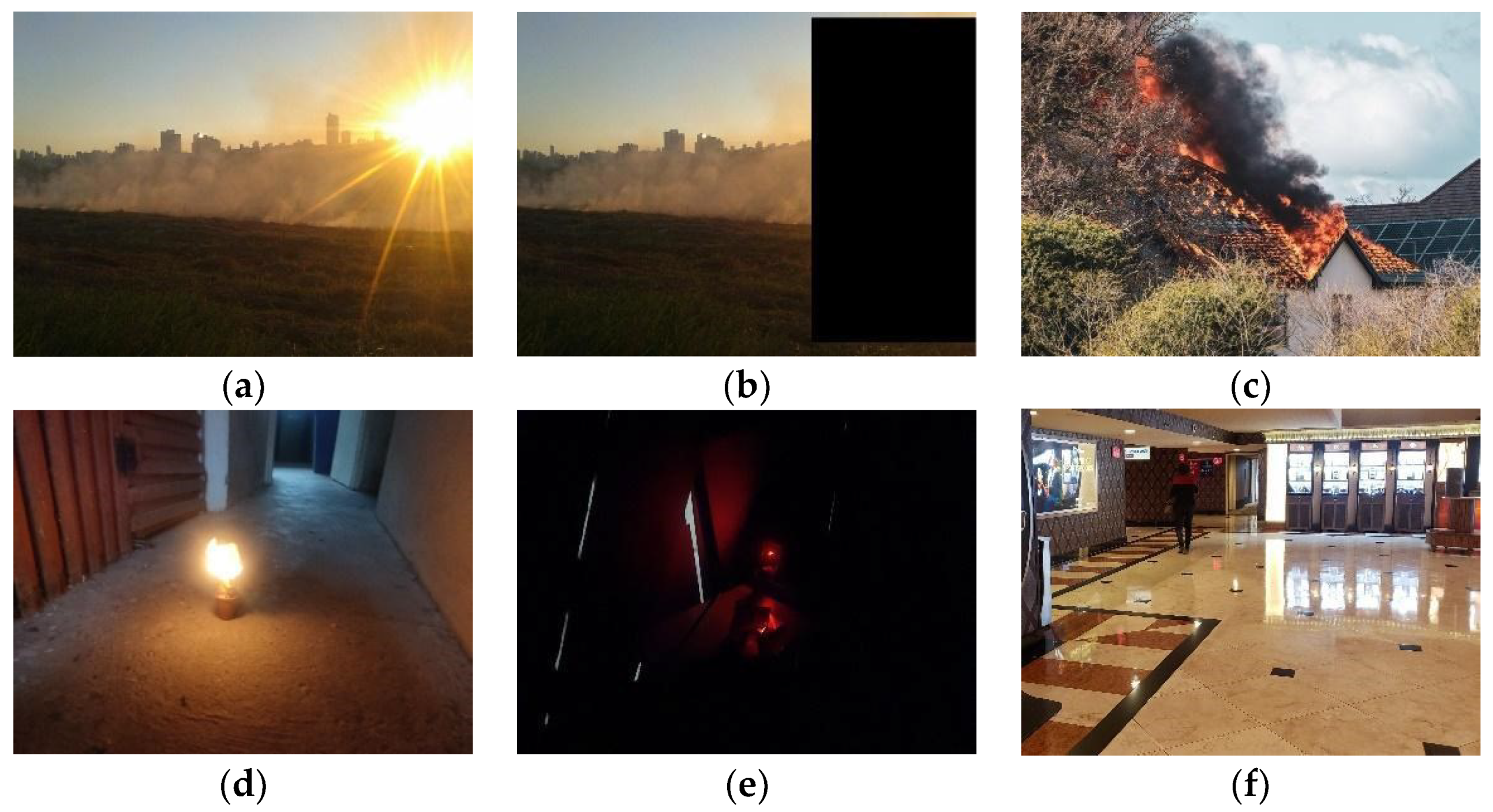

2.2.1. Image Classification

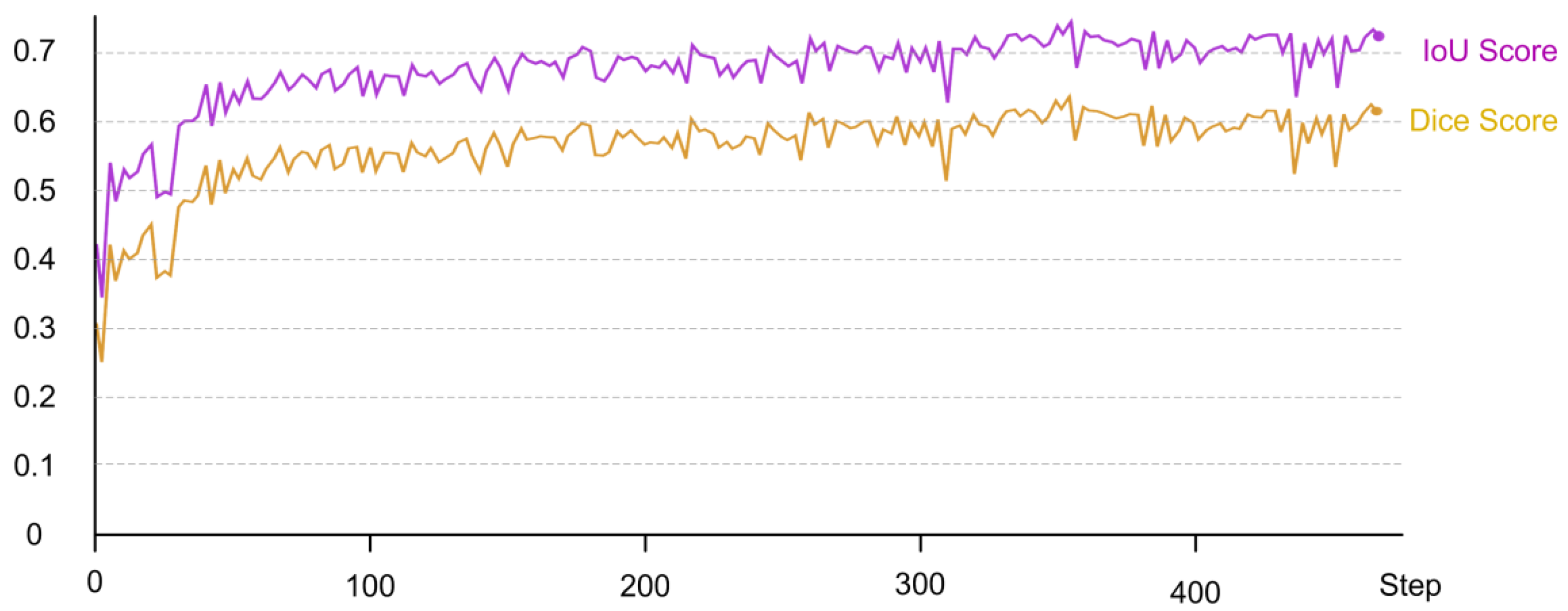

2.2.2. Semantic Segmentation

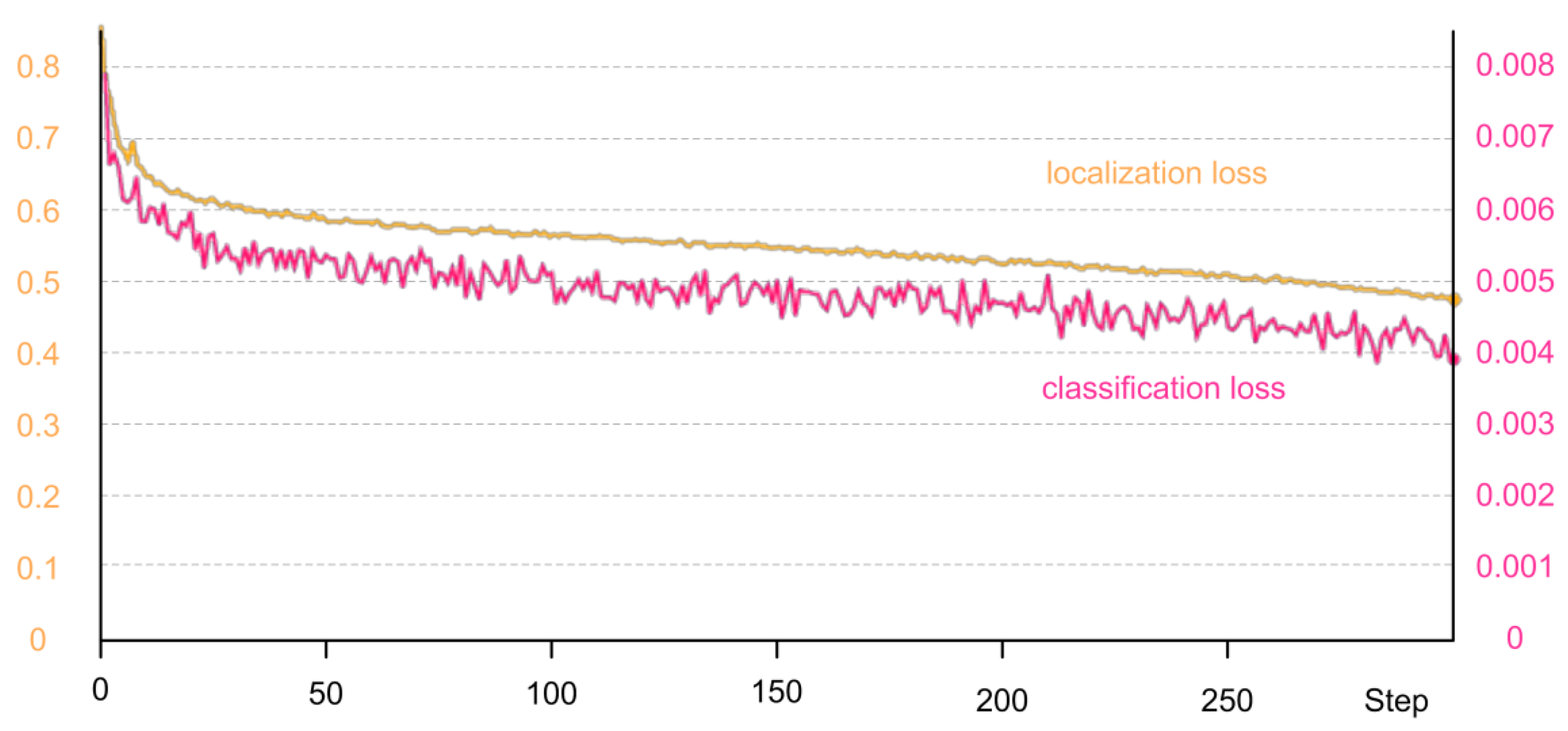

2.2.3. Object Detection

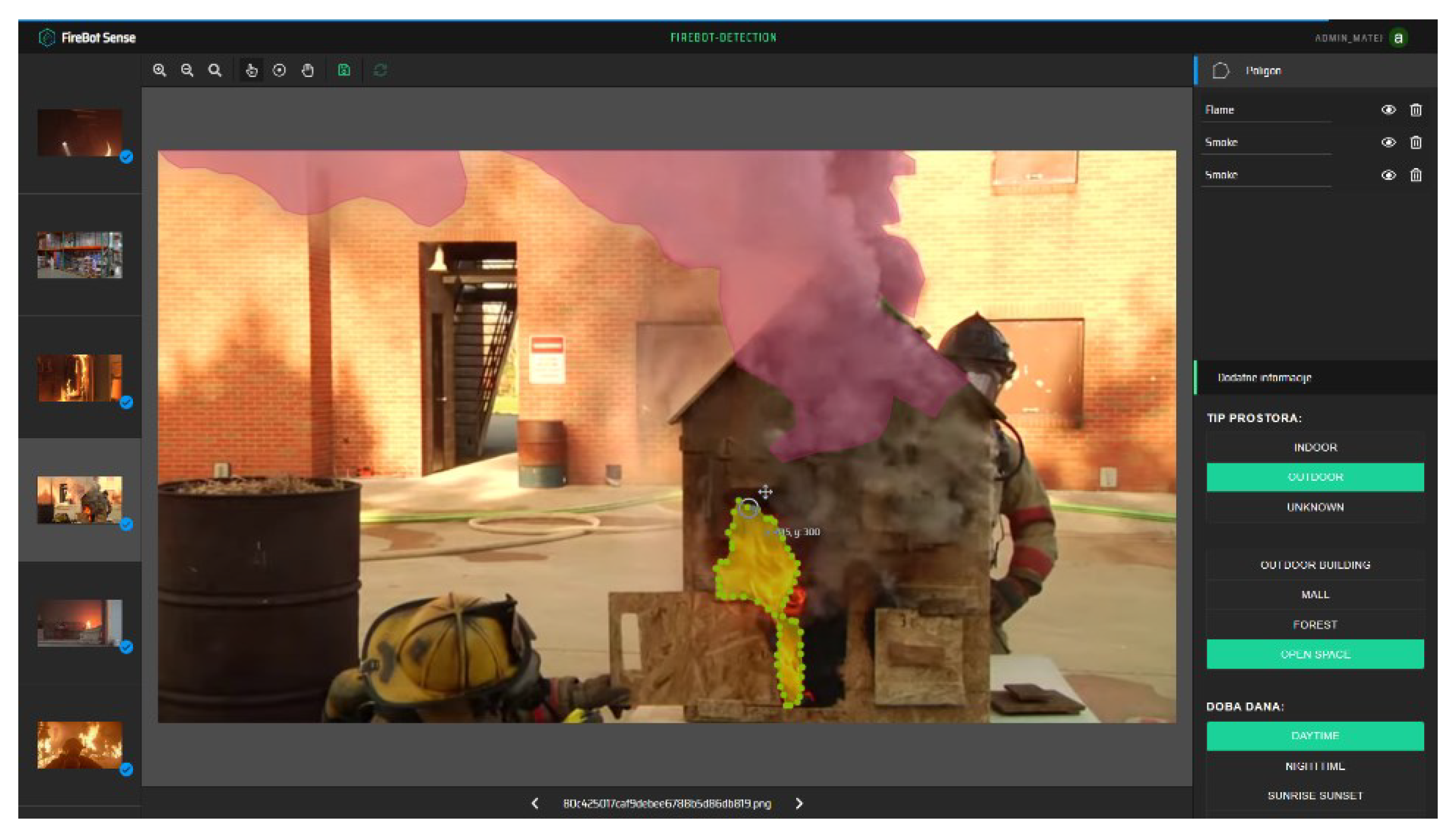

2.2.4. FireSense Image Annotation Tool

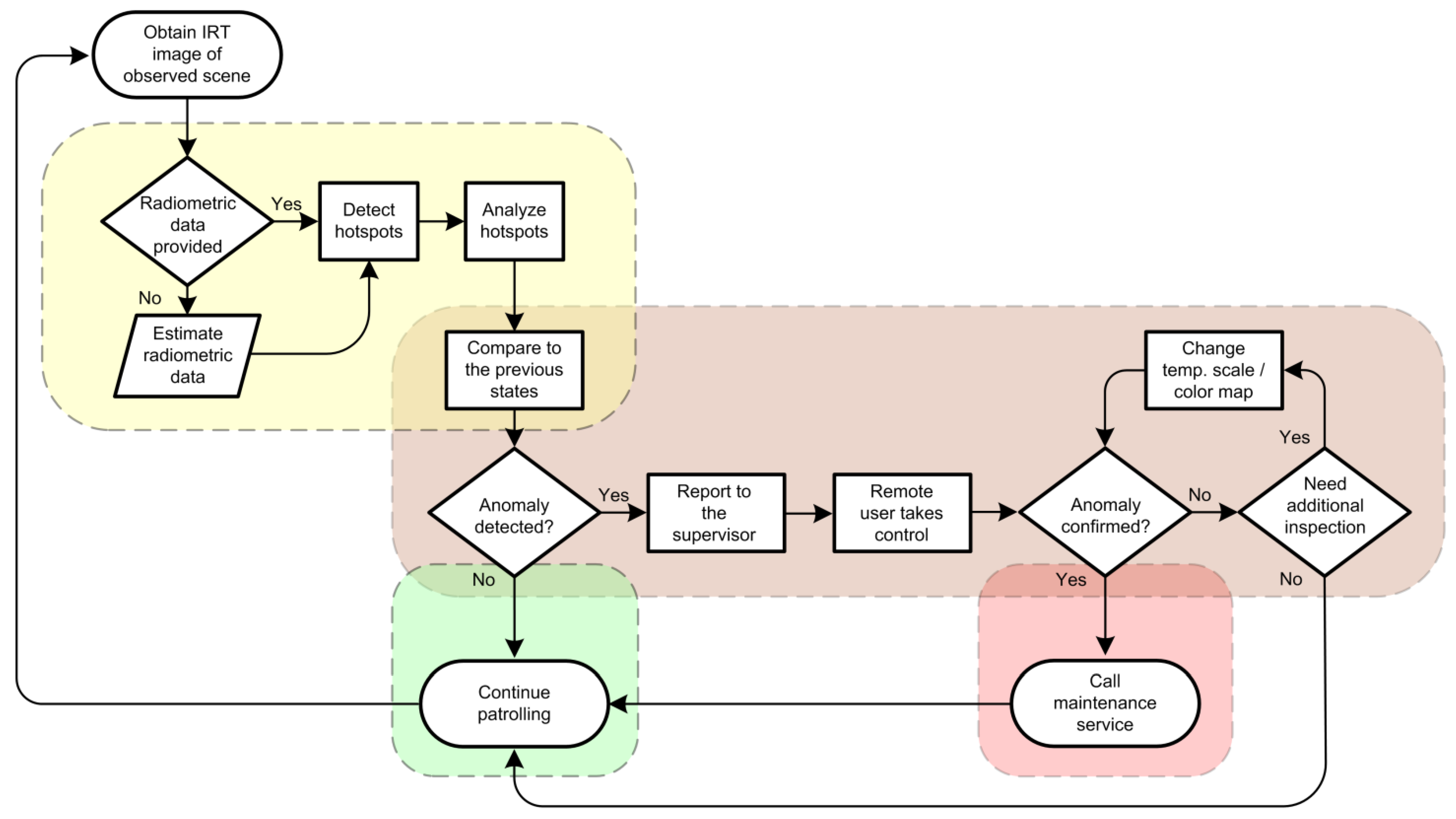

2.2.5. Temperature Anomaly Detection

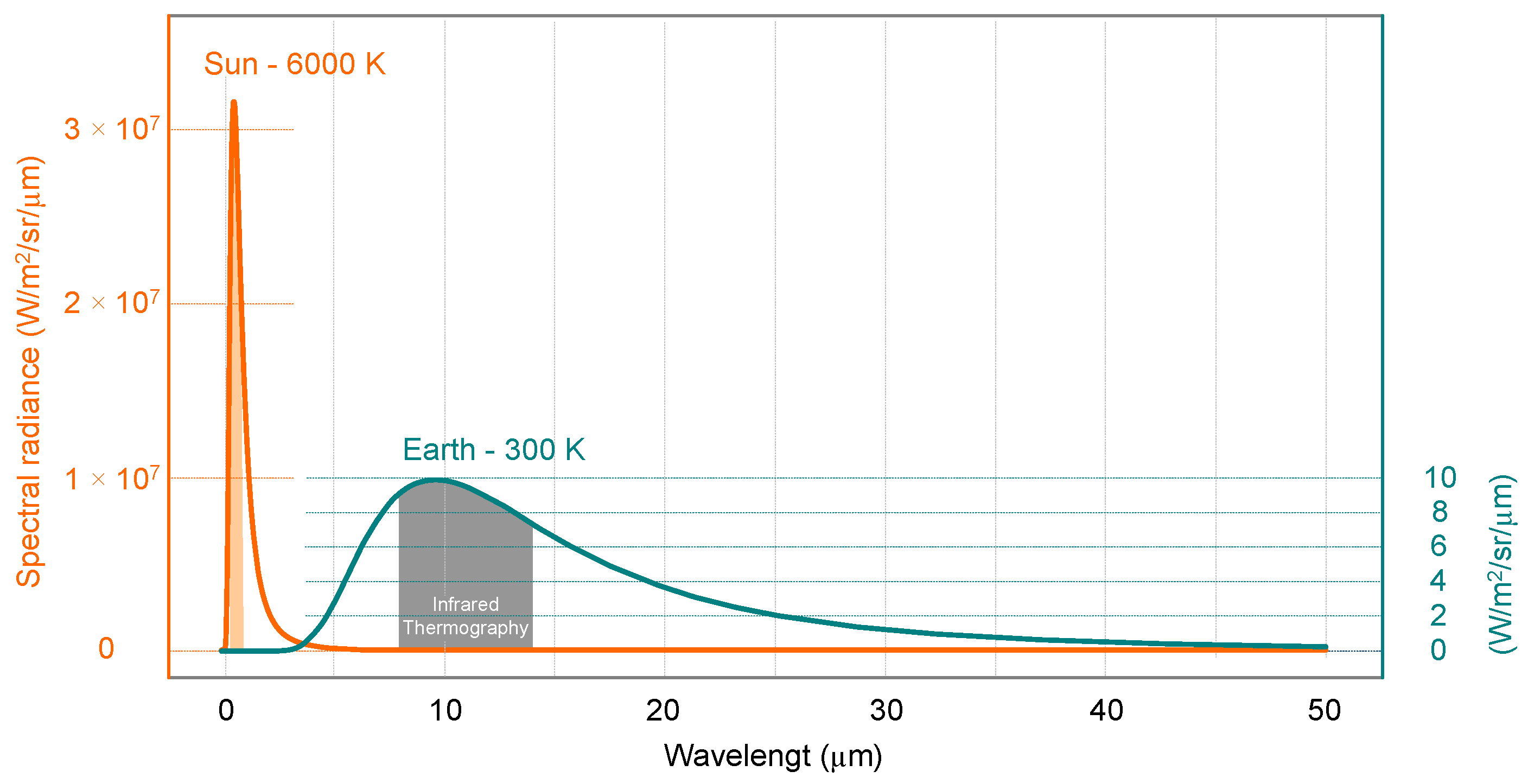

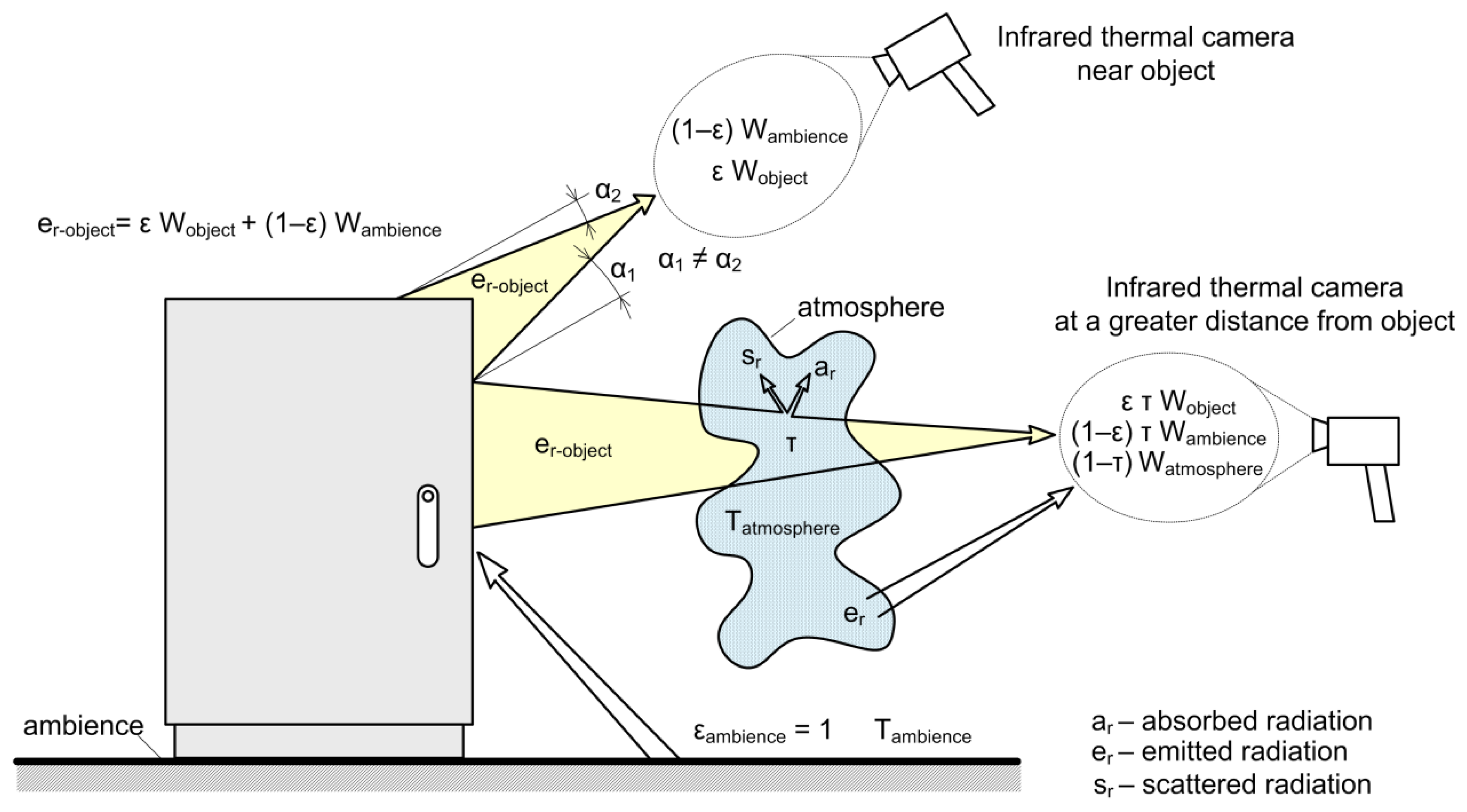

2.2.6. Radiometric Data Estimation

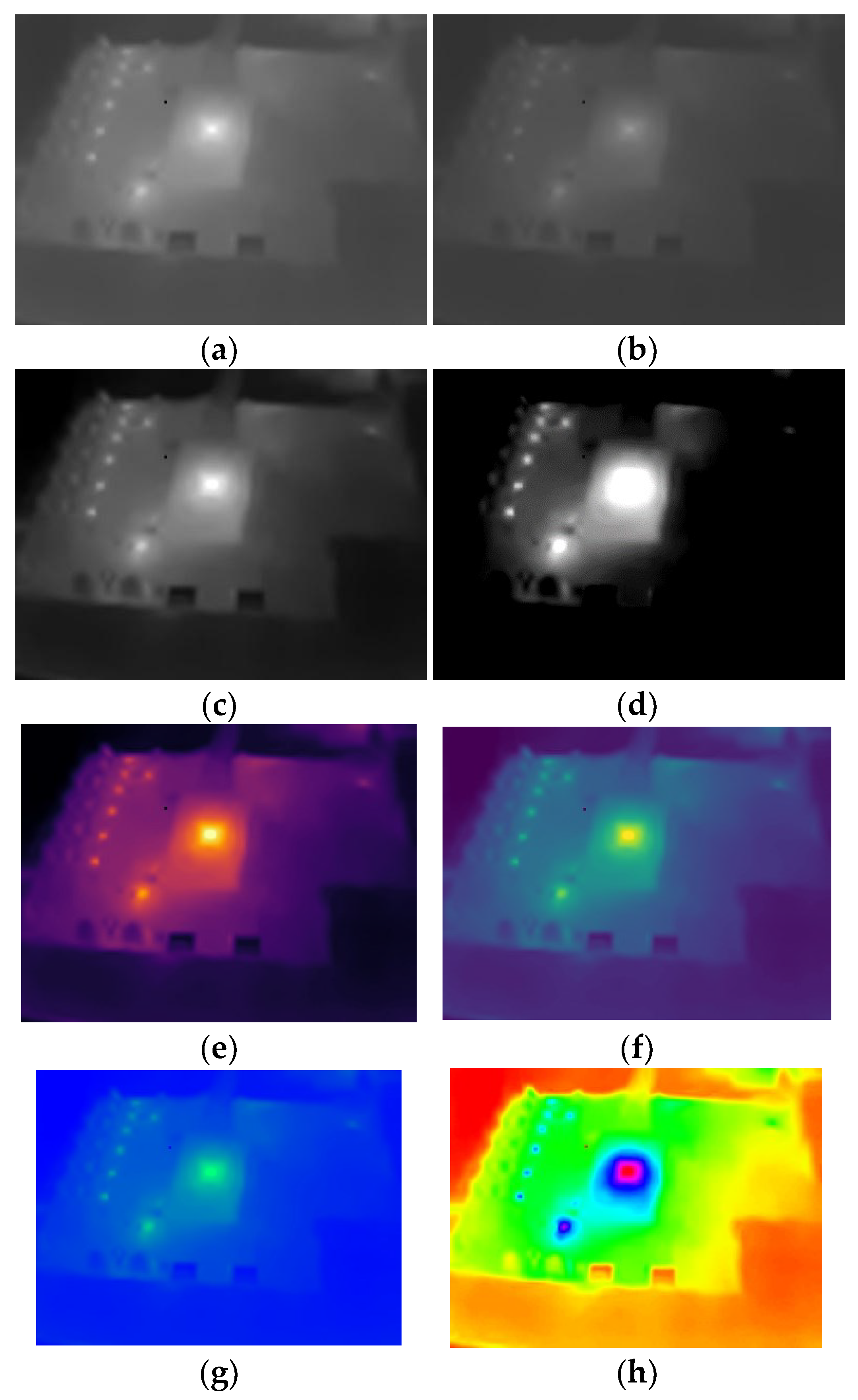

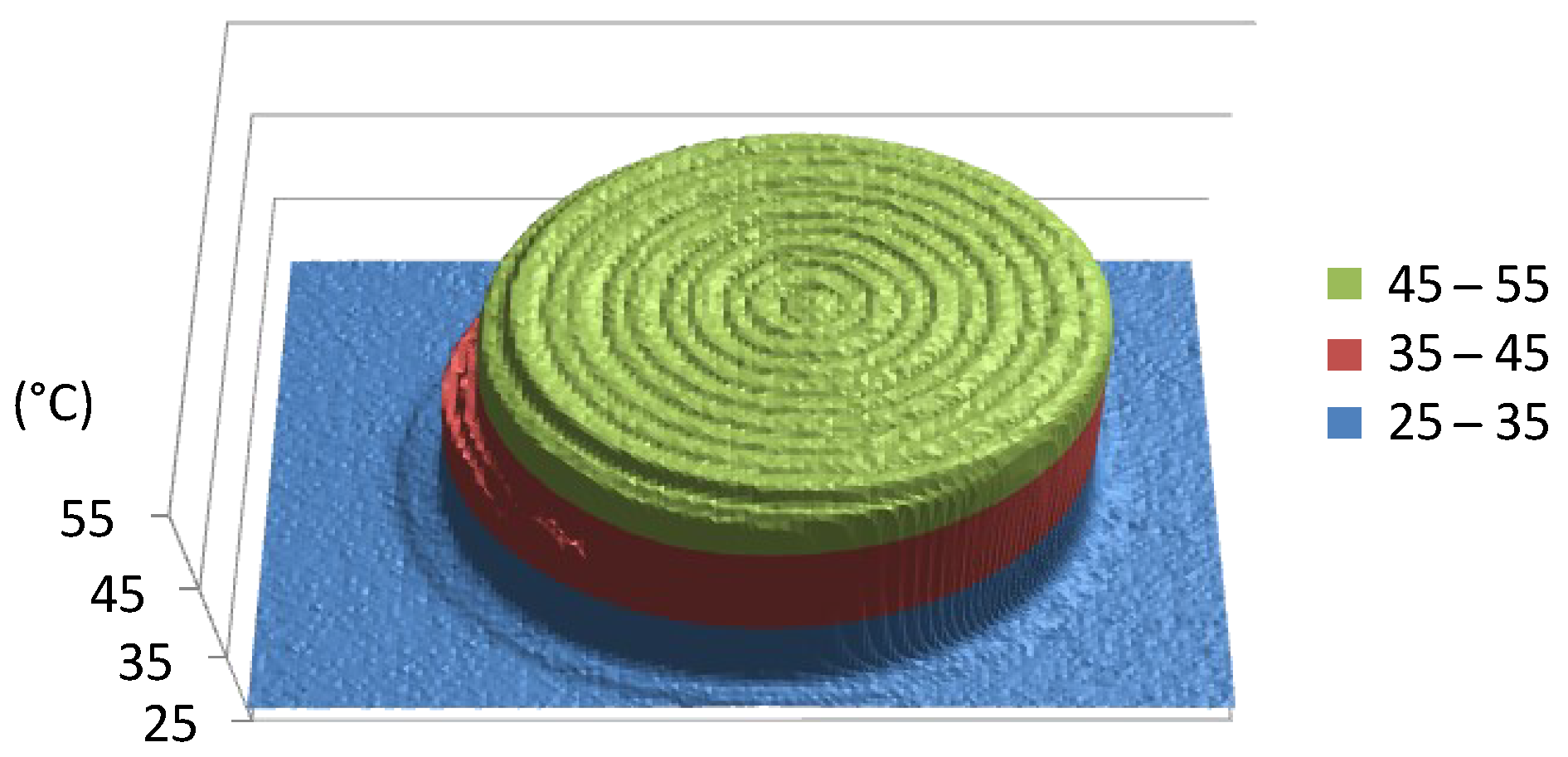

2.2.7. Generating Images from Radiometric Data

3. Results and Analysis of Radiometric Thermal Data

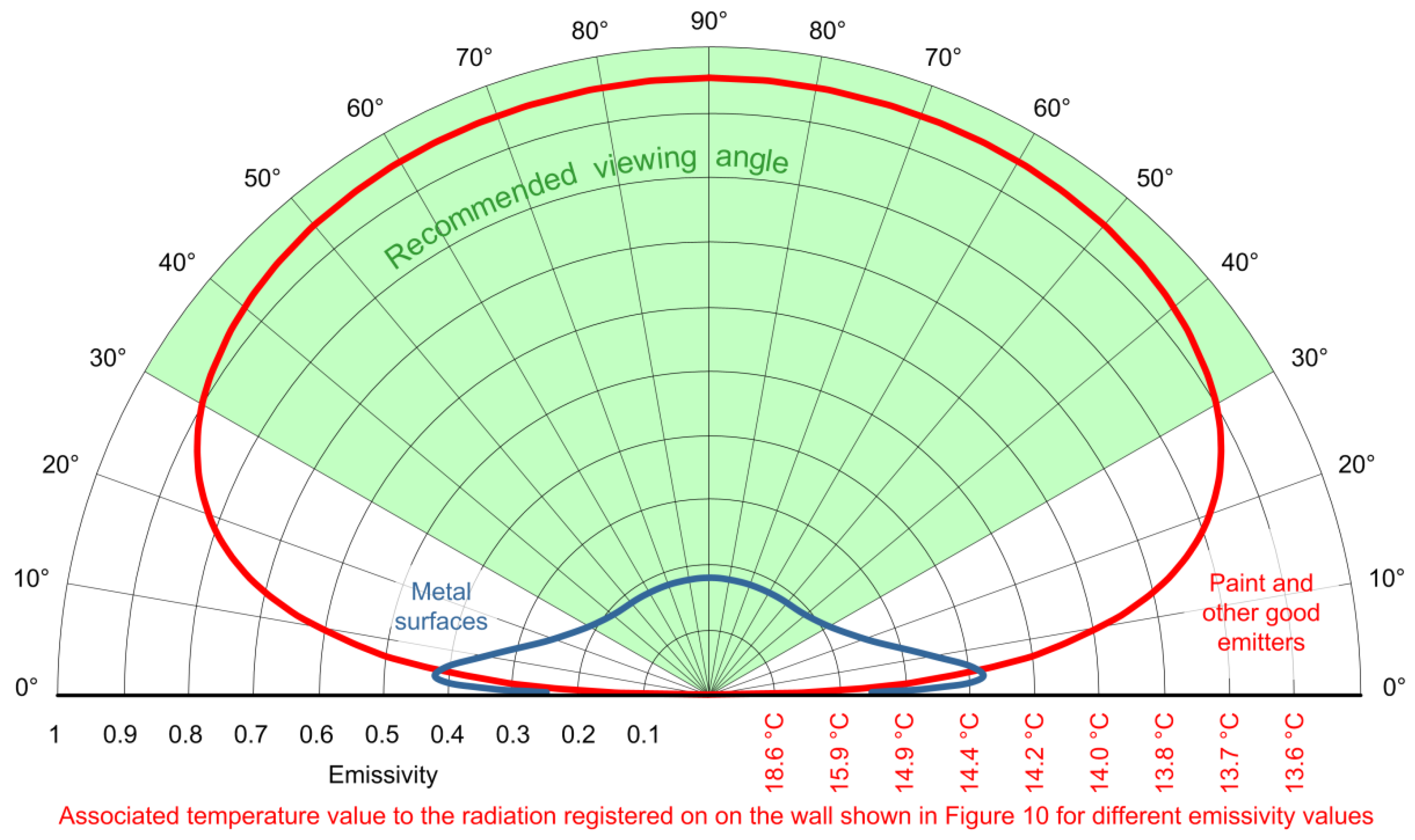

3.1. Influence of Emissivity on Radiometric Data

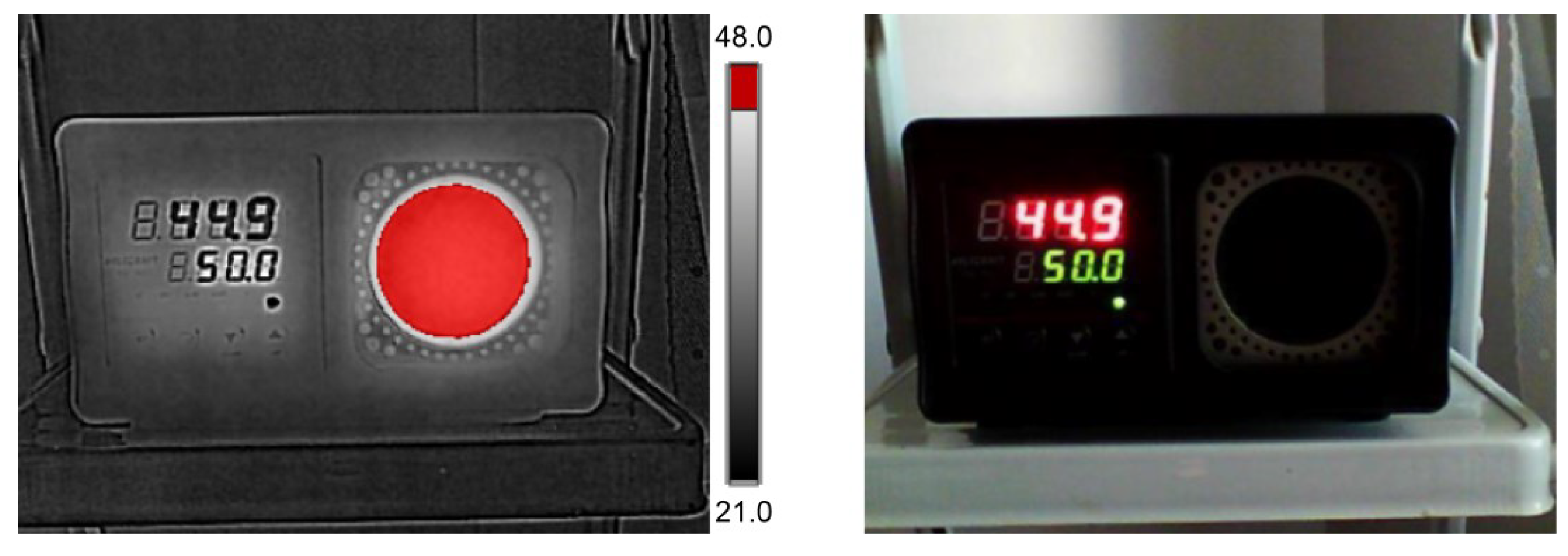

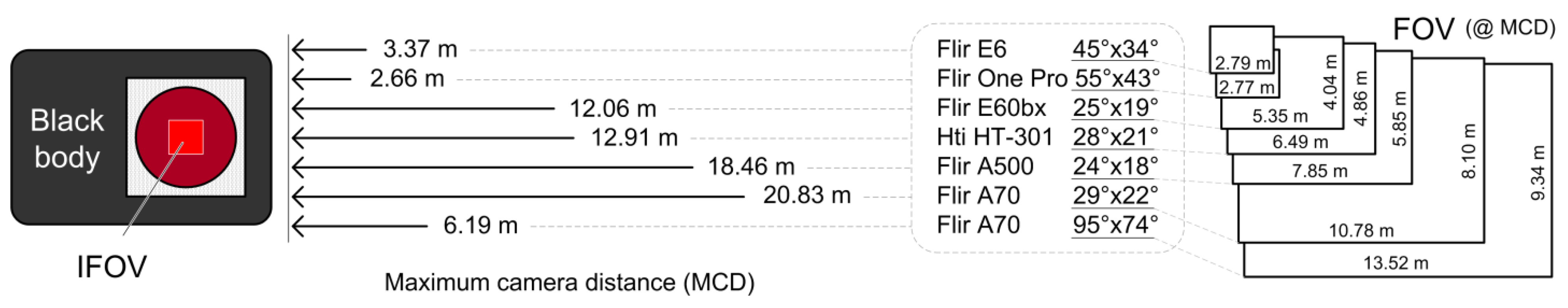

3.2. Basic Calibration When Using Several Different Cameras

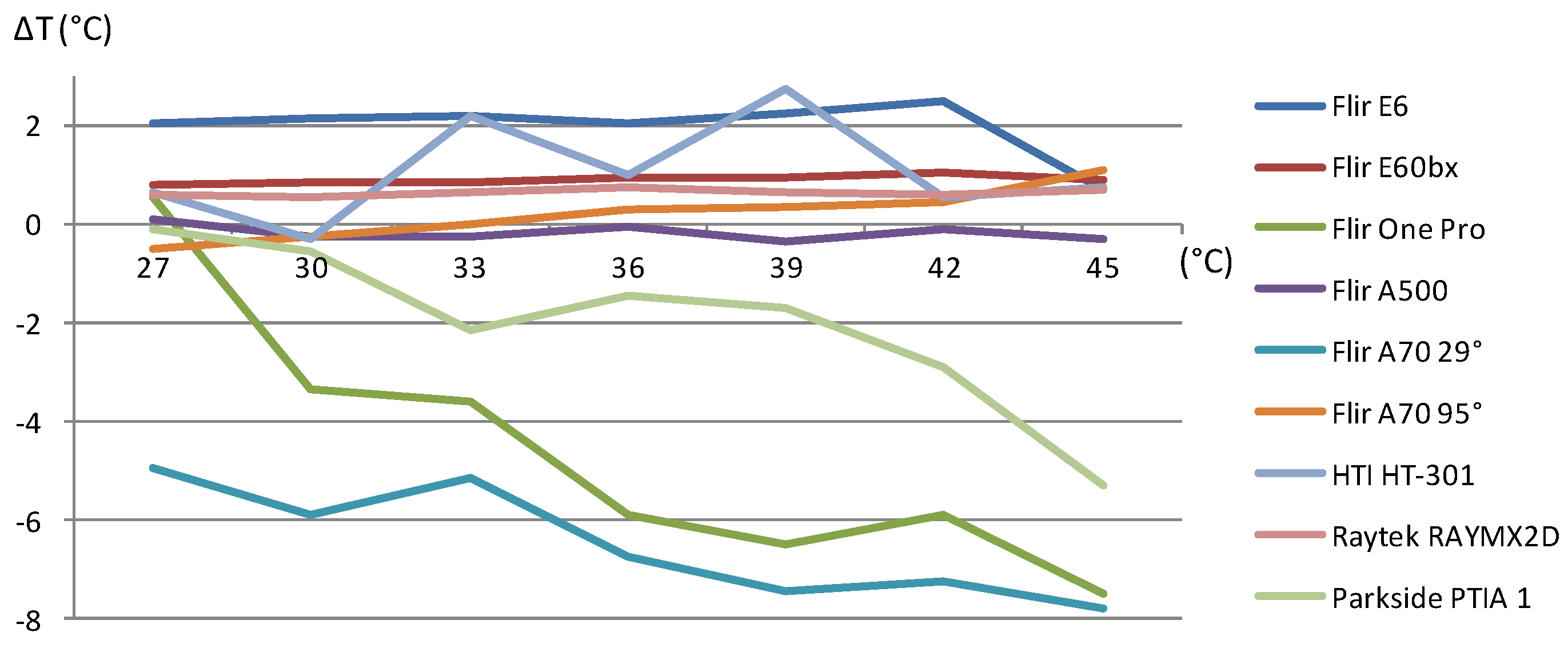

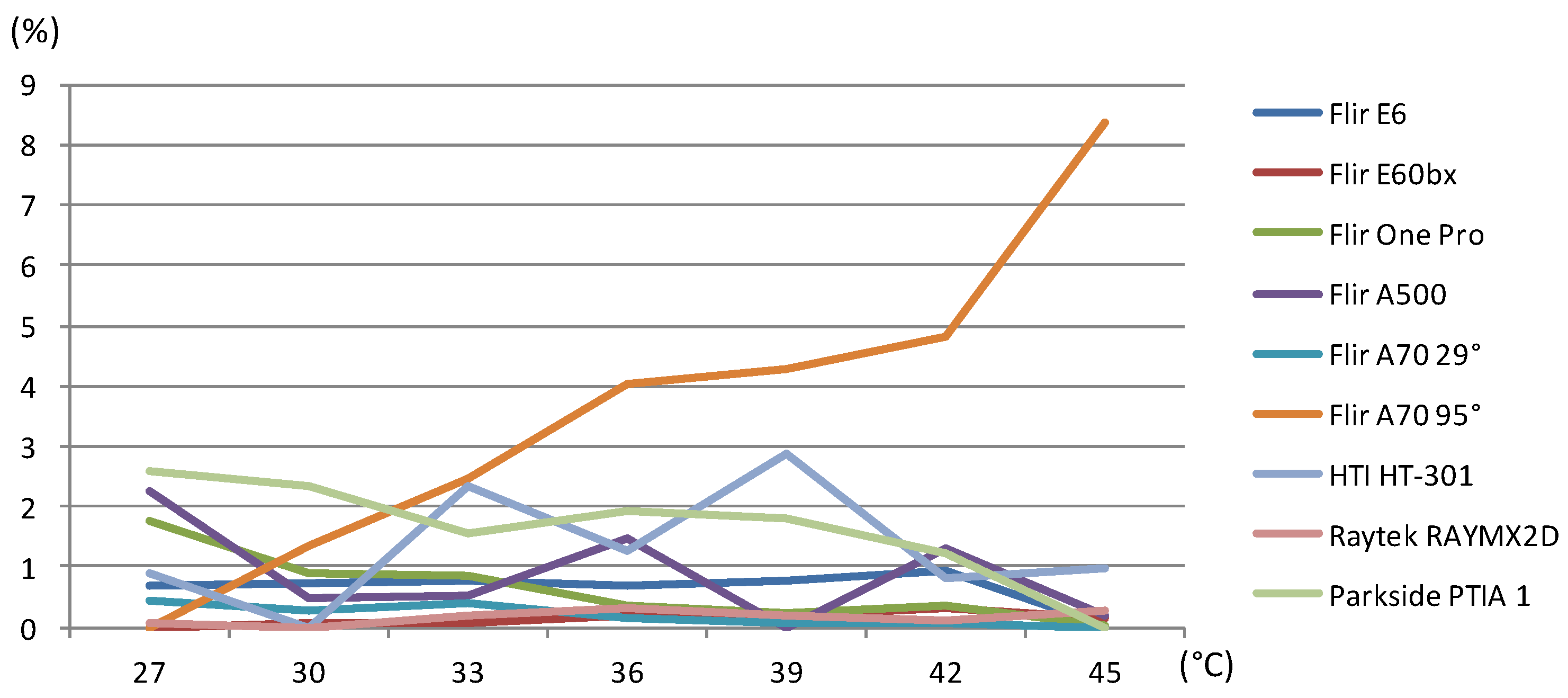

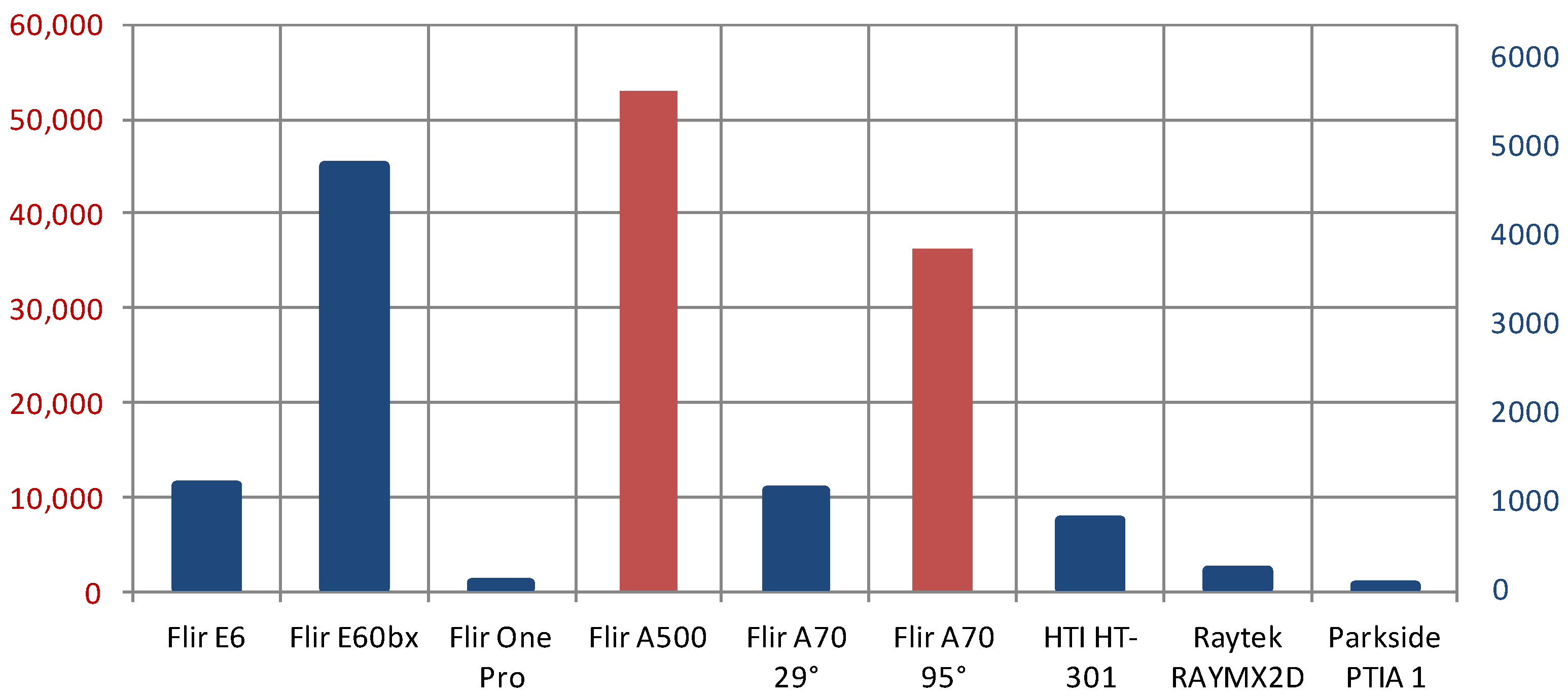

3.3. Analysis of Measurement Result Deviations

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Component Type | Component | Approximate Price |
|---|---|---|
| Drivetrain | Skid steering, 4 rubber wheels and motors with encoders (40 W) | USD 150 |
| CPU (navigation) | Raspberry Pi 4, 8 GB | USD 120 |
| CPU (image processing) | Raspberry Pi 4, 8 GB | USD 120 |
| Battery | 2 × Lead 12 Ah, 5 h autonomy | USD 80 |
| Sensors | Gyroscope, accelerometer, temperature, 5 × ultrasonic sensors in front + 2 on the sides, 3 × downward-facing IR sensors | USD 20 |
| Sensor-data management | Arduino Mega | USD 45 |
| LiDAR | RPLidar A2 (2D, 12 m range) | USD 320 |
| RGB-D camera | Orbbec Astra (640 × 480 @ 30 fps, RGB and depth) | USD 150 |
| Weight | 15 kg | |

| Component Type | Component | Approximate Price |
|---|---|---|
| Drivetrain | Differential drive, 2 rubber wheels and motors with encoders (200 W), 2 caster wheels for stability, suspension | USD 600 |
| CPU (navigation) | Industrial PC (i7-10610U, 32 GB DDR4, 512 GB SSD) | USD 1000 |
| CPU (image processing) | NVIDIA Jetson AGX Xavier 64 GB | USD 1700 |
| Battery | LiFePO4, 24 V, 100 Ah, 10 h autonomy | USD 600 |
| Sensors | Gyroscope, accelerometer, temperature sensor, 3 × ultrasonic sensors in front | USD 300 |
| Sensor-data management | Custom electronics with CAN bus interface | Built in-house |
| LiDAR | RPLidar A3 (2D, 25 m range) | USD 600 |
| RGB-D camera | Orbbec Astra (640 × 480 @ 30 fps, RGB and depth) | USD 150 |
| Visual camera | 1080p 30 fps RGB camera with NIR capabilities | Built in-house |
| Infrared thermal camera | 384 × 288, 30 fps, with full radiometric data | Built in-house |
| Other | Various gas sensors, microphone, LED reflector, laser range finder | USD 200 |
| Fire extinguishing | 3 × 3 kg (powder, foam, CO2), mechanical hand, electronic nozzle | Built in-house |
| Weight | 30 kg | |

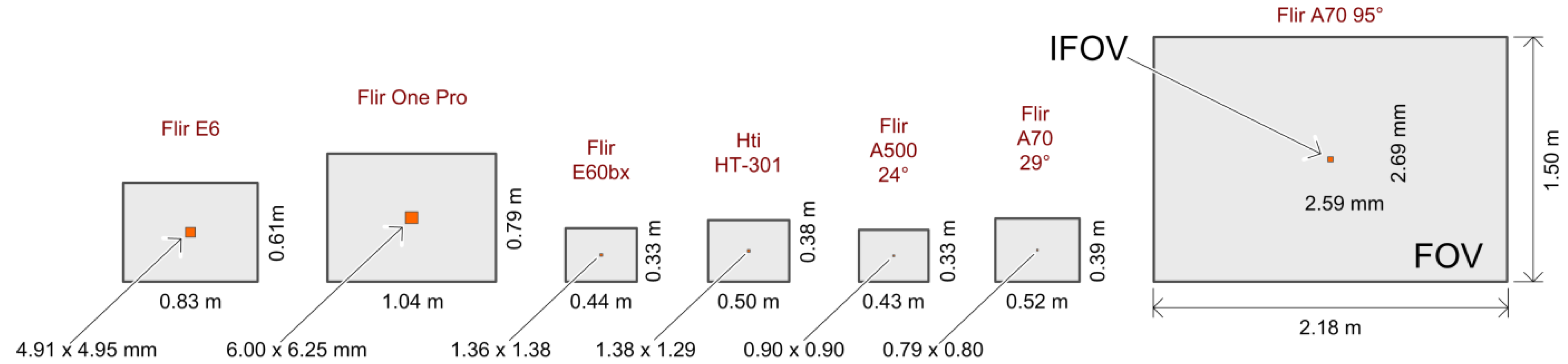

| Specification | Flir E6 | Flir One Pro | Flir E60bx | HTI HT-301 | Flir A500 24° | Flir A70 29° | Flir A70 95° |
|---|---|---|---|---|---|---|---|
| IR resolution | 160 × 120 | 160 × 120 | 320 × 240 | 384 × 288 | 464 × 348 | 640 × 480 | 640 × 480 |
| NETD | 60 mK | 70 mK | 45 mK | 60 mK | 40 mK | 45 mK | 60 mK |
| FOV | 45° × 34° | 55° × 43° | 25° × 19° | 28.2° × 21.3° | 24° × 18° | 29° × 22° | 95° × 74° |
| IFOV | 5.2 mrad | 6 mrad | 1.36 mrad | 1.28 mrad | 0.90 mrad | 0.79 mrad | 2.59 mrad |
| Spectral range | 7.5–13 µm | 8–14 µm | 7.5–13 µm | 8–14 µm | 7.5–14 µm | 7.5–14 µm | 7.5–14 µm |
| Temp. range (°C) | −20 to +250 | −20 to +120 | −20 to +120 | −20 to +120 | −20 to +120 | −20 to +175 | −20 to +175 |
| Accuracy (°C or % of reading), for ambient temperature 15 °C to 35 °C | ±2 °C or ±2% | ±3 °C or ±5% | ±2 °C or ±2% | ±3 °C or ±3% | ±2 °C or ±2% | ±2 °C or ±2% | ±2 °C or ±2% |
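The IFOV row can be roughly cross-checked against the FOV and IR resolution rows. The sketch below assumes the common small-angle approximation (horizontal FOV divided by horizontal pixel count); the function names and the 2 m working distance are illustrative assumptions, not values from the study. For most cameras in the table the approximation lands close to the listed IFOV, while the Flir E6 comes out near 4.9 mrad against the listed 5.2 mrad, so manufacturer figures should take precedence.

```python
import math

# Horizontal FOV (degrees) and horizontal pixel count, copied from the table above.
CAMERAS = {
    "Flir E6": (45.0, 160),
    "Flir One Pro": (55.0, 160),
    "Flir E60bx": (25.0, 320),
    "HTI HT-301": (28.2, 384),
    "Flir A500 24°": (24.0, 464),
    "Flir A70 29°": (29.0, 640),
    "Flir A70 95°": (95.0, 640),
}


def approx_ifov_mrad(hfov_deg, h_pixels):
    """Small-angle estimate of the per-pixel viewing angle in milliradians."""
    return math.radians(hfov_deg) / h_pixels * 1000.0


def spot_size_mm(ifov_mrad, distance_m):
    """Approximate side length (mm) of the area covered by one pixel."""
    # mrad * m = 1e-3 rad * m, i.e. millimetres for small angles.
    return ifov_mrad * distance_m


if __name__ == "__main__":
    distance_m = 2.0  # hypothetical working distance, chosen only for illustration
    for name, (hfov, pixels) in CAMERAS.items():
        ifov = approx_ifov_mrad(hfov, pixels)
        spot = spot_size_mm(ifov, distance_m)
        print(f"{name}: ~{ifov:.2f} mrad, ~{spot:.1f} mm per pixel at {distance_m} m")
```

A smaller IFOV means that a hot spot of a given size covers more pixels at the same distance, which matters when the reading of a small target is compared between cameras.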

| Blackbody | Voltcraft IRS-350 | Flir Systems BB150-P |
|---|---|---|
| Temperature range | 50 °C to 350 °C | 45 °C to 150 °C |
| Accuracy | ±0.5 °C at 100 °C; ±1.2 °C at 350 °C | ±0.7 °C (0 to 10 °C); ±0.5 °C (10 to 40 °C) |
| Stability | ±0.1 °C at 100 °C; ±0.2 °C at 350 °C | ±0.2 °C |
| Emissivity of measuring area | 0.95 | 0.98 |
| Operating temperature | 5 °C to 35 °C | 0 °C to 40 °C |

Average measurements for defined reference points (°C)

| Device | 27 | 30 | 33 | 36 | 39 | 42 | 45 |
|---|---|---|---|---|---|---|---|
| Flir E6 | 29.03 | 32.13 | 35.20 | 38.05 | 41.25 | 44.50 | 45.70 |
| Flir E60bx | 27.77 | 30.85 | 33.85 | 36.93 | 39.95 | 43.05 | 45.90 |
| Flir One Pro | 27.52 | 26.63 | 29.40 | 30.08 | 32.50 | 36.10 | 37.50 |
| Flir A500 | 27.07 | 29.73 | 32.74 | 35.92 | 38.64 | 41.89 | 44.68 |
| Flir A70 29° | 22.03 | 24.09 | 27.87 | 29.27 | 31.55 | 34.76 | 37.22 |
| Flir A70 95° | 26.50 | 29.76 | 32.97 | 36.27 | 39.32 | 42.42 | 46.10 |
| HTI HT-301 | 27.64 | 29.67 | 35.18 | 37.01 | 41.72 | 42.53 | 45.73 |
| Raytek RAYMX2D | 27.57 | 30.53 | 33.65 | 36.72 | 39.65 | 42.61 | 45.69 |
| Parkside PTIA 1 | 26.91 | 29.44 | 30.84 | 34.55 | 37.31 | 39.12 | 39.69 |
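The deviation of each device follows directly from the averages above as measured value minus reference value. The following minimal sketch reproduces that arithmetic; the function names and the mean absolute deviation summary are illustrative additions, not taken from the study.

```python
# Reference points (°C) and average readings per device (°C), copied from the table above.
REFERENCE_POINTS = [27, 30, 33, 36, 39, 42, 45]

MEASURED = {
    "Flir E6": [29.03, 32.13, 35.20, 38.05, 41.25, 44.50, 45.70],
    "Flir E60bx": [27.77, 30.85, 33.85, 36.93, 39.95, 43.05, 45.90],
    "Flir One Pro": [27.52, 26.63, 29.40, 30.08, 32.50, 36.10, 37.50],
    "Flir A500": [27.07, 29.73, 32.74, 35.92, 38.64, 41.89, 44.68],
    "Flir A70 29°": [22.03, 24.09, 27.87, 29.27, 31.55, 34.76, 37.22],
    "Flir A70 95°": [26.50, 29.76, 32.97, 36.27, 39.32, 42.42, 46.10],
    "HTI HT-301": [27.64, 29.67, 35.18, 37.01, 41.72, 42.53, 45.73],
    "Raytek RAYMX2D": [27.57, 30.53, 33.65, 36.72, 39.65, 42.61, 45.69],
    "Parkside PTIA 1": [26.91, 29.44, 30.84, 34.55, 37.31, 39.12, 39.69],
}


def deviations(measured, reference=REFERENCE_POINTS):
    """Measured minus reference for each reference point, rounded to 0.01 °C."""
    return [round(m - r, 2) for m, r in zip(measured, reference)]


def mean_abs_deviation(measured, reference=REFERENCE_POINTS):
    """Average magnitude of the deviation over all reference points (°C)."""
    diffs = [abs(m - r) for m, r in zip(measured, reference)]
    return sum(diffs) / len(diffs)


if __name__ == "__main__":
    for device, readings in MEASURED.items():
        mad = mean_abs_deviation(readings)
        print(f"{device:16s} {deviations(readings)}  mean |dev| = {mad:.2f} °C")
```

The sign convention matters: a negative deviation means the device reads lower than the reference.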

Deviation in °C from the reference value, and camera-price information

| Device | 27 | 30 | 33 | 36 | 39 | 42 | 45 | Price (USD) |
|---|---|---|---|---|---|---|---|---|
| Flir E6 | 2.03 | 2.13 | 2.20 | 2.05 | 2.25 | 2.50 | 0.70 | 2259.00 |
| Flir E60bx | 0.77 | 0.85 | 0.85 | 0.93 | 0.95 | 1.05 | 0.90 | 4295.00 |
| Flir One Pro | 0.52 | −3.37 | −3.60 | −5.92 | −6.50 | −5.90 | −7.50 | 259.99 |
| Flir A500 | 0.07 | −0.27 | −0.26 | −0.08 | −0.36 | −0.11 | −0.32 | 10,079.00 |
| Flir A70 29° | −4.97 | −5.91 | −5.13 | −6.73 | −7.45 | −7.24 | −7.78 | 6950.00 |
| Flir A70 95° | −0.50 | −0.24 | −0.03 | 0.27 | 0.32 | 0.42 | 1.10 | 6950.00 |
| HTI HT-301 | 0.64 | −0.33 | 2.18 | 1.01 | 2.72 | 0.53 | 0.73 | 787.00 |
| Raytek RAYMX2D | 0.57 | 0.53 | 0.65 | 0.72 | 0.65 | 0.61 | 0.69 | 124.41 |
| Parkside PTIA 1 | −0.09 | −0.56 | −2.16 | −1.45 | −1.69 | −2.88 | −5.31 | 20.80 |
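One possible way to use deviation data of this kind is a per-camera linear correction fitted by ordinary least squares. This is offered only as an illustrative assumption, not as the calibration procedure used in the study. The sketch uses the Flir A70 29° row because its deviations are large and fairly systematic; `fit_linear`, `apply_correction`, and `mean_abs_error` are hypothetical helper names.

```python
# Reference points (°C) and Flir A70 29° deviations (°C), copied from the table above.
REFERENCE_POINTS = [27, 30, 33, 36, 39, 42, 45]
A70_29_DEVIATIONS = [-4.97, -5.91, -5.13, -6.73, -7.45, -7.24, -7.78]

# Reconstruct the average readings: measured = reference + deviation.
MEASURED = [r + d for r, d in zip(REFERENCE_POINTS, A70_29_DEVIATIONS)]


def fit_linear(x, y):
    """Ordinary least squares for y ≈ slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def apply_correction(reading, slope, intercept):
    """Map a raw camera reading to an estimated reference temperature."""
    return slope * reading + intercept


def mean_abs_error(estimates, reference):
    """Average magnitude of the error against the reference values (°C)."""
    return sum(abs(e - r) for e, r in zip(estimates, reference)) / len(reference)


if __name__ == "__main__":
    slope, intercept = fit_linear(MEASURED, REFERENCE_POINTS)
    corrected = [apply_correction(m, slope, intercept) for m in MEASURED]
    print(f"slope = {slope:.3f}, intercept = {intercept:.2f} °C")
    print(f"mean |error| before: {mean_abs_error(MEASURED, REFERENCE_POINTS):.2f} °C")
    print(f"mean |error| after:  {mean_abs_error(corrected, REFERENCE_POINTS):.2f} °C")
```

Because the fit is evaluated on the same seven points it was fitted to, the reduced error is optimistic. A correction of this kind would need checking against independent measurements, and it holds only within the 27 °C to 45 °C range covered by the table.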

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Balen, J.; Glavaš, H.; Vdovjak, K.; Jakab, J. Obtaining Infrared Thermal Camera Sensor Calibration Data for Implementation in FireBot Autonomous Fire Protection Robot System. Appl. Sci. 2022, 12, 11657. https://doi.org/10.3390/app122211657