1. Introduction

Among the necessary skills and competencies described by the European Commission in the Recommendation 2006/962/EC on key competences for lifelong learning [1], we can find “Communicating in foreign languages” defined as “the ability to understand, express and interpret concepts, thoughts, feelings, facts, and opinions in both oral and written form […] in an appropriate range of societal and cultural contexts” in a foreign language (p. 14). According to this model and other international reports [2,3], a prerequisite for citizenship is the effective mastering of a second language, with English being the language of choice in most countries [4].

Communication is an essential aspect of our society, and globalization and digitalization make improving communication skills a priority. Within the European Union, an institution that unifies different cultures and communities, developing communicative competencies is important for building connections and promoting equal opportunities in all countries. External evaluations of English proficiency among Europeans systematically show that students from southern European countries perform poorly in English oral skills (listening and speaking), with an obvious imbalance when compared to northern Europe. Therefore, we agree on the need to improve our students’ level of English and to promote their skills in the use of the primary foreign language. This is the main purpose of our innovative proposal, which is based on the use of digital technologies by secondary teachers to assess the oral skills of their students.

As digital technologies evolve, researchers are exploring the potential of digital tools for the improvement of teaching and learning. Digitalization is at the core of all the educational plans for the next few years [5], and assessment is one of the topics to be addressed when we study the educational potential of ICT, as can be seen in the European models DigCompEdu and DigCompOrg [6,7,8], where assessment is one of the areas that takes advantage of the use of digital technologies.

Digital tools can be a key element in the new curricula and in educational practices at all levels and in all areas of knowledge. Many studies [9,10,11,12,13,14,15,16,17,18,19,20,21] show the possibilities and potential of digitalization in the field of foreign language teaching and learning, and very often, these educational practices with digital tools are associated with innovative methods, such as active learning [10,11], collaborative groups [12,13], communication practices [9,15,16], or the CLIL model [17,21]. Thus, ICT tools can also be useful for promoting innovative educational practices in general and, more specifically, experiences based on ICT for the assessment of students.

We also need to consider that assessment is one of the most difficult activities for teachers at all levels, and digital technologies can help in different ways. According to Di Gregorio and Beaton [22], it is relevant to consider the use of these digital tools, taking into account innovative approaches to teaching and new assessment methods. Some researchers [23] explored the application of ICT in universities, with good results for both summative and formative assessment, but they recommended focusing the institutional effort on teachers’ technical skills. Regarding formative assessment, other studies [24,25] explained that it is necessary to improve the use of asynchronous tools and analytical data. For their part, other authors suggested using formative e-assessment to promote motivation and not only learning outcomes [26,27]. Interest in summative assessment has also been shown and, in this sense, other researchers [28,29] reported that students improved when using an app for self-assessment based on a highly interactive and ubiquitous learning model, with learners involved in their own processes and with automatic feedback.

Furthermore, some studies have focused specifically on ICT for the assessment of foreign language competences, which is our main research topic. Some authors [30] used artificial intelligence (an “Intelligent Pedagogical Assistance System”) in a ten-week experiment in which secondary education students self-evaluated their progress. The system was useful for evidencing an improvement in oral skills, as well as a positive change in the students’ self-opinion. Other authors [28] studied the use of ICT tools for assessing foreign languages in both online and hybrid learning environments. In general, we can conclude that ICTs are very relevant tools for English teaching and learning, as [31] evidenced after analysing 75 studies from 2005 to 2020.

Another question we have considered in our project is the importance of collaboration to engage teachers and the professional communities [32,33]. Digital tools can also be useful for facilitating the building of these communities, in order to share resources and to participate in innovative educational experiences; therefore, this is the main pillar of the AROSE project. In our project, we have tried to promote the community among teachers from different secondary education schools in Europe: they have shared digital resources, have designed new digital resources in collaboration, and have exchanged good educational practices.

Our project aims to influence the development of teachers’ evaluation skills, to enhance reflection on practice, and to provide a better understanding of how implementing a competency assessment can increase students’ chances of success. Our ultimate goal is to promote the use of digital technologies for the assessment of students’ oral skills. Thus, we have designed the AROSE platform and the AROSE open educational resources. These tools will be useful for supporting teachers’ work in the classroom, and they will help teachers to assess and improve the oral competences of their students. This evaluative research will show the satisfaction of educational agents with these digital tools and the possibilities of digital technologies for assessment in any learning environment (be it virtual, blended, or face-to-face).

2. Context of the Research: The AROSE Project

AROSE is a project funded by Erasmus+ (2019-1-ES01-KA201-065597) to promote the assessment of English oral skills (speaking and listening) in secondary education students. Participants from four countries are involved (Spain, Portugal, Italy, and Turkey), all of them southern European countries, where the need to reinforce students’ English skills is more pressing. We have technical partners and secondary education schools in every country, and a total of nine organizations have participated. Language Cert participates as an external expert, as its expertise in online English exams has been widely proven.

Specifically, the AROSE project aims to:

Develop a model about the assessment of the oral competences in English as a second language of secondary education students.

Design resources to assess oral English skills.

Look for Open Educational Resources to assess oral English skills.

Design and develop a web tool to carry out the assessment of these skills in secondary education.

Create a toolkit for secondary education teachers to help them use these digital resources and the web tool developed in AROSE.

Design, implement, and share an open online course to learn about the AROSE model and the AROSE tools and resources.

The target group of this project was secondary education English teachers, but indirectly, the participants included secondary school students, stakeholders, educational administration, and families.

One of the first steps carried out in the project was the design of a methodological model, on which the rest of the results would be based. This model was based on the CEFR (Common European Framework of Reference for Languages) and was designed to establish a pedagogical framework to help English teachers evaluate their students. Starting with pedagogy and moving on to technology is one of the keys for instructional design with digital technologies.

Another important achievement of the AROSE project was the design and implementation of an online library of open educational resources (OER). These open resources are accessible to anyone interested in the assessment of the English language and aim to serve as support, especially for teachers and students, in the assessment of the English oral skills of secondary-school students. The AROSE project partners decided to choose the A2 and B2 levels, since these are the levels taught at secondary schools in the different countries, although some resources, such as the assessment rubrics, could be adapted to other levels.

The resources bank is divided into audio files, texts, and activities, and it also includes assessment rubrics, examples, and task generators for the resources bank. This repository includes resources created by the project’s teachers and open resources found on the Internet. The design process was interesting because it started from the work of teachers who know the actual practice of oral skills assessment, and this is one of the most valuable aspects of these materials.

The cornerstone of the AROSE project is the web tool. The platform was developed with free software: it was built in PHP 8, using Laravel 9 as the framework and a MariaDB database. The AROSE web tool is available to everybody at https://arose.programaseducativos.es/ (accessed on 10 November 2022) (see Figure 1). The number of registered users on the platform is increasing, as is the number of resources; currently, there are more than 150 registered teachers. This educational platform was evaluated in a long process, which is described in this article (evaluative research based on the “Design Based Research-DBR” model). The web platform includes the following tools:

A digital repository of public resources to assess English oral skills (mentioned above). At this moment, there are a total of 132 AROSE resources and 32 open educational resources. The tool allows teachers and users to easily upload their own resources.

A tool to register and manage students. Teachers can add their students manually, one by one, or upload a file with a list of students.

A tool to create and manage rubrics for assessment. Some rubrics were created in the project and are available for anyone who wants to use or adapt them. Moreover, the tool includes a simple rubric generator, so that each teacher can create their own rubric. These rubrics can be shared with other users of the platform or kept for private use.

A gradebook to manage the marks. This section of the platform is very useful and interesting because it allows students to be assessed by combining the utilities of the “student” section and the “rubrics” section. This allows teachers to have all the options for assessing their students in the same space.

Communications tools such as a forum and a chat to connect with other secondary school teachers on the platform.
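To make the relationship between the “student”, “rubrics”, and “gradebook” sections more concrete, the following is a minimal sketch in Python of how such a data model could look. It is an illustration only and not the platform’s implementation (which, as stated above, is written in PHP with Laravel); all class names, field names, the example criteria, and the 1 to 4 scoring range are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Criterion:
    """One row of a rubric (hypothetical), scored from 1 to max_score."""
    name: str
    max_score: int = 4


@dataclass
class Rubric:
    title: str
    level: str  # CEFR level, e.g. "A2" or "B2"
    criteria: list[Criterion]
    shared: bool = False  # shared with other users or kept private

    def max_total(self) -> int:
        return sum(c.max_score for c in self.criteria)


@dataclass
class Gradebook:
    """Combines a list of students with a rubric to hold their marks."""
    rubric: Rubric
    marks: dict[str, dict[str, int]] = field(default_factory=dict)

    def assess(self, student: str, scores: dict[str, int]) -> None:
        # Reject scores for unknown criteria or outside the allowed range.
        allowed = {c.name: c.max_score for c in self.rubric.criteria}
        for name, score in scores.items():
            if name not in allowed or not 1 <= score <= allowed[name]:
                raise ValueError(f"invalid score for {name!r}: {score}")
        self.marks[student] = dict(scores)

    def percentage(self, student: str) -> float:
        return 100 * sum(self.marks[student].values()) / self.rubric.max_total()


# Hypothetical rubric and marks, for illustration only.
speaking = Rubric(
    title="Speaking (hypothetical example)",
    level="B2",
    criteria=[Criterion("fluency"), Criterion("pronunciation"), Criterion("interaction")],
)
book = Gradebook(speaking)
book.assess("Student 1", {"fluency": 3, "pronunciation": 4, "interaction": 2})
print(book.percentage("Student 1"))  # 75.0
```

Keeping the rubric separate from the marks mirrors the description above: a rubric can be reused or shared, while each gradebook only stores the scores that a teacher assigns with it.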

In order to help teachers use the project’s resources, a toolkit with all the information and examples was created as a step-by-step guide to using the platform, implementing the methodological model and creating rubrics.

In addition to the toolkit, a face-to-face training course was designed and developed. All training course materials were published on the project website as a digital training course to be made available to anyone who wants to apply and develop this course.

At this point, it is clear that the project aims to influence the development of teachers’ evaluation skills, to enhance reflection on the practice, and to provide a better understanding of the benefits that implementing a competency assessment can have on students’ performance. Our ultimate goal is to include useful evaluation tests of oral skills in the final exams of these secondary education levels. The levels chosen by the consortium were A2 and B2, as they are the most commonly used in secondary education. In the Supplementary Materials cited in this article, readers will find more information about the AROSE project and AROSE platform: https://www.um.es/aroseproject/ and https://arose.programaseducativos.es/ (accessed on 10 November 2022).

Next, in this article, we present the evaluative research to design, produce, and evaluate the AROSE platform, based on a design-based research (DBR) model.

3. Problem Research and Objectives

The project aims to influence the development of teachers’ evaluation skills, to enhance reflection on practice, and to provide a better understanding of how implementing a competency assessment can increase students’ chances of success. The main purpose of the AROSE project was to improve knowledge about the evaluation of oral English skills in secondary education through the creation of an oral-competency assessment model and a digital assessment tool.

The first phase of the project was focused on the creation of the model based on the Common European Framework of Reference for Languages (CEFR). Based on this model, and considering the suggestions collected from secondary education teachers, we designed and evaluated a web assessment tool to improve oral English communication.

Our research problem was focused on the evaluation of this web tool. Thus, our research questions have been: Is this web tool useful for teachers? Could this web tool help them to assess the oral skills of their students? Our research objectives have been:

Objective 1: To know the real needs of teachers of English as a second language, in relation to the identification of methodologies and strategies for the evaluation of oral skills.

Objective 2: To design and develop a digital platform for secondary education teachers to facilitate the oral English skills assessment.

Objective 3: To evaluate the tool quality and teachers’ satisfaction in secondary education (subject: English as a foreign language).

At this point, it is clear (objective 3) that the evaluation has been based on two dimensions: teachers’ satisfaction and quality of the web tool. The quality has been understood as both the technical quality (usability, accessibility, and navigability) and educational quality.

4. Methodology

We have used a mixed method based on an evaluative research design, following a “Design Based Research (DBR)” methodology [34,35]. DBR has its origin in the field of applied sciences, but it has increasing potential in the education field. It is research with implications for educational practice, since it aims to design resources, artefacts, or products that can be used in educational contexts. In fact, its main characteristics are being situated in a real educational context, focusing on the design and testing of a significant intervention, using mixed methods, involving multiple iterations, and relying on collaborative partnership [34,35]. All these characteristics can be found in the AROSE research.

The research process generally has two stages: research to create a new product and its successive improvements, and the provision of knowledge in the form of principles that contribute to new design processes. DBR starts from the understanding of reality to make a design and put it into practice, in order to check its usefulness through iterative cycles.

5. Phases and Participants

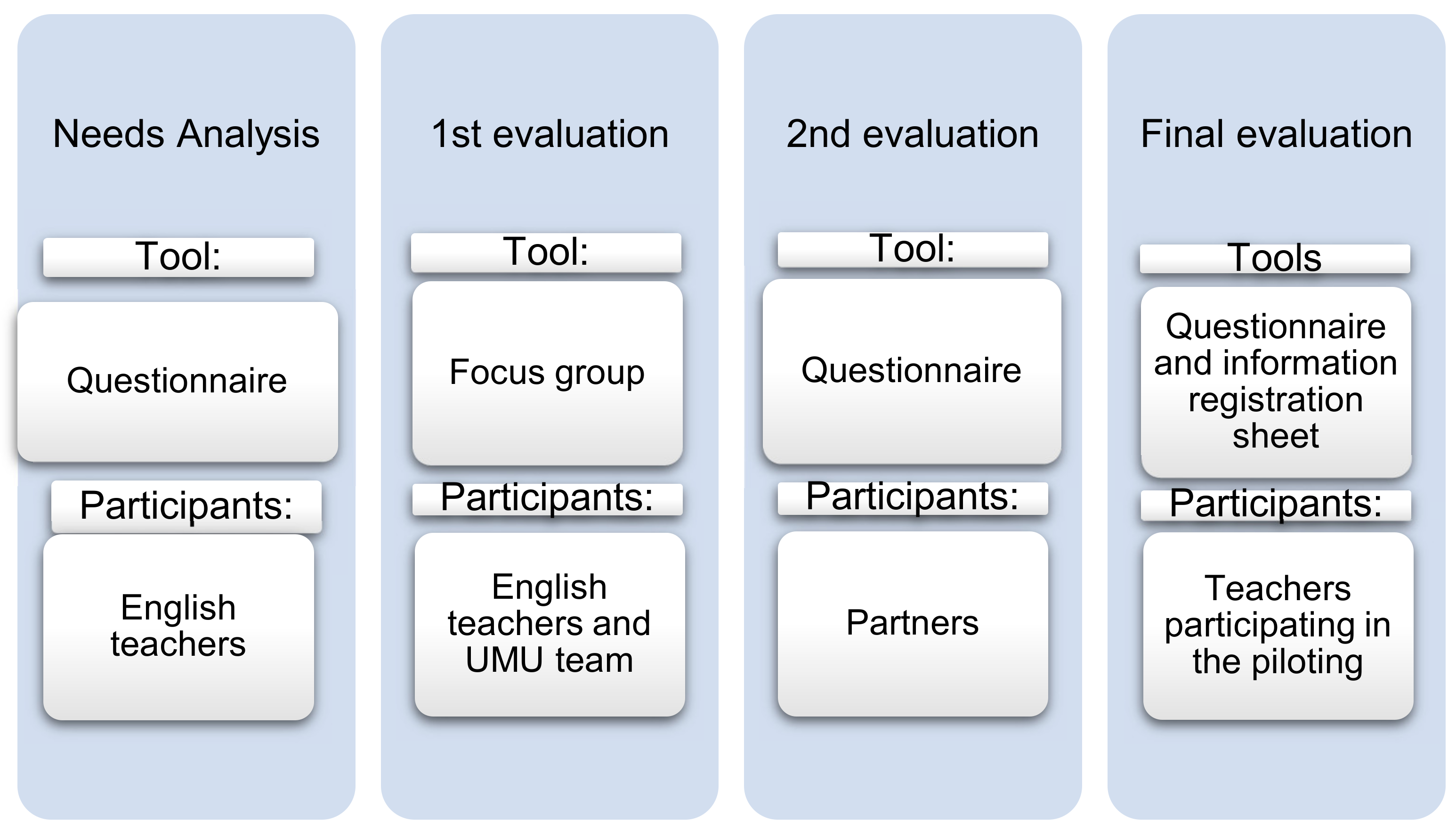

Evaluation implies collecting valid, reliable information, making judgements of merit or value on the basis of previously established criteria, and taking decisions aimed at improvement. Our research began with a needs analysis through a questionnaire for teachers of English as a second language. This needs analysis laid the groundwork for understanding the reality under study and beginning the process of designing and evaluating the platform. The web tool is intended to help teachers assess their students, so the users, and the informants from whom we collected research data, were secondary education teachers of English as a foreign language. Figure 2 shows the work process followed for the design and evaluation of the AROSE web tool.

These are the phases, the participants, and the instruments to collect data in our research process to produce our AROSE web tool:

Needs analysis. This phase aimed at contributing to the elucidation of the real needs of teachers, regarding the available resources related to English language teaching, as well as the identification of the methodologies and strategies for improving oral skills in English as a foreign language for the school environment. It was carried out with a quantitative method, and a total number of 96 teachers participated in the survey. The partners of the AROSE project promoted the application of this questionnaire, sending it by email to the partners.

First phase of evaluation. Two types of focus groups took place. Four of them were carried out in Portugal, Spain, Italy, and Turkey among secondary education teachers of English (at least four for each country). After these focus groups, a focus group was set up at the University of Murcia between the researchers and the computer scientist who was building the tool, in order to work with the data provided by the English teachers and to design the first version of the platform. A set of indicators were established for the focus groups to talk about, and an information recording sheet was established for them to indicate what they would like to change or add in the prototype of the AROSE platform. After the needs analysis and the evaluation of the design, the focus group at the University of Murcia worked with the computer scientist in the creation of the prototype of the platform.

Second phase of evaluation. This evaluation of the prototype took place from October 2021 to January 2022. Since the evaluation was made by experts in educational technology and by secondary education teachers, it included two different user profiles and covered both the theoretical and the practical perspectives. An online questionnaire was created and validated. The validation of the questionnaire was carried out by expert judgement (experts in educational research), using a sheet where experts could rate the clarity and relevance of each item. Additionally, 13 participants evaluated the prototype and filled in the questionnaires. With the analysis of the results, the web tool was improved, and it was ready to be evaluated again in the next phase.

Third phase of evaluation. This evaluation was developed as a pilot study with real students in the classrooms in every country, and it was carried out between April and June 2022. The piloting evaluation aimed to collect feedback from teachers involved in the development of AROSE, once the platform was implemented and ready for use. Sixteen teachers from the participating countries (Spain, Italy, Portugal, and Turkey) carried out a piloting process using AROSE in secondary schools to assess students’ oral skills in English. A total of 4 secondary education centres, 16 teachers, and 256 students took part. Teachers had to complete two instruments: a registration sheet, which was completed on a weekly basis during the piloting process and collected information on their use of the platform, and a final questionnaire, where they evaluated every tool (usability) and gave recommendations for improvement.

6. Research Instruments

We have designed, validated, and applied several instruments to collect data along the research process; the summary is in Table 1. All research instruments included in the project were validated through expert judgement, which was used to assess the adequacy, clarity, and relevance of the items and categories of the questionnaires. Expert judgement consists of asking a number of people for their assessment of an object, instrument, or teaching material [36]. For this procedure, the criteria for selecting the experts must be taken into account. In this case, five experts participated, and the criteria for participation were to have experience in educational research, in the design of digital resources and tools, and in online teaching. The information was obtained by individual aggregation, that is, it was collected from each expert separately through the validation forms designed for this purpose.

As we have explained, all the information collected was oriented to improve the design of our web tool.

Needs analysis: the questionnaire was composed of 20 questions, with open and closed answers and 6 to 13 answer options. The tool was structured in four parts. The first part focused on contextual information, while the second focused on professional development activities. The third part asked teachers about how they assess students’ skills, and the fourth and last part asked about teachers’ assessment needs. The questionnaire ended with an open-ended question about other types of classroom performance that teachers use in the teaching–learning context.

First phase. The focus groups worked on a table to register information consisting of a series of closed items on the platform functionalities they would like to see: technical features, interactive tools, and other functionalities. The importance of each could be rated on a Likert scale from 1 to 4. At the end, an open-ended question was included for them to indicate what they thought.

Second phase. A questionnaire with closed and open questions was designed to allow partners to provide feedback after a period of time testing the platform. The questions were posed in relation to two general blocks: use of the platform and educational issues. These dimensions included Likert scale items ranging from strongly disagree to strongly agree, with five levels for the partners to answer with. Finally, the partners were asked, in an open-ended way, about their opinion and what they would improve about the tool.

Third phase. The last phase refers to the piloting, and two tools were used: a questionnaire for the teachers participating in the piloting (a final questionnaire) and a weekly registration form, in which the process of working with the platform was specified. The aim of the registration form was to find out how they had used the platform. After some background information, they were asked whether they had used the tool individually, in pairs, or in groups, as well as the level (A2 or B2), the type of assessment carried out (initial, process, final), and a series of final questions about whether they had created resources and rubrics or had used the existing ones or other utilities.

The final questionnaire was to be completed at the end of the AROSE training course, after the end of the piloting process. With 57 items, it was organised around the following dimensions: list of utilities, accessibility, usability, navigability, educational quality, and general aspects. These dimensions included items with a Likert-type scale to determine the frequency of use of some of the tools (from never to usually) and statements to indicate their level of agreement or disagreement with the statements. At the end, a series of open-ended questions on the general aspects of the platform and overall satisfaction were included.

7. Results

7.1. Needs Analysis

We used a questionnaire, and 96 teachers participated in the survey: 69.5% female and 28.4% male. Some interesting findings emerged, such as a relevant percentage of respondents answering that they had never participated in a short training event (35.9%); however, 20.7% of the surveyed teachers answered that they had had the opportunity to attend these training modalities and that they had a very positive impact on their activity as teachers. Regarding participation in a collaborative network of teachers as an opportunity for professional development, a relevant percentage (34.8%) did not have this opportunity. However, all the others not only participated, but also considered that it provided them with more value in terms of professional development as teachers. Half of them have never participated in visits and observation experiences in other centres.

In terms of communicative competences and focusing on speaking, teachers considered that one of the aspects that could be improved most was fluency. Regarding listening, comprehension was one of the skills that needed to be improved. When asked about their teaching needs, they indicated that they would need training in a task-based approach to learning English, in order to be able to apply it with their students. They would also like to help them develop different ways for assessment, learn how to use technology, and learn more about digital resource banks.

7.2. First Phase of the Evaluation Process

After the needs analysis questionnaire, the focus groups were developed. The focus groups involved teachers from the four participating countries and the UMU team, who completed a data recording table and submitted the results. The participants (English teachers) were asked what they would like to find in a digital tool for the assessment of oral competences in English. These are the results after the analysis of the information:

(a) Total agreement (it includes the items with the highest score shared by all countries):

Usable, clean, and user friendly: all focus groups.

Repository of digital content with multilevel access (open, group, private access).

Automatic feedback (real-time surveys, assessment tools…).

Self-regulated learning tools.

Resource bank: exam models, rubric models, tips for preparing exams, and correction keys.

Oral comprehension skills: tools to record sound fragments and edit them.

Self-assessment tool with multiple choice format, short answer.

(b) Medium agreement (these items did not receive the highest rating in all countries, but they had been generally positively evaluated):

Design resolution for every mobile device.

General content management system (CMS) features: adding, editing, and deleting resources.

Having a place where you can visually see the evolution of each student.

Collaborative communities with other teachers/students/schools.

Flexibility for teachers to choose different tools or tasks.

Having the possibility for students to choose levels or topics.

Adaptive learning (profile, styles).

Oral expression skill: recording tool for the user.

Social media (sharing contents from platform to others).

(c) Diversity in agreement (these are the least agreed upon items, finding different scores between countries or low scores in general):

Roles and permissions systems for teachers and students.

Group creation and management tool.

Blog integration.

Learning analytics tool.

Having the possibility to share the tasks you received from your students.

Teacher being able to give a code to enroll students to the course.
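As an illustration of how the 1 to 4 ratings from the four focus groups could be sorted into the three groups above, the following Python sketch applies one possible rule. The thresholds, the function name, and the example scores are assumptions made for illustration; the article does not state the exact rule or the individual scores.

```python
MAX_SCORE = 4  # top of the 1-4 scale used in the focus groups


def classify(scores, positive=3):
    """Classify one item from its per-country scores (assumed rule)."""
    values = list(scores.values())
    if all(v == MAX_SCORE for v in values):
        # Highest score shared by all countries.
        return "total agreement"
    if all(v >= positive for v in values):
        # Not the top score everywhere, but positive in every country.
        return "medium agreement"
    # Scores differ between countries or are low in general.
    return "diversity in agreement"


# Made-up scores for illustration only; not the project's data.
example = {
    "item A": {"ES": 4, "PT": 4, "IT": 4, "TR": 4},
    "item B": {"ES": 4, "PT": 3, "IT": 4, "TR": 3},
    "item C": {"ES": 4, "PT": 2, "IT": 3, "TR": 1},
}
for item, scores in example.items():
    print(item, "->", classify(scores))
```

With these made-up scores, item A falls under total agreement, item B under medium agreement, and item C under diversity in agreement.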

The result of this first evaluation phase was the blueprint to produce the first version of the platform (prototype), together with the platform tutorial.

7.3. Second Phase of the Evaluation Process

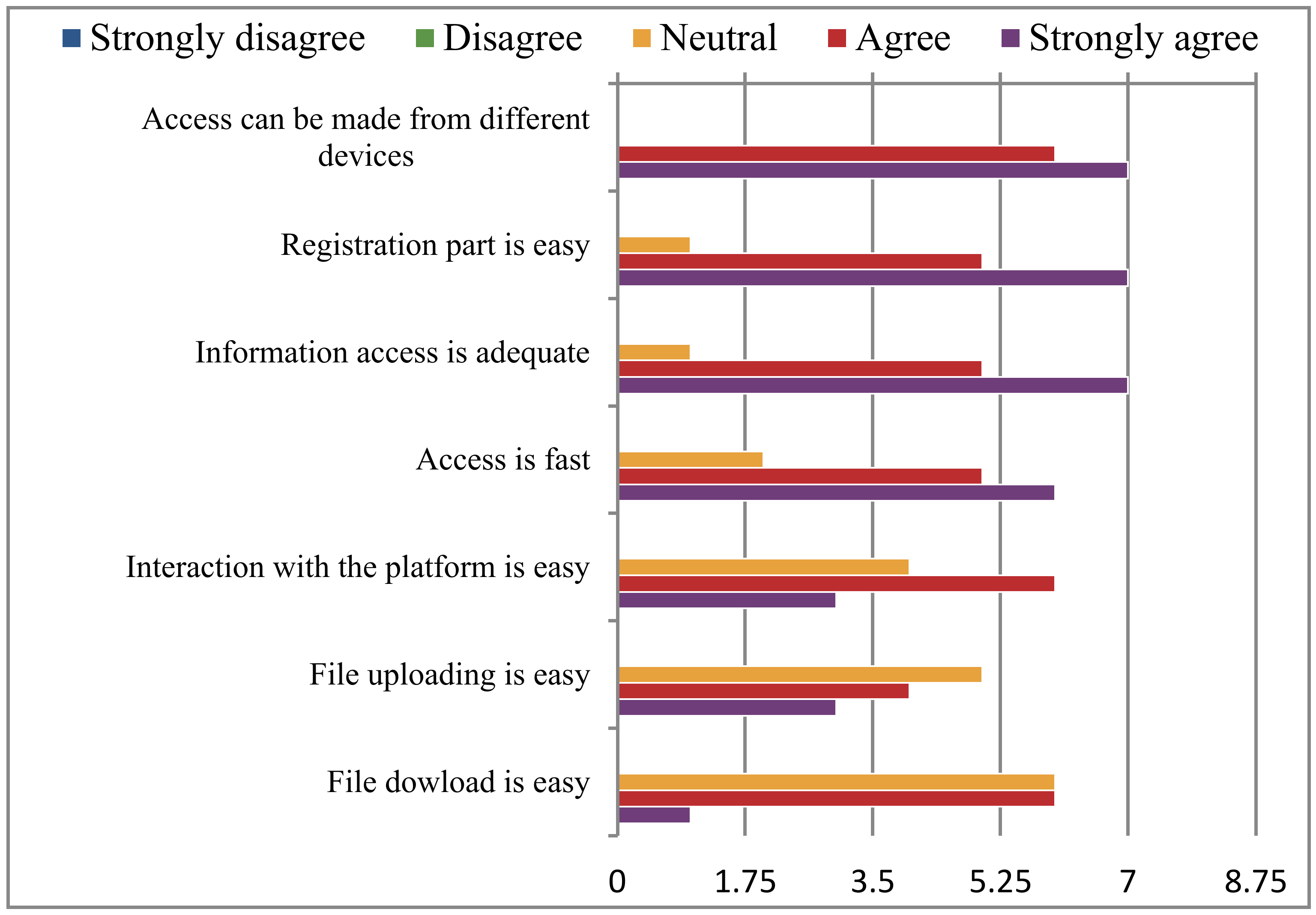

We used a questionnaire; all the participants in the consortium (technical partners and secondary teachers) had to answer it after several weeks of using the beta version of AROSE. There were 13 responses to the questionnaire, 53.8% of which were secondary teachers, and 46.2% were technical partners.

Regarding questions about the use of the platform (see Figure 3), overall, the tool was assessed positively.

In general, aspects related to registration and navigability of the platform were rated favourably. The organisation of the content was also rated positively, but improvements were found in terms of visualisation. Most of the tools were favourably valued, especially the forum and chat, but there was a need to improve the rubrics section. The menu, navigability, and other technical aspects were also appreciated by all partners.

The need to review the use of the videos was also noted, as there seemed to be some minor technical problems. The rest of the items were favourably valued, especially those related to the grade book.

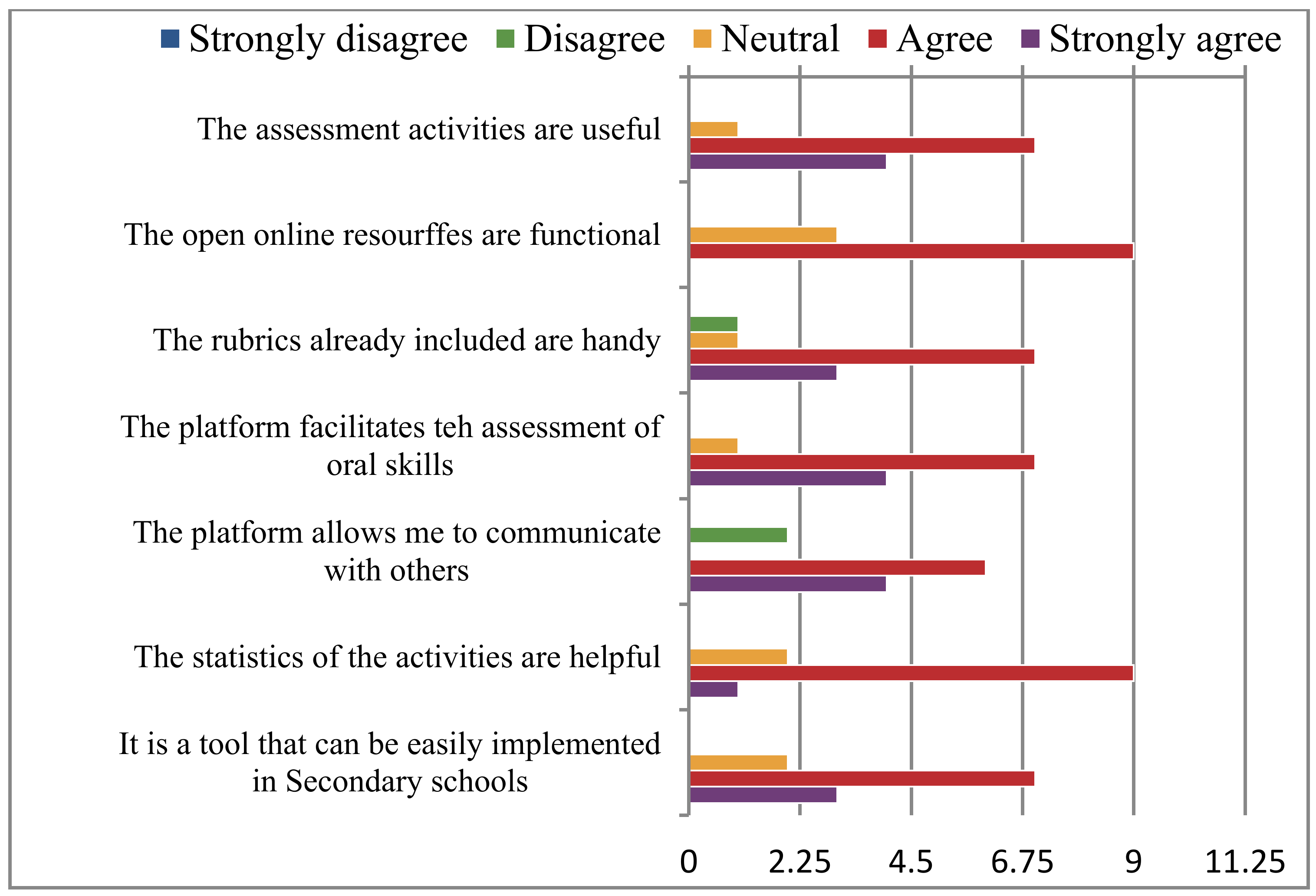

Educational aspects were also evaluated positively in general (see Figure 4), with the use of rubrics and the communicative aspects identified as areas to be improved. In the open questions, partners were asked about their general opinion of the platform. A total of 10 out of 12 responses indicated that the platform was “good” and “useful”. Some recommendations were directed towards improving usability and including more content for evaluation. Two people indicated problems in the “resources” section. In terms of the platform’s strengths, they highlighted that it was focused on evaluation and that it was easy to use. Regarding improvements to make, they gave some recommendations, such as making it possible to upload students’ data from an Excel sheet, improving the uploading of resources, including more communicative tools, and improving the rubrics section.

The opinion about the design of the menu and the screen was that it is user-friendly in general, except for one person, who indicated that he did not like the colours. Two people commented on the option of making it more visual with graphics or images.

Finally, the questionnaire asked whether they wanted to add anything else: one person said that the results of the test, for the students’ access, should be added. The rest did not add anything else and congratulated the designer and the team.

These evaluations were communicated to the computer scientist, in order to make the changes, which were mainly focused on improving the rubric section, based on the results of this evaluation.

The working team of the University of Murcia, which met with the computer scientist to make the first assessment of the tool, carried out a focus group. This first phase was carried out transversally while the platform was being built and took place from February to September 2021.

The result of this first phase was the first version of the platform, together with the platform tutorial. This was sent to the rest of the partners to start the evaluation of the second phase.

7.4. Third Evaluation

The evaluation of the piloting was carried out using two tools; this evaluation process was carried out between April and June 2022. Unlike previous evaluations, the piloting evaluation aimed to collect feedback from the teachers involved in the development of AROSE, once the platform had been implemented and was ready to use. As we said before, teachers’ opinions were analysed by means of a registration form and a final questionnaire. These tools allowed us to collect quantitative and qualitative feedback from teachers about the platform, with the intention of answering specific questions derived from specific experiences.

The final questionnaire shared many questions with the one already applied to the partners in the previous phase. The data extracted from the pilot questionnaire showed the responses of 19 users, with a minimum sample of four teachers from the four participating countries.

The main results of the piloting were:

The level most commonly used (75%) was for a B2 and 50% of the time for the final assessment. Most of the participants uploaded new resources to the platform and created rubrics, in addition to having used the ones available on the platform. The level of satisfaction with the platform was good, although several technical elements for improvement were suggested, mainly regarding the gradebook and adding possibilities to manage students’ data.

Students section: A total of 12 of the 19 participants claimed to have added a student sometimes, followed by 5 participants (26.32% of those surveyed) who claimed to add students often.

Resources: Most participants reported using three to five resources. A majority of the surveyed participants (10 out of 19) answered that they had added a new resource at some time.

Rubrics: Answers varied for this tool. Five respondents said that they had never added a new rubric, while seven (36.84% of the total) said that they added rubrics sometimes. Three respondents said that they rarely added a new rubric, and three others said that they did so often. Most of the participants rated the usefulness of the rubrics with a value equal to or higher than 3, and 47.37% of the participants claimed to have used the AROSE rubrics at some time. However, only one person claimed to use them normally, although five of the participants claimed to use them often.

Gradebook: The largest group of respondents (47.37% of the total) claimed to have set up a gradebook at some point in time.

Chat and forum: Teachers considered these tools useful, but they were the least used in the piloting process.

When asked about the educational possibilities of the platform, teachers evaluated it positively in general, especially highlighting the resources and rubrics.
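As a quick arithmetic check, the percentages quoted in these results can be recomputed from the reported counts over the 19 questionnaire responses. The following is a minimal Python sketch and not part of the AROSE project; the function name `share` and the dictionary labels are hypothetical, and only counts stated explicitly in the text are used.

```python
# Number of responses to the pilot questionnaire, as reported in the text.
TOTAL_RESPONDENTS = 19


def share(count, total=TOTAL_RESPONDENTS):
    """Return `count` as a percentage of `total`, rounded to two decimals."""
    return round(100 * count / total, 2)


# Counts taken from the results above; the labels are illustrative only.
reported_counts = {
    "added students often": 5,
    "added rubrics sometimes": 7,
    "added a new resource at some time": 10,
}

for label, count in reported_counts.items():
    print(f"{label}: {count}/{TOTAL_RESPONDENTS} = {share(count):.2f}%")
```

Values are rounded to two decimals, so they may differ in the last digit from figures that were truncated rather than rounded.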

The answers given, both on the scale at the quantitative level and in the answers provided by the students, were taken into consideration for the improvement of the platform, in order to optimise its applicability as much as possible.

Following these results, suggestions from the partners were collected and passed to the computer scientist for implementation. Specifically, the following changes were requested:

Improve student registration.

Clarify and simplify the use of gradebooks and rubrics.

Improve and update the tutorial.

The following table (Table 2) summarises the research phases and the main results of each.

8. Discussion and Conclusions

The research carried out and presented in this article has shown the importance of assessing oral skills in English and the need for the improvement of teaching competence to do so. These issues are related to the main objectives of digital competence of educators in the international context, as we have explained [2,3,6,7,8].

In relation to the first of the research objectives, it is important to know the real needs of teachers of English as a second language regarding the identification of methodologies and strategies for the evaluation of oral skills. The needs analysis has been key to understanding what teachers need and where to articulate the platform developed, as pointed out by the key authors in DBR [34,35]. We have found that there are some obstacles to the development of oral communication skills; teachers assume that it is necessary to continue to invest in effective teaching–learning strategies that seek to capture the student’s attention, so that the student is at the centre of learning. In this sense, teachers need to recognise the limits and potential of each student, build trusting relationships with students, plan their pedagogical practices according to the profiles and needs of their students, engage their students so that they feel committed to their learning process and encouraged to continue developing, and finally, monitor and evaluate the development of their students, in order to ensure that everyone learns with the best tools at their disposal (in our case, digital technologies), according to the authors who have studied this subject in depth [9,21].

Teachers participating in the needs analysis have stated that they need to learn new forms of evaluation and how to use technology and resource banks for this purpose, one of the main areas in the DigCompEdu European model [6]. This is the second of our objectives and the main purpose of the AROSE web tool. Furthermore, this is one of the most outstanding aspects of DBR, i.e., responding to real needs [35].

Regarding the second research objective, to design and develop a digital platform for secondary school teachers that facilitates the assessment of oral English skills, we have produced the AROSE web tool (https://arose.programaseducativos.es/) (accessed on 10 November 2022). It was designed and developed by a multidisciplinary team, incorporating the main functionalities to meet the needs identified; we have also designed complex evaluative research in three phases, as we have explained. The platform was launched in March 2022, and in October 2022, it had 140 registered users, 326 students incorporated by their secondary school teachers, 132 open resources, and 33 digital rubrics. Moreover, the platform statistics showed positive data: more than 16,000 people have viewed the platform, and there have been 801 downloads of the resources hosted on it. The involvement of the participating teachers, reflected in the number of shared resources and the high number of registered users to date (October 2022), demonstrates the importance of digital tools in the creation of collaborative teaching processes and community building [32,33], as is the case of the AROSE community. Moreover, in line with some authors [22,23], our data have shown the importance of digital technology in the assessment process and the importance of implementing new assessment methods [22], as we have promoted.

As we mentioned in the introduction to this paper, it is necessary to improve oral skills, especially among students from southern countries [37]. In this sense, the AROSE platform can help, on the one hand, as a useful digital resource in which to create resources and rubrics, but also as a tool that has a large number of resources and rubrics already created by other teachers from different countries in the European Union. Specifically, rubrics are considered one of the most important tools for assessing oral competences; the AROSE project has developed some interesting rubrics, and the rubric section allows for the use of this tool in the classrooms. The other important aspect is the resources. Assessment activities already created by other teachers and shared in an open resources bank are one of the products of this project. All of them have been regarded as important in the different phases of the evaluation of the AROSE tool.

In relation to the third objective, i.e., to evaluate the quality of the platform and the satisfaction of secondary teachers, our results were positive. The data have shown that teachers take advantage of the possibilities of digital tools, and the creation of a tool such as AROSE has given them a digital resource to promote the improvement of their assessment skills because, in the same virtual space, they can use resources and share their own. Moreover, they can manage their students and analyse the evaluation results, in line with some authors [24,25], who remarked on this issue as a key innovation element in education.

Overall, the satisfaction with the platform was high, as well as the use that has been made of it. The data presented demonstrate that digital tools are important for teaching and learning English, along the same lines as other previous research, which has shown the same in recent years [31]. For readers interested in developing similar initiatives, we recommend considering the teachers’ needs as a starting point and promoting their involvement during the evaluation process. The community of teachers collaborating with the researchers has probably been the most important factor for success in this type of evaluative research, based on DBR and assessing educational digital tools such as the AROSE web tool. We hope that this research and the digital tools provided will be useful for teachers of English and increase our AROSE community.

Author Contributions

Conceptualization, supervision, and funding acquisition, P.P.-E.; methodology and validation, I.G.-P. and M.d.M.S.-V.; data analysis, I.G.-P. and M.d.M.S.-V.; writing—original draft preparation, I.G.-P., P.P.-E. and M.d.M.S.-V.; writing—review and editing, P.P.-E. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the European Commission. Project Erasmus+ AROSE Nº 33023 (2019-1-ES01-KA201-065597), coordinated by Paz Prendes-Espinosa from the University of Murcia (Spain).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data will be available on the web site of the project (www.um.es/aroseproject) (accessed on 10 November 2022).

Acknowledgments

The authors would like to thank all the project partners for their participation: Regional Ministry of Education of Murcia, IES La Flota, Casa do Professor, Agrupamento de Escolas de Amares, CESIE, IS Duca Abruzzi–Libero Grassi, Adıyaman Milli Egitim Müdürlügü, and Adıyaman Bilim ve Sanat Merkezi. Special thanks to the teachers who have used the AROSE web tool and created the resources that are part of it.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. European Commission. Recommendation of the European Parliament and the Council of 18 December 2006 on Key Competences for Lifelong Learning. 2006. Available online: http://data.europa.eu/eli/reco/2006/962/oj (accessed on 1 October 2022).

2. UNESCO. Global Citizenship Education. In Preparing Learners for the Challenges of the 21st Century; UNESCO: Paris, France, 2014; Available online: https://unesdoc.unesco.org/ark:/48223/pf0000227729 (accessed on 1 October 2022).

3. Burns, T.; Gottschalk, F. (Eds.) Educating 21st Century Children: Emotional Well-Being in the Digital Age, Educational Research and Innovation; OECD Publishing: Paris, France, 2019.

4. EUROSTAT. Foreign Language Learning Statistics. 2021. Available online: https://ec.europa.eu/eurostat/statistics-explained/index.php?title=Foreign_language_learning_statistics (accessed on 10 October 2022).

5. European Commission. Digital Education Action Plan 2021–2027. 2021. Available online: https://education.ec.europa.eu/focus-topics/digital/education-action-plan (accessed on 1 October 2022).

6. Redecker, C. European Framework for the Digital Competence of Educators: DigCompEdu; EUR 28775 EN; Publications Office of the European Union (EU): Luxembourg, 2017.

7. Kampylis, P.; Punie, Y.; Devine, J. Promoting Effective Digital-Age Learning: A European Framework for Digitally-Competent Educational Organisations; Publications Office of the European Union (EU): Luxembourg, 2015.

8. Bacigalupo, M. Competence frameworks as orienteering tools. RiiTE Rev. Interuniv. Investig. Tecnol. Educ. 2022, 12, 20–33.

9. Albero-Posac, S. Using Digital Resources for Content and Language Integrated Learning: A Proposal for the ICT-Enrichment of a Course on Biology and Geology. Res. Educ. Learn. Innov. Arch. 2019, 22, 11–28.

10. Boonbrahm, S.; Kaewrat, C.; Boonbrahm, P. Using Augmented Reality Technology in Assisting English Learning for Primary School Students. In Learning and Collaboration Technologies; Zaphiris, P., Ioannou, A., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2015; Volume 9192.

11. Carrión, E.; Pérez, M.; De Ory, E.G. ICT and gamification experiences with CLIL methodology as innovative resources for the development of competencies in compulsory secondary education. Digit. Educ. Rev. 2021, 39, 238–256.

12. Cinganotto, L.; Cuccurullo, D. Open educational resources, ICT and virtual communities for content and language integrated learning. Teach. Engl. Technol. 2016, 16, 3–11. Available online: https://bit.ly/3TbuRcI (accessed on 10 November 2022).

13. Garcia-Esteban, S.; Villarreal, I.; Bueno-Alastuey, M.C. The effect of telecollaboration in the development of the Learning to Learn competence in CLIL teacher training. Interact. Learn. Environ. 2021, 29, 973–986.

14. Gómez-Parra, M.E.; Huertas-Abril, C. La importancia de la competencia digital para la superación de la brecha lingüística en el siglo XXI: Aproximación, factores y estrategias. EDMETIC Rev. Educ. Y TIC 2019, 8, 88–106. Available online: https://dialnet.unirioja.es/servlet/articulo?codigo=6775508 (accessed on 1 October 2022).

15. Konstantakis, M.; Lykiardopoulou, A.; Lykiardopoulou, E.; Tasiouli, G.; Heliades, G. An Exploratory Study of Mobile-Based Scenarios for Foreign Language Teaching in Early Childhood. Educ. Sci. 2022, 12, 306.

16. Navarro-Pablo, M.; López-Gándara, Y.; García-Jiménez, E. The use of digital resources and materials in and outside the bilingual classroom. Comunicar 2019, 59, 83–93.

17. Schietroma, E. Innovative stem lessons, CLIL and ICT in multicultural classes. J. E-Learn. Knowl. Soc. 2019, 15, 183–193.

18. Sosa-Díaz, M.J.; Sierra-Daza, M.C.; Arriazu-Muñoz, R.; Llamas-Salguero, F.; Durán-Rodríguez, N. “EdTech Integration Framework in Schools”: Systematic Review of the Literature. Front. Educ. 2022, 7, 895042.

19. Taillefer, L.; Munoz-Luna, R. Technology Implementation in Language Teaching (TILT): Analysis of ICTs in Teaching English as a Foreign Language. Int. J. Interdiscip. Educ. Stud. 2013, 7, 97–104.

20. Guerra, M.S.Y.; Ortiz, A.J.M. El uso de las TIC y el enfoque AICLE en la educación superior (Kahoot!, cortometrajes y BookTubes). Pixel-Bit Rev. Medios Y Educ. 2021, 63, 257–292.

21. Abbate, E. Sustainable E-CLIL: Adapting Texts and Designing Suitable Reading Material for CLIL Lessons Using Simple ICT Learning Aids. Innov. Lang. Learn. 2018, 2018. Available online: https://bit.ly/3Q023Cy (accessed on 10 October 2022).

22. Di Gregorio, L.; Beaton, F. Blogs in the modern foreign languages curriculum. A case study on the use of blogging as a pedagogic tool and a mode of assessment for modern foreign languages students. High. Educ. Pedagog. 2019, 4, 406–421.

23. Morze, N.; Makhachashvili, R.; Varchenko-Trotsenko, L.; Hrynevych, L. Digital Formats of Learning Outcomes Assessment in the COVID-19 Paradigm: Survey Study. In Digital Humanities Workshop Digit (DHW 2021); Association for Computing Machinery: New York, NY, USA, 2021; pp. 96–102.

24. Laato, S.; Murtonen, M. Improving Synchrony in Small Group Asynchronous Online Discussions. Adv. Intell. Syst. Comput. 2020, 1161, 215–224.

25. Prendes-Espinosa, P.; García-Tudela, P.A.; Gutiérrez-Porlán, I. Formative E-Assessment: A Qualitative Study Based on Master’s Degrees. Int. Educ. Stud. 2022, 15, 1–13.

26. McCallum, S.; Milner, M.M. The effectiveness of formative assessment: Student views and staff reflections. Assess. Eval. High. Educ. 2020, 46, 1–16.

27. Petrova, V.V.; Kreer, M.Y. Using ICTs for Teaching General English and Professional English to Students of Technical Majors. In Integration of Engineering Education and the Humanities: Global Intercultural Perspectives; Anikina, Z., Ed.; IEEHGIP. Lecture Notes in Networks and Systems; Springer: Cham, Switzerland, 2022; Volume 499.

28. Makhachashvili, R.; Semenist, I. Digital Competencies and Soft Skills for Final Qualification Assessment: Case Study of Students of Foreign Languages Programs in India. In Proceedings of the 7th International Conference on Frontiers of Educational Technologies, Bangkok, Thailand, 4–7 June 2021; pp. 21–30.

29. Palomo-Duarte, M.; Berns, A.; Cejas, A.; Dodero, J.M.; Caballero, J.A.; Ruiz-Rube, I. Assessing Foreign Language Learning Through Mobile Game-Based Learning Environments. Int. J. Hum. Cap. Inf. Technol. Prof. (IJHCITP) 2016, 7, 53–67.

30. Gràcia, M.; Alvarado, J.M.; Nieva, S. Autoevaluación y Toma de Decisiones para Mejorar la Competencia Oral en Educación Secundaria. RIDEP 2022, 62, 83–99.

31. Sánchez, A.A.; Franco, D.M.A.; Cepeda, V.L.R. Enfoques en el currículo, la formación docente y metodología en la enseñanza y aprendizaje del inglés: Una revisión de la bibliografía y análisis de resultados. Rev. Educ. 2022, 46, 538–553.

32. Cai, Y.; Wang, L.; Bi, Y.; Tang, R. How Can the Professional Community Influence Teachers’ Work Engagement? The Mediating Role of Teacher Self-Efficacy. Sustainability 2022, 14, 10029.

33. Espinosa, M.P.P.; Fernández, I.M.S. Edutec en la red comunidades virtuales para la colaboración de profesionales. Edutec. Rev. Electrónica Tecnol. Educ. 2018, 25, a092.

34. Anderson, T.; Shattuck, J. Design-Based Research: A Decade of Progress in Education Research? Educ. Res. 2012, 41, 16–25. Available online: http://edr.sagepub.com/content/41/1/7.full.pdf+html (accessed on 10 November 2022).

35. Crosetti, B.D.B.; Ibáñez, J.M.S. La Investigación Basada en Diseño en Tecnología Educativa. RiiTE Rev. Interuniv. Investig. Tecnol. Educ. 2016, 44–59.

36. Cabero, J.; Llorente, M.C. La aplicación del juicio de experto como técnica de evaluación de las Tecnologías de la Información y la Comunicación. Rev. Tecnol. Inf. Y Comun. Educ. 2013, 7, 11–24. Available online: http://servicio.bc.uc.edu.ve/educacion/eduweb/v7n2/art01.pdf (accessed on 1 October 2022).

37. EF EPI (EF English Proficiency Index). A Ranking of 100 Countries and Regions by English Skills. 2020. Available online: https://www.ef.com/assetscdn/WIBIwq6RdJvcD9bc8RMd/legacy/__/~/media/centralefcom/epi/downloads/full-reports/v10/ef-epi-2020-english.pdf (accessed on 1 October 2022).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).