Object Detection for Industrial Applications: Training Strategies for AI-Based Depalletizer

Abstract

1. Introduction

2. Materials and Methods

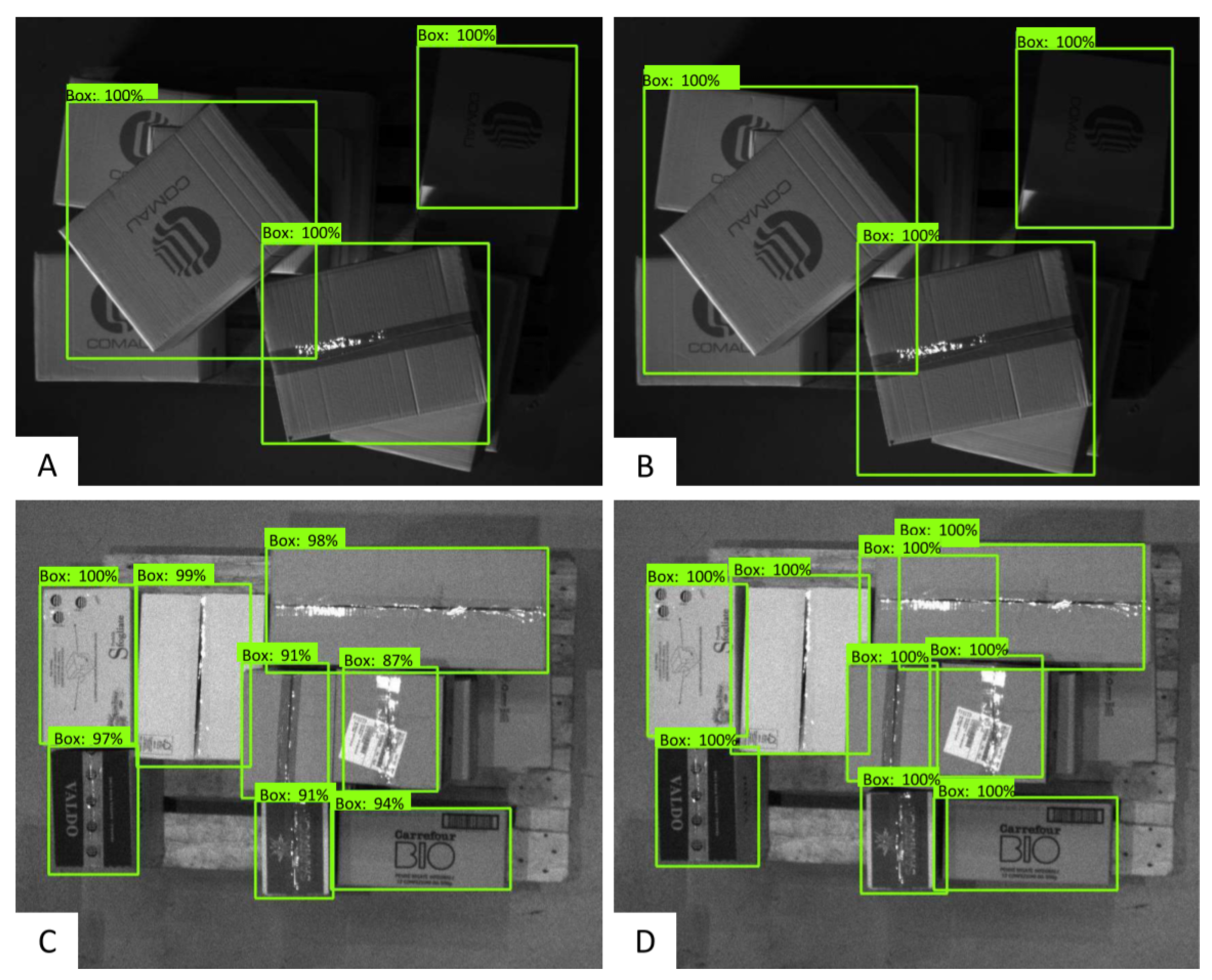

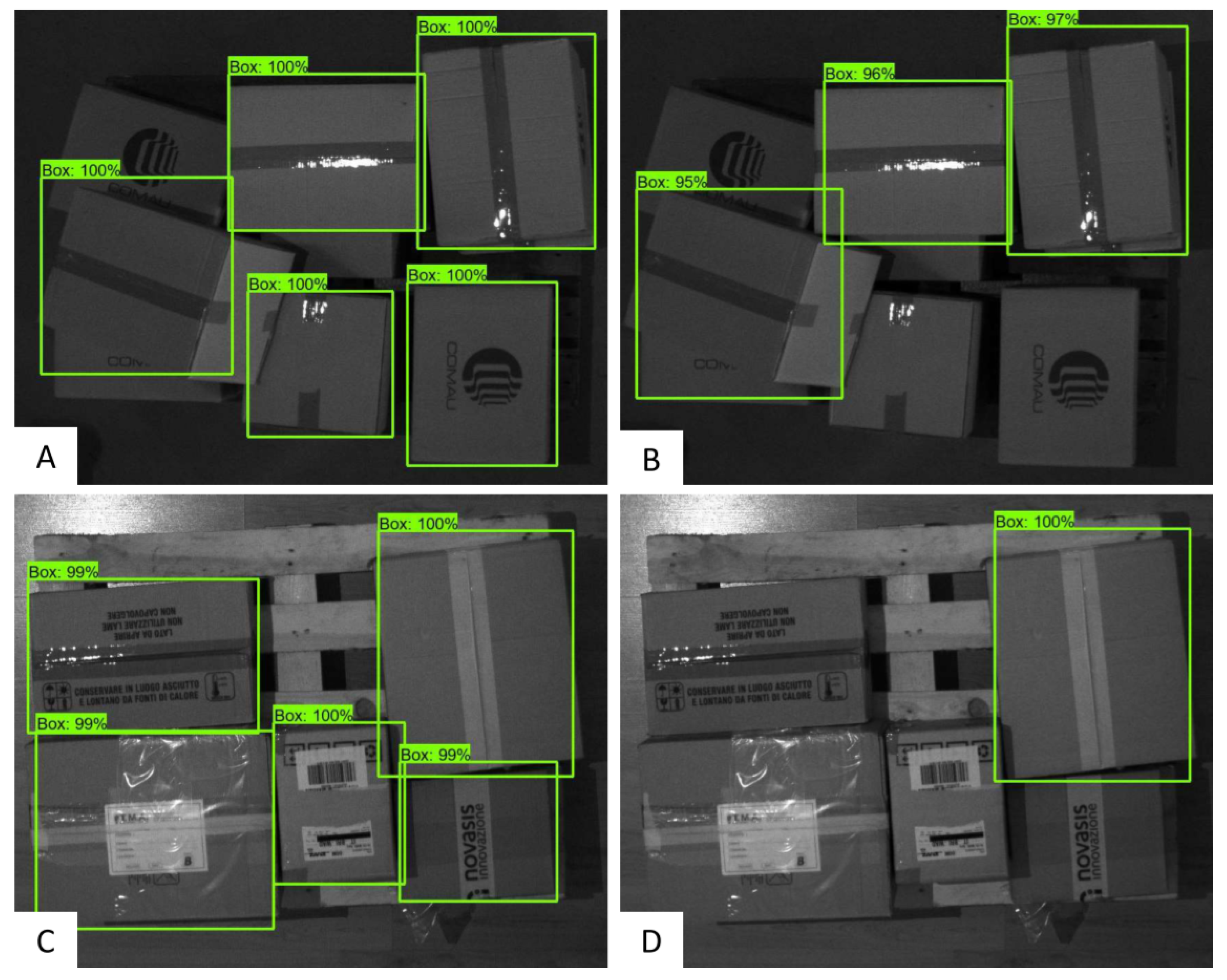

2.1. Datasets

1. Dataset 1 (Figure 2A): 1160 labeled images with 3712 paper boxes. It contains only Comau® boxes of several shapes and poses; all the boxes are white and bear the same logo.

2. Dataset 2 (Figure 2B): 1907 labeled images with 11,566 paper boxes. It contains boxes with several prints, logos, shapes, and poses; the boxes in this dataset differ from one another.

3. Dataset 3 (Figure 2C): 889 labeled images with 7575 paper boxes. It contains white boxes without prints or logos, in two possible shapes, placed so that their most-extended faces are parallel to the floor.
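As a quick check on how densely each dataset is populated, the image and box counts reported above can be turned into an average number of boxes per image. The sketch below is only illustrative arithmetic on those counts; the dictionary layout and the function name `boxes_per_image` are assumptions made for this example.

```python
# Image and box counts as reported in the dataset descriptions.
DATASETS = {
    "Dataset 1": {"images": 1160, "boxes": 3712},
    "Dataset 2": {"images": 1907, "boxes": 11566},
    "Dataset 3": {"images": 889, "boxes": 7575},
}


def boxes_per_image(name: str) -> float:
    """Average number of labeled boxes per image for the given dataset."""
    counts = DATASETS[name]
    return counts["boxes"] / counts["images"]


if __name__ == "__main__":
    for name in DATASETS:
        print(f"{name}: {boxes_per_image(name):.2f} boxes per image")
```

On these counts, Dataset 1 averages about 3.2 boxes per image, Dataset 2 about 6.1, and Dataset 3 about 8.5.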

The images were labeled according to the following rules:

1. The label should adhere as tightly as possible to the box, and the box must be inscribed within the label.

2. If a box is occluded by another box so that less than approximately 90% of it is visible, do not label it.

3. Do not label boxes with fewer than three visible corners.

4. If a box shows more than one face, label the most-extended face.

5. The sides of the RoI of each box must be parallel to the sides of the image.
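The labeling rules can be read as a small decision procedure. The following is a minimal sketch, assuming that an annotator (or an upstream tool) already provides the visible fraction of a box, the number of visible corners, and the image coordinates of the corners of its most-extended face. The names `should_label` and `tight_axis_aligned_roi` are hypothetical and are not taken from the original work, where labeling was performed manually.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in image pixels

MIN_VISIBLE_FRACTION = 0.90  # rule 2: approximate threshold stated in the rules
MIN_VISIBLE_CORNERS = 3      # rule 3


def should_label(visible_fraction: float, visible_corners: int) -> bool:
    """Rules 2 and 3: skip boxes that are too occluded or show too few corners."""
    return (
        visible_fraction >= MIN_VISIBLE_FRACTION
        and visible_corners >= MIN_VISIBLE_CORNERS
    )


def tight_axis_aligned_roi(face_corners: List[Point]) -> Tuple[float, float, float, float]:
    """Rules 1 and 5: smallest rectangle with sides parallel to the image sides
    that still contains every corner of the labeled face (rule 4 is assumed to
    have been applied by the caller when choosing the face)."""
    xs = [x for x, _ in face_corners]
    ys = [y for _, y in face_corners]
    return (min(xs), min(ys), max(xs), max(ys))
```

For example, a face with corners `[(10, 20), (110, 30), (100, 90), (5, 80)]` yields the RoI `(5, 20, 110, 90)`.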

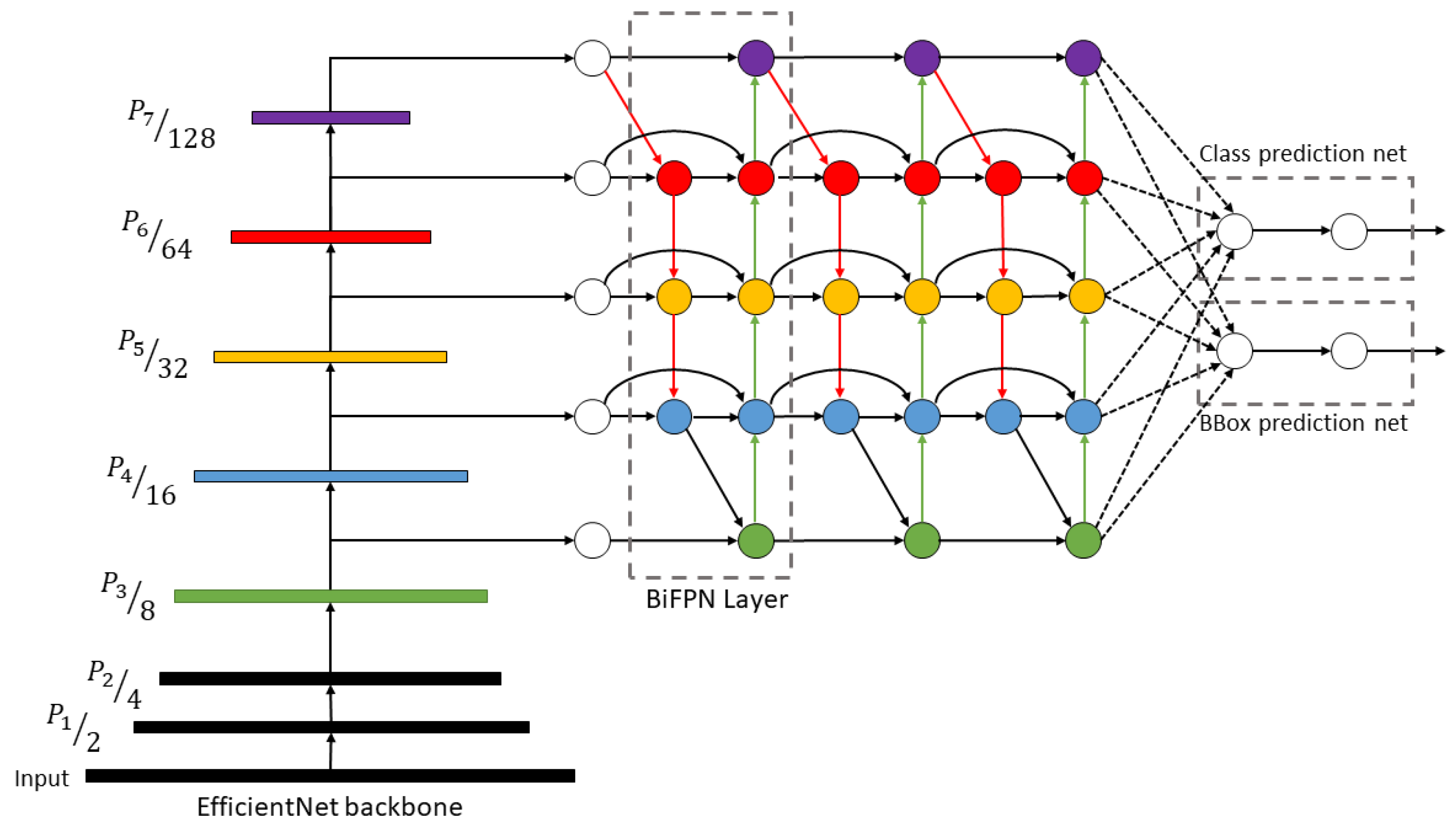

2.2. The Employed Object Detection Model as Part of the Depalletization System

2.2.1. The EfficientDet-D0 Architecture

2.2.2. Training the CNN Model

1. Classification: Initialize the weights of the classification backbone (in our case, the EfficientNet backbone) with those of the model pretrained on the COCO dataset, while the BiFPN layers and the class/box prediction layers are randomly initialized.

2. Detection: Initialize the weights of the classification backbone and of the BiFPN with those of the model pretrained on the COCO dataset. The class/box prediction layers are randomly initialized.

3. Full: Initialize the entire detection model, including the EfficientNet backbone, the BiFPN, and the class/box prediction layers, with the weights of the model pretrained on the COCO dataset.
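The three modalities differ only in which parts of the detector start from pretrained weights. The sketch below summarizes this as a lookup table. The component names (`backbone`, `bifpn`, `prediction_heads`) and the function `initialization_plan` are labels chosen for this example, not identifiers from any training framework.

```python
# Components of the detector, in the order used in the text.
COMPONENTS = ("backbone", "bifpn", "prediction_heads")

# Which components are restored from the pretrained checkpoint per modality.
PRETRAINED_COMPONENTS = {
    "classification": {"backbone"},
    "detection": {"backbone", "bifpn"},
    "full": {"backbone", "bifpn", "prediction_heads"},
}


def initialization_plan(modality: str) -> dict:
    """Map each component to 'pretrained' or 'random' for the given modality."""
    if modality not in PRETRAINED_COMPONENTS:
        raise ValueError(f"unknown modality: {modality!r}")
    restored = PRETRAINED_COMPONENTS[modality]
    return {c: ("pretrained" if c in restored else "random") for c in COMPONENTS}


if __name__ == "__main__":
    for modality in PRETRAINED_COMPONENTS:
        print(modality, initialization_plan(modality))
```

Moving from classification to full, each modality restores a superset of the components restored by the previous one.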

2.3. Data Augmentation

- (a) Random brightness and contrast, applied with a probability of 0.5;

- (b) Horizontal and vertical flip, applied with a probability of 0.5.
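A minimal, dependency-free sketch of these two augmentations is given below. Several details are assumptions made only for illustration: the image is a grayscale list of rows, boxes are `(x_min, y_min, x_max, y_max)` in pixels, the brightness/contrast ranges are arbitrary, and the horizontal and vertical flips are drawn independently, each with probability 0.5. The key point is that the bounding boxes must be transformed together with the image.

```python
import random
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels
Image = List[List[float]]                # grayscale rows, values in [0, 255]


def adjust_brightness_contrast(image: Image, alpha: float, beta: float) -> Image:
    """Scale contrast by alpha, shift brightness by beta, clip to [0, 255]."""
    return [[min(255.0, max(0.0, alpha * p + beta)) for p in row] for row in image]


def hflip(image: Image, boxes: List[Box]) -> Tuple[Image, List[Box]]:
    """Mirror the image left-right and remap the box x-coordinates."""
    width = len(image[0])
    flipped = [row[::-1] for row in image]
    new_boxes = [(width - x2, y1, width - x1, y2) for x1, y1, x2, y2 in boxes]
    return flipped, new_boxes


def vflip(image: Image, boxes: List[Box]) -> Tuple[Image, List[Box]]:
    """Mirror the image top-bottom and remap the box y-coordinates."""
    height = len(image)
    flipped = image[::-1]
    new_boxes = [(x1, height - y2, x2, height - y1) for x1, y1, x2, y2 in boxes]
    return flipped, new_boxes


def augment(image: Image, boxes: List[Box], rng: random.Random, p: float = 0.5):
    """Apply each augmentation independently with probability p."""
    if rng.random() < p:
        alpha = rng.uniform(0.8, 1.2)    # assumed contrast range
        beta = rng.uniform(-20.0, 20.0)  # assumed brightness range
        image = adjust_brightness_contrast(image, alpha, beta)
    if rng.random() < p:
        image, boxes = hflip(image, boxes)
    if rng.random() < p:
        image, boxes = vflip(image, boxes)
    return image, boxes
```

Passing a seeded `random.Random` instance keeps the generated copies reproducible across runs.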

2.4. Experimental Design

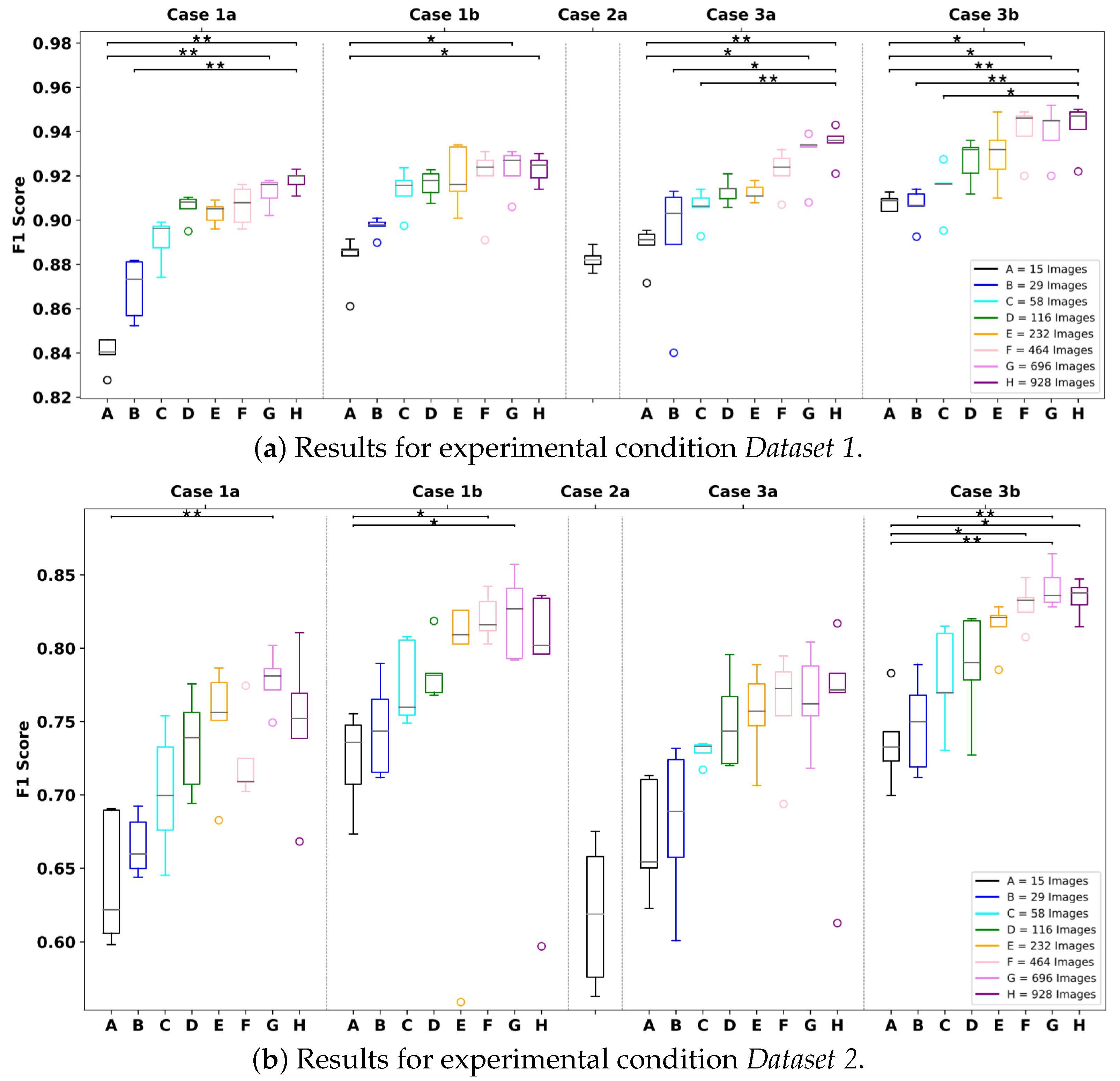

2.4.1. Proposed Approaches

1. Considering the model already pretrained on the COCO dataset, using the detection modality (see Section 2.2.2) and updating the whole detection model:

   - [Case 1a]: fine-tune the model with images of the new boxes;

   - [Case 1b]: fine-tune the model with images of the new boxes, using the above-mentioned data augmentation strategy;

2. [Case 2a]: considering the model already pretrained on the datasets of images containing different boxes, with the detection modality and no frozen layers (see Section 2.2.2), test the model on the new images without any further fine-tuning or training;

3. Considering the model already pretrained on the datasets of images containing different boxes, using the full modality and keeping the feature extractor frozen (see Section 2.2.2):

   - [Case 3a]: fine-tune the model with images of the new boxes;

   - [Case 3b]: fine-tune the model with images of the new boxes and synthetic copies generated with data augmentation.
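The five cases can be summarized as a small configuration table, which makes the differences between them easier to compare. The sketch below is one possible encoding. The field names and the `Case` dataclass are assumptions introduced for this example, and for Case 2a the `modality` field describes how the model was pretrained on the other box datasets, since no fine-tuning takes place.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    pretraining: str                # data the starting model was pretrained on
    modality: str                   # initialization modality (Section 2.2.2)
    frozen_feature_extractor: bool  # True if the feature extractor is not updated
    fine_tune_on_new_boxes: bool    # False means the model is tested as-is
    augmentation: bool              # True if augmented copies are used


CASES = {
    "1a": Case("COCO", "detection", False, True, False),
    "1b": Case("COCO", "detection", False, True, True),
    "2a": Case("other box datasets", "detection", False, False, False),
    "3a": Case("other box datasets", "full", True, True, False),
    "3b": Case("other box datasets", "full", True, True, True),
}

if __name__ == "__main__":
    for name, case in CASES.items():
        print(name, case)
```

Read this way, Cases 1a/1b and 3a/3b each form a pair that differs only in the use of data augmentation, while Case 2a is the only one that involves no fine-tuning on the new boxes.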

2.4.2. Experiment Conditions

- [Dataset 1]: Dataset 1 (see the Datasets subsection) was considered as the new dataset (the test set included only images of Dataset 1), whereas Dataset 2 and Dataset 3 were used to pretrain the model when evaluating Case 3;

- [Dataset 2]: Dataset 2 (see the Datasets subsection) was considered as the new dataset (the test set included only images of Dataset 2), whereas Dataset 1 and Dataset 3 were used to pretrain the model when evaluating Case 3.

- In both experimental conditions, Case 3 must include training on Dataset 3, since it contains images of neutral white paper boxes without any logo or texture;

- One experimental condition must focus on the detection of boxes with a simple texture; this is the [Dataset 1] condition, whose target boxes are white and bear the same logo;

- The other experimental condition must focus on boxes with more complex textures and high variability among the boxes in terms of texture and shape; this is the [Dataset 2] condition, whose target boxes have different kinds of prints, logos, and shapes.
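The dataset roles in the two experimental conditions follow a simple rule: the new dataset is held out as the target, and the remaining two datasets are used for pretraining in Case 3. A minimal sketch of that rule is shown below; the function name `case3_pretraining_sets` is hypothetical.

```python
ALL_DATASETS = ("Dataset 1", "Dataset 2", "Dataset 3")
NEW_DATASET_CHOICES = ("Dataset 1", "Dataset 2")  # the two experimental conditions


def case3_pretraining_sets(new_dataset: str) -> tuple:
    """Datasets used to pretrain the model in Case 3 for a given condition."""
    if new_dataset not in NEW_DATASET_CHOICES:
        raise ValueError(f"not an experimental condition: {new_dataset!r}")
    return tuple(d for d in ALL_DATASETS if d != new_dataset)


if __name__ == "__main__":
    for condition in NEW_DATASET_CHOICES:
        print(condition, "->", case3_pretraining_sets(condition))
```

In both conditions the result contains Dataset 3, which matches the requirement that Case 3 always includes the neutral white boxes.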

2.5. Cross-Validation

2.6. Performance Metric

2.7. Comparisons and Statistics

3. Results and Discussion

3.1. Comparison I Results

3.2. Comparison II Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Training Set Size | Dataset 1 | Dataset 2 | ||

|---|---|---|---|---|

| Pairwise | Pairwise | |||

| 15 | 1a–3b | 0.0002 | 1b–2a | 0.0137 |

| 2a–3b | 0.0228 | |||

| 29 | 1a–3b | 0.0069 | 1b–2a | 0.0198 |

| 2a–3b | 0.0127 | |||

| 58 | 1b–2a | 0.0148 | 1b–2a | 0.0050 |

| 2a–3b | 0.0109 | 2a–3b | 0.0031 | |

| 116 | 1a–3b | 0.0274 | 2a–3b | 0.0036 |

| 2a–3b | 0.0016 | 1b–2a | 0.0080 | |

| 232 | 1a–3b | 0.0261 | 2a–3b | 0.0036 |

| 2a–3b | 0.0016 | |||

| 464 | 2a–3b | 0.0006 | 1b–2a | 0.0036 |

| 2a–3b | 0.0008 | |||

| 696 | 1a–3b | 0.0271 | 1b–2a | 0.0080 |

| 2a–3b | 0.0008 | 2a–3b | 0.0006 | |

| 2a–3a | 0.0197 | |||

| 928 | 2a–3a | 0.0109 | 2a–3b | 0.0016 |

| 2a–3b | 0.0009 | |||

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Buongiorno, D.; Caramia, D.; Di Ruscio, L.; Longo, N.; Panicucci, S.; Di Stefano, G.; Bevilacqua, V.; Brunetti, A. Object Detection for Industrial Applications: Training Strategies for AI-Based Depalletizer. Appl. Sci. 2022, 12, 11581. https://doi.org/10.3390/app122211581
