1. Introduction

The rapid development of advanced technology in recent years is inseparable from power electronics, in which commutation technology enables the efficient conversion of electrical energy. The insulated gate bipolar transistor (IGBT) is the core component responsible for energy conversion and transmission control in a wide range of power electronic equipment [1,2,3,4]; it is also the most failure-prone component in converters [5,6]. With the rise of new energy industries, the efficiency and lifetime of IGBTs have become key to the global green, energy-saving economy [7]. In operation, IGBT modules are subjected to repeated high- and low-temperature swings and thermal cycling over long periods, so excellent thermal reliability is imperative. However, the reliability of IGBT modules in high-power applications that demand high reliability has been found to be unsatisfactory [8,9], and defects at the solder layer interfaces not only impair heat transfer through the module but can also cause the entire module to fail. In minor cases such a failure disables the electronic or electrical equipment; in severe cases it causes unexpected downtime of the whole system and serious economic losses [10,11,12,13]. Therefore, detecting defects in the IGBT solder layer is of great importance for ensuring the reliability and stability of IGBT modules.

Traditional methods for defect detection in IGBT modules include destructive testing, in which sampled modules are examined under a microscope, and nondestructive testing. Nondestructive methods include ultrasonic inspection [14] and X-ray inspection using digital radiography (DR) and computed tomography (CT). However, these traditional methods rely on manual inspection, whose high cost and low efficiency make large-scale quality testing difficult. Because deep learning-based defect detection operates on images, X-ray imaging is a natural basis for applying it.

Object detection algorithms are divided into three categories: one-stage algorithms, two-stage algorithms, and anchor-free algorithms. Two-stage algorithms, such as Faster R-CNN [15], Mask R-CNN [16], and Cascade R-CNN [17], generate candidate regions in advance and achieve high precision at low speed. One-stage algorithms, such as the YOLO series [18,19], SSD [20], and RetinaNet [21], predict the object category and regress its position simultaneously; their high speed and practicality meet the requirements of real-time industrial quality inspection. Anchor-free algorithms, such as CenterNet [22] and FCOS [23], avoid hand-designed anchor boxes.

In recent years, rapid advances in hardware have driven fast growth in computing power, laying the foundation for long-term development in many fields. Menelaos et al. [24] implemented an unsupervised image classification task using a time-multiplexed spiking convolutional neural network based on VCSELs; Kim et al. [25] proposed Al2O3/TiOx-based resistive random access memory (RRAM) in response to the lack of consideration, in previous studies, of errors occurring in the program during transfer; Indranil et al. [26] proposed a technology-aware training algorithm to address the problem that various non-idealities in crossbar implementations of synaptic arrays can degrade the performance of neural networks. These hardware developments make it feasible to apply deep learning to industrial defect detection.

With the rapid growth of computing power, deep learning has been applied to defect detection for printed circuit boards, steel, capsules, batteries, textiles, fruits and vegetables, and smart cars. For example, Xu et al. [27] proposed a joint training strategy for Faster R-CNN and Mask R-CNN to achieve intelligent pavement crack detection. Compared with YOLOv3, the strategy improves performance but degrades the bounding box detection of Mask R-CNN. Guo et al. [28] improved detection accuracy by first preprocessing tile images with uneven illumination, complex surface texture, and low contrast using contrast-limited adaptive histogram equalization, and then using Mask R-CNN for defect detection. Guo et al. [29] added a guided anchoring algorithm to Faster R-CNN to generate anchors, achieving intelligent detection of surface defects of three parts and substantially improving the detection accuracy of the model.

Compared with other algorithms, the YOLO series has notable advantages. Yao et al. [30] proposed a YOLOv5-based model for detecting surface defects in kiwifruit, providing an efficient and intelligent means of post-production quality inspection in agriculture. Wang et al. [31] proposed a YOLOv5-based algorithm for unmanned inspection of tile surface defects on production lines, where manual inspection has so far been unavoidable. Their results show that the accuracy of the YOLOv5 model is higher than that of Faster R-CNN, SSD, and YOLOv4. Li et al. [32] improved the accuracy and speed of YOLOv3 for PCB defect detection by re-clustering the anchors with a DBSCAN + K-means algorithm under the Avg IoU criterion, adding residual units, and introducing the SE attention mechanism to strengthen the model's feature extraction capability. These studies support the feasibility of choosing YOLOv5 for defect detection in IGBT solder layers.

Research on the use of deep learning to detect bubble defects in the solder layer of IGBT modules is lacking, so this paper collects and labels a dataset of IGBT solder layer bubble defects. Two-stage algorithms have a more complex detection process and network structure, which slows detection and cannot meet the real-time requirements of industrial quality inspection, while anchor-free algorithms yield prediction results with low confidence. The regression-based one-stage algorithm is faster and more practical, and it meets the requirements of real-time unmanned industrial quality control. This paper therefore presents an improved YOLOv5-based defect detection model for IGBT solder layers. The main contributions of this research are as follows:

A tiny-bubble detection layer that fuses deep and shallow feature information is added to improve the model's ability to detect small bubbles.

The anchor box parameters are optimized to speed up model convergence.

The bounding box loss function is changed to the EIoU loss function to address the sample imbalance of the dataset.

The Swin Transformer structure is combined with the convolution module to form a new feature extraction module, which is introduced into the backbone to improve detection accuracy.

In the remainder of this paper, Section 2 introduces the YOLOv5 base model. We explain our proposed methodology in Section 3, while experimental details and results are discussed in Section 4. Finally, a brief conclusion is given in Section 5.

3. Methodology

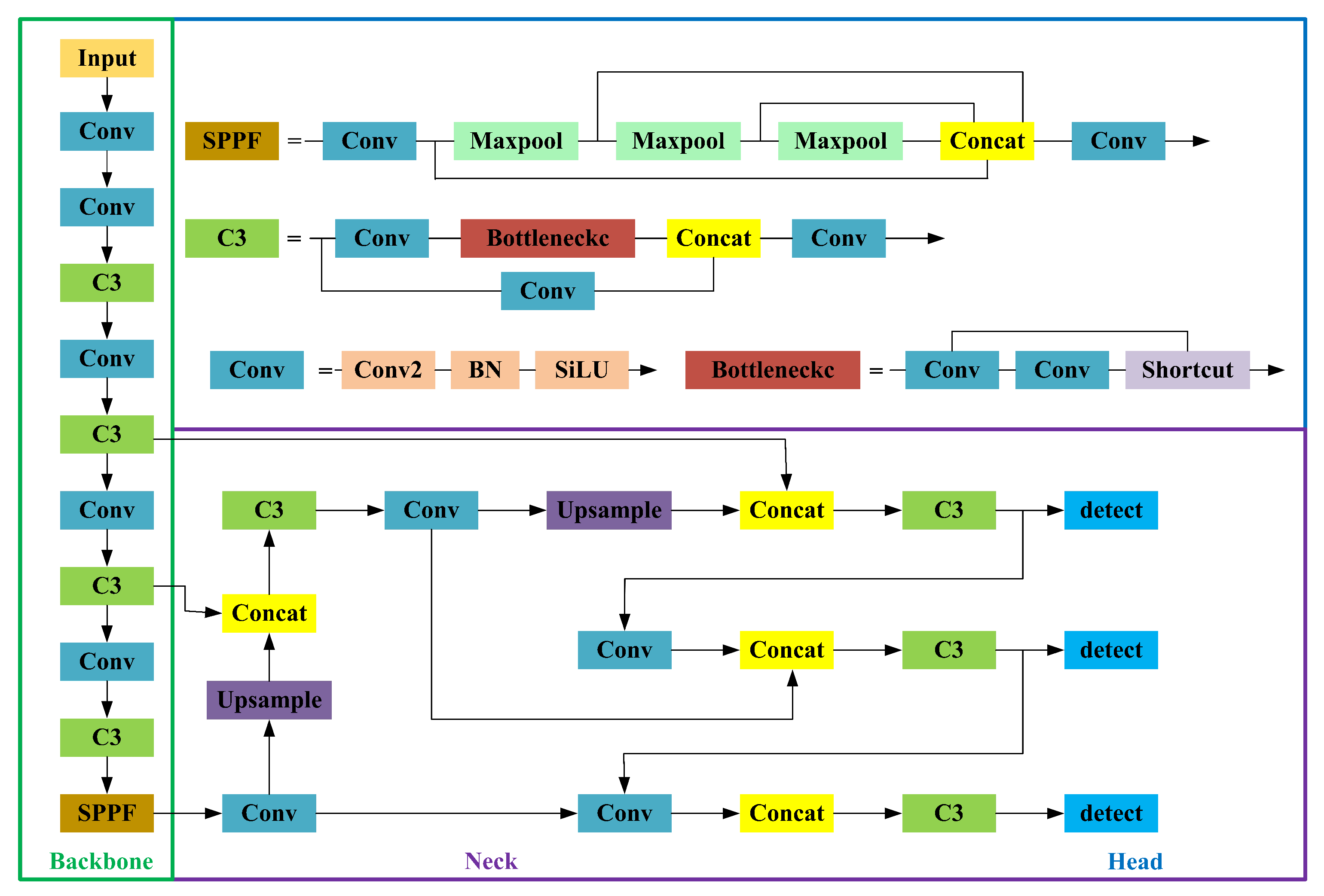

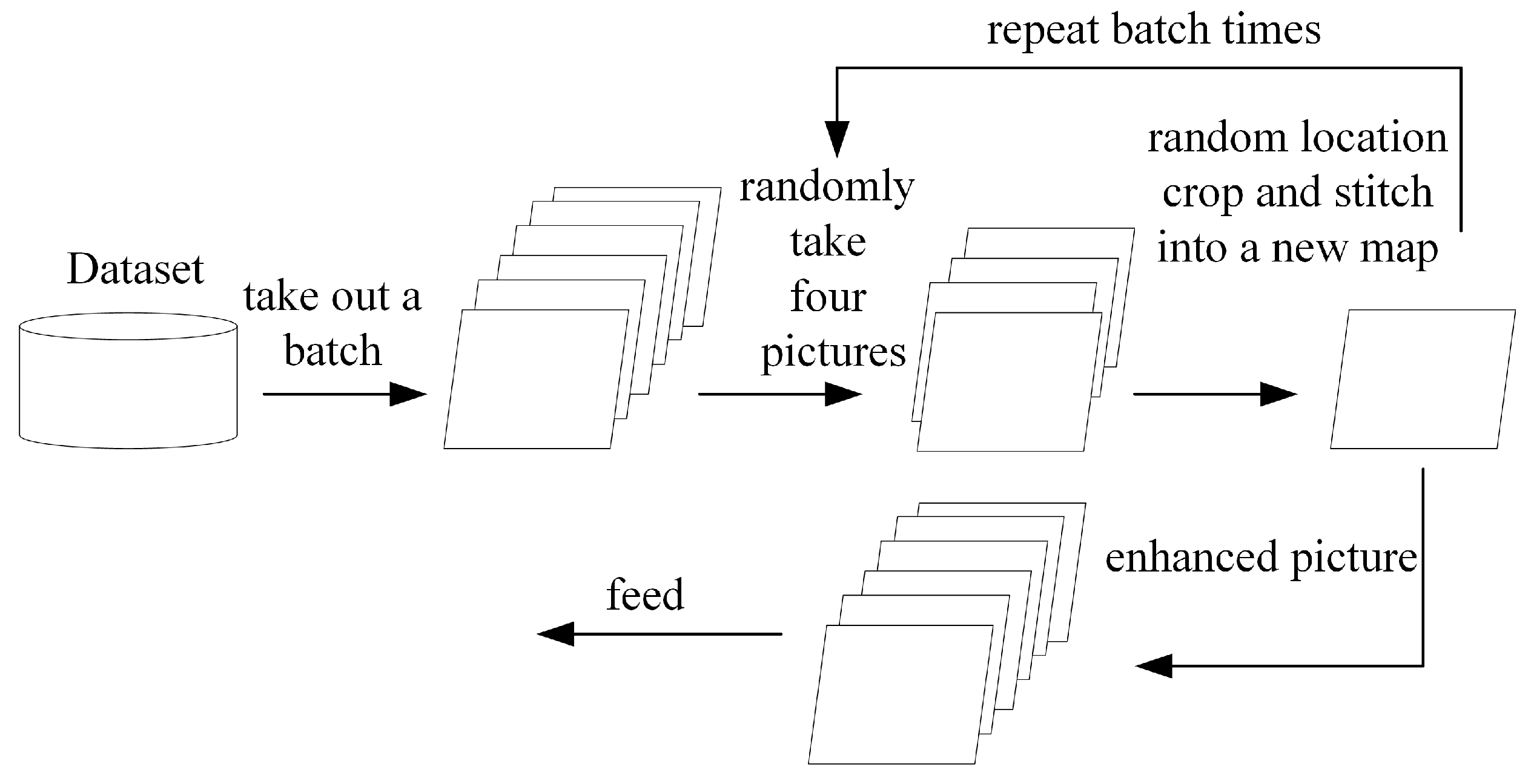

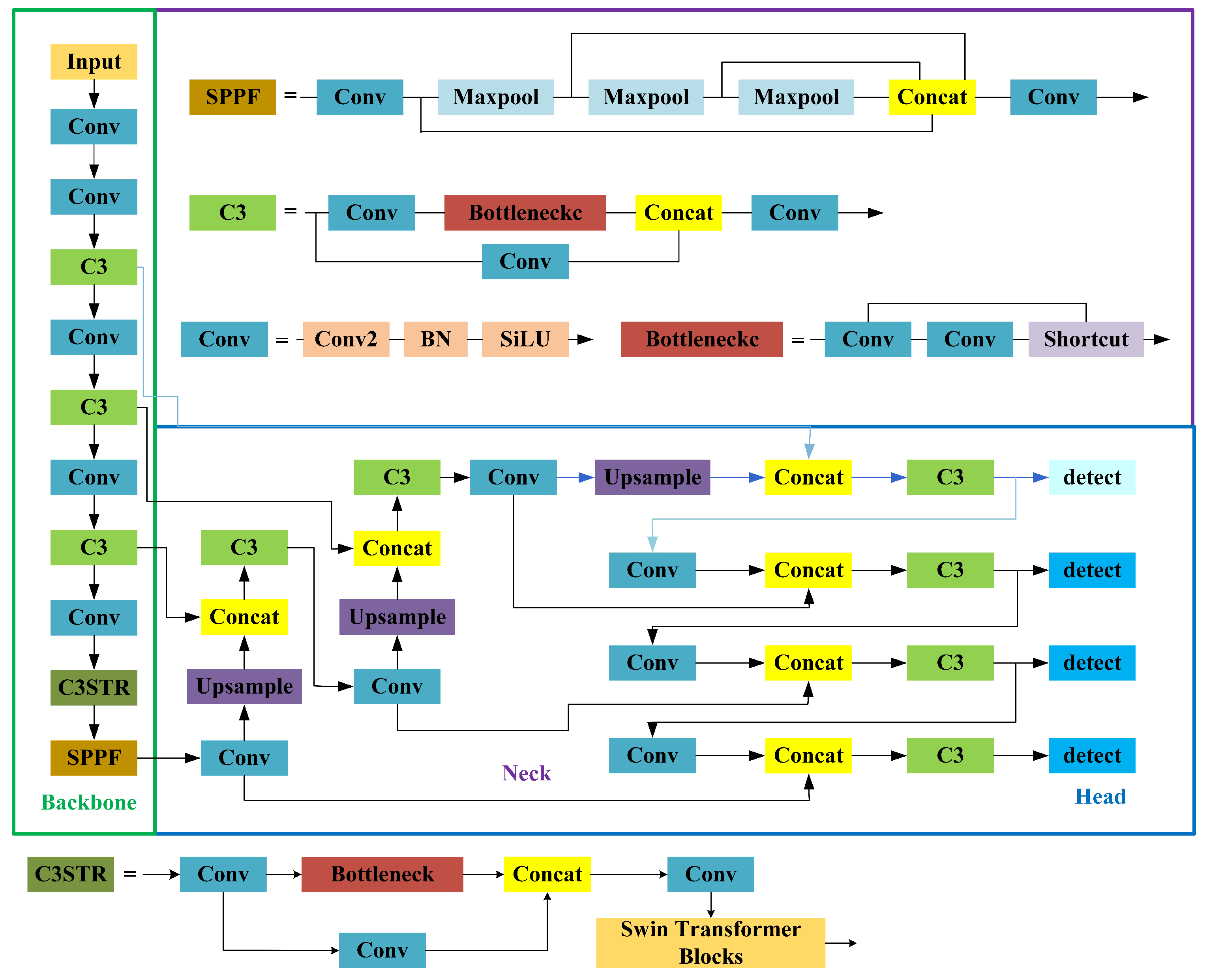

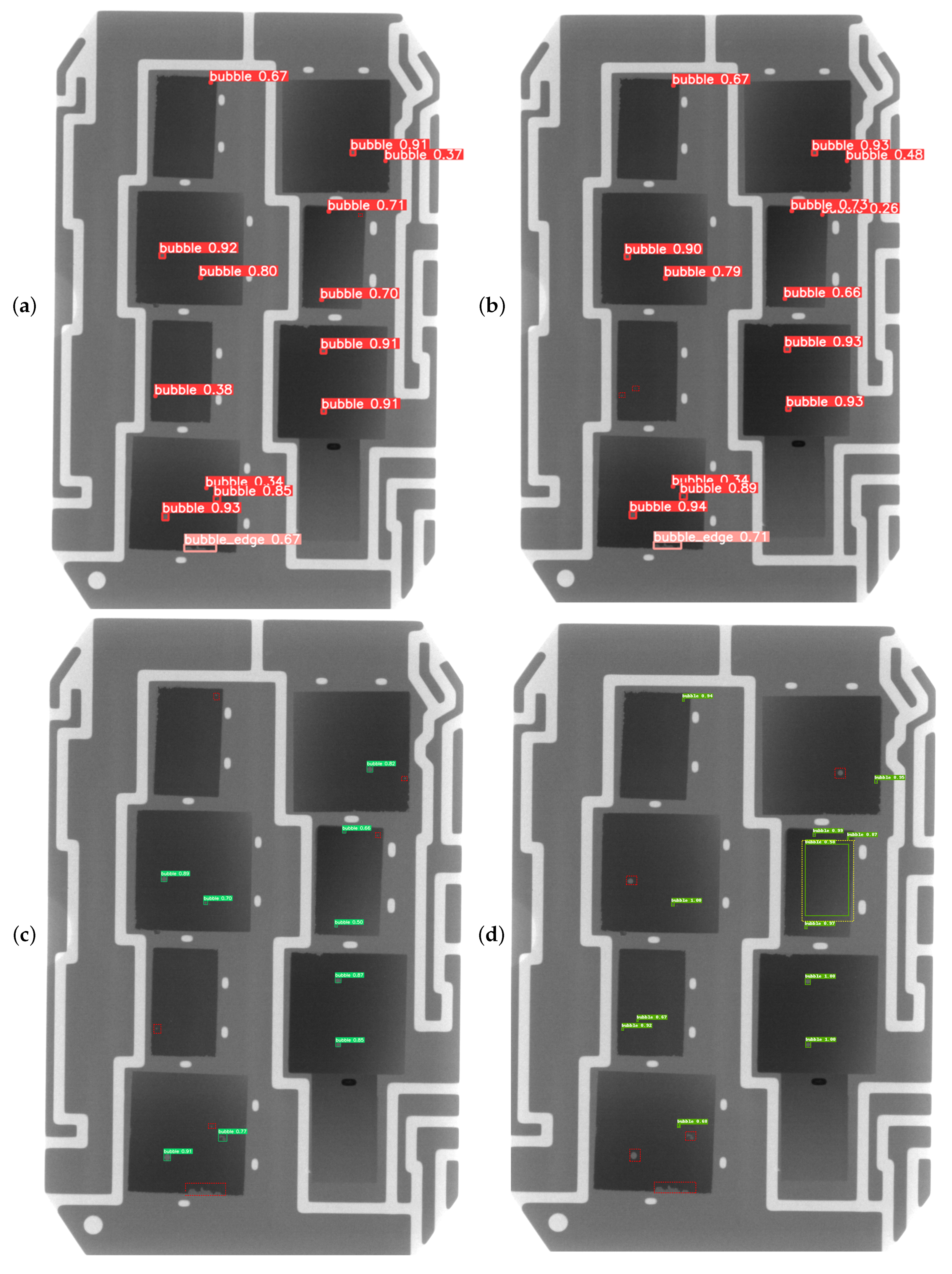

YOLOv5 is a high-performance model that locates and classifies defects in one step. Therefore, YOLOv5 is selected, and its network structure is adjusted and improved to detect bubble defects in the IGBT solder layer. Because the defective bubbles in IGBT modules are small, numerous, irregularly distributed, and varied in shape, unmodified object detection algorithms such as YOLOv5 do not detect them effectively. To improve the detection of solder layer bubbles, the improved YOLOv5 in this paper, whose overall structure is shown in Figure 3, makes three changes: (1) adding a small-target detection layer that fits the tiny bubbles of the IGBT solder layer and adjusting the anchor box parameters; (2) improving the box regression loss function; (3) adding a new convolution module to the backbone network.

3.1. Add a Small Object Detection Layer

The original YOLOv5 model has three detection layers: an 80 × 80 layer for detecting objects of size 8 × 8 and above, a 40 × 40 layer for objects of size 16 × 16 and above, and a 20 × 20 layer for objects of size 32 × 32 and above [36]. Larger feature maps detect small targets, and smaller feature maps detect large targets [37].
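The relationship between detection layer size and minimum object size can be illustrated with a short sketch. It assumes a 640 × 640 input and downsampling strides of 8, 16, and 32, which reproduce the 80/40/20 grids quoted above but are not stated explicitly in the text; the function name `grid_sizes` is hypothetical.

```python
# Grid size of each detection layer for a square input image.
# ASSUMPTION: 640 x 640 input and strides 8/16/32. These values reproduce
# the 80/40/20 grids quoted in the text but are not stated there.

def grid_sizes(input_size, strides):
    """Return {stride: cells per side} for each detection layer."""
    return {s: input_size // s for s in strides}


if __name__ == "__main__":
    for stride, cells in grid_sizes(640, (8, 16, 32)).items():
        print(f"stride {stride:>2}: {cells} x {cells} grid, "
              f"one cell covers {stride} x {stride} pixels")
```

Under these assumptions, each cell of the 80 × 80 layer covers an 8 × 8 pixel patch, which is why objects smaller than that are hard to resolve with the three original layers.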

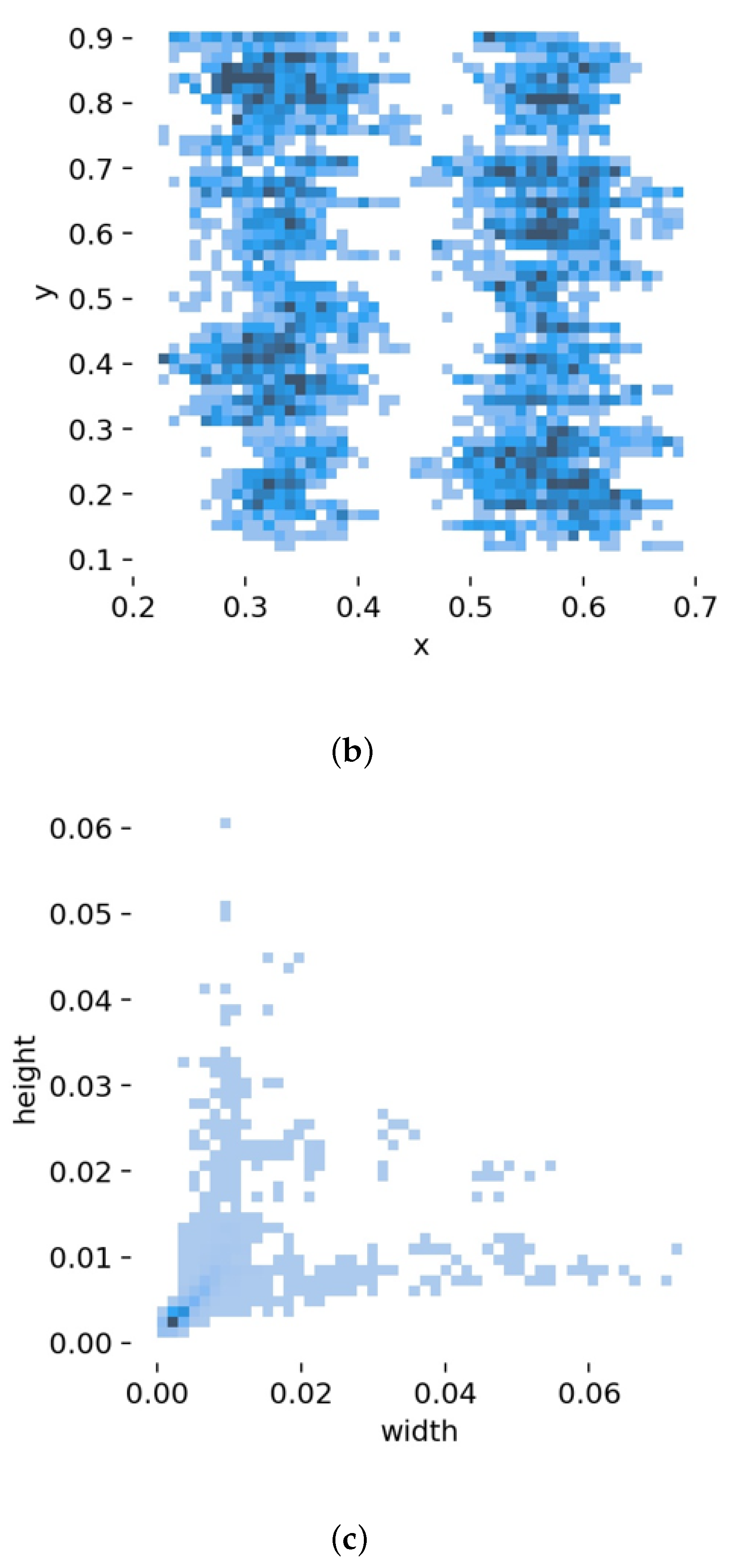

Because tiny defects are relatively numerous in IGBT solder layers, the model must improve its detection capability for tiny targets. Therefore, building on the original YOLOv5, this paper adds a set of prior anchor boxes for tiny targets, (5,6), (8,14), and (15,11), to improve convergence speed during model training. While retaining the original feature map information, the feature map is further extended by adding a set of convolution and upsampling modules for tiny targets. To reduce the loss of location information, deep semantic information is fused with shallow information: the minimum feature map obtained in the backbone network is concatenated with the further extended feature map to obtain a new feature map. A detection layer for small targets is added to match this new feature map.
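A minimal sketch of why the added anchor set helps with tiny bubbles is given below. It assumes that training keeps the width/height ratio test of the public YOLOv5 implementation (a ground-truth box is assigned to an anchor only if neither side differs from the anchor by more than a factor of 4.0); the paper does not state this. The comparison set is the default stride-8 anchor set of YOLOv5, and the 3 × 3 pixel bubble and the helper names are hypothetical.

```python
# Which anchors can a tiny ground-truth box be assigned to?
# ASSUMPTION: the ratio-based matching rule and threshold (4.0) of the
# public YOLOv5 code are used unchanged; this is not stated in the text.

ANCHOR_T = 4.0

TINY_ANCHORS = [(5, 6), (8, 14), (15, 11)]    # added set, from the text
P3_ANCHORS = [(10, 13), (16, 30), (33, 23)]   # YOLOv5 default stride-8 set


def worst_ratio(box_wh, anchor_wh):
    """Largest factor by which box and anchor differ in width or height."""
    rw = box_wh[0] / anchor_wh[0]
    rh = box_wh[1] / anchor_wh[1]
    return max(rw, 1.0 / rw, rh, 1.0 / rh)


def matching_anchors(box_wh, anchors, threshold=ANCHOR_T):
    """Anchors whose shape is within `threshold` of the box in both sides."""
    return [a for a in anchors if worst_ratio(box_wh, a) < threshold]


if __name__ == "__main__":
    bubble = (3, 3)  # hypothetical 3 x 3 pixel bubble
    print("tiny set   :", matching_anchors(bubble, TINY_ANCHORS))
    print("default set:", matching_anchors(bubble, P3_ANCHORS))
```

In this illustration the 3 × 3 bubble matches only the (5,6) anchor of the added set and none of the default stride-8 anchors, so without the added set it would contribute no positive samples under the assumed rule.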

3.2. Loss Function

The box regression loss of YOLOv5 is calculated by the CIoU loss function [38]. Although CIoU improves the convergence speed and regression accuracy of the network, the term v in its formula reflects only the difference in aspect ratio, not the true differences between the width and height and their confidences. Moreover, as can be inferred from the predicted box and the formula, w and h cannot be increased or decreased at the same time, which may prevent the model from optimizing the similarity effectively. To address this problem, EIoU directly penalizes the predicted w and h in the loss function, which in theory removes the restriction in the CIoU loss that w and h cannot be scaled up or down simultaneously, as shown in Equation (7).

It can be seen that the EIoU loss function consists of three parts: the IoU loss between the predicted box and the ground-truth box, the center distance loss between the two boxes, and the width and height loss between the two boxes, where the width and height terms are normalized by the width and height of the smallest rectangle enclosing the two boxes. Bounding box regression also suffers from unbalanced training samples: in a single image, the number of low-quality anchor boxes with large regression errors is much larger than the number of high-quality samples with small errors, and the low-quality samples can produce excessive gradients that harm training. To address this problem, Focal Loss is introduced on top of the EIoU loss, which makes the loss function more consistent with the detection situation in this study.
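The three components described above can be sketched in plain Python for a single pair of boxes. This is an illustration under stated assumptions, not the training code of the paper: boxes are given as (x1, y1, x2, y2), the function name `eiou_loss` is hypothetical, and the focal variant uses the IoU-weighted form (the EIoU loss multiplied by IoU raised to a power gamma) commonly associated with Focal-EIoU. Whether the paper uses exactly this weighting, and which gamma, is an assumption.

```python
def eiou_loss(pred, target, gamma=None, eps=1e-9):
    """EIoU loss for two boxes given as (x1, y1, x2, y2).

    If gamma is given, the loss is weighted by IoU**gamma (focal form,
    ASSUMED here; the weighting used in the paper is not reproduced).
    """
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # IoU term
    inter_w = max(0.0, min(px2, tx2) - max(px1, tx1))
    inter_h = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = inter_w * inter_h
    iou = inter / (pw * ph + tw * th - inter + eps)

    # Smallest rectangle enclosing both boxes
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)

    # Squared distance between the two box centers
    rho2 = ((px1 + px2 - tx1 - tx2) ** 2 + (py1 + py2 - ty1 - ty2) ** 2) / 4.0

    loss = (1.0 - iou                              # IoU loss
            + rho2 / (cw ** 2 + ch ** 2 + eps)     # center distance loss
            + (pw - tw) ** 2 / (cw ** 2 + eps)     # width loss
            + (ph - th) ** 2 / (ch ** 2 + eps))    # height loss
    if gamma is not None:
        loss *= iou ** gamma                       # down-weight low-IoU boxes
    return loss


if __name__ == "__main__":
    pred, target = (0, 0, 2, 2), (1, 1, 3, 3)
    print("EIoU      :", eiou_loss(pred, target))
    print("Focal-EIoU:", eiou_loss(pred, target, gamma=0.5))
```

Because the width and height differences are penalized separately, both can shrink at the same time during optimization, which is the property the text attributes to EIoU. The focal weighting reduces the contribution of poorly overlapping boxes, which are the majority in an unbalanced sample set.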

3.3. Improvement of C3 Module

The traditional convolutional neural network (CNN) [39] has been very successful at extracting features and structure for image classification and has good fitting ability; adding residual structures and deepening the network have further improved its use of context and its generalization ability. The Transformer [40], based on the self-attention mechanism, is closer to the logic of human vision, but in practice it adapts poorly to large variations in target size, and the attention mechanism brings a correspondingly large amount of computation, so its accuracy and speed fall short of those of CNNs. The Swin Transformer [41] addresses these problems by constructing hierarchical multi-layer feature maps and by introducing a shifted-window operation that restricts attention to finite windows, which makes the computational complexity linear in the input image size.
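The linear scaling can be checked numerically with the complexity expressions given in the Swin Transformer paper: for a feature map of h × w tokens with C channels, global self-attention costs 4hwC² + 2(hw)²C, while window attention with M × M windows costs 4hwC² + 2M²hwC. The sketch below evaluates both; the window size 7, the channel count 96, and the feature map sizes are illustrative assumptions, not values from this paper.

```python
# Cost of global vs. window self-attention (expressions from the Swin
# Transformer paper). ASSUMPTION: window size m = 7 and c = 96 channels
# are illustrative defaults, not settings reported in this paper.

def msa_cost(h, w, c):
    """Global multi-head self-attention: quadratic in the token count h*w."""
    return 4 * h * w * c ** 2 + 2 * (h * w) ** 2 * c


def wmsa_cost(h, w, c, m=7):
    """Window self-attention with m x m windows: linear in h*w."""
    return 4 * h * w * c ** 2 + 2 * m ** 2 * h * w * c


if __name__ == "__main__":
    c = 96
    for side in (40, 80):
        print(f"{side} x {side}: global = {msa_cost(side, side, c):.3e}, "
              f"window = {wmsa_cost(side, side, c):.3e}")
    # Doubling each side quadruples the token count.
    print("window growth:", wmsa_cost(80, 80, c) / wmsa_cost(40, 40, c))
    print("global growth:", msa_cost(80, 80, c) / msa_cost(40, 40, c))
```

Quadrupling the number of tokens exactly quadruples the window attention cost, whereas the global attention cost grows considerably faster because of its quadratic term.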

Like ViT, the Swin Transformer first splits the input image into patches. In stage 1, a linear embedding changes the feature dimension of each patch, and the result is fed into Swin Transformer Blocks, which compute the features. Stages 2 to 4 are similar to one another: adjacent patches are merged and concatenated, and a convolutional network then reduces them to the desired feature dimension.

Swin Transformer Blocks are shown in Figure 4. The normalized data first enter the Window Multi-head Self-Attention (W-MSA) module, which divides the patches into separate windows and performs self-attention within each window; they then pass into a multilayer perceptron (MLP) that uses the GELU activation function to apply a nonlinear transformation to the data. Next, the Shifted Window Multi-head Self-Attention (SW-MSA) mechanism enables information to interact across windows between successive layers. A residual connection follows each MSA module and each MLP, as shown in Equations (8) to (11).
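Equations (8) to (11) are not reproduced in this section. For reference, the computation of two consecutive blocks in the original Swin Transformer formulation, which the description above appears to follow, can be written as below. The symbols are taken from that formulation rather than from this paper: z^{l-1} is the block input, \hat{z}^{l} is the output of the attention sub-layer, and LN denotes layer normalization.

```latex
\begin{aligned}
\hat{z}^{l}   &= \text{W-MSA}\big(\mathrm{LN}(z^{l-1})\big) + z^{l-1}, \\
z^{l}         &= \mathrm{MLP}\big(\mathrm{LN}(\hat{z}^{l})\big) + \hat{z}^{l}, \\
\hat{z}^{l+1} &= \text{SW-MSA}\big(\mathrm{LN}(z^{l})\big) + z^{l}, \\
z^{l+1}       &= \mathrm{MLP}\big(\mathrm{LN}(\hat{z}^{l+1})\big) + \hat{z}^{l+1}.
\end{aligned}
```

Each line adds its input back to the sub-layer output, which is the residual connection after every MSA module and MLP mentioned in the text.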

The C3 module that incorporates Swin Transformer Blocks is named the C3STR module, and its specific structure is shown in Figure 3. Experiments demonstrate that the C3STR module achieves the same accuracy improvement as introducing an attention mechanism, with minimal additional overhead.

5. Conclusions

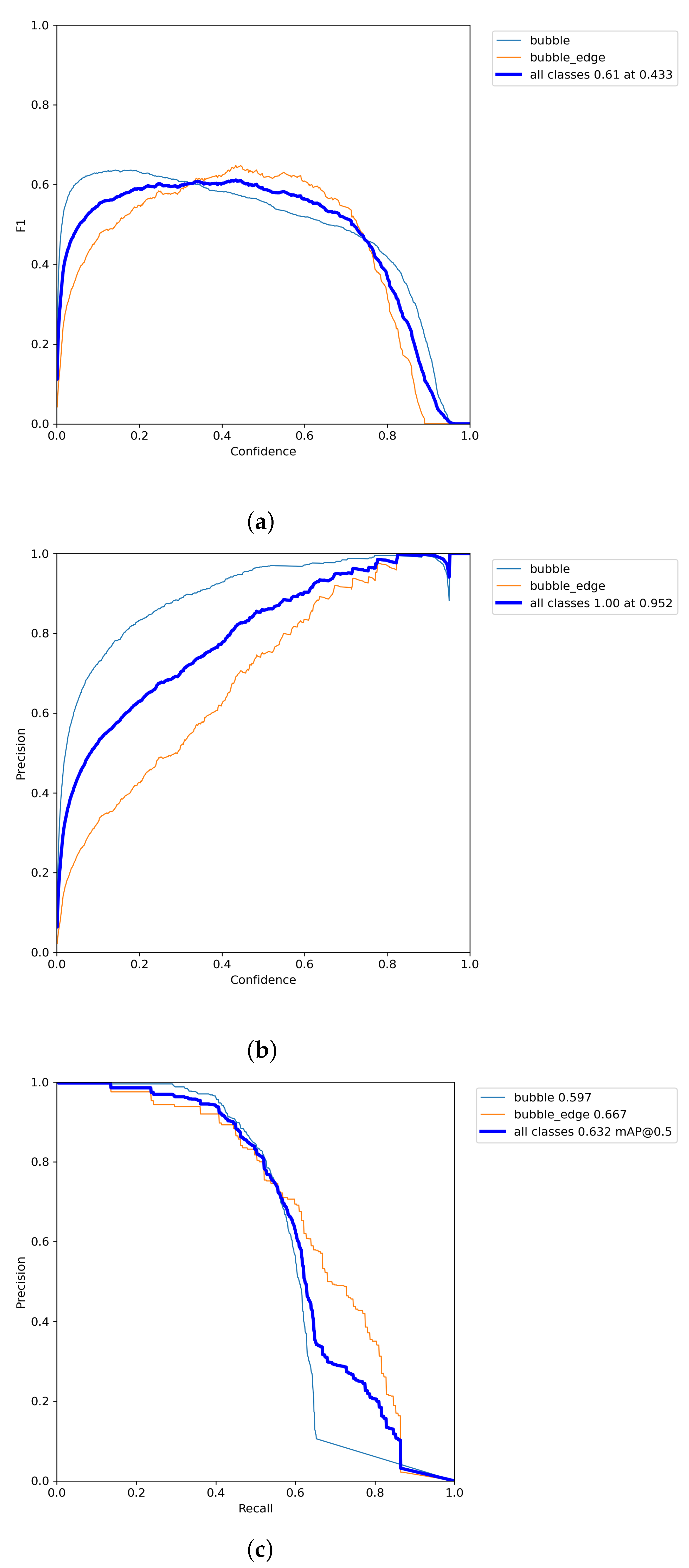

In this paper, for the task of detecting bubble defects in the solder layer of IGBT modules, two types of IGBT module solder layer bubble defect datasets are collected and labeled, and an improved algorithm based on YOLOv5, the YOLOv5n_SEST model, is proposed. A detection layer for small bubbles is added, which effectively detects more tiny bubbles. To address the unbalanced samples in the dataset, the bounding box loss function is improved, which raises the overall detection performance of the model. To improve the detection of bubble defects, the C3STR module is added to the backbone network: Swin Transformer Blocks construct multi-layer feature maps, and self-attention is restricted to moving windows so that the computational cost stays within a controllable range and feature extraction is efficient. The experimental results show that the improved model achieves 94.5% accuracy for common bubble defects, 85.4% accuracy for all defects, a 5.6% improvement in mAP, and a speed of 110 f/s. The algorithm performs real-time detection of industrial IGBT solder layer defects well, retaining the detection speed of the one-stage algorithm while improving the accuracy of the YOLOv5 model.

A limitation of the model is that its detection performance degrades for irregular and dense bubbles at the edges. Therefore, we will continue to explore and optimize the performance of the model for edge bubbles, study algorithms that are universally applicable to various industrial fields, and promote the application of artificial intelligence in unmanned quality inspection and intelligent inspection processes in the future.