Smart Agriculture: A Fruit Flower Cluster Detection Strategy in Apple Orchards Using Machine Vision and Learning

Abstract

Featured Application

1. Introduction

1.1. Typical Orchard Setup

1.2. Benefits of Object Detection for Apple Orchards

1.3. Challenges

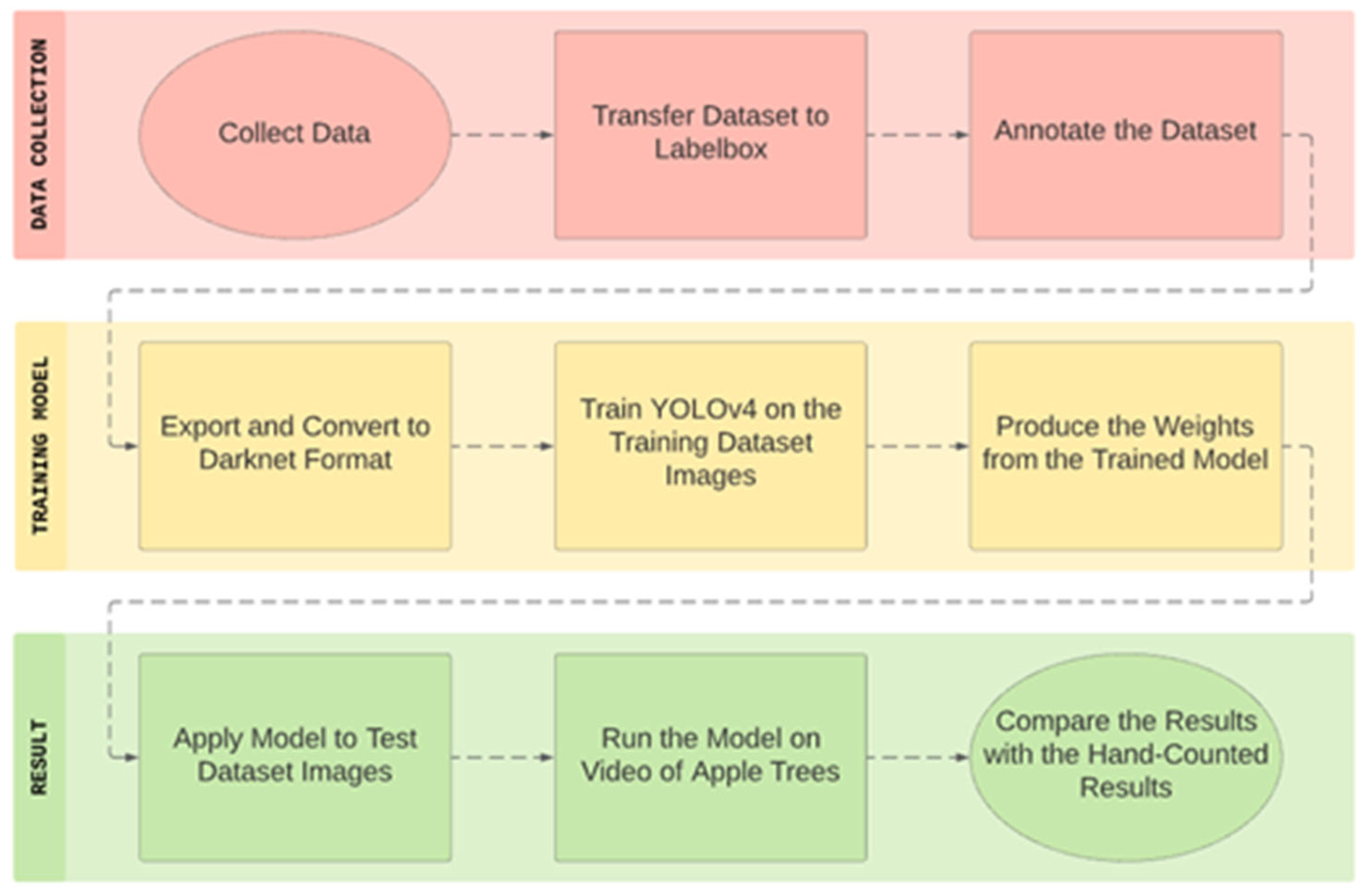

2. Experimental Setup and Detection Method

2.1. Environmental and Experimental Setup

2.2. Data Collection Process

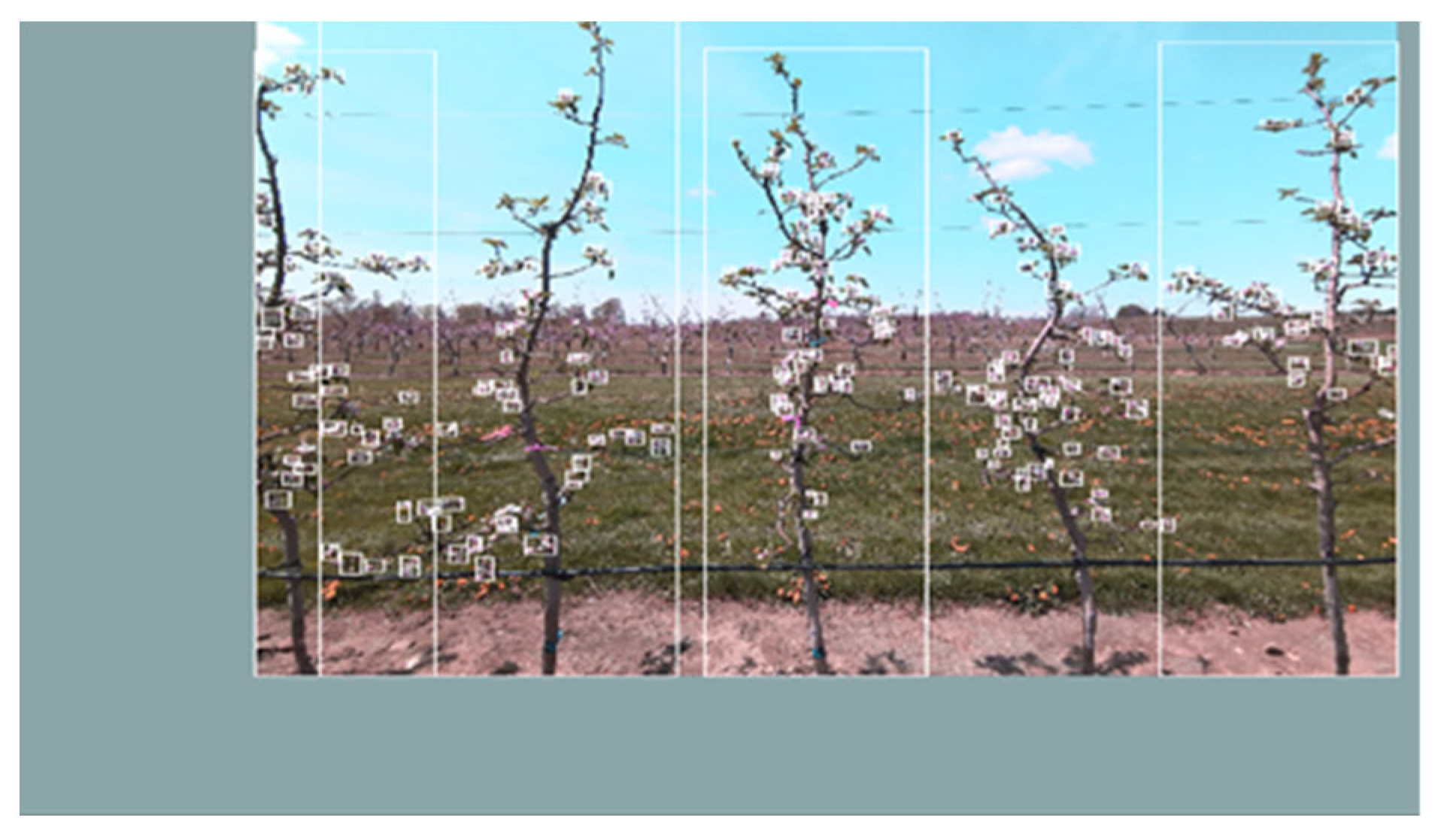

2.2.1. Data Collection of Fruit Flowers

2.2.2. Labelling Process for the Dataset

2.2.3. Processing the Dataset

2.3. Proposed Method for Flower Cluster Detection

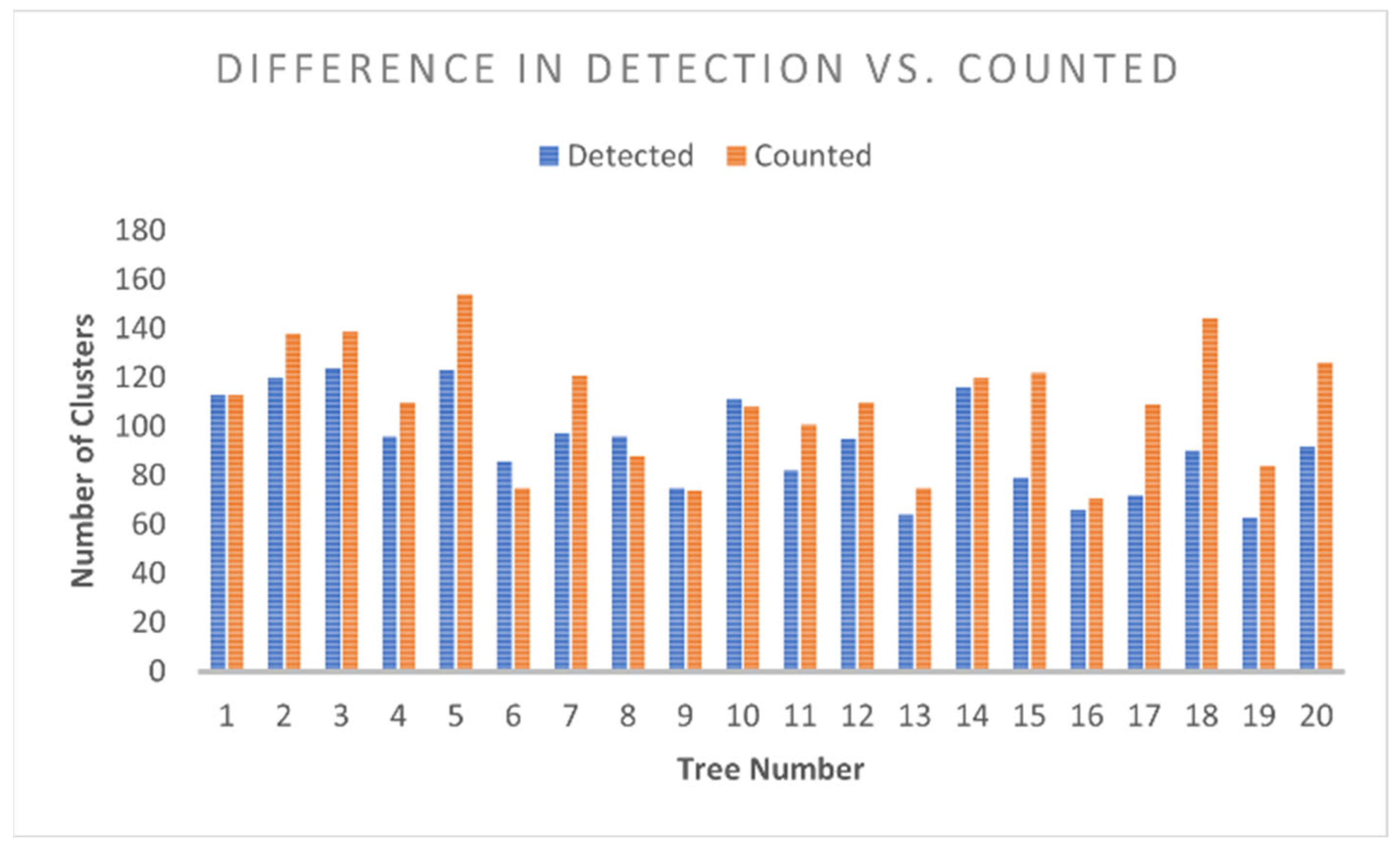

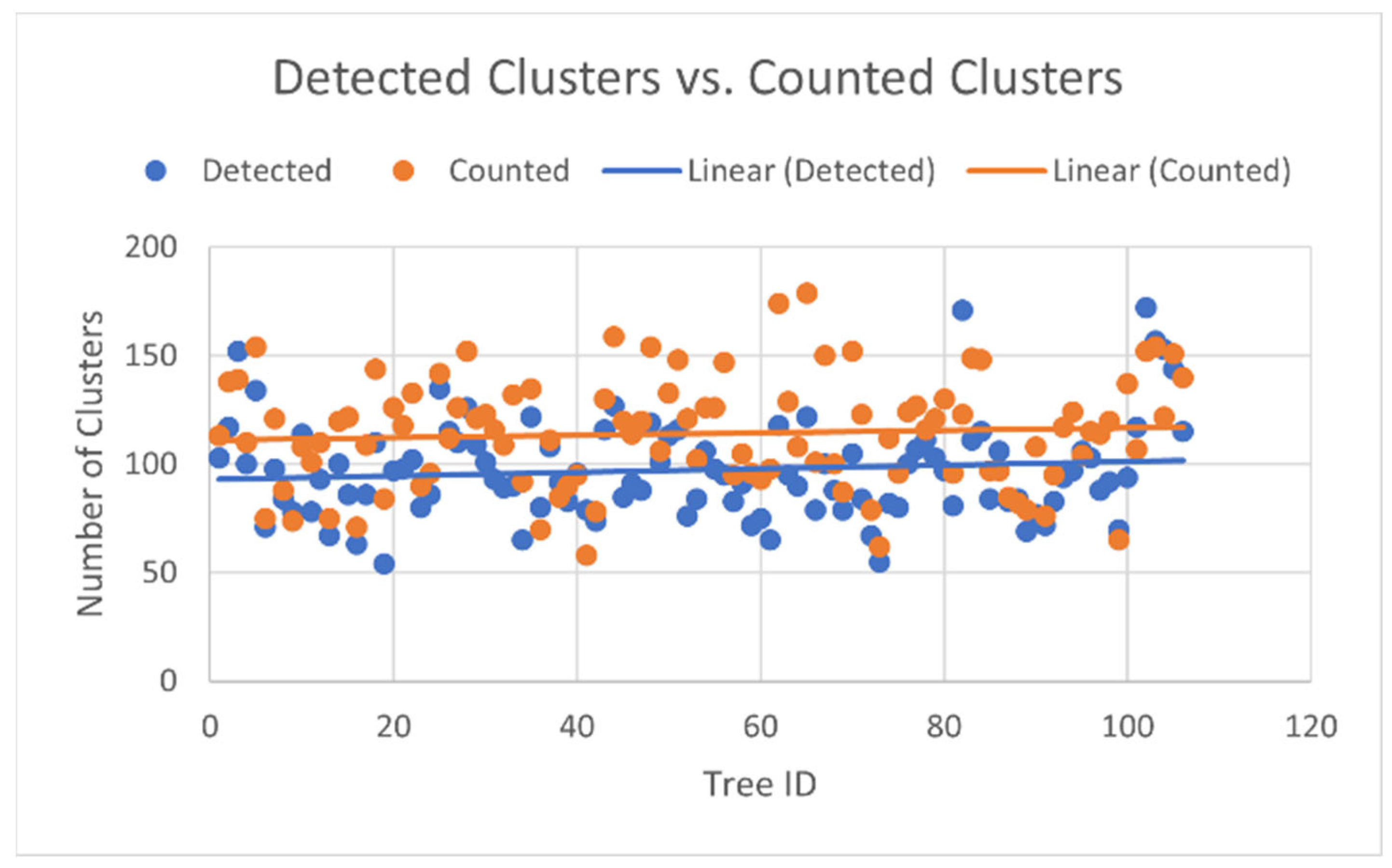

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| | Precision | Recall | F1-Score | Cluster Precision |
|---|---|---|---|---|
| Training | 0.95 | 0.85 | 0.89 | 0.98 |
| Testing | 0.90 | 0.72 | 0.80 | 0.88 |
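The F1-scores in the table above can be cross-checked against the reported precision and recall. The sketch below assumes the standard definition of F1 as the harmonic mean of precision and recall; the function name `f1_score` is illustrative and not taken from the article. Because the inputs are the rounded table values, the recomputed training score (about 0.897) differs slightly from the reported 0.89, which would be expected if the original was computed from unrounded values.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the table above.
reported = {
    "Training": (0.95, 0.85, 0.89),
    "Testing": (0.90, 0.72, 0.80),
}

for split, (p, r, f1_reported) in reported.items():
    f1 = f1_score(p, r)
    print(f"{split}: recomputed F1 = {f1:.3f}, reported = {f1_reported:.2f}")
```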

| Side of Row | Percentage Error | Maximum Difference | Minimum Difference | Average Difference |
|---|---|---|---|---|
| Right | −14.52% | 72 | 0 | 21 |
| Left | −13.49% | 57 | 1 | 20 |

| Average Error | Maximum Difference | Minimum Difference | Average Difference | Average Clusters Counted | Most Clusters Detected | Least Clusters Detected | Most Clusters Counted | Least Clusters Counted | Average Standard Deviation |
|---|---|---|---|---|---|---|---|---|---|
| −14.00% | 65 | 1 | 21 | 114 | 172 | 54 | 179 | 58 | 6.2 |
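The first four columns of the summary table above appear consistent with averaging the right-side and left-side rows of the per-side table. The sketch below checks that reading. It is an assumption inferred from the numbers, not something the source states, and it further assumes that the integer columns were rounded half up. The helper names `round_half_up` and `mean` are illustrative.

```python
import math


def round_half_up(x: float) -> int:
    """Round a non-negative value to the nearest integer, with .5 rounding up."""
    return int(math.floor(x + 0.5))


def mean(values):
    """Arithmetic mean of a sequence of numbers."""
    return sum(values) / len(values)


# Per-side values (right, left) copied from the row-side table.
percentage_error = (-14.52, -13.49)
max_difference = (72, 57)
min_difference = (0, 1)
avg_difference = (21, 20)

# Assumed relationship: each summary value is the mean of the two sides.
summary = {
    "average_error_pct": mean(percentage_error),
    "max_difference": round_half_up(mean(max_difference)),
    "min_difference": round_half_up(mean(min_difference)),
    "avg_difference": round_half_up(mean(avg_difference)),
}
print(summary)
```

Note that Python's built-in `round` rounds halves to the nearest even integer, so it would give 64, 0 and 20 here instead of the tabulated 65, 1 and 21; the explicit half-up helper is what makes the integer columns match.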

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lee, J.; Gadsden, S.A.; Biglarbegian, M.; Cline, J.A. Smart Agriculture: A Fruit Flower Cluster Detection Strategy in Apple Orchards Using Machine Vision and Learning. Appl. Sci. 2022, 12, 11420. https://doi.org/10.3390/app122211420
