Multi-Channel LSTM-Capsule Autoencoder Network for Anomaly Detection on Multivariate Data

Abstract

1. Introduction

1.1. Motivation and Incitement

1.2. Literature Review and Research Gaps

1.3. Major Contribution and Organization

- A novel hybridisation of LSTM and Capsule layers is proposed, using LSTM layers as encoders and Capsule layers as decoders;

- The hybridisation is implemented in a novel multi-channel-input, merged-output model architecture for use on raw multivariate time series data;

- The model is tested on one real-world dataset and benchmarked on a second real-world dataset against prominent detection methods in the field.
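
The multi-channel input named in the second contribution can be pictured as one windowed stream per variable. The sketch below is a minimal illustration under stated assumptions, not the authors' preprocessing code: the function name `make_channel_windows`, the stride of one, and the toy values are all hypothetical. Only the idea of feeding each variable of a raw multivariate series to its own input channel comes from the text.

```python
from typing import List


def make_channel_windows(series: List[List[float]], time_steps: int) -> List[List[List[float]]]:
    """Split a multivariate series into one list of sliding windows per variable.

    series: rows are time steps, columns are variables.
    Returns channels[c][w], the w-th window (length time_steps) of variable c.
    """
    if time_steps < 1:
        raise ValueError("time_steps must be positive")
    n_rows = len(series)
    n_vars = len(series[0]) if series else 0
    n_windows = max(n_rows - time_steps + 1, 0)
    channels = []
    for c in range(n_vars):
        column = [row[c] for row in series]
        # Assumed stride of one: consecutive windows overlap by time_steps - 1 samples.
        windows = [column[start:start + time_steps] for start in range(n_windows)]
        channels.append(windows)
    return channels


# Toy data (hypothetical): 6 time steps, 2 variables.
toy = [[0.0, 10.0], [1.0, 11.0], [2.0, 12.0], [3.0, 13.0], [4.0, 14.0], [5.0, 15.0]]
channels = make_channel_windows(toy, time_steps=4)
print(len(channels), len(channels[0]))
```

Each entry of `channels` would feed one encoder branch; the branch outputs are then merged downstream.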

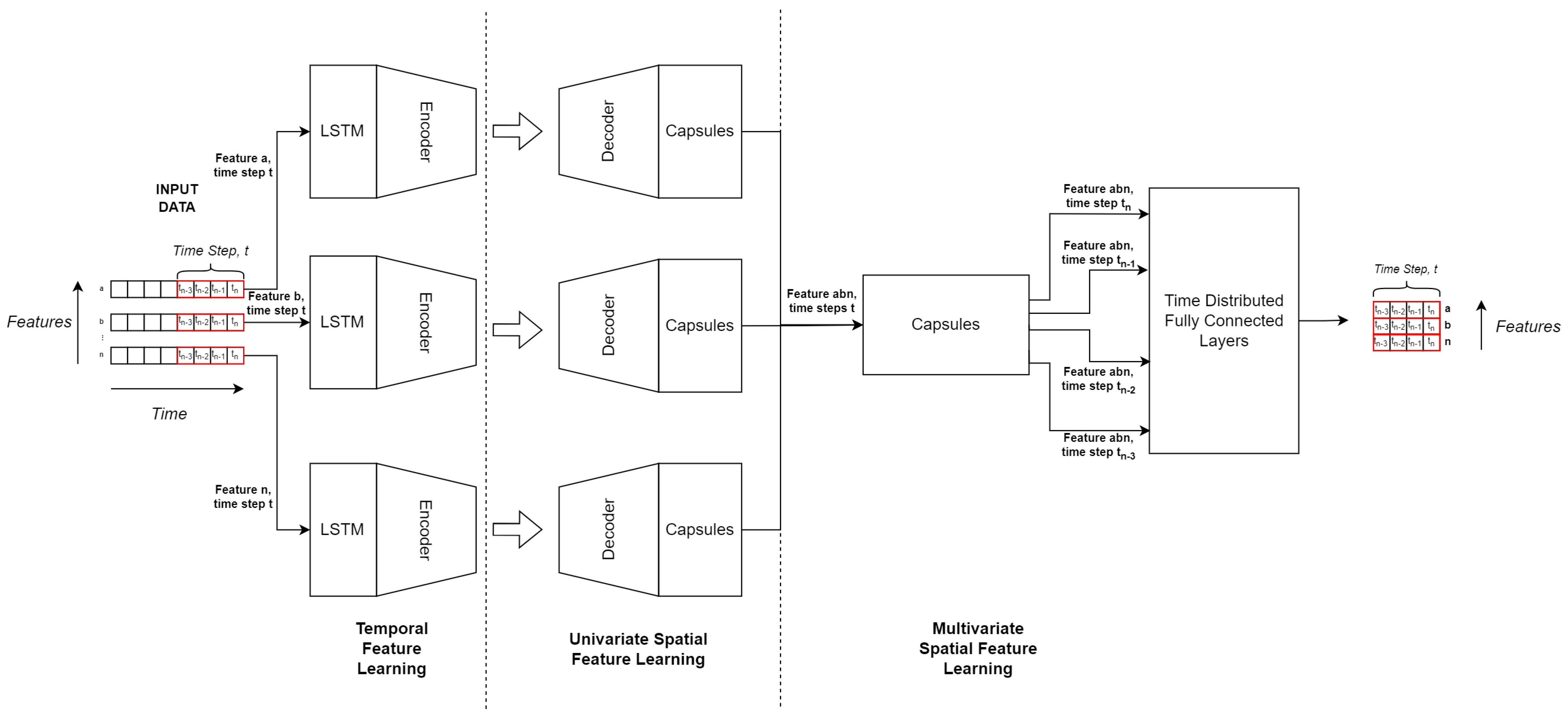

2. LSTMCaps Autoencoder Network

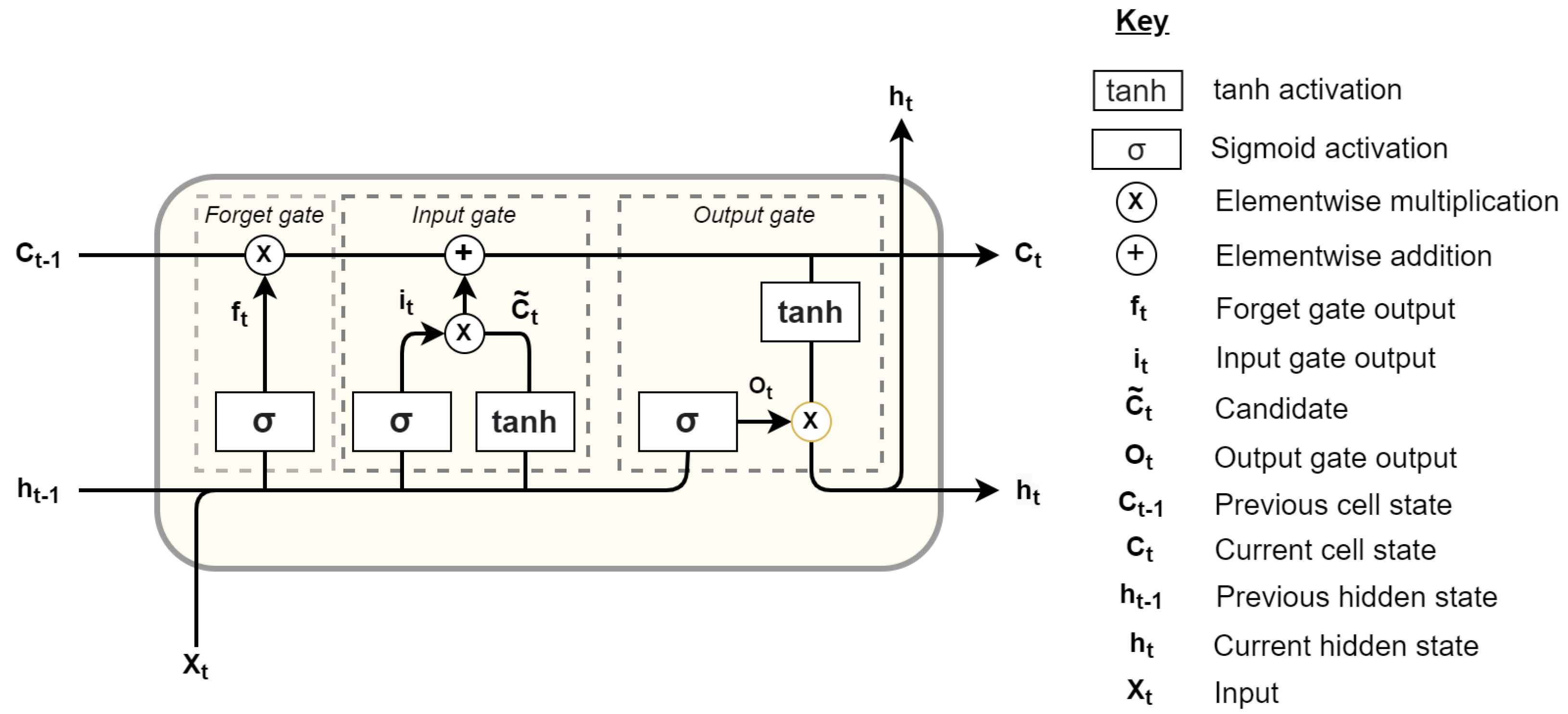

2.1. Long Short-Term Memory Network

2.2. Capsule Network

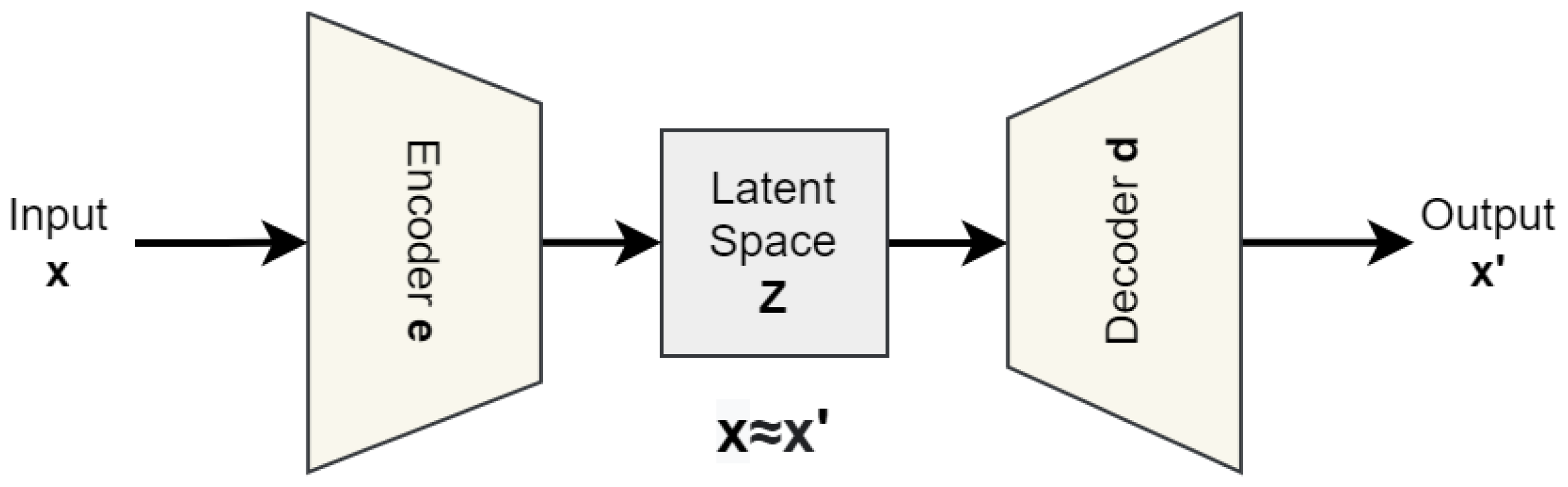

2.3. Autoencoder

2.4. Proposed Model Architecture

2.5. Model Training and Anomaly Detection Method

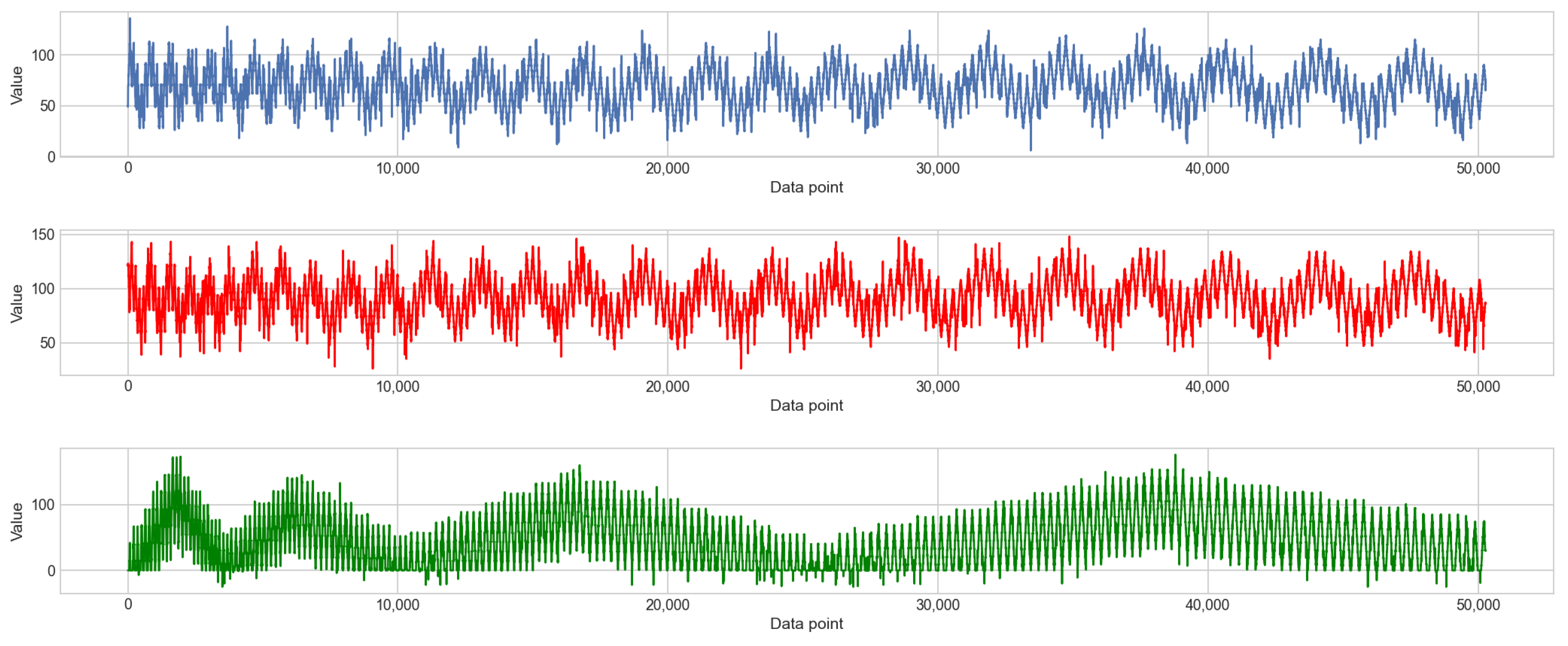

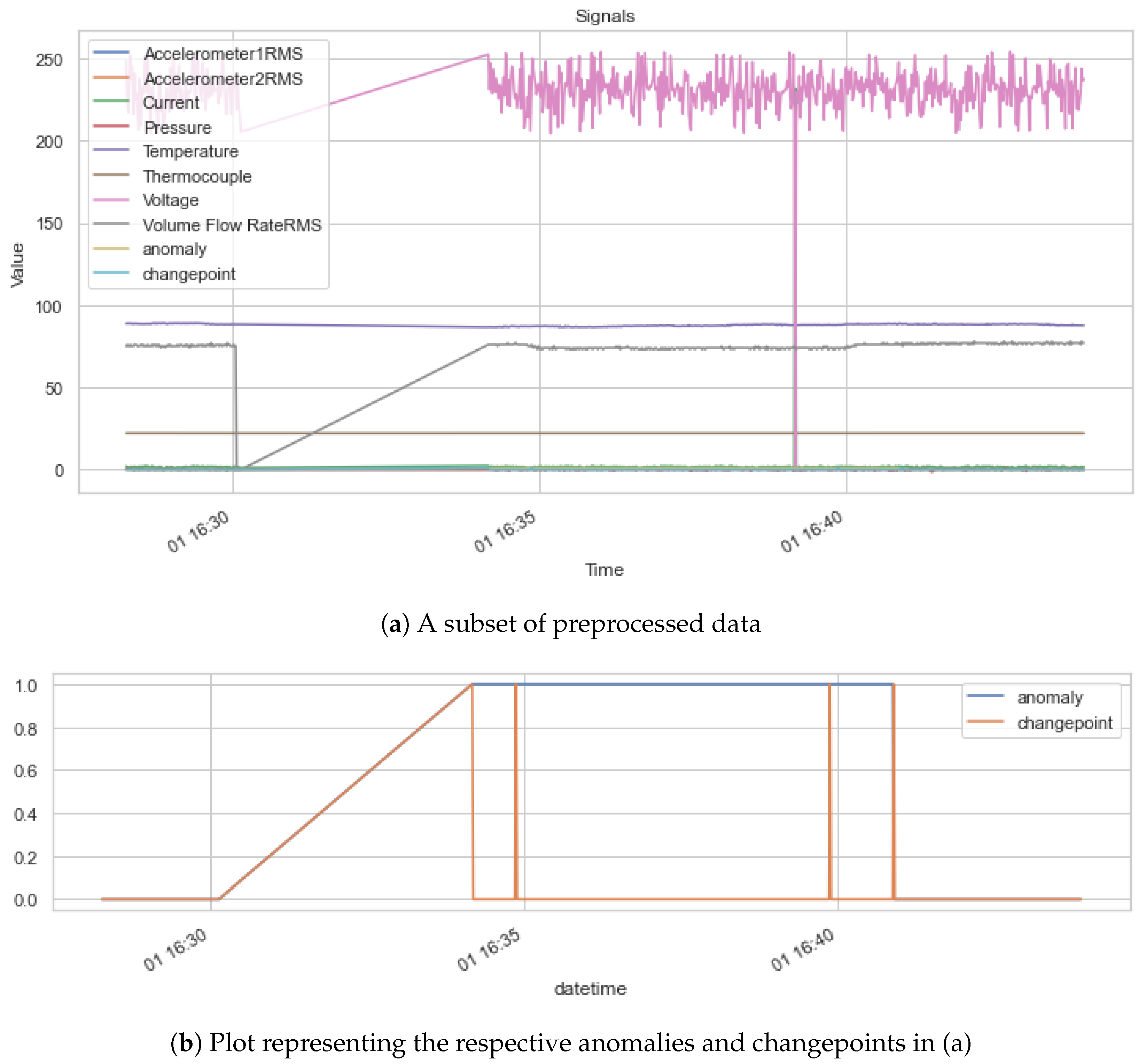

2.5.1. Data Preprocessing

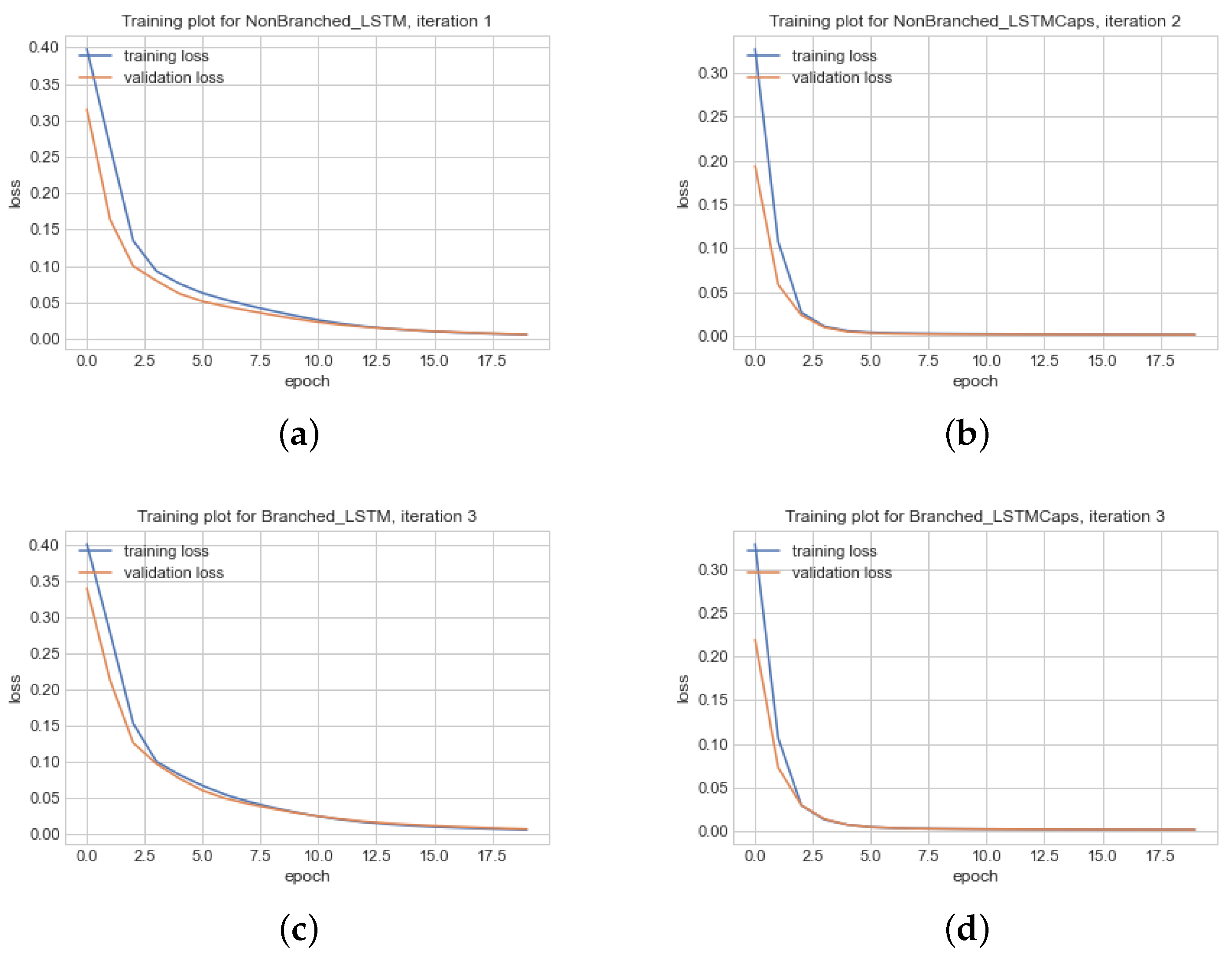

2.5.2. Training

2.5.3. Anomaly Detection

2.6. Experimental Results

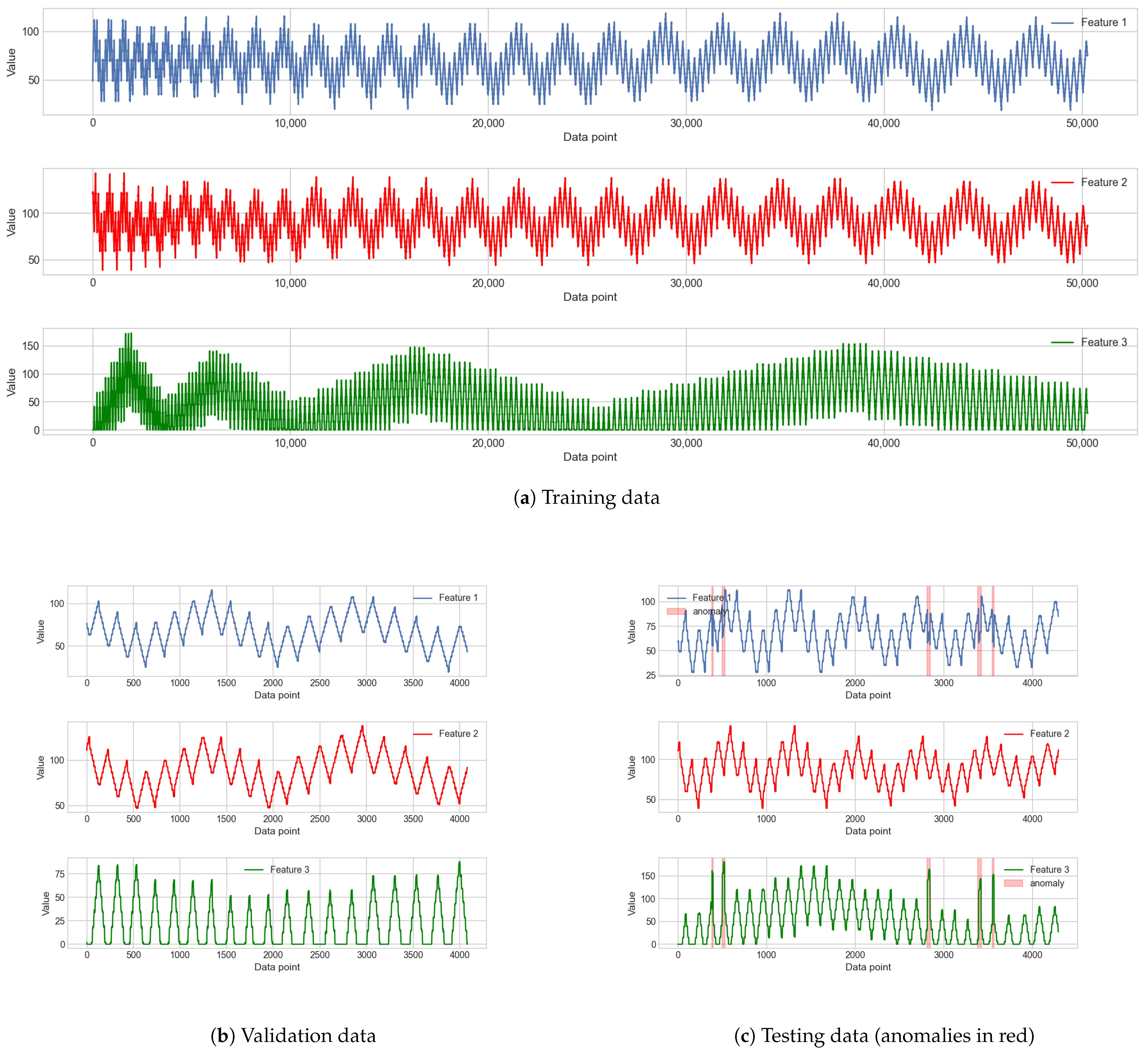

2.6.1. Experimental Design

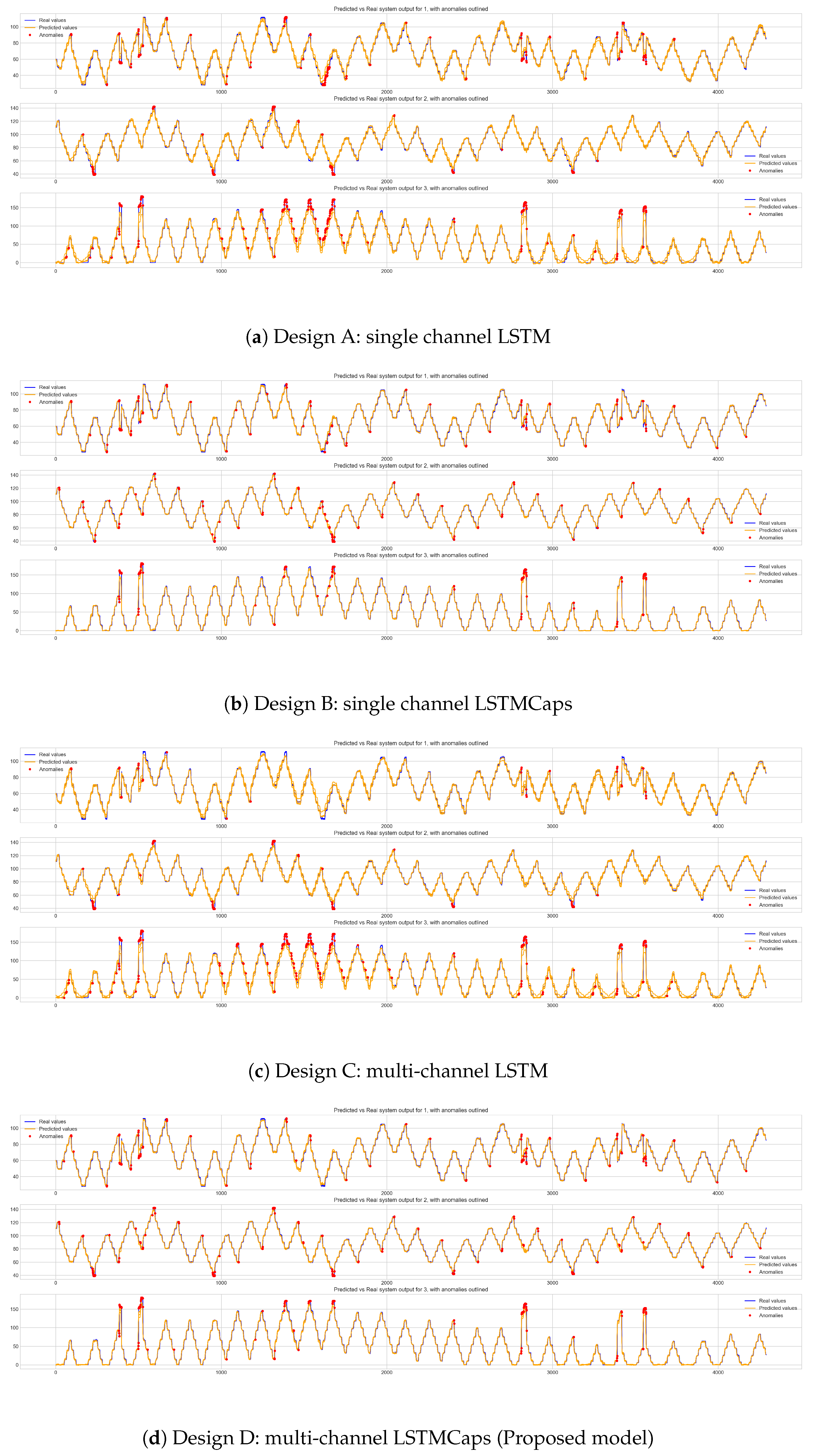

2.6.2. Drone Dataset Anomaly Detection

2.6.3. Drone Dataset Outlier Resilient Anomaly Detection

2.6.4. SKAB Anomaly Benchmark

3. Discussion

4. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Lee, J.; Kao, H.A.; Yang, S. Service innovation and smart analytics for Industry 4.0 and big data environment. Procedia CIRP 2014, 16, 3–8.

2. Kim, H.S.; Park, S.K.; Kim, Y.; Park, C.G. Hybrid Fault Detection and Isolation Method for UAV Inertial Sensor Redundancy Management System. IFAC Proc. Vol. 2005, 16, 265–270.

3. Jafari, M. Optimal redundant sensor configuration for accuracy increasing in space inertial navigation system. Aerosp. Sci. Technol. 2015, 47, 467–472.

4. Dubrova, E. Hardware Redundancy; Springer: New York, NY, USA, 2013; pp. 55–86.

5. Zhang, S.; Lang, Z.Q. SCADA-data-based wind turbine fault detection: A dynamic model sensor method. Control Eng. Pract. 2020, 102, 104546.

6. Zimek, A.; Filzmoser, P. There and back again: Outlier detection between statistical reasoning and data mining algorithms. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2018, 8, 1–26.

7. Gangsar, P.; Tiwari, R. Signal based condition monitoring techniques for fault detection and diagnosis of induction motors: A state-of-the-art review. Mech. Syst. Signal Process. 2020, 144, 106908.

8. Paliwal, M.; Kumar, U.A. Neural networks and statistical techniques: A review of applications. Expert Syst. Appl. 2009, 36, 2–17.

9. Ergen, T.; Kozat, S.S. Unsupervised anomaly detection with LSTM neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 3127–3141.

10. Lin, S.; Clark, R.; Birke, R.; Sch, S. Anomaly Detection for Time Series Using Vae-Lstm Hybrid Model. In Proceedings of the ICASSP 2020–2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 4322–4326.

11. Canizo, M.; Triguero, I.; Conde, A.; Onieva, E. Multi-head CNN–RNN for multi-time series anomaly detection: An industrial case study. Neurocomputing 2019, 363, 246–260.

12. Sabir, R.; Rosato, D.; Hartmann, S.; Gühmann, C. LSTM based Bearing Fault Diagnosis of Electrical Machines using Motor Current Signal. In Proceedings of the 2019 18th IEEE International Conference on Machine Learning And Applications, Boca Raton, FL, USA, 16–19 December 2019; pp. 613–618.

13. Wang, R.; Feng, Z.; Huang, S.; Fang, X.; Wang, J. Research on Voltage Waveform Fault Detection of Miniature Vibration Motor Based on Improved WP-LSTM. Micromachines 2020, 11, 753.

14. Deng, F.; Pu, S.; Chen, X.; Shi, Y.; Yuan, T.; Shengyan, P. Hyperspectral image classification with capsule network using limited training samples. Sensors 2018, 18, 3153.

15. Byerly, A.; Kalganova, T.; Dear, I. No routing needed between capsules. Neurocomputing 2021, 463, 545–553.

16. Nanduri, A.; Sherry, L. Anomaly detection in aircraft data using Recurrent Neural Networks (RNN). In Proceedings of the ICNS 2016: Securing an Integrated CNS System to Meet Future Challenges, Herndon, VA, USA, 19–21 April 2016; pp. 1–8.

17. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

18. Hochreiter, S. The vanishing gradient problem during learning recurrent neural nets and problem solutions. Int. J. Uncertain. Fuzziness Knowledge-Based Syst. 1998, 6, 107–116.

19. Provotar, O.I.; Linder, Y.M.; Veres, M.M. Unsupervised Anomaly Detection in Time Series Using LSTM-Based Autoencoders. In Proceedings of the 2019 IEEE International Conference on Advanced Trends in Information Theory, ATIT 2019–Proceedings, Kyiv, Ukraine, 18–20 December 2019; pp. 513–517.

20. Li, Q.; Cai, W.; Wang, X.; Zhou, Y.; Feng, D.D.; Chen, M. Medical image classification with convolutional neural network. In Proceedings of the 2014 13th International Conference on Control Automation Robotics and Vision, ICARCV 2014, Singapore, 10–12 December 2014; Volume 2014, pp. 844–848.

21. Zhang, M.; Li, W.; Du, Q. Diverse region-based CNN for hyperspectral image classification. IEEE Trans. Image Process. 2018, 27, 2623–2634.

22. Mukhopadhyay, S.; Litoiu, M. Fault Detection in Sensors Using Single and Multi-Channel Weighted Convolutional Neural Networks. In Proceedings of the 10th International Conference on the Internet of Things, Malmö, Sweden, 6–9 October 2020; pp. 1–8.

23. Hsu, C.Y.; Liu, W.C. Multiple time-series convolutional neural network for fault detection and diagnosis and empirical study in semiconductor manufacturing. J. Intell. Manuf. 2021, 32, 823–836.

24. Kim, T.Y.; Cho, S.B. Web traffic anomaly detection using C-LSTM neural networks. Expert Syst. Appl. 2018, 106, 66–76.

25. Sabour, S.; Frosst, N.; Hinton, G.E. Dynamic routing between capsules. Adv. Neural Inf. Process. Syst. 2017, 2017, 3857–3867.

26. Afshar, P.; Mohammadi, A.; Plataniotis, K.N. Brain Tumor Type Classification via Capsule Networks. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 3129–3133.

27. Paoletti, M.E.; Haut, J.M.; Fernandez-Beltran, R.; Plaza, J.; Plaza, A.; Li, J.; Pla, F. Capsule Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 2145–2160.

28. Ma, X.; Zhong, H.; Li, Y.; Ma, J.; Cui, Z.; Wang, Y. Forecasting Transportation Network Speed Using Deep Capsule Networks With Nested LSTM Models. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4813–4824.

29. Huang, R.; Li, J.; Li, W.; Cui, L. Deep Ensemble Capsule Network for Intelligent Compound Fault Diagnosis Using Multisensory Data. IEEE Trans. Instrum. Meas. 2020, 69, 2304–2314.

30. Fahim, S.R.R.; Sarker, S.K.; Muyeen, S.M.; Sheikh, M.R.I.; Das, S.K.; Simoes, M.G. A Robust Self-Attentive Capsule Network for Fault Diagnosis of Series-Compensated Transmission Line. IEEE Trans. Power Deliv. 2021, 8977, 3846–3857.

31. Wang, Z.; Oates, T. Encoding time series as images for visual inspection and classification using tiled convolutional neural networks. AAAI Workshop Tech. Rep. 2015, WS-15-14, 40–46.

32. Shen, L.; Yu, Z.; Ma, Q.; Kwok, J.T. Time Series Anomaly Detection with Multiresolution Ensemble Decoding. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 2–9 February 2021; Volume 35, pp. 9567–9575.

33. Kieu, T.; Yang, B.; Guo, C.; Cirstea, R.G.; Zhao, Y.; Song, Y.; Jensen, C.S. Anomaly Detection in Time Series with Robust Variational Quasi-Recurrent Autoencoders. In Proceedings of the 2022 IEEE 38th International Conference on Data Engineering, Kuala Lumpur, Malaysia, 9–12 May 2022; pp. 1342–1354.

34. Radaideh, M.I.; Pappas, C.; Walden, J.; Lu, D.; Vidyaratne, L.; Britton, T.; Rajput, K.; Schram, M.; Cousineau, S. Time series anomaly detection in power electronics signals with recurrent and ConvLSTM autoencoders. Digit. Signal Process. 2022, 130, 103704.

35. Hinton, G.; Salakhutdinov, R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507.

36. Ahsan, M.M.; Mahmud, M.A.P.; Saha, P.K.; Gupta, K.D.; Siddique, Z. Effect of Data Scaling Methods on Machine Learning Algorithms and Model Performance. Technologies 2021, 9, 52.

37. Fisher, R.A. Statistical Methods for Research Workers. In Breakthroughs in Statistics: Methodology and Distribution; Springer: New York, NY, USA, 1992; pp. 66–70.

38. Sternharz, G.; Skackauskas, J.; Elhalwagy, A.; Grichnik, A.J.; Kalganova, T.; Huda, M.N. Self-Protected Virtual Sensor Network for Microcontroller Fault Detection. Sensors 2022, 22, 454.

39. Van Rijsbergen, C.; Van Rijsbergen, C. Information Retrieval; Butterworths: New York, NY, USA, 1979.

40. Kingma, D.P.; Ba, J.L. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015–Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015; pp. 1–15.

41. Katser, I.D.; Kozitsin, V.O. Skoltech Anomaly Benchmark (SKAB). Kaggle 2020.

42. Lavin, A.; Ahmad, S. Evaluating Real-time Anomaly Detection Algorithms - The Numenta Anomaly Benchmark. CoRR 2015. Available online: http://xxx.lanl.gov/abs/1510.03336 (accessed on 4 June 2021).

43. Reddi, S.J.; Kale, S.; Kumar, S. On the Convergence of Adam and Beyond. arXiv 2019, arXiv:1904.09237.

44. Zhang, C.; Song, D.; Chen, Y.; Feng, X.; Lumezanu, C.; Cheng, W.; Ni, J.; Zong, B.; Chen, H.; Chawla, N.V. A deep neural network for unsupervised anomaly detection and diagnosis in multivariate time series data. In Proceedings of the 33rd AAAI Conference on Artificial Intelligence, AAAI 2019, 31st Innovative Applications of Artificial Intelligence Conference, IAAI 2019 and the 9th AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2019, Honolulu, HI, USA, 27 January–1 February 2019; pp. 1409–1416.

45. Filonov, P.; Lavrentyev, A.; Vorontsov, A. Multivariate Industrial Time Series with Cyber-Attack Simulation: Fault Detection Using an LSTM-based Predictive Data Model. arXiv 2016, arXiv:1612.06676.

46. Chollet, F. Building Autoencoders in Keras. Keras Blog 2016, 14. Available online: https://blog.keras.io/building-autoencoders-in-keras.html (accessed on 3 October 2022).

47. Gross, K.; Singer, R.; Wegerich, S.; Herzog, J.; VanAlstine, R.; Bockhorst, F. Application of a Model-based Fault Detection System to Nuclear Plant Signals. In Proceedings of the International Conference on Intelligent Systems Applications to Power Systems, Las Palmas de Gran Canaria, Spain, 7–9 January 1997; p. 6.

48. Liu, F.T.; Ting, K.M.; Zhou, Z.H. Isolation Forest. In Proceedings of the 2008 Eighth IEEE International Conference on Data Mining, Pisa, Italy, 15–19 December 2008.

49. Vijay, P. Timeseries anomaly detection using an Autoencoder. Keras 2020. Available online: https://keras.io/examples/timeseries/timeseries_anomaly_detection/ (accessed on 3 October 2022).

50. Bowman, S.R.; Vilnis, L.; Vinyals, O.; Dai, A.M.; Jozefowicz, R.; Bengio, S. Generating sentences from a continuous space. In Proceedings of the CoNLL 2016 - 20th SIGNLL Conference on Computational Natural Language Learning, Berlin, Germany, 11–12 August 2016; pp. 10–21.

51. Chen, J.; Sathe, S.; Aggarwal, C.; Turaga, D. Outlier detection with autoencoder ensembles. In Proceedings of the 17th SIAM International Conference on Data Mining, SDM 2017, Houston, TX, USA, 27–29 April 2017; pp. 90–98.

52. Blázquez-García, A.; Conde, A.; Mori, U.; Lozano, J.A. A Review on Outlier/Anomaly Detection in Time Series Data. ACM Comput. Surv. 2021, 54, 1–33.

| Source | Degrees of Freedom (DF) | Sum of Squares (SS) | Mean Square (MS) | F-Ratio | p-Value |
|---|---|---|---|---|---|
| Between Groups | | | | | Right tail |
| Error | | | | | |
| Total | | | | | |
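
For reference, the textbook one-way ANOVA quantities that populate a table of this form are sketched below for $k$ groups and $N$ observations in total. The symbols ($k$, $N$, $n_i$, $x_{ij}$, $\bar{x}_i$, $\bar{x}$) are assumed notation and may differ from the authors' own.

```latex
\begin{aligned}
\mathrm{SS}_{B} &= \sum_{i=1}^{k} n_i\,(\bar{x}_i - \bar{x})^2, &
\mathrm{DF}_{B} &= k - 1, &
\mathrm{MS}_{B} &= \frac{\mathrm{SS}_{B}}{k - 1}, \\
\mathrm{SS}_{E} &= \sum_{i=1}^{k} \sum_{j=1}^{n_i} (x_{ij} - \bar{x}_i)^2, &
\mathrm{DF}_{E} &= N - k, &
\mathrm{MS}_{E} &= \frac{\mathrm{SS}_{E}}{N - k}, \\
\mathrm{SS}_{T} &= \mathrm{SS}_{B} + \mathrm{SS}_{E}, &
\mathrm{DF}_{T} &= N - 1, &
F &= \frac{\mathrm{MS}_{B}}{\mathrm{MS}_{E}}, \qquad
p = P\!\left(F_{k-1,\,N-k} \ge F\right).
\end{aligned}
```

The p-value is the right-tail probability of the $F$ distribution with $(k-1,\,N-k)$ degrees of freedom.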

| Model | Trainable Parameters |
|---|---|
| Design A: single channel LSTM | 25,338 |
| Design B: single channel LSTMCaps | 25,248 |
| Design C: multi-channel LSTM | 25,473 |
| Design D: multi-channel LSTMCaps (proposed) | 24,663 |
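
The four designs have similar parameter budgets. As a hedged illustration of how such totals arise, the sketch below counts the trainable parameters of a standard LSTM layer with four gates. The layer sizes used are hypothetical and do not reproduce the tabulated totals, because the per-layer widths of Designs A to D are not listed here.

```python
def lstm_param_count(input_dim: int, units: int, use_bias: bool = True) -> int:
    """Trainable parameters of one standard LSTM layer.

    Each of the four gates (input, forget, cell candidate, output) has an
    input kernel (input_dim x units), a recurrent kernel (units x units)
    and, optionally, a bias vector (units).
    """
    per_gate = input_dim * units + units * units + (units if use_bias else 0)
    return 4 * per_gate


# Hypothetical sizes, chosen only for illustration.
single_feature = lstm_param_count(input_dim=1, units=32)
eight_features = lstm_param_count(input_dim=8, units=32)
print(single_feature, eight_features)
```

Because the recurrent kernel dominates, widening the input from one feature to eight changes the count far less than changing `units` would.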

| Hyperparameter | Value | Hyperparameter | Value |
|---|---|---|---|
| Epochs | 20 | Loss Function | Huber |
| Batch size | 1024 | Optimiser | Adam [40] |
| Learning rate | 0.0003 | LSTM Activation | tanh |
| Time Steps | 4 | Capsule Activation | relu |
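
The Huber loss listed above is quadratic for small errors and linear for large ones, which limits the pull of outliers during training. A minimal pure-Python sketch follows. The transition point `delta = 1.0` is an assumption (the table does not give it), and the function name is illustrative.

```python
from typing import Sequence


def huber_loss(y_true: Sequence[float], y_pred: Sequence[float], delta: float = 1.0) -> float:
    """Mean Huber loss: quadratic for |error| <= delta, linear beyond it."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for target, prediction in zip(y_true, y_pred):
        err = abs(target - prediction)
        if err <= delta:
            total += 0.5 * err * err            # quadratic region
        else:
            total += delta * (err - 0.5 * delta)  # linear region
    return total / len(y_true)


small = huber_loss([0.0, 0.0], [0.5, -0.5])  # both errors fall in the quadratic region
large = huber_loss([0.0], [3.0])             # error falls in the linear region
print(small, large)
```

With `delta = 1.0`, an error of 3.0 contributes 2.5 instead of the 4.5 that a half-squared error would give.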

| Model | Score | Final Training Loss | Final Validation Loss | Training Time (s) | MSE | % Overfitting | % Val Loss Improvement from Non-Caps |
|---|---|---|---|---|---|---|---|
| Design A | Average over 5 runs | 0.00591 | 0.00639 | 37.75 | 10.37 | 7.45 | N/A - Non-Caps |
| Design A | Best over 5 runs | 0.00589 | 0.00614 | 43.42 | 10.10 | 4.09 | |
| Design A | Standard Deviation | 0.00034 | 0.00046 | 3.22 | 0.85 | 0.04953 | |
| Design B | Average over 5 runs | 0.00158 | 0.00157 | 50.44 | 2.12 | −0.27 | 3.07 |
| Design B | Best over 5 runs | 0.00164 | 0.00159 | 50.71 | 2.15 | −3.15 | 2.87 |
| Design B | Standard Deviation | 0.00006 | 0.00008 | 0.58 | 0.10 | 0.00005 | |
| Design C | Average over 5 runs | 0.00575 | 0.00620 | 56.52 | 10.08 | 7.28 | N/A - Non-Caps |
| Design C | Best over 5 runs | 0.00610 | 0.00709 | 56.09 | 12.41 | 14.01 | |
| Design C | Standard Deviation | 0.00034 | 0.00074 | 0.83 | 1.89 | 0.00034 | |
| Design D (proposed) | Average over 5 runs | 0.00162 | 0.00161 | 143.34 | 2.14 | −0.69 | 2.84 |
| Design D (proposed) | Best over 5 runs | 0.00168 | 0.00172 | 142.59 | 2.32 | 2.52 | 3.12 |
| Design D (proposed) | Standard Deviation | 0.00005 | 0.00006 | 1.63 | 0.11 | 0.00005 | |
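
The two derived columns are not defined in the table itself. The sketch below shows formulas that appear consistent with the tabulated values. They are inferred from the numbers, not stated by the source, so both function definitions should be read as assumptions.

```python
def pct_overfitting(train_loss: float, val_loss: float) -> float:
    """Assumed definition: validation-training gap as a percentage of validation loss."""
    return (val_loss - train_loss) / val_loss * 100.0


def val_loss_improvement(non_caps_val_loss: float, caps_val_loss: float) -> float:
    """Assumed definition: loss reduction in multiples of the Capsule model's loss."""
    return (non_caps_val_loss - caps_val_loss) / caps_val_loss


# Design C, best run: training loss 0.00610, validation loss 0.00709.
overfit_c_best = pct_overfitting(0.00610, 0.00709)
# Design A against Design B, averages: validation loss 0.00639 against 0.00157.
improvement_b_avg = val_loss_improvement(0.00639, 0.00157)
print(round(overfit_c_best, 2), round(improvement_b_avg, 2))
```

Under these assumed definitions the results land close to the tabulated 14.01 and 3.07. Small differences are expected because the tabulated losses are rounded, and averaged rows may have been computed per run before averaging.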

| Model | Score | MAE Threshold 1 | MAE Threshold 2 | MAE Threshold 3 | Precision | Recall | F |
|---|---|---|---|---|---|---|---|
| Design A | Average over 5 runs | 6.13 | 6.06 | 10.81 | 0.37 | 0.59 | 0.45 |
| Design A | Best over 5 runs | 5.60 | 6.70 | 11.90 | 0.40 | 0.63 | 0.49 |
| Design A | Standard Deviation | 0.63 | 0.49 | 0.75 | 0.03 | 0.04 | 0.03 |
| Design B | Average over 5 runs | 4.27 | 4.12 | 9.98 | 0.51 | 0.41 | 0.45 |
| Design B | Best over 5 runs | 4.31 | 4.08 | 10.62 | 0.57 | 0.51 | 0.54 |
| Design B | Standard Deviation | 0.15 | 0.06 | 0.59 | 0.06 | 0.11 | 0.08 |
| Design C | Average over 5 runs | 7.96 | 6.87 | 9.88 | 0.54 | 0.50 | 0.52 |
| Design C | Best over 5 runs | 7.70 | 6.92 | 11.22 | 0.58 | 0.50 | 0.53 |
| Design C | Standard Deviation | 0.71 | 0.64 | 1.01 | 0.05 | 0.01 | 0.02 |
| Design D (proposed) | Average over 5 runs | 4.37 | 4.20 | 9.79 | 0.53 | 0.54 | 0.54 |
| Design D (proposed) | Best over 5 runs | 4.50 | 4.21 | 9.81 | 0.61 | 0.59 | 0.60 |
| Design D (proposed) | Standard Deviation | 0.15 | 0.07 | 0.61 | 0.06 | 0.06 | 0.05 |
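
The F column in this table matches the harmonic mean of the Precision and Recall columns (the F1 score). The short sketch below makes that relation explicit. The helper names and the confusion counts in the second call are illustrative, not taken from the experiments.

```python
from typing import Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision and recall from true-positive, false-positive and false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def f_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (F1)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Design A, average row: precision 0.37, recall 0.59.
f_design_a = f_score(0.37, 0.59)
# Hypothetical counts, for illustration only.
p, r = precision_recall(tp=6, fp=4, fn=2)
print(round(f_design_a, 2), p, r)
```

Because the harmonic mean is dominated by the smaller of the two inputs, a design with balanced precision and recall can outscore one that is strong on only one of them.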

| Source | Degrees of Freedom (DF) | Sum of Squares (SS) | Mean Square (MS) | F-Ratio | p-Value |
|---|---|---|---|---|---|
| Between Groups | 3 | 0.0331 | 0.011 | 3.8412 | 0.0302 |
| Within Groups | 16 | 0.0459 | 0.0029 | | |
| Total: | 19 | 0.079 | | | |
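
The degrees of freedom above are consistent with four designs evaluated over five runs each (3 between groups, 16 within, 19 in total). As a quick consistency check, the sketch below recomputes the mean squares and the F-ratio from the tabulated sums of squares. The function name is illustrative, and the p-value is not recomputed because that would require an F-distribution routine outside the standard library.

```python
from typing import Tuple


def anova_f(ss_between: float, df_between: int,
            ss_within: float, df_within: int) -> Tuple[float, float, float]:
    """Return (MS_between, MS_within, F) for a one-way ANOVA table."""
    ms_between = ss_between / df_between
    ms_within = ss_within / df_within
    return ms_between, ms_within, ms_between / ms_within


ms_b, ms_w, f_ratio = anova_f(0.0331, 3, 0.0459, 16)
print(round(ms_b, 4), round(ms_w, 4), round(f_ratio, 2))
```

The recomputed F-ratio agrees with the tabulated 3.8412 to two significant figures. The remaining gap comes from the sums of squares being rounded to four decimal places in the table.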

| Model | Score | Final Training Loss | Final Validation Loss | Training Time (s) | MSE | % Overfitting | % Val Loss Improvement from Non-Caps |
|---|---|---|---|---|---|---|---|
| Design A | Average over 5 runs | 0.00626 | 0.00667 | 35.89 | 10.61 | 6.12 | N/A–Non-Caps Version |
| Design A | Best over 5 runs | 0.00614 | 0.00653 | 36.53 | 10.19 | 5.98 | |
| Design A | Standard Deviation | 0.00024 | 0.00035 | 0.41 | 0.71 | 0.00035 | |
| Design B | Average over 5 runs | 0.00188 | 0.00155 | 49.77 | 2.08 | −21.24 | 3.31 |
| Design B | Best over 5 runs | 0.00194 | 0.00158 | 49.62 | 2.09 | −22.59 | 3.14 |
| Design B | Standard Deviation | 0.00004 | 0.00003 | 0.23 | 0.04 | 0.00003 | |
| Design C | Average over 5 runs | 0.00575 | 0.00620 | 56.52 | 10.08 | 7.28 | N/A–Non-Caps Version |
| Design C | Best over 5 runs | 0.00610 | 0.00709 | 56.09 | 12.41 | 14.01 | |
| Design C | Standard Deviation | 0.00052 | 0.00071 | 0.83 | 1.13 | 0.00071 | |
| Design D (proposed) | Average over 5 runs | 0.00162 | 0.00161 | 143.34 | 2.14 | −0.69 | 2.84 |
| Design D (proposed) | Best over 5 runs | 0.00168 | 0.00172 | 142.59 | 2.32 | 2.52 | 3.12 |
| Design D (proposed) | Standard Deviation | 0.00007 | 0.00007 | 0.76 | 0.084 | 0.00007 | |

| Model | Score | MAE Threshold 1 | MAE Threshold 2 | MAE Threshold 3 | Precision | Recall | F |
|---|---|---|---|---|---|---|---|
| Design A | Average over 5 runs | 6.34 | 6.65 | 10.91 | 0.37 | 0.59 | 0.45 |
| Design A | Best over 5 runs | 6.20 | 7.56 | 10.87 | 0.37 | 0.67 | 0.48 |
| Design A | Standard Deviation | 0.20 | 0.63 | 0.56 | 0.02 | 0.05 | 0.03 |
| Design B | Average over 5 runs | 4.09 | 4.11 | 9.61 | 0.46 | 0.47 | 0.46 |
| Design B | Best over 5 runs | 3.92 | 3.97 | 9.77 | 0.49 | 0.52 | 0.50 |
| Design B | Standard Deviation | 0.17 | 0.10 | 0.61 | 0.05 | 0.04 | 0.04 |
| Design C | Average over 5 runs | 7.73 | 6.10 | 9.25 | 0.54 | 0.50 | 0.52 |
| Design C | Best over 5 runs | 7.10 | 5.57 | 9.41 | 0.49 | 0.51 | 0.50 |
| Design C | Standard Deviation | 0.47 | 0.36 | 0.39 | 0.03 | 0.01 | 0.01 |
| Design D (proposed) | Average over 5 runs | 4.44 | 4.15 | 9.58 | 0.52 | 0.53 | 0.53 |
| Design D (proposed) | Best over 5 runs | 4.19 | 4.08 | 10.36 | 0.60 | 0.65 | 0.62 |
| Design D (proposed) | Standard Deviation | 0.24 | 0.17 | 0.52 | 0.05 | 0.07 | 0.06 |

| Source | Degrees of Freedom (DF) | Sum of Squares (SS) | Mean Square (MS) | F-Ratio | p-Value |
|---|---|---|---|---|---|
| Between Groups | 3 | 0.0219 | 0.0073 | 5.526 | 0.0085 |
| Within Groups | 16 | 0.0211 | 0.0013 | | |
| Total: | 19 | 0.043 | | | |

| Hyperparameter | LSTMCaps Optimised for Outlier Detection (LSTMCaps Outlier Detector) | LSTMCaps Optimised for Changepoint Detection (LSTMCaps Changepoint Detector) |
|---|---|---|
| Optimiser | Amsgrad [43] | Adam [40] |
| MAE Threshold Multiplier | 0.925 | 0.99 |
| Epochs | 100 | 100 |
| Learning rate | 0.003 | 0.003 |
| Time steps | 3 | 3 |
| Capsule activation | relu | relu |
| LSTM activation | tanh | tanh |
| Validation split | 0.2 | 0.2 |
| Batch size | 128 | 128 |
| Branched layer width | 32 | 32 |
| Full layer width | 256 | 256 |
| Loss function | huber | huber |
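
The MAE threshold multiplier in this table scales a reconstruction-error threshold. How the base threshold is obtained is not stated here, so the sketch below assumes one common autoencoder recipe: take the largest mean absolute error (MAE) seen on training windows, scale it by the multiplier, and flag any test window whose error exceeds the result. All function names and error values are hypothetical.

```python
from typing import List, Sequence


def mean_absolute_error(window: Sequence[float], reconstruction: Sequence[float]) -> float:
    """MAE between an input window and its reconstruction."""
    return sum(abs(a - b) for a, b in zip(window, reconstruction)) / len(window)


def fit_threshold(train_errors: Sequence[float], multiplier: float) -> float:
    """Assumed rule: scale the largest training reconstruction error."""
    return multiplier * max(train_errors)


def flag_anomalies(test_errors: Sequence[float], threshold: float) -> List[bool]:
    """True where the reconstruction error exceeds the threshold."""
    return [err > threshold for err in test_errors]


train_errors = [0.10, 0.12, 0.20, 0.15]  # hypothetical MAE per training window
threshold = fit_threshold(train_errors, multiplier=0.925)
flags = flag_anomalies([0.05, 0.19, 0.30], threshold)
print(threshold, flags)
```

Under this assumed rule, a multiplier below 1 lowers the threshold, which flags more windows and trades false alarms for fewer missed anomalies.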

| Algorithm | F | FAR, % | MAR, % | NAB (Standard) | NAB (LowFP) | NAB (LowFN) | Overall Accuracy |
|---|---|---|---|---|---|---|---|
| Perfect score | 1 | 0 | 0 | 100 | 100 | 100 | 1 |
| LSTMCaps Changepoint Detector (Proposed) | 0.71 | 14.45 | 30.86 | 27.39 | 17.08 | 31.13 | 0.49195 |
| MSCRED [44] | 0.7 | 16.82 | 31.28 | 26.13 | 17.81 | 29.53 | 0.48065 |
| LSTMCaps Outlier Detector (Proposed) | 0.74 | 21.66 | 18.74 | 21.58 | 5.12 | 27.49 | 0.4779 |
| LSTM [45] | 0.65 | 14.89 | 39.4 | 26.61 | 11.78 | 32 | 0.45805 |
| LSTM-AE [46] | 0.64 | 14.81 | 39.5 | 22.97 | 20.95 | 23.93 | 0.43485 |
| MSET [47] | 0.73 | 20.82 | 20.08 | 12.71 | 11.04 | 13.6 | 0.42855 |
| Isolation forest [48] | 0.4 | 6.86 | 72.09 | 37.53 | 17.09 | 45.02 | 0.38765 |
| Conv-AE [49] | 0.66 | 5.57 | 46.16 | 11.12 | 10.35 | 11.77 | 0.3856 |
| LSTM-VAE [50] | 0.56 | 9.04 | 54.75 | 21.09 | 17.52 | 22.73 | 0.38545 |
| Autoencoder [51] | 0.45 | 7.52 | 66.59 | 15.65 | 0.48 | 21 | 0.30325 |
| Null score | 0 | 100 | 100 | 0 | 0 | 0 | 0 |
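
The Overall Accuracy column is numerically consistent with the mean of the F score and the NAB (Standard) score rescaled to the range 0 to 1. That relation is inferred from the tabulated values, not quoted from the source, so the function below is an assumption. It is checked against three rows of the table.

```python
def overall_accuracy(f_score: float, nab_standard: float) -> float:
    """Assumed definition: mean of F (0..1) and NAB standard score rescaled from 0..100."""
    return (f_score + nab_standard / 100.0) / 2.0


# (F, NAB standard, tabulated Overall Accuracy) copied from the table rows.
rows = {
    "LSTMCaps Changepoint Detector": (0.71, 27.39, 0.49195),
    "MSCRED": (0.70, 26.13, 0.48065),
    "Isolation forest": (0.40, 37.53, 0.38765),
}

for name, (f, nab, tabulated) in rows.items():
    print(name, round(overall_accuracy(f, nab), 5), tabulated)
```

A combined score of this kind rewards detectors that do well on both point-wise outlier detection (F) and changepoint detection (NAB), which is why Isolation forest ranks low despite the highest NAB (Standard) score.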

| Algorithm | F | FAR, % | MAR, % | NAB (Standard) | NAB (LowFP) | NAB (LowFN) | Overall Accuracy |
|---|---|---|---|---|---|---|---|
| Perfect score | 1 | 0 | 0 | 100 | 100 | 100 | 1 |
| LSTMCaps Changepoint Detector (Proposed) | 0.71 | 14.51 | 30.59 | 27.77 | 17.14 | 31.59 | 0.494 |
| LSTMCaps Anomaly Detector (Proposed) | 0.74 | 21.5 | 18.74 | 24.02 | 8.14 | 29.6 | 0.490 |
| MSCRED [44] | 0.7 | 16.2 | 30.87 | 24.99 | 17.9 | 27.94 | 0.475 |
| LSTM [45] | 0.67 | 15.42 | 36.02 | 26.76 | 12.92 | 31.93 | 0.468 |
| LSTM-AE [46] | 0.65 | 14.59 | 39.42 | 24.77 | 22.69 | 25.75 | 0.449 |
| MSET [47] | 0.73 | 20.82 | 20.08 | 12.71 | 11.04 | 13.6 | 0.429 |
| LSTM-VAE [50] | 0.56 | 9.2 | 54.81 | 21.92 | 18.45 | 23.59 | 0.390 |
| Isolation forest [48] | 0.4 | 6.86 | 72.09 | 37.53 | 17.09 | 45.02 | 0.388 |
| Conv-AE [49] | 0.66 | 5.58 | 46.05 | 11.21 | 10.45 | 11.83 | 0.386 |
| Autoencoder [51] | 0.45 | 7.55 | 66.57 | 16.27 | 1.04 | 21.62 | 0.306 |
| Null score | 0 | 100 | 100 | 0 | 0 | 0 | 0 |
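
FAR and MAR in this table are the false alarm rate and missing alarm rate. Assuming the usual definitions, FAR is the share of normal points flagged as anomalous and MAR is the share of anomalous points that go undetected. The sketch below computes both from confusion counts. The counts are hypothetical and are not taken from the benchmark.

```python
from typing import Tuple


def far_mar(tp: int, fp: int, tn: int, fn: int) -> Tuple[float, float]:
    """False alarm rate and missing alarm rate, both in percent."""
    far = 100.0 * fp / (fp + tn) if fp + tn else 0.0
    mar = 100.0 * fn / (fn + tp) if fn + tp else 0.0
    return far, mar


# Hypothetical confusion counts, for illustration only.
far, mar = far_mar(tp=70, fp=15, tn=85, fn=30)
print(far, mar)
```

MAR is the complement of recall, so a detector tuned for low MAR (such as the proposed outlier detector) typically accepts a higher FAR in exchange.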

| Source | Degrees of Freedom (DF) | Sum of Squares (SS) | Mean Square (MS) | F-Ratio | p-Value |
|---|---|---|---|---|---|
| Between Groups | 9 | 0.1537 | 0.0171 | 238.2433 | 0 |
| Within Groups | 40 | 0.0029 | 0.0001 | | |
| Total: | 49 | 0.1565 | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Elhalwagy, A.; Kalganova, T. Multi-Channel LSTM-Capsule Autoencoder Network for Anomaly Detection on Multivariate Data. Appl. Sci. 2022, 12, 11393. https://doi.org/10.3390/app122211393
