Learning from Multiple Instances: A Two-Stage Unsupervised Image Denoising Framework Based on Deep Image Prior

Abstract

1. Introduction

2. Related Work

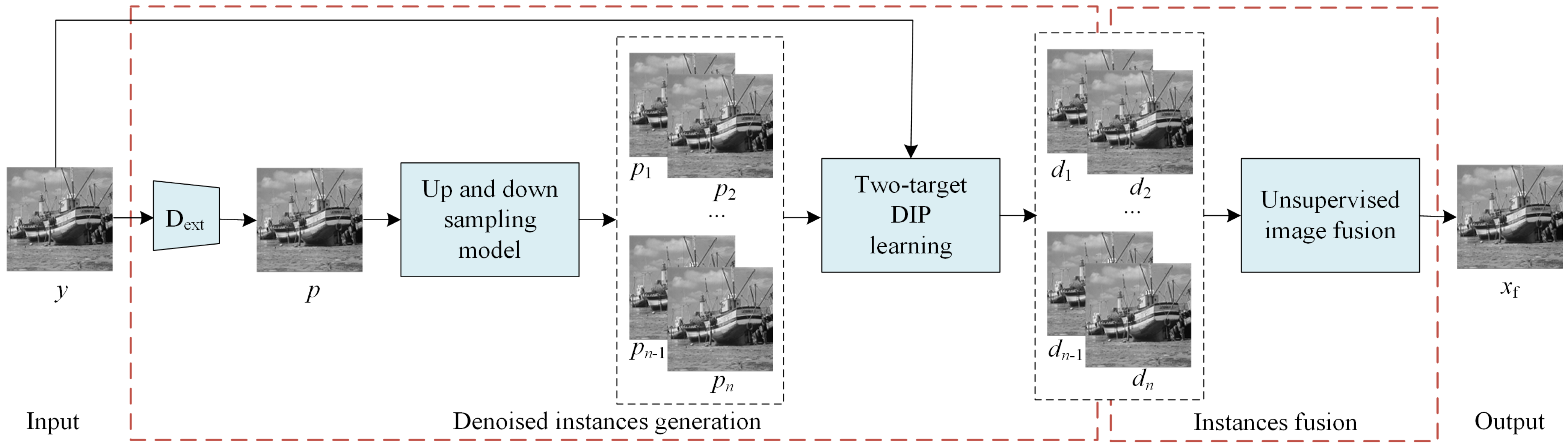

3. Methodology

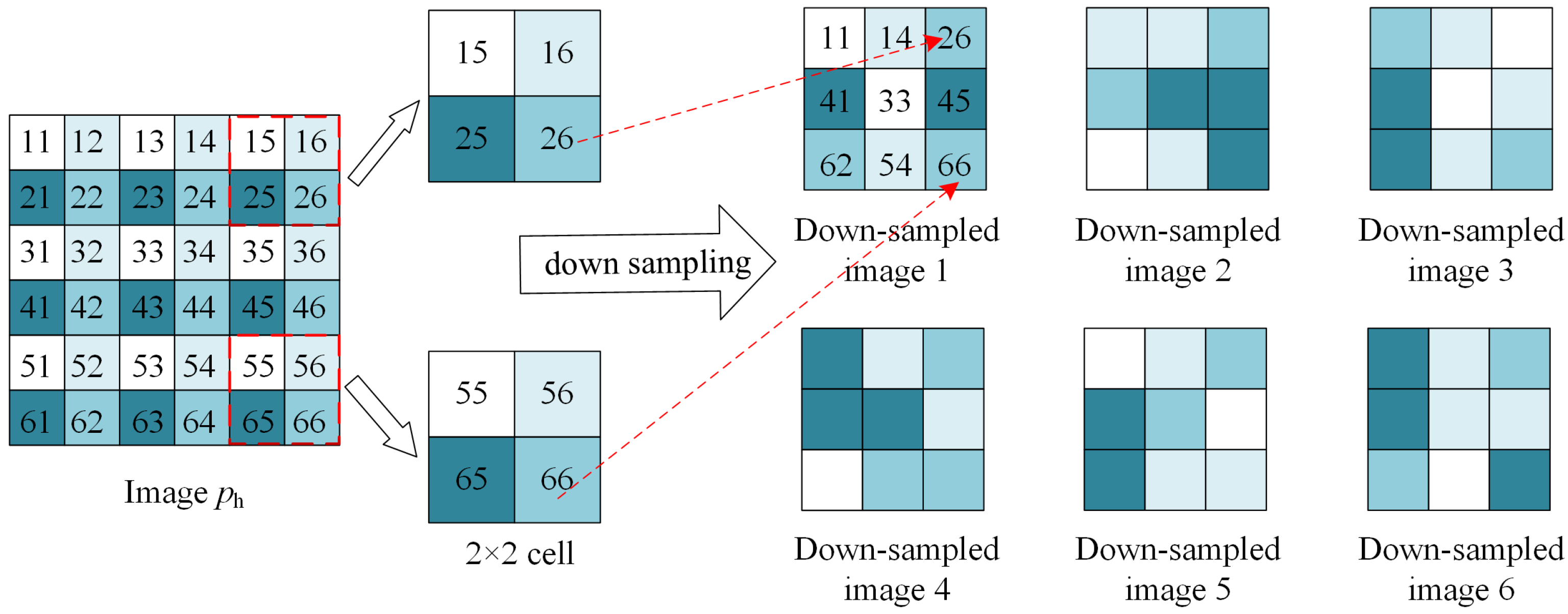

3.1. Preliminary Images Generation

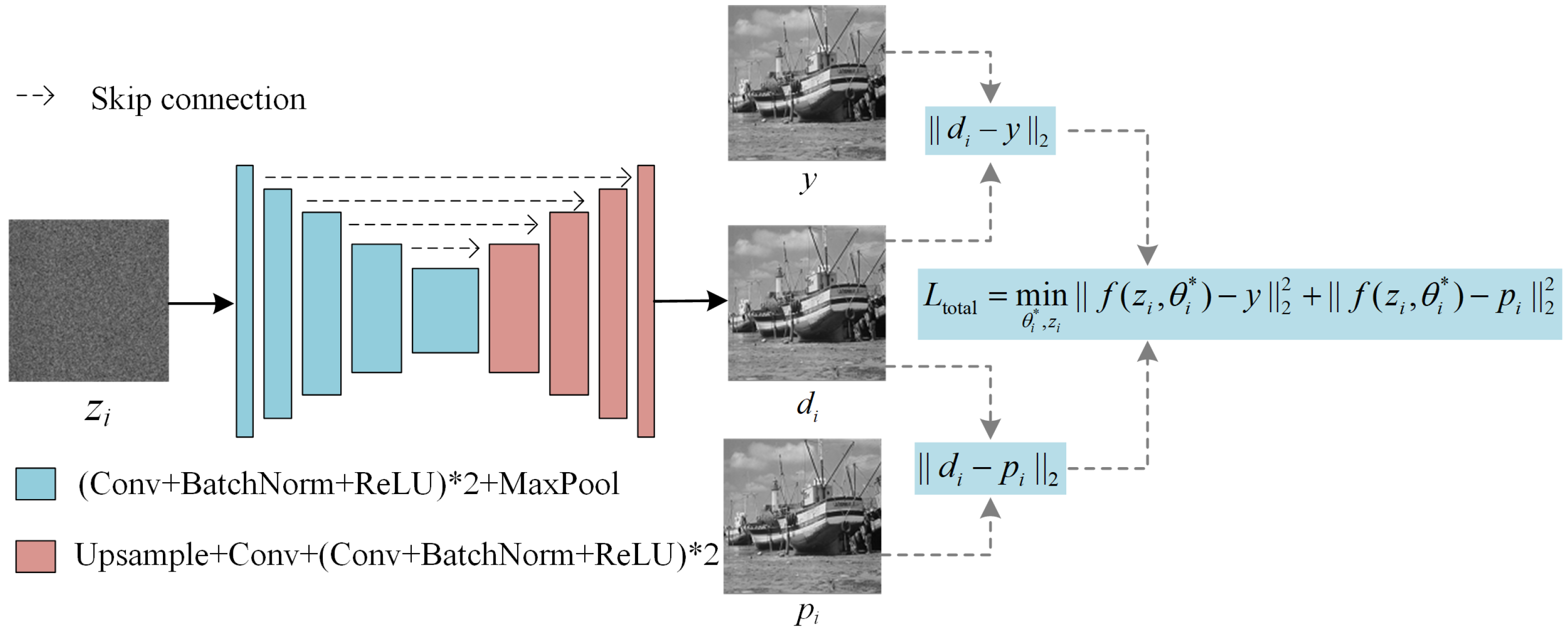

3.2. Two-Target DIP Learning

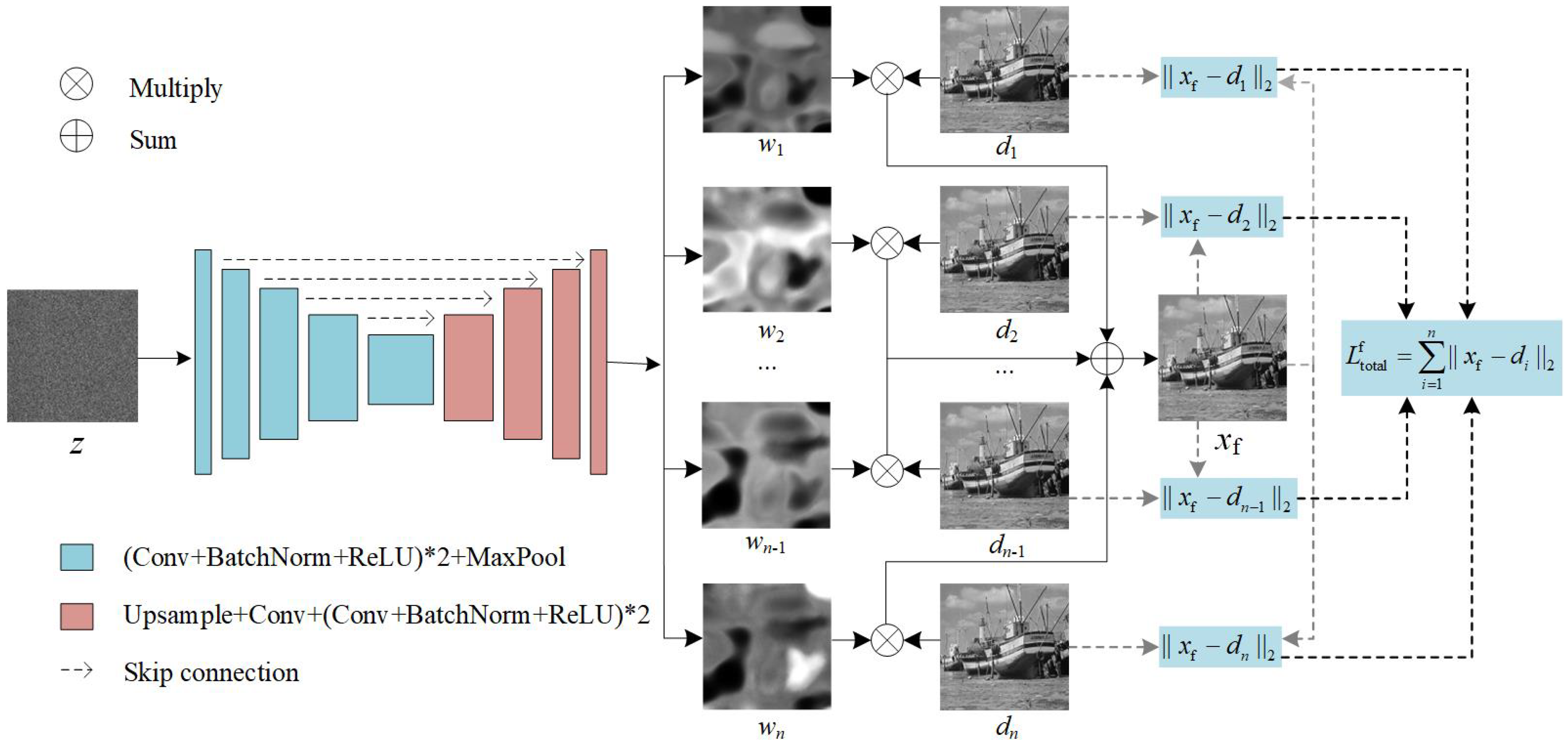

3.3. Unsupervised Image Fusion

4. Experiments

4.1. Experimental Setting

4.2. Ablation Experiments

4.3. Gray Image Denoising

4.4. Color Image Denoising

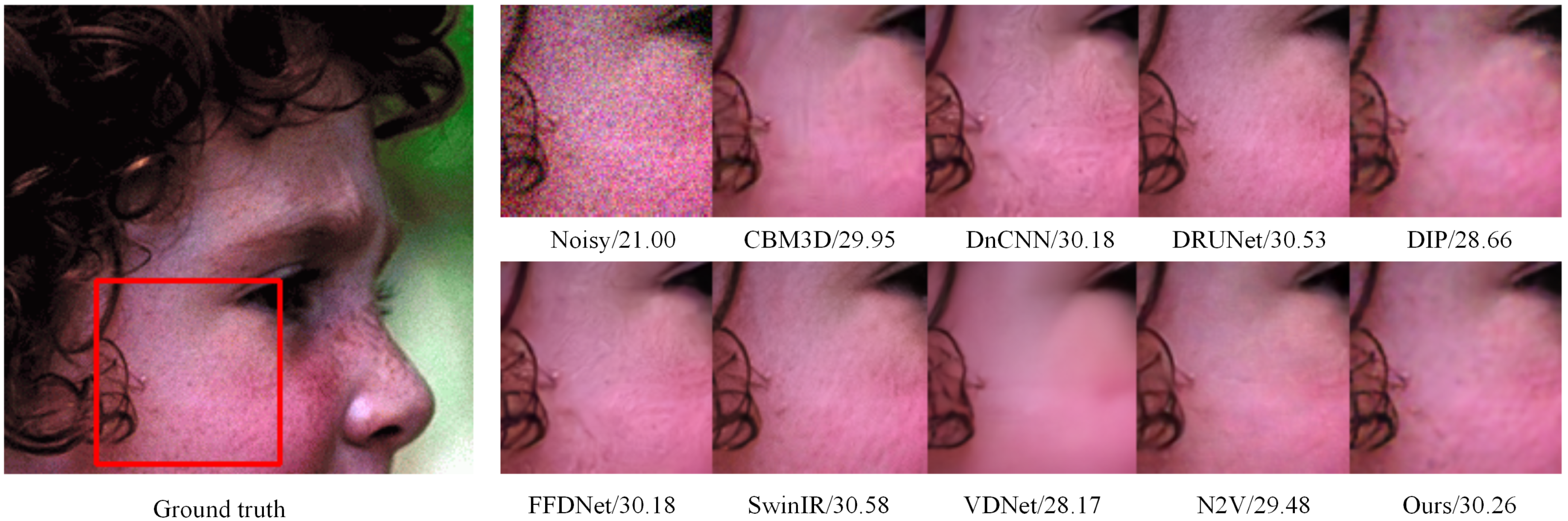

4.5. Visual Comparison

4.6. Real-Image Denoising

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

- Buades, A.; Coll, B.; Morel, J.M. A non-local algorithm for image denoising. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; pp. 60–65.

- Gu, S.; Zhang, L.; Zuo, W.; Feng, X. Weighted nuclear norm minimization with application to image denoising. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2862–2869.

- Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a gaussian denoiser: Residual learning of deep CNN for image denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.

- Zhang, K.; Zuo, W.; Zhang, L. FFDNet: Toward a fast and flexible solution for CNN-based image denoising. IEEE Trans. Image Process. 2018, 27, 4608–4622.

- Zhong, Y.; Liu, L.; Zhao, D.; Li, H. A generative adversarial network for image denoising. Multimed. Tools Appl. 2020, 79, 16517–16529.

- Guo, S.; Yan, Z.; Zhang, K.; Zuo, W.; Zhang, L. Toward convolutional blind denoising of real photographs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1712–1722.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; pp. 234–241.

- Liu, D.; Wen, B.; Fan, Y.; Loy, C.C.; Huang, T.S. Non-local recurrent network for image restoration. In Proceedings of the 32nd Conference on Neural Information Processing Systems (NeurIPS), Montreal, PQ, Canada, 2–8 December 2018; pp. 1673–1682.

- Liu, P.; Zhang, H.; Zhang, K.; Lin, L.; Zuo, W. Multi-level wavelet-CNN for image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 886–895.

- Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Gool, L.V.; Timofte, R. SwinIR: Image restoration using swin transformer. arXiv 2021, arXiv:2108.10257.

- Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H. Restormer: Efficient transformer for high-resolution image restoration. arXiv 2021, arXiv:2111.09881.

- Jagatap, G.; Hegde, C. High dynamic range imaging using deep image priors. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 9289–9293.

- Sun, H.; Peng, L.; Zhang, H.; He, Y.; Cao, S.; Lu, L. Dynamic PET image denoising using deep image prior combined with regularization by denoising. IEEE Access 2021, 9, 52378–52392.

- Lehtinen, J.; Munkberg, J.; Hasselgren, J.; Laine, S.; Karras, T.; Aittala, M.; Aila, T. Noise2noise: Learning image restoration without clean data. arXiv 2018, arXiv:1803.04189.

- Krull, A.; Buchholz, T.O.; Jug, F. Noise2void-learning denoising from single noisy images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2129–2137.

- Batson, J.; Royer, L. Noise2self: Blind denoising by self-supervision. In Proceedings of the International Conference on Machine Learning (ICML), Long Beach, CA, USA, 10–15 June 2019; pp. 524–533.

- Moran, N.; Schmidt, D.; Zhong, Y.; Coady, P. Noisier2noise: Learning to denoise from unpaired noisy data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 12061–12069.

- Xu, J.; Huang, Y.; Cheng, M.M.; Liu, L.; Zhu, F.; Xu, Z.; Shao, L. Noisy-as-clean: Learning self-supervised from corrupted image. IEEE Trans. Image Process. 2020, 29, 9316–9329.

- Pang, T.; Zheng, H.; Quan, Y.; Ji, H. Recorrupted-to-recorrupted: Unsupervised deep learning for image denoising. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2043–2052.

- Quan, Y.; Chen, M.; Pang, T.; Ji, H. Self2self with dropout: Learning self-supervised denoising from single image. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 1887–1895.

- Huang, T.; Li, S.; Jia, X.; Lu, H.; Liu, J. Neighbor2neighbor: Self-supervised denoising from single noisy images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 14781–14790.

- Ulyanov, D.; Vedaldi, A.; Lempitsky, V. Deep image prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9446–9454.

- Haris, M.; Shakhnarovich, G.; Ukita, N. Deep back-projection networks for single image super-resolution. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 4323–4337.

- Mou, C.; Zhang, J.; Wu, Z.Y. Dynamic attentive graph learning for image restoration. arXiv 2021, arXiv:2109.06620.

- Ren, C.; He, X.; Wang, C.; Zhao, Z. Adaptive consistency prior based deep network for image denoising. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 8592–8602.

- Zhang, K.; Li, Y.; Zuo, W.; Zhang, L.; Gool, L.V.; Timofte, R. Plug-and-play image restoration with deep denoiser prior. arXiv 2020, arXiv:2008.13751.

- Zhang, K.; Zuo, W.; Gu, S.; Zhang, L. Learning deep CNN denoiser prior for image restoration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3929–3938.

- Bevilacqua, M.; Roumy, A.; Guillemot, C.; Alberi-Morel, M. Low-complexity single-image super-resolution based on nonnegative neighbor embedding. In Proceedings of the British Machine Vision Conference (BMVC), Guildford, UK, 3–7 September 2012; pp. 135.1–135.10.

- Martin, D.; Fowlkes, C.; Tal, D.; Malik, J. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings of the IEEE International Conference on Computer Vision, Vancouver, BC, Canada, 7–14 July 2001; pp. 416–423.

- Xu, S.; Chen, X.; Luo, J.; Cheng, X.; Xiao, N. An unsupervised fusion network for boosting denoising performance. J. Vis. Commun. Image Represent. 2022, 88, 103626.

- Yu, L.; Luo, J.; Xu, S.; Chen, X.; Xiao, N. An unsupervised weight map generative network for pixel-level combination of image denoisers. Appl. Sci. 2022, 12, 6227.

- Yue, Z.; Yong, H.; Zhao, Q.; Meng, D.; Zhang, L. Variational denoising network: Toward blind noise modeling and removal. In Proceedings of the 33rd Conference on Neural Information Processing Systems (NeurIPS), Vancouver, BC, Canada, 8–14 December 2019.

- Guo, X.; Yu, L.; Ling, H. LIME: Low-light image enhancement via illumination map estimation. IEEE Trans. Image Process. 2017, 26, 982–993.

- Cai, J.; Gu, S.; Zhang, L. Learning a deep single image contrast enhancer from multi-exposure images. IEEE Trans. Image Process. 2018, 27, 2049–2062.

- Nam, S.; Hwang, Y.; Matsushita, Y.; Kim, S.J. A holistic approach to cross-channel image noise modeling and its application to image denoising. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1683–1691.

- Yang, Q.; Yan, P.; Zhang, Y.; Yu, H.; Shi, Y.; Mou, X.; Kalra, M.K.; Zhang, Y.; Sun, L.; Wang, G. Low-dose CT image denoising using a generative adversarial network with wasserstein distance and perceptual loss. IEEE Trans. Med. Imaging 2018, 37, 1348–1357.

| Target Image | Noisy | Noisy+ | |
|---|---|---|---|
| PSNR | | | |

| Instance Numbers | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|
| PSNR | 30.86 | 30.95 | 31.02 | 31.06 | 31.09 | 31.10 | 31.11 |

| Method | Set12 σ=15 | Set12 σ=25 | Set12 σ=50 | Set12 Avg. | BSD68 σ=15 | BSD68 σ=25 | BSD68 σ=50 | BSD68 Avg. |
|---|---|---|---|---|---|---|---|---|
| BM3D | 32.36 | 29.96 | 26.70 | 29.67 | 31.15 | 28.57 | 25.58 | 28.43 |
| WNNM | 32.71 | 30.26 | 26.88 | 29.95 | 31.35 | 28.80 | 25.81 | 28.65 |
| DnCNN | 32.67 | 30.35 | 27.18 | 30.07 | 31.62 | 29.14 | 26.18 | 28.98 |
| FFDNet | 32.75 | 30.43 | 27.32 | 30.17 | 31.65 | 29.17 | 26.24 | 29.02 |
| DAGL | 33.19 | 30.86 | 27.75 | 30.60 | 31.81 | 29.33 | 26.37 | 29.17 |
| DeamNet | 33.13 | 30.78 | 27.72 | 30.55 | 31.83 | 29.35 | 26.42 | 29.20 |
| DRUNet | 33.25 | 30.94 | 27.90 | 30.69 | 31.86 | 29.39 | 26.49 | 29.25 |
| IRCNN | 32.76 | 30.37 | 27.12 | 30.08 | 31.65 | 29.13 | 26.14 | 28.97 |
| SwinIR | 33.36 | 31.01 | 27.91 | 30.76 | 31.91 | 29.41 | 26.47 | 29.26 |
| DIP | 31.31 | 28.92 | 25.57 | 28.60 | 30.05 | 27.47 | 24.25 | 27.26 |
| N2V | 30.86 | 29.46 | 25.83 | 28.71 | 29.38 | 27.81 | 25.37 | 27.52 |
| Yu2022 | 34.05 | 31.58 | 28.11 | 31.24 | 32.92 | 30.17 | 26.92 | 30.00 |
| Xu2022 | 33.27 | 31.13 | 28.10 | 30.83 | 32.24 | 30.00 | 26.48 | 29.57 |
| Ours | 33.36 | 31.02 | 27.71 | 30.70 | 32.36 | 29.66 | 26.30 | 29.44 |

| Noise level (σ) | Image | BM3D | WNNM | DnCNN | FFDNet | DAGL | DeamNet | DRUNet | IRCNN | SwinIR | DIP | N2V | Ours |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 15 | C.man | 31.90 | 32.19 | 32.14 | 32.42 | 32.69 | 32.76 | 32.91 | 32.53 | 33.04 | 30.90 | 29.09 | 32.75 |
| 15 | House | 34.92 | 35.14 | 34.96 | 35.01 | 35.76 | 35.68 | 35.83 | 34.88 | 35.91 | 34.04 | 34.22 | 35.55 |
| 15 | Peppers | 32.71 | 32.98 | 33.09 | 33.10 | 33.41 | 33.42 | 33.56 | 33.21 | 33.63 | 31.85 | 31.39 | 33.89 |
| 15 | Starfish | 31.12 | 31.82 | 31.92 | 32.02 | 32.61 | 32.50 | 32.44 | 31.96 | 32.57 | 30.98 | 30.62 | 33.04 |
| 15 | Monarch | 31.95 | 32.71 | 33.08 | 32.77 | 33.48 | 33.51 | 33.61 | 32.98 | 33.68 | 31.52 | 30.84 | 33.51 |
| 15 | Airplane | 31.10 | 31.41 | 31.54 | 31.58 | 31.95 | 31.90 | 31.99 | 31.66 | 32.08 | 30.41 | 27.99 | 32.57 |
| 15 | Parrot | 31.31 | 31.64 | 31.64 | 31.77 | 31.96 | 32.01 | 32.13 | 31.88 | 32.16 | 30.51 | 28.35 | 32.29 |
| 15 | Lena | 34.23 | 34.37 | 34.52 | 34.63 | 34.84 | 34.86 | 34.93 | 34.50 | 34.97 | 33.37 | 33.69 | 35.28 |
| 15 | Barbara | 33.04 | 33.59 | 32.03 | 32.50 | 33.73 | 33.16 | 33.44 | 32.41 | 33.98 | 29.62 | 31.15 | 32.25 |
| 15 | Boat | 32.09 | 32.29 | 32.36 | 32.35 | 32.64 | 32.62 | 32.71 | 32.36 | 32.81 | 31.05 | 31.34 | 33.09 |
| 15 | Man | 31.90 | 32.14 | 32.37 | 32.40 | 32.52 | 32.52 | 32.61 | 32.36 | 32.67 | 30.76 | 31.01 | 33.02 |
| 15 | Couple | 32.04 | 32.19 | 32.38 | 32.45 | 32.67 | 32.68 | 32.78 | 32.37 | 32.83 | 30.75 | 30.58 | 33.10 |
| 25 | C.man | 29.38 | 29.66 | 30.03 | 30.06 | 30.32 | 30.38 | 30.61 | 30.12 | 30.69 | 28.46 | 28.04 | 30.25 |
| 25 | House | 32.86 | 33.20 | 33.04 | 33.27 | 33.80 | 33.73 | 33.93 | 33.02 | 33.91 | 31.98 | 32.43 | 34.00 |
| 25 | Peppers | 30.20 | 30.42 | 30.73 | 30.79 | 31.04 | 31.09 | 31.22 | 30.81 | 31.28 | 29.22 | 29.19 | 31.57 |
| 25 | Starfish | 28.52 | 29.04 | 29.24 | 29.33 | 30.09 | 29.89 | 29.88 | 29.21 | 29.96 | 28.41 | 29.19 | 30.52 |
| 25 | Monarch | 29.30 | 29.85 | 30.37 | 30.14 | 30.72 | 30.72 | 30.89 | 30.20 | 30.95 | 28.82 | 29.03 | 31.04 |
| 25 | Airplane | 28.42 | 28.71 | 29.06 | 29.05 | 29.41 | 29.30 | 29.35 | 29.05 | 29.44 | 27.71 | 27.03 | 29.98 |
| 25 | Parrot | 28.94 | 29.16 | 29.35 | 29.43 | 29.54 | 29.60 | 29.72 | 29.47 | 29.75 | 28.33 | 27.78 | 29.81 |
| 25 | Lena | 32.07 | 32.24 | 32.40 | 32.59 | 32.84 | 32.85 | 32.97 | 32.40 | 32.98 | 31.34 | 31.80 | 33.29 |
| 25 | Barbara | 30.66 | 31.24 | 29.67 | 29.98 | 31.49 | 30.74 | 31.23 | 29.93 | 31.69 | 26.63 | 29.03 | 29.36 |
| 25 | Boat | 29.87 | 30.04 | 30.19 | 30.23 | 30.49 | 30.48 | 30.58 | 30.17 | 30.64 | 28.91 | 29.49 | 30.97 |
| 25 | Man | 29.59 | 29.79 | 30.06 | 30.10 | 30.20 | 30.17 | 30.30 | 30.02 | 30.32 | 28.71 | 29.49 | 30.65 |
| 25 | Couple | 29.70 | 29.83 | 30.05 | 30.18 | 30.38 | 30.44 | 30.56 | 30.05 | 30.57 | 28.48 | 29.28 | 30.78 |
| 50 | C.man | 26.19 | 26.24 | 27.26 | 27.03 | 27.29 | 27.42 | 27.80 | 27.16 | 27.79 | 24.67 | 24.66 | 27.06 |
| 50 | House | 29.57 | 30.16 | 29.91 | 30.43 | 31.04 | 31.16 | 31.26 | 29.91 | 31.11 | 28.20 | 29.00 | 30.78 |
| 50 | Peppers | 26.72 | 26.79 | 27.35 | 27.43 | 27.60 | 27.76 | 27.87 | 27.33 | 27.91 | 25.85 | 25.82 | 27.93 |
| 50 | Starfish | 24.90 | 25.29 | 25.60 | 25.77 | 26.46 | 26.47 | 26.49 | 25.48 | 26.55 | 24.61 | 25.23 | 26.77 |
| 50 | Monarch | 25.71 | 26.05 | 26.84 | 26.88 | 27.30 | 27.18 | 27.31 | 26.66 | 27.31 | 25.61 | 25.81 | 27.65 |
| 50 | Airplane | 25.17 | 25.26 | 25.82 | 25.90 | 26.21 | 26.07 | 26.08 | 25.78 | 26.14 | 24.65 | 24.78 | 26.57 |
| 50 | Parrot | 25.87 | 25.98 | 26.48 | 26.58 | 26.66 | 26.71 | 26.92 | 26.48 | 26.91 | 24.74 | 22.69 | 26.85 |
| 50 | Lena | 29.02 | 29.16 | 29.34 | 29.68 | 29.92 | 29.93 | 30.15 | 29.36 | 30.11 | 28.11 | 28.39 | 30.27 |
| 50 | Barbara | 27.21 | 27.46 | 26.32 | 26.48 | 28.26 | 27.60 | 28.16 | 26.17 | 28.41 | 23.41 | 23.97 | 25.45 |
| 50 | Boat | 26.73 | 26.85 | 27.18 | 27.32 | 27.56 | 27.59 | 27.66 | 27.17 | 27.70 | 25.65 | 26.57 | 27.86 |
| 50 | Man | 26.79 | 26.83 | 27.17 | 27.30 | 27.39 | 27.36 | 27.46 | 27.14 | 27.45 | 26.10 | 26.79 | 27.75 |
| 50 | Couple | 26.47 | 26.53 | 26.87 | 27.07 | 27.33 | 27.43 | 27.59 | 26.86 | 27.53 | 25.22 | 26.21 | 27.51 |

| Method | PSNR (σ=15) | PSNR (σ=25) | PSNR (σ=50) | PSNR (Avg.) |
|---|---|---|---|---|
| DnCNN | 34.29 | 32.12 | 29.25 | 31.89 |
| FFDNet | 34.31 | 32.12 | 29.27 | 31.90 |
| CBM3D | 34.03 | 31.62 | 28.66 | 31.44 |
| VDNet | 29.94 | 29.03 | 27.74 | 28.90 |
| DRUNet | 34.89 | 32.71 | 29.84 | 32.48 |
| SwinIR | 35.06 | 32.81 | 29.89 | 32.59 |
| DIP | 32.84 | 30.24 | 25.84 | 29.64 |
| N2V | 31.90 | 30.73 | 27.45 | 30.03 |
| Ours | 34.78 | 32.53 | 29.01 | 32.10 |
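
All of the tables above report PSNR. As a reading aid, the following is a minimal sketch of the standard PSNR definition and of how an "Avg." column can be obtained as the arithmetic mean over noise levels. It is not taken from the authors' code: the function names `psnr` and `average_psnr`, the flattened-list image representation, and the default peak value of 255 (8-bit images) are assumptions made for illustration.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], estimate: Sequence[float],
         peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two flattened images.

    `peak` is the maximum possible pixel value (assumed 255 for 8-bit data).
    """
    if len(reference) != len(estimate):
        raise ValueError("images must have the same number of pixels")
    if len(reference) == 0:
        raise ValueError("images must not be empty")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(peak ** 2 / mse)


def average_psnr(values: Sequence[float]) -> float:
    """Arithmetic mean of several PSNR values (hypothetical helper)."""
    return sum(values) / len(values)


if __name__ == "__main__":
    clean = [100.0, 100.0, 100.0, 100.0]
    denoised = [110.0, 110.0, 110.0, 110.0]
    print(f"PSNR: {psnr(clean, denoised):.2f} dB")
    # Mean of the three BM3D Set12 values listed in the table.
    print(f"Avg.: {average_psnr([32.36, 29.96, 26.70]):.2f}")
```

Averaging the per-level values in this way reproduces most of the reported "Avg." entries to within rounding, which suggests (but does not confirm) that the columns were computed as a simple mean.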

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xu, S.; Chen, X.; Tang, Y.; Jiang, S.; Cheng, X.; Xiao, N. Learning from Multiple Instances: A Two-Stage Unsupervised Image Denoising Framework Based on Deep Image Prior. Appl. Sci. 2022, 12, 10767. https://doi.org/10.3390/app122110767
