Partitioning DNNs for Optimizing Distributed Inference Performance on Cooperative Edge Devices: A Genetic Algorithm Approach

Abstract

1. Introduction

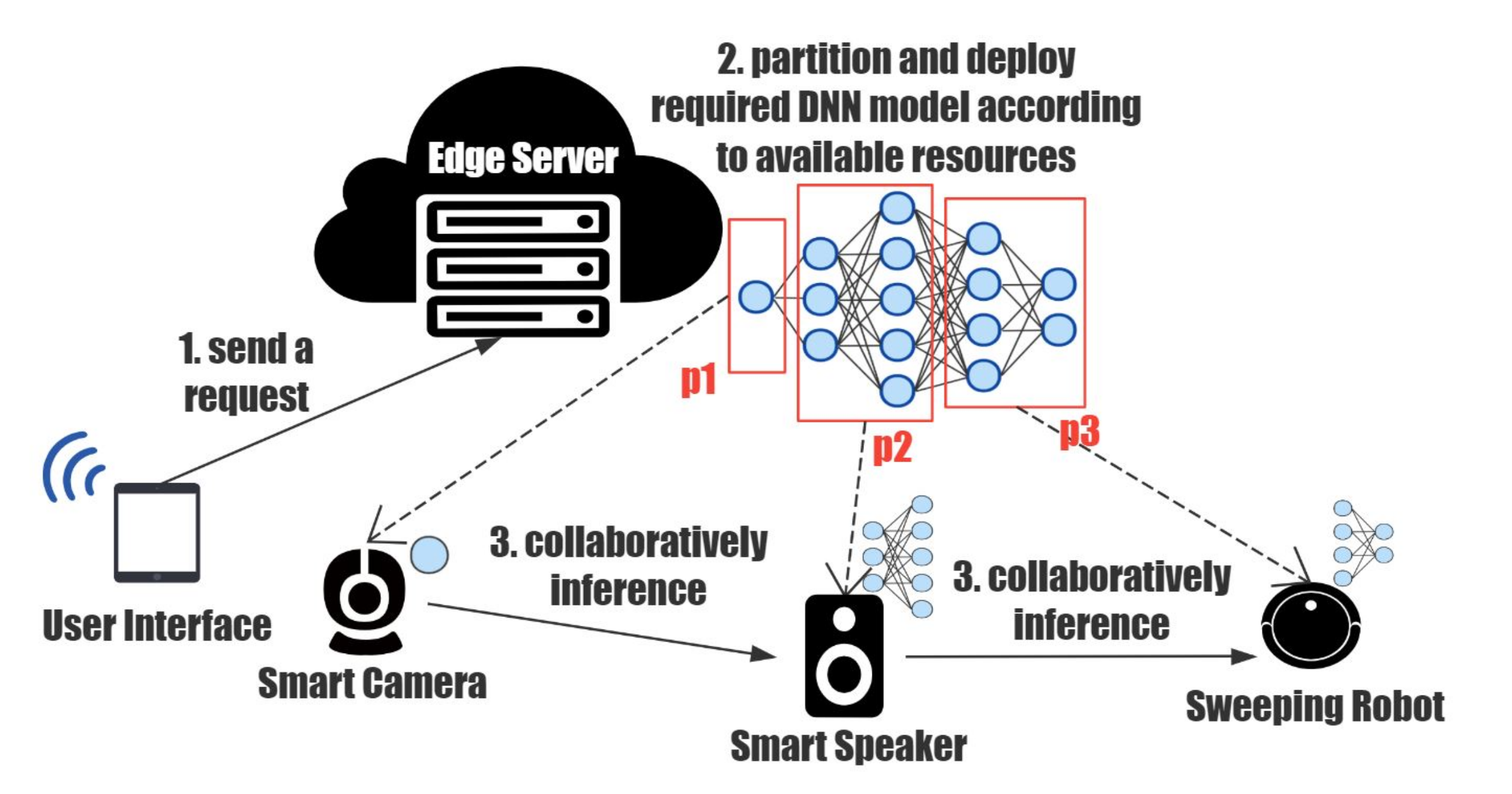

- First, the DNN model partitioning problem is modeled as a constrained optimization problem, and its formal definition is given.

- Second, the paper proposes a novel genetic algorithm that shortens solving time by ensuring that chromosomes remain valid after the crossover and mutation operations.

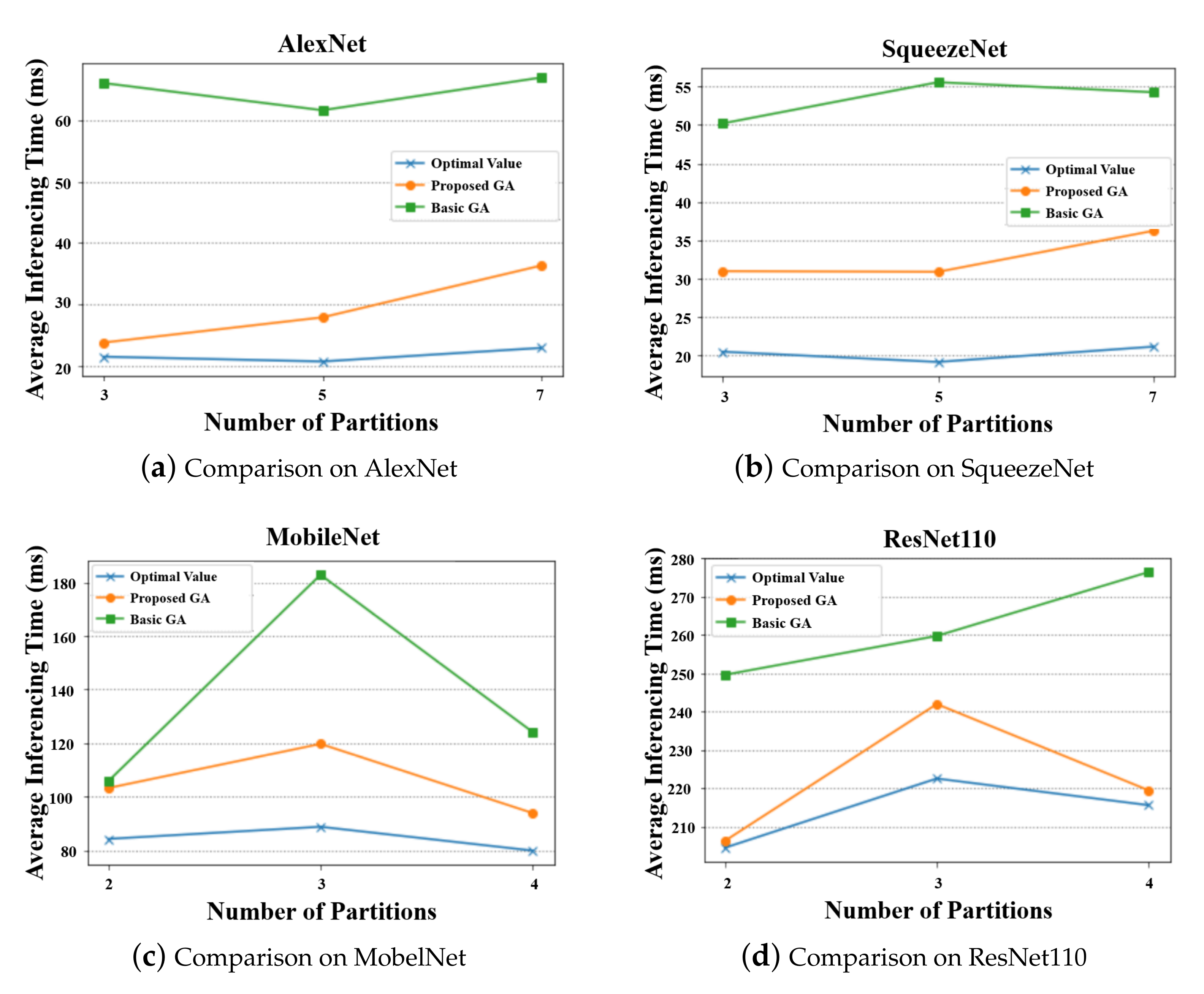

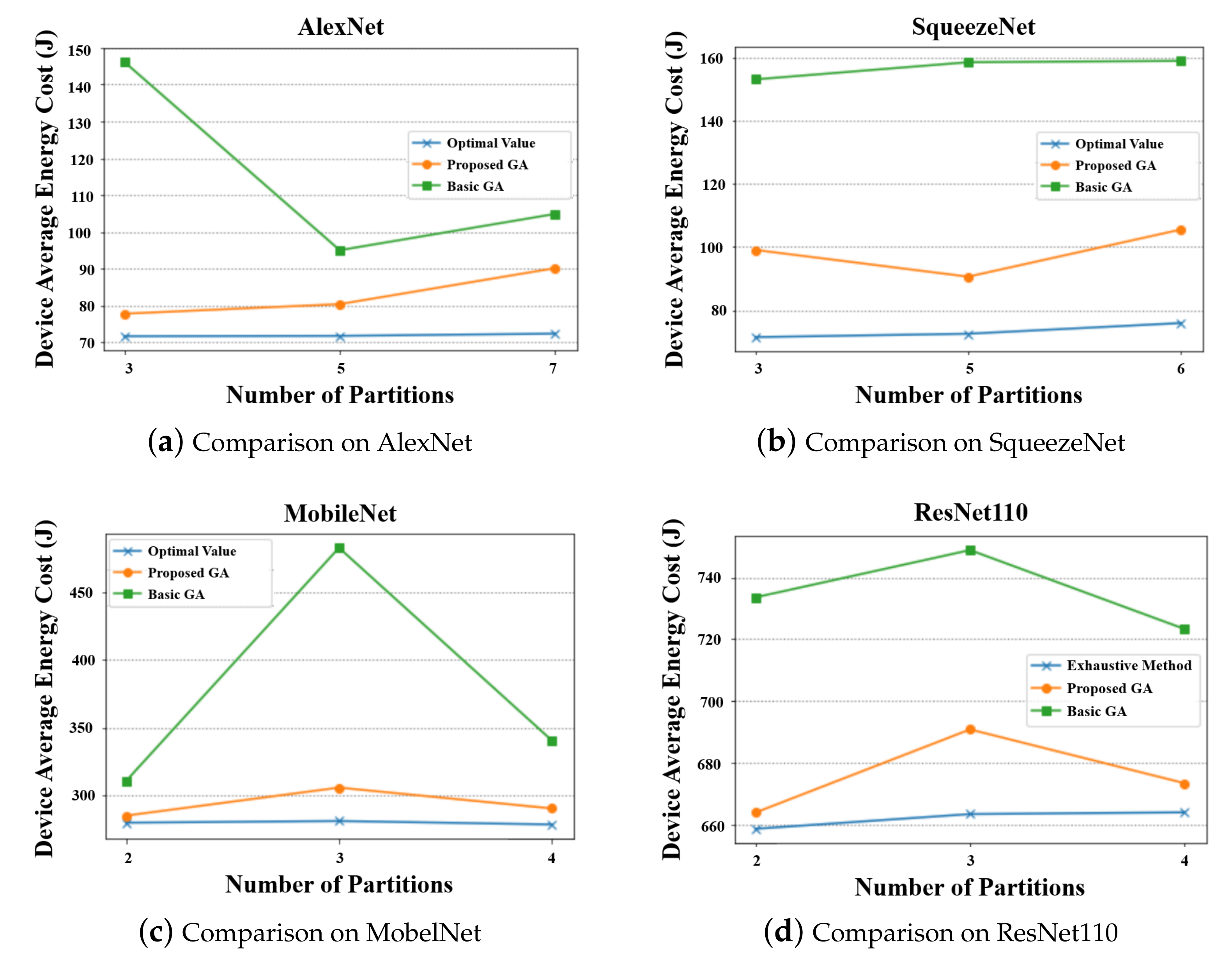

- Finally, experiments are performed on several existing DNN models, including AlexNet, ResNet110, MobileNet, and SqueezeNet, to present a more comprehensive evaluation.

2. Literature Review

3. System Model and Problem Formulation

4. The Proposed Genetic Algorithm

4.1. Problems of Applying Basic GA for DNN Partitioning

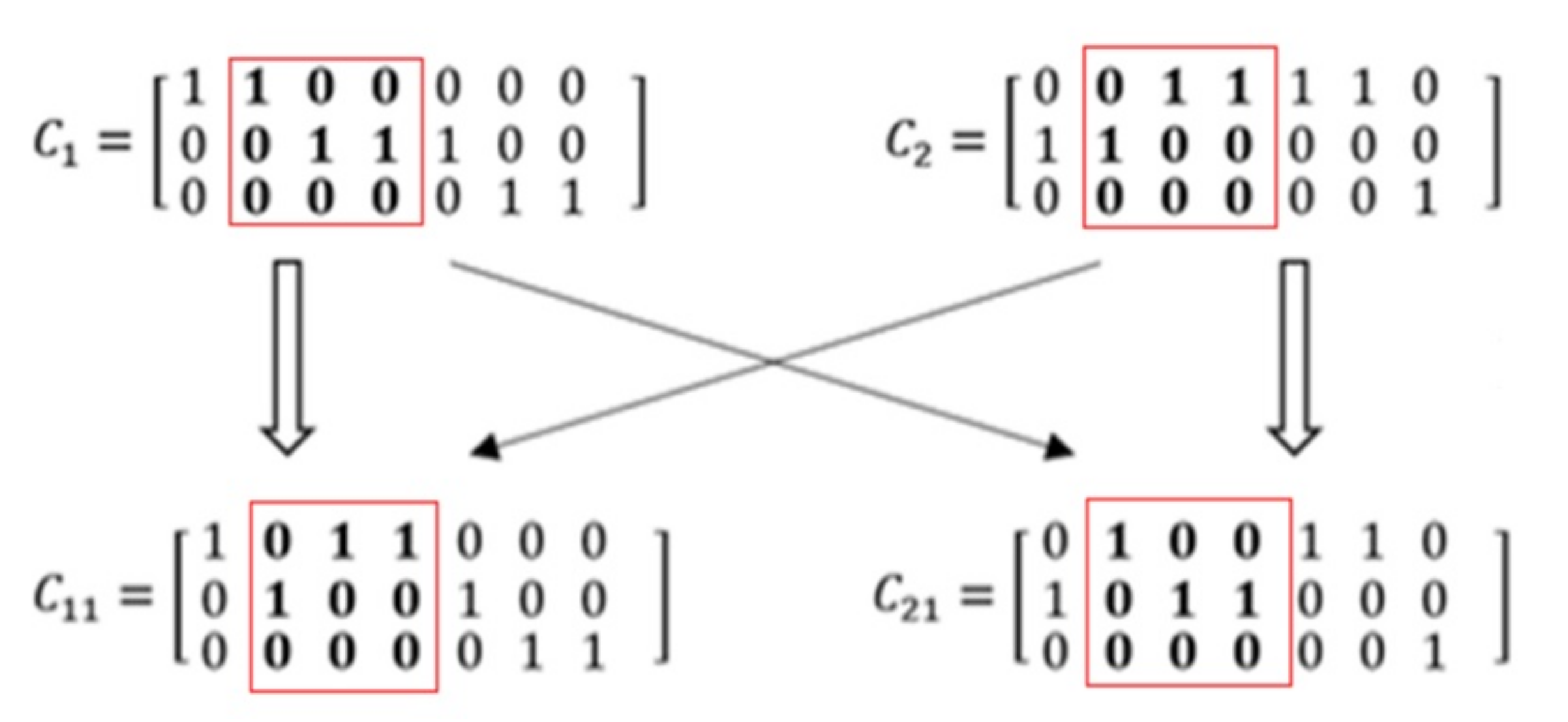

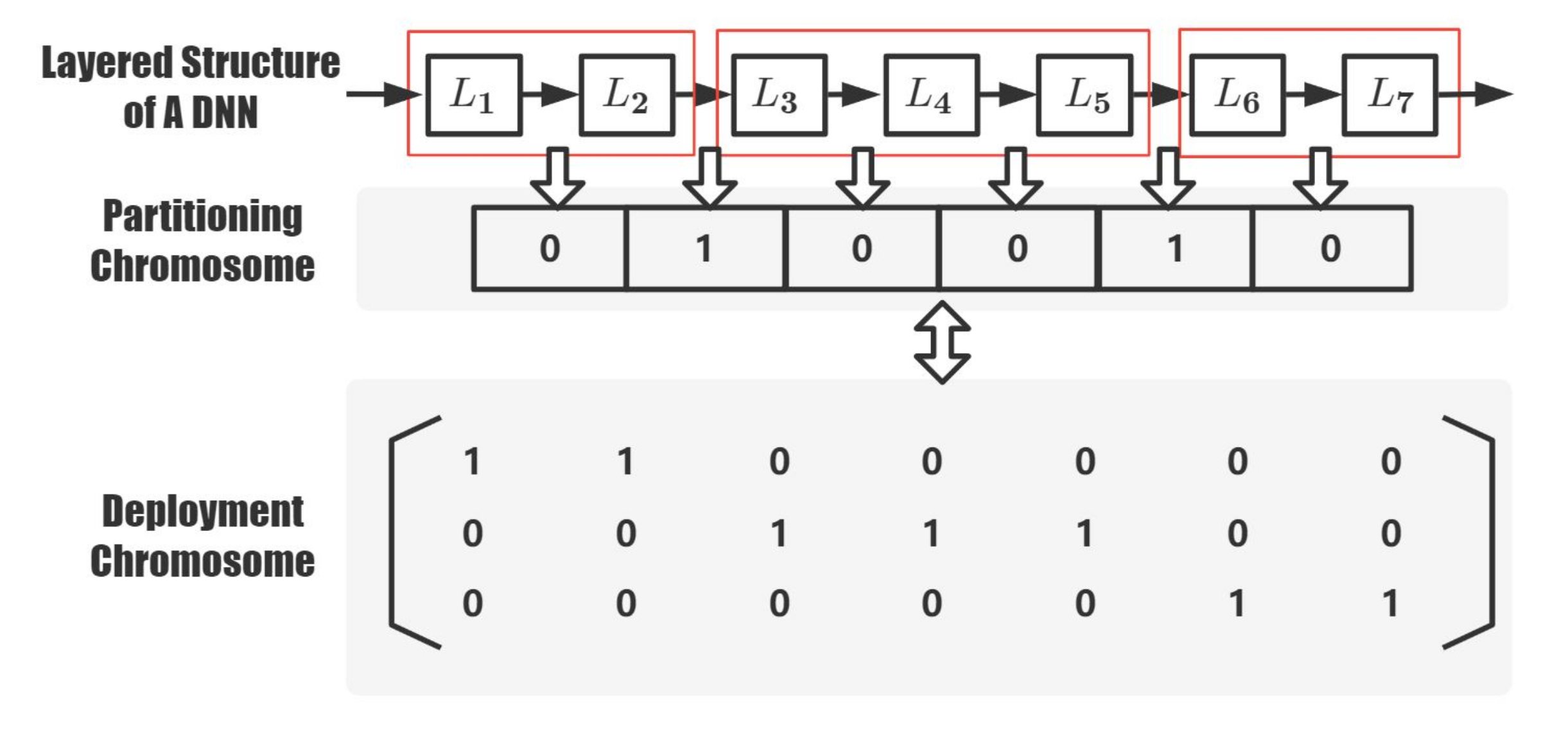

4.2. The Proposed Improvement

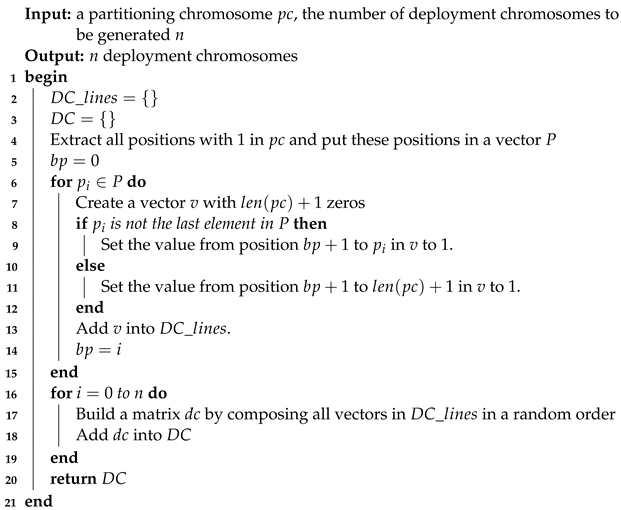

| Algorithm 1: Deployment Chromosome Generation Algorithm |


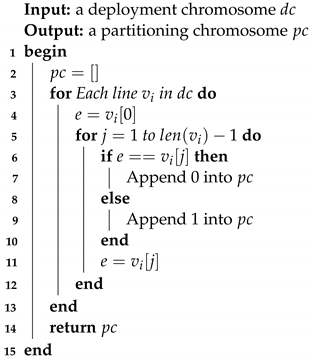

| Algorithm 2: Partitioning Chromosome Extraction Algorithm |


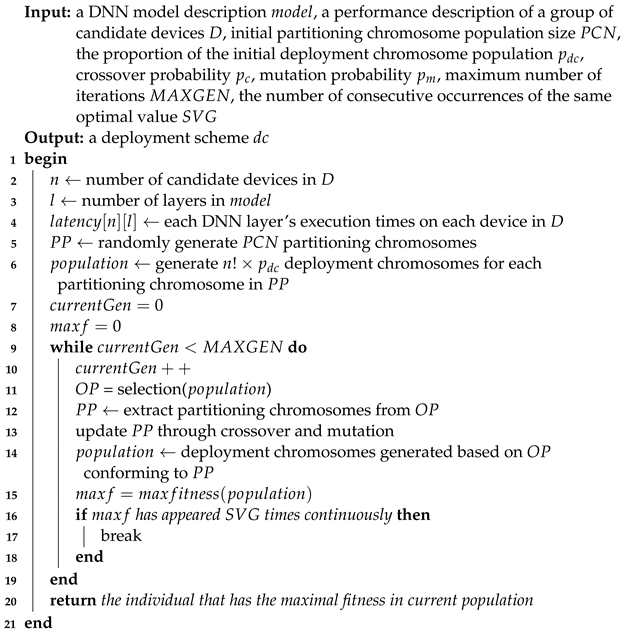

- On the one hand, the initial population generation must be modified according to the chromosome classification above. Initialization is divided into two steps: first, a partitioning population is generated at random; then, the corresponding deployment population is derived using Algorithm 1.

- On the other hand, after excellent individuals are selected from the deployment population, the corresponding partitioning population is extracted using Algorithm 2. Crossover and mutation are then performed on these partitioning chromosomes, and the corresponding deployment individuals are selected to produce a new deployment population.
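The two modifications above can be sketched in Python as a minimal, illustrative loop. This is not the authors' implementation: the chromosome encodings (a partitioning chromosome as a sorted tuple of cut positions, a deployment chromosome as cuts plus one distinct device per partition), the stand-ins for Algorithms 1 and 2, the elitist selection, and the `toy_latency` fitness are all assumptions made for illustration.

```python
import random

def random_partitioning(n_layers, k, rng):
    """Pick k-1 distinct cut points so that all k partitions are non-empty."""
    return tuple(sorted(rng.sample(range(1, n_layers), k - 1)))

def derive_deployment(cuts, n_devices, rng):
    """Hypothetical stand-in for Algorithm 1: one distinct device per partition."""
    devices = tuple(rng.sample(range(n_devices), len(cuts) + 1))
    return (cuts, devices)

def extract_partitioning(deployment):
    """Hypothetical stand-in for Algorithm 2: recover the partitioning part."""
    return deployment[0]

def crossover(a, b, rng):
    """Draw the child's cuts from the parents' union, so the cut count is kept."""
    pool = sorted(set(a) | set(b))
    return tuple(sorted(rng.sample(pool, len(a))))

def mutate(cuts, n_layers, rng, rate=0.2):
    """With probability `rate`, move one cut to an unused position."""
    if not cuts or rng.random() >= rate:
        return cuts
    free = [p for p in range(1, n_layers) if p not in cuts]
    if not free:
        return cuts
    moved = list(cuts)
    moved[rng.randrange(len(moved))] = rng.choice(free)
    return tuple(sorted(moved))

def toy_latency(deployment, layer_cost, device_speed):
    """Assumed fitness: partitions run one after another on their devices."""
    cuts, devices = deployment
    bounds = (0, *cuts, len(layer_cost))
    return sum(
        sum(layer_cost[bounds[i]:bounds[i + 1]]) / device_speed[d]
        for i, d in enumerate(devices)
    )

def evolve(layer_cost, device_speed, k, pop_size=20, generations=30, seed=0):
    rng = random.Random(seed)
    n_layers, n_devices = len(layer_cost), len(device_speed)

    def score(deployment):
        return toy_latency(deployment, layer_cost, device_speed)

    # Step 1: random partitioning population, then the derived deployment population.
    partitionings = [random_partitioning(n_layers, k, rng) for _ in range(pop_size)]
    population = [derive_deployment(p, n_devices, rng) for p in partitionings]
    for _ in range(generations):
        # Step 2: select on deployment chromosomes, vary partitioning chromosomes.
        population.sort(key=score)
        elite = population[: pop_size // 2]
        parents = [extract_partitioning(d) for d in elite]
        children = []
        while len(elite) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            child = mutate(crossover(a, b, rng), n_layers, rng)
            children.append(derive_deployment(child, n_devices, rng))
        population = elite + children
    return min(population, key=score)
```

For instance, `evolve([1.0] * 8, [0.218, 9.92, 0.213, 13.5, 0.247, 3.62], k=3)` uses the GFLOPS column of the device table as `device_speed`, with eight equal-cost layers as a made-up workload. Because crossover and mutation only ever rearrange a fixed number of distinct cut points, every child is a valid partitioning by construction and no repair or rejection step is needed.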

| Algorithm 3: The Framework of the Proposed Genetic Algorithm |


5. Performance Evaluation

5.1. Experiment Setting

5.2. Comparison of Inference Performance

5.2.1. Comparison in Considering Partitioning Optimization and Deployment Optimization Separately

5.2.2. Comparison in Considering Partitioning Optimization and Deployment Optimization Simultaneously

5.3. Comparison of Algorithm Efficiency

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Device No. | GFLOPS | Battery Capacity (J) | I/O Bandwidth (MBPS) |
|---|---|---|---|
| 1 | 0.218 | 250 | 140.85 |
| 2 | 9.92 | 20 | 1525.63 |
| 3 | 0.213 | 500 | 135.89 |
| 4 | 13.5 | 10 | 1698.25 |
| 5 | 0.247 | 300 | 140.91 |
| 6 | 3.62 | 200 | 159.45 |

| DNN Model | Method | Avg | Max | Min | Mode | SD |
|---|---|---|---|---|---|---|
| AlexNet | Method-1 | 49.73 | 92.88 | 20.97 | 20.97 | 35.23 |
| | Method-2 | 42.54 | 92.88 | 20.97 | 20.97 | 32.95 |
| | Proposed method | 28.90 | 92.88 | 20.97 | 20.97 | 21.44 |
| SqueezeNet | Method-1 | 36.13 | 51.81 | 20.45 | 51.81 | 15.68 |
| | Method-2 | 32.99 | 51.81 | 20.45 | 20.45 | 15.36 |
| | Proposed method | 30.54 | 52.04 | 20.45 | 20.45 | 14.05 |
| MobileNet | Method-1 | 96.20 | 114.10 | 84.27 | 84.27 | 14.61 |
| | Method-2 | 93.22 | 114.10 | 84.27 | 84.27 | 13.67 |
| | Proposed method | 95.86 | 117.41 | 84.27 | 114.10 | 15.11 |
| ResNet110 | Method-1 | 232.05 | 259.54 | 204.56 | 259.54 | 27.49 |
| | Method-2 | 226.55 | 259.54 | 204.56 | 204.56 | 26.93 |
| | Proposed method | 220.03 | 254.99 | 204.56 | 233.89 | 16.77 |

| DNN Model | Number of Partitions | Exhaustive Method | Improved GA | Basic GA |
|---|---|---|---|---|
| AlexNet | 3 | 490.43 | 267.20 | 275.46 |
| | 5 | 7870.74 | 6563.56 | 7214.8 |
| | 7 | 3,675,603.00 | 6563.56 | 24,991.9 |
| SqueezeNet | 3 | 490.97 | 372.15 | 381.81 |
| | 5 | 41,385.8 | 6568.28 | 6713.38 |
| | 6 | 1,476,833 | 11,197.10 | 12,572.80 |
| MobileNet | 2 | 485.28 | 231.70 | 268.69 |
| | 3 | 751.76 | 335.14 | 341.12 |
| | 4 | 29,311.00 | 1238.22 | 1357.69 |
| ResNet110 | 2 | 530.85 | 269.12 | 381.98 |
| | 3 | 12,665.30 | 1620.24 | 4086.40 |
| | 4 | 5,217,793.75 | 7322.81 | 19,345.10 |
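To make the gaps in the table above easier to read, the short sketch below computes ratios for the largest partition count of each model. It assumes the three numeric columns report solving time in a common unit (the header does not state one), so only the unit-free ratios are meaningful; the dictionary layout and the `speedups` helper are illustrative names, not part of the paper.

```python
# Values copied from the table above: (exhaustive, improved GA, basic GA).
times = {
    ("AlexNet", 7): (3_675_603.00, 6563.56, 24_991.9),
    ("SqueezeNet", 6): (1_476_833, 11_197.10, 12_572.80),
    ("MobileNet", 4): (29_311.00, 1238.22, 1357.69),
    ("ResNet110", 4): (5_217_793.75, 7322.81, 19_345.10),
}

def speedups(exhaustive, improved, basic):
    """Return how many times larger each baseline value is than the improved GA's."""
    return exhaustive / improved, basic / improved

for (model, parts), row in times.items():
    vs_exhaustive, vs_basic = speedups(*row)
    print(
        f"{model} ({parts} partitions): "
        f"{vs_exhaustive:.0f}x vs exhaustive, {vs_basic:.2f}x vs basic GA"
    )
```

Under that assumption, the ratio against the exhaustive method grows by orders of magnitude as the number of partitions increases, while the ratio against the basic GA stays within a single-digit factor.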

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Na, J.; Zhang, H.; Lian, J.; Zhang, B. Partitioning DNNs for Optimizing Distributed Inference Performance on Cooperative Edge Devices: A Genetic Algorithm Approach. Appl. Sci. 2022, 12, 10619. https://doi.org/10.3390/app122010619