1. Introduction

As science and technology advance, the quality of life has improved, but air pollution is also increasing in various forms. Fine dust causes or worsens conditions such as heart and lung diseases [1]. Among the many studies on air pollution, several have predicted fine dust with time series prediction models to prevent such health damage. However, most of these studies include various types of data (e.g., PM10, temperature, dew point, and wind speed) but do not include aerosol data. Aerosol refers to fine substances floating in the atmosphere, including fine dust, and it is useful for predicting the movement and accumulation of fine dust caused by atmospheric diffusion.

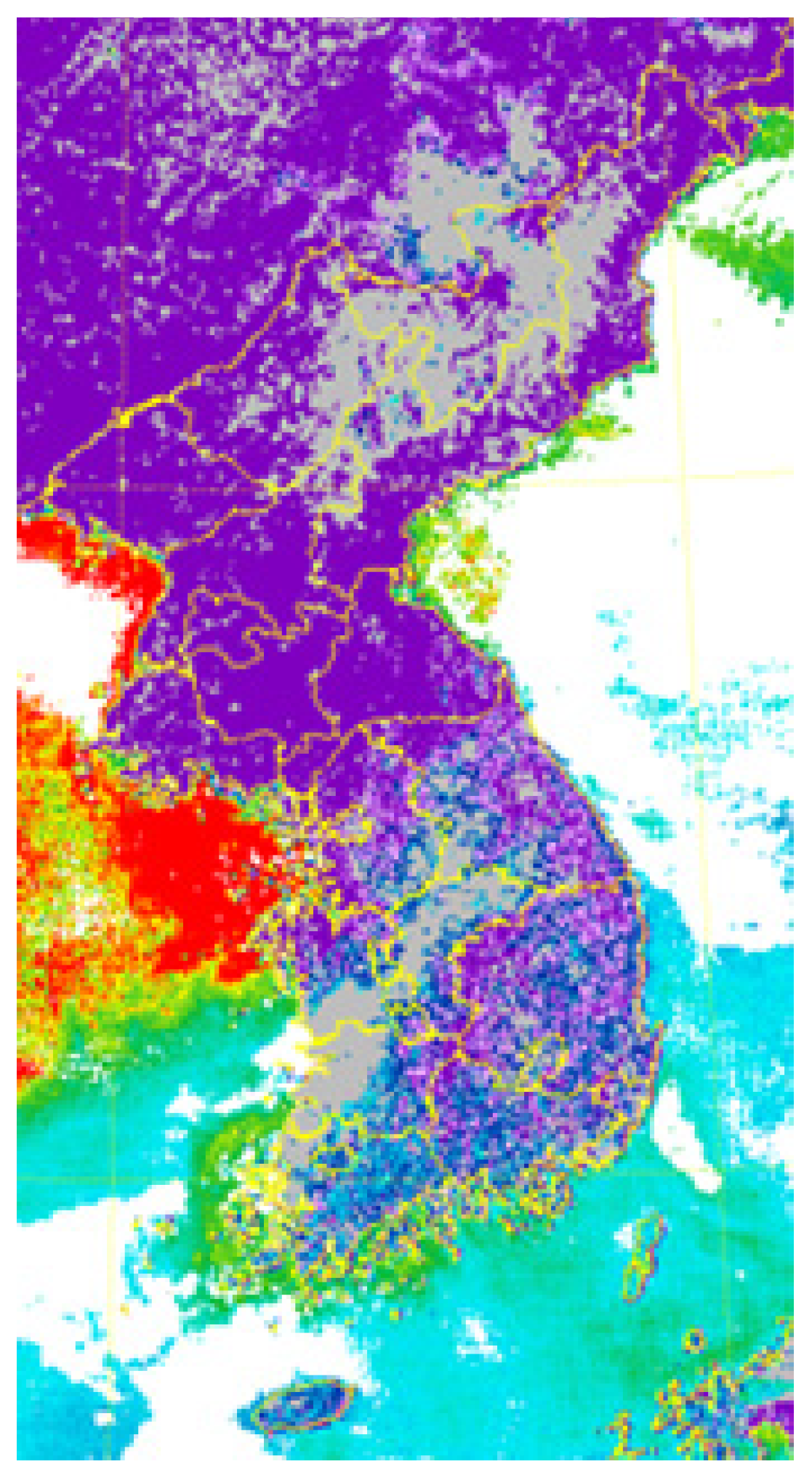

In this paper, the numerical dataset that demonstrated the best performance among the datasets constructed in the previous study [

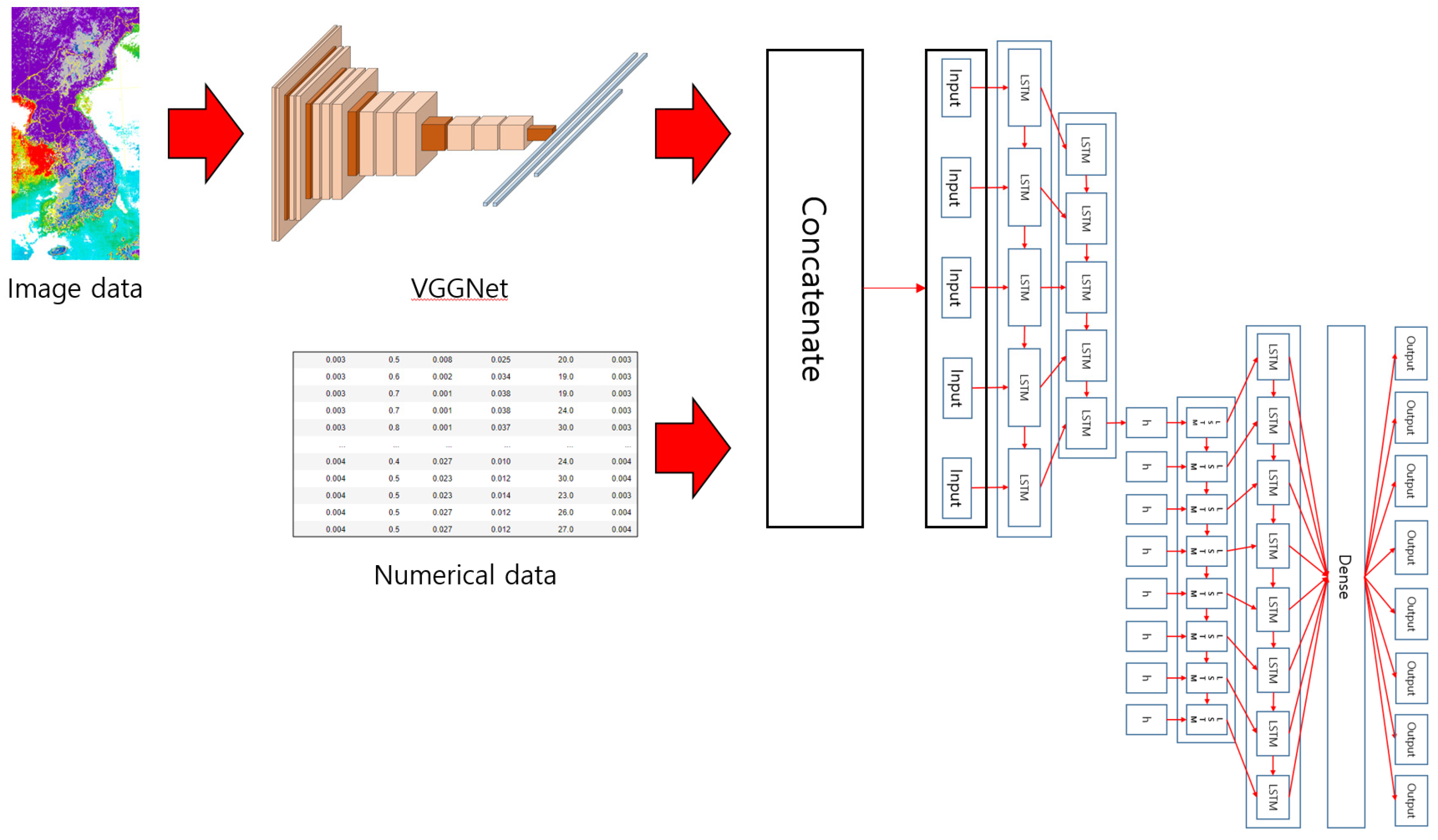

2] was referred to, and the aerosol image data highly related to fine dust was added to enhance the model’s performance. However, the preconfigured numerical data was in units of hour, so the multimodal dataset was constructed by adding a satellite image with information on aerosol particle size, which was organized by the hour to match this. In addition, the satellite image contains aerosol data of the entire Korean Peninsula, so the overall flow of fine dust on the Korean Peninsula can be observed.
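The hourly matching of numerical records and satellite images described above can be sketched as follows. This is a minimal illustration, not the procedure used in the paper: the tuple layouts, the function names `hour_key` and `pair_by_hour`, and the choice to drop hours without an image are all assumptions.

```python
from datetime import datetime


def hour_key(ts):
    """Truncate a timestamp to the start of its hour."""
    return ts.replace(minute=0, second=0, microsecond=0)


def pair_by_hour(numeric_rows, image_records):
    """Pair each hourly numeric row with the satellite image of the same hour.

    numeric_rows:  list of (datetime, feature_list) tuples (hypothetical layout)
    image_records: list of (datetime, image_path) tuples (hypothetical layout)

    Hours without a matching image are dropped (an assumption). If several
    images fall in the same hour, the last one listed is kept.
    """
    images = {hour_key(ts): path for ts, path in image_records}
    pairs = []
    for ts, features in numeric_rows:
        path = images.get(hour_key(ts))
        if path is not None:
            pairs.append((hour_key(ts), features, path))
    return pairs


if __name__ == "__main__":
    rows = [
        (datetime(2021, 1, 1, 0, 0), [41.0, 3.2]),
        (datetime(2021, 1, 1, 1, 0), [44.0, 2.9]),
    ]
    imgs = [(datetime(2021, 1, 1, 0, 12), "aerosol_0000.png")]
    for hour, features, path in pair_by_hour(rows, imgs):
        print(hour.isoformat(), features, path)
```

Keying both modalities on the truncated hour keeps the pairing independent of the exact minute at which a satellite image was captured.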

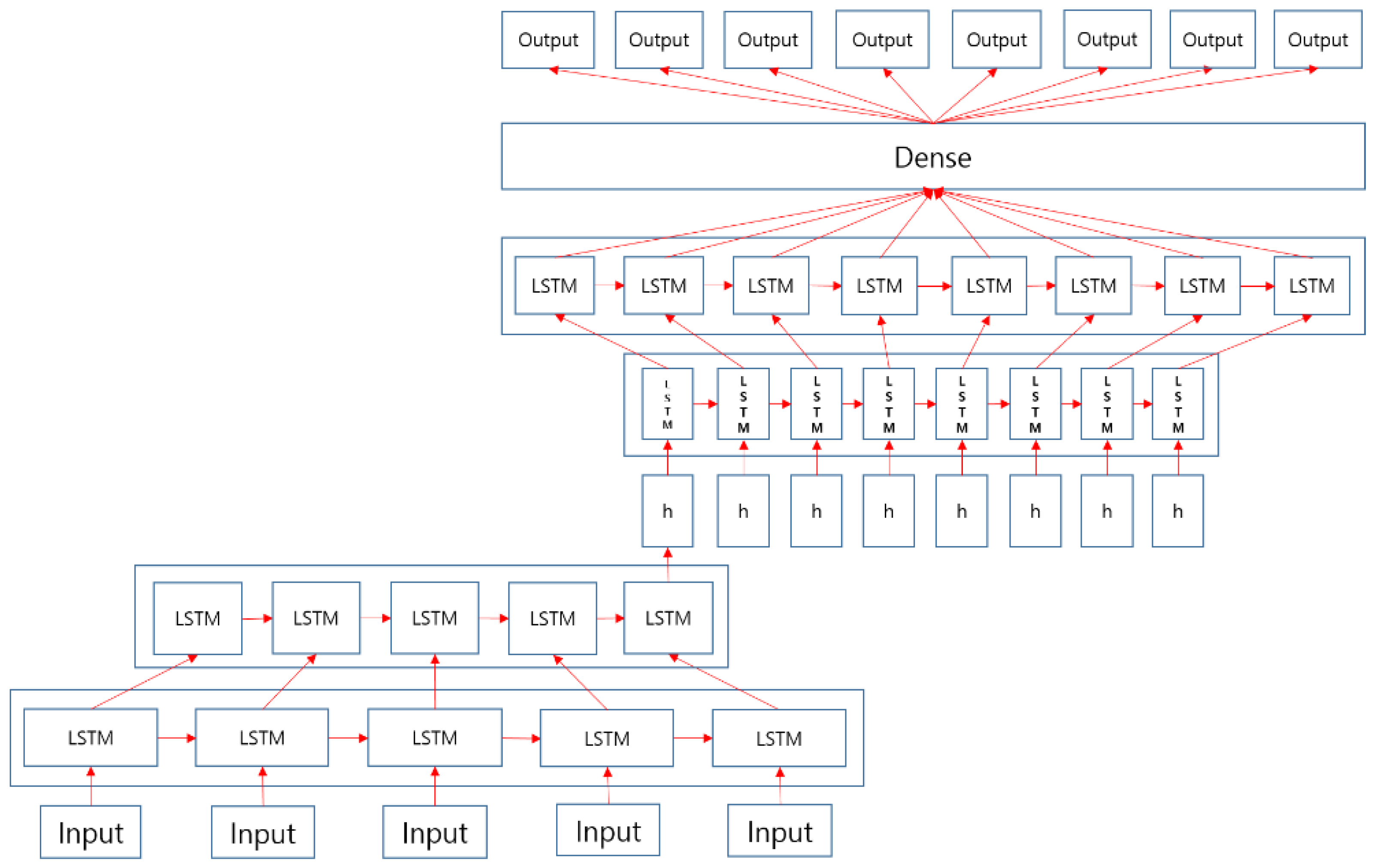

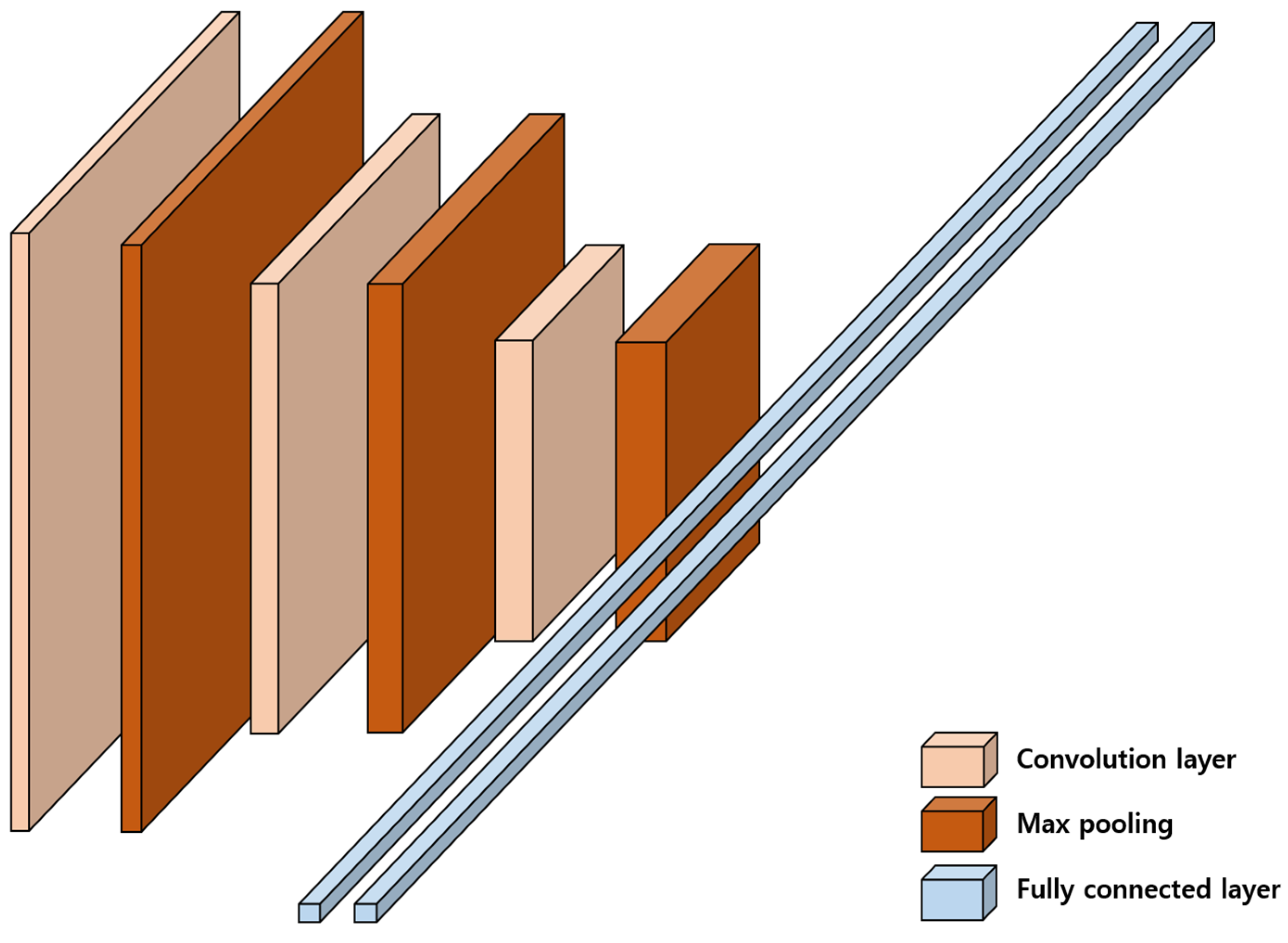

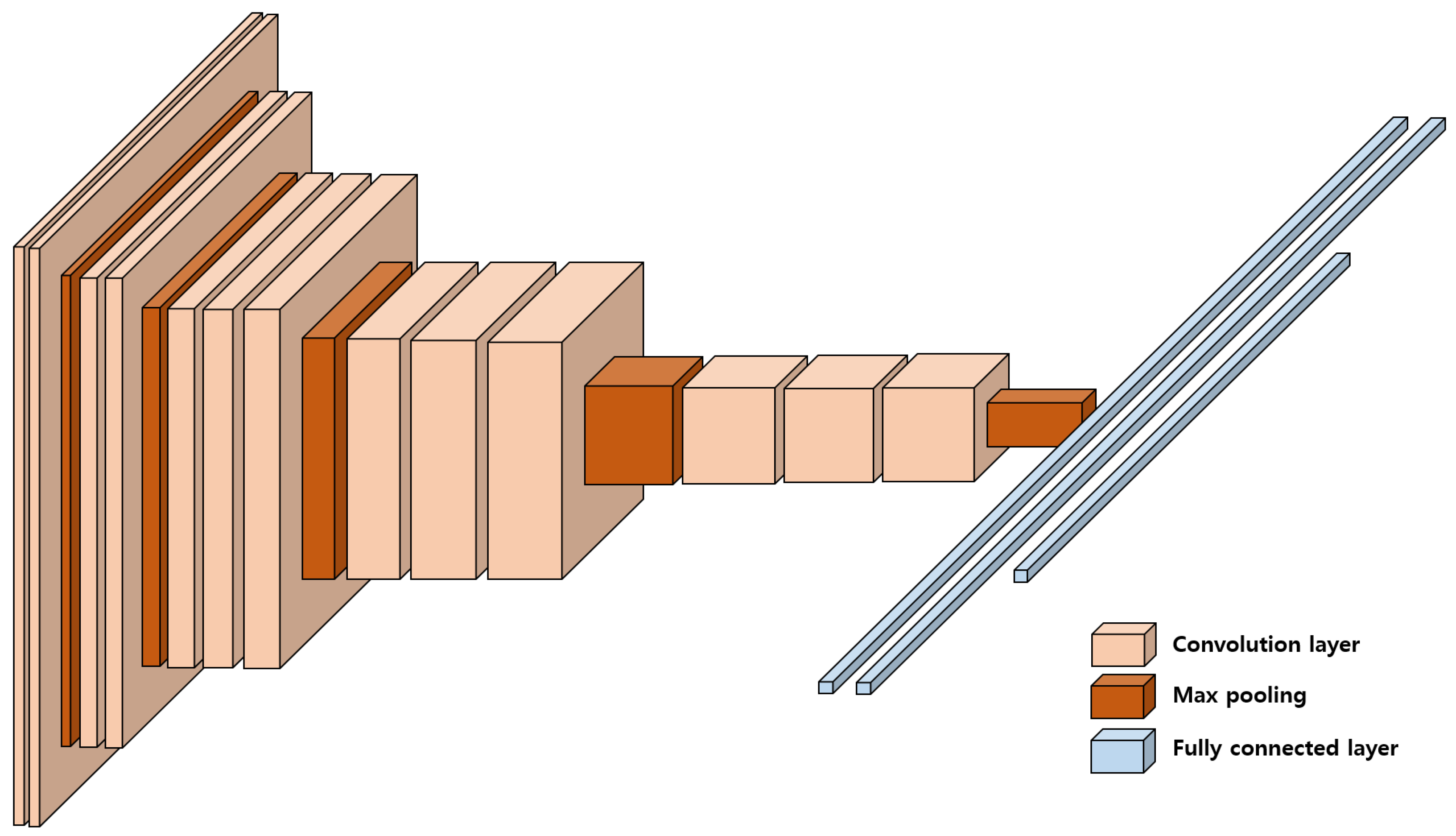

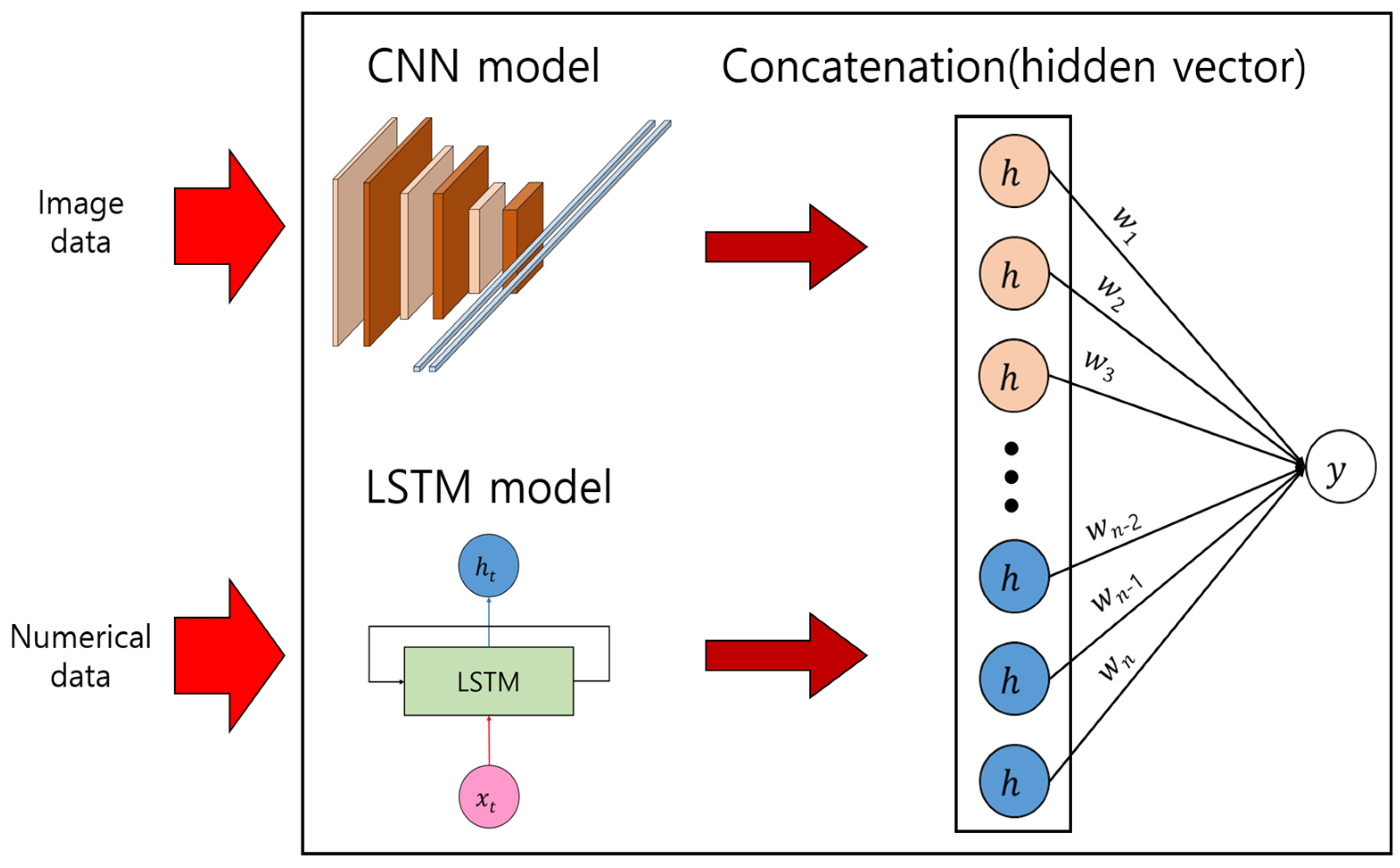

In this paper, we propose a multimodal deep learning model that learns the configured dataset by combining a Long Short-Term Memory (LSTM) series model, which shows superior performance in time series prediction, with Convolutional Neural Network (CNN) series models, which are useful for image processing. The proposed model combines the features of the numerical dataset with the features of the image dataset processed by the CNN series model, so it must process many features. Therefore, the LSTM series model used the LSTM AutoEncoder, which had the best performance when there were many features. In the previous study [2], the CNN series model used a basic CNN and VGGNet (VGG16, VGG19) to compare the performance difference according to network depth. VGGNet is a standard deep CNN architecture with multiple layers, developed by the Visual Geometry Group, a research team at the University of Oxford.

As a result, the multimodal deep learning models using numerical and image data performed better than the LSTM series model using only numerical data. Among them, performance was best with VGG19, the deepest of the CNN series models. In addition, we divided the image data into original and cropped images and applied each to the multimodal deep learning models to compare the performance difference. To maximize performance, the hyperparameters of each model were optimized using Katib, a hyperparameter optimization system. These points are described in detail below.

The paper is organized as follows. Section 2 describes related research, and Section 3 presents the constructed dataset. Next, Section 4 defines the proposed model for the dataset, and Section 5 explains the hyperparameter optimization of the configured model. Section 6 describes the experiment, and Section 7 ends with the conclusion and future work.

2. Related Research

Various time series prediction models for weather forecasting have been studied. Athira [3] applied deep learning models to AirNet, a pollution and weather time series dataset, to predict future PM10. In this study, RNN, LSTM, and GRU were used for time series learning, and GRU performed best because only part of AirNet was used due to resource constraints.

Chau [4] suggested deep learning-based Weather Normalized Models (WNM) to quantify air quality changes during the partial shutdown caused by the COVID-19 pandemic in Quito, Ecuador. The deep learning algorithms used were CNN, LSTM, RNN, Bi-RNN, and GRU, among which the WNMs using LSTM and Bi-RNN performed best.

Moreover, Salman [5] presented an LSTM model that adds intermediate variable signals to the LSTM memory blocks to predict the weather in an Indonesian airport area and explored various architectures, such as single- and multilayer LSTM. The results showed that the intermediate variable could enhance the model’s predictive ability. The best LSTM model and intermediate variable were the multilayer LSTM and the pressure variable, respectively.

Bekkar [6] proposed a hybrid model based on CNN and LSTM to predict hourly PM2.5 in Beijing, China. In the hybrid model, the CNN extracts spatial and internal features from the input values, and the LSTM learns the time series patterns of the extracted features. The suggested model performed better than the existing deep learning models (i.e., LSTM, Bi-LSTM, GRU, and Bi-GRU).

The studies mentioned earlier use time series prediction models, such as RNN, LSTM, and GRU, similar to our research but do not use multimodal data in contrast to our approach.

Few works adopt multimodal data to improve weather forecasting performance. Xie [7] presented a multimodal deep learning model that combines CNN and GRU to predict PM2.5 in the Wuxi region over 6 h based on data provided by the Wuxi environmental protection agency. The CNN uses a one-dimensional convolution layer, which extracts and integrates local variation trends and spatial correlation characteristics of multimodal air quality data. A GRU then learns long-term dependencies from the CNN results. The proposed model performed better than the existing single deep learning models (i.e., Shallow Neural Network, LSTM, and GRU). Kalajdjieski [8] proposed a custom pretrained Inception model to estimate air pollution (i.e., to classify whether images collected with observations show pollution) in the Skopje region of North Macedonia, using multimodal data composed of weather data retrieved via sensors and image data captured by cameras in Skopje. The proposed model adds a new sub-model path to the pretrained Inception model that processes the image data. The new path receives weather data as input and passes it through three fully connected layers; its result is then combined with the output of the pretrained Inception model and fed to a further fully connected layer. It performed better than existing models (e.g., CNN, ResNet, and pretrained Inception).

Our research differs from the work of Xie [7] and Kalajdjieski [8]. While the multimodal data in Xie [7] are all numerical, we use both image data and numerical data, such as SO2, PM10, and wind speed, from various regions. Kalajdjieski’s work aims to classify images as polluted or unpolluted, utilizing sensor weather data (e.g., temperature). Unlike Kalajdjieski’s study, our goal is to predict future PM10 based on satellite images and numerical weather data.

We configured multimodal data by adding image data because the images carry information on aerosol particle size. Aerosol data can indicate the possibility of fine dust movement and accumulation due to atmospheric diffusion, which helps predict fine dust. Thus, we tried to increase performance by constructing multimodal data from numerical and image data that affect fine dust.

6. Experimental Evaluation

We evaluated the performance of the newly proposed models using the dataset described in Section 3. For software, the experiment used the Python programming language, TensorFlow, Keras, and CentOS Linux release 7.9.2009 (Core); for hardware, it used an Intel(R) Xeon(R) Silver 4210 CPU @ 2.20 GHz and 125 GB of RAM, of which 90 GB was used for model training. LSTM AutoEncoder is denoted as LSTM_AE. Among the multimodal deep learning models, the model combining LSTM AutoEncoder and the basic CNN is denoted as Multimodal1, the model combining LSTM AutoEncoder and VGG16 as Multimodal2, and the model combining LSTM AutoEncoder and VGG19 as Multimodal3. LSTM_AE used only numerical data, and the multimodal deep learning models used multimodal data. We used the root mean square error (RMSE) as the performance indicator. The ratio of training, validation, and test sets was 6:2:2. In the LSTM layers, the activation function was tanh and the recurrent activation function was sigmoid; the activation function of the dense layers was ReLU. The optimizer was Adam.
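For reference, the RMSE is the square root of the mean of the squared differences between observed and predicted values. The sketch below shows that metric together with a 6:2:2 split. It is an illustration only: the function names are hypothetical, and splitting in the original sample order (without shuffling) is an assumption, since the text states only the ratio.

```python
import math


def rmse(y_true, y_pred):
    """Root mean square error between two equal-length sequences."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def split_6_2_2(samples):
    """Split samples, in their given order, into train/validation/test at 6:2:2.

    Keeping the order (no shuffling) is an assumption made for this sketch.
    Integer arithmetic avoids floating-point surprises at the boundaries.
    """
    n = len(samples)
    train_end = n * 6 // 10
    val_end = n * 8 // 10
    return samples[:train_end], samples[train_end:val_end], samples[val_end:]


if __name__ == "__main__":
    train, val, test = split_6_2_2(list(range(10)))
    print(len(train), len(val), len(test))
    print(rmse([0.0, 0.0], [3.0, 4.0]))
```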

Table 1 shows the range of hyperparameters considering the accuracy of each model. The ranges in Table 1 were used as the search space in Katib.

For Multimodal2 and Multimodal3 (LSTM_AE + VGG16/19), the maximum batch size was set to 60 because training often diverged when the batch size exceeded 60. For all multimodal deep learning models (Multimodal1, Multimodal2, and Multimodal3), the maximum number of epochs was set to 200 because almost all runs diverged when the number of epochs exceeded 200.

We conducted 10 experiments on each model, and Table 2 shows the top five in order of performance. For the multimodal deep learning models, the numerical data was the same as that used for LSTM_AE, and all image data was the original image data. This paper compares the performance based on the minimum and average values among the experimental results.
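The ranking step can be sketched as below. The function name `summarize_runs` is hypothetical, the input values are made-up placeholders rather than results from this paper, and taking the average over the five listed runs (rather than over all 10) is an assumption based on the "Average of Rank" label.

```python
def summarize_runs(test_rmses, top_k=5):
    """Rank runs by Test RMSE (lower is better) and summarize the best top_k.

    Returns the ranked top_k values, their minimum (Rank 1), and their mean.
    Averaging over the top_k runs only is an assumption of this sketch.
    """
    if not test_rmses:
        raise ValueError("at least one run is required")
    ranked = sorted(test_rmses)[:top_k]
    return {
        "ranked": ranked,
        "minimum": ranked[0],
        "average": sum(ranked) / len(ranked),
    }


if __name__ == "__main__":
    # Placeholder values for 10 runs; NOT results reported in the paper.
    placeholder_runs = [5.0, 3.0, 4.0, 1.0, 2.0, 9.0, 8.0, 7.0, 6.0, 10.0]
    print(summarize_runs(placeholder_runs))
```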

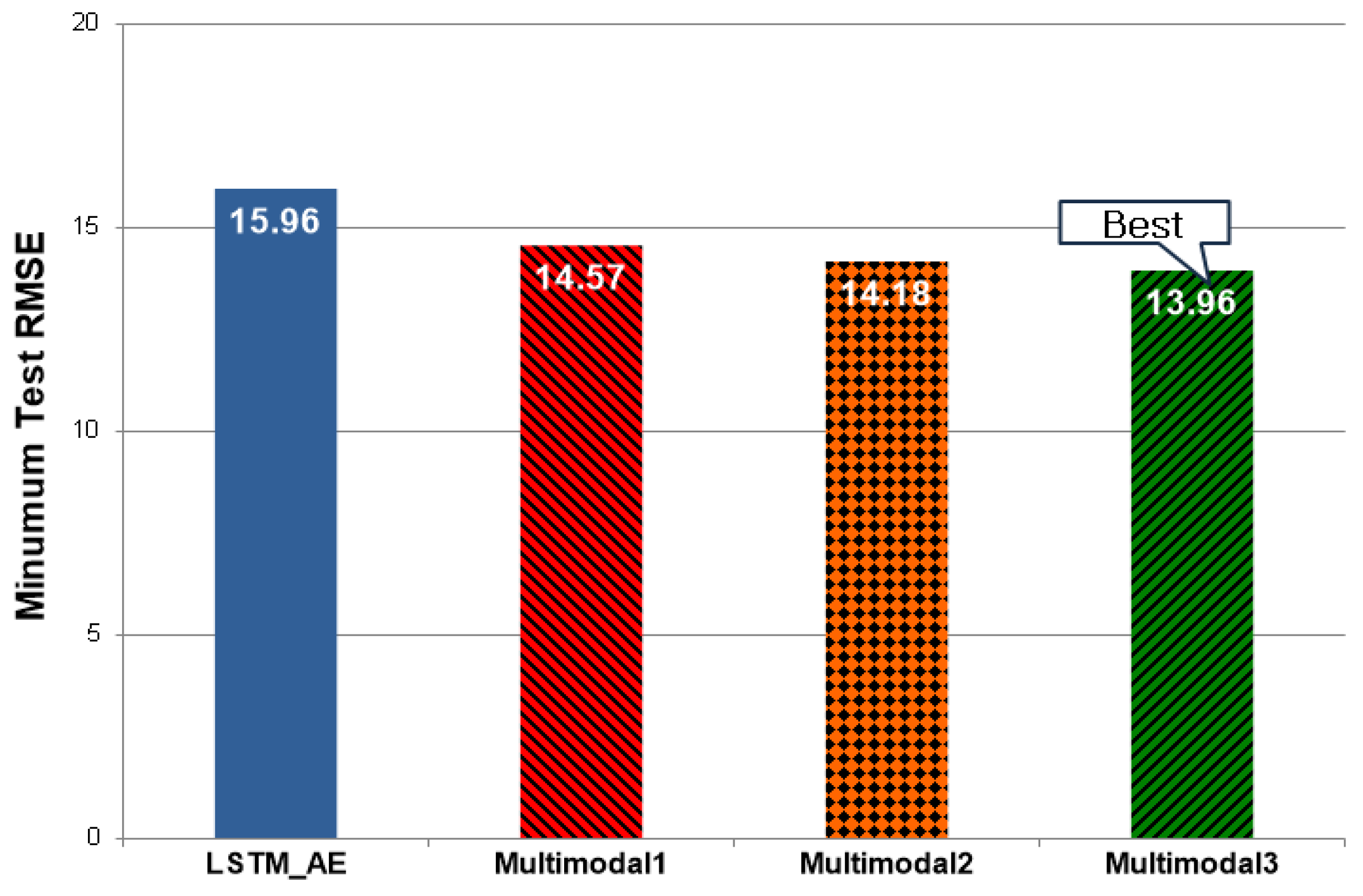

Figure 9 displays the minimum Test RMSE (Rank 1) of each model listed in Table 2. The RMSE of LSTM_AE, which processes only numerical data, was 15.96, and the RMSE of Multimodal1 (LSTM_AE + CNN) was 14.57, a decrease of 8.71% compared with LSTM_AE. The RMSE of Multimodal2 (LSTM_AE + VGG16) was 14.18, a decrease of 2.68% compared with Multimodal1. The RMSE of Multimodal3 (LSTM_AE + VGG19) was 13.96, a decrease of 1.55% compared with Multimodal2.
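The percentages quoted here are relative decreases, (baseline − new) / baseline × 100. A short sketch, using only the RMSE values stated in the paragraph above (the function name is hypothetical):

```python
def percent_decrease(baseline, new):
    """Relative decrease of `new` with respect to `baseline`, in percent."""
    return (baseline - new) / baseline * 100.0


if __name__ == "__main__":
    # Minimum Test RMSE values as stated in the text for Table 2.
    steps = [
        ("LSTM_AE -> Multimodal1", 15.96, 14.57),
        ("Multimodal1 -> Multimodal2", 14.57, 14.18),
        ("Multimodal2 -> Multimodal3", 14.18, 13.96),
    ]
    for label, baseline, new in steps:
        print(f"{label}: {percent_decrease(baseline, new):.2f}%")
```

Note that each step is measured against the preceding model, not against LSTM_AE, so the three percentages do not add up to the total improvement of Multimodal3 over LSTM_AE.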

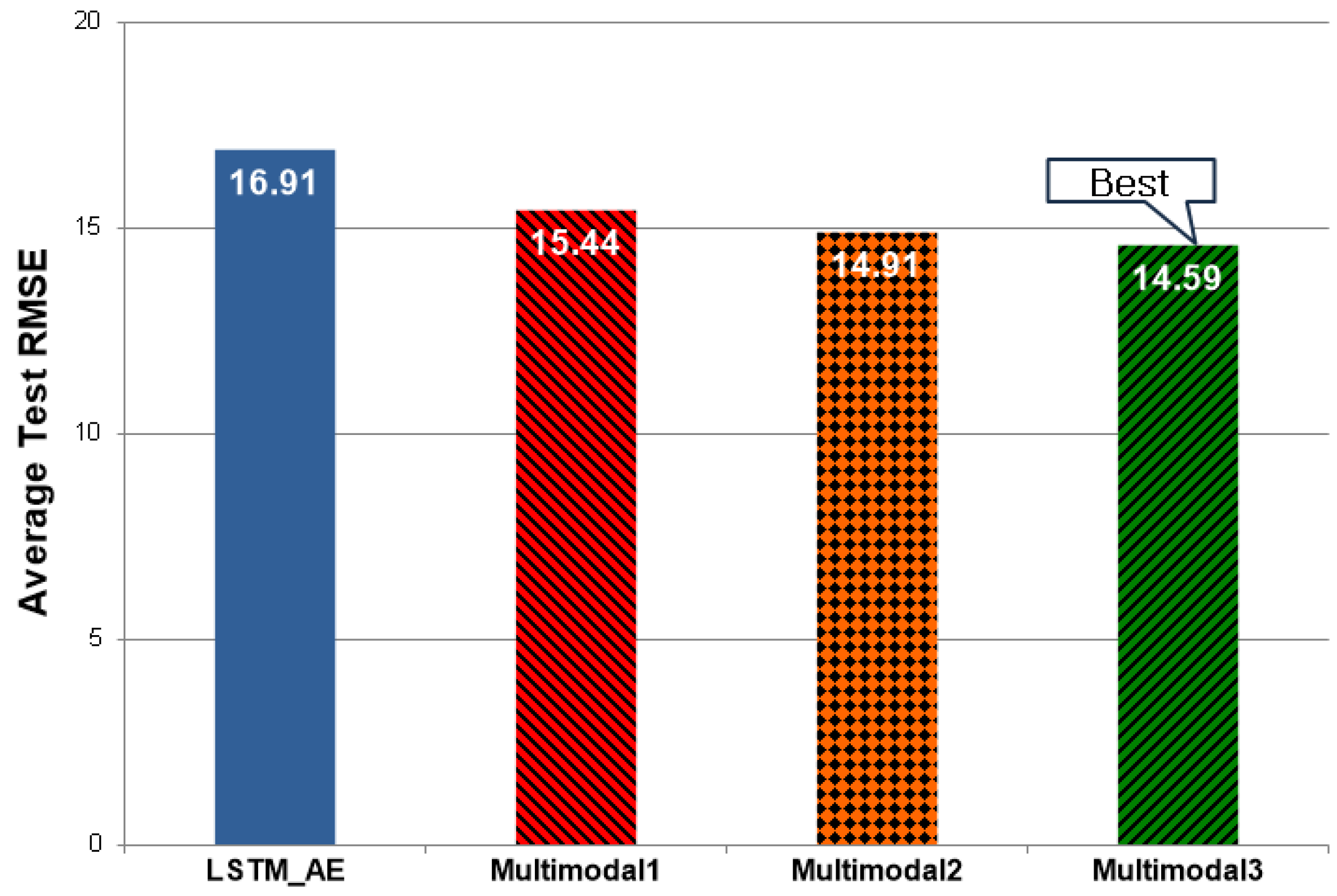

Figure 10 shows the average Test RMSE (Average of Rank) of each model shown in Table 2. The RMSE of LSTM_AE, which processes only numerical data, was 16.91, and the RMSE of Multimodal1 (LSTM_AE + CNN) was 15.44, a decrease of 8.69% compared with LSTM_AE. The RMSE of Multimodal2 (LSTM_AE + VGG16) was 14.91, a decrease of 3.43% compared with Multimodal1. The RMSE of Multimodal3 (LSTM_AE + VGG19) was 14.59, a decrease of 2.15% compared with Multimodal2.

Table 3 shows the top five of the 10 experiments for the proposed models in order of performance. Unlike Table 2, the multimodal deep learning models here used cropped image data for all images, while the numerical data was the same as that used for LSTM_AE. Again, the performance is compared based on the minimum and average values among the experimental results.

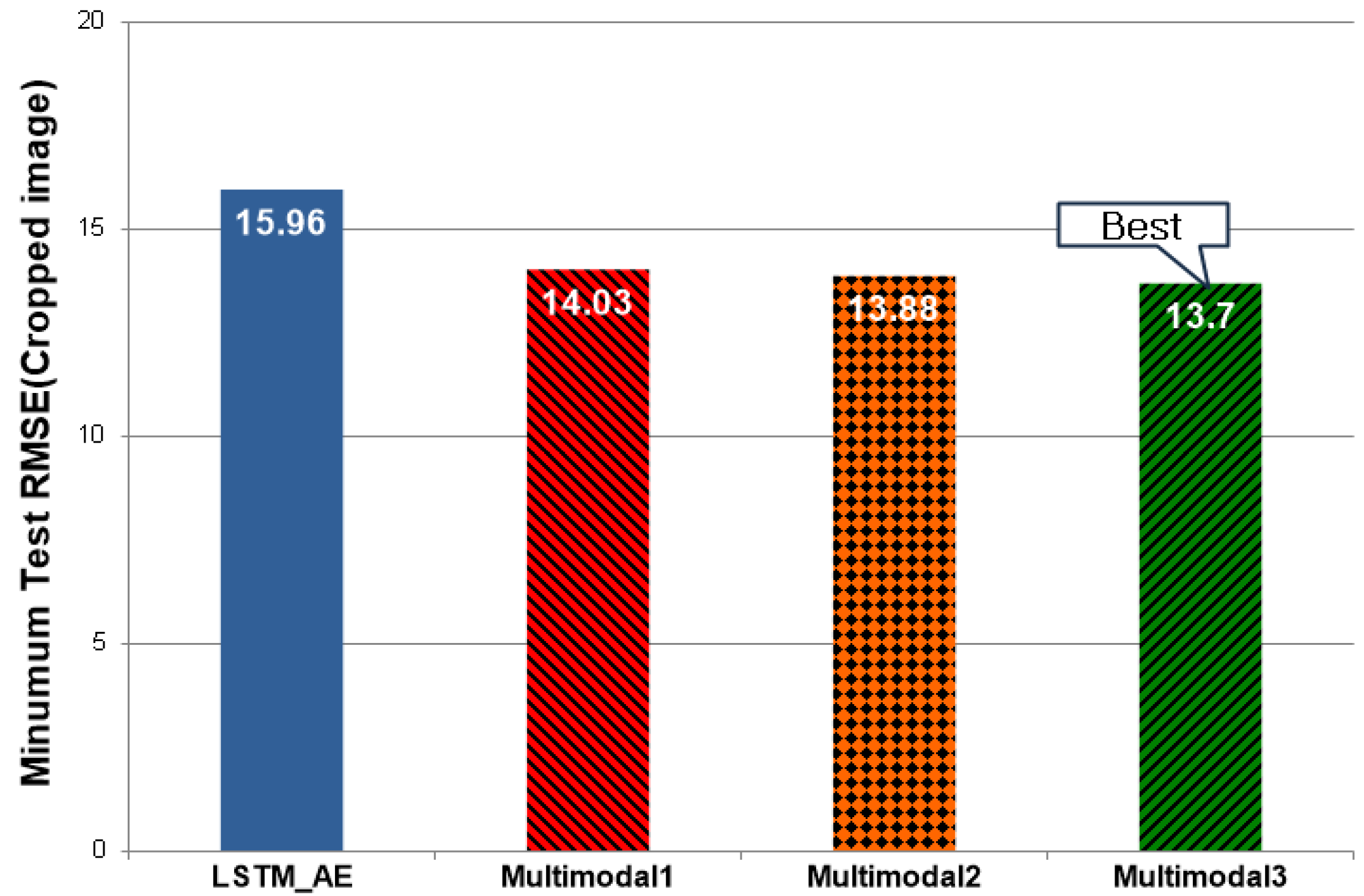

Figure 11 shows the minimum Test RMSE (Rank 1) of each model shown in Table 3. The RMSE of LSTM_AE, which processes only numerical data, was 15.96, and the RMSE of Multimodal1 (LSTM_AE + CNN) was 14.03, a decrease of 12.09% compared with LSTM_AE. The RMSE of Multimodal2 (LSTM_AE + VGG16) was 13.88, a decrease of 1.07% compared with Multimodal1. The RMSE of Multimodal3 (LSTM_AE + VGG19) was 13.7, a decrease of 1.3% compared with Multimodal2.

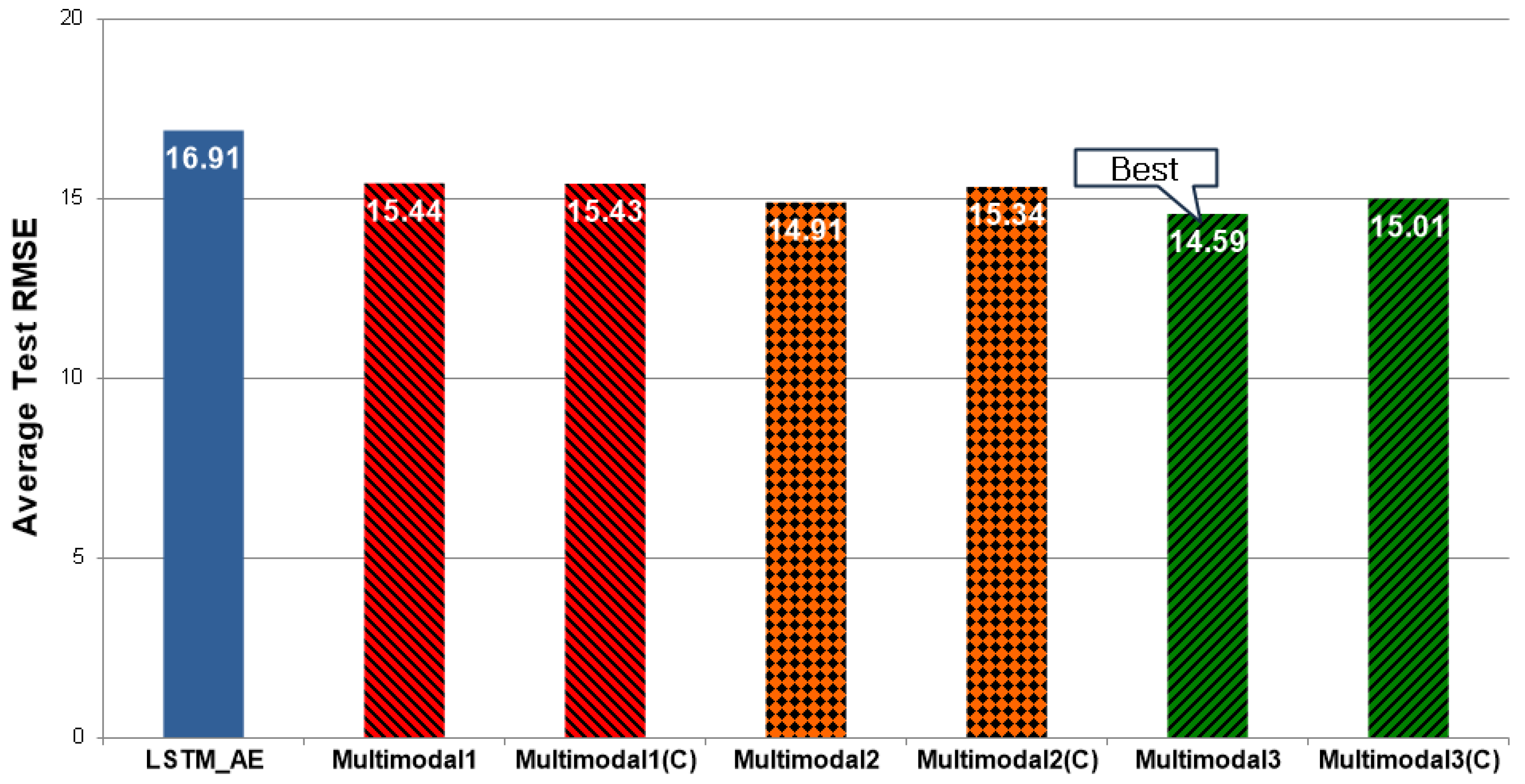

Figure 12 shows the average Test RMSE (Average of Rank) of each model shown in Table 3. The RMSE of LSTM_AE, which processes only numerical data, was 16.91, and the RMSE of Multimodal1 (LSTM_AE + CNN) was 15.43, a decrease of 8.75% compared with LSTM_AE. The RMSE of Multimodal2 (LSTM_AE + VGG16) was 15.34, a decrease of 0.58% compared with Multimodal1. The RMSE of Multimodal3 (LSTM_AE + VGG19) was 15.01, a decrease of 2.15% compared with Multimodal2.

Figure 13 compares the minimum Test RMSE for all experimental results in Table 2 and Table 3, where (C) denotes the use of cropped images. Overall, the performance was better when cropped images were applied, and Multimodal3(C) was the best among them.

The RMSE of Multimodal1(C) was 14.03, which decreased by 3.7% compared with Multimodal1. The RMSE of Multimodal2(C) was 13.88, which decreased by 2.11% compared with Multimodal2. The RMSE of Multimodal3(C) was 13.7, which decreased by 1.86% compared with Multimodal3.

Figure 14 compares the average Test RMSE for all experimental results in Table 2 and Table 3, where (C) denotes the use of cropped images. Unlike the minimum Test RMSE, the average Test RMSE did not improve when cropped images were applied. The RMSE of Multimodal1(C) was 15.43, which is very similar to that of Multimodal1, and the RMSE of Multimodal2(C) was 15.34, an increase of 2.88% compared with Multimodal2. The RMSE of Multimodal3(C) was 15.01, an increase of 2.88% compared with Multimodal3.

Combining the experimental results, the multimodal deep learning model (Multimodal1, Multimodal2, Multimodal3) using both numerical and image data performed better than a single deep learning model (LSTM_AE) using only numerical data.

If the image data is the original image, the minimum Test RMSE of the multimodal deep learning model decreased by approximately 8.71–12.53% compared with the single deep learning model, and the average Test RMSE of the multimodal deep learning model was reduced by approximately 8.69–13.72% compared with the single deep learning model. If the image data is the cropped image, the minimum Test RMSE of the multimodal deep learning model was decreased by approximately 12.09–14.16% compared with the single deep learning model, and the average Test RMSE of the multimodal deep learning model was lowered by approximately 8.75–11.24% compared with the single deep learning model.

In addition, from the viewpoint of minimum Test RMSE of the multimodal deep learning model, when the cropped image was used, it decreased by approximately 1.86–3.7% compared with when the original image was used, but from the viewpoint of average Test RMSE of the multimodal deep learning model, it increased by approximately 0–2.88%.
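The mixed effect of cropping can be checked directly from the RMSE values quoted in this section. The sketch below computes the signed change of each cropped-image result relative to its original-image counterpart; the data structure and function names are hypothetical, and the numbers are the two-decimal values stated in the text, so the output agrees with the quoted percentages only to within rounding.

```python
# Test RMSE values as stated in the text for Tables 2 and 3.
REPORTED = {
    "minimum": {
        "original": {"Multimodal1": 14.57, "Multimodal2": 14.18, "Multimodal3": 13.96},
        "cropped": {"Multimodal1": 14.03, "Multimodal2": 13.88, "Multimodal3": 13.70},
    },
    "average": {
        "original": {"Multimodal1": 15.44, "Multimodal2": 14.91, "Multimodal3": 14.59},
        "cropped": {"Multimodal1": 15.43, "Multimodal2": 15.34, "Multimodal3": 15.01},
    },
}


def percent_change(reference, value):
    """Signed relative change in percent; negative means the RMSE went down."""
    return (value - reference) / reference * 100.0


def cropped_vs_original(metric):
    """Change of each cropped-image RMSE relative to the original-image RMSE."""
    original = REPORTED[metric]["original"]
    cropped = REPORTED[metric]["cropped"]
    return {name: percent_change(original[name], cropped[name]) for name in original}


if __name__ == "__main__":
    for metric in ("minimum", "average"):
        for name, change in cropped_vs_original(metric).items():
            print(f"{metric:8s} {name}: {change:+.2f}%")
```

A negative sign for every model under the minimum metric and a positive sign for Multimodal2 and Multimodal3 under the average metric corresponds to the pattern summarized above: cropping helps the best run but not the typical run.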