Abstract

Asteroseismology studies the internal physical structure of stars by analyzing their solar-like oscillations, observed as seismic waves and frequency spectra. Many such stars oscillate through the same physical processes as the Sun but are more evolved, having reached the red-giant branch (RGB) that represents the Sun's future. Distinguishing RGB stars from helium-burning (HeB) stars is an important problem in stellar astrophysics. A star joins the RGB after exhausting the hydrogen in its core: it expands and burns hydrogen in a shell around its helium core until helium ignites in the core, after which it is a HeB star. According to a recent study of NASA Kepler mission data, about 7000 helium-burning red giants have been observed. An automated system to classify RGB and HeB stars would therefore help astronomers. The main aim of this study is to classify RGB and HeB stars in asteroseismology using a deep learning approach. A novel deep learning approach based on bidirectional gated recurrent units and a recurrent neural network (BiGR) is proposed. The proposed model achieved an accuracy of 93% for asteroseismology classification and outperforms other state-of-the-art studies. The analyzed fundamental properties of RGB and HeB stars are the frequency separation of modes with the same degree and consecutive order, the frequency of maximum oscillation power, and the location of the l = 0 mode. An Asteroseismology Exploratory Data Analysis (AEDA) is applied to find the critical fundamental parameters and patterns that can be inferred from the asteroseismology dataset. Our key findings are a novel classification model and an analysis of the factors underlying the formation of HeB and RGB stars. The analysis indicated that the likelihood of HeB increases when the value of the feature Numax is high and the value of the feature Epsilon is low. Our study can help astronomers and analysts of stellar oscillations in their research.

1. Introduction

Asteroseismology is the study of oscillations in stars [1]. A star's different oscillation modes are sensitive to different parts of the star, so they inform astronomers about its internal structure, which cannot be determined directly from overall properties such as brightness and surface temperature. Asteroseismology is closely related to helioseismology [2], the study of oscillations of the Sun specifically. Because the solar surface can be resolved, helioseismology has access to qualitatively different information than asteroseismology of other stars.

Stellar evolution refers to how stars change with time [3]. The primary factor that determines how a star evolves is its mass; in particular, the evolution of a star after the red-giant phase depends on its mass [4]. A star joins the RGB when it has exhausted the hydrogen in its core and expands. RGB stars are cool, bright, evolved stars with a helium core surrounded by a hydrogen-burning shell. When the core becomes hot enough, helium ignites in it, and the star becomes a helium-burning (HeB) star. The oscillation amplitudes of RGB stars range from a few tens to thousands of parts per million. A red giant represents one of the final stages of stellar evolution. In about five billion years, our Sun will become a red giant; it will expand and engulf the inner planets, possibly even Earth [5].

The change in the Sun may also be a source of hope for other planets. The habitable zone is the range of distances from a star at which liquid water can exist on a planet's surface. When a star becomes a red giant, its habitable zone changes. Because stars can remain red giants for a billion years, life may be able to arise on orbiting planets and moons that receive some warmth [6].

According to a recent study of NASA Kepler mission data, about 7000 helium-burning red giants have been observed [7]. RGB stars swell to 62 million to 620 million miles in diameter, 100 to 1000 times wider than our Sun. Because their energy is spread over such a large area, their surface temperatures are relatively cool: from 4000 to 5800 degrees Fahrenheit, slightly over half as hot as the Sun. This lower temperature makes them shine toward the red end of the spectrum.

Deep learning is a subfield of machine learning [8] and a core element of data science. Deep learning [9] algorithms are built on artificial neural networks, which are inspired by the working of the human brain. Deep learning models are used for automated predictive modeling tasks [10] and can solve pattern recognition problems quickly with little human involvement [11]. An increasing number of companies use deep learning to create new business models. Applications of deep learning include language translation [12], image classification [13], speech recognition [14], asteroseismology [15], neuroscience [16], and many more.

Artificial neural networks [17] consist of layers of nodes. The nodes in each layer are connected to the nodes in adjacent layers, forming a fully connected network graph [18]. Signals travel between nodes along weighted connections, and the final output layer combines the weighted signals to produce an output. Our study uses a deep-learning-based approach for classification in asteroseismology and makes the following main contributions:

- A novel deep learning model based on bidirectional gated recurrent units and a recurrent neural network (BiGR) is proposed to classify RGB (red-giant branch) and HeB (helium-burning) stars;

- An Asteroseismology Exploratory Data Analysis (AEDA) is conducted to find patterns in the dataset features and to visualize the data for a better understanding of the asteroseismic quantities;

- Our approach is compared with past approaches to asteroseismology classification, and it outperforms other state-of-the-art studies;

- The layer architecture of the BiGR model is analyzed to describe the stack of layers used for model building and classification;

- Hyperparameter tuning is applied to obtain the best-fit parameters for the proposed model to classify RGB and HeB stars;

- The effect of the number of training epochs is analyzed in terms of per-epoch accuracy, loss, training time, and validation performance;

- The loss and accuracy of the proposed model are analyzed across the training epochs;

- A ROC (receiver operating characteristic) curve analysis evaluates the proposed model at different threshold levels for the asteroseismology target category.

The rest of this study is organized as follows: Section 2 summarizes the related literature and compares it with our proposed approach. Section 3 describes the research methodology. Section 4 presents the proposed BiGR approach for classification in asteroseismology. Section 5 reports the validation and evaluation of the results. Section 6 presents our conclusions and the study's limitations.

2. Related Work

This section reviews the literature related to asteroseismology research. Several past approaches to asteroseismology classification are analyzed, together with the learning techniques they used and evaluated for the classification task.

A supervised one-dimensional convolutional neural network (1D CNN) [19], a classical deep learning model, was applied to classify red-giant stars in asteroseismology [20]. The 1D CNN was trained on an asteroseismology dataset using visual features [21] from the oscillation spectra. These features help define the oscillation properties of the stars and the onset of core helium burning. The model was tested on classifying helium-burning red giants.

A new method was proposed to determine the evolutionary phase of HeB and RGB stars for classification [22]. Asteroseismic data provide the information necessary for this classification. The study presents an automated way of finding the evolutionary state by analyzing the power spectrum, and the method was efficient in producing results for a large number of stars. It used the structure of dipole-mode oscillations, which have a mixed character in RGB stars, for the classification.

A deep-learning-based model [23] for network intrusion detection was proposed in [24]. It combined a Convolutional Neural Network (CNN) and Gated Recurrent Units (GRU). The ADASYN technique [21] was used to balance the datasets, and feature selection was performed with the Random Forest model [25]. The model was evaluated on three datasets: NSL-KDD, UNSW_NB15, and CIC-IDS2017.

Depression classification of Twitter data using recurrent neural networks (RNN) was proposed in [26]. Tweets were scraped for model training and testing, and depression was analyzed from the textual data. The work aims to protect people from such disorders by developing a diagnostic classification system.

Table 1 presents the literature summary and a comparative analysis of our study with existing approaches. It lists each study's year of publication, the dataset used for model building, the type of learning technique, the proposed approach, and remarks.

Table 1.

Literature summary and comparative analysis of other state-of-the-art studies and our proposed research.

3. Methodology

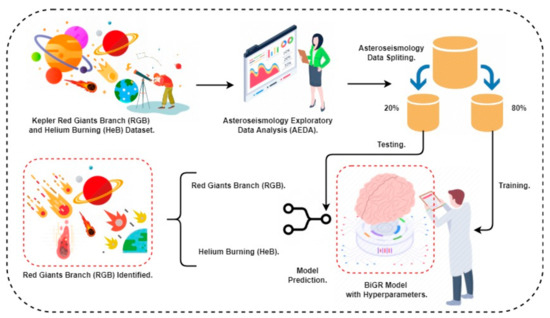

The asteroseismology dataset is used to classify red-giant branch and helium-burning stars. An Asteroseismology Exploratory Data Analysis (AEDA) is performed to obtain valuable insights from the dataset features. The dataset is then split into a training portion (80%) and a testing portion (20%). The novel BiGR model is trained on the training portion and evaluated on the testing portion. The hyperparameters of the proposed model are then tuned. Finally, the tuned BiGR model is used for prediction in asteroseismology classification, as shown in Figure 1.

Figure 1.

The methodology architecture of the proposed study in the context of asteroseismology.

3.1. Asteroseismology Dataset

An existing asteroseismology dataset is used for RGB (red-giant branch) and HeB (helium-burning) classification. The data were collected with space telescope sensors [27]. The dataset columns are described in Table 2, and the first records are shown in Table 3. POP is the target column and encodes the population as 0 or 1: 0 for RGB and 1 for HeB. The column Dnu (format F8.5, in μHz) is the mean large frequency separation Δν of modes with the same degree and consecutive order. The column Numax (format F9.5, in μHz) is the frequency of maximum oscillation power, ν_max. The column Epsilon (format F7.3) is the location ε of the l = 0 mode. The dataset occupies 31.4 KB of memory. It is publicly available for research purposes on Kaggle [28] and from the Royal Astronomical Society (RAS) via Oxford Academic [29].

Table 2.

Information on the asteroseismology dataset features.

Table 3.

The first five records of the asteroseismology dataset.
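The column layout described above can be loaded with pandas as in the following minimal sketch. It assumes a CSV export with one column per variable; the helper name `load_asteroseismology` and the case-insensitive header matching are illustrative assumptions, not part of the original study. The two sample rows are rounded from the class means in Table 5, not actual records.

```python
import io

import pandas as pd

FEATURES = ["Dnu", "Numax", "Epsilon"]  # inputs: Δν (μHz), ν_max (μHz), ε
TARGET = "POP"                          # 0 = RGB, 1 = HeB


def load_asteroseismology(source):
    """Read a CSV path or file-like object and return (X, y).

    Hypothetical helper: column names are matched case-insensitively,
    so a copy whose headers use other capitalization is still accepted.
    """
    df = pd.read_csv(source)
    canonical = {name.lower(): name for name in FEATURES + [TARGET]}
    df = df.rename(columns=lambda c: canonical.get(c.strip().lower(), c))
    missing = [c for c in FEATURES + [TARGET] if c not in df.columns]
    if missing:
        raise ValueError(f"missing columns: {missing}")
    X = df[FEATURES].astype("float64")
    y = df[TARGET].astype("int64")
    if not set(y.unique()) <= {0, 1}:
        raise ValueError("POP must contain only 0 (RGB) or 1 (HeB)")
    return X, y


# In-memory sample; values are rounded class means from Table 5.
sample = io.StringIO("POP,Dnu,numax,epsilon\n0,9.2,106.5,0.23\n1,4.4,39.0,0.77\n")
X, y = load_asteroseismology(sample)
print(X.shape, y.tolist())
```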

3.2. Asteroseismology Exploratory Data Analysis (AEDA)

The Asteroseismology Exploratory Data Analysis (AEDA) is applied to obtain valuable insights and patterns from the asteroseismology dataset. The AEDA uses statistical analysis, feature correlation graphs, bar charts, density graphs, 3D plots, and scatter plots, which help us analyze the fundamental properties of RGB and HeB stars.

We first conducted a statistical analysis of the asteroseismology features and counted the instances in the dataset. The dataset contains 1001 instances, as shown in Table 4. The mean of every feature is calculated; the mean is the arithmetic average of the values, calculated using Equation (1).

Table 4.

Statistical analysis of the asteroseismology features.

The means of POP, Dnu, Numax, and Epsilon are 0.712288, 5.774810, 58.441771, and 0.610774, respectively. The standard deviation (STD) measures how far, on average, the values lie from the mean; it is calculated using Equation (2). The STDs of POP, Dnu, Numax, and Epsilon are 0.452923, 2.998103, 43.425561, and 0.342518, respectively.
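Equations (1) and (2) are not reproduced in this version of the text. Assuming they take their standard form, the mean and the sample standard deviation of a feature with values x_1, …, x_n are given below. The n − 1 form is an inference from the reported numbers: 713 ones among 1001 POP values give a population standard deviation of about 0.4527, and multiplying by √(1001/1000) gives about 0.4529, matching the reported 0.452923.

```latex
\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i \qquad (1)

s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^2} \qquad (2)
```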

The minimum value of every feature is also calculated: the minimum of POP is 0, of Dnu 2.5008, of Numax 17.97978, and of Epsilon 0.00500. The lower (25%), median (50%), and upper (75%) percentiles are listed in Table 4. The maximum values are 1 for POP, 17.69943 for Dnu, 239.64848 for Numax, and 1 for Epsilon.

The means of Dnu, Numax, and Epsilon are also calculated per target label. For target label 0 (RGB), the means of Dnu, Numax, and Epsilon are 9.231920, 106.477706, and 0.22722, respectively. For target label 1 (HeB), they are 4.378389, 39.038756, and 0.765701, as shown in Table 5.

Table 5.

Mean feature values grouped by the target label POP.
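The statistics in Table 4 and the per-class means in Table 5 correspond to standard pandas operations; the sketch below shows one way to compute them. The function name `summarize` is hypothetical, and the toy frame is for illustration only, not the study's data. Note that `describe()` reports the sample (n − 1) standard deviation.

```python
import pandas as pd


def summarize(df: pd.DataFrame, target: str = "POP"):
    """Return (overall statistics, per-class means, per-class counts).

    describe() reports count, mean, std (sample, n - 1), min, the 25%,
    50% and 75% percentiles, and max for every numeric column.
    """
    overall = df.describe()
    class_means = df.groupby(target).mean()            # one row per POP label
    class_counts = df[target].value_counts().sort_index()
    return overall, class_means, class_counts


# Toy frame for illustration only; it is not the study's data.
toy = pd.DataFrame({
    "POP": [0, 1, 1, 1],
    "Dnu": [9.0, 4.0, 5.0, 3.0],
    "Numax": [100.0, 40.0, 38.0, 39.0],
    "Epsilon": [0.2, 0.8, 0.7, 0.9],
})
overall, class_means, class_counts = summarize(toy)
print(overall.loc[["mean", "std"]])
print(class_means)
print(class_counts)
```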

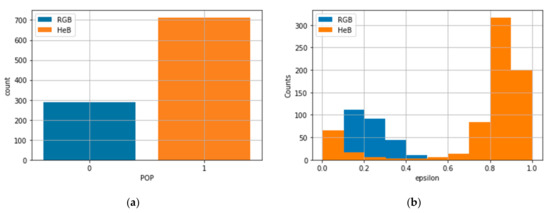

In the asteroseismology dataset, the POP column contains 713 HeB and 288 RGB instances, as shown in Figure 2a. A histogram of the Epsilon feature for RGB and HeB stars is shown in Figure 2b. RGB stars are concentrated at Epsilon values between 0.1 and 0.4, whereas the highest HeB bin lies between 0.8 and 1.

Figure 2.

The asteroseismology feature analysis among the target category is listed as (a) the target class bar chart distribution analysis; (b) the Epsilon feature distribution analysis.

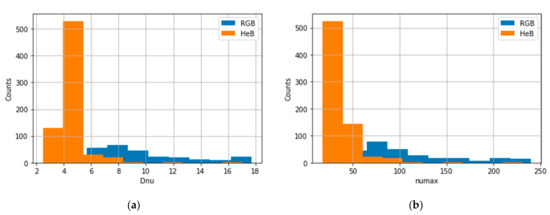

The frequency distributions of HeB and RGB stars over the Dnu feature are shown as a histogram in Figure 3a. HeB stars are most likely for Dnu values between 2 and 6. In the Numax feature, HeB stars are most frequent below 60, as shown in Figure 3b.

Figure 3.

The asteroseismology feature analysis among the target category is listed as (a) the Dnu feature distribution analysis; (b) the Numax feature distribution analysis.

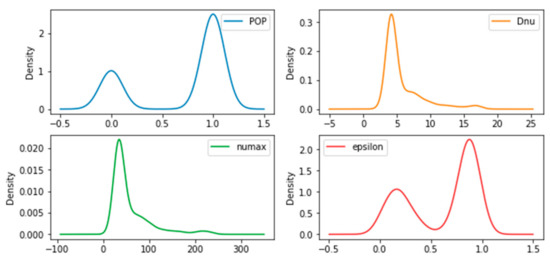

To analyze the distributions of the asteroseismology features, density graphs are plotted in Figure 4. In the leftmost plot of the first row, the POP density is highest between 0.6 and 1.3, because most POP values are 1. The rightmost plot of the first row shows Dnu, for which most instance values are around 5. The leftmost plot of the second row shows that Numax values are concentrated between 0 and 100. The rightmost plot of the second row shows that the highest density of Epsilon is 5 to 12.

Figure 4.

The density graphs analysis of asteroseismology dataset features in time series.

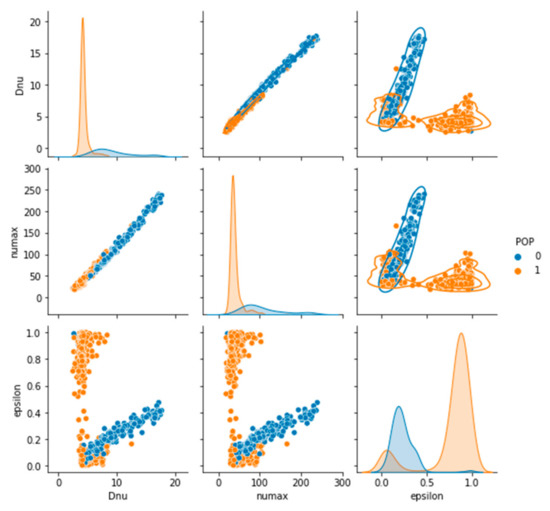

Pair plots help identify the features that best explain the relationship between two variables or that form the most distinct clusters. A pair plot combines two basic figures: the histograms on the diagonal show the distribution of each single variable, while the scatter plots in the upper and lower triangles show the relationship between pairs of variables, as shown in Figure 5. The leftmost plot in the third row shows the relationship between Dnu and Epsilon: HeB stars occur when Dnu is less than 10, and RGB stars are more likely when Epsilon is less than 0.6. The rightmost plot of the third row shows the histogram of Epsilon: the likelihood of HeB increases as Epsilon rises above 0.5, and the likelihood of RGB increases when Epsilon remains below 0.5.

Figure 5.

The joint plot analysis of dataset features in hue with POP.

Three-dimensional scatter plots of the asteroseismology features are shown in Figure 6. In Figure 6a, the likelihood of HeB increases when Numax is above 50 and Epsilon is less than 0.6, whereas RGB stars occur when Epsilon is less than 0.4. In Figure 6b, the likelihood of HeB increases when Dnu is less than 8 and Epsilon is higher than 0.2, whereas RGB stars occur when Epsilon is less than 0.6 and Dnu is higher than 4.

Figure 6.

The 3-dimensional (3D) analysis of Epsilon, Numax and Dnu with the POP is conducted. The analysis is listed as: (a) the 3D analysis of Epsilon and Numax by target POP; (b) the 3D analysis of Epsilon and Dnu by target POP.

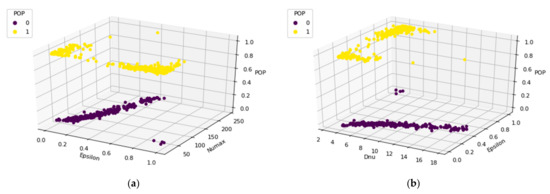

3.3. Asteroseismology Dataset Feature Analysis

A correlation matrix is a straightforward means of summarizing the linear relationships between all features in a dataset. The asteroseismology dataset features are analyzed using the feature correlation graph in Figure 7. Dnu and Numax have a strong positive relationship, whereas the correlation matrix shows little association between POP and Epsilon.

Figure 7.

The asteroseismology dataset features correlation analysis.

Based on the correlation analysis, all features are retained for RGB and HeB classification: Epsilon, Numax, and Dnu are used as input features and POP as the target label for model training and testing. The proposed approach achieves efficient results with these features.
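A correlation matrix like the one in Figure 7 can be computed with pandas; the minimal sketch below also ranks the input features by absolute correlation with POP. The function name and the synthetic data are illustrative assumptions. Pearson correlation only captures linear association, so a low |r| does not by itself mean a feature is uninformative for a nonlinear classifier.

```python
import numpy as np
import pandas as pd


def correlation_summary(df: pd.DataFrame, target: str = "POP"):
    """Pearson correlation matrix plus input features ranked by |r| with the target."""
    corr = df.corr(method="pearson")
    ranking = corr[target].drop(target).abs().sort_values(ascending=False)
    return corr, ranking


# Synthetic data (not the study's): Numax is built as an exact multiple of Dnu,
# so their Pearson correlation is 1 by construction.
rng = np.random.default_rng(0)
dnu = rng.uniform(2.5, 17.7, size=200)
demo = pd.DataFrame({
    "Dnu": dnu,
    "Numax": 10.0 * dnu,
    "Epsilon": rng.uniform(0.0, 1.0, size=200),
    "POP": (dnu < 6.0).astype(int),
})
corr, ranking = correlation_summary(demo)
print(corr.round(2))
print(ranking)
```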

3.4. Asteroseismology Dataset Splitting

The asteroseismology data are split into two portions, training and testing data. Splitting allows the model to be evaluated on unseen data, which helps detect overfitting. An 80:20 splitting ratio is used in this study: 80% of the data is used for model training, and 20% is used to evaluate the model on new data. This split is necessary to assess how well our learning model generalizes.
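The 80:20 split can be sketched with scikit-learn's `train_test_split`. Stratifying on POP and the fixed random seed are assumptions of this sketch; the text specifies only the ratio. The placeholder arrays only reproduce the dataset's size and class balance.

```python
import numpy as np
from sklearn.model_selection import train_test_split


def split_80_20(X, y, seed=42):
    """80:20 train/test split.

    stratify=y (keep the RGB/HeB ratio in both parts) and the seed are
    assumptions of this sketch; the text only specifies the 80:20 ratio.
    """
    return train_test_split(X, y, test_size=0.2, random_state=seed, stratify=y)


# Placeholder arrays with the dataset's size and class balance (713 HeB, 288 RGB).
X = np.zeros((1001, 3))
y = np.array([1] * 713 + [0] * 288)
X_train, X_test, y_train, y_test = split_80_20(X, y)
print(len(X_train), len(X_test))  # 800 201
```

With 1001 instances, `test_size=0.2` gives 201 test samples because scikit-learn rounds the test size up, which agrees with the support of 201 in the classification report.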

4. Proposed BiGR Approach

The novel BiGR approach is based on a hybrid stack of bidirectional Gated Recurrent Unit (GRU) and Recurrent Neural Network (RNN) layers. The proposed approach is described in this section.

The Gated Recurrent Unit (GRU) [30] is a variant of Long Short-Term Memory (LSTM) [31]; the two are designed similarly and often give comparably good results. The GRU mitigates the vanishing gradient problem of classical recurrent neural networks by using gates. Its two main blocks, the reset gate and the update gate, decide which information is passed to the output, which allows the GRU to keep long-term information. A Bi-directional GRU (Bi-GRU) [32] is a sequence model built from two GRUs: one processes the input in the forward direction and the other in the backward direction. Each GRU has only two gates, the update gate and the reset gate, which are calculated by Equations (3) and (4). The current and final memory content are calculated as expressed in Equations (5) and (6).
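Equations (3) to (6) are not reproduced in this version of the text. The NumPy sketch below assumes the standard GRU formulation of Cho et al.: update gate z_t, reset gate r_t, candidate (current) memory h̃_t, and final memory h_t = z_t ⊙ h_{t−1} + (1 − z_t) ⊙ h̃_t, the interpolation convention Keras also uses (some texts swap z_t and 1 − z_t). Keras's default GRU applies the reset gate after the recurrent matrix product (`reset_after=True`), so its values differ slightly. The bidirectional wrapper runs one GRU forward and one backward and concatenates their states, which is why 512 units per direction yield the 1024-wide output described in Section 4.2. The weight initialization and sizes are illustrative.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def init_gru(n_in, n_units, rng):
    """Random weights for one GRU: W_* act on x_t, U_* on h_{t-1}."""
    params = {}
    for gate in ("z", "r", "h"):
        params[f"W_{gate}"] = rng.normal(0.0, 0.1, (n_in, n_units))
        params[f"U_{gate}"] = rng.normal(0.0, 0.1, (n_units, n_units))
        params[f"b_{gate}"] = np.zeros(n_units)
    return params


def gru_step(x_t, h_prev, p):
    z = sigmoid(x_t @ p["W_z"] + h_prev @ p["U_z"] + p["b_z"])  # update gate
    r = sigmoid(x_t @ p["W_r"] + h_prev @ p["U_r"] + p["b_r"])  # reset gate
    # candidate (current) memory content: the reset gate scales h_{t-1} first
    h_cand = np.tanh(x_t @ p["W_h"] + (r * h_prev) @ p["U_h"] + p["b_h"])
    # final memory content: interpolate between the old state and the candidate
    return z * h_prev + (1.0 - z) * h_cand


def run_gru(xs, p):
    """xs: (batch, time, features) -> hidden states (batch, time, units)."""
    n_units = p["U_z"].shape[0]
    h = np.zeros((xs.shape[0], n_units))
    states = []
    for t in range(xs.shape[1]):
        h = gru_step(xs[:, t, :], h, p)
        states.append(h)
    return np.stack(states, axis=1)


def bidirectional_gru(xs, p_fwd, p_bwd):
    forward = run_gru(xs, p_fwd)
    # process the reversed sequence, then flip back to align the time steps
    backward = run_gru(xs[:, ::-1, :], p_bwd)[:, ::-1, :]
    return np.concatenate([forward, backward], axis=-1)


rng = np.random.default_rng(0)
xs = rng.normal(size=(4, 3, 64))           # (batch, 3 time steps, 64 features)
out = bidirectional_gru(xs, init_gru(64, 8, rng), init_gru(64, 8, rng))
print(out.shape)                           # (4, 3, 16)
```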

Recurrent Neural Networks (RNN) [33] are best known for sequence modeling tasks. RNNs are trained with backpropagation through time, a form of the backpropagation algorithm that computes gradients for sequence data. In an RNN, the input data cycle through a loop: the output of the hidden layer at one step is fed back as input at the next step. An RNN [34] uses the same activation function, weights, and biases at every time step. The RNN model is trained on errors propagated backward from its output layer to its input layer. By saving each step's output and feeding it back, the RNN handles sequential data by memorizing previous inputs. Equations (7) and (8) give the output of an RNN layer, where Y_t is the m × n_neurons matrix of layer outputs at time step t for a mini-batch of m instances, X_t is the m × n_inputs matrix of inputs for all instances, W_x contains the weights for the inputs, W_y contains the weights for the outputs of the previous time step, and b is the bias term of the neurons.
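Equations (7) and (8) are likewise not reproduced here. Assuming the usual mini-batch form Y_t = φ(X_t W_x + Y_{t−1} W_y + b), which can also be written φ([X_t Y_{t−1}] W + b) with W the vertical stack of W_x and W_y, the recurrence can be sketched in NumPy as below. Choosing tanh as φ, the zero initial state, and the array sizes are assumptions; the same weights are reused at every time step.

```python
import numpy as np


def rnn_forward(X, W_x, W_y, b, activation=np.tanh):
    """Unroll a basic RNN layer over X of shape (m, T, n_inputs).

    Returns the m x n_neurons output matrix Y_t of the last time step.
    """
    m, T, _ = X.shape
    Y = np.zeros((m, W_y.shape[0]))                     # Y_{t-1} before the first step
    for t in range(T):
        Y = activation(X[:, t, :] @ W_x + Y @ W_y + b)  # same weights at every step
    return Y


def rnn_forward_stacked(X, W_x, W_y, b, activation=np.tanh):
    """Same recurrence written with one weight matrix W = [W_x; W_y]."""
    W = np.vstack([W_x, W_y])
    m, T, _ = X.shape
    Y = np.zeros((m, W_y.shape[0]))
    for t in range(T):
        Y = activation(np.hstack([X[:, t, :], Y]) @ W + b)
    return Y


rng = np.random.default_rng(1)
m, T, n_inputs, n_neurons = 5, 3, 4, 6
X = rng.normal(size=(m, T, n_inputs))
W_x = rng.normal(scale=0.3, size=(n_inputs, n_neurons))
W_y = rng.normal(scale=0.3, size=(n_neurons, n_neurons))
b = np.zeros(n_neurons)
Y = rnn_forward(X, W_x, W_y, b)
print(Y.shape)                                              # (5, 6)
print(np.allclose(Y, rnn_forward_stacked(X, W_x, W_y, b)))  # True
```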

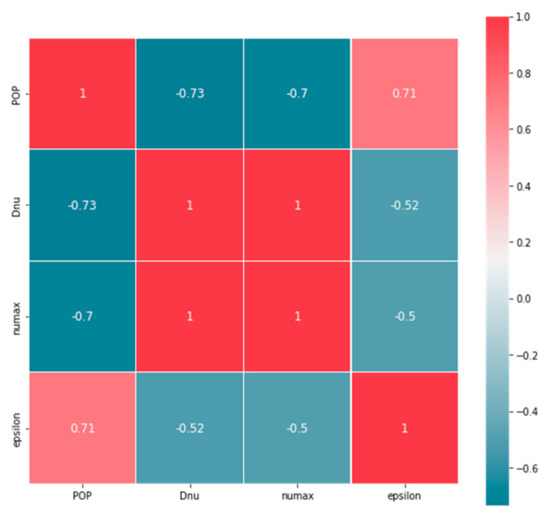

4.1. The BiGR Model Hyperparameter Configuration

The hyperparameter configuration [35] of the proposed BiGR model is given in Table 6. The Adam optimizer is used with a learning rate of 0.001, binary cross-entropy is the loss function, and accuracy is the performance metric. The model's layer stack has 7,955,183 trainable parameters in total. The first layer of the model is a feature embedding layer of size 50,000. The second layer is a bidirectional gated recurrent unit layer with 512 units. The next layer is a recurrent neural network layer with 1024 units. It is followed by a family of dense layers with the ReLU activation function. The last layer is a dense output layer with one unit and the sigmoid activation function.

Table 6.

The Deep BiGR model hyperparameter configuration parameters.

4.2. The BiGR Layers Architecture Analysis

The layer stack architecture of the proposed BiGR approach is visualized in Figure 8, with the input and output shapes of each layer. The first layer is the input layer, which has input and output shape (None, 3) and the float data type. It feeds the embedding layer, which has an input shape of (None, 3) and an output shape of (None, 3, 64). A bidirectional GRU layer then receives the (None, 3, 64) output of the embedding layer and produces an output of shape (None, 3, 1024). The next layer is the RNN layer, with an input shape of (None, 3, 1024) and an output shape of (None, 1024). A family of dense layers follows sequentially. The last layer is the output layer, with an input shape of (None, 32) and an output shape of (None, 1).

Figure 8.

The architectural analysis of our proposed BiGR approach.
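A minimal tf.keras sketch of the layer stack in Sections 4.1 and 4.2 follows. Treating the embedding size of 50,000 as the embedding input dimension, the ReLU dense sizes (128, 64, 32), and the layer names are assumptions, so the parameter count need not match the reported 7,955,183. Keras's `Embedding` layer treats its inputs as integer indices, so continuous feature values are converted to integers before the lookup.

```python
import tensorflow as tf


def build_bigr(vocab_size=50_000, embed_dim=64, gru_units=512, rnn_units=1024,
               dense_units=(128, 64, 32), learning_rate=1e-3):
    """Sketch of the described BiGR stack; dense_units is a hypothetical choice."""
    inputs = tf.keras.Input(shape=(3,), dtype="float32", name="features")
    # (None, 3) -> (None, 3, 64); Embedding looks up integer indices
    x = tf.keras.layers.Embedding(vocab_size, embed_dim, name="embedding")(inputs)
    # (None, 3, 64) -> (None, 3, 2 * 512) by concatenating both directions
    x = tf.keras.layers.Bidirectional(
        tf.keras.layers.GRU(gru_units, return_sequences=True), name="bigru")(x)
    # (None, 3, 1024) -> (None, 1024): only the last time step is returned
    x = tf.keras.layers.SimpleRNN(rnn_units, name="rnn")(x)
    for i, units in enumerate(dense_units):
        x = tf.keras.layers.Dense(units, activation="relu", name=f"dense_{i}")(x)
    # (None, 32) -> (None, 1): predicted probability of HeB (POP = 1)
    outputs = tf.keras.layers.Dense(1, activation="sigmoid", name="heb_probability")(x)
    model = tf.keras.Model(inputs, outputs, name="bigr_sketch")
    model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
                  loss="binary_crossentropy", metrics=["accuracy"])
    return model


model = build_bigr()
model.summary()
```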

5. Results and Evaluations

This section presents the results and evaluations of the proposed approach for asteroseismology classification. Data analysis and model building were performed in Python with TensorFlow. The performance evaluation metrics are accuracy, precision, recall, F1 score, ROC accuracy score, and log loss, and they are used to validate the proposed approach.

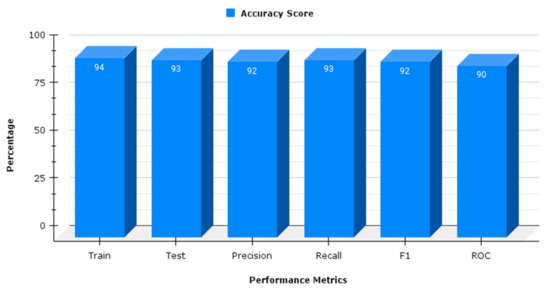

The accuracy score is the proportion of correct predictions made by the model, so it shows how well the model predicts the target label. The accuracy of our model is 94% on the training data and 93% on the testing data; the small gap indicates that the model generalizes well. The accuracy score is defined in Equation (9).

Recall and precision measure the relevance of the model's outputs. Precision measures how reliable the model is when it classifies a sample as positive: it is the fraction of predicted positive samples that are truly positive. Recall is the fraction of all actual positive samples that the model correctly identifies as positive. The precision score of our proposed model is 92%, and its recall score is 93%. Recall and precision are expressed in Equations (10) and (11), respectively, where a true positive is a positive sample correctly classified as positive, a false negative is a positive sample incorrectly classified as negative, and a false positive is a negative sample incorrectly classified as positive.

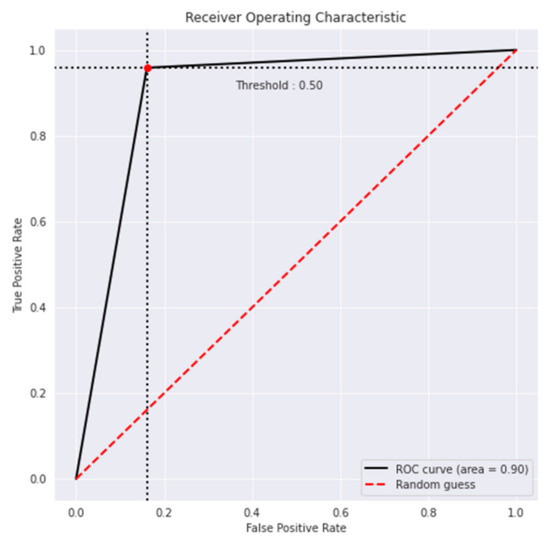

The F1 score summarizes predictive performance by combining recall and precision through their harmonic mean. The F1 score of our proposed model is 92%. A ROC curve analysis evaluates the model over different probability thresholds: the ROC curve shows the trade-off between sensitivity and specificity, and the ROC curve accuracy score is the area under this curve. The ROC curve accuracy score of our proposed model is 90%. The F1 score and ROC curve accuracy are expressed in Equations (12) and (13), respectively.
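Equations (9) to (12) are not reproduced in this version of the text. The plain-Python sketch below uses the standard definitions, accuracy = (TP + TN)/(TP + TN + FP + FN), precision = TP/(TP + FP), recall = TP/(TP + FN), and F1 = 2PR/(P + R), together with the log loss listed among the evaluation metrics. It treats HeB (POP = 1) as the positive class and uses a 0.5 decision threshold; both are assumptions, and the labels and probabilities are toy values. The headline figures reported in the text are weighted averages over both classes, which this sketch does not compute.

```python
import math


def confusion_counts(y_true, y_pred):
    """Counts for binary labels, with HeB (POP = 1) as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # negative predicted positive
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # positive predicted negative
    return tp, tn, fp, fn


def classification_metrics(y_true, y_pred):
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def log_loss(y_true, probs, eps=1e-15):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for t, p in zip(y_true, probs):
        p = min(max(p, eps), 1.0 - eps)
        total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return total / len(y_true)


y_true = [1, 1, 0, 0, 1]
probs = [0.9, 0.4, 0.2, 0.6, 0.8]
y_pred = [1 if p >= 0.5 else 0 for p in probs]   # 0.5 threshold (assumption)
print(classification_metrics(y_true, y_pred))
print(round(log_loss(y_true, probs), 4))
```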

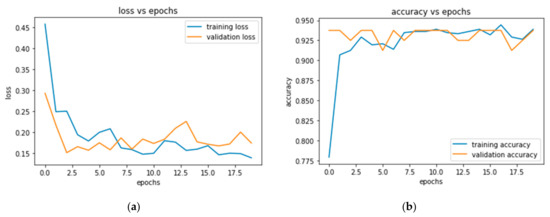

Figure 9 shows a bar chart of the performance metrics of the proposed approach. Table 7 shows the effect of the number of epochs during training of the proposed BiGR model in terms of the training time, training loss, accuracy, validation loss, and validation accuracy of each epoch. Training time and loss are highest in the first epoch, when the accuracy is lowest. As the number of epochs increases, the loss and per-epoch training time decrease while the accuracy increases, which supports the validity of the training process.

Figure 9.

The metric performance analysis of our proposed BiGR approach.

Table 7.

The performance effects of the number of epochs on the BiGR model.

The results of the proposed BiGR model without and with hyperparameter tuning are given in Table 8 and Table 9, respectively. The best accuracy scores were achieved by applying hyperparameter tuning to the proposed model. The best-fit parameters, which gave the highest score in asteroseismology classification, are reported.

Table 8.

The evaluation results of the proposed BiGR model without hyperparameter tuning.

Table 9.

The evaluation results of the proposed BiGR model with hyperparameter tuning.

The classification report of the proposed BiGR model is given in Table 10 and Table 11. It lists precision, recall, F1 score, and support for each category and for the average cases. In the weighted average case, precision is 92%, recall is 93%, the F1 score is 92%, and the support is 201 test samples.

Table 10.

The BiGR classification report analysis by the target category.

Table 11.

The BiGR classification report analysis by the average case.

Figure 10 shows the training and validation loss and accuracy of the proposed approach across epochs. As the number of epochs increases, the model loss decreases and both the training and validation accuracy increase. This analysis supports the performance of the proposed model during training.

Figure 10.

The time series analysis of proposed BiGR is represented as: (a) the time series analysis of proposed BiGR among the training loss and validation loss; (b) the time series analysis of proposed BiGR among the training accuracy and validation accuracy.

The ROC curve of the proposed BiGR model is shown in Figure 11. The ROC curve analysis determines the area under the curve: the larger the area, the better the model separates the two classes. The proposed model achieves a high area under the ROC curve, which supports its classification performance.
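The threshold sweep behind a ROC curve such as Figure 11, and the area under it, can be sketched in plain Python as below. The example assumes HeB (POP = 1) is the positive class and uses toy scores; `sklearn.metrics.roc_curve` and `sklearn.metrics.roc_auc_score` are equivalent library implementations.

```python
def roc_points(y_true, scores):
    """(FPR, TPR) pairs, sweeping the threshold from the highest score down."""
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    points = [(0.0, 0.0)]
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for t, s in zip(y_true, scores) if s >= thr and t == 1)
        fp = sum(1 for t, s in zip(y_true, scores) if s >= thr and t == 0)
        # FPR = 1 - specificity, TPR = sensitivity
        points.append((fp / n_neg, tp / n_pos))
    return points


def trapezoid_auc(points):
    """Area under a piecewise-linear ROC curve (trapezoidal rule)."""
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


y_true = [1, 1, 0, 0, 1]
scores = [0.9, 0.4, 0.2, 0.6, 0.8]
pts = roc_points(y_true, scores)
print(pts)
print(trapezoid_auc(pts))
```

Lowering the threshold can only add predicted positives, so the points move monotonically from (0, 0) to (1, 1), and the trapezoidal area equals the probability that a randomly chosen HeB star receives a higher score than a randomly chosen RGB star.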

Figure 11.

The ROC (receiver operating characteristic) curve analysis of POP at different threshold values.

Table 12 compares the performance of the proposed model with other state-of-the-art studies published from 2019 to 2022. The proposed approach achieved higher accuracy than these previously applied studies.

Table 12.

The performance of the proposed approach with other state-of-the-art studies.

6. Conclusions

This study proposed a novel deep learning model for classifying RGB and HeB stars in asteroseismology. The AEDA was applied to obtain critical insights and patterns from the asteroseismology dataset. The analyzed features are the frequency of maximum oscillation power, the frequency separation of modes with the same degree and consecutive order, and the mode location. The analysis indicated that the likelihood of HeB increases when the value of the feature Numax is high and the value of the feature Epsilon is low. The proposed BiGR model achieved an accuracy score of 93%, a precision score of 92%, a recall score of 93%, a ROC curve accuracy score of 90%, and an F1 score of 92%. The performance of the proposed model was validated and compared with previously applied studies, and it outperformed other state-of-the-art studies with high accuracy. The proposed approach can assist astronomers in analyzing stellar oscillations. A limitation of this study is the class imbalance in the dataset (713 HeB and 288 RGB instances); in future work, we will analyze and balance the dataset. Additionally, we will try to enlarge the training feature space and apply transfer learning models.

Author Contributions

Conceptualized the study, conducted the survey and data collection A.R. and F.Y.; supervision, data analysis and writing the manuscript A.R. and F.Y.; resources, data curation, funding acquisition, and project administration M.M.S.F., G.A., M.A. and K.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by University of Hafr Albatin, Saudi Arabia.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The supporting data for the findings of this study are available from the corresponding author on reasonable request.

Acknowledgments

The authors would like to thank all participants for their fruitful cooperation and support.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Aerts, C. Probing the interior physics of stars through asteroseismology. Rev. Mod. Phys. 2021, 93, 015001.

- Zhivanovich, I.; Solov’ev, A.A.; Efremov, V.I. Differential Rotation of the Sun, Helioseismology Data, and Estimation of the Depth of Superconvection Cells. Geomagn. Aeron. 2021, 61, 940–948.

- Di Luzio, L.; Fedele, M.; Giannotti, M.; Mescia, F.; Nardi, E. Stellar evolution confronts axion models. J. Cosmol. Astropart. Phys. 2022, 2022, 35.

- Merlov, A.; Bear, E.; Soker, N. A Red Giant Branch Common-envelope Evolution Scenario for the Exoplanet WD 1856 b. Astrophys. J. Lett. 2021, 915, L34.

- Tillman, N.T. Red Giant Stars: Facts, Definition & the Future of the Sun | Space. Available online: https://www.space.com/22471-red-giant-stars.html (accessed on 15 May 2022).

- COSMOS. Stellar Evolution. Available online: https://astronomy.swin.edu.au/cosmos/s/Stellar+Evolution (accessed on 15 May 2022).

- Li, Y.; Bedding, T.R.; Murphy, S.J.; Stello, D.; Chen, Y.; Huber, D.; Joyce, M.; Marks, D.; Zhang, X.; Bi, S.; et al. Discovery of post-mass-transfer helium-burning red giants using asteroseismology. Nat. Astron. 2022, 6, 673–680.

- Janiesch, C.; Zschech, P.; Heinrich, K. Machine learning and deep learning. Electron. Mark. 2021, 31, 685–695.

- Bartlett, P.L.; Montanari, A.; Rakhlin, A. Deep learning: A statistical viewpoint. Acta Numer. 2021, 30, 87–201.

- Osipov, A.; Pleshakova, E.; Gataullin, S.; Korchagin, S.; Ivanov, M.; Finogeev, A.; Yadav, V. Deep Learning Method for Recognition and Classification of Images from Video Recorders in Difficult Weather Conditions. Sustainability 2022, 14, 2420.

- Gul, F.; Mir, I.; Alarabiat, D.; Alabool, H.M.; Abualigah, L.; Mir, S. Implementation of bio-inspired hybrid algorithm with mutation operator for robotic path planning. J. Parallel Distrib. Comput. 2022, 169, 171–184.

- Ananthanarayana, T.; Srivastava, P.; Chintha, A.; Santha, A.; Landy, B.; Panaro, J.; Webster, A.; Kotecha, N.; Sah, S.; Sarchet, T.; et al. Deep Learning Methods for Sign Language Translation. ACM Trans. Access. Comput. 2021, 14, 1–30.

- Chang, Y.-L.; Tan, T.-H.; Lee, W.-H.; Chang, L.; Chen, Y.-N.; Fan, K.-C.; Alkhaleefah, M. Consolidated Convolutional Neural Network for Hyperspectral Image Classification. Remote Sens. 2022, 14, 1571.

- Trinh Van, L.; Dao Thi Le, T.; Le Xuan, T.; Castelli, E. Emotional Speech Recognition Using Deep Neural Networks. Sensors 2022, 22, 1414.

- Vescovi, D. Mixing and Magnetic Fields in Asymptotic Giant Branch Stars in the Framework of FRUITY Models. Universe 2021, 8, 16.

- Lin, B. Regularity Normalization: Neuroscience-Inspired Unsupervised Attention across Neural Network Layers. Entropy 2021, 24, 59.

- Lee, K.H.; Min, J.Y.; Byun, S. Electromyogram-Based Classification of Hand and Finger Gestures Using Artificial Neural Networks. Sensors 2021, 22, 225.

- Corchado, J.M.; Hussein, F.; Mughaid, A.; Alzu’bi, S.; El-Salhi, S.M.; Abuhaija, B.; Abualigah, L.; Gandomi, A.H. Hybrid CLAHE-CNN Deep Neural Networks for Classifying Lung Diseases from X-ray Acquisitions. Electronics 2022, 11, 3075.

- Er, M.B. Heart sounds classification using convolutional neural network with 1D-local binary pattern and 1D-local ternary pattern features. Appl. Acoust. 2021, 180, 108152.

- Hon, M.; Stello, D.; Yu, J. Deep learning classification in asteroseismology. Mon. Not. R. Astron. Soc. 2017, 469, 4578–4583.

- Elaziz, M.A.; Ewees, A.A.; Al-qaness, M.A.A.; Abualigah, L.; Ibrahim, R.A. Sine–Cosine-Barnacles Algorithm Optimizer with disruption operator for global optimization and automatic data clustering. Expert Syst. Appl. 2022, 207, 117993.

- Elsworth, Y.; Hekker, S.; Basu, S.; Davies, G.R. A new method for the asteroseismic determination of the evolutionary state of red-giant stars. Mon. Not. R. Astron. Soc. 2017, 466, 3344–3352.

- Mughaid, A.; AlZu’bi, S.; Alnajjar, A.; AbuElsoud, E.; Salhi, S.E.; Igried, B.; Abualigah, L. Improved dropping attacks detecting system in 5g networks using machine learning and deep learning approaches. Multimed. Tools Appl. 2022, 1–23.

- Cao, B.; Li, C.; Song, Y.; Qin, Y.; Chen, C. Network Intrusion Detection Model Based on CNN and GRU. Appl. Sci. 2022, 12, 4184.

- Liu, K.; Hu, X.; Zhou, H.; Tong, L.; Widanage, W.D.; Marco, J. Feature Analyses and Modeling of Lithium-Ion Battery Manufacturing Based on Random Forest Classification. IEEE/ASME Trans. Mechatron. 2021, 26, 2944–2955.

- Amanat, A.; Rizwan, M.; Javed, A.R.; Abdelhaq, M.; Alsaqour, R.; Pandya, S.; Uddin, M. Deep Learning for Depression Detection from Textual Data. Electronics 2022, 11, 676.

- Gilliland, R.L.; McCullough, P.R.; Nelan, E.P.; Brown, T.M.; Charbonneau, D.; Nutzman, P.; Christensen-Dalsgaard, J.; Kjeldsen, H. Asteroseismology of the transiting exoplanet host HD 17156 with Hubble Space Telescope Fine Guidance Sensor. Astrophys. J. 2010, 726, 2.

- Filho, F.J.S.L. Classification in Asteroseismology | Kaggle. Available online: https://www.kaggle.com/datasets/fernandolima23/classification-in-asteroseismology (accessed on 15 May 2022).

- Oxford Academic. Deep Learning Classification in Asteroseismology | Monthly Notices of the Royal Astronomical Society. Available online: https://academic.oup.com/mnras/article/469/4/4578/3828087#supplementary-data (accessed on 15 May 2022).

- Zulqarnain, M.; Ghazali, R.; Hassim, Y.M.M.; Aamir, M. An Enhanced Gated Recurrent Unit with Auto-Encoder for Solving Text Classification Problems. Arab. J. Sci. Eng. 2021, 46, 8953–8967.

- Nwakanma, C.I.; Islam, F.B.; Maharani, M.P.; Kim, D.S.; Lee, J.M. IoT-Based Vibration Sensor Data Collection and Emergency Detection Classification using Long Short Term Memory (LSTM). In Proceedings of the 2021 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Jeju Island, Korea, 13–16 April 2021; pp. 273–278.

- Vatsya, R.; Ghose, S.; Singh, N.; Garg, A. Toxic Comment Classification Using Bi-directional GRUs and CNN. Lect. Notes Data Eng. Commun. Technol. 2022, 91, 665–672.

- Qi, W.; Ovur, S.E.; Li, Z.; Marzullo, A.; Song, R. Multi-Sensor Guided Hand Gesture Recognition for a Teleoperated Robot Using a Recurrent Neural Network. IEEE Robot. Autom. Lett. 2021, 6, 6039–6045.

- Khan, M.A. HCRNNIDS: Hybrid Convolutional Recurrent Neural Network-Based Network Intrusion Detection System. Processes 2021, 9, 834.

- Rao, S.; Narayanaswamy, V.; Esposito, M.; Thiagarajan, J.; Spanias, A. Deep Learning with hyper-parameter tuning for COVID-19 Cough Detection. In Proceedings of the 2021 12th International Conference on Information, Intelligence, Systems & Applications (IISA), Chania, Crete, Greece, 12–14 July 2021.

- Hamm, C.A.; Wang, C.J.; Savic, L.J.; Ferrante, M.; Schobert, I.; Schlachter, T.; Lin, M.D.; Duncan, J.S.; Weinreb, J.C.; Chapiro, J.; et al. Deep learning for liver tumor diagnosis part I: Development of a convolutional neural network classifier for multi-phasic MRI. Eur. Radiol. 2019, 29, 3338–3347.

- de Groof, A.J.; Struyvenberg, M.R.; van der Putten, J.; van der Sommen, F.; Fockens, K.N.; Curvers, W.L.; Zinger, S.; Pouw, R.E.; Coron, E.; Baldaque-Silva, F.; et al. Deep-Learning System Detects Neoplasia in Patients with Barrett’s Esophagus with Higher Accuracy Than Endoscopists in a Multistep Training and Validation Study with Benchmarking. Gastroenterology 2020, 158, 915–929.e4.

- Reddy, V.S.M.; Poovizhi, T. A Novel Method for Enhancing Accuracy in Mining Twitter Data Using Naive Bayes over Logistic Regression. In Proceedings of the 2022 International Conference on Business Analytics for Technology and Security (ICBATS), Dubai, United Arab Emirates, 16–17 February 2022.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).