Transparency of Artificial Intelligence in Healthcare: Insights from Professionals in Computing and Healthcare Worldwide

Abstract

1. Introduction

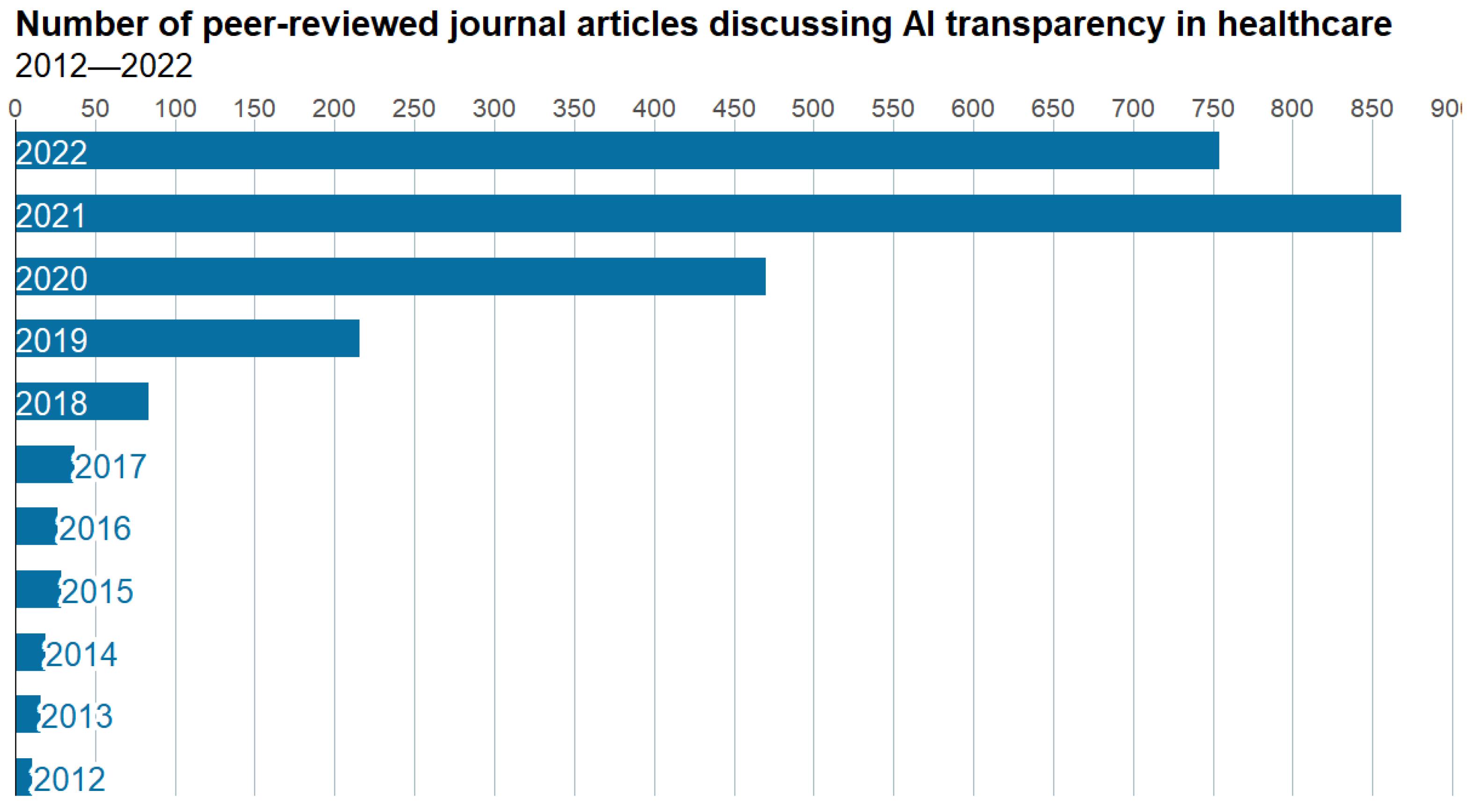

1.1. Background

1.1.1. Interpretability

1.1.2. Privacy

1.1.3. Security

1.1.4. Equity

1.1.5. Intellectual Property

2. Material and Methods

2.1. Online International Survey

2.1.1. Data Collection

2.1.2. Aspects of Interest

2.1.3. Data and Statistical Methods

3. Survey Results

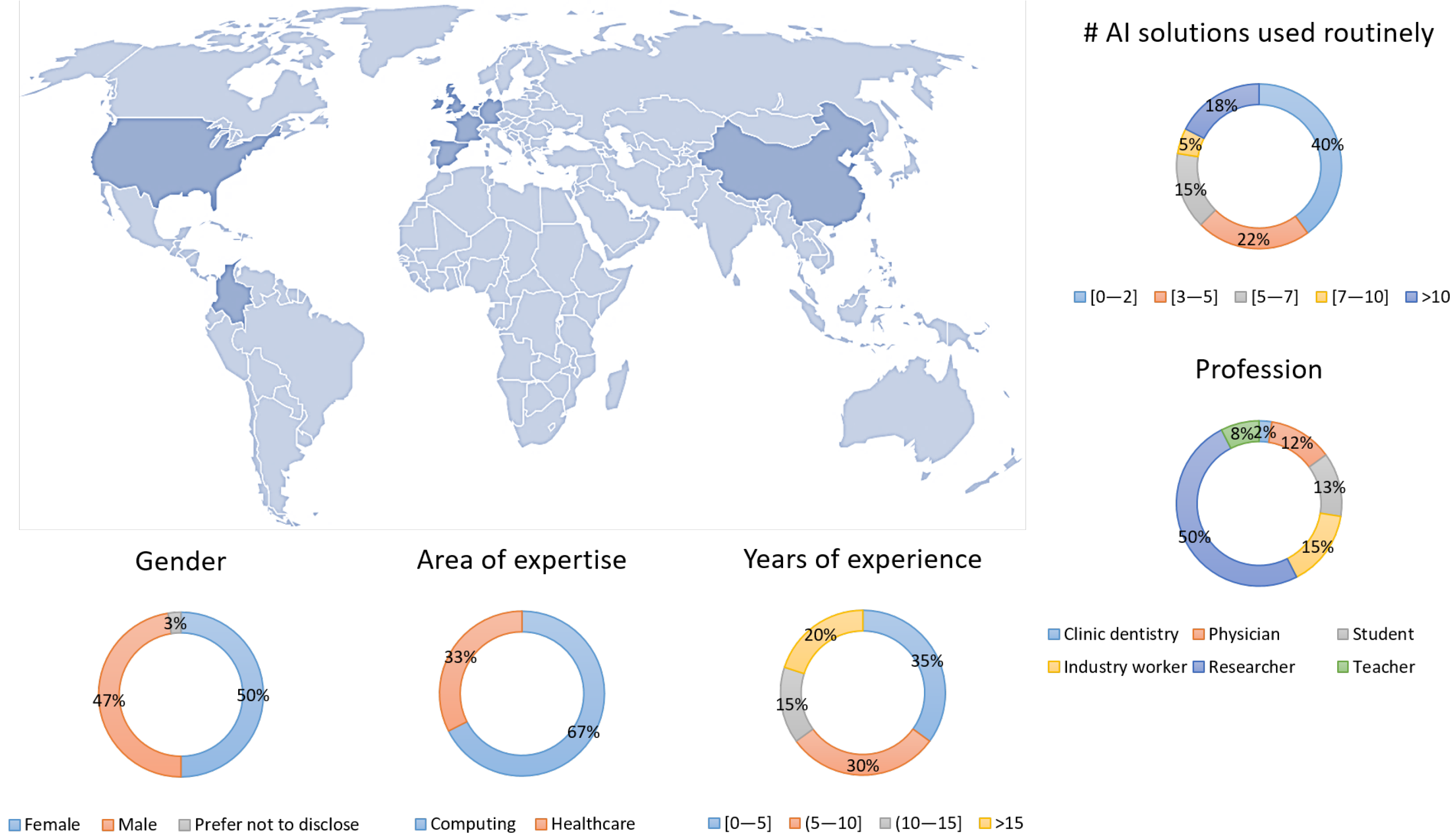

3.1. Sample Characteristics

3.2. Interpretability

- Q9

- Do the AI systems that you use routinely explain to you how decisions and recommendations are made (underlying method)? Only some participants, all of whom have a computer science background, answered that AI systems explained how decisions and recommendations were made.

- Q10

- Do the AI systems that you use routinely explain to you why decisions and recommendations are made (medical guideline)? The majority of participants are unsure whether AI systems adhere to medical guidelines.

- Q11

- Can the AI system be interpreted so well that end users could manipulate it to obtain a certain outcome? Most respondents stated that, because end users only have access to the final choice, it is difficult for them to trick AI systems on their own. However, some participants feel that AI systems can be understood to the point where end-user manipulation is possible.

- Q12

- In general, are your needs regarding interpretability addressed adequately by AI systems? The great majority of respondents agreed that interpretability demands have been met thus far, arguing that “giving more information to end users will be confusing and overall, time-consuming”. Those in disagreement recognised that interpretability is still a “work in progress” but held that “simpler explanations”, including “literature, references, or step by step”, should be displayed to professionals along with the final response.

3.3. Privacy

- Q13

- Do you know the patient population characteristics used for training and validating? A considerable proportion of respondents are unaware of the patient population characteristics used for training and validating the AI systems they use on a regular basis, or are unable to access such information.

- Q14

- Do you know what equipment, protocols, and data processing workflows were used to build the training and validation dataset? Answers to this question were evenly split: around half of the participants stated that they know or have access to such information, and the remaining half that they do not.

- Q15

- Do you know who annotated or labelled the datasets used for training and validating the AI system (credentials, expertise, and company)? Some participants are not aware of the team in charge of this process.

- Q16

- Are both training and validation datasets available to the general public or via request? Only a fourth of the participants are aware of AI systems whose training and validation datasets are available to the general public; the remainder were evenly split between not knowing whether such data were available and knowing that such data were either partially or completely unavailable.

- Q17

- In general, are datasets typically available in your area of expertise? More than half of participants stated that datasets tend to be unavailable to the general public, although a few private ones can be accessed after approval.

- Q18

- In general, are your needs regarding privacy addressed adequately by AI systems? According to survey respondents, their privacy demands are properly met by the AI systems they typically employ. Nonetheless, some expressed concerns about data availability, the lack of specifics about how AI systems were built and evaluated, and the loss of control when employing cloud-based technology owing to privacy regulations.

3.4. Security

- Q19

- Does the AI system you have been in contact with require any data storage service? For the AI systems that survey participants use on a regular basis, either no storage or internal data storage is usual.

- Q20

- Does the data storage service used by the AI system contemplate secure data access (e.g., user hierarchy and permissions)? Although in most cases participants stated that an internal information technology team managed data access, about a third of them agreed with the following statement: “all users have full permission to write, read, and modify files within the data storage”.

- Q21

- Who is responsible if something goes wrong concerning AI system security? Who bears responsibility for security issues is not sufficiently clear to many survey participants. Only some participants would blame the AI system for data breaches, and the rest would hold someone within their institution responsible: the person causing the breach, the line manager or principal investigator, the information technology team, the institution which acquired the AI system, or all of the above.

- Q22

- In general, are your needs regarding security addressed adequately by AI systems? The vast majority of respondents indicated that security needs are currently met. Some of those who answered positively nonetheless rely on “team awareness” and recognise that “security breaches are always possible”.

- Q23

- In general, are the needs of the patients regarding security addressed adequately by AI systems? The great majority of survey participants answered that these security demands are addressed thus far. Those disagreeing stated that “security breaches are always possible” and that additional “protection besides patient anonymisation” would be required to minimise security problems.

3.5. Equity

- Q24

- Are the training and validation datasets biased in such a way that they may produce unfair outcomes for marginalised or vulnerable populations? About half of survey participants believed that bias during training and validation of AI systems could produce unjust outcomes for marginalised or vulnerable groups.

- Q25

- Are the training and validation datasets varied enough (e.g., age, biological sex, pathology) so that they are representative of the people for whom it will be making decisions? A fourth of participants indicated that representativeness in training and validation datasets is lacking, and a third are unaware of such details.

3.6. Intellectual Property

- Q26

- In general, do you think there is the right balance between IP rights and AI transparency/fairness goals? Most respondents do not have enough information to determine whether there is a proper balance between IP rights and transparency. Participants expressed the following concerns: “The big problem with IP is that individuals are cosmetically involved; generally, the good part of the IP goes to the institutions. This remains true for all research-derived products, including AI” and “I think the community is very open to sharing advances and new discoveries and results. These are two things on the two sides of scales. More IP rights leads to less transparency, and vice-versa”.

- Q27

- In general, should the data used to train and validate an AI system be disclosed and described in full? The great majority of survey participants agreed that information concerning training and validation needs to be described in full. Participants stated that such disclosure would enable “reproducibility” and “repeatability” in research, and that professionals using an AI system could detect “any possible bias” or “limitation” and “be more confident about [using] it”. However, some pointed out the importance of “sharing as much as possible” without violating the “GDPR regulations”.

3.7. General Perceptions by Area of Expertise and by Years of Experience

4. Discussion

4.1. Interpretability

- Involvement of all stakeholders throughout all phases of the software development life cycle. The development of a healthcare system leveraging AI is a multidisciplinary process that requires continuous communication between system creators and those affected by its use (e.g., healthcare professionals, policy-makers, and patients) [17,64]. This ensures that explanations are clear and sufficient, that real interpretability needs are addressed in a timely manner, and that limitations are known by the target population.

- Interpretability over complexity. Complexity is entangled with interpretability: predictions made by a complex model are more difficult to comprehend and explain than those made by a simpler one. Since trust in AI systems can only be improved by improving interpretability, simpler and more interpretable methods should be considered over black boxes for healthcare applications [39,64].

- Uncertainty quantification. Real-world data are far from perfect; they contain missing values, outliers, and invalid entries. Feeding such data into models trained and tested on well-selected datasets may result in flawed verdicts [65]. Quantifying uncertainty for a given input–output pair thus allows developers and users to understand whether they can trust a model’s prediction, and whether the cause of a problem lies in the input data or in the AI system’s inability to handle such a case. It should be noted that cases of the latter kind could be employed to improve model robustness.

- Evaluation beyond accuracy. “[…] AI research should not be limited to reporting accuracy and sensitivity compared with those of the radiologist, pathologist, or clinician” [66]. Performance evaluation should be accompanied by a thorough and multidisciplinary assessment of interpretability [67]. Evaluations that strive to explain the reasoning behind a certain prediction can improve our understanding of healthcare. Methods for assessing interpretability and explainability still need to mature [39].
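
As a rough illustration of the uncertainty quantification point above, the following sketch flags a prediction for human review when the members of an ensemble disagree. It assumes a hypothetical ensemble in which every member returns class probabilities; the function names (`predictive_entropy`, `triage`) and the 0.5-bit threshold are illustrative choices and do not come from the survey or the cited works.

```python
import math
from statistics import mean


def predictive_entropy(member_probs):
    """Entropy (in bits) of the class distribution averaged over ensemble members."""
    n_classes = len(member_probs[0])
    avg = [mean(p[c] for p in member_probs) for c in range(n_classes)]
    return -sum(p * math.log2(p) for p in avg if p > 0)


def triage(member_probs, max_entropy_bits=0.5):
    """Return the averaged prediction and whether a human should review it."""
    n_classes = len(member_probs[0])
    avg = [mean(p[c] for p in member_probs) for c in range(n_classes)]
    entropy = predictive_entropy(member_probs)
    return {
        "predicted_class": max(range(n_classes), key=avg.__getitem__),
        "entropy_bits": entropy,
        # The threshold is an assumption; it would need tuning per application.
        "needs_review": entropy > max_entropy_bits,
    }


# Three members that agree, and two members that contradict each other.
confident = triage([[0.99, 0.01], [0.98, 0.02], [0.99, 0.01]])
uncertain = triage([[0.9, 0.1], [0.1, 0.9]])
```

The entropy of the averaged distribution does not by itself separate the two causes mentioned above (poor input data versus a case the model cannot handle); it only indicates that a prediction deserves a closer look.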

4.2. Privacy

- Data privacy impact assessments. Implementing rigorous data privacy impact assessments within healthcare centres makes it possible to identify practices that violate privacy and to determine whether additional procedures are needed to protect patient data further [70]. Likewise, procurement law must ensure that all AI systems provided by third parties comply with strict privacy policies [70].

- Audit trails. Records of who is doing what, what data are being utilised/transmitted, and what system modifications are being performed must be retained, for example, by means of audit trails [70].

- Cloud platforms and privacy. Cloud platforms are excellent solutions for reducing operational costs and increasing productivity (processing speed, efficiency, and performance). At the same time, they require transmitting data from healthcare centres to an external location, thereby increasing the likelihood of tracking sensitive and private information [42,71]. Appropriate strategies to regulate data access, together with anonymisation prior to transmission and storage, are critical to ensure that the privacy of patients is not put at risk.

- Information availability. Information regarding training and testing population characteristics, data acquisition protocols and equipment, and team expertise must be made clear and available to stakeholders, since it allows them to determine potential limitations of the AI system. For example, providers need to hand over evidence that an AI system has been tested on multiple, heterogeneous datasets as proof that it generalises well and can be safely deployed into healthcare practice.
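
The audit trail recommendation above can be sketched as an append-only log in which each entry stores the hash of the previous one, so that later modification of a record becomes detectable. This is a minimal in-memory illustration under stated assumptions: the field names, the `append_event` and `verify` helpers, and the example actors are hypothetical, and a real deployment would also need protected storage and access control for the log itself.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(entry):
    """SHA-256 over a canonical JSON encoding of an entry (without its own hash)."""
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_event(trail, actor, action, resource, timestamp=None):
    """Record who did what to which resource, chained to the previous entry."""
    entry = {
        "actor": actor,
        "action": action,
        "resource": resource,
        "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
        "prev_hash": trail[-1]["hash"] if trail else GENESIS,
    }
    entry["hash"] = _digest(entry)  # digest is computed before "hash" is added
    trail.append(entry)
    return entry


def verify(trail):
    """Return True only if every entry is unmodified and correctly chained."""
    prev = GENESIS
    for entry in trail:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


trail = []
append_event(trail, "dr_a", "read", "scan_001", "2022-01-01T09:00:00+00:00")
append_event(trail, "svc_model", "transmit", "scan_001", "2022-01-01T09:05:00+00:00")
intact = verify(trail)

trail[0]["actor"] = "someone_else"  # simulate tampering with a stored record
tampering_detected = not verify(trail)
```

Hash chaining only makes tampering evident; it does not prevent it, which is why the log would normally be kept outside the reach of the users it records.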

4.3. Security

- Device encryption. All devices that employees use to access corporate data should be completely encrypted, and sensitive data should be stored and transferred in encrypted form [74].

- Security testing. Effort, time, and money should be invested in cyber-security. Hiring a professional organisation to conduct security audits is an excellent way to test data security, as such audits reveal security weaknesses [75], especially in remote work setups. These professionals, as independent organisations, can verify that AI systems comply with the highest security standards.

- Restrict and control data access, modification, deletion, and storage, as well as device use. Our survey showed that about a third of respondents did not have user hierarchies and permission settings set up in their organisations, increasing the risk of data breaches or mishandling. Employees should only have access to data that is absolutely necessary for them to accomplish their jobs [76].

- Employee training. Training all members on why security is important and how they can contribute can not only decrease risks but also improve reaction times when breaches occur [77]. Frequent training sessions and up-to-date policy information can encourage employees to put these guidelines into practice. For example, a common and easily avoided employee mistake is writing passwords down on a sticky note.
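
The access restriction recommendation above can be illustrated with a deny-by-default permission check, in contrast to the “all users have full permission” setup reported by some respondents. The role names, the permission sets, and the `is_allowed` and `delete_record` helpers below are hypothetical and serve only to show the principle of least privilege.

```python
# Hypothetical roles: each one is granted only the actions it needs.
ROLE_PERMISSIONS = {
    "clinician": frozenset({"read"}),
    "data_manager": frozenset({"read", "write"}),
    "it_admin": frozenset({"read", "write", "delete"}),
}


def is_allowed(role, action):
    """Deny by default: unknown roles and unlisted actions are refused."""
    return action in ROLE_PERMISSIONS.get(role, frozenset())


def delete_record(store, record_id, role):
    """Delete a record only if the caller's role includes the 'delete' action."""
    if not is_allowed(role, "delete"):
        raise PermissionError(f"role {role!r} may not delete records")
    store.pop(record_id)


store = {"r1": "example record"}
try:
    delete_record(store, "r1", "clinician")
    clinician_blocked = False
except PermissionError:
    clinician_blocked = True  # the record is still in the store

delete_record(store, "r1", "it_admin")  # permitted for this role
```

Keeping the role table in one place means that granting or revoking a permission is a single, reviewable change rather than a per-user setting.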

4.4. Equity

- Release information to the public. AI system developers must release demographic information on training and testing population characteristics, data acquisition, and processing protocols. This information can be useful to judge whether developers paid attention to equity.

- Consistency in heterogeneous datasets. Research conducted around the world by multiple institutions has demonstrated the effectiveness of AI in relatively small cohorts of centralised data [3]. Nonetheless, two key problems regarding validation and equity remain. First, AI systems are primarily trained on small and curated datasets, and hence, it is possible that they are unable to generalise, i.e., process real-life medical data in the wild [2]. Second, gathering enormous amounts of sufficiently heterogeneous and correctly annotated data from a single institution is challenging and costly since annotation is time-consuming and laborious, and heterogeneity is evidently limited by population, pathologies, raters, scanners, and imaging protocols. Moreover, sharing data in a centralised configuration requires addressing legal, privacy, and technical considerations to comply with good clinical practice standards and general data protection regulations and prevent patient information from leaking. The use of federated learning, for example, can help overcome data-sharing limitations and enable training and validating AI systems on heterogeneous datasets of unprecedented size [80].
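
To make the federated learning idea above more concrete, the sketch below shows one common aggregation rule, federated averaging, in which each institution trains locally and shares only model parameters, which a coordinator combines weighted by local sample count. The function name `federated_average`, the flat list representation of parameters, and the example numbers are assumptions made for illustration; the text above does not prescribe a particular aggregation scheme.

```python
def federated_average(updates):
    """Combine local models into a global one, weighting each by its sample count.

    `updates` is a list of (n_samples, parameters) pairs, one per institution,
    where `parameters` is a flat list of floats of equal length everywhere.
    """
    total = sum(n for n, _ in updates)
    dim = len(updates[0][1])
    return [sum(n * params[i] for n, params in updates) / total for i in range(dim)]


# Two hypothetical hospitals: patient data stay on site, only parameters are shared.
hospital_a = (100, [1.0, 2.0])
hospital_b = (300, [3.0, 6.0])
global_params = federated_average([hospital_a, hospital_b])
```

In this example the larger site contributes three times the weight of the smaller one. Sharing parameters instead of records reduces, but does not eliminate, the privacy considerations discussed above.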

4.5. Intellectual Property

- Invest in the correct guidance early on. Identifying sensitive data and evaluating the critical importance of protecting it from the public domain should be the first priority. Our survey participants largely agree on the need to disclose and describe information related to training and validation in full. Such disclosure should evidently be preceded by an evaluation of what information must be safeguarded and what information may be made available to the public, e.g., by performing a risk and cost-benefit analysis, without jeopardising openness.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| GDPR | General Data Protection Regulation |
| HIPAA | United States’ Health Insurance Portability and Accountability Act |
| ECPA | Electronic Communications Privacy Act |
| COPPA | Children’s Online Privacy Protection Act |

References

- Liu, X.; Faes, L.; Kale, A.U.; Wagner, S.K.; Fu, D.J.; Bruynseels, A.; Mahendiran, T.; Moraes, G.; Shamdas, M.; Kern, C.; et al. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: A systematic review and meta-analysis. Lancet Digit. Health 2019, 1, e271–e297. [Google Scholar] [CrossRef]

- Bernal, J.; Kushibar, K.; Clèrigues, A.; Arnau, O.; Lladó, X. Deep learning for medical imaging. In Deep Learning in Biology and Medicine; Bacciu, D., Lisboa, P.J.G., Vellido, A., Eds.; World Scientific (Europe): London, UK, 2021. [Google Scholar] [CrossRef]

- Bernal, J.; Kushibar, K.; Asfaw, D.S.; Valverde, S.; Oliver, A.; Martí, R.; Lladó, X. Deep convolutional neural networks for brain image analysis on magnetic resonance imaging: A review. Artif. Intell. Med. 2019, 95, 64–81. [Google Scholar] [CrossRef]

- Davenport, T.; Kalakota, R. The potential for artificial intelligence in healthcare. Future Healthc. J. 2019, 6, 94–98. [Google Scholar] [CrossRef] [PubMed]

- Mazo, C.; Kearns, C.; Mooney, C.; Gallagher, W.M. Clinical Decision Support Systems in Breast Cancer: A Systematic Review. Cancers 2020, 12, 369. [Google Scholar] [CrossRef]

- Du, A.X.; Emam, S.; Gniadecki, R. Review of Machine Learning in Predicting Dermatological Outcomes. Front. Med. 2020, 7, 266. [Google Scholar] [CrossRef]

- Jaiswal, V.; Negi, A.; Pal, T. A review on current advances in machine learning based diabetes prediction. Prim. Care Diabetes 2021, 15, 435–443. [Google Scholar] [CrossRef]

- Vellameeran, F.A.; Brindha, T. An integrated review on machine learning approaches for heart disease prediction: Direction towards future research gaps. Bio-Algorithms-Med-Syst. 2021, 20200069. [Google Scholar] [CrossRef]

- Jamie, M.; Janette, T.; Richard, J.; Julia, W.; Suzanne, M. Using machine-learning risk prediction models to triage the acuity of undifferentiated patients entering the emergency care system: A systematic review. Diagn. Progn. Res. 2020, 4, 1–2. [Google Scholar] [CrossRef]

- Matthew, B.S.; Karen, C.; Valentina, E.P. Machine learning for genetic prediction of psychiatric disorders: A systematic review. Mol. Psychiatry 2021, 26, 70–79. [Google Scholar] [CrossRef]

- Du, Y.; Rafferty, A.R.; McAuliffe, F.M.; Wei, L.; Mooney, C. An explainable machine learning-based clinical decision support system for prediction of gestational diabetes mellitus. Sci. Rep. 2019, 12, 1–12. [Google Scholar] [CrossRef] [PubMed]

- Alballa, N.; Al-Turaiki, I. Machine learning approaches in COVID-19 diagnosis, mortality, and severity risk prediction: A review. Inform. Med. Unlocked 2021, 24, 100564. [Google Scholar] [CrossRef]

- Myszczynska, M.A.; Ojamies, P.N.; Lacoste, A.; Neil, D.; Saffari, A.; Mead, R.; Ferraiuolo, L. Applications of machine learning to diagnosis and treatment of neurodegenerative diseases. Nat. Rev. Neurol. 2020, 16, 440–456. [Google Scholar] [CrossRef]

- Bhavsar, K.A.; Abugabah, A.; Singla, J.; AlZubi, A.A.; Bashir, A.K. A Comprehensive Review on Medical Diagnosis Using Machine Learning. Comput. Mater. Contin. 2021, 67, 1997–2014. [Google Scholar] [CrossRef]

- Thomsen, K.; Iversen, L.; Titlestad, T.L.; Winther, O. Systematic review of machine learning for diagnosis and prognosis in dermatology. J. Dermatol. Treat. 2020, 31, 496–510. [Google Scholar] [CrossRef]

- Xue-Qiang, W.; Hasan, F.T.; Bin, J.N.; Yap, A.J.; Khursheed, A.M. Machine Learning and Intelligent Diagnostics in Dental and Orofacial Pain Management: A Systematic Review. Pain Res. Manag. 2021, 2021, 6659133. [Google Scholar] [CrossRef]

- Borchert, R.; Azevedo, T.; Badhwar, A.; Bernal, J.; Betts, M.; Bruffaerts, R.; Burkhart, M.; Dewachter, I.; Gellersen, H.; Low, A.; et al. Artificial intelligence for diagnosis and prognosis in neuroimaging for dementia; a systematic review. medRxiv 2021. [Google Scholar] [CrossRef]

- van Doorn, K.A.; Kamsteeg, C.; Bate, J.; Aafjes, M. A scoping review of machine learning in psychotherapy research. Psychother. Res. 2021, 31, 92–116. [Google Scholar] [CrossRef] [PubMed]

- Sajjadian, M.; Lam, R.W.; Milev, R.; Rotzinger, S.; Frey, B.N.; Soares, C.N.; Parikh, S.V.; Foster, J.A.; Turecki, G.; Müller, D.J.; et al. Machine learning in the prediction of depression treatment outcomes: A systematic review and meta-analysis. Psychol. Med. 2021, 1–10. [Google Scholar] [CrossRef] [PubMed]

- Fitzgerald, J.; Higgins, D.; Mazo Vargas, C.; Watson, W.; Mooney, C.; Rahman, A.; Aspell, N.; Connolly, A.; Aura Gonzalez, C.; Gallagher, W. Future of biomarker evaluation in the realm of artificial intelligence algorithms: Application in improved therapeutic stratification of patients with breast and prostate cancer. J. Clin. Pathol. 2021, 74, 429–434. [Google Scholar] [CrossRef] [PubMed]

- Bian, Y.; Xiang, Y.; Tong, B.; Feng, B.; Weng, X. Artificial Intelligence–Assisted System in Postoperative Follow-up of Orthopedic Patients: Exploratory Quantitative and Qualitative Study. J. Med. Internet. Res. 2020, 22, e16896. [Google Scholar] [CrossRef] [PubMed]

- Berlyand, Y.; Raja, A.S.; Dorner, S.C.; Prabhakar, A.M.; Sonis, J.D.; Gottumukkala, R.V.; Succi, M.D.; Yun, B.J. How artificial intelligence could transform emergency department operations. Am. J. Emerg. Med. 2018, 36, 1515–1517. [Google Scholar] [CrossRef] [PubMed]

- Del-Valle-Soto, C.; Nolazco-Flores, J.A.; Puerto-Flores, D.; Alberto, J.; Velázquez, R.; Valdivia, L.J.; Rosas-Caro, J.; Visconti, P. Statistical Study of User Perception of Smart Homes during Vital Signal Monitoring with an Energy-Saving Algorithm. Int. J. Environ. Res. Public Health 2022, 19, 9966. [Google Scholar] [CrossRef] [PubMed]

- Yu, K.H.; Beam, A.L.; Kohane, I.S. Artificial intelligence in healthcare. Nat. Biomed. Eng. 2018, 2, 719–731. [Google Scholar] [CrossRef] [PubMed]

- Ho, P.S.; Young-Hak, K.; Young, L.J.; Soyoung, Y.; Jai, K.C. Ethical challenges regarding artificial intelligence in medicine from the perspective of scientific editing and peer review. Sci. Ed. 2019, 6, 91–98. [Google Scholar] [CrossRef]

- Gerke, S.; Minssen, T.; Cohen, G. Ethical and Legal Challenges of Artificial Intelligence-Driven Health Care. In Artificial Intelligence in Healthcare, 1st ed.; Bohr, A., Memarzadeh, K., Eds.; Elsevier: Amsterdam, The Netherlands, 2020; pp. 295–336. [Google Scholar]

- WHO. Health Ethics & Governance of Artificial Intelligence for Health; World Health Organization: Geneva, Switzerland, 2021; p. 150. [Google Scholar]

- Martinho, A.; Kroesen, M.; Chorus, C. A healthy debate: Exploring the views of medical doctors on the ethics of artificial intelligence. Artif. Intell. Med. 2021, 121, 102190. [Google Scholar] [CrossRef]

- Schönberger, D. Artificial intelligence in healthcare: A critical analysis of the legal and ethical implications. Int. J. Law Inf. Technol. 2019, 27, 171–203. [Google Scholar]

- Manne, R.; Kantheti, S.C. Application of Artificial Intelligence in Healthcare: Chances and Challenges. Curr. J. Appl. Sci. Technol. 2021, 40, 78–89. [Google Scholar] [CrossRef]

- Mazo, C.; Aura, C.; Rahman, A.; Gallagher, W.M.; Mooney, C. Application of Artificial Intelligence Techniques to Predict Risk of Recurrence of Breast Cancer: A Systematic Review. J. Pers. Med. 2022, 12, 1496. [Google Scholar] [CrossRef]

- Heike, F.; Eduard, F.V.; Christoph, L.; Aurelia, T.L. Towards Transparency by Design for Artificial Intelligence. Sci. Eng. Ethics 2020, 26, 3333–3361. [Google Scholar] [CrossRef]

- Weller, A. Transparency: Motivations and challenges. In Explainable AI: Interpreting, Explaining and Visualizing Deep Learning; Springer: Berlin/Heidelberg, Germany, 2019; pp. 23–40. [Google Scholar]

- Van Essen, D.C.; Smith, S.M.; Barch, D.M.; Behrens, T.E.; Yacoub, E.; Ugurbil, K.; Consortium, W.M.H. The WU-Minn human connectome project: An overview. Neuroimage 2013, 80, 62–79. [Google Scholar] [CrossRef]

- Esmaeilzadeh, P. Use of AI-based tools for healthcare purposes: A survey study from consumers’ perspectives. BMC Med. Inform. Decis. Mak. 2020, 20, 1–19. [Google Scholar] [CrossRef] [PubMed]

- Elemento, O.; Leslie, C.; Lundin, J.; Tourassi, G. Artificial intelligence in cancer research, diagnosis and therapy. Nat. Rev. Cancer 2021, 21, 747–752. [Google Scholar] [CrossRef] [PubMed]

- Bhatt, U.; Xiang, A.; Sharma, S.; Weller, A.; Taly, A.; Jia, Y.; Ghosh, J.; Puri, R.; Moura, J.M.F.; Eckersley, P. Explainable Machine Learning in Deployment. arXiv 2019, arXiv:1909.06342. [Google Scholar]

- Murdoch, W.J.; Singh, C.; Kumbier, K.; Abbasi-Asl, R.; Yu, B. Definitions, methods, and applications in interpretable machine learning. Proc. Natl. Acad. Sci. USA 2019, 116, 22071–22080. [Google Scholar] [CrossRef]

- Antoniadi, A.M.; Du, Y.; Guendouz, Y.; Wei, L.; Mazo, C.; Becker, B.A.; Mooney, C. Current Challenges and Future Opportunities for XAI in Machine Learning-Based Clinical Decision Support Systems: A Systematic Review. Appl. Sci. 2021, 11, 5088. [Google Scholar] [CrossRef]

- Adadi, A.; Berrada, M. Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI). IEEE Access 2018, 6, 52138–52160. [Google Scholar] [CrossRef]

- Pagallo, U. Algo-Rhythms and the Beat of the Legal Drum. Philos. Technol. 2018, 31, 507–524. [Google Scholar] [CrossRef]

- Bartneck, C.; Lütge, C.; Wagner, A.; Welsh, S. Privacy Issues of AI; Springer International Publishing: Cham, Switzerland, 2021; pp. 61–70. [Google Scholar] [CrossRef]

- USA. Health Insurance Portability and Accountability Act of 1996. 1996. Available online: https://www.govinfo.gov/content/pkg/PLAW-104publ191/pdf/PLAW-104publ191.pdf (accessed on 8 November 2021).

- Johnson, L. Chapter 3—Statutory and Regulatory GRC. In Security Controls Evaluation, Testing, and Assessment Handbook; Johnson, L., Ed.; Syngress: Boston, MA, USA, 2016; pp. 11–33. [Google Scholar] [CrossRef]

- FEDERAL-TRADE-COMMISSION. Children’s Online Privacy Protection Rule. 2013. Available online: https://www.ftc.gov/system/files/2012-31341.pdf (accessed on 8 November 2021).

- EU. General Data Protection Regulation. 2016. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32016R0679 (accessed on 27 October 2021).

- Gola, D.; Erdmann, J.; Läll, K.; Mägi, R.; Müller-Myhsok, B.; Schunkert, H.; König, I.R. Population Bias in polygenic risk prediction models for coronary artery disease. Circ. Genom. Precis. Med. 2020, 13, e002932. [Google Scholar] [CrossRef]

- Noor, P. Can we trust AI not to further embed racial bias and prejudice? BMJ 2020, 368. [Google Scholar] [CrossRef]

- Kapur, S. Reducing racial bias in AI models for clinical use requires a top-down intervention. Nat. Mach. Intell. 2021, 3, 460. [Google Scholar] [CrossRef]

- Parikh, R.B.; Teeple, S.; Navathe, A.S. Addressing bias in artificial intelligence in health care. JAMA 2019, 322, 2377–2378. [Google Scholar] [CrossRef]

- WIPO. What Is Intellectual Property? World Intellectual Property Organization (WIPO): Geneva, Switzerland, 2020. [Google Scholar] [CrossRef]

- De Koning, R.; Egiz, A.; Kotecha, J.; Ciuculete, A.C.; Ooi, S.Z.Y.; Bankole, N.D.A.; Erhabor, J.; Higginbotham, G.; Khan, M.; Dalle, D.U.; et al. Survey Fatigue During the COVID-19 Pandemic: An Analysis of Neurosurgery Survey Response Rates. Front. Surg. 2021, 8, 690680. [Google Scholar] [CrossRef]

- Gnanapragasam, S.N.; Hodson, A.; Smith, L.E.; Greenberg, N.; Rubin, G.J.; Wessely, S. COVID-19 Survey Burden for Healthcare Workers: Literature Review and Audit. Public Health 2022, 206, 94–101. [Google Scholar] [CrossRef]

- Eysenbach, G. Improving the Quality of Web Surveys: The Checklist for Reporting Results of Internet E-Surveys (CHERRIES). J. Med. Internet Res. 2004, 6, e34. [Google Scholar] [CrossRef]

- Martinez-Millana, A.; Saez, A.; Tornero, R.; Azzopardi-Muscat, N.; Traver, V.; Novillo-Ortiz, D. Artificial intelligence and its impact on the domains of Universal Health Coverage, Health Emergencies and Health Promotion: An overview of systematic reviews. Int. J. Med. Inform. 2022, 166, 104855. [Google Scholar] [CrossRef]

- Vellido, A. The importance of interpretability and visualization in machine learning for applications in medicine and health care. Neural Comput. Appl. 2019, 32, 18069–18083. [Google Scholar] [CrossRef]

- Price, I.; Nicholson, W. Artificial intelligence in health care: Applications and legal issues. SciTech. Lawyer 2017, 10. Available online: https://ssrn.com/abstract=3078704 (accessed on 4 September 2022).

- Richardson, J.P.; Smith, C.; Curtis, S.; Watson, S.; Zhu, X.; Barry, B.; Sharp, R.R. Patient apprehensions about the use of artificial intelligence in healthcare. NPJ Digit. Med. 2021, 4, 1–6. [Google Scholar] [CrossRef]

- ElShawi, R.; Sherif, Y.; Al-Mallah, M.; Sakr, S. Interpretability in healthcare: A comparative study of local machine learning interpretability techniques. Comput. Intell. 2019, 37, 1633–1650. [Google Scholar] [CrossRef]

- Kolyshkina, I.; Simoff, S. Interpretability of Machine Learning Solutions in Public Healthcare: The CRISP-ML Approach. Front. Big Data 2021, 4, 18. [Google Scholar] [CrossRef] [PubMed]

- Amann, J.; Blasimme, A.; Vayena, E.; Frey, D.; Madai, V.I. Explainability for artificial intelligence in healthcare: A multidisciplinary perspective. BMC Med. Inform. Decis. Mak. 2020, 20, 1–9. [Google Scholar] [CrossRef]

- Stiglic, G.; Kocbek, P.; Fijacko, N.; Zitnik, M.; Verbert, K.; Cilar, L. Interpretability of machine learning based prediction models in healthcare. arXiv 2020, arXiv:2002.08596. [Google Scholar] [CrossRef]

- Das, A.; Rad, P. Opportunities and Challenges in Explainable Artificial Intelligence (XAI): A Survey. arXiv 2020, arXiv:2006.11371. [Google Scholar]

- Arrieta, A.B.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; García, S.; Gil-López, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115. [Google Scholar] [CrossRef]

- Begoli, E.; Bhattacharya, T.; Kusnezov, D. The need for uncertainty quantification in machine-assisted medical decision making. Nat. Mach. Intell. 2019, 1, 20–23. [Google Scholar] [CrossRef]

- Ghosh, A.; Kandasamy, D. Interpretable Artificial Intelligence: Why and When. Am. J. Roentgenol. 2020, 214, 1137–1138. [Google Scholar] [CrossRef]

- Zhou, J.; Gandomi, A.H.; Chen, F.; Holzinger, A. Evaluating the quality of machine learning explanations: A survey on methods and metrics. Electronics 2021, 10, 593. [Google Scholar] [CrossRef]

- Espinosa, A.L. Availability of health data: Requirements and solutions. Int. J. Med. Inform. 1998, 49, 97–104. [Google Scholar] [CrossRef]

- Pastorino, R.; De Vito, C.; Migliara, G.; Glocker, K.; Binenbaum, I.; Ricciardi, W.; Boccia, S. Benefits and challenges of Big Data in healthcare: An overview of the European initiatives. Eur. J. Public Health 2019, 29, 23–27. [Google Scholar] [CrossRef]

- Bartoletti, I. AI in healthcare: Ethical and privacy challenges. In Proceedings of the Conference on Artificial Intelligence in Medicine in Europe, Poznan, Poland, 26–29 June 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 7–10. [Google Scholar]

- Georgiou, D.; Lambrinoudakis, C. Compatibility of a Security Policy for a Cloud-Based Healthcare System with the EU General Data Protection Regulation (GDPR). Information 2020, 11, 586. [Google Scholar] [CrossRef]

- Seh, A.H.; Zarour, M.; Alenezi, M.; Sarkar, A.K.; Agrawal, A.; Kumar, R.; Ahmad Khan, R. Healthcare data breaches: Insights and implications. Healthcare 2020, 8, 133. [Google Scholar] [CrossRef] [PubMed]

- Chernyshev, M.; Zeadally, S.; Baig, Z. Healthcare data breaches: Implications for digital forensic readiness. J. Med. Syst. 2019, 43, 1–12. [Google Scholar] [CrossRef] [PubMed]

- Shavers, B.; Bair, J. Chapter 6—Cryptography and Encryption. In Hiding Behind the Keyboard; Shavers, B., Bair, J., Eds.; Syngress: Boston, MA, USA, 2016; pp. 133–151. [Google Scholar] [CrossRef]

- Voigt, P.; Von dem Bussche, A. The eu general data protection regulation (gdpr). In A Practical Guide, 1st ed.; Springer International Publishing: Cham, Switzerland, 2017; Volume 10, p. 3152676. [Google Scholar]

- Paul, P.; Aithal, P.S. Database Security: An Overview and Analysis of Current Trend. Int. J. Manag. Technol. Soc. Sci. (IJMTS) 2019, 4, 53–58. [Google Scholar] [CrossRef]

- Regulation, G.D.P. General data protection regulation (GDPR). Intersoft Consult. Accessed Oct. 2018, 24. [Google Scholar]

- Tsamados, A.; Aggarwal, N.; Cowls, J.; Morley, J.; Roberts, H.; Taddeo, M.; Floridi, L. The ethics of algorithms: Key problems and solutions. AI Soc. 2022, 37, 215–230. [Google Scholar] [CrossRef]

- Tobin, M.J.; Jubran, A. Pulse oximetry, racial bias and statistical bias. Ann. Intensive Care 2022, 12, 1–2.

- Li, T.; Sahu, A.K.; Talwalkar, A.; Smith, V. Federated learning: Challenges, methods, and future directions. IEEE Signal Process. Mag. 2020, 37, 50–60.

- Amon, F.; Dahlbom, S.; Blomqvist, P. Challenges to transparency involving intellectual property and privacy concerns in life cycle assessment/costing: A case study of new flame retarded polymers. Clean. Environ. Syst. 2021, 3, 100045.

- WHO. Operationalising the COVID-19 Technology Access Pool (C-TAP). 2020. Available online: https://www.who.int/publications/m/item/c-tap-a-concept-paper (accessed on 24 January 2022).

- Chaudhry, P.E.; Zimmerman, A.; Peters, J.R.; Cordell, V.V. Preserving intellectual property rights: Managerial insight into the escalating counterfeit market quandary. Bus. Horizons 2009, 52, 57–66.

- Kim, H.L.; Hovav, A.; Han, J. Protecting intellectual property from insider threats: A management information security intelligence perspective. J. Intellect. Cap. 2020. Available online: https://www.emerald.com/insight/content/doi/10.1108/JIC-05-2019-0096/full/html (accessed on 4 September 2022).

- Halt, G.B.; Donch, J.C.; Stiles, A.R.; VanLuvanee, L.J.; Theiss, B.R.; Blue, D.L. Tips for Avoiding and Preventing Intellectual Property Problems. In FDA and Intellectual Property Strategies for Medical Device Technologies; Springer: Berlin/Heidelberg, Germany, 2019; pp. 267–272.

- EU4Health-Programme. eHealth Action Plan 2021–2027. 2021. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=OJ:L:2021:107:FULL&from=EN (accessed on 27 October 2021).

- ACM. US Technology Policy Committee. 2019. Available online: https://www.acm.org/binaries/content/assets/public-policy/ustpc-comments-fda-software-based-device-safety-060319.pdf (accessed on 27 October 2021).

- ACM. Statement on Algorithmic Transparency and Accountability. 2017. Available online: https://iapp.org/media/pdf/resource_center/2017_usacm_statement_algorithms.pdf (accessed on 27 October 2021).

- Monaghesh, E.; Hajizadeh, A. The role of telehealth during COVID-19 outbreak: A systematic review based on current evidence. BMC Public Health 2020, 20, 1–9.

| Section | Question | Answer |
|---|---|---|
| Survey participants | Q1. What country do you live in? | Open text |
| | Q2. How old are you? | [18–25]; (25–35]; (35–45]; (45–55]; >55 |
| | Q3. To which gender identity do you most identify? | Man; woman; non-binary; prefer not to disclose; prefer to self-describe |
| | Q4. What is your profession? | Physician; industry worker; researcher; teacher; graduate student; undergraduate student; other (open text) |
| | Q5. What is your area of expertise? | Computer science; morphological science; physiopathology; neuroanatomy; embryology; histology; anatomy; neurology; radiology; other (open text) |
| | Q6. How many years of experience do you have in your area of expertise? | [0–5]; (5–10]; (10–15]; >15 |
| | Q7. Do you use AI systems routinely? | Yes; no |
| | Q8. How many AI systems have you used? | [0–2]; (2–5]; (5–7]; (7–10]; >10 |
| Interpretability | Q9. Do AI systems that you use routinely explain to you how decisions and recommendations were made (underlying method)? | Yes, I clearly understand the underlying method embedded in the AI system; yes, I superficially understand the underlying method embedded in the AI system; no, I do not know anything about the underlying method embedded in the AI system |
| | Q10. Do AI systems that you use routinely explain to you why decisions and recommendations were made (medical guideline)? | Yes, I am sure that the medical guideline used within the AI system is appropriate; no, I am not sure that the medical guideline used within the AI system is appropriate |
| | Q11. Can the AI system be interpreted so well that the end user can manipulate it to obtain a desired outcome? | Yes, the AI system is explainable enough to the level where end users can influence it to produce a desired outcome; no, even though the AI system is explainable enough, the system is robust enough to avoid this problem; no, end users only have access to the final decision or recommendation |
| | Q12. In general, are your needs regarding interpretability addressed adequately by AI systems? | Yes, I agree; no; if not, why not? |
| Privacy | Q13. Do you know the patient population characteristics used for training and validating the AI system? | Yes, I know or have access to the patient population characteristics used for training and validating the AI system; no, I do not know or have access to information concerning AI system training or validation |
| | Q14. Do you know what equipment, protocols, and data processing workflows were used to build the training and validation dataset? | Yes, I know or have access to the aforementioned information; no, I do not know or have access to the aforementioned information |
| | Q15. Do you know who annotated or labelled the datasets used for training and validating the AI system (credentials, expertise, and company)? | Yes, I am fully aware of the team who phenotyped or annotated the training and validation datasets; no, I am not aware of who carried out such a process |
| | Q16. Are both training and validation datasets available to the general public or via request? | Yes, both datasets are available to the general public or via request; no, data are available, but annotations are not; no, one of these datasets is not available; no, neither of these datasets is available; I am not aware of this information |
| | Q17. In general, are datasets typically available in your area of expertise? | Yes, datasets are often available to the general public in my area of expertise; yes, datasets are private but they may be accessed after approval; no, datasets are typically private and difficult to access |
| | Q18. In general, are your needs regarding privacy addressed adequately by AI systems? | Yes, I agree; no; if not, why not? |
| Security - GDPR | Q19. Does the AI system you have been in contact with require any data storage service? | No; yes, an internal data storage service; yes, an external data storage service |
| | Q20. Does the data storage service used by the AI system contemplate secure data access (e.g., user hierarchy and permissions)? | No, all users have full permission to write, read, and modify files within the data storage; yes, an internal information technology team manages secure data access; yes, an external team manages secure data access |
| | Q21. Who is responsible if something goes wrong concerning AI system security? | The person causing the security breach; the principal investigator or line manager; the information technology team; the AI system manufacturer; the institution which bought the AI system; I am not aware of who would be responsible in such a case; other (open text) |
| | Q22. In general, are your needs regarding security addressed adequately by AI systems? | Yes, I agree; no; if not, why not? (open text) |
| | Q23. In general, are the needs of the patients regarding security addressed adequately by AI systems? | Yes, I agree; no; if not, why not? (open text) |
| Equity | Q24. Are the training and validation datasets biased in such a way that they may produce unfair outcomes for marginalised or vulnerable populations? | Yes, I am aware of data bias which may potentially discriminate against marginalised or vulnerable populations; no, neither presents data bias that may potentially discriminate against marginalised or vulnerable populations; no, I am not aware of such information |
| | Q25. Are the training and validation datasets varied enough (e.g., age, biological sex, pathology) so that they are representative of the people for whom the AI system will be making decisions? | Yes, both datasets are varied enough to be considered representative of the target population; no, both datasets are not varied enough to be considered representative of the target population (e.g., low sample sizes or imbalanced datasets); no, I am not aware of such information |
| Intellectual property | Q26. In general, do you think there is the right balance between intellectual property rights and AI transparency/fairness goals? | Yes; no; I do not have enough information to judge this aspect; if yes/no, why/why not? |
| | Q27. In general, should the data used to train and validate an AI system be disclosed and described in full? | Yes; no; I do not have enough information to judge this aspect; if yes/no, why/why not? |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bernal, J.; Mazo, C. Transparency of Artificial Intelligence in Healthcare: Insights from Professionals in Computing and Healthcare Worldwide. Appl. Sci. 2022, 12, 10228. https://doi.org/10.3390/app122010228
