Comparison of Machine Learning Algorithms Used for Skin Cancer Diagnosis

Abstract

1. Introduction

- Machine learning algorithms were analyzed for classifying cancer lesions from image data.

- Selected feature descriptors and their combinations were analyzed for their impact on the classification performance of the selected algorithms, as measured by classification metrics.

- The algorithms were compared in terms of how computational complexity affects classification effectiveness when an algorithm is deployed on mobile devices with limited computing performance.

2. Related Works

3. Materials

4. Method

- Preprocessing and extraction of feature vectors from input images;

- Preparing combinations of the feature vectors obtained from the individual descriptors;

- Carrying out the image classification process.

4.1. Processing and Extraction of Image Features

4.1.1. Shape

4.1.2. Color

4.1.3. Texture

4.2. Preparing Combinations of Feature Vectors

- n: number of elements;

- k: number of elements in each combination.
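
The number of k-element combinations of n elements is C(n, k) = n! / (k!(n - k)!). The sketch below enumerates them for the four descriptor names that appear in the results tables (Histogram, LBP, Haralick, HuMoments). Two points are assumptions made only for illustration: that every k from 1 to n is considered, and that a combination's feature vector is the concatenation of the individual vectors. The helper names and the tiny example vectors are hypothetical.

```python
from itertools import combinations
from math import comb

# Descriptor names as they appear in the results tables.
DESCRIPTORS = ["Histogram", "LBP", "Haralick", "HuMoments"]


def descriptor_combinations(names):
    """Return every non-empty combination of names, smallest first."""
    result = []
    for k in range(1, len(names) + 1):
        result.extend(combinations(names, k))
    return result


def combine_features(features, combo):
    """Concatenate per-descriptor vectors in the order given by combo.

    Concatenation is an assumption made for this sketch.
    """
    combined = []
    for name in combo:
        combined.extend(features[name])
    return combined


# Hypothetical, very short feature vectors for a single image.
example_features = {
    "Histogram": [0.2, 0.5, 0.3],
    "LBP": [0.1, 0.9],
    "Haralick": [1.5, 2.5],
    "HuMoments": [0.01],
}

all_combos = descriptor_combinations(DESCRIPTORS)
labels = ["_".join(combo) for combo in all_combos]

# The count matches the sum of C(n, k) over k = 1..n.
expected_count = sum(comb(len(DESCRIPTORS), k) for k in range(1, len(DESCRIPTORS) + 1))
```

With four descriptors this yields 15 combinations, and the joined labels follow the same naming pattern as the table entries, for example `Histogram_LBP_Haralick_HuMoments`.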

4.3. Classification of Skin Lesions

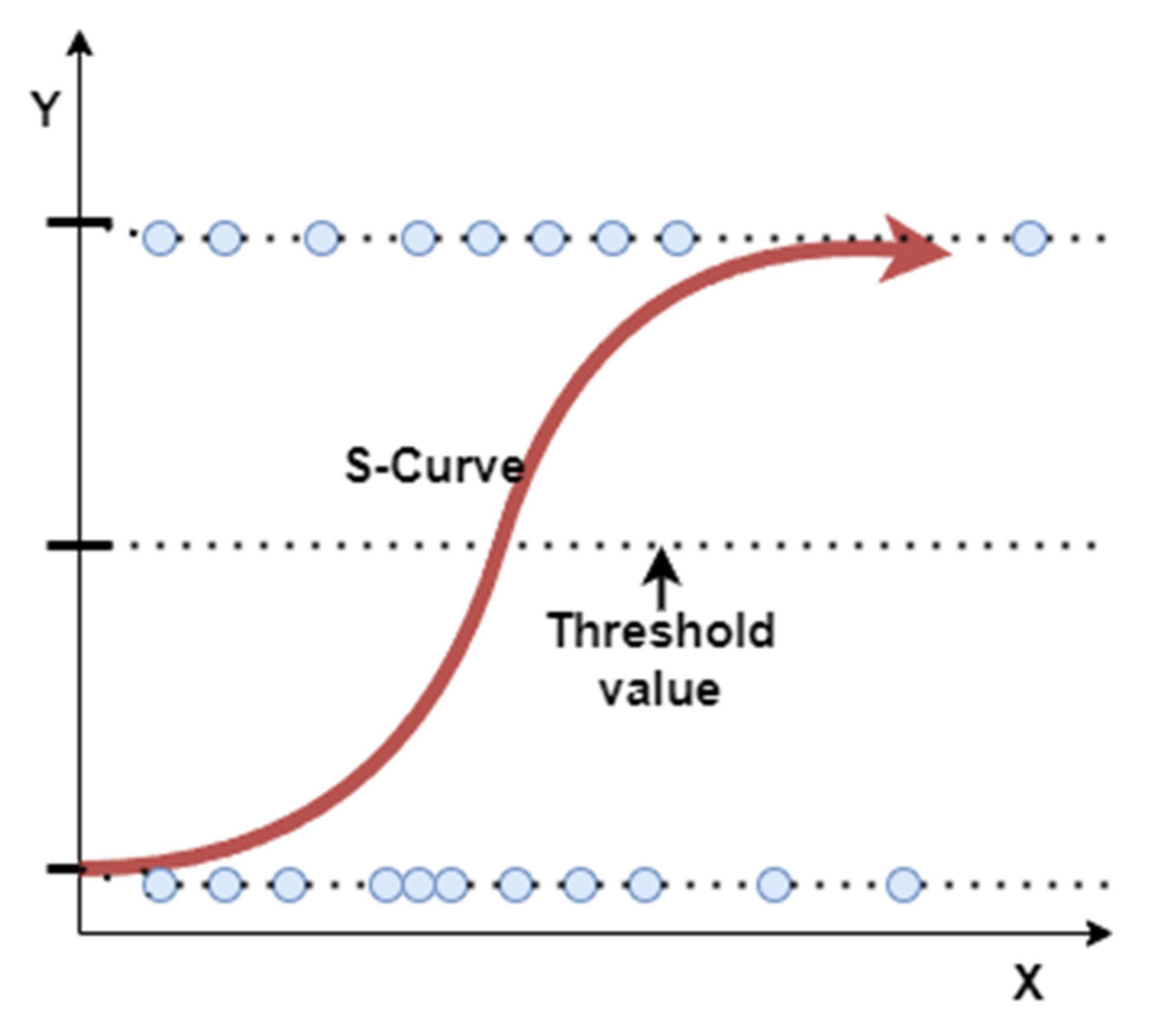

4.3.1. Logistic Regression

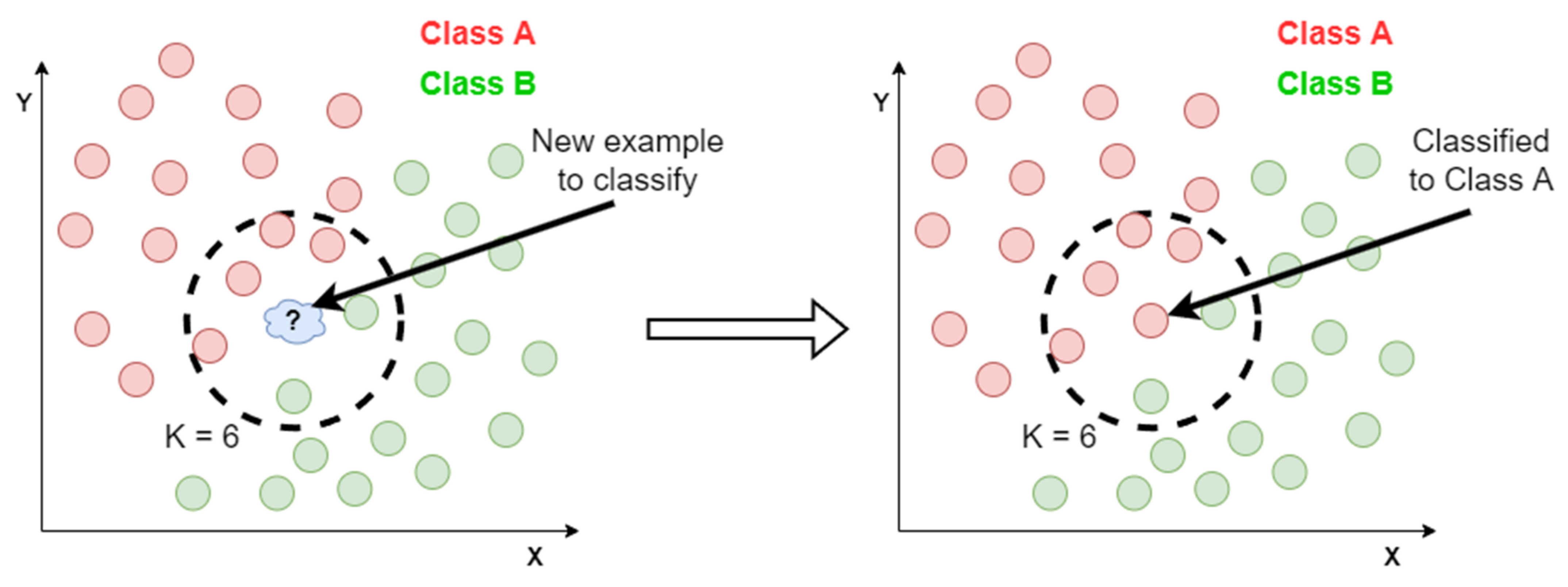

4.3.2. K-Nearest Neighbor

4.3.3. Naïve Bayes

- P(A|B): conditional probability; the likelihood of event A occurring given that event B occurred,

- P(B|A): conditional probability; the likelihood of event B occurring given that event A occurred,

- P(A), P(B): probabilities of observing A and B.
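
Bayes' theorem relates these quantities as P(A|B) = P(B|A) P(A) / P(B). The following is a minimal numeric sketch of that relation. The function name and all probability values are hypothetical and chosen only to make the arithmetic easy to follow; they are not taken from the study.

```python
def bayes_posterior(p_b_given_a, p_a, p_b):
    """Return P(A|B) = P(B|A) * P(A) / P(B)."""
    if p_b <= 0:
        raise ValueError("P(B) must be positive")
    return p_b_given_a * p_a / p_b


# Hypothetical numbers, for illustration only:
# A = "lesion is malignant", B = "a given feature pattern is observed".
p_a = 0.5              # prior P(A)
p_b_given_a = 0.8      # likelihood P(B|A)
p_b_given_not_a = 0.2  # likelihood P(B|not A)

# The law of total probability gives the evidence P(B).
p_b = p_b_given_a * p_a + p_b_given_not_a * (1 - p_a)

posterior = bayes_posterior(p_b_given_a, p_a, p_b)
```

A Naïve Bayes classifier applies the same rule per class and additionally treats the features as conditionally independent given the class, which is what makes the likelihood term cheap to estimate.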

4.3.4. Decision Tree

4.3.5. Random Forest

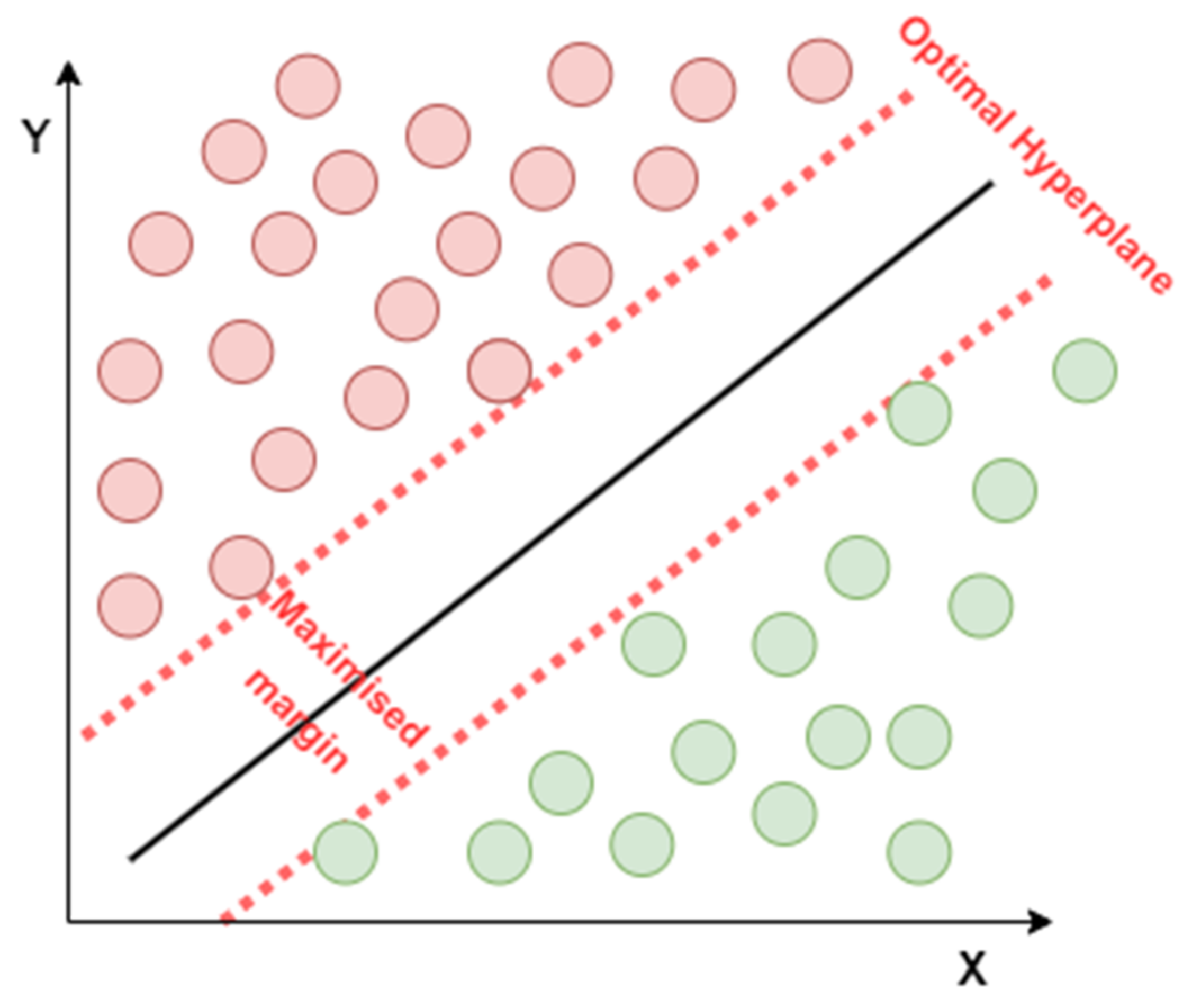

4.3.6. Support Vector Machine

5. Results

5.1. Classic Machine Learning Algorithms

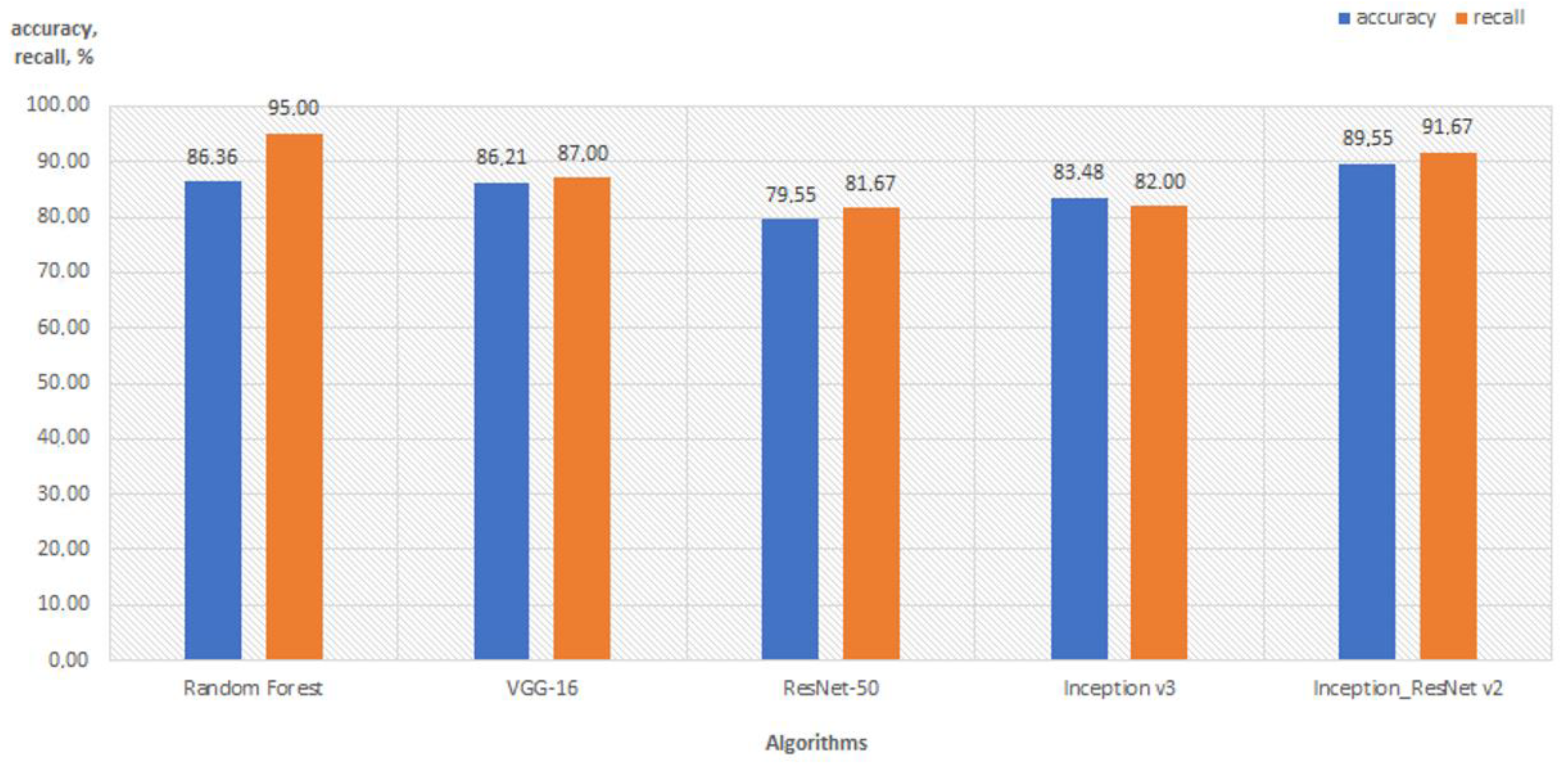

5.2. Comparison with Deep Learning Algorithms

5.3. Comparative Analysis of Performance and Effectiveness

6. Conclusions

- extending image pre-processing with a segmentation step to reduce the influence of the background on the results;

- extending the set of feature descriptors used and comparing their impact on the effectiveness and performance of the algorithms.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Algorithm | Accuracy, % | Descriptor for Accuracy | Recall, % | Descriptor for Recall |
|---|---|---|---|---|
| Logistic Regression | 82.12 | Histogram_LBP_Haralick_HuMoments | 85.00 | Histogram_LBP_HuMoments |
| Histogram_LBP_Haralick | Histogram_LBP | | | |
| Histogram_Haralick_HuMoments | Histogram | | | |
| Histogram_HuMoments | | | | |
| 81.82 | Histogram_Haralick | 84.00 | Histogram_LBP_Haralick_HuMoments | |
| 79.85 | Histogram | Histogram_LBP_Haralick | | |
| Histogram_Haralick_HuMoments | | | | |
| k-Nearest Neighbors | 80.00 | Histogram_LBP_Haralick_HuMoments | 78.67 | Histogram_LBP_HuMoments |
| Histogram_LBP | | | | |
| Histogram_LBP_Haralick | | | | |
| 79.70 | Histogram_LBP_Haralick | 78.00 | Histogram_Haralick | |
| Histogram_LBP | Histogram_Haralick_HuMoments | | | |
| Naive Bayes | 74.09 | Histogram_Haralick | 100 | HuMoments |
| Histogram_LBP_Haralick | Haralick_HuMoments | | | |
| Histogram_LBP | LBP_Haralick_HuMoments | | | |
| Histogram | | | | |
| 69.85 | LBP_Haralick | 99.67 | Histogram_Haralick_HuMoments | |
| Histogram_LBP_Haralick_HuMoments | | | | |
| LBP_HuMoments | Histogram_HuMoments | | | |
| Histogram_LBP_HuMoments | | | | |
| Decision Tree | 78.18 | Histogram_Haralick | 75.33 | Histogram_Haralick |
| 78.03 | Histogram_LBP | 74.33 | Histogram_LBP_Haralick | |
| Histogram | Histogram | | | |
| Haralick_HuMoments | | | | |
| Random Forest | 86.36 | Histogram_Haralick_HuMoments | 95.00 | Histogram_LBP_Haralick |
| 85.45 | Histogram_LBP_HuMoments | 94.67 | Histogram_Haralick_HuMoments | |
| SVM | 82.42 | Histogram_Haralick | 93.00 | Histogram_HuMoments |
| 81.97 | Histogram_LBP_Haralick_HuMoments | 91.00 | Histogram_LBP_HuMoments | |
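
The two metric columns in the table above follow the usual definitions: accuracy is the share of all samples classified correctly, and recall is the share of actual positives that were detected. A minimal sketch of both follows, using hypothetical confusion counts that are not taken from the study; the function names are likewise illustrative.

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of all samples that were classified correctly."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("at least one sample is required")
    return (tp + tn) / total


def recall(tp, fn):
    """Fraction of actual positives that were detected (sensitivity)."""
    positives = tp + fn
    if positives == 0:
        raise ValueError("at least one positive sample is required")
    return tp / positives


# Hypothetical confusion counts, for illustration only:
# every positive is found, but many negatives are flagged as positive.
tp, fn, tn, fp = 50, 0, 24, 26

acc = accuracy(tp, tn, fp, fn)  # (50 + 24) / 100
rec = recall(tp, fn)            # 50 / 50
```

The example shows that recall can reach 100% while accuracy stays much lower, because recall ignores false positives. This is worth keeping in mind when the best descriptor for recall differs from the best descriptor for accuracy.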

| Algorithm | Time, s |
|---|---|
| Logistic Regression_Histogram_LBP_Haralick_HuMoments | 53.50 |
| k-Nearest Neighbors_Histogram_LBP_Haralick_HuMoments | 31.09 |
| Naive Bayes_Histogram_Haralick | 22.35 |
| Decision Tree_Histogram_Haralick | 22.78 |
| Random Forest_Histogram_Haralick_HuMoments | 22.97 |
| SVM_Histogram_Haralick | 26.72 |
| VGG-16 | 749.00 |
| ResNet-50 | 615.00 |
| InceptionV3 | 612.00 |
| ResNet-InceptionV2 | 800.00 |
| Histogram | 0.5 |
| Haralick | 21.8 |
| Hu Moments | 0.16 |
| LBP | 8.7 |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bistroń, M.; Piotrowski, Z. Comparison of Machine Learning Algorithms Used for Skin Cancer Diagnosis. Appl. Sci. 2022, 12, 9960. https://doi.org/10.3390/app12199960