Abstract

Federated Learning is a widely adopted method for training neural networks over distributed data. One main limitation is the performance degradation that occurs when data are heterogeneously distributed. While many studies have attempted to address this problem, a more recent understanding of neural networks provides insight into an alternative approach. In this study, we show that only certain important layers in a neural network require regularization for effective training. We additionally verify that Centered Kernel Alignment (CKA) most accurately calculates similarities between layers of neural networks trained on different data. By applying CKA-based regularization to important layers during training, we significantly improve performance in heterogeneous settings. We present FedCKA, a simple framework that outperforms previous state-of-the-art methods on various deep learning tasks while also improving efficiency and scalability.

1. Introduction

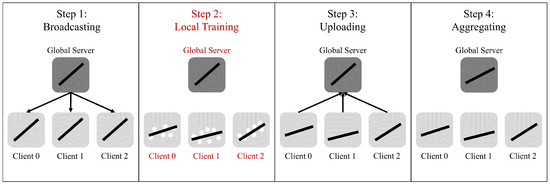

The success of deep learning in a plethora of fields has led to countless research studies that leverage its strengths [1]. One main outcome resulting from this success is the mass collection of data [2]. As the collection of data increases at a rate much faster than that of the computing performance and storage capacity of consumer products, it is becoming progressively difficult to deploy trained state-of-the-art models within a reasonable budget. In light of this, Federated Learning [3] has been introduced as a method to train a neural network with massively distributed data. The most widely used and accepted approach for the training and aggregation process is FedAvg [3]. FedAvg typically progresses with the repetition of four steps, as shown in Figure 1. (1) A centralized or decentralized server broadcasts a model (the global model) to each of its clients. (2) Each client trains its copy of the model (the local model) with its local data. (3) Clients upload their trained models to the server. (4) The server aggregates the trained models into a single model and prepares it to be broadcast in the next round. These steps are repeated until convergence or other criteria are met.

Figure 1. The typical steps of Federated Learning (FedAvg).
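As a rough illustration of step (4), the sketch below averages client models weighted by the number of local samples, which is how FedAvg aggregation is usually described. The flat `layer name -> list of values` weight format and the function name `fedavg_aggregate` are assumptions made for this example, not part of any particular framework.

```python
from typing import Dict, List

# Hypothetical weight format for illustration: layer name -> flattened values.
Weights = Dict[str, List[float]]


def fedavg_aggregate(client_weights: List[Weights], client_sizes: List[int]) -> Weights:
    """Step 4: average client models, weighted by local sample counts."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client model")
    total = sum(client_sizes)
    aggregated: Weights = {}
    for name in client_weights[0]:
        length = len(client_weights[0][name])
        aggregated[name] = [
            sum(w[name][k] * n for w, n in zip(client_weights, client_sizes)) / total
            for k in range(length)
        ]
    return aggregated


# Two toy clients holding 100 and 300 samples, respectively.
clients = [{"conv1": [1.0, 2.0]}, {"conv1": [3.0, 6.0]}]
global_model = fedavg_aggregate(clients, [100, 300])
print(global_model)  # {'conv1': [2.5, 5.0]}
```

The client with more data pulls the average toward its own weights, which is also why strongly skewed local data can move the aggregate away from the true optimum.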

Federated Learning is appealing for many reasons, such as negating the cost of collecting data into a centralized location and effective parallelization across computing units [4]. Thus, it has been applied in a wide range of research studies, including distributed learning frameworks for vehicular networks [5] and IoT devices [6], and even privacy-preserving methods for medical records [7]. However, one major issue with the application of Federated Learning is the performance degradation that occurs with heterogeneous data. This refers to settings in which data are not independent and identically distributed (non-IID) across clients. The drop in performance is observed to be caused by a disagreement in local optima. That is, because each client trains its copy of the neural network on its own local data, the resulting average can stray from the true optimum. Unfortunately, it is realistic to expect non-IID data in many real-world applications [8,9]. While many studies have attempted to address this problem by regularizing the entire model during the training process, we argue that a more recent understanding of neural networks suggests that regularizing every layer may limit performance.

In this study, we present FedCKA to address these limitations. First, we show that regularizing the first two naturally similar layers is most important for improving performance in non-IID settings. Previous studies regularized every layer. Not only is this ineffective for training, but it also limits scalability as the number of layers in a model increases. By regularizing only these important layers, performance improves beyond previous studies. Efficiency and scalability also improve, as we do not need to calculate regularization terms for every layer. Second, we show that Centered Kernel Alignment (CKA) is most suitable when comparing the representational similarity between layers of neural networks. Previous studies added a regularization term by comparing the representations of neural networks with the l2-distance (FedProx) or cosine similarity (MOON). By using CKA, we improve performance, as representations between important layers can be regularized accurately regardless of dimension or rank [10]; hence the name FedCKA. Our contributions are summarized as follows:

- We improve performance in heterogeneous settings. By building on the most up-to-date understanding of neural networks, we apply layer-wise regularization to only important layers.

- We improve the efficiency and scalability of regularization. By regularizing only important layers, FedCKA is the only method among those compared whose training times remain comparable to FedAvg.

2. Related Works

Layers in Neural Networks

Understanding the function of layers in a neural network is an under-researched field of deep learning. It is, however, an important prerequisite for the application of layer-wise regularization. We build our study based on findings of two relevant papers.

The first study [11] showed that there are certain ‘critical’ layers that define a model’s performance. In particular, when trained layers were re-initialized back to their original weights, ‘critical’ layers heavily decreased performance, while ‘robust’ layers had minimal impact. This study drew several relevant conclusions. First, the very first layer of neural networks is the most sensitive to re-initialization. Second, robustness is not correlated with the l2-norm or l∞-norm between initial weights and trained weights. Third, while ‘robust’ layers did not affect performance when changed to their initial weights, most layers heavily decreased performance when changed to non-initial random weights. From these conclusions, we understand that certain layers are not important in defining performance. Regularizing these non-important layers would be ineffective and may even hurt performance.

The second study [10] introduced Centered Kernel Alignment (CKA) as a metric for measuring the similarity between layers of neural networks. In particular, the study showed that metrics that calculate the similarity between representations of neural networks should be invariant to orthogonal transformations and isotropic scaling, but not invariant to invertible linear transformations. This study drew one very relevant conclusion. For neural networks trained on different datasets, early layers, but not late layers, learn similar representations. Considering this conclusion, if we were to properly regularize neural networks trained on different datasets, we should focus on layers that are naturally similar and not on those that are naturally different.
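The invariance properties can be checked numerically. The following is a minimal sketch of linear CKA in NumPy, assuming representations are stored as (samples × features) matrices; the toy sizes and the helper name `linear_cka` are chosen for illustration only. It shows that the score is unchanged by an orthogonal transformation or an isotropic rescaling of the features, while a general invertible linear map changes it.

```python
import numpy as np


def linear_cka(X: np.ndarray, Y: np.ndarray) -> float:
    """Linear CKA between two (samples x features) representation matrices."""
    X = X - X.mean(axis=0, keepdims=True)  # center every feature
    Y = Y - Y.mean(axis=0, keepdims=True)
    cross = np.linalg.norm(Y.T @ X, "fro") ** 2
    return float(cross / (np.linalg.norm(X.T @ X, "fro") * np.linalg.norm(Y.T @ Y, "fro")))


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 16))                  # toy activations: 200 samples, 16 features
Q, _ = np.linalg.qr(rng.normal(size=(16, 16)))  # random orthogonal matrix
A = rng.normal(size=(16, 16))                   # random (almost surely invertible) linear map

cka_orthogonal = linear_cka(X, X @ Q)  # invariant: equals 1 up to rounding
cka_scaled = linear_cka(X, 3.7 * X)    # invariant: equals 1 up to rounding
cka_linear = linear_cka(X, X @ A)      # not invariant: generally below 1
print(cka_orthogonal, cka_scaled, cka_linear)
```

Invariance to orthogonal transformations matters here because two networks can encode the same information in differently rotated or permuted feature spaces.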

Studies that improve performance on non-IID data generally fall into two categories. The first focuses on regularizing or modifying the client training process (step 2). The second focuses on modifying the aggregation process (step 4). Some studies employ knowledge distillation techniques [12,13], while others use data sharing [14]. Other works, such as FedMA [15], aggregate each layer separately, starting with the layer closest to the input. After a single layer is aggregated, it is frozen, and each client trains all subsequent layers. This process is repeated until all layers are individually aggregated. FedBN [16] aggregates all layers except for batch normalization layers, which are locally stored to reduce local data bias. While these approaches are significant and relevant to our work, they focus more on the aggregation process. Thus, we focus on works that regularize local training, as they are more closely related. Namely, we focus on FedProx [17], SCAFFOLD [18], and MOON [19], all of which add a regularization term to the default FedAvg [3] training process.

FedAvg was the first study to introduce Federated Learning. Each client trains a model by gradient descent on its local loss, and the server averages the trained models, weighted by the number of data samples that each client holds. However, due to the performance degradation in non-IID settings, many studies added a regularization term to the default FedAvg training process. The objective of these methods is to decrease the disagreement in local optima by limiting local updates that stray too far from the global model. FedProx adds a proximal regularization term that calculates the l2-distance between the local and global model. SCAFFOLD adds a control variate regularization term that induces variance reduction on local updates based on the updates of other clients. Most recent and most similar to our work is MOON. MOON adds a contrastive regularization term that calculates the cosine similarity between the MLP projections of the local and global model. The study takes inspiration from contrastive learning, particularly SimCLR [20]. The intuition is that the global model is less biased than local models; thus, local updates should be more similar to the global model than to past local models. One difference to note is that while contrastive learning trains a model using the projections of one model on many different images (i.e., one model, different data), MOON regularizes a model using the projections of different models on the same images (i.e., three models, same data).
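To make the two regularizers concrete, here is a minimal sketch of a FedProx-style proximal term and a MOON-style contrastive term on plain Python vectors. The function names, the flattened-weight format, and the value `tau=1.0` in the usage lines are assumptions for illustration; they are not taken from either method's reference implementation.

```python
import math
from typing import Sequence


def fedprox_term(local_w: Sequence[float], global_w: Sequence[float], mu: float) -> float:
    """Proximal penalty (mu / 2) * ||w_local - w_global||^2 on flattened weights."""
    return 0.5 * mu * sum((l - g) ** 2 for l, g in zip(local_w, global_w))


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two projection vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def moon_term(z_local, z_global, z_prev, tau: float) -> float:
    """Contrastive term: pull z_local toward z_global, push it away from z_prev."""
    pull = math.exp(cosine(z_local, z_global) / tau)
    push = math.exp(cosine(z_local, z_prev) / tau)
    return -math.log(pull / (pull + push))


# Toy projections: the global and previous-local projections are orthogonal.
z_global, z_prev = [1.0, 0.0], [0.0, 1.0]
loss_aligned_with_global = moon_term([1.0, 0.0], z_global, z_prev, tau=1.0)
loss_aligned_with_prev = moon_term([0.0, 1.0], z_global, z_prev, tau=1.0)
print(loss_aligned_with_global, loss_aligned_with_prev)
print(fedprox_term([1.0, 2.0], [0.0, 0.0], mu=0.1))
```

The contrastive term is smaller when the local projection agrees with the global model than when it agrees with the previous local model, which is the behavior the paragraph above describes.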

Overall, these studies add a regularization term by comparing all layers of the neural network. However, we argue that only important layers should be regularized. Late layers are naturally dissimilar when trained on different datasets. Regularizing a model based on these naturally dissimilar late layers would be ineffective. Rather, it may be beneficial to focus only on the naturally similar early layers of the model.

3. FedCKA

3.1. Regularizing Naturally Similar Layers

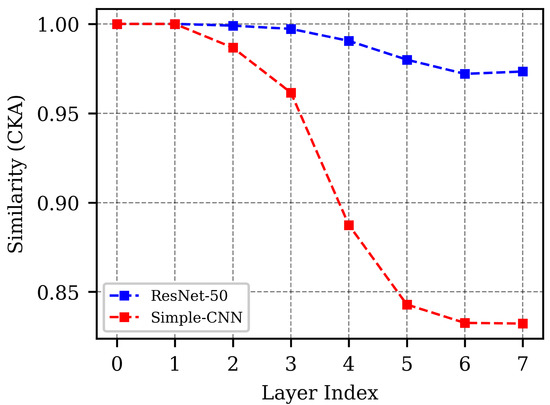

FedCKA is designed on the principle that naturally similar, but not naturally dissimilar, layers should be regularized. This is based on the premise that early layers, but not late layers, develop similar representations when trained on different datasets [10]. We verify this in a Federated Learning environment. Using a small seven-layer convolutional neural network and the ResNet-50 model [21], we trained 10 clients for 20 communication rounds on independently and identically distributed (IID) subsets of the CIFAR-10 [22] dataset. After training, we examined the similarity between the layers of local models, calculated with Centered Kernel Alignment [10] on the CIFAR-10 test set.

3.2. Federated Learning with Non-IID Data

Figure 2 shows the similarity of layers between local models after training. We report all layers for the simple CNN. For the ResNet-50 model, we report the similarity of the initial convolution layer, each of the four blocks of the ResNet, the two layers in the projector, and the output layer. We verify that early layers, but not late layers, develop similar representations in the ideal Federated Learning setting, where the distribution of data across clients is IID. For convolutional neural networks without residual blocks, the first two naturally similar layers are the two layers closest to the input. For ResNets, they are the initial convolutional layer and the first post-residual block. As also mentioned in Kornblith et al. [10], post-residual layers, but not layers within residuals, develop similar representations.

Figure 2. CKA similarity between clients at the end of training (refer to Experimental Setup for more information on training).

The objective of regularization in Federated Learning is to penalize local updates that stray from the global model. Since late layers are naturally dissimilar even in an IID Federated Learning setting, all settings, including sub-ideal non-IID settings, should emulate the representational similarity of the ideal IID setting, which is achieved by regularizing only naturally similar layers. Furthermore, regularizing the first two naturally similar layers is distinct from previous studies, which regularized local updates based on all layers. This allows FedCKA to be much more scalable than other methods. The computational overhead of previous methods grows rapidly with the number of parameters, because all layers are regularized. FedCKA keeps the overhead nearly constant, as only two layers close to the input are regularized.

3.3. Measuring Layer-Wise Similarity

FedCKA is designed to regularize dissimilar updates in layers that should naturally be similar. However, there is currently no standard for measuring the similarity of layers between neural networks. While there are classical methods of applying univariate or multivariate analysis for comparing matrices, these methods are not suitable for comparing the similarity of layers and representations of different neural networks [10]. As for norms, Zhang et al. [11] concluded that a layer’s robustness to re-initialization is not correlated with the l2-norm or l∞-norm. This suggests that using these norms to regularize dissimilar updates, as in previous works, may be inaccurate.

Kornblith et al. [10] concluded that similarity metrics for comparing the representations of different neural networks should be invariant to orthogonal transformations and isotropic scaling, but not invariant to invertible linear transformations. The study introduced Centered Kernel Alignment (CKA) and showed that the metric is most consistent in measuring the similarity between representations of neural networks. Thus, FedCKA regularizes local updates using the CKA metric as a similarity measure.

3.4. Modifications to FedAvg

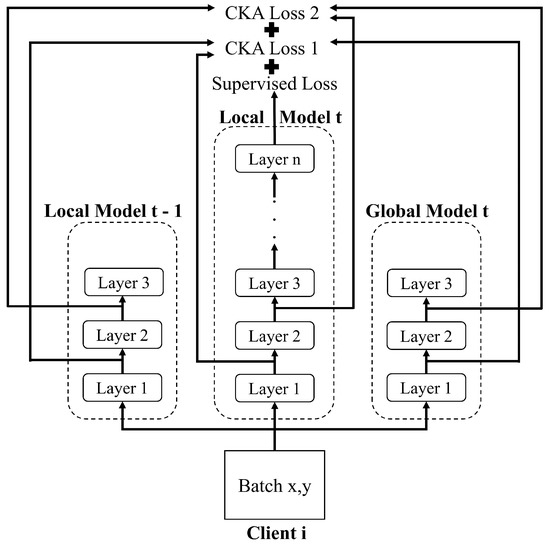

FedCKA adds a regularization term to the local training process of the default FedAvg algorithm, keeping the entire framework simple. Algorithm 1 and Figure 3 show the FedCKA framework in algorithm and figure form, respectively. More formally, we add $\ell_{cka}$ as a regularization term to the FedAvg training algorithm. The local loss function is as shown in Equation (1).

$$\ell = \ell_{sup} + \mu\,\ell_{cka} \tag{1}$$

Figure 3. Training process of FedCKA.

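Here, $\ell_{sup}$ is the cross-entropy loss, and $\mu$ is a hyper-parameter that controls the strength of the regularization term, $\ell_{cka}$, in proportion to $\ell_{sup}$. $\ell_{cka}$ is shown in more detail in Equation (2).

$$\ell_{cka} = -\frac{1}{M}\sum_{n=1}^{M}\log\frac{\exp\left(CKA\left(a_{l_i,n}^{t},\,a_{g,n}^{t}\right)\right)}{\exp\left(CKA\left(a_{l_i,n}^{t},\,a_{g,n}^{t}\right)\right)+\exp\left(CKA\left(a_{l_i,n}^{t},\,a_{l_i,n}^{t-1}\right)\right)} \tag{2}$$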

Algorithm 1: FedCKA

Input: number of communication rounds R, number of clients C, number of local epochs E, loss weighting variable $\mu$, learning rate

Output: the trained model w

The formula of $\ell_{cka}$ is a slight modification of the contrastive loss used in SimCLR [20]. There are four main differences. First, SimCLR uses the representations of one model on different samples in a batch to calculate the contrastive loss. FedCKA uses the representations of three models on the same samples in a batch to calculate $\ell_{cka}$. $a_{l_i,n}^{t}$, $a_{l_i,n}^{t-1}$, and $a_{g,n}^{t}$ are the representations, at layer $n$, of client i’s current local model, client i’s previous round local model, and the current global model, respectively. Second, SimCLR uses the temperature parameter $\tau$ to increase performance on difficult samples. FedCKA excludes $\tau$, as it was not seen to help performance. Third, SimCLR uses cosine similarity to measure the similarity between representations. FedCKA uses CKA as its measure of similarity. Fourth, SimCLR calculates the contrastive loss once per batch, using the representations of the projection head. FedCKA calculates $\ell_{cka}$ $M$ times per batch, using the representations of the first $M$ naturally similar layers, indexed by $n$, and averages the loss over the number of layers to regularize. Here, $M$ represents the number of layers being regularized. $M$ is set to two by default unless otherwise stated.

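As per Kornblith et al. [10], CKA is shown in Equation (3).

$$CKA(X, Y) = \frac{\lVert Y^{\top}X\rVert_F^{2}}{\lVert X^{\top}X\rVert_F\,\lVert Y^{\top}Y\rVert_F} = \frac{\sum_{i}\sum_{j}\lambda_X^{i}\lambda_Y^{j}\langle u_X^{i}, u_Y^{j}\rangle^{2}}{\sqrt{\sum_{i}(\lambda_X^{i})^{2}}\sqrt{\sum_{j}(\lambda_Y^{j})^{2}}} \tag{3}$$

Here, the $i$-th eigenvalue of $XX^{\top}$ is $\lambda_X^{i}$, with corresponding eigenvector $u_X^{i}$, and $X$ and $Y$ are centered representation matrices. While Kornblith et al. [10] also presented a method to use kernels with CKA, we use the linear variant, as it is more computationally efficient while having minimal impact on accuracy.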
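The regularization term can be sketched as follows, assuming it takes the temperature-free contrastive form described above, with linear CKA as the similarity and an average over the M regularized layers. This is an illustrative NumPy version on random toy activations, not the authors' implementation; the helper names and the flattened (batch × features) layout are assumptions, and a trainable version would use an automatic-differentiation library instead.

```python
import numpy as np


def linear_cka(X: np.ndarray, Y: np.ndarray) -> float:
    """Linear CKA between two (batch x features) activation matrices."""
    X = X - X.mean(axis=0, keepdims=True)
    Y = Y - Y.mean(axis=0, keepdims=True)
    cross = np.linalg.norm(Y.T @ X, "fro") ** 2
    return float(cross / (np.linalg.norm(X.T @ X, "fro") * np.linalg.norm(Y.T @ Y, "fro")))


def fedcka_term(local_acts, global_acts, prev_acts) -> float:
    """Contrastive CKA loss, averaged over the M regularized layers."""
    per_layer = []
    for a_local, a_global, a_prev in zip(local_acts, global_acts, prev_acts):
        pull = np.exp(linear_cka(a_local, a_global))  # similarity to the global model
        push = np.exp(linear_cka(a_local, a_prev))    # similarity to the previous local model
        per_layer.append(-np.log(pull / (pull + push)))
    return float(np.mean(per_layer))


rng = np.random.default_rng(0)
shapes = [(64, 32), (64, 48)]  # M = 2 layers, batch of 64, hypothetical flattened widths
global_acts = [rng.normal(size=s) for s in shapes]
prev_acts = [rng.normal(size=s) for s in shapes]

# A local model that matches the global model is penalized less than one
# that matches its own previous-round representations.
loss_near_global = fedcka_term(global_acts, global_acts, prev_acts)
loss_near_prev = fedcka_term(prev_acts, global_acts, prev_acts)
print(loss_near_global, loss_near_prev)
```

The total local loss would then be the cross-entropy loss plus the weighting hyper-parameter times this term.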

4. Experimental Results and Discussion

4.1. Experiment Setup

We compare FedCKA with the current state-of-the-art method, MOON [19], as well as FedAvg [3], FedProx [17], and SCAFFOLD [18]. We purposefully use a similar experimental setup to MOON, both because it is the most recent study and because it reports the highest performance. In particular, the CIFAR-10, CIFAR-100 [22], and Tiny ImageNet [23] datasets are used to test the performance of all methods.

For CIFAR-10, we use a small convolutional neural network. Two 5 × 5 convolutional layers comprise the base encoder, with 16 and 32 channels, and each convolutional layer is followed by a 2 × 2 max-pooling layer. A projection head of four fully connected layers follows the encoder, with 120, 84, 84, and 256 neurons. The final layer is the output layer, with one neuron per class. Although FedCKA and other methods can perform without this projection head, we include it because MOON shows a high discrepancy in performance without it. For CIFAR-100 and Tiny ImageNet, we use ResNet-50 [21]. We also add the projection head before the output layer, as per MOON.
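As a worked example of the layer sizes, the sketch below traces a 32 × 32 CIFAR-10 image through the encoder. The text does not state stride or padding, so stride 1 and no padding for the convolutions and non-overlapping 2 × 2 pooling are assumed here; the helper names are hypothetical.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial size after a convolution (assumed stride 1, no padding)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size: int, window: int) -> int:
    """Spatial size after non-overlapping max-pooling."""
    return size // window


size = 32                              # CIFAR-10 images are 32 x 32
size = pool_out(conv_out(size, 5), 2)  # conv1 (16 channels): 32 -> 28, pool: 28 -> 14
size = pool_out(conv_out(size, 5), 2)  # conv2 (32 channels): 14 -> 10, pool: 10 -> 5
flat_features = 32 * size * size       # flattened encoder output

# Encoder output, four projection-head layers, then the 10-class output layer.
layer_widths = [flat_features, 120, 84, 84, 256, 10]
print(layer_widths)  # [800, 120, 84, 84, 256, 10]
```

Under these assumptions the network has seven weight layers in total: two convolutional, four fully connected in the projection head, and one output layer.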

We use the cross-entropy loss and SGD as our optimizer with a learning rate of 0.1, momentum of 0.9, and weight decay of 0.00001. Local epochs are set to 10. These are also the parameters used in MOON. The only small changes we make are to the batch size and the number of communication rounds. We use a constant batch size of 128 and train for 100 communication rounds on CIFAR-10, 40 communication rounds on CIFAR-100, and 20 communication rounds on Tiny ImageNet. We use a lower number of communication rounds for the latter two datasets because the ResNet-50 model overfit quite quickly.

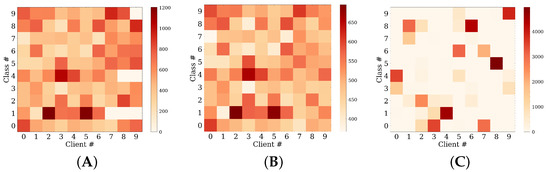

As with many previous studies, we use the Dirichlet distribution to simulate heterogeneous settings [9,19,24]. The concentration parameter $\beta$ controls the strength of heterogeneity, with $\beta \to 0$ being the most heterogeneous and $\beta \to \infty$ being non-heterogeneous. We report results for three values of $\beta$, similarly to MOON. Figure 4 shows the distribution of data across clients on the CIFAR-10 dataset for each value of $\beta$, one per panel (Figure 4A–C). All experiments were conducted using the PyTorch [25] library on a single GTX Titan V and four Intel Xeon Gold 5115 processors.

Figure 4. Distribution of the CIFAR-10 dataset across 10 clients according to the Dirichlet distribution. The x-axis shows the index of the client, and the y-axis shows the index of the class (label). Panels (A–C) show the data distribution for three values of the Dirichlet parameter $\beta$. As $\beta$ approaches 0, the heterogeneity of class distribution increases.
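A common way to simulate such a split is to draw, for every class, a Dirichlet-distributed vector of client proportions and divide that class's samples accordingly. The sketch below follows that idea; the exact procedure used in the experiments is not spelled out in the text, so the function `dirichlet_partition`, the toy label array, and the value `beta=0.5` are assumptions for illustration.

```python
import numpy as np


def dirichlet_partition(labels, num_clients: int, beta: float, seed: int = 0):
    """Split sample indices across clients with per-class Dirichlet proportions."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    client_indices = [[] for _ in range(num_clients)]
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        proportions = rng.dirichlet(np.full(num_clients, beta))
        # Convert cumulative proportions into split points within this class.
        cuts = (np.cumsum(proportions)[:-1] * len(idx)).astype(int)
        for client, part in enumerate(np.split(idx, cuts)):
            client_indices[client].extend(part.tolist())
    return client_indices


# Toy labels: 10 classes with 100 samples each, split across 10 clients.
labels = np.repeat(np.arange(10), 100)
parts = dirichlet_partition(labels, num_clients=10, beta=0.5)
print([len(p) for p in parts])
```

Smaller values of the concentration parameter concentrate each class on fewer clients, while larger values approach an even split.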

4.2. Accuracy

FedCKA adds a hyperparameter $\mu$ to control the strength of $\ell_{cka}$. We tune $\mu$ from [3, 5, 10] and report the best results. MOON and FedProx also have a $\mu$ term, which we tune as well. For MOON, we tune $\mu$ from [0.1, 1, 5, 10], and for FedProx, we tune $\mu$ from [0.001, 0.01, 0.1, 1], as used in each work. In addition, for MOON, we use the temperature $\tau$ as reported in their work.

Table 1 shows the performance across CIFAR-10, CIFAR-100, and Tiny ImageNet at a single fixed $\beta$. For FedProx, MOON, and FedCKA, we report performance with the best $\mu$. For FedCKA, the best $\mu$ is 3, 10, and 3 for CIFAR-10, CIFAR-100, and Tiny ImageNet, respectively. For MOON, the best $\mu$ is 10, 5, and 0.1. For FedProx, the best $\mu$ is 0.001, 0.1, and 0.1. Table 2 shows the performance across increasing heterogeneity on the CIFAR-10 dataset for each value of $\beta$. For FedCKA, the best $\mu$ is 5, 3, and 3 for each $\beta$, respectively. For MOON, the best $\mu$ is 0.1, 10, and 10. For FedProx, the best $\mu$ is 0.001, 0.1, and 0.001.

Table 1. Accuracy across datasets (fixed $\beta$).

Table 2. Accuracy across $\beta$ (CIFAR-10).

We observe that FedCKA consistently outperforms previous methods across different datasets and across different $\beta$. FedCKA improves performance in heterogeneous settings by regularizing layers that are naturally similar and not layers that are naturally dissimilar. It is also interesting to see that FedCKA performs better by a larger margin when $\beta$ is large. This is likely because the global model can more effectively regularize updates, as it is less biased when the data distribution approaches IID settings. However, we also observe that other methods consistently improve performance, albeit by a smaller margin than FedCKA. FedProx and SCAFFOLD improve performance, likely due to their inclusion of naturally similar layers in regularization. The performance gain is lower, as they also include naturally dissimilar layers in regularization. MOON generally improves performance compared to FedProx and SCAFFOLD, likely due to its use of a contrastive loss. That is, MOON shows that neural networks should be trained to be more similar to the global model than to past local models, rather than only being blindly similar to the global model. By only regularizing naturally similar layers using a contrastive loss based on CKA, FedCKA outperforms all methods.

Note that across most methods and settings, there are discrepancies with the accuracy reported by MOON [19]. In particular, MOON reports higher accuracy across all methods, although the model architectures are similar, if not equivalent. We verify that this discrepancy is caused by the data augmentation used in the MOON experiments. We exclude augmentation, as it would be unfair to generalize results across different non-IID settings if augmentations were used. The reported non-IIDness would decrease, as clients could create a more balanced distribution.

4.3. Communication Rounds and Local Epochs

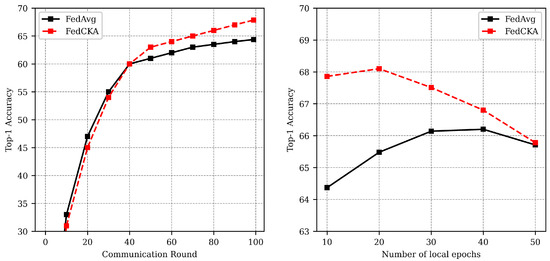

We study the effects of regularization on the performance improvement per communication round. Results are shown in Figure 5. As expected with any regularization method, we find that the accuracy of FedCKA is lower for the first 40 communication rounds. However, we also find that after 40 communication rounds, FedCKA improves performance due to effective regularization. FedCKA decreases the bias that would have otherwise limited performance by penalizing weight updates that are not in agreement with the global model.

Figure 5. Effects of communication rounds and local epochs.

We also explore the effects of the number of local epochs on overall performance. We find that the performance of both FedAvg and FedCKA increases slightly when the number of local epochs increases. However, when further increasing the number of local epochs, we find that accuracy decreases, suggesting overfitting. The small increase in performance does not warrant additional local epochs. Clients are limited in their computational budget. Thus, computation must be used sparingly.

4.4. Regularizing Only Important Layers

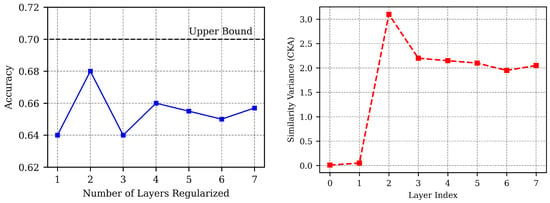

We study the effects of regularizing different numbers of layers. Using the CIFAR-10 dataset with a fixed $\beta$, we change the number of layers regularized through $\ell_{cka}$. Formally, we vary $M$ in Equation (2) from one to seven and report the accuracy in Figure 6. Accuracy is the highest when only the first two layers are regularized. Note the dotted line representing the upper bound for Federated Learning. When the same model is trained on a centralized server with the entire CIFAR-10 dataset, accuracy is 70%. FedCKA with regularization on the first two naturally similar layers nearly reaches this upper bound.

Figure 6. Accuracy with respect to the number of layers regularized and variance in similarity measures between clients on CIFAR-10 with a fixed $\beta$.

This verifies our hypothesis that only naturally similar layers, and not naturally dissimilar layers, should be regularized. By regularizing only one layer, a naturally similar layer (the second layer) would be excluded, thus decreasing performance. By regularizing three layers, a non-similar layer (the third layer) with the highest variance of non-similarity (see Figure 6) would be regularized, thus decreasing performance. The performance increase when 4–7 layers are regularized may seem anomalous, yet it is valid when considering that both of the first two naturally similar layers are included, and the weight of regularization of the third layer decreases with a higher $M$.

4.5. Using the Best Similarity Metric

We study the effects of regularizing the first two naturally similar layers with different similarity metrics. Using the CIFAR-10 dataset with a fixed $\beta$, we change the similarity metric used in $\ell_{cka}$. Formally, we replace CKA in Equation (2) with three other similarity metrics: first, the kernel CKA introduced in Kornblith et al. [10]; second, the squared Frobenius norm; third, the vectorized cosine similarity. We compare the results with these different metrics as well as the baseline, FedAvg. The results are shown in Table 3.

Table 3. Accuracy and training duration with FedCKA with different similarity metrics (CIFAR-10).

We observe that performance is the highest with CKA due to the increased accuracy of measuring similarity. Only truly dissimilar updates are penalized, thus improving performance. Note that Equation (3) includes an inner product in the numerator. While the l2-distance and cosine similarity are also based on inner products, CKA is more suitable for measuring similarities between matrices of higher dimension than the number of data points, as is the case for neural network representations [10].

Furthermore, while kernel CKA slightly outperforms linear CKA, we opt to use linear CKA considering the computational overhead. We also observe that the squared Frobenius norm and vectorized cosine similarity decreased performance only slightly. These methods outperformed most previous works. This verifies that while it is important to use an accurate similarity measure, it is more important to focus on regularizing naturally similar layers.
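To illustrate why the simpler metrics can penalize updates that are not truly dissimilar, the sketch below applies the squared Frobenius norm and the vectorized cosine similarity to a representation and a copy of it with the feature order reversed. A feature permutation is an orthogonal transformation, so the two matrices carry the same information. The function names and toy sizes are assumptions for this example.

```python
import numpy as np


def squared_frobenius(X: np.ndarray, Y: np.ndarray) -> float:
    """Squared Frobenius norm of the difference between two representations."""
    return float(np.linalg.norm(X - Y, "fro") ** 2)


def vectorized_cosine(X: np.ndarray, Y: np.ndarray) -> float:
    """Cosine similarity between the flattened representations."""
    x, y = X.ravel(), Y.ravel()
    return float(x @ y / (np.linalg.norm(x) * np.linalg.norm(y)))


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 16))  # toy activations: 200 samples, 16 features
X_permuted = X[:, ::-1]         # same features in reversed order

dist_same = squared_frobenius(X, X)           # 0: identical matrices
dist_perm = squared_frobenius(X, X_permuted)  # large, despite equal information
cos_same = vectorized_cosine(X, X)            # 1 up to rounding
cos_perm = vectorized_cosine(X, X_permuted)   # close to 0 for this random data
print(dist_same, dist_perm, cos_same, cos_perm)
```

Both metrics treat the permuted copy as very different, whereas a metric that is invariant to orthogonal transformations, such as linear CKA, would score the pair as identical.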

4.6. Efficiency and Scalability

Efficient and scalable local training procedures are important engineering principles in Federated Learning. That is, for Federated Learning to be applied to real-world applications, we must assume that clients have limited computing resources. Thus, we analyze the local training time of all methods, as shown in Table 4. Note that FedAvg is the lower bound for training time, since all other methods add a regularization term.

Table 4. Average training duration per communication round (in seconds).

For a seven-layer CNN trained on CIFAR-10, the training time for all methods is fairly similar. FedCKA extends training by the largest amount, as the matrix multiplication used to calculate the CKA similarity is expensive relative to the forward and back propagation of the small model. However, for ResNet-50 trained on Tiny ImageNet, we see that the training time of FedProx, SCAFFOLD, and MOON increases substantially. Only FedCKA has training times comparable to FedAvg. This is because FedProx and SCAFFOLD perform expensive operations on the weights of each layer, and MOON performs forward propagation on three models until the penultimate layer. All these operations scale substantially as the number of layers increases. While FedCKA also performs forward propagation on three models, the number of layers remains static, thus being most efficient with medium-sized models.

We emphasize that regularization must remain scalable for Federated Learning to be applied to state-of-the-art models. Even on ResNet-50, which is no longer considered a large model, other Federated Learning regularization methods lack scalability. This causes difficulty in testing these methods with the current state-of-the-art models, such as ViT [26], possessing 1.843 billion parameters, or slightly older models, such as EfficientNet-B7 [27], possessing 813 layers.

5. Conclusions and Future Work

Improving the performance of Federated Learning on heterogeneous data is a widely researched topic. However, many previous studies suggested that regularizing every layer of neural networks during local training is the best method to increase performance. We propose FedCKA, an alternative approach built on the most up-to-date understanding of neural networks. By regularizing naturally similar layers, but not naturally dissimilar layers, during local training, performance improves beyond previous studies. We also show that FedCKA is currently one of the best regularization methods with adequate scalability when trained with a moderately sized model.

FedCKA shows that the proper regularization of important layers improves the performance of Federated Learning on heterogeneous data. However, standardizing the comparison of neural networks is an important step in a deeper understanding of neural networks. Moreover, there are questions as to the accuracy of CKA in measuring similarity in models such as Transformers or Graph Neural Networks. These are some topics we leave for future studies.

Author Contributions

Conceptualization, H.M.S.; formal analysis, H.M.S., M.H.K. and T.-M.C.; funding acquisition, M.H.K. and T.-M.C.; Investigation, H.M.S. and M.H.K.; methodology, H.M.S.; project administration, M.H.K. and T.-M.C.; software, H.M.S.; supervision, T.-M.C.; validation, H.M.S. and M.H.K.; visualization, H.M.S.; writing—original draft, H.M.S.; writing—review and editing, H.M.S., M.H.K. and T.-M.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Institute of Information and communications Technology Planning and Evaluation (IITP) grant funded by the Korea government (MSIT) (No. 2020-0-00990, Platform Development and Proof of High Trust and Low Latency Processing for Heterogeneous·Atypical·Large Scaled Data in 5G-IoT Environment). The APC was funded by the Sungkyunkwan University and the BK21 FOUR (Graduate School Innovation) funded by the Ministry of Education (MOE, Korea) and National Research Foundation of Korea (NRF).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The CIFAR-10 and CIFAR-100 datasets are available at https://www.cs.toronto.edu/~kriz/cifar.html and the Tiny ImageNet dataset is available at https://www.kaggle.com/c/tiny-imagenet.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| FL | Federated Learning |
| IID | Independent and Identically Distributed |
| CKA | Centered Kernel Alignment |

References

- Lecun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Sejnowski, T.J. (Ed.) The Deep Learning Revolution; MIT Press: Cambridge, MA, USA, 2018.

- McMahan, H.B.; Moore, E.; Ramage, D.; Hampson, S.; Arcas, B.A.Y. Communication-Efficient Learning of Deep Networks from Decentralized Data. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics (AISTATS), Fort Lauderdale, FL, USA, 20–22 April 2017.

- Verbraeken, J.; Wolting, M.; Katzy, J.; Kloppenburg, J.; Verbelen, T.; Rellermeyer, J.S. A Survey on Distributed Machine Learning. arXiv 2019, arXiv:1912.09789.

- Samarakoon, S.; Bennis, M.; Saad, W.; Debbah, M. Distributed Federated Learning for Ultra-Reliable Low-Latency Vehicular Communications. IEEE Trans. Commun. 2020, 68, 1146–1159.

- Yang, K.; Jiang, T.; Shi, Y.; Ding, Z. Federated Learning via Over-the-Air Computation. IEEE Trans. Wirel. Commun. 2020, 19, 2022–2035.

- Brisimi, T.S.; Chen, R.; Mela, T.; Olshevsky, A.; Paschalidis, I.C.; Shi, W. Federated learning of predictive models from federated Electronic Health Records. Int. J. Med. Inform. 2018, 112, 59–67.

- Kairouz, P.; McMahan, H.B.; Avent, B.; Bellet, A.; Bennis, M.; Bhagoji, A.N.; Bonawitz, K.; Charles, Z.; Cormode, G.; Cummings, R.; et al. Advances and Open Problems in Federated Learning. arXiv 2021, arXiv:1912.04977.

- Hsu, T.M.H.; Qi, H.; Brown, M. Measuring the Effects of Non-Identical Data Distribution for Federated Visual Classification. arXiv 2019, arXiv:1909.06335.

- Kornblith, S.; Norouzi, M.; Lee, H.; Hinton, G. Similarity of Neural Network Representations Revisited. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; Volume 97, pp. 3519–3529.

- Zhang, C.; Bengio, S.; Singer, Y. Are All Layers Created Equal? In Proceedings of the ICML 2019 Workshop on Identifying and Understanding Deep Learning Phenomena, Long Beach, CA, USA, 15 June 2019.

- Zhang, L.; Shen, L.; Ding, L.; Tao, D.; Duan, L.Y. Fine-tuning global model via data-free knowledge distillation for non-iid federated learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 10174–10183.

- Shen, Y.; Zhou, Y.; Yu, L. CD2-pFed: Cyclic Distillation-guided Channel Decoupling for Model Personalization in Federated Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 10041–10050.

- Yoon, T.; Shin, S.; Hwang, S.J.; Yang, E. Fedmix: Approximation of mixup under mean augmented federated learning. arXiv 2021, arXiv:2107.00233.

- Wang, H.; Yurochkin, M.; Sun, Y.; Papailiopoulos, D.; Khazaeni, Y. Federated Learning with Matched Averaging. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020.

- Li, X.; Jiang, M.; Zhang, X.; Kamp, M.; Dou, Q. FedBN: Federated Learning on Non-IID Features via Local Batch Normalization. In Proceedings of the International Conference on Learning Representations, Virtual Event, Austria, 3–7 May 2021.

- Li, T.; Sahu, A.K.; Zaheer, M.; Sanjabi, M.; Talwalkar, A.; Smith, V. Federated Optimization in Heterogeneous Networks. arXiv 2020, arXiv:1812.06127.

- Karimireddy, S.P.; Kale, S.; Mohri, M.; Reddi, S.; Stich, S.; Suresh, A.T. SCAFFOLD: Stochastic Controlled Averaging for Federated Learning. In Proceedings of the 37th International Conference on Machine Learning, Virtual, 13–18 July 2020; Volume 119, pp. 5132–5143.

- Li, Q.; He, B.; Song, D. Model-Contrastive Federated Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2021), Nashville, TN, USA, 20–25 June 2021; pp. 10713–10722.

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A Simple Framework for Contrastive Learning of Visual Representations. arXiv 2020, arXiv:2002.05709.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2016), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Krizhevsky, A. Learning Multiple Layers of Features from Tiny Images. 2009. Available online: https://www.bibsonomy.org/bibtex/cc2d42f2b7ef6a4e76e47d1a50c8cd86 (accessed on 27 September 2022).

- Li, F.F.; Karpathy, A.; Johnson, J. Tiny ImageNet. 2014. Available online: https://www.kaggle.com/c/tiny-imagenet (accessed on 27 September 2022).

- Lin, T.; Kong, L.; Stich, S.U.; Jaggi, M. Ensemble Distillation for Robust Model Fusion in Federated Learning. arXiv 2021, arXiv:2006.07242.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2019; pp. 8024–8035.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations (ICLR 2021), Vienna, Austria, 4 May 2021.

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; Volume 97, pp. 6105–6114.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).