Abstract

A convolutional neural network (CNN) is a representative deep learning algorithm with significant advantages in image recognition and classification. Using anteroposterior pelvic radiographs as input data, we developed a CNN algorithm to differentiate pre-collapse osteonecrosis of the femoral head (ONFH) from normal hips. We retrospectively included 305 anteroposterior pelvic radiographs (right hip: pre-collapse ONFH = 79, normal = 226; left hip: pre-collapse ONFH = 62, normal = 243) as data samples. Pre-collapse ONFH was diagnosed using pelvic magnetic resonance imaging data for each patient. Of the 305 radiographs, 69.8% (213) were randomly assigned to the training set, 21.0% (64) to the validation set, and the remaining 9.2% (28) to the test set, which was used to evaluate the performance of the developed CNN algorithm. On the test data, the area under the curve of the CNN algorithm was 0.912 (95% confidence interval, 0.773–1.000) for the right hip and 0.902 (95% confidence interval, 0.747–1.000) for the left hip. These results suggest that a CNN algorithm trained on pelvic radiographs could help diagnose pre-collapse ONFH.

1. Introduction

Osteonecrosis of the femoral head (ONFH) is a common disorder characterized by bone necrosis of the hip caused by decreased blood flow to the femoral head [1]. It often progresses to subchondral fracture and collapse of the femoral head, which cause disabling hip pain and limited hip motion and eventually require hip arthroplasty [2]. Magnetic resonance imaging (MRI) is the gold standard for diagnosing ONFH, with a sensitivity of almost 100%, even for early ONFH [3]. However, in clinical practice, radiography is usually the first-line imaging modality for patients with hip pain owing to its low cost [2]. The main limitation of hip or pelvic radiography is its low diagnostic sensitivity for ONFH [2,4,5]; in particular, its sensitivity for pre-collapse ONFH is only 50–70% [2]. Therefore, ONFH in the pre-collapse stage is often overlooked or misdiagnosed.

Deep learning is an artificial intelligence technique in which algorithms learn rules and patterns from the given data through iterative, trial-and-error optimization and extract meaningful information [6,7,8,9]. It is based on artificial neural networks, which are loosely modeled on the structure and function of the human brain and use a large number of hidden layers [6,7,8,9]. Deep learning can outperform traditional data analysis techniques, can learn from unstructured and perceptual data such as images and language, and can extract key features automatically [10]. A convolutional neural network (CNN) is a deep learning technique widely used in image recognition and classification [11,12].

A CNN model commonly consists of an input layer, convolutional layers, pooling layers, fully connected layers, and an output layer. Because the learnable kernel of a convolutional layer is shared across all positions of the image, it greatly reduces the number of parameters and the computational complexity compared with performing image classification using a basic fully connected artificial neural network [13]. CNNs represent substantial progress in automated image classification because they do not require the hand-crafted image preprocessing and feature extraction that are essential for classical machine learning algorithms [14].
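To illustrate why a shared convolutional kernel reduces complexity, the short sketch below counts the trainable parameters of one fully connected layer and one 3 × 3 convolutional layer applied to the same input. The 500 × 500 single-channel input mirrors the hip ROI size used in this study; the 64 units/filters are an arbitrary, hypothetical choice.

```python
def dense_params(in_h, in_w, in_c, units):
    """Weights + biases of a fully connected layer applied to a flattened image."""
    return in_h * in_w * in_c * units + units


def conv2d_params(kernel, in_c, filters):
    """Weights + biases of a 2D convolution. The count does not depend on the
    image size because the same kernel is reused at every spatial position."""
    return kernel * kernel * in_c * filters + filters


print(dense_params(500, 500, 1, 64))  # 16000064
print(conv2d_params(3, 1, 64))        # 640
```

The fully connected layer needs one weight per pixel per unit, whereas the convolution needs only 3 × 3 weights per filter regardless of the image resolution.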

CNNs can detect features in image data that humans cannot readily recognize [6,7,11,12]. Recently, several studies have demonstrated the usefulness of CNNs for diagnosing various disorders from imaging data, and their clinical use is gradually increasing [15,16,17]. We therefore believe that a CNN would also be useful for diagnosing pre-collapse ONFH.

In the current study, we developed a CNN algorithm to diagnose pre-collapse ONFH using anteroposterior pelvic radiographs as input data and evaluated its diagnostic accuracy.

2. Materials and Methods

This retrospective study was conducted at a single hospital and was approved by the Institutional Review Board of Yeungnam University Hospital, which waived the requirement for informed consent owing to the retrospective nature of the study. All methods were carried out in accordance with relevant guidelines and regulations.

2.1. Subjects

A total of 102 patients with pre-collapse ONFH (mean age = 55.3 ± 15.7 years; M:F = 55:47; right ONFH = 40 patients, left ONFH = 23 patients, bilateral ONFH = 39 patients) who visited our university hospital from January 2000 to December 2021 were consecutively recruited according to the following inclusion criteria: (1) pre-collapse ONFH confirmed on pelvic MRI; (2) age ≥ 20 years at the time of diagnosis; (3) a pelvic anteroposterior radiograph taken within 30 days of the pelvic MRI; and (4) no history of previous hip surgery, coexisting fracture, or osteomyelitis. In addition, a total of 203 subjects with normal hip joints (mean age = 52.5 ± 19.0 years; M:F = 82:121) who visited our university hospital from January 2010 to December 2021 were consecutively recruited. Their inclusion criteria were (1) no specific abnormal findings in either hip joint on pelvic MRI and, otherwise, the same criteria (2), (3), and (4) as for patients with pre-collapse ONFH.
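The shared inclusion criteria (2) to (4) can be expressed as a simple screening function. This is a minimal sketch: the `Candidate` record and its field names are hypothetical, and treating the 30-day window as an absolute interval (radiograph before or after the MRI) is an assumption, since the text does not say which came first.

```python
from dataclasses import dataclass
from datetime import date

MIN_AGE = 20            # criterion (2): age >= 20 years at diagnosis
MAX_INTERVAL_DAYS = 30  # criterion (3): radiograph within 30 days of MRI


@dataclass
class Candidate:
    # Hypothetical record fields; not the study's actual data schema.
    age_at_diagnosis: int
    radiograph_date: date
    mri_date: date
    prior_hip_surgery: bool = False
    coexisting_fracture: bool = False
    osteomyelitis: bool = False


def meets_shared_criteria(c: Candidate) -> bool:
    """Criteria (2)-(4), shared by the ONFH and normal groups."""
    interval = abs((c.radiograph_date - c.mri_date).days)  # assumed absolute
    return (
        c.age_at_diagnosis >= MIN_AGE
        and interval <= MAX_INTERVAL_DAYS
        and not (c.prior_hip_surgery or c.coexisting_fracture or c.osteomyelitis)
    )


print(meets_shared_criteria(Candidate(45, date(2020, 1, 1), date(2020, 1, 25))))
```

Criterion (1), the MRI finding, differs between the two groups and would be checked separately.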

Out of 305 radiographs of the right hip, 79 were ONFH radiographs and 226 were normal radiographs. Out of 305 radiographs of the left hip, 62 were ONFH radiographs and 243 were normal radiographs.
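Each radiograph contributes one right-hip and one left-hip sample. The per-side counts above are reproduced exactly if the unaffected contralateral hip of a patient with unilateral ONFH is counted as normal, as the following arithmetic check shows (the variable names are illustrative).

```python
# Patient-level counts reported in Section 2.1
onfh_right_only = 40
onfh_left_only = 23
onfh_bilateral = 39
normal_subjects = 203

total = onfh_right_only + onfh_left_only + onfh_bilateral + normal_subjects


def hip_counts(onfh_this_side_only, onfh_other_side_only):
    """(ONFH, normal) hips on one side; the contralateral hip of a
    unilateral case is counted as normal."""
    positives = onfh_this_side_only + onfh_bilateral
    negatives = normal_subjects + onfh_other_side_only
    return positives, negatives


right = hip_counts(onfh_right_only, onfh_left_only)
left = hip_counts(onfh_left_only, onfh_right_only)
print(total, right, left)  # 305 (79, 226) (62, 243)
```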

2.2. Pelvic Anteroposterior Radiograph and MRI

Pelvic anteroposterior radiographs were obtained using a single system (Innovision-SH, Seoul, Korea). Pelvic magnetic resonance (MR) images were obtained using a 1.5-T Gyroscan Intera (Philips, Amsterdam, The Netherlands), a 3.0-T MAGNETOM Skyra (Siemens, Berlin, Germany), and a 3.0-T Ingenia Elition (Philips, Amsterdam, The Netherlands). Owing to the retrospective nature of our study, MR images were acquired using various protocols.

One pelvic anteroposterior radiograph per patient was used as the input image for developing the deep learning algorithm.

2.3. Deep Learning Model

We used Python 3.8.8, TensorFlow 2.8.0 with Keras, and the scikit-learn 0.24.1 library to develop deep learning models for ONFH analysis. We compared four state-of-the-art pre-trained CNN architectures (EfficientNetV2B0, EfficientNetV2B1, EfficientNetV2B2, and Xception) and trained two models separately, one for each hip. EfficientNetV2 is a lightweight model that reduces the number of parameters by almost 45% compared with its predecessor, EfficientNet, while achieving a similar Top-1 accuracy on ImageNet. The Xception model is built from depthwise separable convolution layers and shows excellent classification performance compared with the Inception V3 and ResNet-152 models [18,19].
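The parameter saving of Xception's depthwise separable convolutions comes from factorizing a standard convolution into a per-channel (depthwise) spatial convolution followed by a 1 × 1 (pointwise) convolution. The arithmetic sketch below uses an arbitrary, hypothetical layer with 256 input and 256 output channels and omits biases.

```python
def standard_conv_params(k, c_in, c_out):
    """One k x k x c_in kernel per output channel (biases omitted)."""
    return k * k * c_in * c_out


def separable_conv_params(k, c_in, c_out):
    """Depthwise: one k x k kernel per input channel; pointwise: a 1 x 1
    convolution mapping c_in channels to c_out channels (biases omitted)."""
    return k * k * c_in + c_in * c_out


print(standard_conv_params(3, 256, 256))   # 589824
print(separable_conv_params(3, 256, 256))  # 67840
```

For this illustrative layer, the separable form needs roughly one-ninth of the weights.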

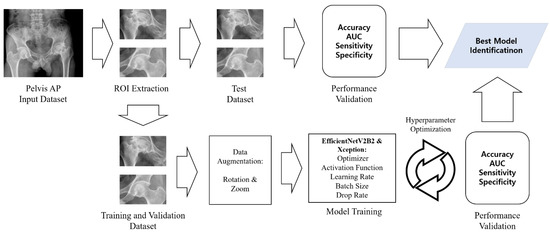

For the right hip ONFH model, the EfficientNetV2B2 model was employed and fine-tuned with its top three layers unfrozen. For the left hip ONFH model, the Xception model was employed, and all of its layers were trained. The details of the model training process and results are summarized in Figure 1 and Table 1.

Figure 1.

Diagram of the osteonecrosis of the femoral head classification process. AP, anteroposterior; ROI, region of interest; AUC, area under the curve.

Table 1.

Performance of deep learning models for diagnosing osteonecrosis of the femoral head.

From each patient’s original radiograph, an initial region-of-interest (ROI) image containing only the left and right hip portions, which are essential for detecting ONFH, was extracted and standardized to a size of 500(H) × 1200(W). From each initial ROI image, two 500(H) × 500(W) ROI images containing only the left or the right hip were then extracted. The left and right hip ROI images were fed into the left and right hip ONFH detection models, respectively, for training.
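The ROI steps can be sketched with NumPy as follows. This is an illustration under stated assumptions, not the authors' pipeline. The resizing method is not reported, so a nearest-neighbour resize stands in for it. The sketch also assumes the usual anteroposterior display (the patient's right hip appears on the viewer's left) and that each hip occupies the outermost 500 columns of the 1200-pixel-wide ROI; these crop positions are hypothetical.

```python
import numpy as np

ROI_H, ROI_W = 500, 1200  # initial ROI size stated in the text
HIP = 500                 # side length of each single-hip crop


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize via index arithmetic (a stand-in for the
    unspecified standardization step)."""
    in_h, in_w = img.shape[:2]
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return img[rows[:, None], cols[None, :]]


def split_hips(roi):
    """Return (right_hip, left_hip) 500x500 crops from a 500x1200 ROI.

    Assumes the patient's right hip is on the image's left side and that each
    hip occupies the outermost 500 columns (hypothetical crop positions).
    """
    if roi.shape[:2] != (ROI_H, ROI_W):
        raise ValueError(f"expected {(ROI_H, ROI_W)}, got {roi.shape[:2]}")
    return roi[:, :HIP], roi[:, ROI_W - HIP:]


pelvis_crop = np.random.default_rng(0).random((1000, 2400))  # dummy image
roi = resize_nearest(pelvis_crop, ROI_H, ROI_W)
right_hip, left_hip = split_hips(roi)
print(roi.shape, right_hip.shape, left_hip.shape)  # (500, 1200) (500, 500) (500, 500)
```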

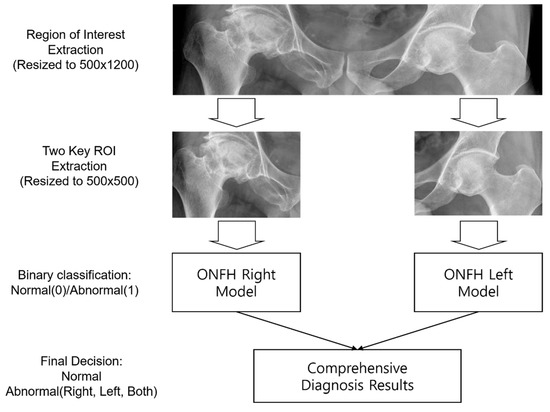

The ONFH diagnosis process using the proposed deep learning model is summarized in Figure 2. In the left and right ONFH models, the left and right hips were diagnosed as 0 (normal) or 1 (ONFH), respectively. By combining the results of the two models, each patient’s diagnosis was determined as normal (both left and right 0), left ONFH (left 1, right 0), right ONFH (left 0, right 1), or both ONFH (both left and right 1).
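The patient-level decision rule described above is a lookup on the pair of hip-level labels. In the sketch below, the 0.5 threshold used to binarize each model's sigmoid output is an assumption; the text does not state the cut-off.

```python
def hip_label(probability, threshold=0.5):
    """Binarize a sigmoid output: 1 = ONFH, 0 = normal (threshold assumed)."""
    return int(probability >= threshold)


def patient_diagnosis(left_label, right_label):
    """Map the two hip-level labels to the four patient-level categories."""
    return {
        (0, 0): "normal",
        (1, 0): "left ONFH",
        (0, 1): "right ONFH",
        (1, 1): "both ONFH",
    }[(left_label, right_label)]


print(patient_diagnosis(hip_label(0.83), hip_label(0.12)))  # left ONFH
```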

Figure 2.

Steps for developing a convolutional neural network algorithm. ROI, region of interest; ONFH, osteonecrosis of the femoral head.

2.4. Experiment

To monitor overfitting and evaluate generalization to unseen data, the 305 images were divided as follows: 69.8% (213 images), 21.0% (64 images), and 9.2% (28 images) were assigned to the training, validation, and test sets, respectively. Details of the dataset configurations are listed in Table 1. TensorFlow version 2.8.0 (Google, Mountain View, CA, USA) and scikit-learn version 0.24.1 were used to train the models. Table 2 shows the layers and parameters of the proposed models.
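A reproducible random split with the reported set sizes can be sketched as below. Because the text reports a single split of the 305 radiographs, the sketch splits radiograph indices, so the left and right hip crops of one radiograph stay in the same subset. Whether the authors stratified by label or used a particular seed is not stated; this sketch does neither and uses an arbitrary seed.

```python
import random


def split_indices(n, n_train, n_val, seed=42):
    """Randomly partition indices 0..n-1 into train/validation/test lists."""
    if n_train + n_val > n:
        raise ValueError("train + validation sizes exceed n")
    idx = list(range(n))
    random.Random(seed).shuffle(idx)  # seed is arbitrary (assumption)
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


train, val, test = split_indices(305, 213, 64)
print(len(train), len(val), len(test))             # 213 64 28
print(f"{213/305:.1%} {64/305:.1%} {28/305:.1%}")  # 69.8% 21.0% 9.2%
```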

Table 2.

Layer types and parameters in the osteonecrosis of the femoral head (ONFH) diagnosis model.

To reduce overfitting, a global average pooling (GlobalAveragePooling2D) layer was used instead of a fully connected dense layer, and dropout with a rate of 20% was applied. Finally, the output dense layer contained one neuron with a sigmoid activation function for binary classification.
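The classification head described above (global average pooling, dropout at 0.2, one sigmoid unit) can be illustrated with a NumPy forward pass. Global average pooling has no weights, so the output layer has only C + 1 trainable parameters (C being the number of channels in the backbone's last feature map) instead of H·W·C + 1 if the map were flattened. The dropout follows the inverted-dropout convention that Keras' `Dropout` layer uses. The toy feature map and weights are hypothetical stand-ins for a trained backbone.

```python
import numpy as np


def global_average_pool(feature_map):
    """(H, W, C) feature map -> (C,) vector: one mean per channel, no weights."""
    return feature_map.mean(axis=(0, 1))


def dropout(x, rate, training, rng):
    """Inverted dropout: zero a fraction `rate` of units and rescale the rest
    by 1 / (1 - rate) during training; identity at inference."""
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def head_forward(feature_map, weights, bias, rate=0.2, training=False, rng=None):
    """GAP -> dropout(0.2) -> Dense(1, sigmoid): estimated probability of ONFH."""
    pooled = global_average_pool(feature_map)
    pooled = dropout(pooled, rate, training, rng)
    return float(sigmoid(pooled @ weights + bias))


# Toy backbone output with C = 8 channels (real backbones have far more).
rng = np.random.default_rng(0)
features = rng.random((16, 16, 8))
w, b = rng.normal(size=8), 0.0
print(head_forward(features, w, b))  # inference: dropout is inactive
```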

2.5. Statistical Analysis

Statistical analyses were performed using Python 3.8.8 and scikit-learn version 0.24.1. Receiver operating characteristic (ROC) curve analysis was performed, and the area under the curve (AUC) was calculated. The 95% confidence interval (CI) for the AUC was calculated using the method described by DeLong et al. [20]. We also estimated the diagnostic accuracy of the developed CNN algorithm. In addition, two physiatrists (S.Y.K. and G.S.C.) with more than 15 years of experience in musculoskeletal disorders determined the presence of ONFH on the same pelvic anteroposterior radiographs that were used as input data for the CNN algorithm. Both physiatrists were blinded to the MRI results during this assessment.
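The text cites DeLong et al. [20] but does not show an implementation. The NumPy sketch below computes the AUC as the normalized Mann–Whitney statistic and its DeLong standard error from the per-case "structural components", then forms a normal-approximation 95% CI. Truncating the interval to [0, 1] is an assumption on our part, although it would be consistent with the upper bounds reported as 1.000.

```python
import numpy as np


def delong_auc_ci(y_true, y_score, z=1.959964):
    """AUC with a DeLong (1988) standard error and a normal-approximation CI.

    The CI is truncated to [0, 1] (assumption).
    """
    y_true = np.asarray(y_true).astype(bool)
    y_score = np.asarray(y_score, dtype=float)
    pos, neg = y_score[y_true], y_score[~y_true]
    m, n = len(pos), len(neg)
    if m < 2 or n < 2:
        raise ValueError("need at least two positive and two negative cases")
    # psi[i, j] = 1 if positive i outranks negative j, 0.5 for ties, else 0
    diff = pos[:, None] - neg[None, :]
    psi = (diff > 0) + 0.5 * (diff == 0)
    auc = psi.mean()        # Mann-Whitney U / (m * n)
    v10 = psi.mean(axis=1)  # structural components of the positives
    v01 = psi.mean(axis=0)  # structural components of the negatives
    se = np.sqrt(v10.var(ddof=1) / m + v01.var(ddof=1) / n)
    return auc, (max(0.0, auc - z * se), min(1.0, auc + z * se))


auc, (lo, hi) = delong_auc_ci([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
print(f"AUC = {auc:.3f}, 95% CI {lo:.3f}-{hi:.3f}")
```

With test sets as small as 28 images, the standard error is large, which is why the reported intervals are wide.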

3. Results

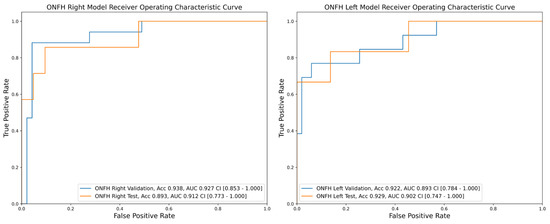

For the diagnosis of pre-collapse ONFH in the right hip, the AUCs of the deep learning model on the validation and test datasets were 0.927 (95% CI, 0.853–1.000) and 0.912 (95% CI, 0.773–1.000), respectively (Figure 3). For the diagnosis of pre-collapse ONFH in the left hip, the AUCs on the validation and test datasets were 0.893 (95% CI, 0.784–1.000) and 0.902 (95% CI, 0.747–1.000), respectively (Figure 3).

Figure 3.

Receiver operating characteristic curves for the validation and test datasets of anteroposterior pelvic radiographs for the convolutional neural network algorithms diagnosing osteonecrosis of the femoral head in the right and left hips. ONFH, osteonecrosis of the femoral head; Acc, accuracy; AUC, area under the curve.

The diagnostic accuracies for pre-collapse ONFH in the left hip were 92.2% and 92.9% for the validation and test sets, respectively, and those in the right hip were 93.8% and 89.3%, respectively. The diagnostic accuracies of the two physicians were 78.0% and 76.7%, respectively, for the right hip and 79.7% and 78.4%, respectively, for the left hip.

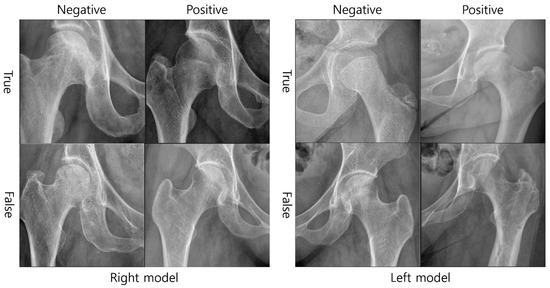

Additionally, Figure 4 shows example radiographs for each outcome of the right and left diagnostic models, grouped into correct diagnoses (true negatives and true positives) and incorrect diagnoses (false negatives and false positives).

Figure 4.

Examples of classification of diagnostic results by the developed model.

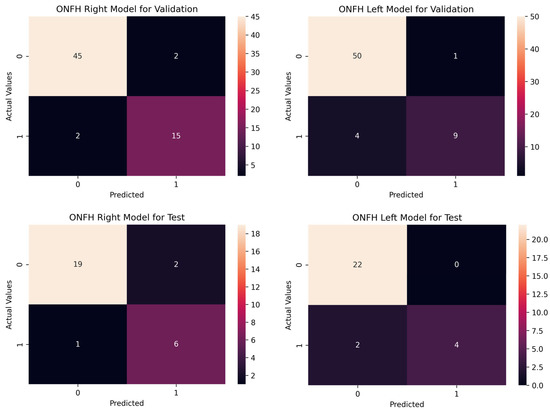

Figure 5 shows the correct classification and misclassification cases of the ONFH model. The ONFH right model correctly classified 60 images out of 64 validation data and misclassified four images (two false positives and two false negatives). The left model correctly classified 59 images out of 64 validation data and misclassified five images (one false positive and one false negative).

Figure 5.

Confusion matrix for osteonecrosis of the femoral head (ONFH) right and left models.

4. Discussion

4.1. Overall Performance

In this study, we developed CNN models to determine the presence of pre-collapse ONFH. On the test dataset, the AUCs of our models for classifying anteroposterior pelvic radiographs as “ONFH” or “normal” were 0.912 and 0.902 for the right and left hip joints, respectively. Considering that an AUC ≥ 0.9 is generally regarded as outstanding and 0.8 ≤ AUC < 0.9 as excellent [21], the performance of our models for determining the presence of ONFH on anteroposterior pelvic radiographs appears to be excellent to outstanding.
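The qualitative bands quoted above can be applied to both the point estimates and the lower 95% CI bounds of the test-set AUCs reported in the Abstract (0.912 [0.773–1.000] for the right hip and 0.902 [0.747–1.000] for the left hip). The sketch encodes only the two bands stated in the text. It shows that, although both point estimates fall in the "outstanding" band, both lower bounds fall below 0.8, which reflects the small test set.

```python
def describe_auc(auc):
    """Bands as stated in the text (after Mandrekar [21]); lower bands omitted."""
    if auc >= 0.9:
        return "outstanding"
    if auc >= 0.8:
        return "excellent"
    return "below the bands quoted in the text"


reported = {"right hip": (0.912, 0.773), "left hip": (0.902, 0.747)}
for hip, (point, lower) in reported.items():
    print(hip, describe_auc(point), "| lower 95% CI bound:", describe_auc(lower))
```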

In addition, whereas the accuracy of the two physicians specializing in musculoskeletal disorders in determining the presence of ONFH was approximately 76–80%, that of our models was approximately 90%. We believe that our model could help clinicians diagnose pre-collapse ONFH when only pelvic radiographs, rather than MRI or computed tomography (CT) scans, are available. In clinical practice, because of the high cost and limited accessibility of MRI, clinicians frequently evaluate hip joint pathologies using physical examination and pelvic or hip radiography. However, because of the low diagnostic accuracy of radiography, an exact diagnosis of ONFH is often difficult in these settings [2,4,5]. Although our CNN model does not match the accuracy of MRI, we believe that a CNN model trained on anteroposterior pelvic radiographs can serve as a supplementary tool for clinicians to determine the presence of pre-collapse ONFH.

A deep learning algorithm is typically a feedforward neural network, such as a multilayer perceptron, with multiple hidden layers, which allows it to recognize detailed characteristics of input data [6,7,12]. A CNN is a deep learning algorithm that receives image data as input and transforms them repeatedly using convolution and pooling operations [6,7,12]. Through these processes, important features or patterns of the input data can be extracted and differentiated [6,7,12]. We believe that our CNN algorithm recognized valuable features or patterns in the pelvic radiographs and may have extracted specific characteristics, such as sclerosis or cystic changes, that distinguish ONFH from normal images.
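The convolution and pooling operations described above can be illustrated on a toy image with NumPy. In this sketch, a hand-set vertical-edge kernel responds to an intensity step and 2 × 2 max pooling then halves the resolution while keeping the strongest response. In a trained CNN, the kernel values are learned rather than set by hand, and the toy image is hypothetical.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation (what CNN libraries call convolution)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x):
    return np.maximum(x, 0.0)


def max_pool2x2(x):
    """Non-overlapping 2x2 max pooling (an odd trailing row/column is dropped)."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    x = x[:h, :w]
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


# Toy 6x6 "image" with a vertical edge, and a hand-set vertical-edge kernel.
image = np.zeros((6, 6))
image[:, 3:] = 1.0
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)
features = max_pool2x2(relu(conv2d_valid(image, kernel)))
print(features)
```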

4.2. Performance Comparison with Previous Studies

Two previous studies have reported deep learning models for diagnosing ONFH using pelvic or hip radiographs as input data (Table 3) [22,23]. In 2019, Chee et al. [22] developed a deep learning algorithm using 1892 anteroposterior hip radiographs as input data; its sensitivity and specificity were 84.8% and 91.3%, respectively, and its AUC was 0.930. In 2020, Li et al. [23] developed a deep learning algorithm using 3136 anteroposterior hip radiographs as input data; its AUC was 0.974, and its sensitivity and specificity were 90% and 94.6%, respectively.

Table 3.

Classification performance comparison with prior studies.

In our study, the AUC, sensitivity, and specificity were calculated on the test data. The AUCs were 0.912 (right hip model) and 0.902 (left hip model), which are comparable with those of previous studies that used much larger datasets. The sensitivity and specificity were 85.7% and 90.5%, respectively, for the right hip model and 66.7% and 100%, respectively, for the left hip model. The sensitivity of the left hip model was relatively low, whereas its specificity was very high. The proportion of ONFH-positive images was smaller for the left hip (20%) than for the right hip (25%), suggesting that the left hip model may have had too few positive examples to learn from.
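To make the metric definitions concrete, the sketch below computes sensitivity, specificity, and accuracy from confusion-matrix counts. The counts are back-calculated so that they reproduce the reported test-set percentages for 28 images per hip; they are not reported in the text, so treat them as an illustration of the arithmetic only. They also show why the left hip sensitivity is fragile: with only six positive test cases, each missed case changes sensitivity by about 16.7 percentage points.

```python
def metrics(tp, fn, tn, fp):
    """Sensitivity, specificity, and accuracy from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return sensitivity, specificity, accuracy


# Hypothetical counts back-calculated from the reported percentages
# (28 test images per hip); not reported directly in the text.
right = metrics(tp=6, fn=1, tn=19, fp=2)
left = metrics(tp=4, fn=2, tn=22, fp=0)
for name, m in (("right", right), ("left", left)):
    print(name, " ".join(f"{v:.1%}" for v in m))
# right 85.7% 90.5% 89.3%
# left 66.7% 100.0% 92.9%
```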

However, these previous studies included radiographs of both pre-collapse and collapsed ONFH and did not evaluate diagnostic accuracy separately for the pre-collapse and collapsed stages. Therefore, to the best of our knowledge, our study is the first to demonstrate the feasibility of using a deep learning algorithm trained on pelvic radiographs to diagnose pre-collapse ONFH.

4.3. Limitations

Our study has some limitations. First, we used a relatively small number of pelvic radiographs to train the CNN algorithm; a larger training dataset may improve the accuracy of the model. Second, we used image data obtained from a single machine at a single center; therefore, the generalizability of our findings may be limited. Future studies should include radiographs obtained from a wider range of machines at external centers.

5. Conclusions

In the current study, we showed that a CNN algorithm trained on anteroposterior pelvic radiographs can help diagnose pre-collapse ONFH. We believe that our CNN algorithm could help clinicians decide whether further evaluation with MRI is needed to confirm the diagnosis of ONFH. Early diagnosis of ONFH with our CNN algorithm could help prevent further aggravation of the disease (e.g., subchondral fracture or cortical depression) [24]. In the future, we plan to improve the diagnostic accuracy of the CNN model for practical clinical use.

Author Contributions

Conceptualization, J.K.K., G.-S.C., S.Y.K. and M.C.C.; methodology, J.K.K., G.-S.C., S.Y.K. and M.C.C.; software, J.K.K. and M.C.C.; validation, J.K.K., G.-S.C., S.Y.K. and M.C.C.; formal analysis, J.K.K. and M.C.C.; investigation, J.K.K., G.-S.C., S.Y.K. and M.C.C.; resources, J.K.K., G.-S.C., S.Y.K. and M.C.C.; data curation, J.K.K., G.-S.C., S.Y.K. and M.C.C.; writing—original draft preparation, J.K.K., G.-S.C., S.Y.K. and M.C.C.; writing—review and editing, J.K.K., G.-S.C., S.Y.K. and M.C.C.; visualization, J.K.K., G.-S.C., S.Y.K. and M.C.C.; supervision, J.K.K., G.-S.C., S.Y.K. and M.C.C. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the National Research Foundation of Korea Grant funded by the Korean government, No. NRF-2021R1A2C1013073. This work was supported by the 2022 Yeungnam University Research Grant.

Institutional Review Board Statement

The Institutional Review Board of Yeungnam University Hospital approved the study (2021-05-028).

Informed Consent Statement

Patient consent was waived due to the retrospective nature of this study.

Data Availability Statement

The datasets generated and/or analyzed during the current study are not publicly available for privacy reasons but are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Hines, J.T.; Jo, W.L.; Cui, Q.; Mont, M.A.; Koo, K.H.; Cheng, E.Y.; Goodman, S.B.; Ha, Y.C.; Hernigou, P.; Jones, L.C.; et al. Osteonecrosis of the Femoral Head: An Updated Review of ARCO on Pathogenesis, Staging and Treatment. J. Korean Med. Sci. 2021, 36, e177.

2. Chee, C.G.; Cho, J.; Kang, Y.; Kim, Y.; Lee, E.; Lee, J.W.; Ahn, J.M.; Kang, H.S. Diagnostic accuracy of digital radiography for the diagnosis of osteonecrosis of the femoral head, revisited. Acta Radiol. 2019, 60, 969–976.

3. Fordyce, M.J.; Solomon, L. Early detection of avascular necrosis of the femoral head by MRI. J. Bone Joint Surg. Br. 1993, 75, 365–367.

4. Choi, H.R.; Steinberg, M.E.; Cheng, E.Y. Osteonecrosis of the femoral head: Diagnosis and classification systems. Curr. Rev. Musculoskelet. Med. 2015, 8, 210–220.

5. Zhao, D.; Zhang, F.; Wang, B.; Liu, B.; Li, L.; Kim, S.Y.; Goodman, S.B.; Hernigou, P.; Cui, Q.; Lineaweaver, W.C.; et al. Guidelines for clinical diagnosis and treatment of osteonecrosis of the femoral head in adults (2019 version). J. Orthop. Translat. 2020, 21, 100–110.

6. Kim, J.K.; Choo, Y.J.; Choi, G.S.; Shin, H.; Chang, M.C.; Park, D. Deep Learning Analysis to Automatically Detect the Presence of Penetration or Aspiration in Videofluoroscopic Swallowing Study. J. Korean Med. Sci. 2022, 37, e42.

7. Kim, J.K.; Choo, Y.J.; Shin, H.; Choi, G.S.; Chang, M.C. Prediction of ambulatory outcome in patients with corona radiata infarction using deep learning. Sci. Rep. 2021, 11, 7989.

8. Lundervold, A.S.; Lundervold, A. An overview of deep learning in medical imaging focusing on MRI. Z. Med. Phys. 2019, 29, 102–127.

9. Sejnowski, T.J. The unreasonable effectiveness of deep learning in artificial intelligence. Proc. Natl. Acad. Sci. USA 2020, 117, 30033–30038.

10. Jiang, F.; Jiang, Y.; Zhi, H.; Dong, Y.; Li, H.; Ma, S.; Wang, Y.; Dong, Q.; Shen, H.; Wang, Y. Artificial intelligence in healthcare: Past, present and future. Stroke Vasc. Neurol. 2017, 2, 230–243.

11. Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional neural networks: An overview and application in radiology. Insights Imaging 2018, 9, 611–629.

12. Kim, J.K.; Chang, M.C.; Park, D. Deep Learning Algorithm Trained on Brain Magnetic Resonance Images and Clinical Data to Predict Motor Outcomes of Patients with Corona Radiata Infarct. Front. Neurosci. 2021, 15, 795553.

13. O’Shea, K.; Nash, R. An introduction to convolutional neural networks. arXiv 2015, arXiv:1511.08458.

14. Marques, G.; Agarwal, D.; de la Torre Díez, I. Automated medical diagnosis of COVID-19 through EfficientNet convolutional neural network. Appl. Soft Comput. 2020, 96, 106691.

15. Doerr, S.A.; Weber-Levine, C.; Hersh, A.M.; Awosika, T.; Judy, B.; Jin, Y.; Raj, D.; Liu, A.; Lubelski, D.; Jones, C.K.; et al. Automated prediction of the Thoracolumbar Injury Classification and Severity Score from CT using a novel deep learning algorithm. J. Orthop. Sci. 2022, 52, E5.

16. Ouyang, H.; Meng, F.; Liu, J.; Song, X.; Li, Y.; Yuan, Y.; Wang, C.; Lang, N.; Tian, S.; Yao, M.; et al. Evaluation of Deep Learning-Based Automated Detection of Primary Spine Tumors on MRI Using the Turing Test. Front. Oncol. 2022, 12, 814667.

17. Tao, J.; Wang, Y.; Liang, Y.; Zhang, A. Evaluation and Monitoring of Endometrial Cancer Based on Magnetic Resonance Imaging Features of Deep Learning. Contrast Media Mol. Imaging 2022, 2022, 5198592.

18. Tan, M.; Le, Q. EfficientNetV2: Smaller Models and Faster Training. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 10096–10106.

19. Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807.

20. DeLong, E.R.; DeLong, D.M.; Clarke-Pearson, D.L. Comparing the areas under two or more correlated receiver operating characteristic curves: A nonparametric approach. Biometrics 1988, 44, 837–845.

21. Mandrekar, J.N. Receiver operating characteristic curve in diagnostic test assessment. J. Thorac. Oncol. 2010, 5, 1315–1316.

22. Chee, C.G.; Kim, Y.; Kang, Y.; Lee, K.J.; Chae, H.D.; Cho, J.; Nam, C.M.; Choi, D.; Lee, E.; Lee, J.W.; et al. Performance of a Deep Learning Algorithm in Detecting Osteonecrosis of the Femoral Head on Digital Radiography: A Comparison with Assessments by Radiologists. Am. J. Roentgenol. 2019, 213, 155–162.

23. Li, Y.; Li, Y.; Tian, H. Deep Learning-Based End-to-End Diagnosis System for Avascular Necrosis of Femoral Head. IEEE J. Biomed. Health Inform. 2021, 25, 2093–2102.

24. Petek, D.; Hannouche, D.; Suva, D. Osteonecrosis of the femoral head: Pathophysiology and current concepts of treatment. EFFORT Open Rev. 2019, 4, 85–97.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).