Assistive Robot with an AI-Based Application for the Reinforcement of Activities of Daily Living: Technical Validation with Users Affected by Neurodevelopmental Disorders

Abstract

1. Introduction

- We design and develop a user-friendly graphical interface for the robot to enable efficient human–robot interaction (see Section 3.2.2). Through this interface, the robot interacts with individuals with NDDs and assists in their therapy sessions to reinforce activities of daily living (ADLs). We have also embedded in LOLA2 an AI-based online action detection module for monitoring ADLs, which is detailed in Section 3.2.3.

- Section 4 presents the first technical validation of the proposed technology with a set of four real final users with NDDs. The results show that the developed robot is capable of assisting and monitoring people with NDDs as they perform their daily living tasks.

2. Related Work

2.1. Social Assistive Robots

2.2. AI for Monitoring ADLs

3. Human–Robot Interaction Application for the Reinforcement of ADLs

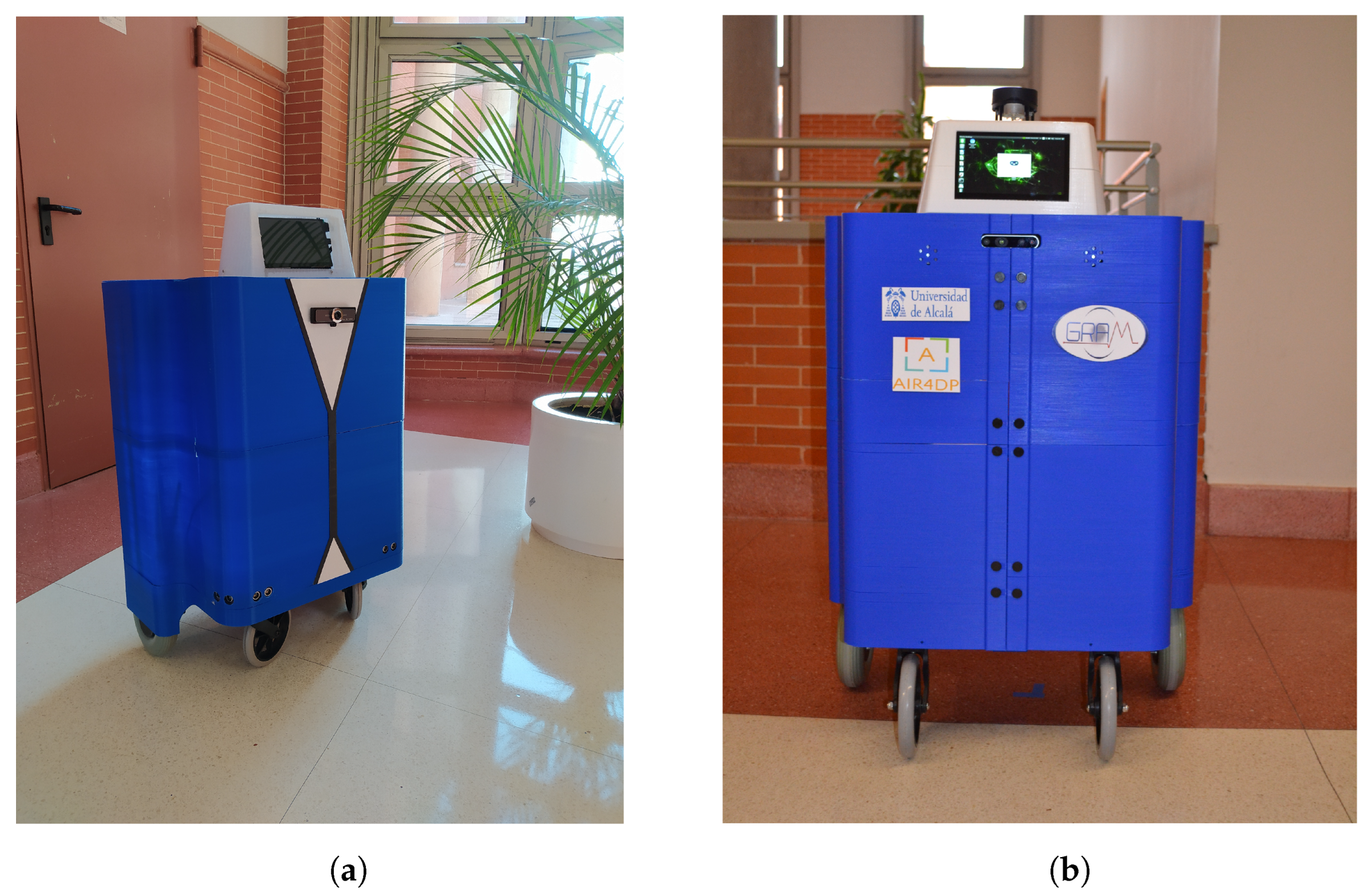

3.1. The Assistive Robotic Platform: LOLA2

3.2. Human–Robot Interaction Application: Software Description

3.2.1. ROS Integration and Navigation Interface

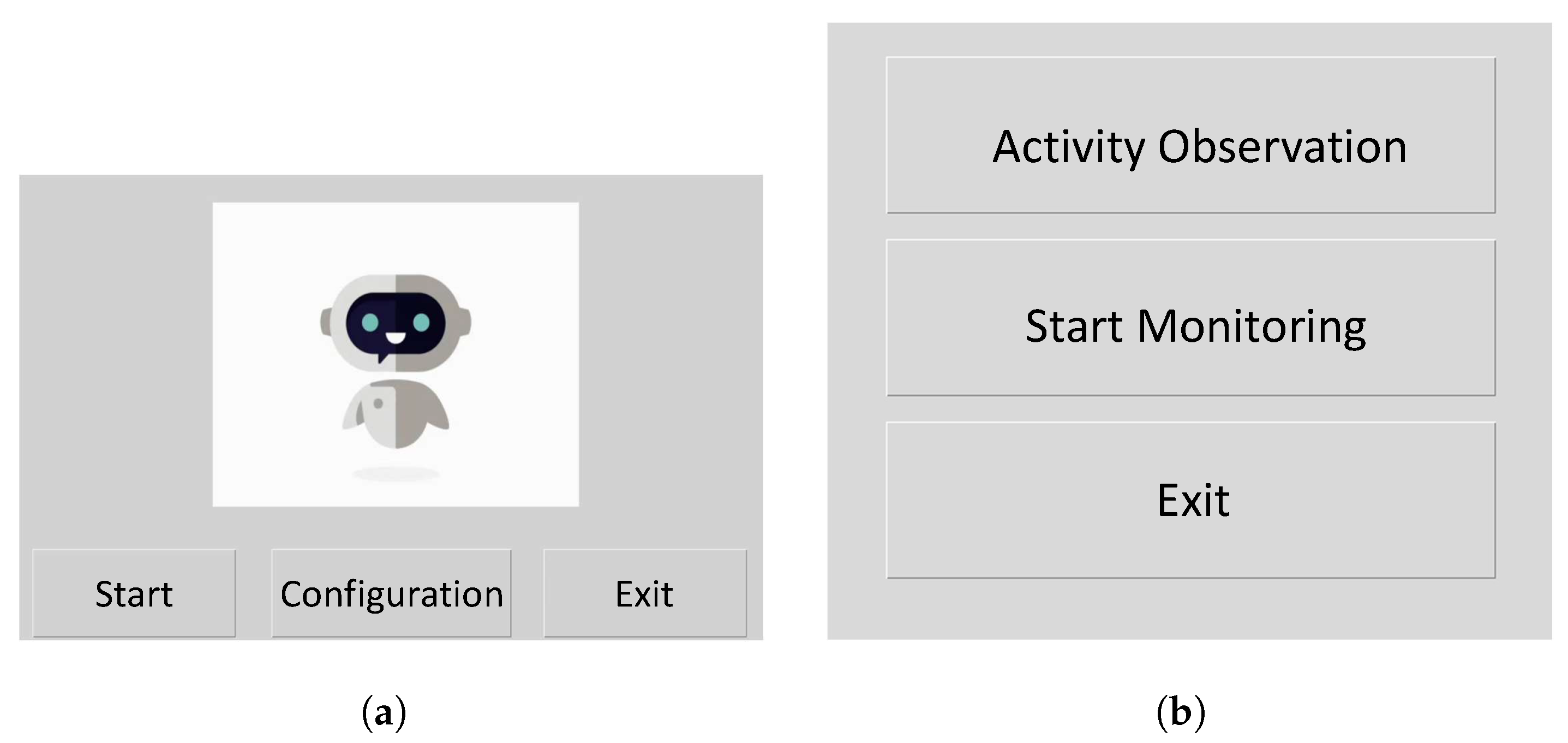

3.2.2. Graphical User Interfaces

- Configuration step: The healthcare professional selects a user (identified by an ID) and the particular action to be monitored.

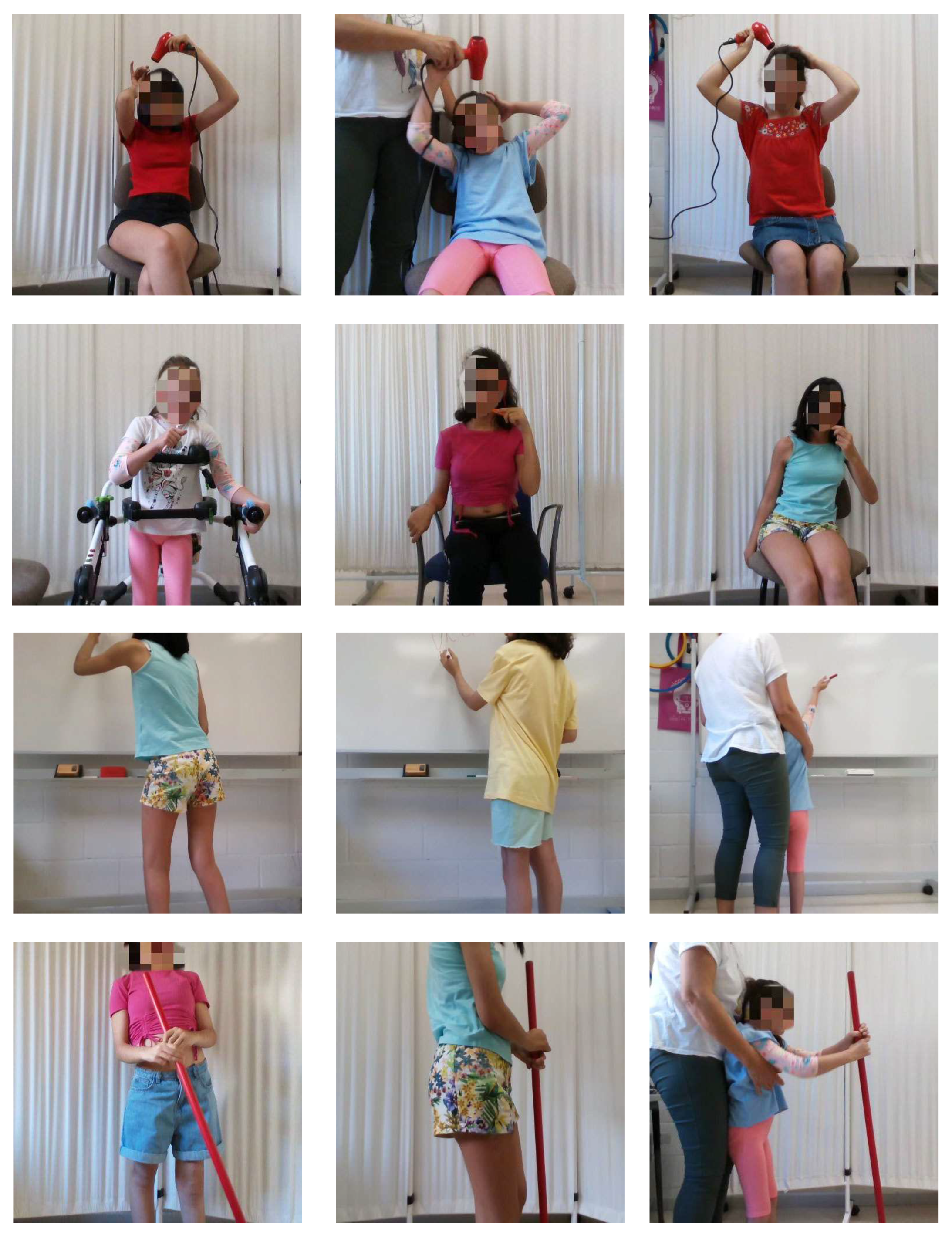

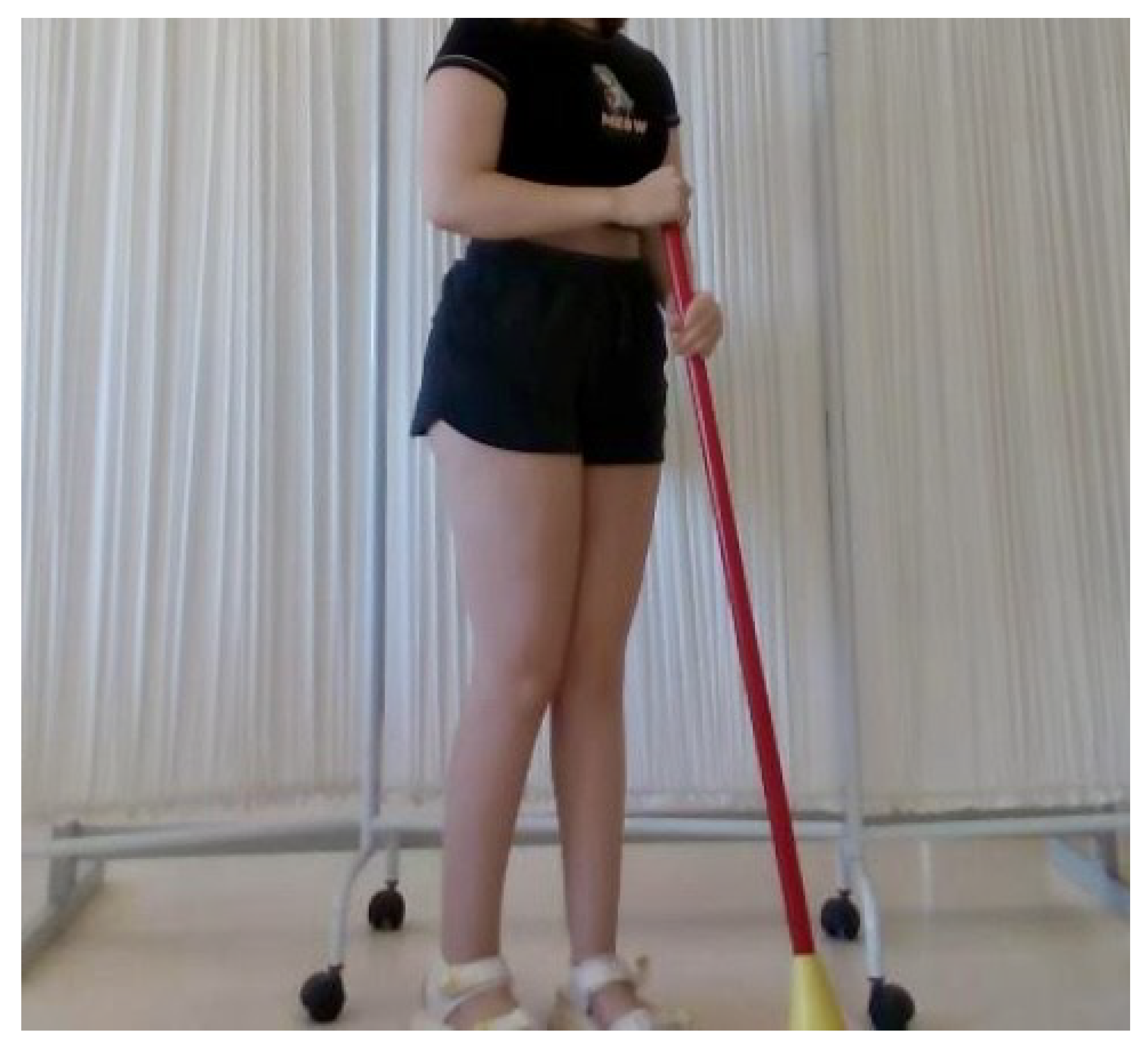

- Activity observation: The user watches a video of the chosen action.

- Activity monitoring: The user is encouraged to replicate the observed action, and the AI online action detection module starts monitoring the performance automatically. The software can also generate a report of the user's performance during therapy sessions.
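The three steps above can be sketched as a minimal session flow. This is an illustrative sketch only, not the LOLA2 implementation: `Session`, `run_session`, `report`, and the `play_video` and `monitor` callbacks are hypothetical names introduced here, and the sketch assumes the monitoring step reduces to a single detected or not-detected outcome.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Callable, List


class Step(Enum):
    """The three steps of a session, in the order the interface presents them."""
    CONFIGURATION = auto()
    OBSERVATION = auto()
    MONITORING = auto()


@dataclass
class Session:
    user_id: str                     # ID selected by the healthcare professional
    action: str                      # action chosen for monitoring
    log: List[str] = field(default_factory=list)
    detected: bool = False           # assumed outcome of the monitoring step


def run_session(
    user_id: str,
    action: str,
    play_video: Callable[[str], None],   # hypothetical: shows the demo video
    monitor: Callable[[str], bool],      # hypothetical: stands in for the OAD module
) -> Session:
    """Run configuration, observation, and monitoring in sequence."""
    session = Session(user_id, action)
    session.log.append(Step.CONFIGURATION.name)

    play_video(action)                   # the user watches the chosen action
    session.log.append(Step.OBSERVATION.name)

    session.detected = bool(monitor(action))  # the user replicates the action
    session.log.append(Step.MONITORING.name)
    return session


def report(session: Session) -> str:
    """Build a one-line report of the session outcome."""
    outcome = "detected" if session.detected else "not detected"
    return f"user={session.user_id} action={session.action} outcome={outcome}"
```

A call such as `run_session("UAH-1", "Brushing Teeth", print, lambda a: True)` would walk through the three steps with stub callbacks; in a real deployment the callbacks would be replaced by the video player and the action detection module.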

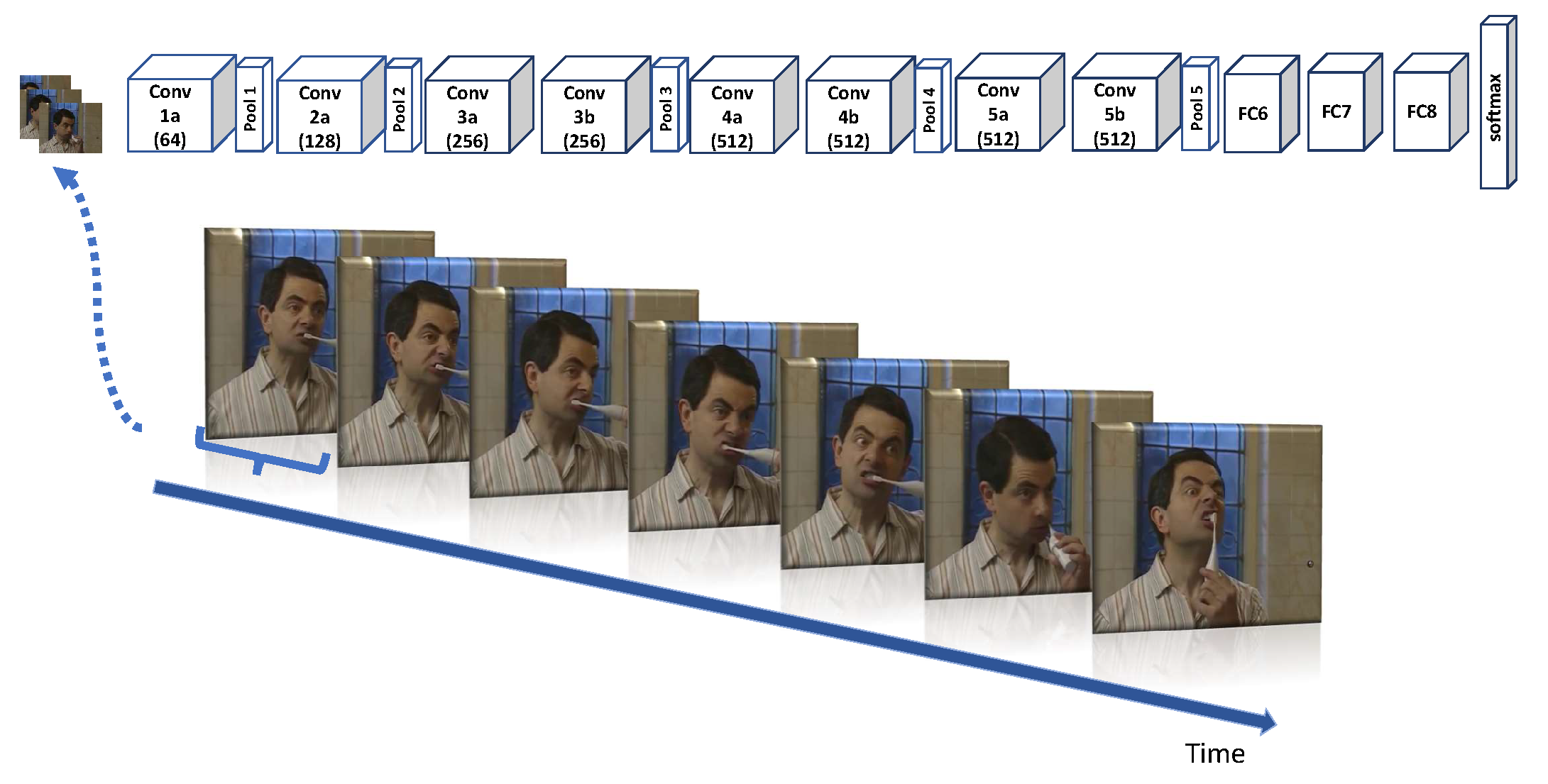

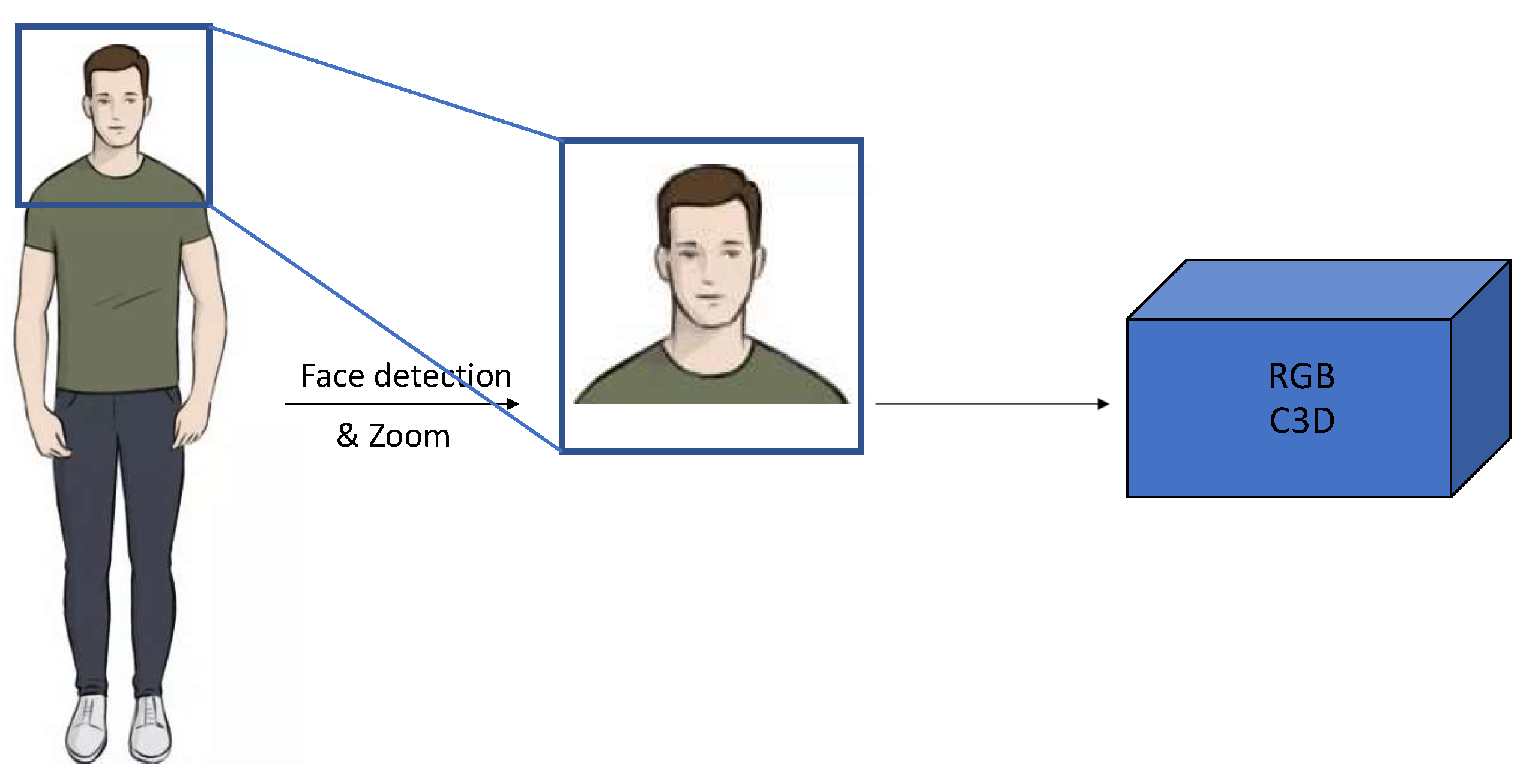

3.2.3. AI for Online Action Monitoring of ADLs

4. Experiments

4.1. Experimental Validation

4.1.1. Sample of Final Users

4.1.2. Design of the Interventions

4.2. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| SAR | Social Assistive Robot |
| CT | Computerized Tomography |
| NDD | Neurodevelopmental Disorder |
| ADLs | Activities of Daily Living |
| ROS | Robot Operating System |
| OAD | Online Action Detection |
| CNN | Convolutional Neural Network |
| AMCL | Adaptive Monte Carlo Localization |
| CFCS | Communication Function Classification System |
| MACS | Manual Ability Classification System |
| GMFCS E&R | Gross Motor Function Classification System Extended and Revised |


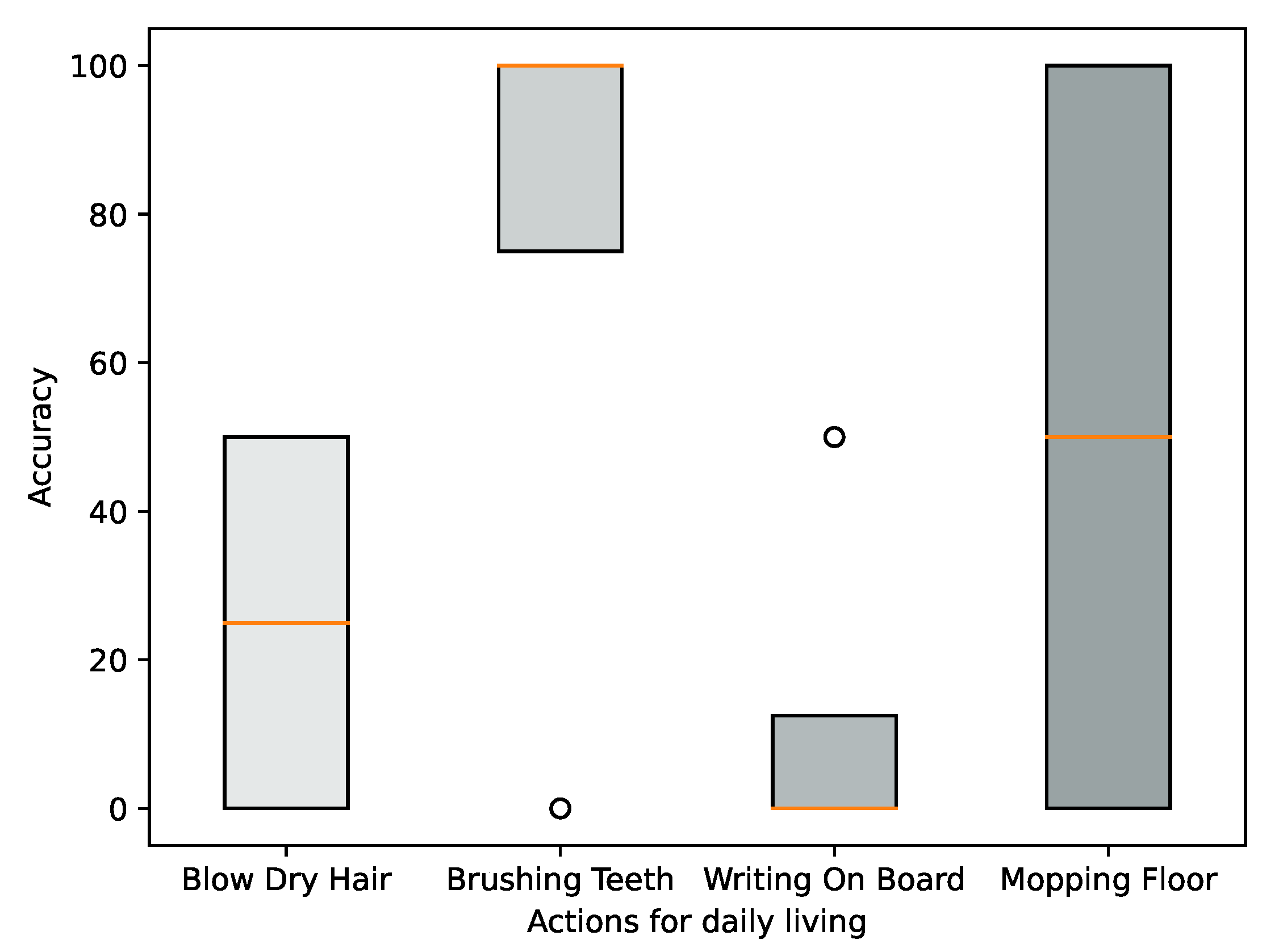

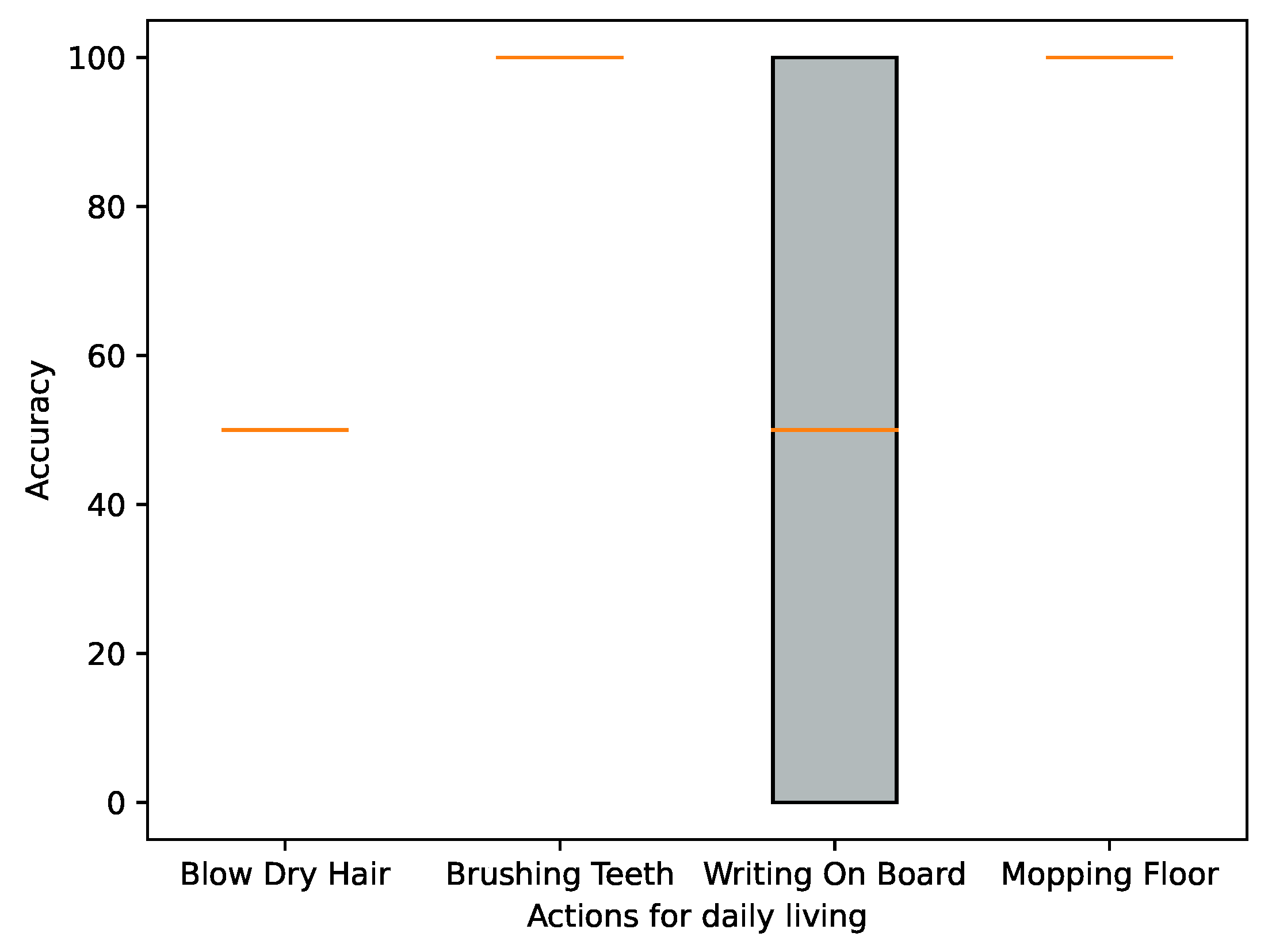

| Action Category | With Zoom/Without Zoom |
|---|---|
| Blow Dry Hair | With zoom |
| Brushing Teeth | With zoom |
| Writing On Board | Without zoom |
| Mopping Floor | Without zoom |
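The per-action zoom setting in the table above can be expressed as a simple lookup. The sketch below is an assumption-laden illustration: the table only says whether zoom is used, so the central crop and the `factor=2.0` default are hypothetical choices made here, as are the names `ZOOM_BY_ACTION` and `crop_box`.

```python
# Zoom setting per action, transcribed from the table above.
ZOOM_BY_ACTION = {
    "Blow Dry Hair": True,
    "Brushing Teeth": True,
    "Writing On Board": False,
    "Mopping Floor": False,
}


def crop_box(width: int, height: int, action: str, factor: float = 2.0):
    """Return (x0, y0, x1, y1) of the frame region to analyse.

    Assumption: "zoom" is modelled as a centred crop whose sides are the
    frame sides divided by `factor`. The source does not specify this.
    """
    if not ZOOM_BY_ACTION[action]:
        return (0, 0, width, height)     # no zoom: use the full frame
    crop_w = int(width / factor)
    crop_h = int(height / factor)
    x0 = (width - crop_w) // 2
    y0 = (height - crop_h) // 2
    return (x0, y0, x0 + crop_w, y0 + crop_h)


print(crop_box(640, 480, "Brushing Teeth"))  # (160, 120, 480, 360)
print(crop_box(640, 480, "Mopping Floor"))   # (0, 0, 640, 480)
```

Keeping the setting in a table-like dictionary means a new action can be added without touching the cropping logic.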

| User ID | Action | Session 1 | Session 2 | Session 3 | Session 4 |
|---|---|---|---|---|---|
| UAH-1 | Blow Dry Hair | 0% | 0% | 50% | 50% |
| | Brushing Teeth | 100% | 0% | 100% | 100% |
| | Writing On Board | 0% | 0% | 0% | 50% |
| | Mopping Floor | 0% | 0% | 100% | 100% |
| UAH-2 | Blow Dry Hair | 50% | 50% | 50% | 50% |
| | Brushing Teeth | 100% | 100% | 100% | 100% |
| | Writing On Board | 100% | 0% | 0% | 100% |
| | Mopping Floor | 100% | 100% | 100% | 100% |
| UAH-3 | Blow Dry Hair | 100% | 50% | - | - |
| | Brushing Teeth | 100% | 100% | - | - |
| | Writing On Board | 0% | 0% | - | - |
| | Mopping Floor | 100% | 100% | - | - |
| UAH-4 | Blow Dry Hair | 50% | - | - | - |
| | Brushing Teeth | 100% | - | - | - |
| | Writing On Board | 0% | - | - | - |
| | Mopping Floor | 100% | - | - | - |
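To compare users who attended different numbers of sessions, one option is to average each row of the table above over the sessions that have an entry. The sketch below does only that arithmetic. The per-row mean is not a metric reported here, the table itself does not state what the percentage measures, and `RESULTS`, `mean_score`, and `summarize` are hypothetical names.

```python
from statistics import mean

# Scores (in %) transcribed from the table above; None marks a session
# with no entry for that user.
RESULTS = {
    "UAH-1": {
        "Blow Dry Hair": [0, 0, 50, 50],
        "Brushing Teeth": [100, 0, 100, 100],
        "Writing On Board": [0, 0, 0, 50],
        "Mopping Floor": [0, 0, 100, 100],
    },
    "UAH-2": {
        "Blow Dry Hair": [50, 50, 50, 50],
        "Brushing Teeth": [100, 100, 100, 100],
        "Writing On Board": [100, 0, 0, 100],
        "Mopping Floor": [100, 100, 100, 100],
    },
    "UAH-3": {
        "Blow Dry Hair": [100, 50, None, None],
        "Brushing Teeth": [100, 100, None, None],
        "Writing On Board": [0, 0, None, None],
        "Mopping Floor": [100, 100, None, None],
    },
    "UAH-4": {
        "Blow Dry Hair": [50, None, None, None],
        "Brushing Teeth": [100, None, None, None],
        "Writing On Board": [0, None, None, None],
        "Mopping Floor": [100, None, None, None],
    },
}


def mean_score(scores):
    """Average the sessions that have an entry; None if there are none."""
    present = [s for s in scores if s is not None]
    return mean(present) if present else None


def summarize(results):
    """Map each user and action to the mean over attended sessions."""
    return {
        user: {action: mean_score(scores) for action, scores in actions.items()}
        for user, actions in results.items()
    }


summary = summarize(RESULTS)
print(summary["UAH-1"]["Blow Dry Hair"])   # 25
print(summary["UAH-3"]["Blow Dry Hair"])   # 75
```

Because UAH-3 and UAH-4 have entries for only two sessions and one session respectively, their means rest on very few values and should be read with that in mind.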

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nasri, N.; López-Sastre, R.J.; Pacheco-da-Costa, S.; Fernández-Munilla, I.; Gutiérrez-Álvarez, C.; Pousada-García, T.; Acevedo-Rodríguez, F.J.; Maldonado-Bascón, S. Assistive Robot with an AI-Based Application for the Reinforcement of Activities of Daily Living: Technical Validation with Users Affected by Neurodevelopmental Disorders. Appl. Sci. 2022, 12, 9566. https://doi.org/10.3390/app12199566
