Near-Infrared Image Colorization Using Asymmetric Codec and Pixel-Level Fusion

Abstract

1. Introduction

2. Related Work

2.1. Image Colorization

2.2. NIR Colorization

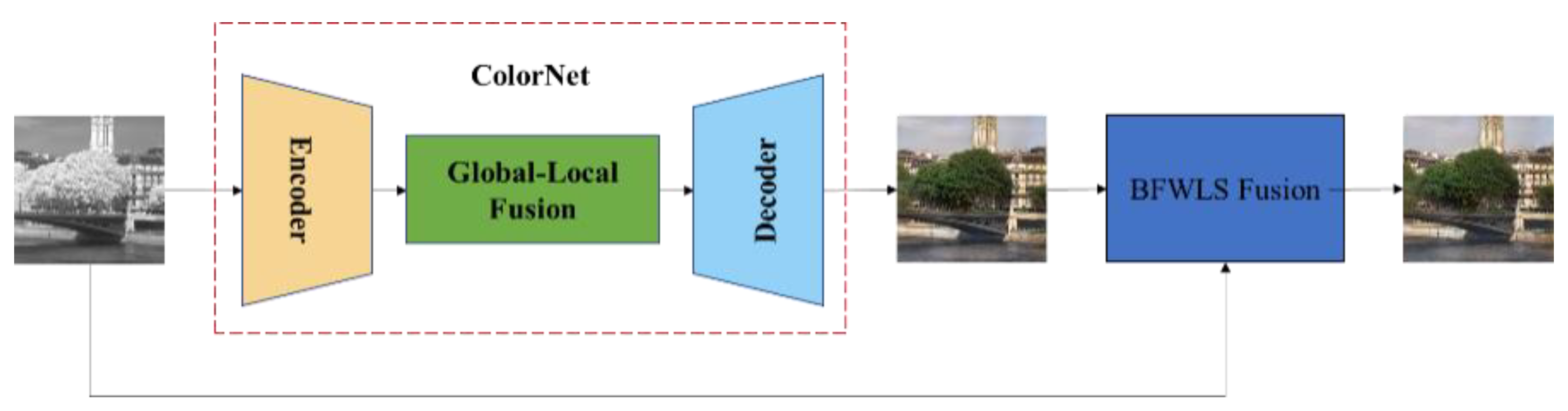

3. Proposed Method

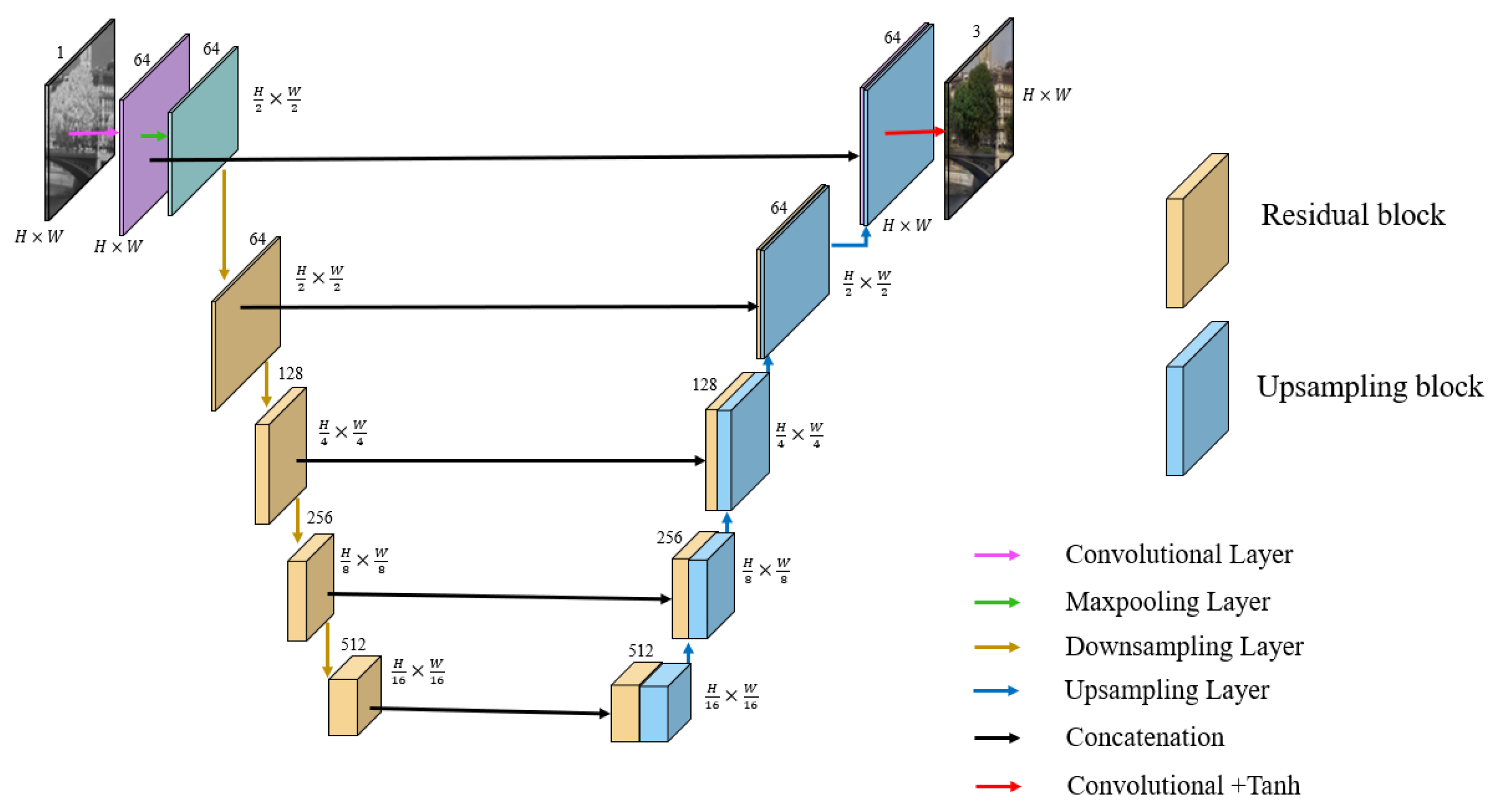

3.1. ColorNet

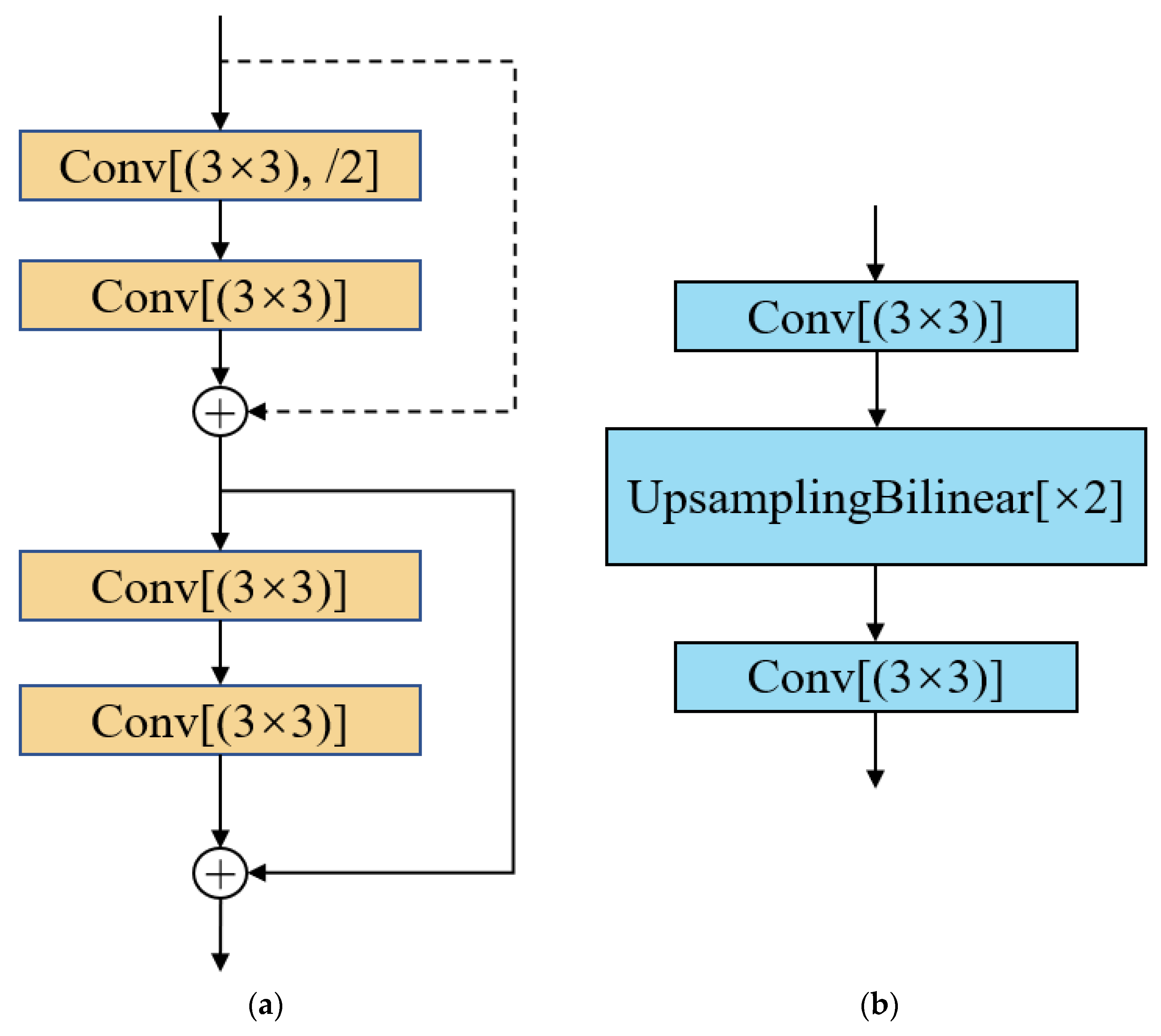

3.1.1. ACD

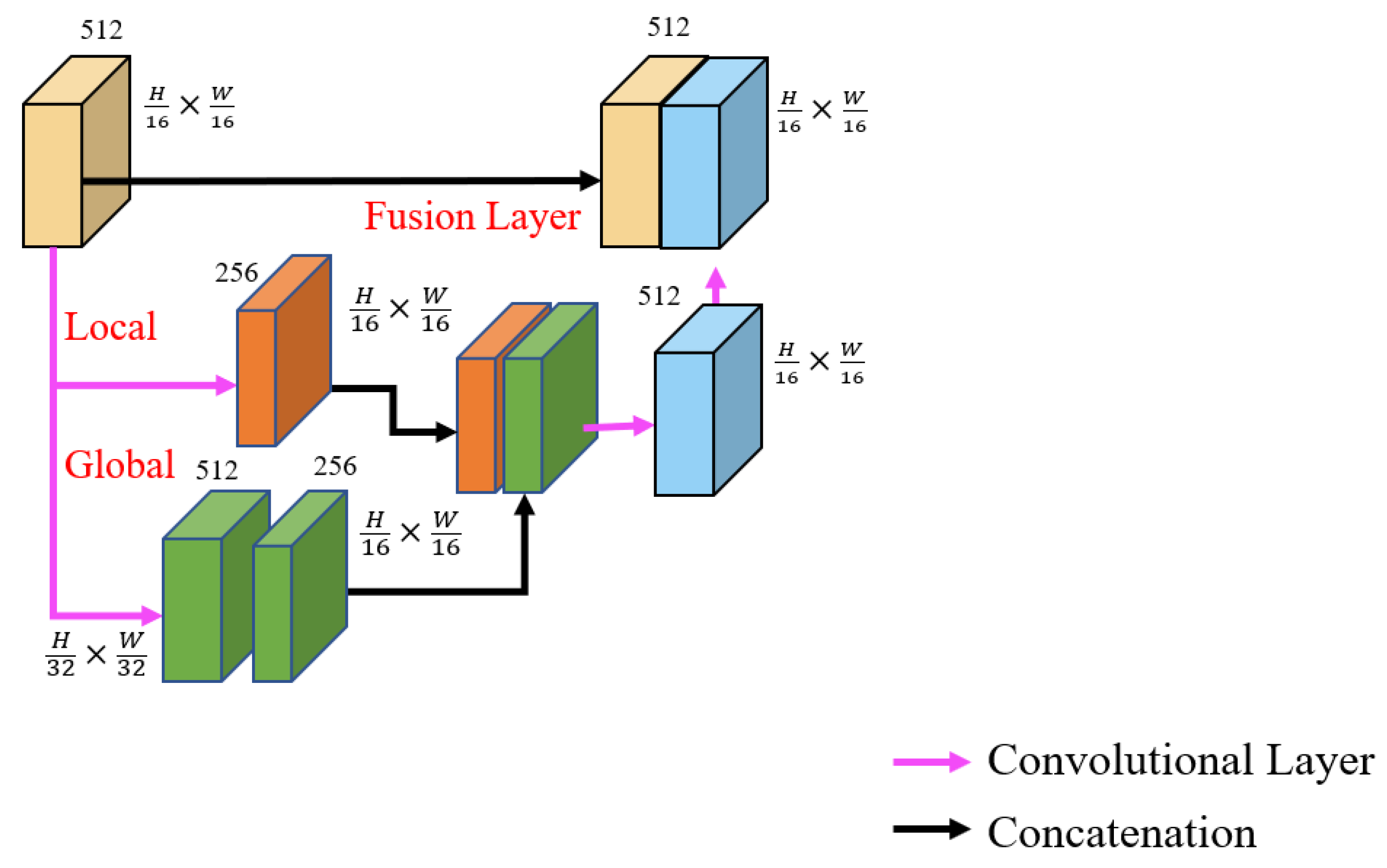

3.1.2. GLFFNet

3.1.3. Loss Function

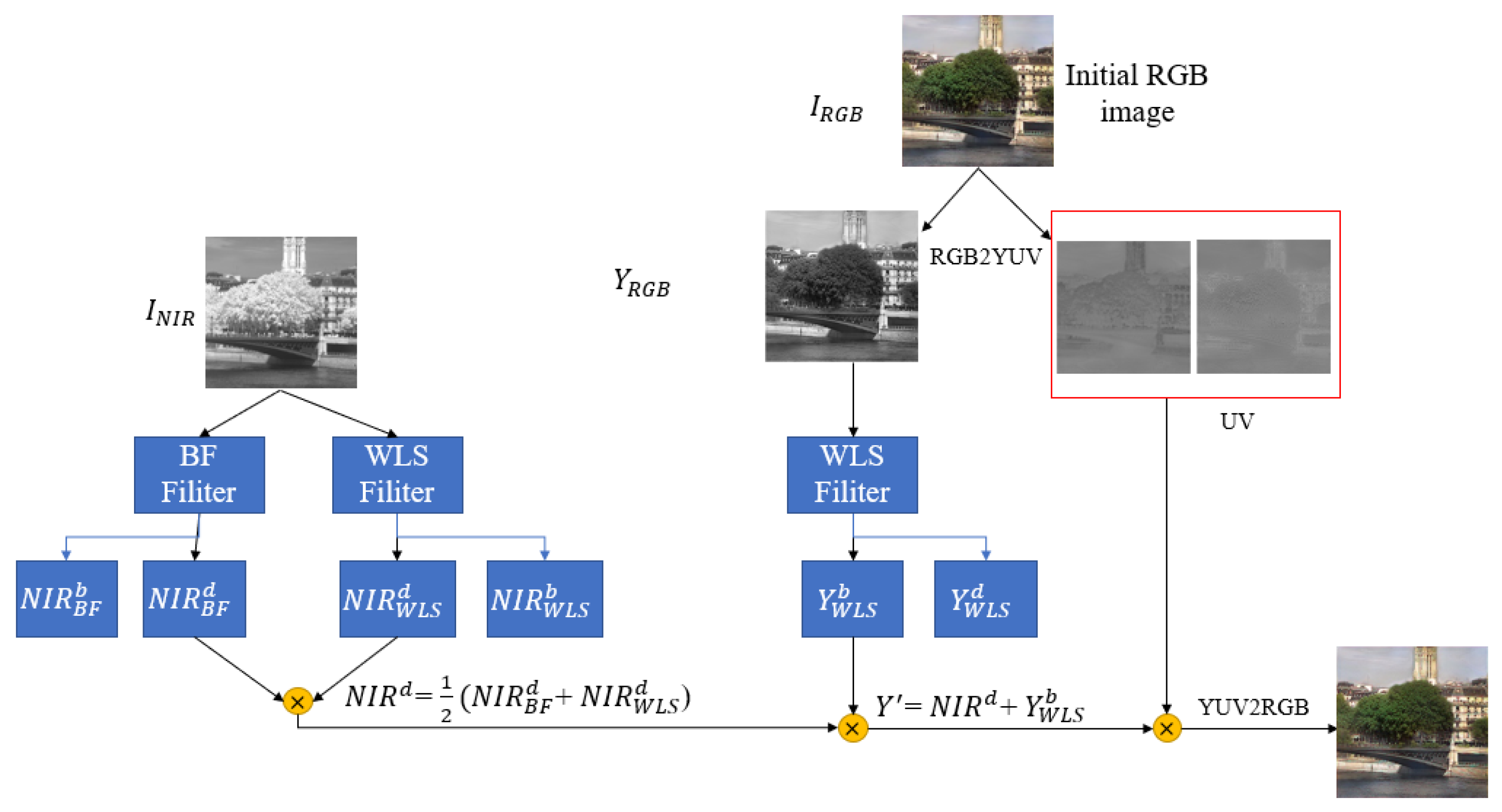

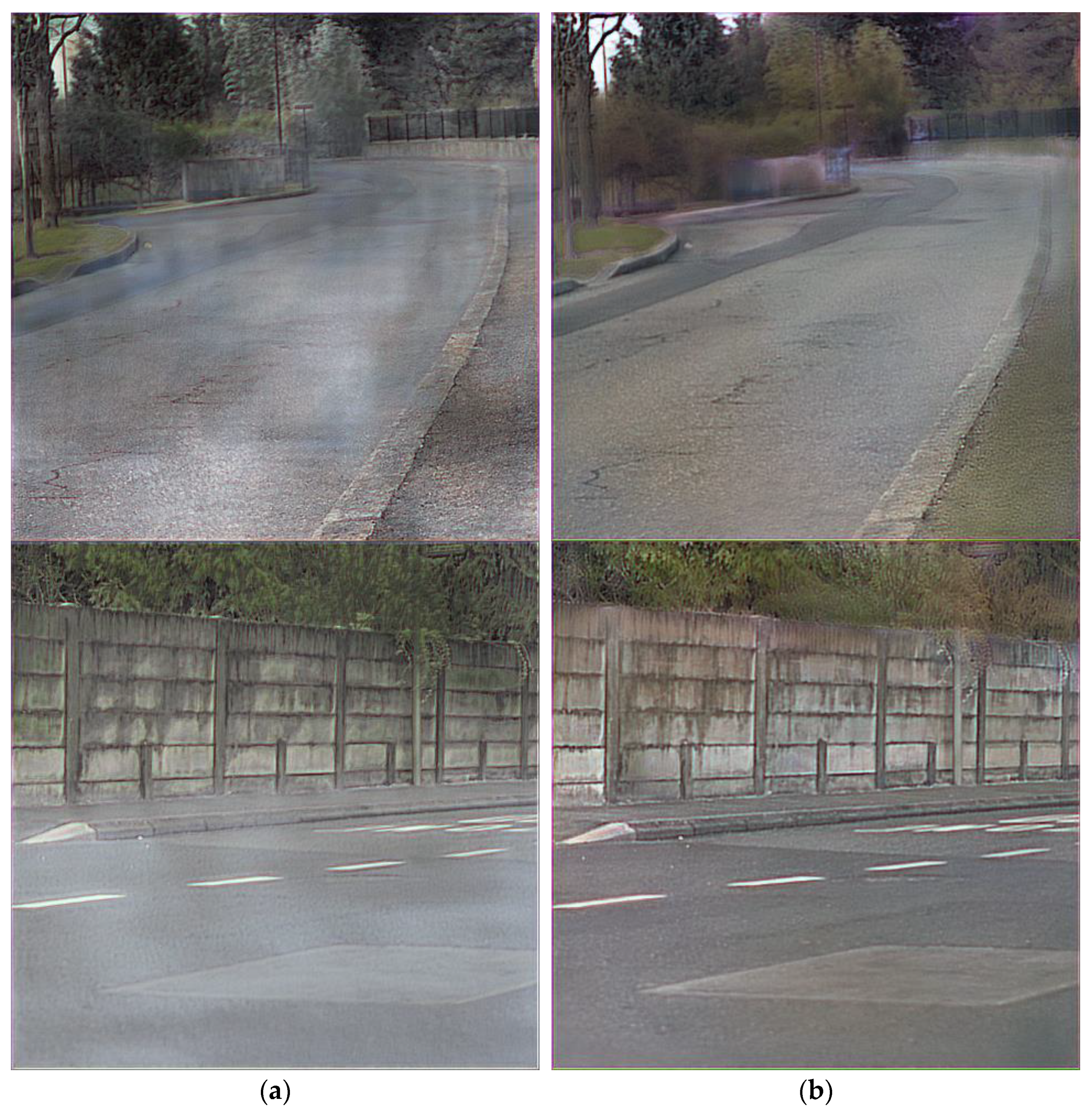

3.2. BFWLS

4. Experiments and Analysis

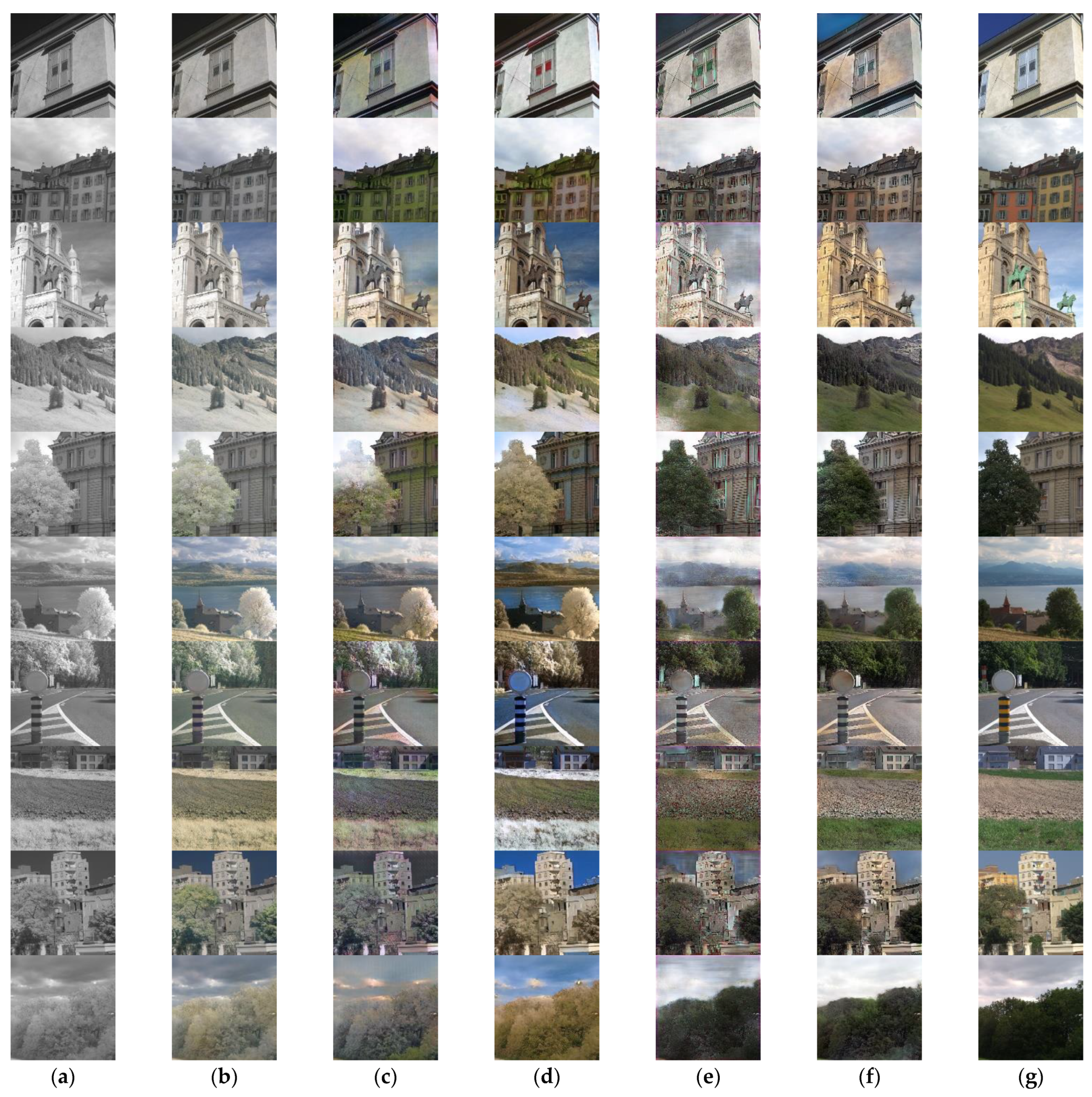

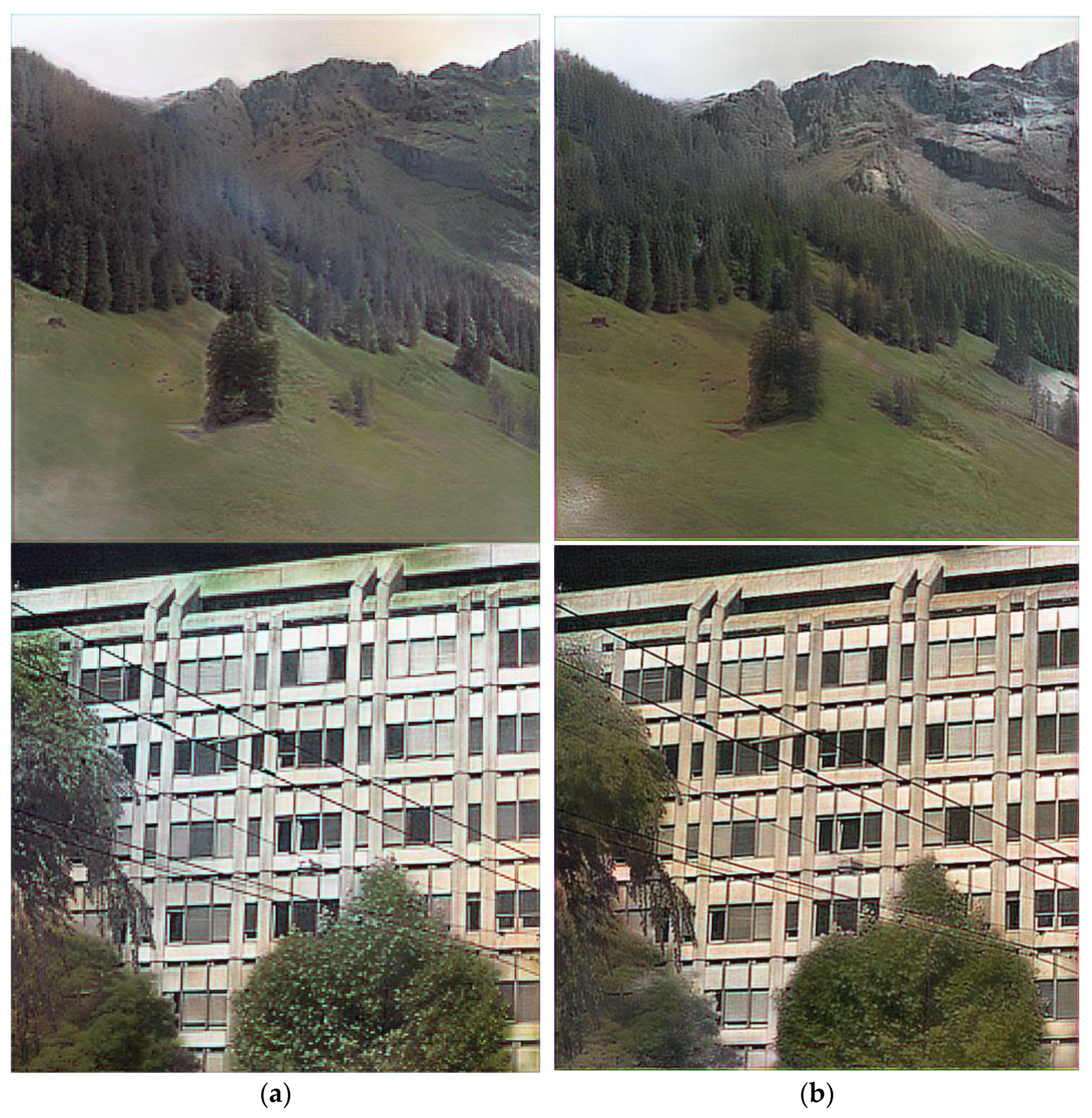

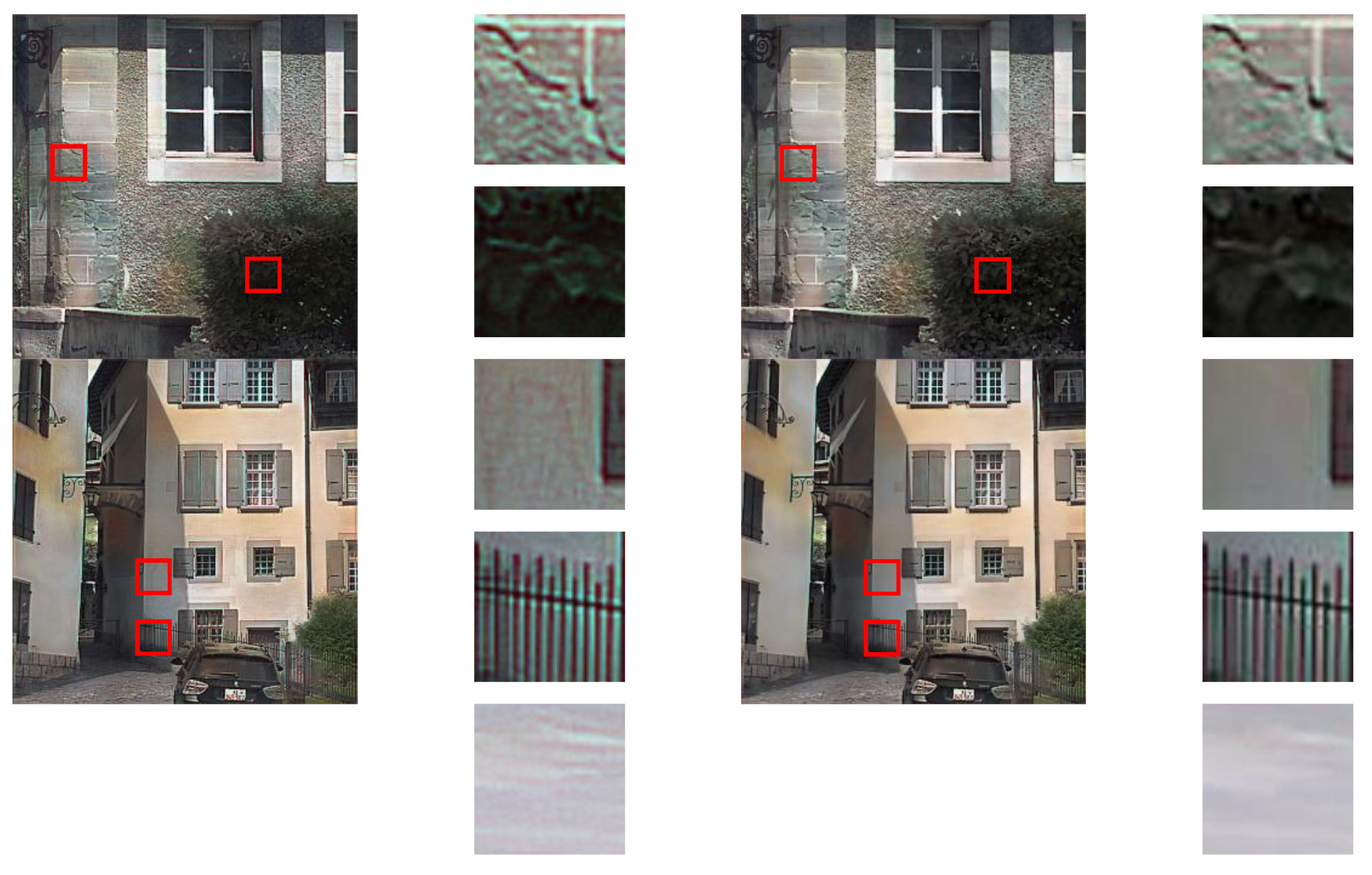

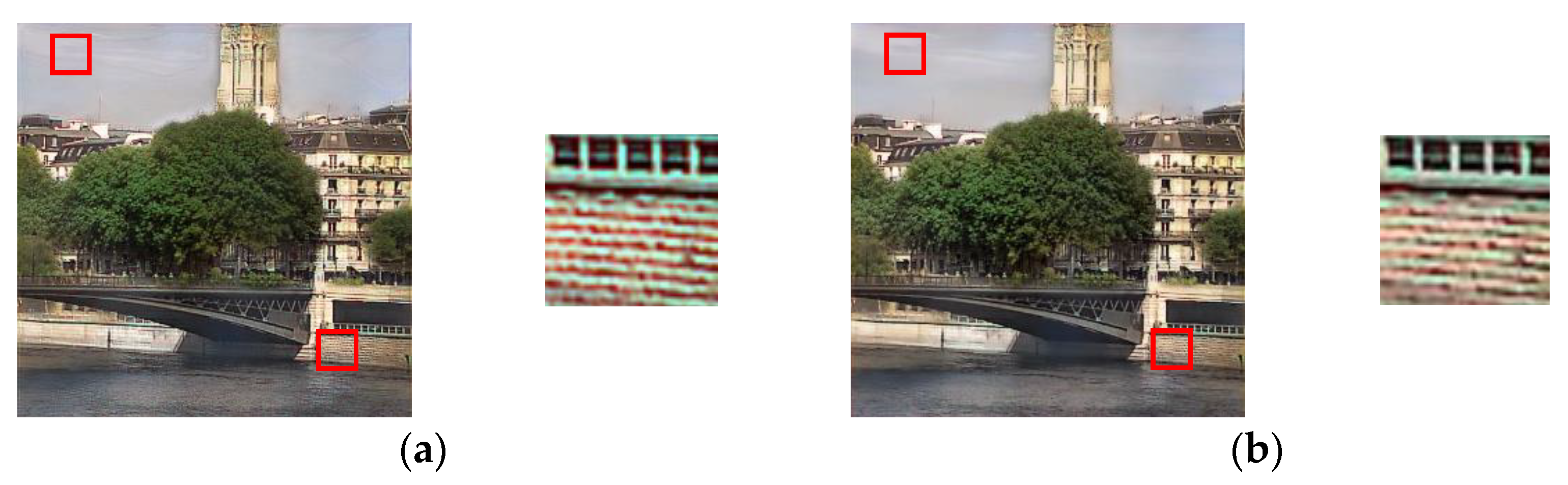

4.1. Main Experiment

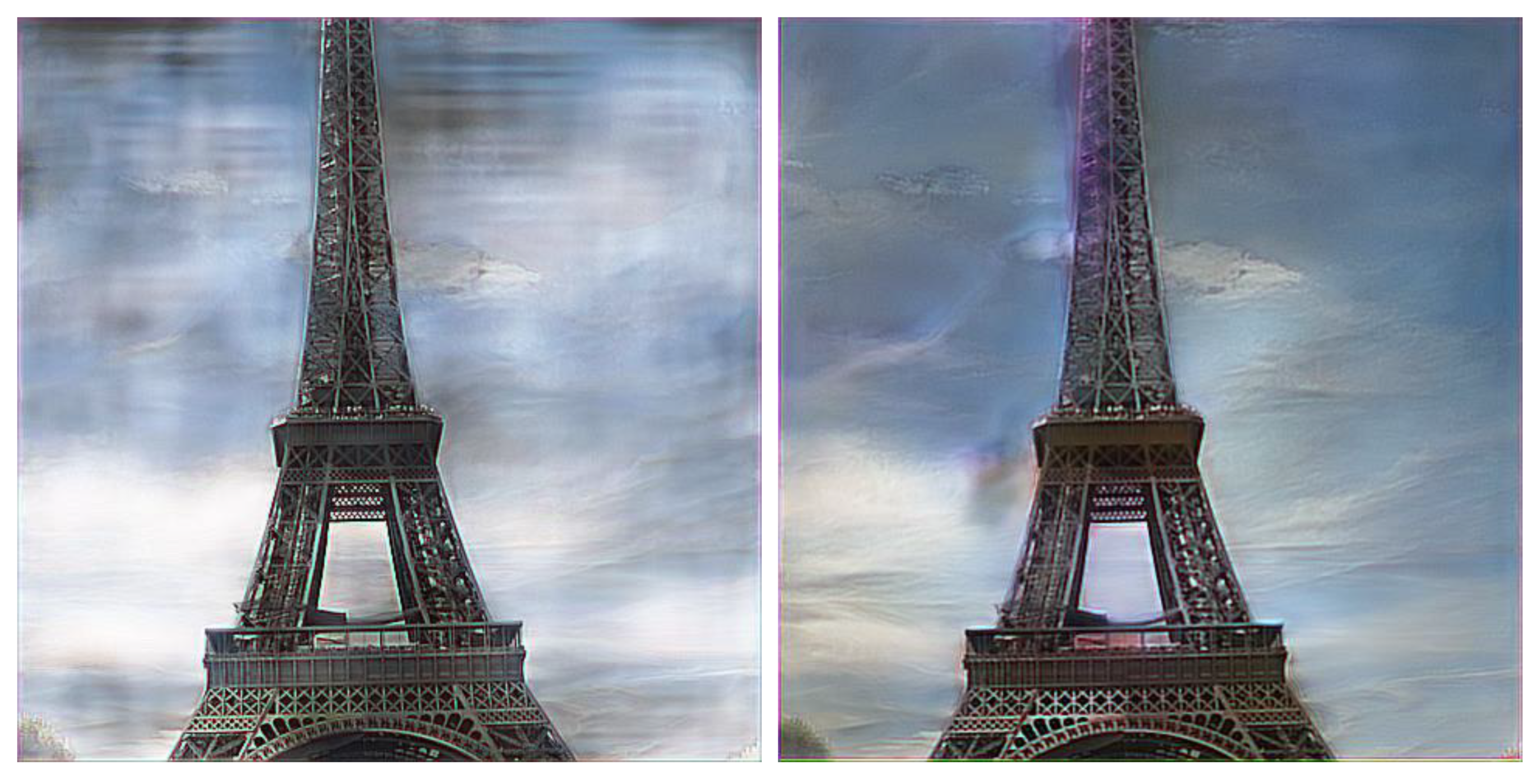

4.2. Ablation Studies

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Luo, Y.; Remillard, J.; Hoetzer, D. Pedestrian Detection in Near-Infrared Night Vision System. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, IEEE, La Jolla, CA, USA, 21–24 June 2010; pp. 51–58.

- Ariff, M.F.M.; Majid, Z.; Setan, H.; Chong, A.K.-F. Near-infrared camera for night surveillance applications. Geoinf. Sci. J. 2010, 10, 38–48.

- Vance, C.K.; Tolleson, D.R.; Kinoshita, K.; Rodriguez, J.; Foley, W.J. Near infrared spectroscopy in wildlife and biodiversity. J. Near Infrared Spectrosc. 2016, 24, 1–25.

- Aimin, Z. Denoising and fusion method of night vision image based on wavelet transform. Electron. Meas. Technol. 2015, 38, 38–40.

- Limmer, M.; Lensch, H.P. Infrared Colorization Using Deep Convolutional Neural Networks. In Proceedings of the 2016 15th IEEE International Conference on Machine Learning and Applications (ICMLA), IEEE, Anaheim, CA, USA, 18–20 December 2016; pp. 61–68.

- Dong, Z.; Kamata, S.-I.; Breckon, T.P. Infrared Image Colorization Using a S-Shape Network. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), IEEE, Athens, Greece, 7–10 October 2018; pp. 2242–2246.

- Fredembach, C.; Süsstrunk, S. Colouring the Near-Infrared. In Proceedings of the Color and Imaging Conference, Society for Imaging Science and Technology, Terrassa, Spain, 9–13 June 2008; pp. 176–182.

- Chitu, M. Near-Infrared Colorization using Neural Networks for In-Cabin Enhanced Video Conferencing. In Proceedings of the 2021 International Aegean Conference on Electrical Machines and Power Electronics (ACEMP) & 2021 International Conference on Optimization of Electrical and Electronic Equipment (OPTIM), IEEE, Brasov, Romania, 2–3 September 2021; pp. 493–498.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Sun, T.; Jung, C.; Fu, Q.; Han, Q. NIR to RGB domain translation using asymmetric cycle generative adversarial networks. IEEE Access 2019, 7, 112459–112469.

- Chaurasia, A.; Culurciello, E. LinkNet: Exploiting Encoder Representations for Efficient Semantic Segmentation. In Proceedings of the 2017 IEEE Visual Communications and Image Processing (VCIP), IEEE, St. Petersburg, FL, USA, 10–13 December 2017; pp. 1–4.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Zhou, L.; Zhang, C.; Wu, M. D-LinkNet: LinkNet with Pretrained Encoder and Dilated Convolution for High Resolution Satellite Imagery Road Extraction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 182–186.

- Liang, W.; Derui, D.; Guoliang, W. An improved DualGAN for near-infrared image colorization. Infrared Phys. Technol. 2021, 116, 103764.

- Levin, A.; Lischinski, D.; Weiss, Y. Colorization using optimization. In ACM SIGGRAPH 2004 Papers; Association for Computing Machinery: New York, NY, USA, 2004; pp. 689–694.

- Yatziv, L.; Sapiro, G. Fast image and video colorization using chrominance blending. IEEE Trans. Image Process. 2006, 15, 1120–1129.

- Zhang, R.; Zhu, J.-Y.; Isola, P.; Geng, X.; Lin, A.S.; Yu, T.; Efros, A.A. Real-time user-guided image colorization with learned deep priors. arXiv 2017, preprint. arXiv:1705.02999.

- Welsh, T.; Ashikhmin, M.; Mueller, K. Transferring Color to Greyscale Images. In Proceedings of the 29th Annual Conference on Computer Graphics and Interactive Techniques, San Antonio, TX, USA, 23–26 July 2002; pp. 277–280.

- Liu, X.; Wan, L.; Qu, Y.; Wong, T.-T.; Lin, S.; Leung, C.-S.; Heng, P.-A. Intrinsic colorization. In ACM SIGGRAPH Asia 2008 Papers; Association for Computing Machinery: New York, NY, USA, 2008; pp. 1–9.

- Ironi, R.; Cohen-Or, D.; Lischinski, D. Colorization by Example. Render. Tech. 2005, 29, 201–210.

- Charpiat, G.; Hofmann, M.; Schölkopf, B. Automatic Image Colorization via Multimodal Predictions. In Proceedings of the European Conference on Computer Vision, Marseille, France, 12–18 October 2008; Springer: Berlin/Heidelberg, Germany, 2008; pp. 126–139.

- Gupta, R.K.; Chia, A.Y.-S.; Rajan, D.; Ng, E.S.; Zhiyong, H. Image Colorization Using Similar Images. In Proceedings of the 20th ACM International Conference on Multimedia, Bali, Indonesia, 3–5 December 2012; pp. 369–378.

- Chia, A.Y.-S.; Zhuo, S.; Gupta, R.K.; Tai, Y.-W.; Cho, S.-Y.; Tan, P.; Lin, S. Semantic colorization with internet images. ACM Trans. Graph. 2011, 30, 1–8.

- Iizuka, S.; Simo-Serra, E.; Ishikawa, H. Let there be color! Joint end-to-end learning of global and local image priors for automatic image colorization with simultaneous classification. ACM Trans. Graph. 2016, 35, 1–11.

- Vitoria, P.; Raad, L.; Ballester, C. ChromaGAN: Adversarial picture colorization with semantic class distribution. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 2–5 March 2020; pp. 2445–2454.

- Zhang, R.; Isola, P.; Efros, A.A. Colorful Image Colorization. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 649–666.

- Suárez, P.L.; Sappa, A.D.; Vintimilla, B.X. Learning to Colorize Infrared Images. In Proceedings of the International Conference on Practical Applications of Agents and Multi-Agent Systems, Salamanca, Spain, 6–8 October 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 164–172.

- Suárez, P.L.; Sappa, A.D.; Vintimilla, B.X. Infrared Image Colorization Based on a Triplet DCGAN Architecture. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 18–23.

- Kim, H.; Kim, J.; Kim, J. Infrared Image Colorization Network Using Variational AutoEncoder. In Proceedings of the 36th International Technical Conference on Circuits/Systems, Computers and Communications (ITC-CSCC), IEEE, Jeju-si, Korea, 27–30 June 2021; pp. 1–4.

- Mehri, A.; Sappa, A.D. Colorizing Near Infrared Images through a Cyclic Adversarial Approach of Unpaired Samples. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Yang, Z.; Chen, Z. Learning from Paired and Unpaired Data: Alternately Trained CycleGAN for Near Infrared Image Colorization. In Proceedings of the 2020 IEEE International Conference on Visual Communications and Image Processing (VCIP), IEEE, Macau, China, 1–4 December 2020; pp. 467–470.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, IEEE, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Sharma, V.; Hardeberg, J.Y.; George, S. RGB-NIR Image Enhancement by Fusing Bilateral and Weighted Least Squares Filters. J. Imaging Sci. Technol. 2017, 61, 40409-1–40409-9.

- Brown, M.; Süsstrunk, S. Multi-Spectral SIFT for Scene Category Recognition. In Proceedings of the CVPR 2011, IEEE, Colorado Springs, CO, USA, 20–25 June 2011; pp. 177–184.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, preprint. arXiv:1412.6980.

- Antic, J. A Deep Learning Based Project for Colorizing and Restoring Old Images. 2018. Available online: https://github.com/jantic/DeOldify (accessed on 15 March 2022).

- Hore, A.; Ziou, D. Image Quality Metrics: PSNR vs. SSIM. In Proceedings of the 2010 20th International Conference on Pattern Recognition, IEEE, Istanbul, Turkey, 23–26 August 2010; pp. 2366–2369.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Zhang, X.; Wandell, B.A. A Spatial Extension of CIELAB for Digital Color Image Reproduction. In Proceedings of the SID International Symposium Digest of Technical Papers, San Diego, CA, USA, 12–17 May 1996; Citeseer: Princeton, NJ, USA, 1996; pp. 731–734.

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 586–595.

| Methods | MAE | PSNR | SSIM | S-CIELAB | LPIPS |
|---|---|---|---|---|---|
| DeOldify [37] | 0.1631 | 14.7715 | 0.6448 | 8.9250 | 0.1516 |
| CycleGAN_UNet [30] | 0.1559 | 14.8807 | 0.6141 | 9.4862 | 0.1519 |
| CycleGAN_ResNet [31] | 0.1014 | 15.5518 | 0.6153 | 9.6304 | 0.1485 |
| S-Net [6] | 0.1139 | 16.5815 | 0.5205 | 10.1254 | 0.1278 |
| Ours | 0.0939 | 18.4421 | 0.6363 | 8.4912 | 0.1225 |
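
For readers who want to sanity-check numbers like those in the MAE and PSNR columns, the two metrics can be sketched in a few lines. This is a minimal illustration, not the authors' evaluation code: it assumes pixel values normalized to [0, 1] and flattened into plain Python sequences, and the function names `mae` and `psnr` and the `max_val` parameter are hypothetical names chosen for this sketch.

```python
import math


def mae(pred, target):
    """Mean absolute error between two equal-length pixel sequences."""
    if len(pred) != len(target) or not pred:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB.

    max_val is the assumed peak pixel value (1.0 for images scaled to [0, 1]).
    """
    if len(pred) != len(target) or not pred:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)


# Toy data standing in for a flattened colorized image and its ground truth.
pred = [0.0, 0.5, 1.0, 0.5]
target = [0.0, 0.25, 1.0, 0.75]
print(f"MAE  = {mae(pred, target):.4f}")
print(f"PSNR = {psnr(pred, target):.4f} dB")
```

Lower MAE and higher PSNR both indicate a closer match to the reference RGB image. SSIM, S-CIELAB, and LPIPS need windowed statistics, a color-space model, or a pretrained network respectively, so they are left out of this sketch.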

| Modules | MAE | PSNR | SSIM | S-CIELAB | LPIPS |
|---|---|---|---|---|---|
| SCD only | 0.1139 | 16.5815 | 0.5205 | 10.1254 | 0.1278 |
| LinkNet | 0.1072 | 17.1252 | 0.5368 | 9.9567 | 0.1337 |
| ACD only | 0.1107 | 17.4910 | 0.5485 | 9.8960 | 0.1254 |
| ACD + GLFFNet (ColorNet) | 0.0993 | 17.6443 | 0.5389 | 9.6563 | 0.1117 |
| ColorNet + BFWLS (Ours) | 0.0939 | 18.4421 | 0.6363 | 8.4912 | 0.1225 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, X.; Huang, W.; Huang, R.; Liu, X. Near-Infrared Image Colorization Using Asymmetric Codec and Pixel-Level Fusion. Appl. Sci. 2022, 12, 10087. https://doi.org/10.3390/app121910087
