Abstract

Portable digital devices such as PDAs and camera phones are among the easiest and most widely used means of collecting and preserving information. Document images captured this way often suffer from warping, especially when the source is a page of a bound book or a rolled-up document. In this article, we propose an effective method to correct the warping of captured document images. The proposed method uses a checkerboard calibration pattern to calculate the world and image points. A radial distortion algorithm then corrects the warping based on the computed image and world points. The proposed method obtained an error rate of 3% on a document de-warping dataset (CBDAR 2007) and achieved higher quality than previous methods. It corrects warping in document images acquired under different levels of complexity, such as poor lighting, low quality, and different layouts.

1. Introduction

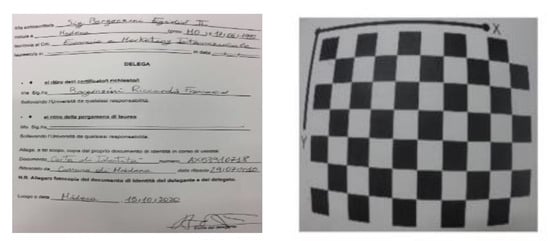

Paper documents are the most common type of document used in daily life, and the most common way to retain information from paper is to photograph it. Photos of documents are easily archived and retrieved at any moment, and they can be transmitted to anyone in the world through social media tools. However, many captured document images exhibit perspective and geometric distortions. When dealing with rolled documents or thick books, warping and curled text lines are the most common problems [1,2]. Figure 1 depicts warped document images taken from a bound book.

Figure 1.

Example of document-image warping.

Image warping refers to the geometric transformation of 2D images. Although the term “warp” may appear to imply radial distortion, “document-image warping” encompasses a wide range of transformations, from complicated asymmetrical warps to basic transformations such as rotation and scaling. Digital image de-warping has a wide range of applications. Common image-processing applications involve at least one rotation, scaling, or translation, which are simple examples of warping. Image de-warping is increasingly used in remote sensing, where many geometric distortions in images acquired from satellites, sensors, or aircraft are caused by the curvature of the Earth or by camera lenses. These geometric and lens distortions are effectively corrected via image de-warping. Image de-warping has also recently received significant attention because of a special-effects method called ‘morphing’. In this study, we look at how to fix the warping problem in camera-captured document images. Optical character recognition (OCR) uses the acquired document image to accomplish tasks such as text extraction and character recognition, and the accuracy of most OCR systems depends heavily on the de-warping technique [3].

In this research, we present a document-image de-warping approach based on a checkerboard calibration pattern. In the first step, well-defined checkerboard patterns are applied to compute the world and image points. In the second step, these world and image points are used to determine the camera calibration parameters. Finally, the document image is de-warped using the derived camera calibration parameters. The main contributions of this paper are as follows:

- We propose a novel document-image de-warping method based on a checkerboard calibration pattern.

- Well-defined checkerboard patterns are employed to compute world and image points.

- Calculated camera calibration parameters are employed to de-warp the document image.

2. Related Work

De-warping approaches for document images have received much attention in the scientific community in recent years, and various approaches have been presented. Three types of approaches are available: text-based, shape-based, and deep-learning-based.

The first category depends on extracting features from text lines, words, and characters. The methods described in [4,5] employ a ridge-based coupled-snakes model. The authors used the coupled-snakes model to calculate the baseline and then determined the starting location of each text line. Finally, the perspective distortion was removed using a four-point homography technique. Schneider et al. [6] developed a technique for introducing a vector field from the generated warping mesh based on local orientation data. The image is corrected by using numerous linear projections to approximate the nonlinear distortion. The required local orientation features are extracted with a baseline detector.

Kim et al. [7] introduced a method based on discrete representations of text blocks and text lines, which are sets of connected components. Features were extracted from each text line, and a cost function was formulated and minimized with the Levenberg–Marquardt technique to solve the warping. A few approaches based on word information have also been presented. Gatos et al. [8] suggested segmenting the document into words. The orientation of each word is determined, and each word is then rotated to correct the warping.

Bukhari et al. [4] used pairs of curled baselines: a mapping of the characters is computed for each corresponding pair of top and bottom baselines. Zhang et al. [9] carried out the restoration using segmentation and thin-plate splines. Lu et al. [10] determined the text baselines and vertical stroke boundaries for each line. Bolelli et al. [11] presented a method that covers each letter with a quadrilateral cell and then computes the center of each letter to adjust its orientation, resulting in a flat word.

Text-based methods are the most common. They work well when the segmentation is correct. However, they are susceptible to high degrees of curl and to variable line spacing, and they are sensitive to the image resolution and the words used. Text-based algorithms are also time-consuming and fail on complicated layouts, including documents that contain graphics and tables. They are susceptible to a variety of distortions and produce a high number of segmentation errors.

The second category is shape recovery: these methods are based on recovering the shape of the document. As shown in [12], these techniques rely on 2D-form recovery, employing a curved surface-projection map to restore a rectangular 2D region. The uniform parameterization procedure in [13] is guided by a physical pattern. The authors of [14] use a technique that separates the page into three vertical sections and projects each one separately. The approaches presented in [15,16] use text lines to establish the curved shape, then use a surrounding transformation model to flatten it.

The concept behind 3D reconstruction is to represent a curled paper in three dimensions (x, y, and z). The warping distortion may be addressed by first determining the depth (z) dimension and then fitting the curved form [17,18,19,20]. This procedure needs specialized equipment such as laser range scanners [21], stereo cameras, eye scanners [22], controlled lighting [23], and computed tomography (CT) scans for opaque objects [18,24,25]. Prior knowledge is also necessary to acquire the 3D form of the curled document.

The majority of 3D methods do not rely on the document’s content. As a result, these approaches represent the shape more precisely than 2D methods, and they remain accurate for complicated deformations such as folds and crumples. On the other hand, 3D-based solutions need a dedicated setup to record the 3D geometry, which involves more intricate and more expensive hardware.

Other methods reconstruct a document’s surface form using shading techniques, which recover shape from variations in illumination. Zhang et al. [26,27,28,29,30,31,32] provided methods for creating a 3D model using the shading approach. Shading approaches do not require specialized hardware to recover the 3D or 2D form of a document. Their fundamental disadvantage, however, is that they fail when the lighting is poor.

Deep-learning-based approaches are the last category. Bukhari et al. [33] suggested a novel deep-learning-based technique that treats de-warping as an image-to-image translation problem. It uses the deformed input image and the clean image to translate the image from one domain to another. This approach utilizes the pix2pixHD network [34], which uses GANs to achieve a high resolution. The pix2pix translation network with CGAN [34] is limited to low-resolution images of about 256 × 256 pixels. Deep-learning-based algorithms work well for documents that include tables and figures [35]. However, they need a large quantity of data and costly GPUs. A framework for rectifying distorted documents is presented in [36]: a fully convolutional network (FCN) estimates pixel-wise displacements, which are then used to rectify the document image. In [37], a learned query embedding set is adopted to capture the global context of the document image. The geometric distortion is corrected by a self-attention mechanism that decodes the pixel-wise displacement solution.

3. Proposed Method

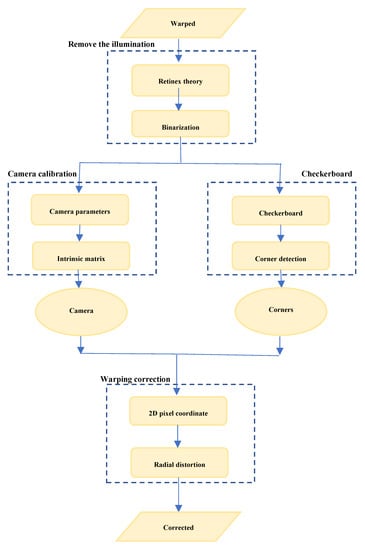

It has been established that 3D page-shape reconstruction methods produce high-quality results when recovering the shape of the document regardless of the content of the document image. However, specialized and expensive hardware is required. Furthermore, prior knowledge parameters from the camera and scanner are needed. In contrast, 2D image-processing techniques do not require any parameters or hardware. Unfortunately, in the case of complex layouts and bad illumination, the shape is often inaccurately recovered. The proposed method combines the advantages of 3D and 2D methods by recovering documents with high quality regardless of their content and without prior knowledge parameters. To obtain the world and image points, the proposed method utilizes checkerboard calibration patterns. Regardless of the content of the document image, camera calibration parameters are produced depending on the computed world and image points. Finally, the radial distortion algorithm is used to correct the warping problems based on the extracted camera-calibration parameters. The block diagram of our method is illustrated in Figure 2.

Figure 2.

General block diagram of our method.

There are five main steps in the proposed technique. The first step includes removing bad illumination and shading from the document image. A well-defined checkerboard calibration pattern is used in the second step to match the warped shape of the document. Checkerboard corner detection is used in the third step to extract the world points. Camera calibration parameters are calculated in the fourth step. The calculated camera calibration parameters and the world points are used to de-warp the document image in the fifth step. The following subsections go through each stage in depth.

3.1. Remove the Illuminations

When capturing a page of a thick book, the resultant document image has shading in the spine area of the bound volume, as shown in Figure 3a. OCR engines and segmentation algorithms struggle to recognize text under bad illumination. The proposed method overcomes this issue by employing the retinex theory introduced in [38]. In retinex theory, the image is represented mathematically as a function of its illumination and its reflectance.

Figure 3.

Two images of the same document before (a) and after (b) illumination removal.

The low-frequency component of the measured image can be used to approximate the illumination component.

Lightness refers to the estimation of reflectance and is calculated as:

After the illumination is removed and the lightness is computed, binarizing the document image with the global binarization method described in [39] is straightforward. A document image before and after the illumination-removal process is shown in Figure 3.
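As an illustration of the retinex idea described in this subsection, the decomposition is commonly written as $S(x, y) = R(x, y)\,L(x, y)$, with the illumination $L$ approximated by a low-pass version of the measured image $S$ and the lightness taken as $\log S - \log L$. The sketch below follows that common form and is not the implementation of [38]: the box filter, the function names `box_blur` and `retinex_lightness`, the `radius`, and the `eps` offset are all assumptions made for the example.

```python
import math


def box_blur(img, radius):
    """Mean filter with edge clamping: a crude low-pass estimate of illumination."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy = min(max(y + dy, 0), h - 1)  # clamp row index
                    xx = min(max(x + dx, 0), w - 1)  # clamp column index
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def retinex_lightness(img, radius=1, eps=1e-6):
    """Per-pixel log(S) - log(L_est), assuming the single-scale retinex form."""
    illum = box_blur(img, radius)
    return [
        [math.log(img[y][x] + eps) - math.log(illum[y][x] + eps)
         for x in range(len(img[0]))]
        for y in range(len(img))
    ]
```

A uniformly lit, blank page yields a lightness of zero everywhere, while dark ink on a bright background yields negative values at the ink pixels, which is what makes a single global threshold workable afterwards.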

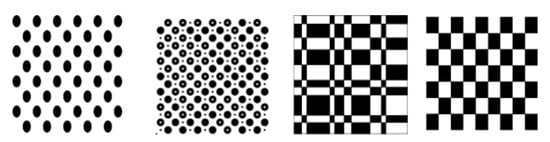

3.2. Checkerboard Calibration Pattern

In this step, the proposed method recovers the 3D points of the document image (world points) by using a well-defined calibration pattern. In computer vision, calibration patterns are used for numerous tasks, such as defining a set of markers, calculating camera parameters (focal length and center), and undistorting an image. Figure 4 shows examples of well-defined calibration patterns that are commonly used in computer vision. In this work, we use the last checkerboard calibration pattern to recover the 3D world points of the document image by defining its corners as a set of markers, which are used in the calibration algorithm in Section 3.4.

Figure 4.

Example of a well-defined calibration pattern.

The proposed method collects different de-warped checkerboard patterns, as shown in Figure 5. We manually select the corresponding checkerboard pattern mask, namely the one with high similarity to the document image, as in Figure 6. The checkerboard must contain at least six-by-six equal squares. The quality of the result depends on the number of squares: a larger number of squares produces a higher-quality image. The checkerboard has the same size as the corresponding document image.

Figure 5.

Example images of checkerboard calibration patterns.

Figure 6.

Example of selecting the corresponding checkerboard pattern.

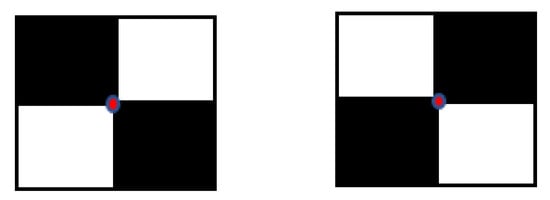

3.3. Checkerboard Corner Detection

To simulate the 3D shape of the document image, we define the corresponding 3D world points of the checkerboard pattern. A set of markers is used to recover the shape by estimating the corners of the checkerboard. The checkerboard corners are extracted from each pair of black or white squares that touch each other. Each local corner in the checkerboard is surrounded by four squares (upper left, upper right, lower left, and lower right), as illustrated in Figure 7. The corner is selected if it satisfies the following condition,

or

Figure 7.

Local corner selection.
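The selection rule for a local corner can be sketched in code. The version below is one plausible reading of the condition, assumed for illustration only: a vertex counts as a checkerboard corner when the two diagonally opposite squares share a colour and the two diagonals differ (black/white/white/black or the reverse). The colour encoding (0 for black, 1 for white) and the function names are hypothetical.

```python
def is_checker_corner(ul, ur, ll, lr):
    """True when diagonal squares match and the two diagonals differ in colour."""
    return ul == lr and ur == ll and ul != ur


def find_corners(board):
    """Return (row, col) indices of interior grid vertices that pass the test.

    `board` is a 2D list of square colours: 0 = black, 1 = white (assumed encoding).
    Vertex (r, c) is the point shared by squares (r-1, c-1), (r-1, c), (r, c-1), (r, c).
    """
    corners = []
    for r in range(1, len(board)):
        for c in range(1, len(board[0])):
            if is_checker_corner(board[r - 1][c - 1], board[r - 1][c],
                                 board[r][c - 1], board[r][c]):
                corners.append((r, c))
    return corners
```

On an ideal board of 3 × 3 alternating squares this returns the four interior vertices, and on a uniformly coloured patch it returns none.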

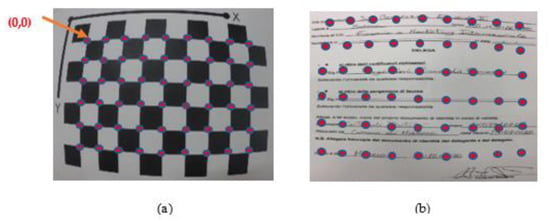

All points on the checkerboard lie in the plane Z = 0, so we drop the Z component and the world points form an N-by-2 matrix of N [x y] coordinates of checkerboard corners. The point (0, 0) corresponds to the lower-right corner of the upper-left square of the board, as shown in Figure 8a. World points are used to match and obtain the corresponding points from the warped document image in order to recover its 3D shape, as shown in Figure 8b. The detected checkerboard corners illustrated in Figure 8a reflect exactly the warped shape of the document image in Figure 8b. The matrix of world points is used to solve the warping problem of the document image, as described in the next subsection.
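The layout of the world-point matrix can be made concrete with a short sketch. It assumes an ideal, flat board with `rows` × `cols` equal squares of side `square_size`; the function name, the parameter names, and the ordering of the points (column by column) are assumptions for the example, not details taken from the method.

```python
def checkerboard_world_points(rows, cols, square_size):
    """Return the interior corners of a flat checkerboard as a list of [x, y].

    The origin (0, 0) is the lower-right corner of the upper-left square,
    and the Z coordinate is dropped because all points lie in one plane.
    """
    points = []
    for i in range(cols - 1):        # x index of the corner
        for j in range(rows - 1):    # y index of the corner
            points.append([i * square_size, j * square_size])
    return points
```

For the minimum board of six-by-six squares this gives N = 25 interior corners, so the world points form a 25-by-2 matrix.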

Figure 8.

Detecting the corners of the checkerboard calibration pattern in (a) and its corresponding warped document in (b).

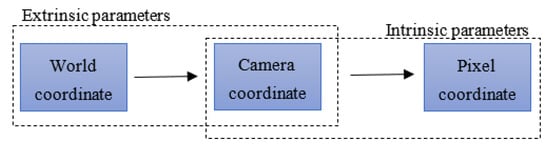

3.4. Camera Calibration

Camera calibration is used to calculate the camera matrix, which converts a 3D world point into a 2D pixel coordinate (image point) [40,41,42,43]. The camera-calibration process depends on two components: the intrinsic and extrinsic parameters of a camera. The extrinsic parameters are used to convert the document image into 3D camera coordinates (3D world point Pw), whereas the intrinsic parameters are used to convert the 3D camera coordinates into 2D pixel coordinates. Figure 9 explains the difference between the intrinsic and extrinsic parameters.

Figure 9.

Intrinsic and extrinsic parameters.

Mathematically, the 3 × 4 camera matrix P is defined as follows:

$$P = K\,[R \mid t] \qquad (4)$$

where $K$ is the intrinsic camera matrix, which describes the camera parameters, and $[R \mid t]$ are the extrinsic parameters, which consist of the rotation $R$ and the translation $t$.

The 3 × 3 intrinsic camera matrix K is defined as

$$K = \begin{bmatrix} f & 0 & c_x \\ 0 & f & c_y \\ 0 & 0 & 1 \end{bmatrix} \qquad (5)$$

where $f$ is the focal length and $(c_x, c_y)$ is the camera center. Unfortunately, the intrinsic camera parameters are unknown in the case of a document image, so we compute the camera parameters based on the following equations.

From the corresponding checkerboard pattern, the camera center (,) is defined as

The focal length is defined as

such that is the height and is the width of the checkerboard pattern. = 180° is the field of view.

The intrinsic camera matrix can be used directly to map a 3D world coordinate of the document image to a 2D pixel coordinate (xs, ys), as follows:

$$\begin{bmatrix} x_s \\ y_s \\ 1 \end{bmatrix} \simeq K\,P_w \qquad (8)$$

where $\simeq$ denotes equality up to a scale factor, $K$ is the intrinsic camera matrix defined in Equation (5), and $P_w$ is a 3D world point of the document image as described in Section 3.3.
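The mapping from a 3D point to a pixel coordinate through the intrinsic matrix can be sketched as follows. This is a minimal illustration of the standard pinhole form, with a single focal length and a camera center, followed by perspective division. The numeric values in the usage note are placeholders chosen for the example and are not the focal length or center computed by the method; the function names are hypothetical.

```python
def intrinsic_matrix(f, cx, cy):
    """Build a 3x3 intrinsic matrix from a focal length and a camera center."""
    return [[f, 0.0, cx],
            [0.0, f, cy],
            [0.0, 0.0, 1.0]]


def project(K, point):
    """Map a 3D point (X, Y, Z) to pixel coordinates (xs, ys) using K."""
    X, Y, Z = point
    u = K[0][0] * X + K[0][1] * Y + K[0][2] * Z
    v = K[1][0] * X + K[1][1] * Y + K[1][2] * Z
    w = K[2][0] * X + K[2][1] * Y + K[2][2] * Z
    if w == 0:
        raise ValueError("point has zero depth and cannot be projected")
    return (u / w, v / w)  # perspective division
```

With the placeholder values `K = intrinsic_matrix(100.0, 320.0, 240.0)`, a point on the optical axis such as `(0, 0, 1)` lands exactly on the camera center `(320.0, 240.0)`.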

3.5. Warping Correction

Finally, the proposed method corrects the warping of the 2D pixel coordinates of the document image by using the radial distortion algorithm in [44,45,46,47], which relies on low-order polynomials, as follows:

where (xs, ys) is defined in Equation (8) and the polynomial coefficients are the radial distortion parameters.
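A low-order polynomial radial model of the kind referred to here is commonly written as $x' = x\,(1 + k_1 r^2 + k_2 r^4)$ and $y' = y\,(1 + k_1 r^2 + k_2 r^4)$, with $r^2 = x^2 + y^2$ measured from the camera center. The sketch below applies that common form to one pixel. The use of exactly two coefficients, the normalization by the focal length, and the names `radial_correct`, `k1`, and `k2` are assumptions for illustration and may differ from the exact formulation used in the method.

```python
def radial_correct(xs, ys, cx, cy, f, k1, k2=0.0):
    """Apply a two-coefficient polynomial radial model to pixel (xs, ys).

    (cx, cy) is the camera center and f the focal length; coordinates are
    normalized before the polynomial is applied (assumed convention).
    """
    x = (xs - cx) / f
    y = (ys - cy) / f
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return (cx + f * x * scale, cy + f * y * scale)
```

With both coefficients set to zero the mapping is the identity, and the camera center is a fixed point for any coefficients, so the correction grows with distance from the center.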

4. Results

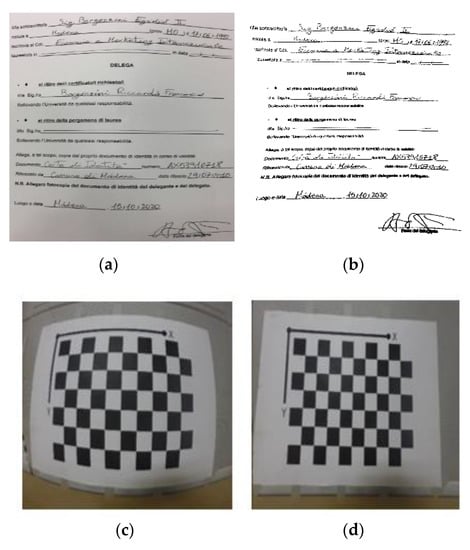

Our method was implemented in MATLAB R2020a and evaluated on a workstation with a 2.5 GHz Core i5 CPU. The performance of our algorithm was tested experimentally on samples of warped document images with different levels of complexity, including degradation, handwriting, different languages, and different layouts. Figure 10 illustrates a document image before and after warping correction. Figure 10a depicts the original document image, which exhibits numerous degradations, warping, and handwritten text. The document image after correcting the degradation and warping is shown in Figure 10b. The corresponding checkerboard calibration pattern is shown in Figure 10c, and Figure 10d presents the checkerboard after the de-warping process.

Figure 10.

De-warping of the image. (a) original image. (b) de-warped image. (c) corresponding checkerboard of the warped document. (d) de-warped checkerboard.

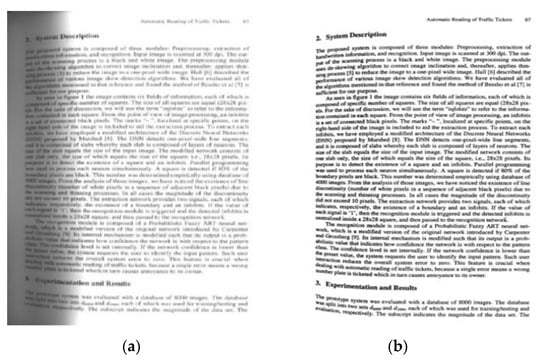

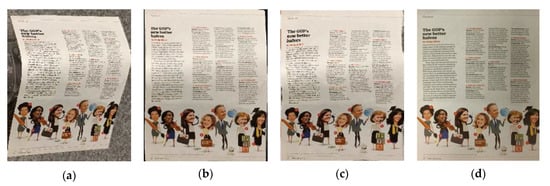

We used two types of testing to analyze and validate our results: quantitative analysis based on the error rate (Er) of an optical character recognition (OCR) engine, and human visual perception. Figure 10, Figure 11 and Figure 12 show that, by visual criteria, the output of our proposed method is more readable and easier to comprehend.

Figure 11.

Comparison with recent de-warping methods. (a) Warped image. (b) Image de-warped by [36]. (c) Image de-warped by [37]. (d) Image de-warped by the proposed method.

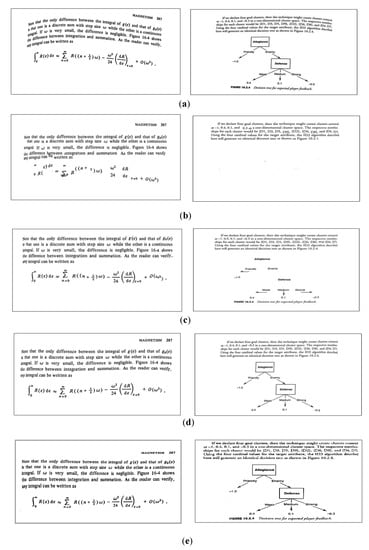

Figure 12.

Comparison between our approach and other state-of-the-art methods using the CBDAR dataset. (a) Original document images in the CBDAR dataset. (b) Method proposed in [14]. (c) Method proposed in [4]. (d) Method proposed in [13]. (e) Our proposed method.

Quantitative analysis based on an OCR engine is used to evaluate and validate our results and to compare the text regions of our output against those of state-of-the-art methods. In this work, the ABBYY FineReader OCR program was used. This software converts image files, scanned documents, and PDF documents into editable formats. Here, it is used to compute the number of characters in the document image before and after the application of a de-warping technique.

The proposed results are compared to the state-of-the-art methods in [13,36] using OCR performance as a quantitative analysis. The OCR error rate (Er) is the most common evaluation metric; it is the ratio of incorrect characters to the total number of characters in the document image, as shown in Equation (12).

$$E_r = \frac{\text{number of incorrect characters}}{\text{total number of characters}} \qquad (12)$$

Characters which the OCR fails to recognize are called incorrect characters.
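The error-rate computation can be sketched in a few lines once the OCR output and the ground-truth text are available as strings. How incorrect characters are counted is an assumption here: the sketch aligns the two strings with Python's `difflib.SequenceMatcher` and treats every ground-truth character that is not matched as incorrect. The function name `ocr_error_rate` is hypothetical, and the alignment choice is not taken from the evaluation protocol of the paper.

```python
from difflib import SequenceMatcher


def ocr_error_rate(ground_truth, ocr_text):
    """Ratio of ground-truth characters that the OCR output fails to match."""
    if not ground_truth:
        raise ValueError("ground truth must contain at least one character")
    matcher = SequenceMatcher(None, ground_truth, ocr_text, autojunk=False)
    matched = sum(block.size for block in matcher.get_matching_blocks())
    incorrect = len(ground_truth) - matched
    return incorrect / len(ground_truth)
```

For example, if the ground truth is `"abcd"` and the OCR engine returns `"abxd"`, one of four characters is unmatched and the error rate is 0.25; multiplying by 100 gives the percentage form.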

Table 1 illustrates the error rate (Er) of our method and the methods in [13,36]. The proposed method achieved a low error rate.

Table 1.

The performance of OCR (error rate Er).

The only available document de-warping dataset (CBDAR 2007) was employed to evaluate our method. This dataset includes a total of 102 camera-captured documents along with their associated ASCII ground truths. We compared our method with the state-of-the-art approaches in [4,13,14]. Figure 12 presents two examples from the dataset. The two selected images pose some of the hardest challenges, which most previous methods fail to solve. Figure 12a shows the original warped document images from the dataset. Figure 12b shows the images de-warped by the method in [14], which produced bad results in the equation portion of the left image and failed on the right document image, which contains figures. Figure 12c shows the images de-warped by the method in [4]. The results of the method in [4] are better than those of the method in [13] in the left image, but it still failed in the right image because of the figure inside the document. Figure 12d shows the images de-warped by the method in [13]. This recent method handled the document that contains figures but removed the title of the figure; furthermore, it failed to de-warp the equation and text in the left image. Figure 12e illustrates the images de-warped by our method, which produces straight text lines and is effective for images that contain figures and equations.

Finally, our method outperforms the other approaches regardless of the content of the document images, as shown in Table 2 and Figure 12. The proposed method provides high-quality results for documents that contain figures, equations, and tables, at various levels of complexity and under poor illumination.

Table 2.

The performance of OCR (error rate Er).

5. Conclusions

In this paper, we present a method for de-warping document images based on a checkerboard calibration pattern. The proposed method recovers the deformed document using a checkerboard calibration pattern and a camera-calibration algorithm, regardless of the content of the image. The warping is then corrected using the radial distortion technique. We evaluated the proposed method on a variety of warped document images from the CBDAR 2007 dataset. Compared with other state-of-the-art methods, the results demonstrated a higher level of quality. The proposed method corrects the warping issue in document images of various levels of complexity, and is successful in recovering the image of a document that includes figures and tables.

Author Contributions

Conceptualization: M.I., M.W. and S.Z.; Methodology: M.I. and M.W.; Formal analysis and investigation: W.S.E., F.S.A. and A.M.Q.; Writing—original draft preparation: M.I., M.W. and S.Z.; Writing—review and editing: W.S.E., F.S.A. and A.M.Q.; Resources: M.I., M.W. and W.S.E.; Visualization: S.Z. and A.M.Q.; Supervision: M.I., S.Z. and W.S.E. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Taif University Researchers Supporting Project number (TURSP-2020/347), Taif University, Taif, Saudi Arabia.

Acknowledgments

The authors thank Taif University Researchers Supporting Project number (TURSP-2020/347), Taif University, Taif, Saudi Arabia.

Conflicts of Interest

The authors declare no conflict of interest.

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

References

- Wagdy, M.; Ibrahim, M.; Amin, K. Document skew detection and correction using ellipse shape. Int. J. Imaging Robot. 2019, 19.

- Wagdy, M.; Faye, I.; Rohaya, D. Document image skew detection and correction method based on extreme points. In Proceedings of the 2014 International Conference on Computer and Information Sciences (ICCOINS), Kuala Lumpur, Malaysia, 3–5 June 2014; pp. 1–5.

- Laskov, L. Processing of byzantine neume notation in ancient historical manuscripts. Serdica J. Comput. 2011, 5, 183–198.

- Bukhari, S.S.; Shafait, F.; Breuel, T.M. Dewarping of document images using coupled-snakes. In Proceedings of the Third International Workshop on Camera-Based Document Analysis and Recognition, Barcelona, Spain, 23–24 July 2009; pp. 34–41.

- Vinod, H.C.; Niranjan, S.K. De-warping of camera captured document images. In Proceedings of the 2017 IEEE International Symposium on Consumer Electronics (ISCE), Kuala Lumpur, Malaysia, 14–15 November 2017; pp. 13–18.

- Schneider, D.; Block, M.; Rojas, R. Robust document warping with interpolated vector fields. In Proceedings of the Ninth International Conference on Document Analysis and Recognition (ICDAR 2007), Curitiba, Brazil, 23–26 September 2007; Volume 1, pp. 113–117.

- Kim, B.S.; Koo, H.I.; Cho, N.I. Document dewarping via text-line based optimization. Pattern Recognit. 2015, 48, 3600–3614.

- Gatos, B.; Pratikakis, I.; Ntirogiannis, K. Segmentation based recovery of arbitrarily warped document images. In Proceedings of the Ninth International Conference on Document Analysis and Recognition (ICDAR 2007), Curitiba, Brazil, 23–26 September 2007; Volume 2, pp. 989–993.

- Zhang, Y.; Liu, C.; Ding, X.; Zou, Y. Arbitrary warped document image restoration based on segmentation and Thin-Plate Splines. In Proceedings of the 2008 19th International Conference on Pattern Recognition, Tampa, FL, USA, 8–11 December 2008; pp. 1–4.

- Lu, S.; Chen, B.M.; Ko, C.C. A partition approach for the restoration of camera images of planar and curled document. Image Vis. Comput. 2006, 24, 837–848.

- Bolelli, F. Indexing of historical document images: Ad hoc dewarping technique for handwritten text. In Italian Research Conference on Digital Libraries; Springer: Cham, Switzerland, 2017; pp. 45–55.

- Tappen, M.; Freeman, W.; Adelson, E. Recovering intrinsic images from a single image. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1459–1472.

- Shamqoli, M.; Khosravi, H. Warped document restoration by recovering shape of the surface. In Proceedings of the 2013 8th Iranian Conference on Machine Vision and Image Processing (MVIP), Zanjan, Iran, 10–12 September 2013; pp. 262–265.

- Masalovitch, A.; Mestetskiy, L. Usage of continuous skeletal image representation for document images de-warping. In Proceedings of the International Workshop on Camera-Based Document Analysis and Recognition, Curitiba, Brazil, 22 September 2007; pp. 45–53.

- Stamatopoulos, N.; Gatos, B.; Pratikakis, I.; Perantonis, S.J. A two-step dewarping of camera document images. In Proceedings of the Eighth IAPR International Workshop on Document Analysis Systems 2008, Nara, Japan, 16–19 September 2008; pp. 209–216.

- Sruthy, S.; Suresh Babu, S. Dewarping on camera document images. Int. J. Pure Appl. Math. 2018, 119, 1019–1044.

- Flagg, C.; Frieder, O. Searching document repositories using 3D model reconstruction. In Proceedings of the ACM Symposium on Document Engineering 2019, Berlin, Germany, 23–26 September 2019; pp. 1–10.

- Lin, Y.; Seales, W.B. Opaque document imaging: Building images of inaccessible texts. In Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV’05), Beijing, China, 17–21 October 2005; Volume 1, pp. 662–669.

- Zhang, L.; Zhang, Y.; Tan, C. An improved physically-based method for geometric restoration of distorted document images. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 728–734.

- Galarza, L.; Martin, H.; Adjouadi, M. Integrating low-resolution depth maps to high-resolution images in the development of a book reader design for persons with visual impairment and blindness. Int. J. Innov. Comput. Inf. Control 2018, 14, 797–816.

- Tang, Y.Y.; Suen, C.Y. Image transformation approach to nonlinear shape restoration. IEEE Trans. Syst. Man Cybern. 1993, 23, 155–172.

- Chua, K.B.; Zhang, L.; Zhang, Y.; Tan, C.L. A fast and stable approach for restoration of warped document images. In Proceedings of the Eighth International Conference on Document Analysis and Recognition (ICDAR’05), Seoul, Korea, 31 August–1 September 2005; pp. 384–388.

- Amano, T.; Abe, T.; Iyoda, T.; Nishikawa, O.; Sato, Y. Camera-based document image mosaicing. Proc. SPIE 2002, 4669, 250–258.

- Tappen, M.; Freeman, W.; Adelson, E. Recovering intrinsic images from a single image. Adv. Neural Inf. Process. Syst. 2002, 15.

- Seales, B.W.; Lin, Y. Digital restoration using volumetric scanning. In Proceedings of the 2004 Joint ACM/IEEE Conference on Digital Libraries, Tucson, AZ, USA, 11 June 2004; pp. 117–124.

- Zhang, L.; Tan, C.L. Warped document image restoration using shape-from-shading and physically-based modeling. In Proceedings of the 2007 IEEE Workshop on Applications of Computer Vision (WACV’07), Snowbird, UT, USA, 17–21 October 2007; p. 29.

- Zhang, L.; Yip, A.M.; Brown, M.S.; Tan, C.L. A unified framework for document restoration using inpainting and shape-from-shading. Pattern Recognit. 2009, 42, 2961–2978.

- Zhang, L.; Yip, A.M.; Tan, C.L. Photometric and geometric restoration of document images using inpainting and shape-from-shading. In Proceedings of the National Conference on Artificial Intelligence, Vancouver, BC, Canada, 22–26 July 2007; AAAI Press: Menlo Park, CA, USA, 2007; Volume 22, pp. 1121–1126.

- Zhang, L.; Yip, A.M.; Tan, C.L. Removing shading distortions in camera-based document images using inpainting and surface fitting with radial basis functions. In Proceedings of the Ninth International Conference on Document Analysis and Recognition (ICDAR 2007), Curitiba, Parana, 23–26 September 2007; Volume 2, pp. 984–988.

- Zhang, Z.; Tan, C.L. Restoration of images scanned from thick bound documents. In Proceedings of the 2001 International Conference on Image Processing (Cat. No. 01CH37205), Washington, DC, USA, 26–28 September 2001; Volume 1, pp. 1074–1077.

- Zhang, L.; Yip, A.M.; Tan, C.L. Shape from shading based on lax-friedrichs fast sweeping and regularization techniques with applications to document image restoration. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- Wada, T.; Ukida, H.; Matsuyama, T. Shape from shading with interreflections under a proximal light source: Distortion-free copying of an unfolded book. Int. J. Comput. Vis. 1997, 24, 125–135.

- Ramanna, V.K.B.; Bukhari, S.S.; Dengel, A. Document Image Dewarping using Deep Learning. In Proceedings of the 8th International Conference on Pattern Recognition Applications and Methods, ICPRAM, Prague, Czech Republic, 19–21 February 2019; pp. 524–531.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Guowang, X.; Fei, Y.; Xuyao, Z.; Chenglin, L. Dewarping Document Image by Displacement Flow Estimation with Fully Convolutional Network. In International Workshop on Document Analysis Systems; Springer: Cham, Switzerland, 2020; pp. 131–144.

- Feng, H.; Wang, Y.; Zhou, W.; Deng, J.; Li, H. Doctr: Document image transformer for geometric unwarping and illumination correction. arXiv 2021, arXiv:2110.12942.

- Wagdy, M.; Faye, I.; Rohaya, D. Degradation enhancement for the captured document image using retinex theory. In Proceedings of the 6th International Conference on Information Technology and Multimedia, Putrajaya, Malaysia, 18–20 November 2014; pp. 363–367.

- Wagdy, M.; Faye, I.; Rohaya, D. Document image binarization based on retinex theory. Electron. Lett. Comput. Vis. Image Anal. ELCVIA 2015, 14.

- Lopez, M.; Mari, R.; Gargallo, P.; Kuang, Y.; Gonzalez-Jimenez, J.; Haro, G. Deep single image camera calibration with radial distortion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11817–11825.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Scaramuzza, D.; Martinelli, A.; Siegwart, R. A toolbox for easily calibrating omnidirectional cameras. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 5695–5701.

- Geiger, A.; Moosmann, F.; Car, Ö.; Schuster, B. Automatic camera and range sensor calibration using a single shot. In Proceedings of the 2012 IEEE international Conference on Robotics and Automation, St Paul, MN, USA, 14–15 May 2012; pp. 3936–3943.

- Hartley, R.; Kang, S.B. Parameter-free radial distortion correction with center of distortion estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1309–1321.

- Alvarez, L.; Gomez, L.; Sendra, J.R. Algebraic lens distortion model estimation. Image Process. Line 2010, 1, 1–10.

- Drap, P.; Lefèvre, J. An exact formula for calculating inverse radial lens distortions. Sensors 2016, 16, 807.

- Feng, W.; Zhang, Z.; Wang, R.; Zeng, X.; Yang, X.; Lv, S.; Zhang, F.; Xue, D.; Yan, L.; Zhang, X. Distortion measurement of optical system using phase diffractive beam splitter. Opt. Express 2019, 27, 29803–29816.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).