1. Introduction

Digital technologies are now essential to training in schools, higher education, and corporate settings. E-learning technologies and methodologies have introduced a set of radical innovations in the form, organisation, and efficiency of education [1,2]. Since 2020, modern society has had to cope with one of the biggest health emergencies ever experienced, the COVID-19 pandemic. Many perspectives and visions have changed, forcing people to think differently about training methodologies and the technologies that support education.

This paper investigated the paradigm changes implemented to address the restrictions of the pandemic within a first-level university master’s degree called the “Master in Management of Sustainable Development Goals” (MSDG). This master’s programme was initially delivered in a “blended” mode and included a series of lessons to be followed completely online as well as a series of face-to-face meetings, lessons, and activities. Due to the pandemic, the technologies and methodologies for the provision of teaching and the evaluation of students were reconsidered with the aim of maintaining the same level of learning outcomes and student performance.

In this research work, we studied and analysed whether the mix of methodological and technological changes adopted by the master’s didactic designers were effective and whether they impacted student performance based on the analysis of ongoing assessments. To face the emergency situation, the master’s board applied methodologies widely recognised in the literature to quickly move from blended to fully online learning. We studied the impact of this shift on learning outcomes.

For this purpose, we carried out a comparative analysis of the learning dataset for the MSDG degree. The comparison was made using data from the blended course in the academic year 19/20 and the fully online course delivered during the pandemic in the academic year 20/21.

2. Related Works

The COVID-19 pandemic forced universities and educational institutions to redefine the course delivery methodology. The emergency situation forced many institutions to quickly adapt their training programmes. Transforming teaching methods “on the fly” in a period of crisis is an ambitious challenge that brings with it various risks. It is necessary to change the way of teaching using technology, but it is also essential to adapt the way in which students are assessed, most notably for those courses that allow students to have direct experiences. In this context, several studies outlined the best practices for managing the change from a blended course to an online course. The challenges differ, ranging from maintaining a good level of engagement to redesigning assessments to produce reliable results. Some studies focused on analysis methodologies for blended learning courses. The aim is to let tutors (and educators in general) obtain the full picture of what is happening in a blended learning scenario in which students are assessed for face-to-face and online activities. De Leng et al. carried out a comparative study using video observation techniques; this study built a tool that analysed communication among students during small group sessions including presentations by students and discussions [3].

Having analysed South Korean college students’ experiences during emergency remote teaching, Shim and Lee [4] showed that students’ educational environments were important and influenced learning outcomes. The quality of interaction varied depending on the teachers’ methodology and the technology used. This research highlighted how specific technological and methodological setups could be adopted to maintain academic achievements similar to those realised in traditional classroom teaching. In university master’s education, group activities are often planned as part of the learning path, and students are divided into subgroups. A study by Qiu and McDougall [5] highlighted how online small group discussions had several advantages over face-to-face ones. Online small group discussions were found to be more focused, thoughtful, thorough, and in-depth. Observations showed that the tutor was less involved in online discussions than in face-to-face discussions. The flexible schedule promoted greater participation and a higher quality of contribution in asynchronous contexts. International students who are shy or not fluent in the language have been shown to prefer online subgroup discussion.

One consolidated best-practice method in a blended online course is the Discover, Learn, Practice, Collaborate, and Assess (DLPCA) approach proposed by Lapitan et al. in a recent paper [6]. The paper analysed the impact of the proposed learning strategy during the COVID-19 pandemic. This approach was effective based on student learning experience (which should translate well to a master’s environment where students have large sets of different prior knowledge), academic performance, and tutor observations. The findings highlight that synchronous online sessions had a positive impact on the instructor, and the possibility of attending pre-recorded video lectures had a positive impact on the students. The best solution was found to be a mix of strategies chosen according to the specific context or subject.

In their systematic literature review, Rasheed et al. revealed some of the current challenges in the online component of blended learning from student, teacher, and institutional perspectives. This study showed that students had difficulties in the self-regulation of study and were unable to use technology to study appropriately and without distractions. For teachers, the gap often lay in not being adequately trained in the use of technology and in the changes in online teaching methodologies. Educational institutions found it difficult to provide correct and sufficient technological infrastructure, and to provide effective training support to their teachers [7].

A study by Meirinhos et al. demonstrated that online learning could be a good alternative to the traditional on-site learning methodology even in improving practical abilities [8]. As described in Section 3.1, the Master in Management of Sustainable Development Goals was created with the intention of providing practical skills.

In such a complex scenario, it is necessary to analyse the data relating to the progress of the courses in order to promptly make the necessary corrections. In this context, learning analytics plays a key role in understanding human learning, teaching, and education processes by identifying and validating relevant measures of processes, outcomes, and activities [9,10].

A continuous learning analytics activity allows us to understand how and when to intervene in a course. Research showed that learning analytics techniques and methods have been useful for intercepting and solving training criticalities during the COVID-19 pandemic [11,12,13,14]. Therefore, it is necessary to identify guidelines in order to correctly design courses on an e-learning platform, without neglecting learning analytics (LA) [15].

The two fields (learning design and learning analytics) should converge and create synergies to improve the learning process. However, the growth of digitisation has allowed learning analytics to emerge as a separate field [16,17]. Indeed, Ferguson outlined four challenges in learning analytics: (i) building strong connections with the learning sciences; (ii) developing methods of working with a wide range of datasets in order to optimise learning environments; (iii) focusing on the perspectives of learners; and (iv) developing and applying a clear set of ethical guidelines.

Wilson et al. [18] analysed the issues inherently blocking the effectiveness of learning analytics implementations in post facto analysis and in prediction/intervention. Emerging results suggest that exploiting the full power of learning analytics requires identifying signature behavioural patterns, that is, specific learning behaviours that are desirable either in general or for a particular group. These patterns can be measured through the data made available by analytical tools attached to or embedded in Learning Management Systems (LMSs). This allows us to explain unexpected learning behaviours, detect misconceptions and misplaced efforts, identify successful learning patterns, introduce appropriate interventions, and increase users’ awareness of their own actions and progress [19].

In this way, learning analytics facilitates the transition from tacit to explicit educational practice, while the use of learning design in the pedagogical context transforms learning analytics results into meaningful information [20,21,22]. However, several challenges exist in learning analytics, and these must be addressed in order to design the experiment and have a common model that can offer guidelines for effective development [23]. The main challenges with regard to learning analytics are: the definition of pedagogical approaches to improve learning design based on learning analytics results [24]; the evaluation and interpretation of student learning outcomes [25]; the evaluation of the impact of learning analytics findings on learning design decisions and experiences [9]; and the evaluation of how educators are planning, designing, implementing, and evaluating learning design decisions [26].

In this paper, learning analytics was used to verify the expected behaviour of students considering the differences in the teaching methodology. The approach used was cognitive learning analytics [27]. The data capture activities and subsequent analysis were aimed at obtaining feedback on students’ learning outcomes. The data acquired are the results of the different types of assessments administered during the learning path.

In this research, the ongoing redesign operated by the master’s board (considering the best practices consolidated in the state of the art) was evaluated through cognitive learning analytics techniques.

3. Materials and Methods

3.1. Master Description

The Master in Management of Sustainable Development Goals (MSDG) is a problem-solving-oriented, creative, innovative, learning-by-doing master’s programme launched by Libera Università degli Studi Maria Ss. Assunta di Roma (LUMSA) in 2017. LUMSA is a public non-state Italian university formed on Catholic principles. It is the second oldest university in Rome after Sapienza. MSDG is one of the first master’s degrees in sustainability delivered with e-learning technologies. It aims to support participants from around the world in acquiring knowledge and competencies in the management of sustainable development in dynamic environments. The vision and focus of MSDG is the spread of a new idea of development, which implies inter-linkages and a multidimensional approach to solving the issues and challenges concerning the long-term preservation of the planet and its inhabitants on a world scale [28].

MSDG involves a board of professionals who are experts in sustainability management and digital learning fields. Subject matter experts are responsible for ensuring high levels of teaching with constantly updated content. Digital learning experts are responsible for ensuring the implementation of the best way of attending the online course (lessons, assessment, tutoring). Digital learning experts are highly skilled professionals from academia and business. They have skills in the field of digital teaching methodologies and technologies to support learning. The MSDG master’s programme has a mainly structured syllabus. Initially, it was designed to be delivered in a blended mode, provided by the LUMSA e-learning platform based on the Moodle Learning Management System. The MSDG learning path consists of five different pillars and, for the sake of completeness, we summarise here the full topic names:

Pillar I: Sustainable Development Goals;

Pillar II: Socio-Economic Challenges;

Pillar III: Sustainable Management;

Pillar IV: Environmental Challenges;

Pillar V: Finance.

The student has to attend live lectures (planned within specific residential weeks) and participate in individual or team projects for each pillar. At the end of those face-to-face activities, the student will be able to attend the e-lessons: video lessons delivered “on demand” in an asynchronous mode and carried out by specialised teachers. The achievement of the learning outcomes for each pillar is verified through individual tests and group projects called e-labs. In the e-lab, the students are divided into subgroups and a different topic is assigned to each subgroup. Evaluation is carried out at both the group and the individual level, considering the effort of each student.

At the end of the study period, a project or internship report allows the student to work on a real-life sustainable project. These projects are short-term assignments to be carried out in close cooperation with a company or any other organisation involved in the management of the sustainable development field. The student needs to prepare a research paper on an advanced SDG-related topic. The project is compulsory and can be realised in collaboration with a sponsor company. At the end of the training course, the student must write a thesis on a topic approved by the teacher of the course. In order to pass, students are required to attend at least 80% of face-to-face lessons, complete all the e-learning modules in each pillar, and be fully involved in all activities the programme offers. They must obtain sufficient grades for all pillars, proving that they have learned all the topics covered by the lessons.

A didactic tutor follows the students individually and during the group activities, promptly identifying critical situations and intervening to correct them. In addition, students can communicate with peers, tutors, and teachers through specific thematic forums. As mentioned above, students are assessed on an ongoing basis for each pillar and each project performed. The final grade takes into consideration all the individual pillar tests, the teamwork (e-lab), the individual project or internship report, and the final thesis.

In the 2020/2021 edition, due to the COVID-19 pandemic, the methodological and technological organisation of the master’s course completely changed. The students were unable to reach the physical location for face-to-face lessons and could not meet each other to carry out group activities. The course was rethought and converted to a fully online mode. Professors delivered the lessons previously held face-to-face through Google Meet. The classes were recorded and made available to those users who did not have a good connection or, due to time zones, did not have the opportunity to follow them in real time. Some group work activities and assessments were redesigned to be performed individually, while others were managed using the collaborative tools of the LMS platform, Google Meet, and Google Docs. Tutoring was enhanced to follow and adequately support students during their studies and work. Unfortunately, the internship period was cancelled. However, the continuous evaluation of the students involved in the master’s programme made it possible to highlight any critical issues and ensured prompt intervention. The final thesis remained unchanged.

Table 1 summarises the main changes made by the Master’s board in the two editions of the programme.

3.2. Methods

The original student population was composed of 28 international students equally divided between the 19/20 and 20/21 academic years. Initially, the dataset was anonymised by replacing each student’s personal information with a sequence number from 1 to 28. The following were recorded for each student: the final score and the completion date (if applicable, 0 otherwise) for each test or project related to each pillar, the entry assessment, and the final thesis score.
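The anonymisation step can be illustrated with a minimal sketch. It assumes the records are available as dictionaries; the field names (`name`, `surname`, `pillar_1`) and the values are hypothetical and do not come from the actual LUMSA export.

```python
from typing import Dict, List


def anonymise(records: List[Dict], personal_fields: List[str]) -> List[Dict]:
    """Drop personal fields and identify each student by a sequence number."""
    anonymised = []
    for seq, record in enumerate(records, start=1):
        # Keep only the non-personal fields (scores, dates, academic year).
        kept = {k: v for k, v in record.items() if k not in personal_fields}
        anonymised.append({"student": seq, **kept})
    return anonymised


# Hypothetical records, for illustration only.
raw = [
    {"name": "A", "surname": "X", "year": "19/20", "pillar_1": 27},
    {"name": "B", "surname": "Y", "year": "20/21", "pillar_1": 0},
]
clean = anonymise(raw, personal_fields=["name", "surname"])
```

After this step, each row carries only the sequence number and the assessment data, so the later analysis never touches names or university IDs.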

The data were collected from the LUMSA learning management system where all the digital learning activities of the programme took place. The marks of the tests assessed by the teacher (e-labs, projects, final thesis) were entered manually on the platform. At the end of the learning path, all the marks were considered for the final evaluation.

The intent was to demonstrate that the changes made in teaching methodologies and supporting technologies have not impacted students’ performance. It was possible to use statistical methodologies for comparing groups and demonstrating that students’ performances were the same despite the change in methodologies. The hypothesis was that there was not a degradation of student performance between the academic years 19/20 and 20/21.

In our quasi-experimental study, non-parametric comparison methods were used to verify that the two groups had the same performance in the programme. As described in the next section, parametric tests (e.g., t-test) were excluded because the sample did not meet the basic requirements.

4. Results

We start discussing our results by underlining that the population of the two groups was too small to perform a wide range of statistical analyses. Due to this limitation, we do not want to generalise the results to all the possible situations involving the students’ analytics. Rather, by performing an analysis of the proposed case, we aim to give readers some insights and suggestions that will be studied more in-depth by applying the same approach to bigger classes and by collecting more data.

The data stored in the LUMSA Learning Management System were strictly related to each student’s career. For each student, we had access to: the general information (name, surname, date of birth etc.), his/her university ID and course, enrolment data, a sequence of records containing the progression of their learning, and the marks obtained for each assessment. We started our analysis by separating the students according to the academic year they attended. Then, we conducted a dimensional reduction of the original dataset, to work only with meaningful data.

In general, assessment deadlines were fixed for all the students, and they directly depended on the sequence of lessons/pillars attended. We noticed that for the two academic years analysed, all the students passed the assessments without delay. This aspect (which will be further discussed in Section 5) makes the time variable irrelevant for our analysis. Furthermore, we noticed that 4 out of 28 students (equally divided between the two groups) did not complete most of the pillars (more than 70% of their marks were missing). It was not possible to determine the trend for the assessments or the final thesis score. Additionally, so many 0 marks for such a small population would invalidate most of the statistics. For this reason, we decided to remove these students and the related rows along with the students’ information.
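The exclusion rule described above can be sketched as a simple filter. This is an illustration under stated assumptions: a mark of 0 is treated as missing, each student has at least one recorded assessment slot, and the marks shown are hypothetical.

```python
def missing_fraction(marks):
    """Fraction of assessments with no mark (0 is treated as missing)."""
    return sum(1 for m in marks if m == 0) / len(marks)


def drop_incomplete(students, threshold=0.70):
    """Keep only students whose share of missing marks is at most `threshold`."""
    return {
        sid: marks
        for sid, marks in students.items()
        if missing_fraction(marks) <= threshold
    }


# Hypothetical marks keyed by anonymised student number.
students = {
    1: [27, 30, 0, 25, 28, 26, 29, 30, 24, 27],  # 10% missing
    2: [0, 0, 0, 0, 0, 0, 0, 0, 22, 0],          # 90% missing, removed
    3: [18, 0, 0, 21, 30, 0, 26, 0, 0, 25],      # 50% missing
}
kept = drop_incomplete(students)
```

With the 70% threshold, only student 2 in this toy example is dropped.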

After this general clean-up, we started analysing the general values of the marks, looking for anomalies. Due to personal problems, one student in the academic year 20/21 passed all the pillar assessments but not the final thesis. Similarly, another student in the academic year 19/20 did not complete the entry test. As we had all the remaining scores, we decided to replace the “unknown” values with a 0 in order to obtain a uniform dataset. We found this solution preferable to row removal, as it avoided a further reduction in an already small dataset. A complete table with the cleaned data is available in Table 2.

Before considering the data, we need to underline an important aspect: the Italian university system recognises any mark greater than or equal to 18 as “sufficient”. However, as shown in Table 2, some students showed marks lower than 18. By the end of the course, the student must have achieved a mark average greater than or equal to 18 to pass. Those students who failed were required to re-take the assessment (or the assessments) and reach a mark average greater than or equal to 18.
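The pass rule reduces to comparing a student's mean mark with the threshold of 18. The sketch below assumes an unweighted mean over all assessments, since the source does not state whether assessments carry different weights; the marks are hypothetical.

```python
from statistics import mean

PASS_MARK = 18  # minimum "sufficient" mark in the Italian university system


def has_passed(marks):
    """True when the (unweighted) average of the marks reaches the pass mark."""
    return mean(marks) >= PASS_MARK


# A single mark below 18 does not imply failure if the average is sufficient.
passing = has_passed([17, 20, 18])   # average is about 18.33
failing = has_passed([16, 17, 20])   # average is about 17.67
```

This is why individual marks below 18 can appear in the data for students who nevertheless completed the programme.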

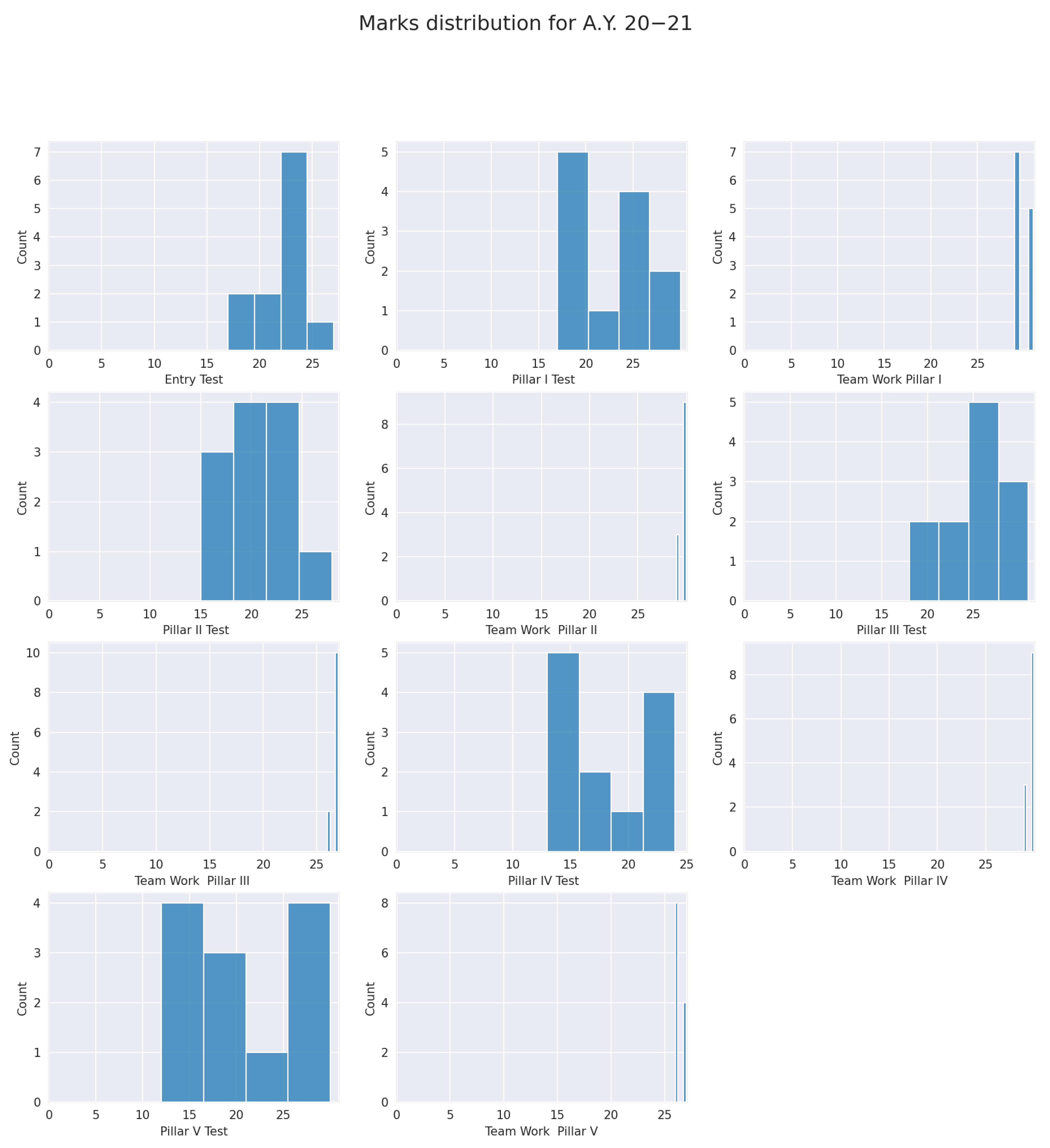

By plotting the distribution of the marks for each academic year, we noticed that none of the pillars had a normal distribution (see

Figure 1 and

Figure 2).

This initially suggested that we would not be able to use any kind of parametric method (such as a

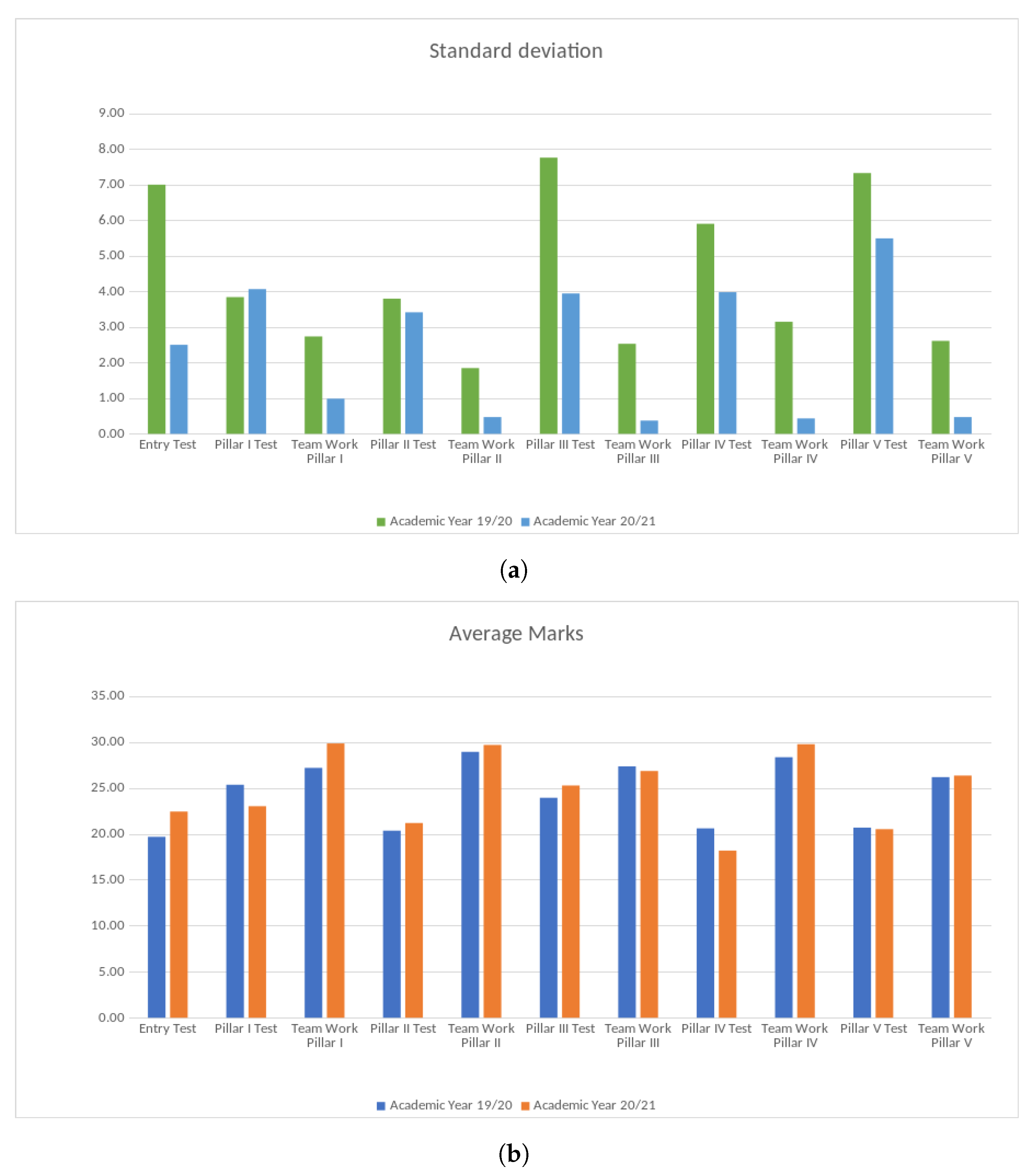

t-test) to determine whether the two populations had distinct behaviours. We went further into the analysis of the marks and found that the standard deviation was higher for the students in the year 19/20 for almost all the assessments, while the trend for the average score on all assessments was constant between both groups (see

Figure 3a,b). Additionally, the overall closeness between the groups was also confirmed considering the exam average and the standard deviation.
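The per-assessment comparison of averages and standard deviations can be reproduced with a few lines of code. The sketch below uses the sample standard deviation, which is an assumption (the source does not say whether the sample or population formula was used), and the marks are hypothetical values chosen so that the means coincide while the spread differs.

```python
from statistics import mean, stdev


def summarise(group):
    """Map each assessment name to (mean, sample standard deviation)."""
    return {name: (mean(marks), stdev(marks)) for name, marks in group.items()}


# Hypothetical marks for one assessment in each academic year.
year_1920 = {"Pillar I": [20, 30, 24, 30]}
year_2021 = {"Pillar I": [25, 27, 26, 26]}

summary_1920 = summarise(year_1920)
summary_2021 = summarise(year_2021)

mean_1920, std_1920 = summary_1920["Pillar I"]
mean_2021, std_2021 = summary_2021["Pillar I"]
```

In this toy example both groups average 26, but the 19/20 marks are far more dispersed, which mirrors the pattern described in the text.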

5. Discussion

The results presented in the previous section can help us understand whether the methodology and technology changes applied during the academic year 20/21 allowed students to maintain a good standard on marks and timing. To understand these aspects, we proposed a quasi-experimental study, analysing students in the academic year 20/21 as the test group, while those in the academic year 19/20 were used as the control group. From the data gathered and discussed in Section 4, an evident characteristic of students from both academic years was that they passed the assessments according to the course timetable.

This led us to conclude, although this finding is limited to the case under analysis, that despite the urgent change in teaching methodology, students’ time performance did not decrease. There were many previous studies that reached the same conclusions by analysing the students’ preferences between on-site and online training [8]. Thus, to quantify the impact of the new teaching methods on students’ performance, we focused our attention on the grades achieved during the learning path.

Further discussion is needed for the results obtained in Section 4; from Figure 3a, we notice that many pillar assessments presented a bigger standard deviation, while the average mark was almost the same for both academic years.

The limited data do not suggest much more, and we do not want to speculate on hypotheses that the analysis cannot confirm. For this reason, we decided to analyse the data more deeply.

As described in Section 3.1, there were several changes in the setting of the master’s course. Some had an impact on the teaching methodology, others on the supporting technology. One of the most impactful changes was the introduction (for the academic year 20/21) of an additional tutor able to respond promptly to students’ doubts. To quantify the impact of those changes, we performed a statistical comparison between the two groups, trying to understand whether, for all the pillar assessments (or just some of them), the methodology changes had an impact on the students’ performance. There are many statistical tests that could have been used to reach this goal. Most of them rely on the calculation of the average and standard deviation of two populations. However, these tests impose requirements on the distribution and size of the populations (e.g., a large number of elements and a normal distribution) that our groups did not satisfy.

With such a small population and considering that the most well-known parametric test was not usable, we decided to use the Wilcoxon–Mann–Whitney test (also known as the U-test) to define the differences between the two student groups. Results of the statistical analysis are reported in Table 3. The column “Topic” reports the topic taken into account, “Score” represents the score of the U-test, and “p-value” gives information about the acceptance of the null hypothesis. The last three columns describe the average for each topic and for each academic year and the difference between them.
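For readers who wish to reproduce this kind of comparison, the following is a minimal standard-library sketch of the U-test. It is not the authors' implementation: the source does not state which software or which U convention produced the “Score” column. The function reports the smaller of the two U values and a two-sided p-value from the normal approximation with tie correction and no continuity correction; for groups as small as those in this study the approximation is coarse, and an exact test may be preferable. The marks are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean rank of positions i..j (zero-based)
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U, two-sided p-value) using the normal approximation."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both groups must contain at least one mark")
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    # Variance of U with the standard correction for tied values.
    n = n1 + n2
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    sigma = sqrt(n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1))))
    if sigma == 0:  # every mark is identical, so the groups cannot differ
        return u, 1.0
    z = (u - n1 * n2 / 2) / sigma  # z <= 0 because u is the smaller U
    return u, min(1.0, 2 * NormalDist().cdf(z))


# Hypothetical marks: fully separated groups versus identical groups.
u_sep, p_sep = mann_whitney_u([20, 22, 24], [26, 28, 30])
u_same, p_same = mann_whitney_u([25, 27, 29], [25, 27, 29])
```

A p-value close to 1, as in the second call, means the test finds no evidence of a difference between the groups; a small p-value, as in the first call, points to a difference in the distributions of marks.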

This analysis gives many more insights. We notice that only three pillars reported a p-value : Teamwork on Pillar I, Teamwork on Pillar III, and Teamwork on Pillar IV. The low p-value among the two populations for these assessments suggests a low/no difference between the two groups.

We propose some hypotheses about the reason behind this result:

Teams are composed of three to four students that have to prepare and present a common thesis. The final exam consists of a dissertation about the work that must be completed as a group rather than individually. Thus, the final mark is given to the whole group and, generally speaking, the marks tend to be closer among the different years;

Among the team, some students might perform better while others tend to “follow the leader”; thus, marks might be averaged and more similar across the two academic years.

Whatever the reason behind it, we will investigate further by comparing the current results with the next academic years.

Regarding solo learning, the p-value was far from the acceptance value. The rejection of the null hypothesis implies that there was a substantial difference between the two groups. From the analysis of the averages, we cannot assert that the changes had a positive impact on all the pillar assessments. In just two out of five assessments (Pillar II and III), the average slightly decreased, while for the others, a moderate improvement was seen.

We can conclude that the changes implemented and the presence of an additional tutor allowed students to perform slightly better on many assessments.

6. Conclusions

COVID-19 impacted everybody’s lives: from people’s working habits to students’ access to courses and exams. In this article, we discussed the impact of the change of approach in a master’s course during the pandemic. We performed a quasi-experimental study, considering two different groups of students in different academic years, one of which attended a blended master’s course (academic year 19/20) and the other a totally online version (academic year 20/21). With the blended approach, the students could meet the teacher face-to-face, collaborate with peers for e-labs and group projects, and take the exams in classrooms. To overcome the COVID-19 limitations, online courses received much attention as they have been restructured to fit changing student environments and needs. Thus, in the online course, students had to attend all the classes using online technologies and had an additional tutor to support their studies both individually and in groups.

We analysed the data from the student population by using the Wilcoxon–Mann–Whitney test to determine whether it is possible to identify some overlaps between the two student populations’ marks and discuss the reason therein. We found that students generally performed similarly on the teamwork pillar tests, while marks for one-to-one assessments were different for each student. On the other hand, the test did not provide enough insight for us to determine a general trend. By comparing the average of the marks for each exam of the academic year, we believe that the student support provided a little improvement. However, this must be confirmed by further studies of new academic years.

The proposed work presents some limitations due to the availability of data and the small number of participants in the two student populations. In particular, the lack of a questionnaire prevented us from performing qualitative analysis and obtaining results as proposed in [8]. Secondly, the fact that the only discriminant metric was the assessment mark led to a lack of details, preventing us from understanding the effective improvement of the students on each of the pillars, as proposed in [29]. Despite these limitations, the statistical analysis suggests that the changes implemented during the pandemic allowed students to perform slightly better on many assessments. LUMSA aims to keep the presented methodologies in the coming academic years; thus, we will be able to gather and analyse the data using a more detailed and complete approach, and compare the new results with the present ones.

Author Contributions

Data curation, V.S.B., F.C., A.M., A.P. (Alessandro Pagano), J.P. and A.P. (Antonio Piccinno); Formal analysis, V.S.B., F.C., A.M., A.P. (Alessandro Pagano), J.P. and A.P. (Antonio Piccinno); Investigation, V.S.B., F.C., A.M., A.P. (Alessandro Pagano), J.P. and A.P. (Antonio Piccinno); Methodology, V.S.B., F.C., A.M., A.P. (Alessandro Pagano), J.P. and A.P. (Antonio Piccinno); Writing—original draft, V.S.B., F.C., A.M., A.P. (Alessandro Pagano), J.P. and A.P. (Antonio Piccinno); Writing—review & editing, V.S.B., F.C., A.M., A.P. (Alessandro Pagano), J.P. and A.P. (Antonio Piccinno). All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study. The analyzed data were anonymized.

Data Availability Statement

The repository with the data is freely available directly on GitHub.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Rakic, S.; Pavlovic, M.; Softic, S.; Lalic, B.; Marjanovic, U. An Evaluation of Student Performance at e-Learning Platform. In Proceedings of the 2019 17th International Conference on Emerging eLearning Technologies and Applications (ICETA), Starý Smokovec, Slovakia, 21–22 November 2019; pp. 681–686. [Google Scholar] [CrossRef]

- Chivu, R.G.; Orzan, G.; Popa, I.C. Education software and modern learning environment: Elearning. In Proceedings of the 15th International Scientific Conference eLearning and Software for Education, Bucharest, Romania, 11–12 April 2019; pp. 193–198. [Google Scholar] [CrossRef]

- De Leng, B.A.; Dolmans, D.H.; Donkers, H.J.H.; Muijtjens, A.M.; van der Vleuten, C.P. Instruments to explore blended learning: Modifying a method to analyse online communication for the analysis of face-to-face communication. Comput. Educ. 2010, 55, 644–651. [Google Scholar] [CrossRef]

- Shim, T.E.; Lee, S.Y. College students’ experience of emergency remote teaching due to COVID-19. Child. Youth Serv. Rev. 2020, 119, 105578. [Google Scholar] [CrossRef] [PubMed]

- Qiu, M.; McDougall, D. Foster strengths and circumvent weaknesses: Advantages and disadvantages of online versus face-to-face subgroup discourse. Comput. Educ. 2013, 67, 1–11. [Google Scholar] [CrossRef]

- Lapitan, L.D.; Tiangco, C.E.; Sumalinog, D.A.G.; Sabarillo, N.S.; Diaz, J.M. An effective blended online teaching and learning strategy during the COVID-19 pandemic. Educ. Chem. Eng. 2021, 35, 116–131. [Google Scholar] [CrossRef]

- Rasheed, R.A.; Kamsin, A.; Abdullah, N.A. Challenges in the online component of blended learning: A systematic review. Comput. Educ. 2020, 144, 103701. [Google Scholar] [CrossRef]

- Meirinhos, J.; Pires, M.D.; da Costa, R.P.; Martins, J.N. Receptivity and Feedback to the Online Endodontics Congress Concept as a Learning Option-An International Survey. Eur. Endod. J. 2020, 5, 212. [Google Scholar] [CrossRef]

- Mangaroska, K.; Giannakos, M. Learning Analytics for Learning Design: A Systematic Literature Review of Analytics-Driven Design to Enhance Learning. IEEE Trans. Learn. Technol. 2019, 12, 516–534. [Google Scholar] [CrossRef]

- Clow, D. An overview of learning analytics. Teach. High. Educ. 2013, 18, 683–695. [Google Scholar] [CrossRef]

- Amjad, T.; Shaheen, Z.; Daud, A. Advanced Learning Analytics: Aspect Based Course Feedback Analysis of MOOC Forums to Facilitate Instructors. IEEE Trans. Comput. Soc. Syst. 2022. [Google Scholar] [CrossRef]

- Beerwinkle, A.L. The use of learning analytics and the potential risk of harm for K-12 students participating in digital learning environments. Educ. Technol. Res. Dev. 2020, 69, 327–330. [Google Scholar] [CrossRef]

- Yan, L.; Whitelock-Wainwright, A.; Guan, Q.; Wen, G.; Gašević, D.; Chen, G. Students’ experience of online learning during the COVID-19 pandemic: A province-wide survey study. Br. J. Educ. Technol. 2021, 52, 2038–2057.

- Pange, J. Online Student-to-Student Interaction: Is It Feasible? In Innovations in Learning and Technology for the Workplace and Higher Education; Guralnick, D., Auer, M.E., Poce, A., Eds.; Springer: Cham, Switzerland, 2022; pp. 250–256.

- Barletta, V.S.; Cassano, F.; Pagano, A.; Piccinno, A. A collaborative AI dataset creation for speech therapies. In Proceedings of the CoPDA2022—Sixth International Workshop on Cultures of Participation in the Digital Age: AI for Humans or Humans for AI, Frascati, Italy, 7 June 2022; Volume 3136, pp. 81–85.

- Ferguson, R. Learning Analytics: Drivers, Developments and Challenges. Int. J. Technol. Enhanc. Learn. 2012, 4, 304–317.

- Marengo, A.; Pagano, A. Training Time Optimization through Adaptive Learning Strategy. In Proceedings of the 2021 International Conference on Innovation and Intelligence for Informatics, Computing, and Technologies (3ICT), Zallaq, Bahrain, 29–30 September 2021; pp. 563–567.

- Wilson, A.; Watson, C.; Thompson, T.L.; Drew, V.; Doyle, S. Learning analytics: Challenges and limitations. Teach. High. Educ. 2017, 22, 991–1007.

- Siemens, G.; Long, P. Penetrating the fog: Analytics in learning and education. EDUCAUSE Rev. 2011, 46, 30.

- Mor, Y.; Ferguson, R.; Wasson, B. Editorial: Learning design, teacher inquiry into student learning and learning analytics: A call for action. Br. J. Educ. Technol. 2015, 46, 221–229.

- Lockyer, L.; Heathcote, E.; Dawson, S. Informing Pedagogical Action: Aligning Learning Analytics With Learning Design. Am. Behav. Sci. 2013, 57, 1439–1459.

- Marengo, A.; Pagano, A.; Ladisa, L. Mobile gaming experience and co-design for kids: Learn German with Mr. Hut. In Proceedings of the 15th European Conference on e-Learning (2016) ECEL, Prague, Czech Republic, 27–28 October 2016.

- Keshavamurthy, U.; Guruprasad, D.H.S. Learning Analytics: A Survey. Int. J. Comput. Trends Technol. 2014, 18, 260–264.

- Rodríguez-Triana, M.J.; Martínez-Monés, A.; Asensio-Pérez, J.I.; Dimitriadis, Y. Scripting and monitoring meet each other: Aligning learning analytics and learning design to support teachers in orchestrating CSCL situations. Br. J. Educ. Technol. 2015, 46, 330–343.

- Berland, M.; Davis, D.G.; Smith, C.P. AMOEBA: Designing for collaboration in computer science classrooms through live learning analytics. Int. J. Comput.-Support. Collab. Learn. 2015, 10, 425–447.

- McKenney, S.; Mor, Y. Supporting teachers in data-informed educational design. Br. J. Educ. Technol. 2015, 46, 265–279.

- Yousef, A.M.F.; Khatiry, A.R. Cognitive versus behavioral learning analytics dashboards for supporting learner’s awareness, reflection, and learning process. Interact. Learn. Environ. 2021, 1–17.

- Huang, R.X. Building a Pedagogical Framework for the Education of Sustainable Development using a Values-based Education Approach. In Proceedings of the 2021 Third International Sustainability and Resilience Conference: Climate Change, Sakheer, Bahrain, 15–16 November 2021; pp. 78–82.

- Omer, U.; Farooq, M.S.; Abid, A. Cognitive Learning Analytics Using Assessment Data and Concept Map: A Framework-Based Approach for Sustainability of Programming Courses. Sustainability 2020, 12, 6990.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).