Facial Emotion Recognition Analysis Based on Age-Biased Data

Abstract

1. Introduction

- LoBue et al. conducted an emotion classification study consisting only of kids under the age of 13. They organized the kids into groups by race and studied whether the kids' race and ethnicity affected the accuracy with which their emotional expressions were identified. The kids' emotions were identified with an average accuracy of 66%. The average rate of accurate responses for each of the seven categories of facial expressions showed no significant differences or interactions due to race and ethnicity [8].

- Gonçalves et al. conducted an emotion classification study based on a dataset that separated adults from the elderly (aged 55 and over). Compared to the elderly, adults identified emotions such as anger, sadness, fear, surprise, and happiness with higher accuracy. The authors conclude that aging is related to emotion classification and can affect emotional identification [9].

- Kim et al. conducted a study on emotion classification by constructing datasets of adult (19–31), middle-aged (39–55), and elderly (69–80) participants. The accuracy, using Amazon Rekognition, was 89% for adults, 85% for the middle-aged, and 68% for the elderly. The accuracy for adults and the middle-aged differed by only about 4 percentage points, whereas the accuracy for the elderly was roughly 17 to 21 percentage points lower than that for the middle-aged and for adults [10].

- Sullivan et al. studied emotion classification in the elderly and found that emotions were difficult to classify when the elderly were consistently expressing anger, fear, and sadness. They therefore studied how the ways of expressing emotion differ between the elderly and adults [11].

- A study by Thomas et al. found that adults expressed fear and anger more accurately than kids, so fear and anger were classified with higher accuracy for adults than for kids [12].

- Hu and Ge studied real-time emotion recognition using the MobileNetV2 CNN. In this study, the model was trained so that the image background did not affect emotion classification. CK+, JAFFE, and FER2013 were used as training data. The emotion classification accuracy was 86.86% on CK+, 87.8% on JAFFE, and 66.28% on FER2013 [13].

- A study by Agrawal et al. used pre-processing steps and several CNN topologies to improve accuracy and training time. The MMA FACIAL EXPRESSION dataset was used as training data, with 35,000 images. The emotion classification accuracy was 69.2% [14].

- Said and Barr used a face-sensitive convolutional neural network (FS-CNN) for human emotion recognition. The proposed FS-CNN detects faces in high-resolution images and analyzes facial landmarks to predict facial expressions for emotion recognition. It was trained and evaluated on the UMD Faces dataset and achieved an emotion classification accuracy of 94% [15].
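
The age-group accuracy gaps quoted for the commercial classifier in [10] can be checked with a few lines of arithmetic. This is only a sketch: the three accuracies are the rounded figures given in the text, and the dictionary keys and the `gap_points` helper are hypothetical names chosen for illustration.

```python
# Accuracies (%) quoted in the text for the study in [10]; rounded values.
accuracy = {"adult": 89, "middle_aged": 85, "elderly": 68}


def gap_points(group_a: str, group_b: str) -> int:
    """Accuracy gap between two age groups, in percentage points."""
    return accuracy[group_a] - accuracy[group_b]


gaps = {
    "adult_vs_middle_aged": gap_points("adult", "middle_aged"),
    "middle_aged_vs_elderly": gap_points("middle_aged", "elderly"),
    "adult_vs_elderly": gap_points("adult", "elderly"),
}

for name, value in gaps.items():
    print(f"{name}: {value} percentage points")
```

Because the inputs are rounded, the gaps are approximate; they are differences in percentage points, not relative changes.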

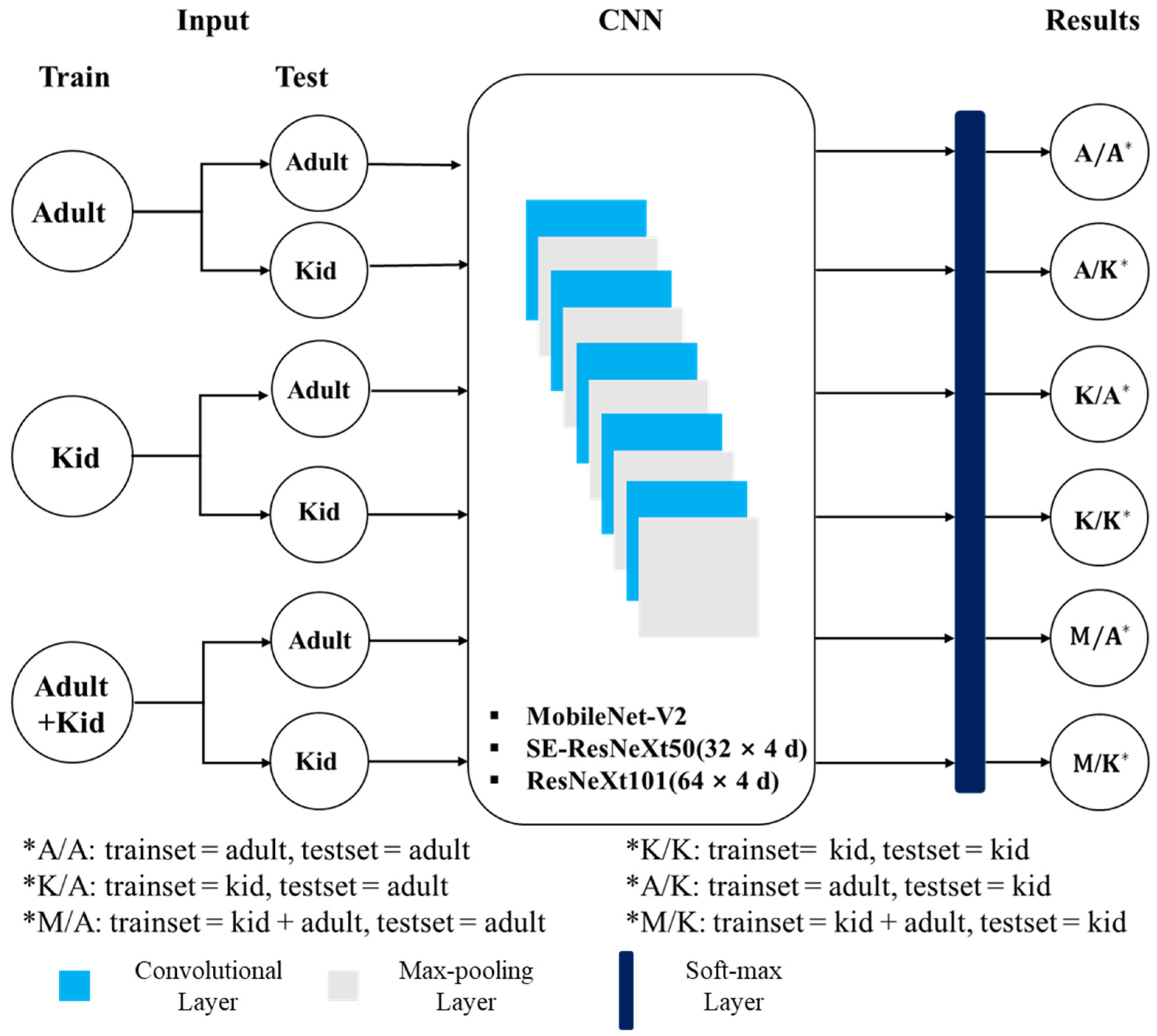

2. Experimental Method

3. Results and Discussion

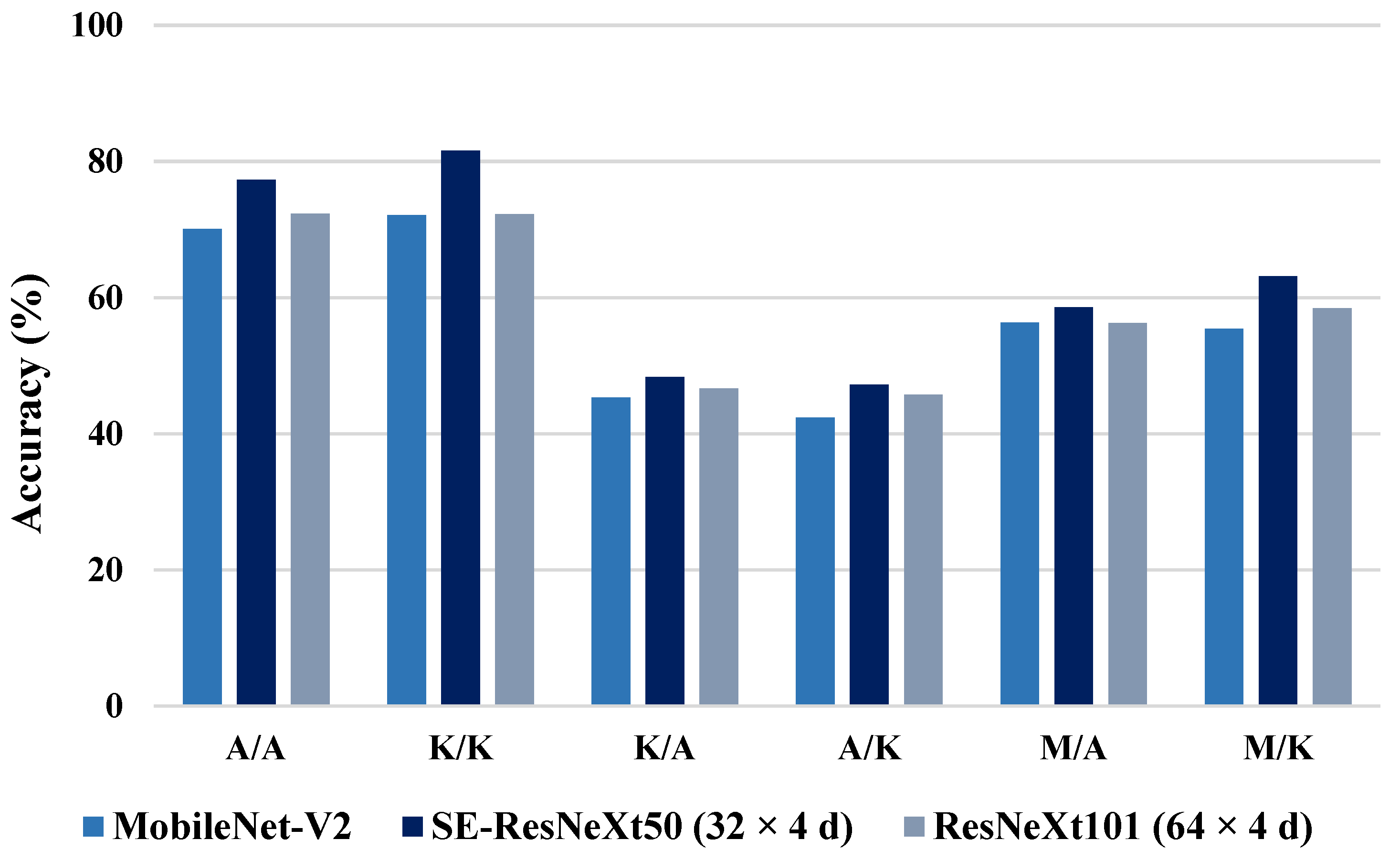

3.1. Comparison of the Average and Emotional Evaluation Metrics of the Architecture by Using the Learning Model

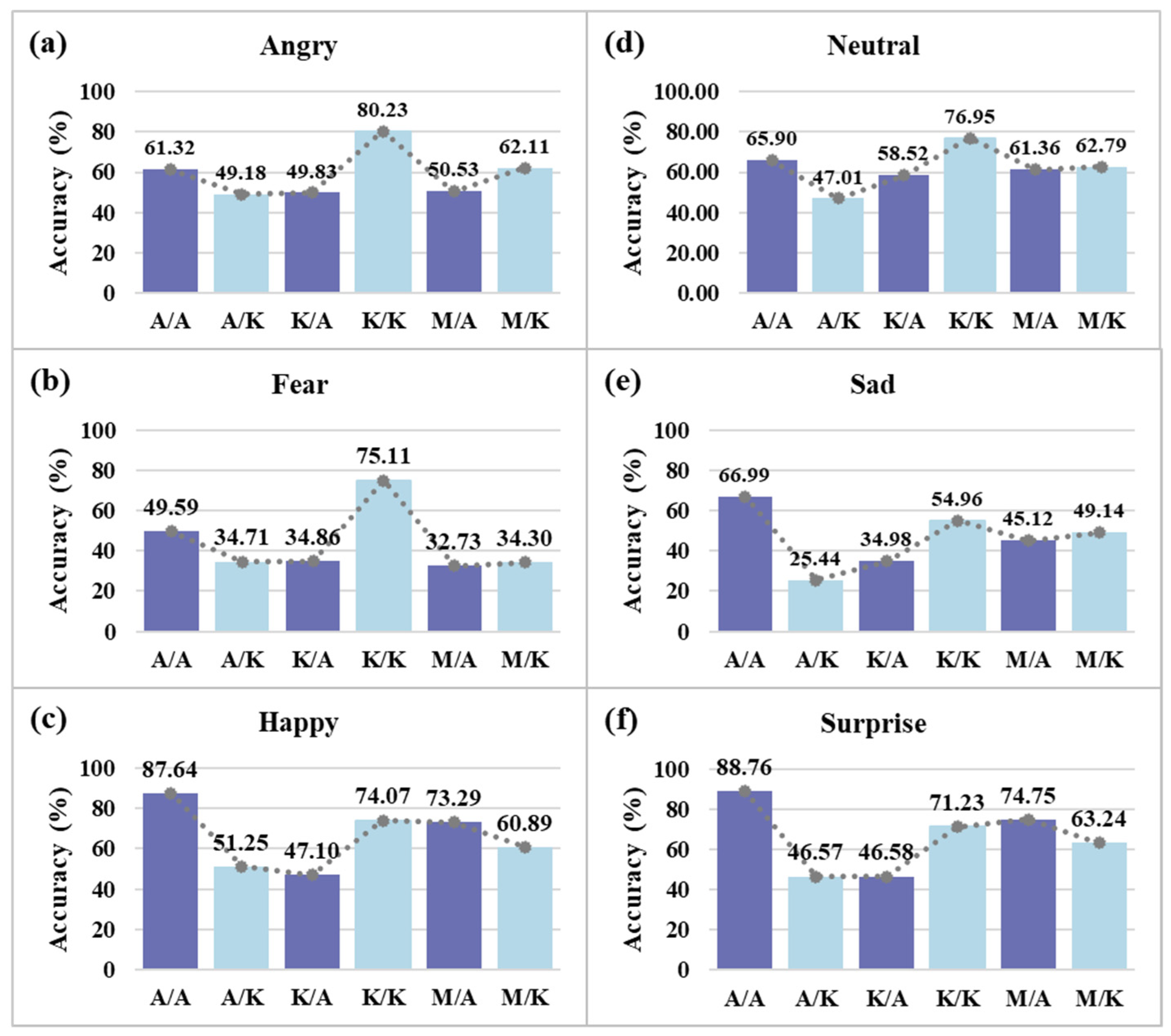

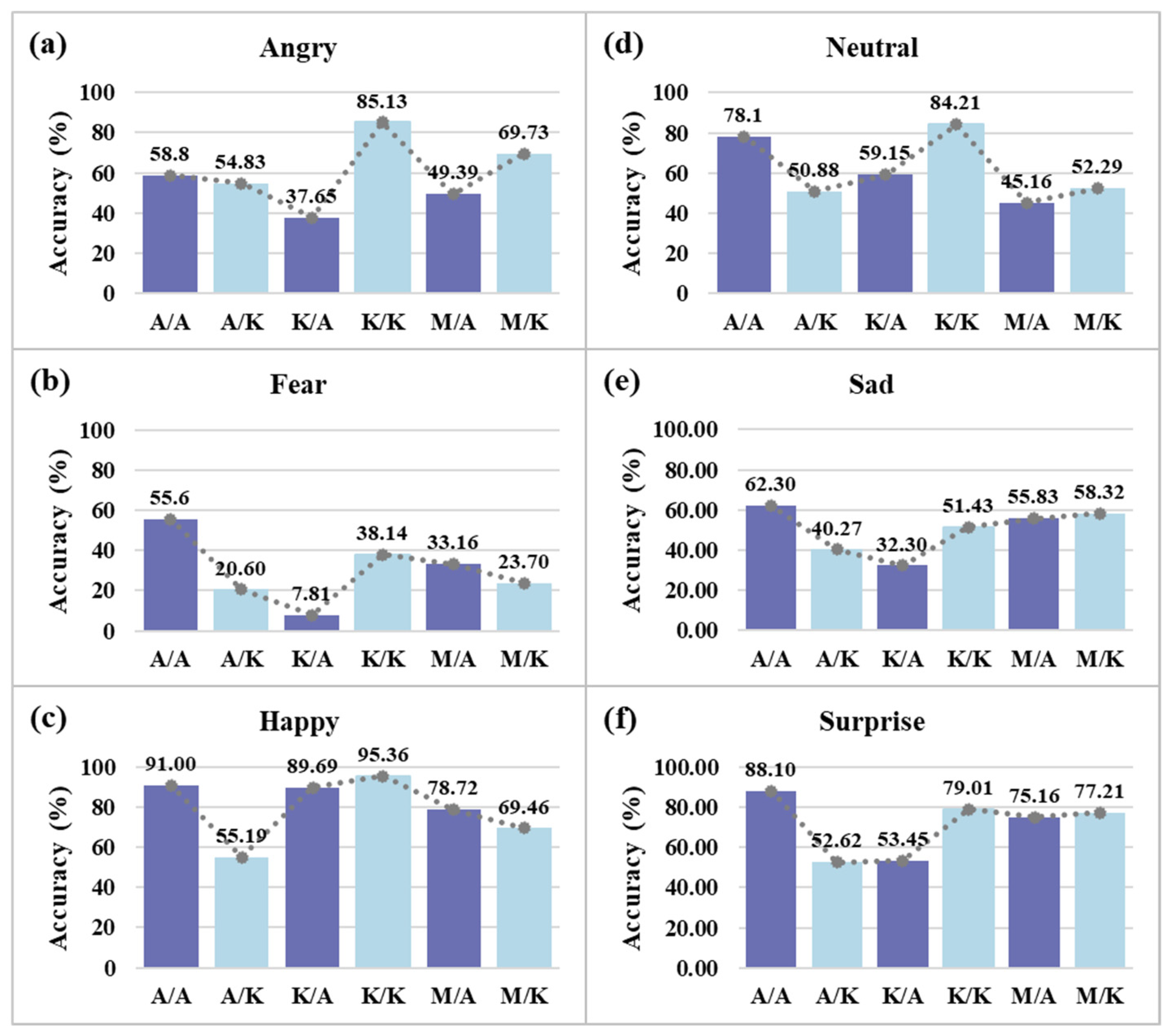

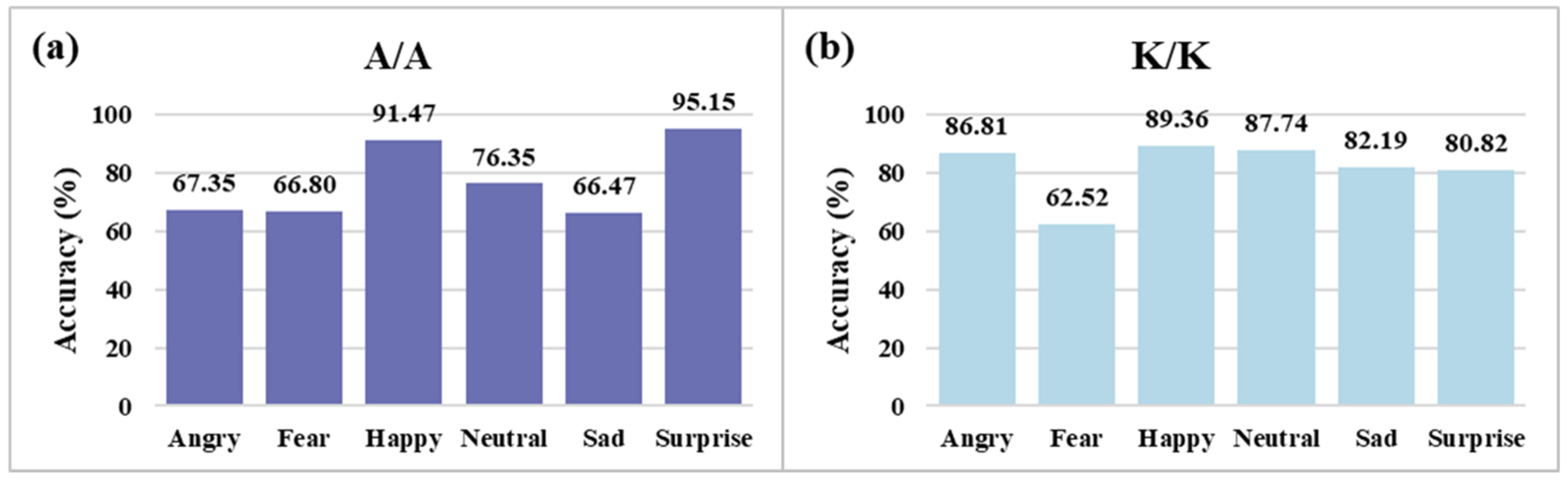

3.2. Comparison of the Emotional Accuracy in the Age-Specific Learning Model

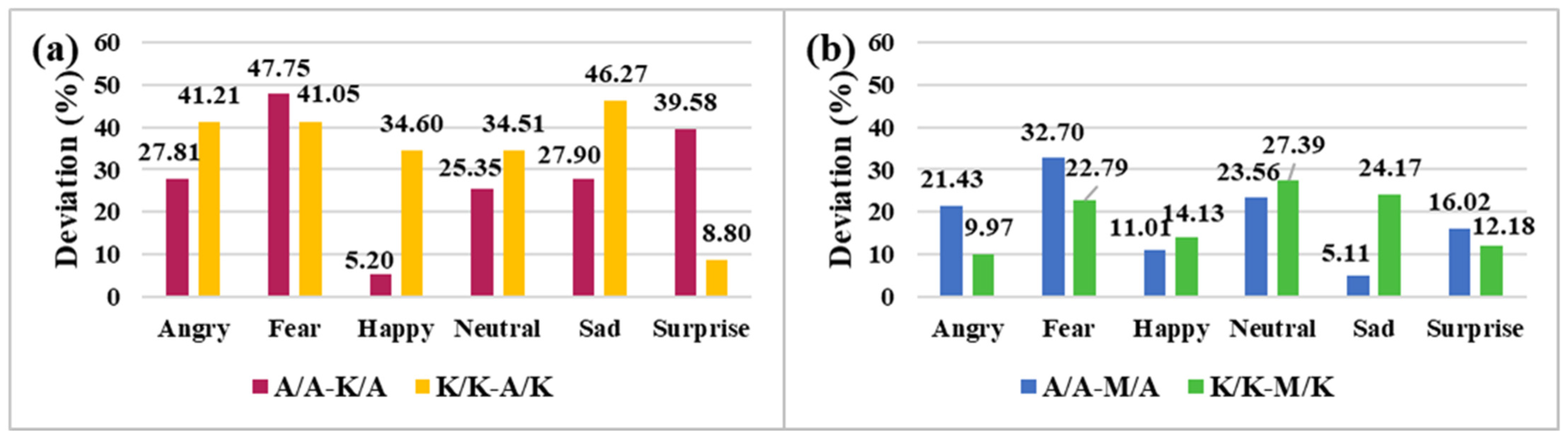

3.3. Comparison of Emotional Accuracy Deviation between the Learning Models

4. Conclusions

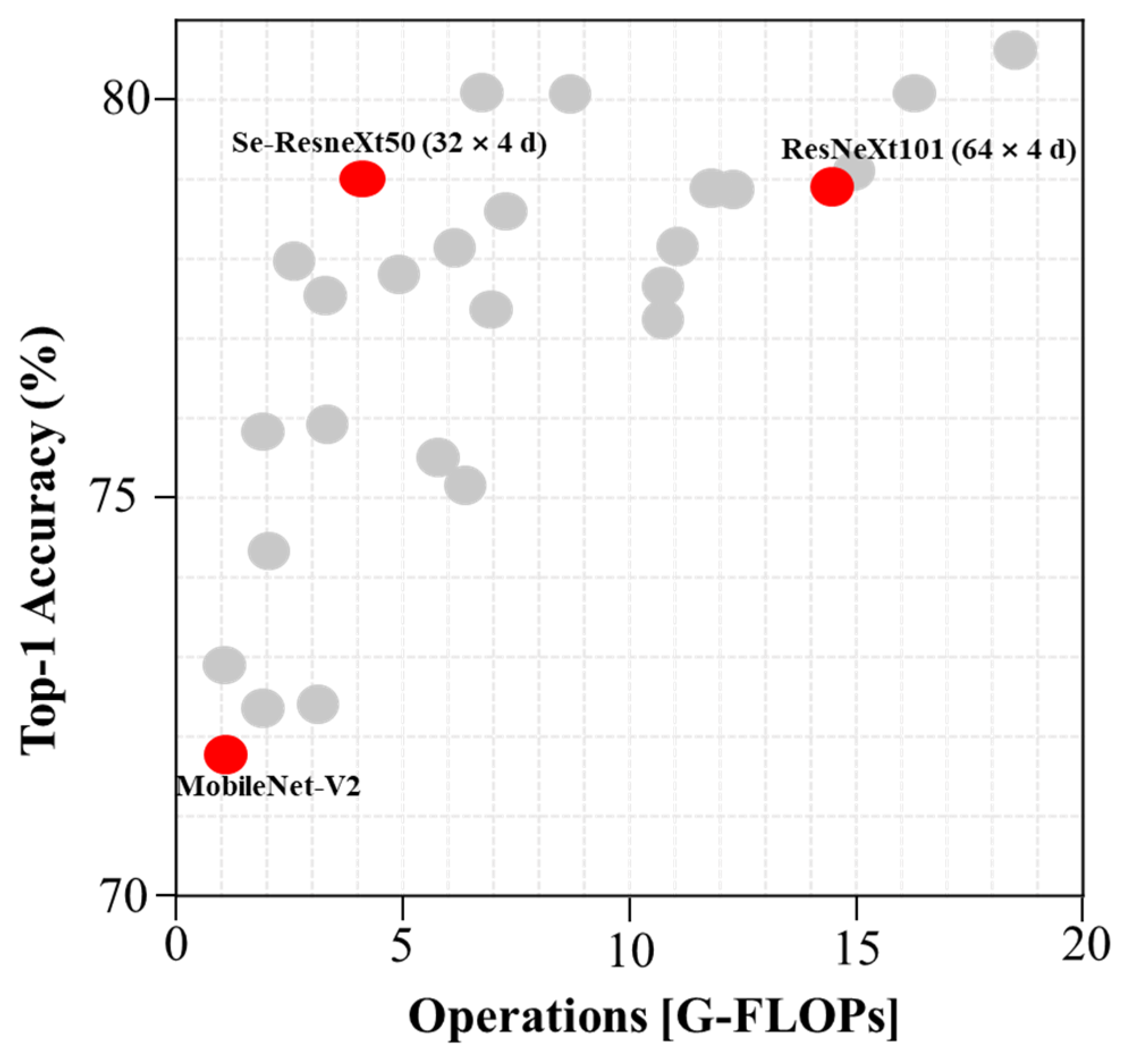

- Based on the CNN algorithm, emotion recognition accuracy was derived using three types of architecture, and the accuracy of the architecture was verified through comparison with the object recognition benchmark chart.

- When the models were trained on age-specific data, the average accuracy was 22% higher than that of the non-age-specific learning model.

- The adult learning model had difficulty recognizing anger, fear, and sadness. The kid learning model showed low accuracy only for fear.

- The age-specific learning model and the mixed learning model also achieved up to 32.70% improvement in the recognition accuracy of the fear emotion.
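
The observation about hard-to-recognize emotions can be illustrated with the per-emotion accuracies listed for SE-ResNeXt50 (32 × 4 d) in the per-emotion accuracy table of this article. The sketch below is not the authors' procedure: the 70% cut-off is a hypothetical threshold chosen only for illustration, and reading the A/A and K/K rows as the adult and kid learning models evaluated on same-age data is an assumption.

```python
EMOTIONS = ["Angry", "Fear", "Happy", "Neutral", "Sad", "Surprise"]

# Per-emotion accuracy (%) for SE-ResNeXt50 (32 × 4 d), copied from the
# per-emotion accuracy table (rows A/A and K/K).
ROWS = {
    "A/A": [67.35, 66.80, 91.47, 76.35, 66.47, 95.15],
    "K/K": [86.81, 62.52, 89.36, 87.74, 82.19, 80.82],
}

THRESHOLD = 70.0  # hypothetical cut-off, not taken from the article


def weak_emotions(values, threshold=THRESHOLD):
    """Return the emotions whose accuracy falls below the threshold."""
    return [name for name, acc in zip(EMOTIONS, values) if acc < threshold]


weak = {row: weak_emotions(values) for row, values in ROWS.items()}
for row, names in weak.items():
    print(row, names)
```

With this threshold, the A/A row flags Angry, Fear, and Sad, while the K/K row flags only Fear.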

References

1. Jayawickrama, N.; Ojala, R.; Pirhonen, J.; Kivekäs, K.; Tammi, K. Classification of Trash and Valuables with Machine Vision in Shared Cars. Appl. Sci. 2022, 12, 5695.

2. Kim, J.C.; Kim, M.H.; Suh, H.E.; Naseem, M.T.; Lee, C.S. Hybrid Approach for Facial Expression Recognition Using Convolutional Neural Networks and SVM. Appl. Sci. 2022, 12, 5493.

3. Jahangir, R.; Teh, Y.W.; Hanif, F.; Mujtaba, G. Deep learning approaches for speech emotion recognition: State of the art and research challenges. Multimed. Tools Appl. 2021, 80, 23745–23812.

4. Le, D.S.; Phan, H.H.; Hung, H.H.; Tran, V.A.; Nguyen, T.H.; Nguyen, D.Q. KFSENet: A Key Frame-Based Skeleton Feature Estimation and Action Recognition Network for Improved Robot Vision with Face and Emotion Recognition. Appl. Sci. 2022, 12, 5455.

5. El-Hasnony, I.M.; Elzeki, O.M.; Alshehri, A.; Salem, H. Multi-Label Active Learning-Based Machine Learning Model for Heart Disease Prediction. Sensors 2022, 22, 1184.

6. ElAraby, M.E.; Elzeki, O.M.; Shams, M.Y.; Mahmoud, A.; Salem, H. A novel Gray-Scale spatial exploitation learning Net for COVID-19 by crawling Internet resources. Biomed. Signal Process. Control 2022, 73, 103441.

7. Wang, Z.; Ho, S.B.; Cambria, E. A review of emotion sensing: Categorization models and algorithms. Multimed. Tools Appl. 2020, 79, 35553–35582.

8. LoBue, V.; Thrasher, C. The Child Affective Facial Expression (CAFE) set: Validity and reliability from untrained adults. Front. Psychol. 2015, 5, 1532.

9. Gonçalves, A.R.; Fernandes, C.; Pasion, R.; Ferreira-Santos, F.; Barbosa, F.; Marques-Teixeira, J. Effects of age on the identification of emotions in facial expressions: A meta-analysis. PeerJ 2018, 6, e5278.

10. Kim, E.; Bryant, D.A.; Srikanth, D.; Howard, A. Age bias in emotion detection: An analysis of facial emotion recognition performance on young, middle-aged, and older adults. In Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society, Virtual, 19–21 May 2021; pp. 638–644.

11. Sullivan, S.; Ruffman, T.; Hutton, S.B. Age differences in emotion recognition skills and the visual scanning of emotion faces. J. Gerontol. Ser. B Psychol. Sci. Soc. Sci. 2007, 62, P53–P60.

12. Thomas, L.A.; De Bellis, M.D.; Graham, R.; LaBar, K.S. Development of emotional facial recognition in late childhood and adolescence. Dev. Sci. 2007, 10, 547–558.

13. Hu, L.; Ge, Q. Automatic facial expression recognition based on MobileNetV2 in Real-time. J. Phys. Conf. Ser. 2020, 1549, 2.

14. Agrawal, I.; Kumar, A.; Swathi, D.; Yashwanthi, V.; Hegde, R. Emotion Recognition from Facial Expression using CNN. In Proceedings of the 2021 IEEE 9th Region 10 Humanitarian Technology Conference (R10-HTC), Bangalore, India, 30 September–2 October 2021; pp. 1–6.

15. Said, Y.; Barr, M. Human emotion recognition based on facial expressions via deep learning on high-resolution images. Multimed. Tools Appl. 2021, 80, 25241–25253.

16. Nook, E.C.; Somerville, L.H. Emotion concept development from childhood to adulthood. In Emotion in the Mind and Body; Neta, M., Haas, I., Eds.; Nebraska Symposium on Motivation; Springer: Cham, Switzerland, 2019; Volume 66, pp. 11–41.

17. Goodfellow, I.J.; Erhan, D.; Carrier, P.L.; Courville, A.; Mirza, M. Challenges in representation learning: A report on three machine learning contests. In International Conference on Neural Information Processing; Springer: Berlin/Heidelberg, Germany, 2013; pp. 117–124.

18. MMA FACIAL EXPRESSION | Kaggle. Available online: https://www.kaggle.com/mahmoudima/mma-facial-expression (accessed on 22 February 2022).

19. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–21 June 2018; pp. 4510–4520.

20. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–21 June 2018; pp. 7132–7141.

21. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

22. Bianco, S.; Cadene, R.; Celona, L.; Napoletano, P. Benchmark analysis of representative deep neural network architectures. IEEE Access 2018, 6, 64270–64277.

23. Zhang, Z.; Sabuncu, M. Generalized cross-entropy loss for training deep neural networks with noisy labels. Adv. Neural Inf. Process. Syst. 2018, 31.

24. Liu, L.; Jiang, H.; He, P.; Chen, W.; Liu, X.; Gao, J.; Han, J. On the variance of the adaptive learning rate and beyond. arXiv 2019, arXiv:1908.03265.

25. Loshchilov, I.; Hutter, F. SGDR: Stochastic gradient descent with warm restarts. arXiv 2016, arXiv:1608.03983.

26. Daskalaki, S.; Kopanas, I.; Avouris, N. Evaluation of classifiers for an uneven class distribution problem. Appl. Artif. Intell. 2006, 20, 381–417.

27. Giannopoulos, P.; Perikos, I.; Hatzilygeroudis, I. Deep learning approaches for facial emotion recognition: A case study on FER-2013. Smart Innov. Syst. Tech. 2018, 85, 1–16.

28. Shi, J.; Zhu, S.; Liang, Z. Learning to Amend Facial Expression Representation via De-albino and Affinity. arXiv 2021, arXiv:2103.1018.

29. Pramerdorfer, C.; Kampel, M. Facial expression recognition using convolutional neural networks: State of the art. arXiv 2016, arXiv:1612.02903.

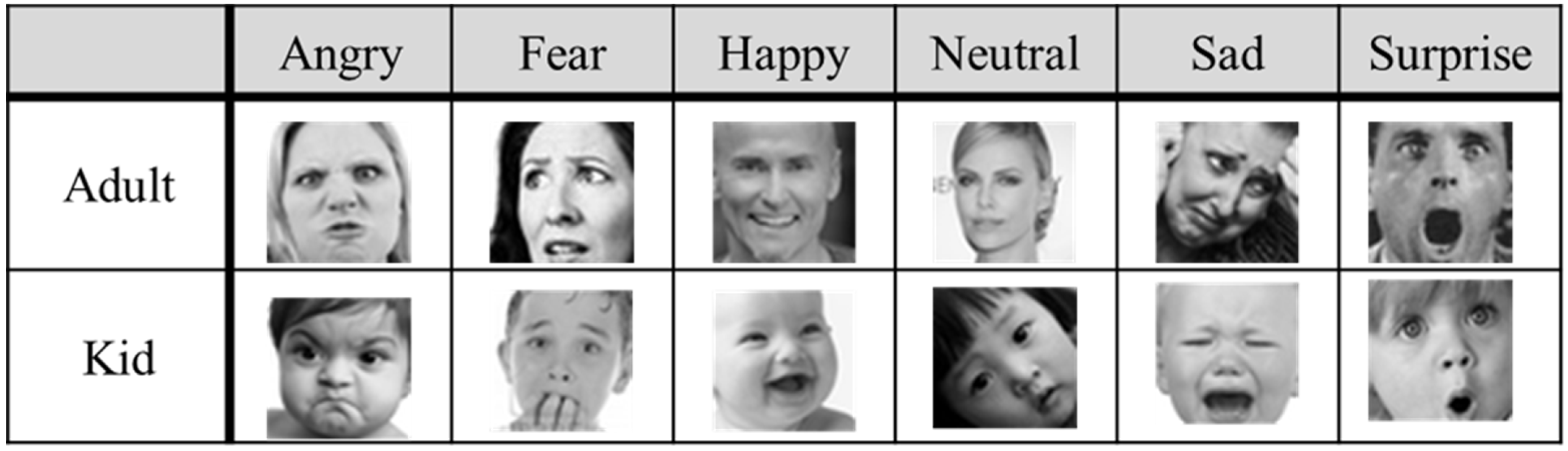

| Dataset | Adult Type, Kid Type, Adult + Kid Type |
|---|---|
| Ages | Adult type (≥14), Kid type (≤13) |
| Number of images in the dataset | 50,000 (Train: 45,000, Test: 5,000) |
| Type of emotion | Angry, Fear, Happy, Neutral, Sad, Surprise |

| Stage | Parameter | MobileNet-V2 | SE-ResNeXt50 (32 × 4 d) | ResNeXt101 (64 × 4 d) |
|---|---|---|---|---|
| Pre-Processing | Input dimension (pixel) | 3 × 224 × 224 | 3 × 224 × 224 | 3 × 224 × 224 |
| Pre-Processing | Batch size | 32 | 32 | 12 |
| Learning | Optimization algorithm | RAdam | RAdam | RAdam |
| Learning | Learning rate (s) | 1 × 103 | 1 × 103 | 1 × 103 |
| Learning | Learning scheduler | Custom | Custom | Custom |
| Learning | Epochs | 50 | 50 | 50 |
| Learning | Shuffle | True | True | True |
| Learning | Elapsed time (min) | 235 | 955 | 1563 |
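
The training cost implied by these settings can be estimated with simple arithmetic. The sketch below assumes that the elapsed time covers all 50 epochs, that each run iterates over the 45,000 training images listed in the dataset table, and that the last partial batch is kept; none of these points is stated explicitly, and the dictionary layout is a hypothetical convenience.

```python
import math

TRAIN_IMAGES = 45_000  # training split size from the dataset table
EPOCHS = 50

# Batch size and elapsed time (min) per architecture, from the table above.
CONFIG = {
    "MobileNet-V2": {"batch_size": 32, "elapsed_min": 235},
    "SE-ResNeXt50 (32 × 4 d)": {"batch_size": 32, "elapsed_min": 955},
    "ResNeXt101 (64 × 4 d)": {"batch_size": 12, "elapsed_min": 1563},
}


def steps_per_epoch(n_images: int, batch_size: int) -> int:
    """Number of optimizer steps per epoch, keeping the last partial batch."""
    return math.ceil(n_images / batch_size)


summary = {
    name: {
        "steps_per_epoch": steps_per_epoch(TRAIN_IMAGES, cfg["batch_size"]),
        "minutes_per_epoch": cfg["elapsed_min"] / EPOCHS,
    }
    for name, cfg in CONFIG.items()
}

for name, row in summary.items():
    print(f"{name}: {row['steps_per_epoch']} steps/epoch, "
          f"{row['minutes_per_epoch']:.2f} min/epoch")
```

Under these assumptions, the smaller batch size of ResNeXt101 (64 × 4 d) means more steps per epoch, which is one plausible contributor to its longer training time alongside the larger network.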

| Metric | Dataset | MobileNet-V2 | SE-ResNeXt50 (32 × 4 d) | ResNeXt101 (64 × 4 d) |
|---|---|---|---|---|
| Accuracy (%) | A/A | 70.03 | 77.26 | 72.32 |
| Accuracy (%) | A/K | 42.36 | 47.17 | 45.73 |
| Accuracy (%) | K/A | 45.31 | 48.33 | 46.67 |
| Accuracy (%) | K/K | 72.09 | 81.57 | 72.21 |
| Accuracy (%) | M/A | 56.30 | 58.96 | 56.24 |
| Accuracy (%) | M/K | 55.41 | 63.13 | 58.45 |
| Recall (%) | A/A | 72.20 | 75.25 | 70.21 |
| Recall (%) | A/K | 38.43 | 45.09 | 38.60 |
| Recall (%) | K/A | 41.78 | 47.07 | 45.14 |
| Recall (%) | K/K | 69.81 | 78.61 | 62.40 |
| Recall (%) | M/A | 56.37 | 62.80 | 59.60 |
| Recall (%) | M/K | 51.47 | 55.36 | 53.31 |
| Precision (%) | A/A | 72.62 | 75.48 | 71.52 |
| Precision (%) | A/K | 38.50 | 46.96 | 40.61 |
| Precision (%) | K/A | 41.78 | 47.63 | 45.04 |
| Precision (%) | K/K | 69.81 | 79.97 | 63.85 |
| Precision (%) | M/A | 56.37 | 64.81 | 60.63 |
| Precision (%) | M/K | 51.47 | 58.30 | 53.75 |
| F1 score (%) | A/A | 72.33 | 74.78 | 70.27 |
| F1 score (%) | A/K | 37.97 | 44.33 | 38.75 |
| F1 score (%) | K/A | 42.19 | 51.23 | 42.40 |
| F1 score (%) | K/K | 68.04 | 79.07 | 61.78 |
| F1 score (%) | M/A | 56.23 | 63.35 | 59.51 |
| F1 score (%) | M/K | 49.97 | 55.93 | 53.41 |
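
For readers unfamiliar with the four metrics in this table, the sketch below computes accuracy and macro-averaged recall, precision, and F1 score from a multi-class confusion matrix. It is a minimal illustration, not the authors' evaluation code: the 3 × 3 confusion matrix is made-up toy data, and macro averaging (an unweighted mean over classes) is an assumption about how the per-class values were combined.

```python
def macro_metrics(confusion):
    """Accuracy and macro-averaged precision, recall, and F1.

    confusion[i][j] is the number of samples whose true class is i
    and whose predicted class is j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n))

    precisions, recalls, f1_scores = [], [], []
    for k in range(n):
        true_pos = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])                          # row sum
        precision = true_pos / predicted_k if predicted_k else 0.0
        recall = true_pos / actual_k if actual_k else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1_scores.append(f1)

    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1_scores) / n,
    }


# Toy 3-class confusion matrix (hypothetical data, for illustration only).
toy_confusion = [
    [8, 1, 1],
    [2, 6, 2],
    [0, 2, 8],
]

metrics = macro_metrics(toy_confusion)
for name, value in metrics.items():
    print(f"{name}: {100 * value:.2f}%")
```

Note that the macro F1 score is the mean of the per-class F1 scores, so it need not equal the harmonic mean of the macro precision and macro recall.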

| Architecture | Dataset | Angry (%) | Fear (%) | Happy (%) | Neutral (%) | Sad (%) | Surprise (%) | AVG (%) |
|---|---|---|---|---|---|---|---|---|
| MobileNet-V2 | A/A | 81.17 | 42.71 | 77.83 | 72.30 | 55.43 | 90.74 | 70.03 |
| MobileNet-V2 | A/K | 49.18 | 34.71 | 51.25 | 47.01 | 25.44 | 46.57 | 42.36 |
| MobileNet-V2 | K/A | 49.83 | 34.86 | 47.10 | 58.52 | 34.98 | 46.58 | 45.31 |
| MobileNet-V2 | K/K | 80.23 | 75.11 | 74.07 | 76.95 | 54.96 | 71.23 | 72.09 |
| MobileNet-V2 | M/A | 50.53 | 32.73 | 73.29 | 61.36 | 45.12 | 74.75 | 56.30 |
| MobileNet-V2 | M/K | 62.11 | 34.30 | 60.89 | 62.79 | 49.14 | 63.24 | 55.41 |
| SE-ResNeXt50 (32 × 4 d) | A/A | 67.35 | 66.80 | 91.47 | 76.35 | 66.47 | 95.15 | 77.26 |
| SE-ResNeXt50 (32 × 4 d) | A/K | 45.60 | 21.47 | 54.76 | 53.23 | 35.92 | 72.02 | 47.17 |
| SE-ResNeXt50 (32 × 4 d) | K/A | 44.18 | 10.44 | 81.63 | 42.43 | 45.61 | 65.70 | 48.33 |
| SE-ResNeXt50 (32 × 4 d) | K/K | 86.81 | 62.52 | 89.36 | 87.74 | 82.19 | 80.82 | 81.57 |
| SE-ResNeXt50 (32 × 4 d) | M/A | 72.02 | 52.18 | 59.73 | 54.11 | 47.58 | 68.15 | 58.96 |
| SE-ResNeXt50 (32 × 4 d) | M/K | 76.84 | 39.73 | 75.23 | 60.35 | 58.02 | 68.64 | 63.13 |
| ResNeXt101 (64 × 4 d) | A/A | 58.80 | 55.60 | 91.00 | 78.10 | 62.30 | 88.10 | 72.32 |
| ResNeXt101 (64 × 4 d) | A/K | 54.83 | 20.60 | 55.19 | 50.88 | 40.27 | 52.62 | 45.73 |
| ResNeXt101 (64 × 4 d) | K/A | 37.65 | 7.81 | 89.69 | 59.15 | 32.30 | 53.45 | 46.67 |
| ResNeXt101 (64 × 4 d) | K/K | 85.13 | 38.14 | 95.36 | 84.21 | 51.43 | 79.01 | 72.21 |
| ResNeXt101 (64 × 4 d) | M/A | 49.39 | 33.16 | 78.72 | 45.16 | 55.83 | 75.16 | 56.24 |
| ResNeXt101 (64 × 4 d) | M/K | 69.73 | 23.70 | 69.46 | 52.29 | 58.32 | 77.21 | 58.45 |
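
The AVG column appears to be the unweighted mean of the six per-emotion accuracies. The sketch below checks this for the two MobileNet-V2 rows A/A and A/K and computes the accuracy drop between them; that AVG is an unweighted mean is an inference from the numbers rather than something the table states, and the variable names are hypothetical.

```python
from statistics import mean

# Per-emotion accuracy (%) for MobileNet-V2, copied from the table above
# in the order Angry, Fear, Happy, Neutral, Sad, Surprise.
per_emotion = {
    "A/A": [81.17, 42.71, 77.83, 72.30, 55.43, 90.74],
    "A/K": [49.18, 34.71, 51.25, 47.01, 25.44, 46.57],
}
reported_avg = {"A/A": 70.03, "A/K": 42.36}  # AVG column of the same rows

computed_avg = {row: round(mean(values), 2) for row, values in per_emotion.items()}

# Difference between the two rows, in percentage points.
drop = round(computed_avg["A/A"] - computed_avg["A/K"], 2)

for row in per_emotion:
    print(f"{row}: computed {computed_avg[row]:.2f}, reported {reported_avg[row]:.2f}")
print(f"A/A minus A/K: {drop:.2f} percentage points")
```

The same check can be repeated for the other rows by adding them to `per_emotion` and `reported_avg`.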

| Method | Accuracy (%) |
|---|---|
| GoogleNet [27] | 65.20 |
| MobileNet-V2 [13] | 66.28 |
| ARM (ResNet-18) [28] | 71.38 |
| Inception [29] | 71.60 |
| ResNet [29] | 72.40 |
| VGG [29] | 72.70 |
| Our model (SE-ResNeXt50 (32 × 4 d)) | 79.42 |

| Architecture | Dataset | Accuracy (%) | Recall (%) | Precision (%) | F1 score (%) |
|---|---|---|---|---|---|
| MobileNet-V2 | Age-Specific | 71.06 | 71.00 | 70.84 | 70.19 |
| MobileNet-V2 | FER2013 | 59.70 | 58.19 | 56.88 | 57.25 |
| MobileNet-V2 | MMA | 54.65 | 54.40 | 47.92 | 48.86 |
| SE-ResNeXt50 (32 × 4 d) | Age-Specific | 79.42 | 76.93 | 77.73 | 76.93 |
| SE-ResNeXt50 (32 × 4 d) | FER2013 | 62.29 | 59.86 | 61.02 | 59.43 |
| SE-ResNeXt50 (32 × 4 d) | MMA | 62.87 | 52.66 | 57.41 | 53.98 |
| ResNeXt101 (64 × 4 d) | Age-Specific | 72.27 | 66.31 | 67.69 | 66.03 |
| ResNeXt101 (64 × 4 d) | FER2013 | 58.33 | 56.54 | 55.12 | 55.38 |
| ResNeXt101 (64 × 4 d) | MMA | 60.76 | 49.59 | 56.14 | 51.08 |
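
The Age-Specific accuracies in this table appear to coincide, up to rounding, with the mean of the A/A and K/K accuracies reported for each architecture in the metrics table. The sketch below checks that relationship and computes the accuracy margin over FER2013 and MMA; the link between the two tables is an observation from the numbers, not something the source states, and the 0.01 tolerance is a hypothetical allowance for rounding.

```python
# (A/A, K/K) accuracy (%) per architecture, from the metrics table.
same_age = {
    "MobileNet-V2": (70.03, 72.09),
    "SE-ResNeXt50 (32 × 4 d)": (77.26, 81.57),
    "ResNeXt101 (64 × 4 d)": (72.32, 72.21),
}

# Accuracy (%) per training dataset, from the table above.
accuracy = {
    "MobileNet-V2": {"Age-Specific": 71.06, "FER2013": 59.70, "MMA": 54.65},
    "SE-ResNeXt50 (32 × 4 d)": {"Age-Specific": 79.42, "FER2013": 62.29, "MMA": 62.87},
    "ResNeXt101 (64 × 4 d)": {"Age-Specific": 72.27, "FER2013": 58.33, "MMA": 60.76},
}

TOLERANCE = 0.01  # hypothetical allowance for rounding to two decimals

consistent = {}
margins = {}
for arch, (adult_acc, kid_acc) in same_age.items():
    mean_same_age = (adult_acc + kid_acc) / 2
    age_specific = accuracy[arch]["Age-Specific"]
    consistent[arch] = abs(mean_same_age - age_specific) <= TOLERANCE
    # Margin of the age-specific result over each public dataset, in points.
    margins[arch] = {
        name: round(age_specific - accuracy[arch][name], 2)
        for name in ("FER2013", "MMA")
    }

for arch in same_age:
    print(arch, consistent[arch], margins[arch])
```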

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Park, H.; Shin, Y.; Song, K.; Yun, C.; Jang, D. Facial Emotion Recognition Analysis Based on Age-Biased Data. Appl. Sci. 2022, 12, 7992. https://doi.org/10.3390/app12167992