SuperFormer: Enhanced Multi-Speaker Speech Separation Network Combining Channel and Spatial Adaptability

Abstract

1. Introduction

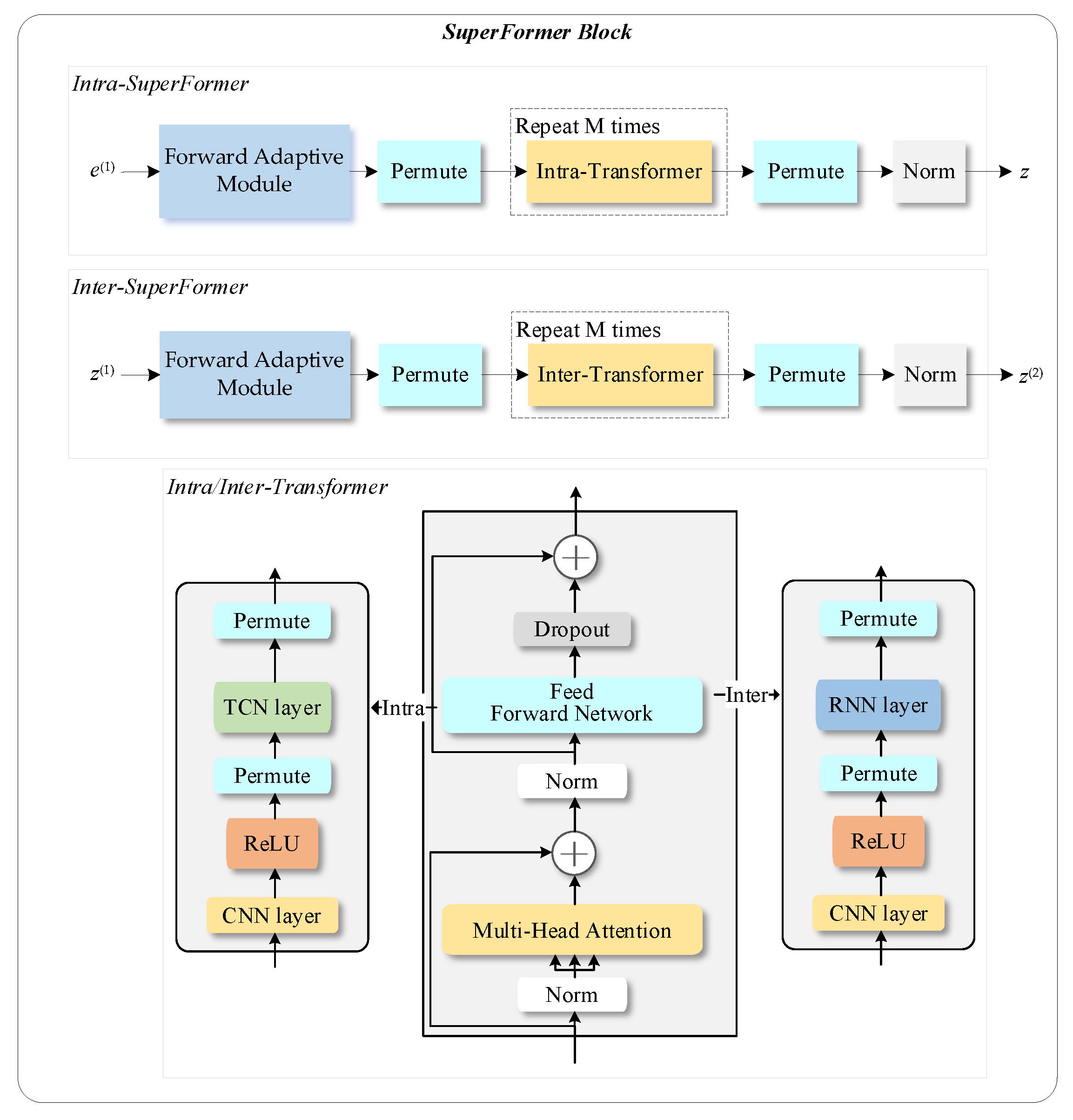

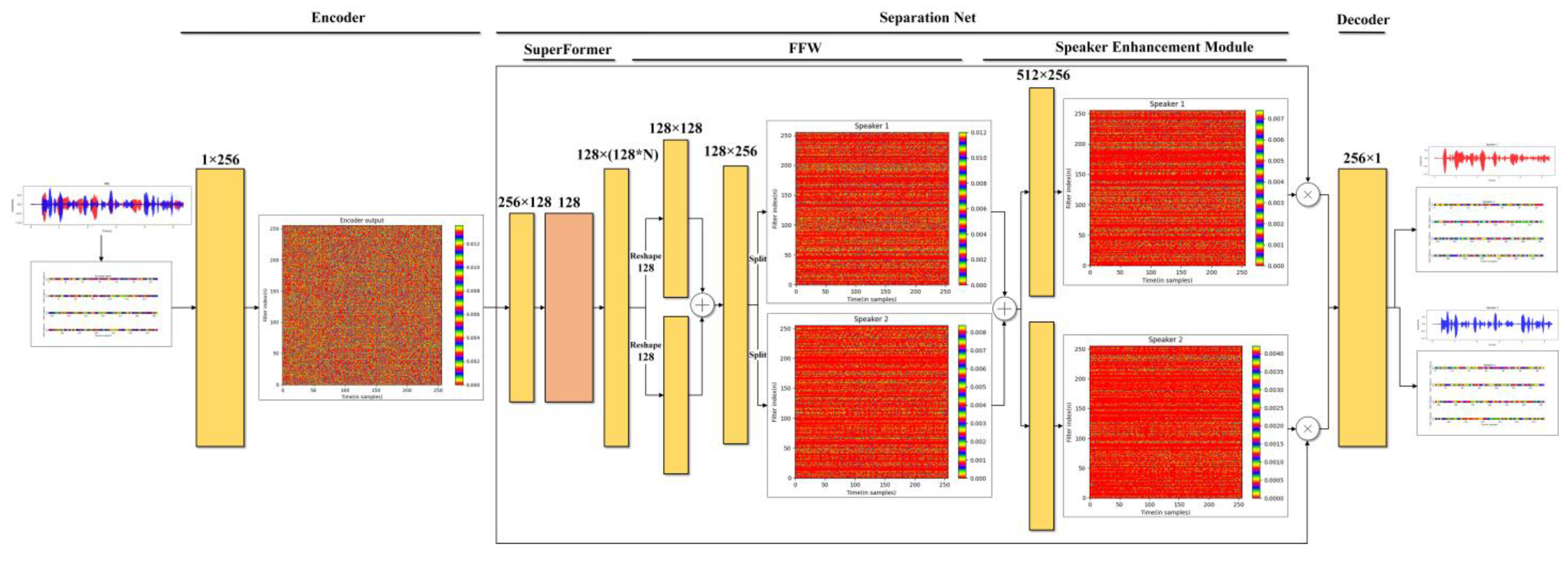

- In mixed speech, the features of the same speaker’s speech are autocorrelated and similar, whereas the features of different speakers suppress one another. To make better use of this autocorrelation, this paper designs a forward adaptive module that extracts channel and spatial adaptive information.

- By combining channel and spatial dependencies, intra-SuperFormer and inter-SuperFormer are designed on the basis of the Transformer module to fully learn the local and global features of mixed speech.

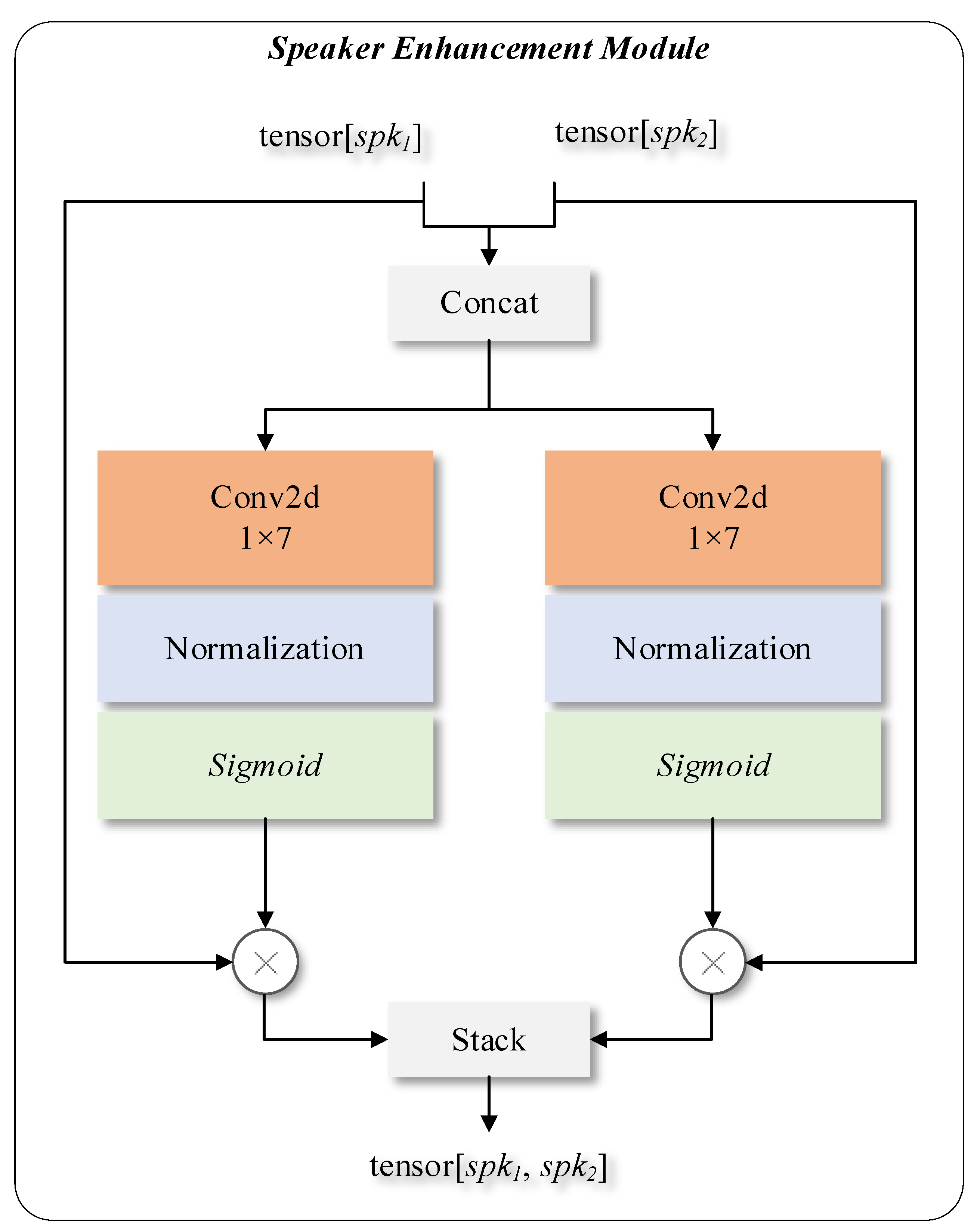

- A speaker enhancement module is added to the back end of SuperFormer to take full advantage of the heterogeneous information between different speakers. The speech separation network enhances the speaker’s speech according to these features to further improve the performance of the model.
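The intra/inter split described in the contributions above follows the dual-path idea used by DPRNN [3] and SepFormer [4]: a long feature sequence is cut into overlapping chunks, an intra module models each chunk (local features), and an inter module models the same position across chunks (global features). The following is a minimal sketch of that data layout only, not the authors' implementation. The function names `segment` and `inter_view`, the 50% overlap, and the zero padding are assumptions made for illustration.

```python
from typing import List


def segment(seq: List[float], chunk_size: int) -> List[List[float]]:
    """Split a sequence into 50%-overlapping chunks (assumed overlap).

    The tail is zero-padded so that every chunk has exactly chunk_size items.
    """
    if chunk_size < 2 or chunk_size % 2 != 0:
        raise ValueError("chunk_size must be an even number >= 2")
    hop = chunk_size // 2
    n = len(seq)
    if n > chunk_size:
        # Ceiling division: chunks needed to cover the frames after the first chunk.
        n_chunks = -(-(n - chunk_size) // hop) + 1
    else:
        n_chunks = 1
    padded_len = (n_chunks - 1) * hop + chunk_size
    padded = list(seq) + [0.0] * (padded_len - n)
    return [padded[i * hop: i * hop + chunk_size] for i in range(n_chunks)]


def inter_view(chunks: List[List[float]]) -> List[List[float]]:
    """Transpose the chunk matrix: row k holds position k of every chunk."""
    return [list(column) for column in zip(*chunks)]


if __name__ == "__main__":
    frames = [float(i) for i in range(1, 11)]
    chunks = segment(frames, chunk_size=4)
    # Intra path: each row is one chunk, i.e. a short local context.
    for row in chunks:
        print("intra:", row)
    # Inter path: each row strides across chunks, i.e. a long-range context.
    for row in inter_view(chunks):
        print("inter:", row)
```

In a real network each row of the intra view and each row of the inter view would be passed through a sequence model (here, a Transformer-based block); the sketch only shows which elements each path sees together.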

2. Methods

2.1. Problem Definition

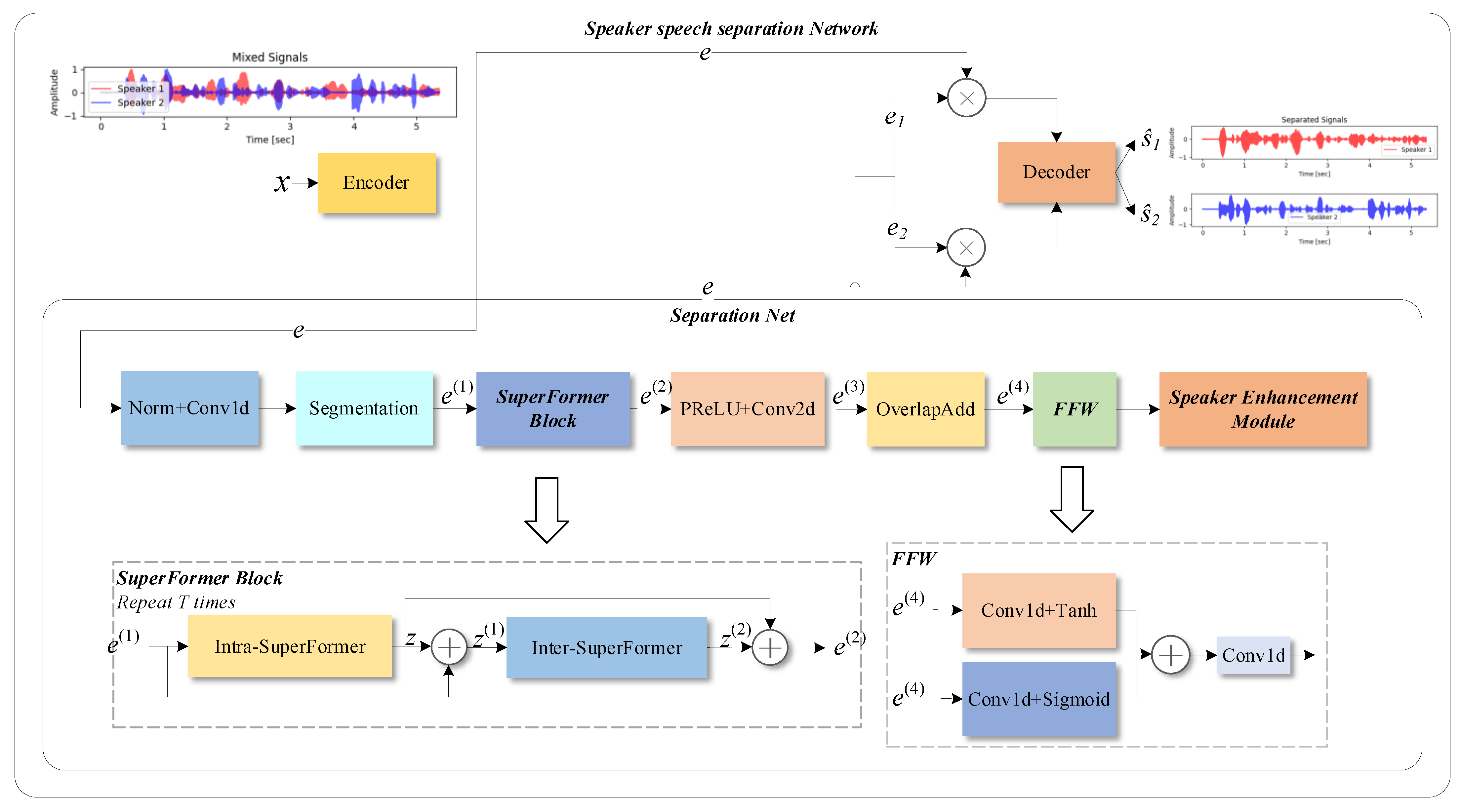

2.2. Model Design

2.2.1. Encoder

2.2.2. Separation Network

- 1.

- Separation Network

- 2.

- SuperFormer Block

- Forward Adaptive Module

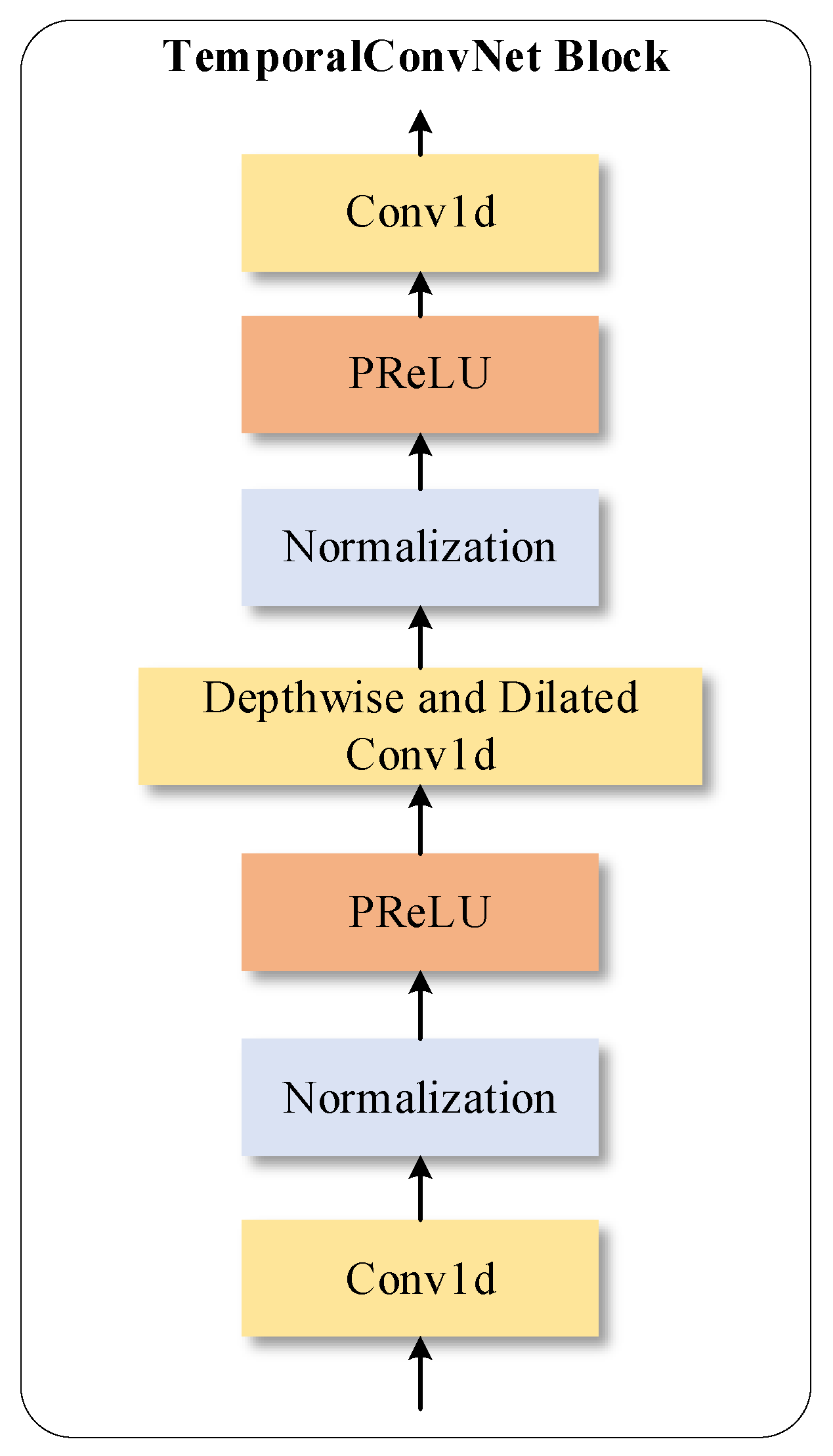

- Intra-Transformer and Inter-Transformer

- 3.

- Speaker Enhancement Module

2.2.3. Decoder

3. Experiments and Results

3.1. Dataset

3.2. Network Parameters and Experiment Configurations

3.2.1. Network Configurations

3.2.2. Experiment Configurations

3.3. Introduction to Previous Methods and Evaluation Metrics

- On the basis of the deep clustering framework, DPCL++ [12] introduced better regularization, a larger temporal context, and a deeper architecture. The DPCL model was also extended to allow, for the first time, end-to-end training for signal reconstruction quality.

- uPIT-BLSTM-ST [11] extended Permutation Invariant Training (PIT) with an utterance-level cost function, eliminating the additional permutation problem that frame-level PIT has to solve during inference.

- DANet [13] created attractor points by finding the centroids of the sources in the embedding space, and then used these centroids to determine the similarity between each bin in the mixture and each source, achieving end-to-end real-time separation for different numbers of mixed sources.

- ADANet [14] solved DANet’s mismatch between training and testing, providing a flexible solution and a generalized Expectation–Maximization strategy to determine the attractors assigned from the estimated speakers. This method maintains the flexibility of attractor formation at the utterance level and can be extended to variable signal conditions.

- Conv-TasNet [2] proposed a fully convolutional, time-domain, end-to-end speech separation network; it is a classic network structure with strong separation performance.

- DPRNN [3] showed that a deep structure of RNN layers organized in dual paths can better model extremely long sequences and thereby improve separation performance.

- SepFormer [4] proved the effectiveness of learning short-term and long-term dependencies using a multi-scale method with dual-path transformers.
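Two ideas recur in the methods listed above and in the comparison that follows: the scale-invariant signal-to-noise ratio (SI-SNR), whose improvement over the unprocessed mixture is reported as SI-SNRi, and utterance-level permutation invariant training, which scores a whole utterance under every speaker assignment and keeps the best one. The sketch below uses the standard SI-SNR definition and a brute-force permutation search. The function names, the `eps` stabilizer, and the toy signals are assumptions for illustration, and the exact metric code used in the experiments is not given in this section.

```python
import math
from itertools import permutations
from typing import List, Sequence, Tuple


def si_snr(estimate: Sequence[float], target: Sequence[float], eps: float = 1e-8) -> float:
    """Scale-invariant SNR in dB between an estimate and a reference signal."""
    n = len(target)
    e_mean = sum(estimate) / n
    t_mean = sum(target) / n
    e = [x - e_mean for x in estimate]  # remove DC offset
    t = [x - t_mean for x in target]
    # Project the estimate onto the target to get the "clean" component.
    scale = sum(a * b for a, b in zip(e, t)) / (sum(b * b for b in t) + eps)
    s_target = [scale * b for b in t]
    e_noise = [a - s for a, s in zip(e, s_target)]
    num = sum(s * s for s in s_target)
    den = sum(x * x for x in e_noise) + eps
    return 10.0 * math.log10(num / den + eps)


def si_snr_improvement(estimate, mixture, target) -> float:
    """SI-SNRi: gain of the estimate over the unprocessed mixture, in dB."""
    return si_snr(estimate, target) - si_snr(mixture, target)


def upit_si_snr(estimates: List[Sequence[float]],
                targets: List[Sequence[float]]) -> Tuple[float, Tuple[int, ...]]:
    """Utterance-level PIT: best mean SI-SNR over all speaker permutations.

    Returns (score, perm) where estimates[i] is matched to targets[perm[i]].
    """
    best_score, best_perm = -math.inf, ()
    for perm in permutations(range(len(targets))):
        score = sum(si_snr(estimates[i], targets[p])
                    for i, p in enumerate(perm)) / len(targets)
        if score > best_score:
            best_score, best_perm = score, perm
    return best_score, best_perm


if __name__ == "__main__":
    # Toy two-speaker example with hypothetical signals.
    t1 = [1.0, -1.0, 1.0, -1.0]
    t2 = [1.0, 1.0, -1.0, -1.0]
    est_a = [1.1, 0.9, -0.9, -1.1]   # noisy copy of t2
    est_b = [1.1, -0.9, 0.9, -1.1]   # noisy copy of t1
    score, perm = upit_si_snr([est_a, est_b], [t1, t2])
    print(f"best permutation {perm}, mean SI-SNR {score:.2f} dB")
```

Because the permutation is chosen once for the whole utterance, the output streams stay assigned to the same speakers over time, which is the property attributed to uPIT above. The brute-force search grows factorially with the number of speakers and is only practical for small speaker counts.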

3.4. Comparison with Previous Methods

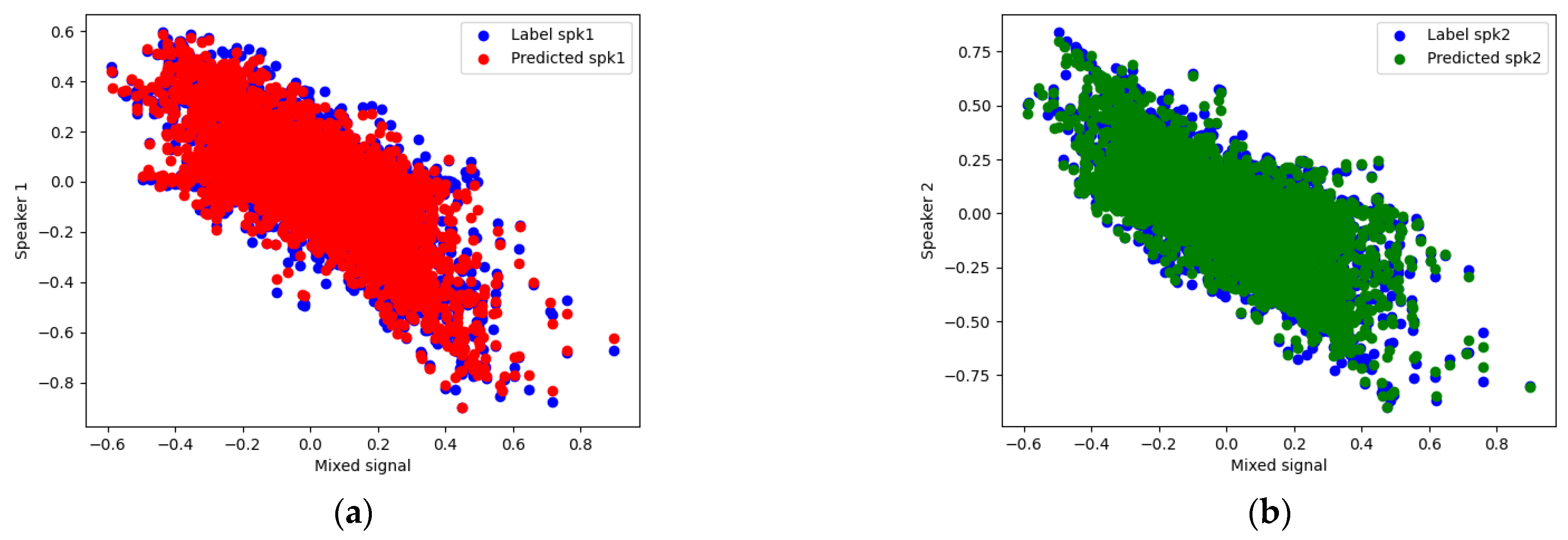

3.5. Visual Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Luo, Y.; Mesgarani, N. TaSNet: Time-Domain Audio Separation Network for Real-Time, Single-Channel Speech Separation. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 696–700.

- Luo, Y.; Mesgarani, N. Conv-TasNet: Surpassing Ideal Time–Frequency Magnitude Masking for Speech Separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 27, 1256–1266.

- Luo, Y.; Chen, Z.; Yoshioka, T. Dual-path RNN: Efficient long sequence modeling for time-domain single-channel speech separation. In Proceedings of the 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Virtual, 4–8 May 2020; pp. 46–50.

- Subakan, C.; Ravanelli, M.; Cornell, S.; Bronzi, M.; Zhong, J. Attention is All You Need in Speech Separation. In Proceedings of the 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021.

- Lea, C.; Vidal, R.; Reiter, A.; Hager, G.D. Temporal Convolutional Networks: A Unified Approach to Action Segmentation. In Proceedings of the Computer Vision–ECCV 2016 Workshops, Amsterdam, The Netherlands, 8–10, 15–16 October 2016; pp. 47–54.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

- Liu, Y.; Shao, Z.; Hoffmann, N. Global Attention Mechanism: Retain Information to Enhance Channel-Spatial Interactions. arXiv 2021, arXiv:2112.05561.

- Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. arXiv 2022, arXiv:2201.03545.

- Garofolo, J.S.; Graff, D.; Paul, D.; Pallett, D. CSR-I (WSJ0) Complete LDC93S6A. Web Download; Linguistic Data Consortium: Philadelphia, PA, USA, 1993.

- Hershey, J.R.; Chen, Z.; Le Roux, J.; Watanabe, S.J. Deep clustering: Discriminative embeddings for segmentation and separation. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 31–35.

- Kolbaek, M.; Yu, D.; Tan, Z.H.; Jensen, J. Multi-talker speech separation with utterance-level permutation invariant training of deep recurrent neural networks. IEEE/ACM Trans. Audio Speech Lang. Process. 2017, 25, 1901–1913.

- Isik, Y.; Roux, J.L.; Chen, Z.; Watanabe, S.; Hershey, J.R. Single-channel multi-speaker separation using deep clustering. In Proceedings of the Interspeech 2016, San Francisco, CA, USA, 8–12 September 2016; pp. 545–549.

- Chen, Z.; Luo, Y.; Mesgarani, N. Deep attractor network for single microphone speaker separation. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 246–250.

- Luo, Y.; Chen, Z.; Mesgarani, N. Speaker-independent Speech Separation with Deep Attractor Network. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 26, 787–796.

- Xu, C.L.; Rao, W.; Xiao, X.; Chng, E.S.; Li, H.Z. Single Channel Speech Separation with Constrained Utterance Level Permutation Invariant Training Using Grid LSTM. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018.

- Li, C.X.; Zhu, L.; Xu, S.; Gao, P.; Xu, B. CBLDNN-Based Speaker-Independent Speech Separation Via Generative Adversarial Training. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 711–715.

- Wang, Z.Q.; Roux, J.L.; Hershey, J.R. Alternative objective functions for deep clustering. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 686–690.

- Wang, Z.Q.; Le Roux, J.; Wang, D.L.; Hershey, J. End-to-End Speech Separation with Unfolded Iterative Phase Reconstruction. In Proceedings of the Interspeech, Hyderabad, India, 2–6 September 2018.

- Luo, Y.; Mesgarani, N. Real-time Single-channel Dereverberation and Separation with Time-domain Audio Separation Network. In Proceedings of the Interspeech, Hyderabad, India, 2–6 September 2018.

| Model | SI-SNRi (dB) | SDRi (dB) | #Param | Stride |
|---|---|---|---|---|
| uPIT-BLSTM-ST [11] | - | 10.0 | 92.7 M | - |
| cuPIT-Grid-RD [15] | - | 10.2 | 47.2 M | - |
| ADANet [14] | 10.4 | 10.8 | 9.1 M | - |
| DANet [13] | 10.5 | - | 9.1 M | - |
| DPCL++ [12] | 10.8 | - | 13.6 M | - |
| CBLDNN-GAT [16] | - | 11.0 | 39.5 M | - |
| TasNet [1] | 10.8 | 11.1 | n.a. | 20 |
| Chimera++ [17] | 11.5 | 12.0 | 32.9 M | - |
| WA-MISI-5 [18] | 12.6 | 13.1 | 32.9 M | - |
| BLSTM-TasNet [19] | 13.2 | 13.6 | 23.6 M | - |
| Conv-TasNet [2] | 15.3 | 15.6 | 5.1 M | 10 |
| SuperFormer | 15.2 | 15.3 | 8.1 M | 4 |
| +FA | 16.8 | 16.9 | 10.6 M | 4 |
| +FA+SE | 17.6 | 17.8 | 12.8 M | 4 |

| Dataset | Metric | DANet-Kmeans | DANet-Fixed | ADANet-6-do | Conv-TasNet | SuperFormer | Clean |
|---|---|---|---|---|---|---|---|
| WSJ0-2mix | PESQ | 2.64 | 2.57 | 2.82 | 3.24 | 3.43 | 4.5 |

| Dataset | SuperFormer | | Conv-TasNet-gLN | |
|---|---|---|---|---|
| | STOI | MOS-LQO | MOS | Clean |
| WSJ0-2mix | 0.97 | 3.45 | 4.03 | 4.23 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jiang, Y.; Qiu, Y.; Shen, X.; Sun, C.; Liu, H. SuperFormer: Enhanced Multi-Speaker Speech Separation Network Combining Channel and Spatial Adaptability. Appl. Sci. 2022, 12, 7650. https://doi.org/10.3390/app12157650
