RGB-D-Based Robotic Grasping in Fusion Application Environments

Abstract

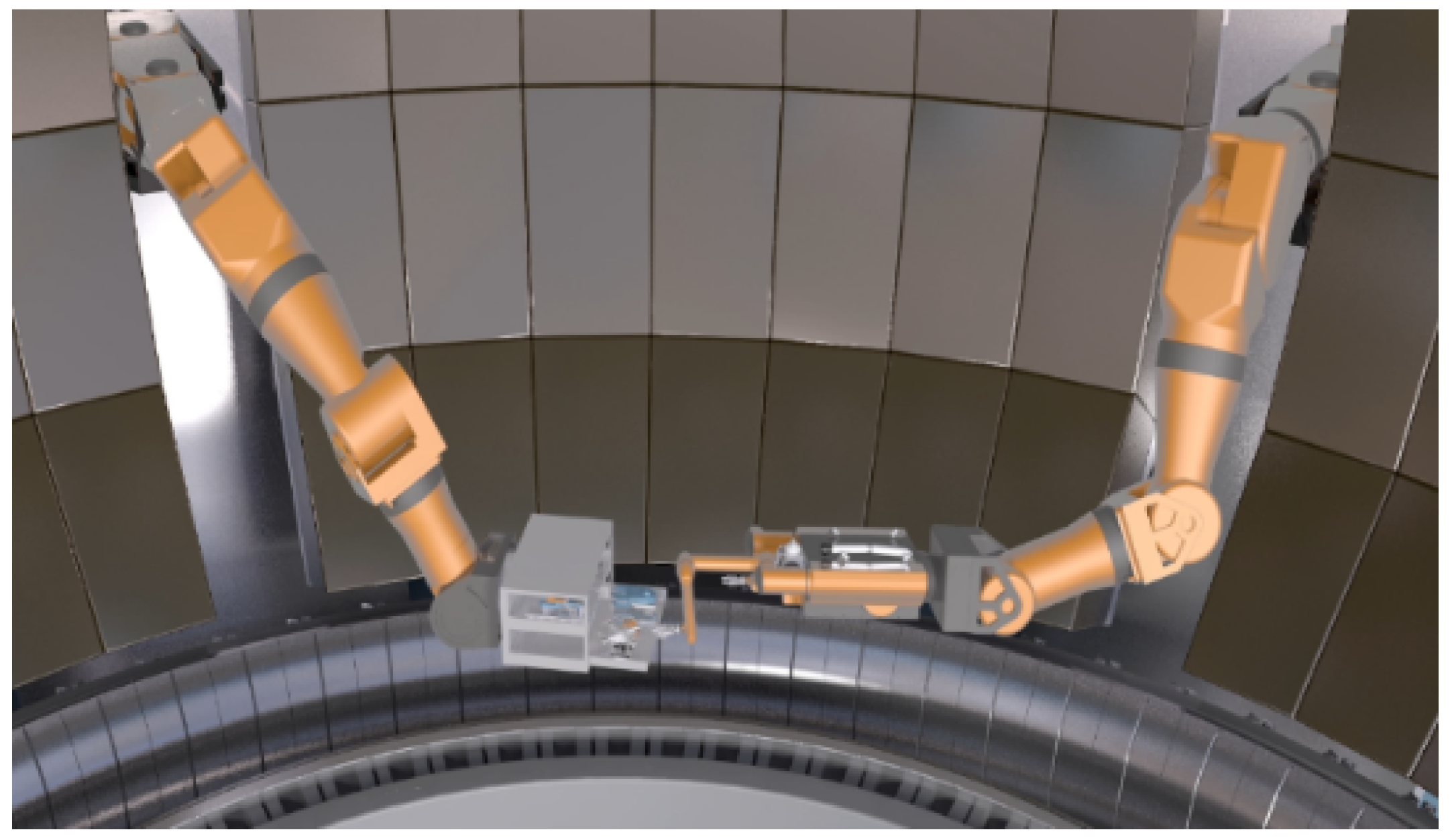

1. Introduction

- Using the generated data for training and transferring the results from the virtual environment to the real one;

- Extending the task from grasping individual objects to grasping clustered objects.

2. Materials and Methods

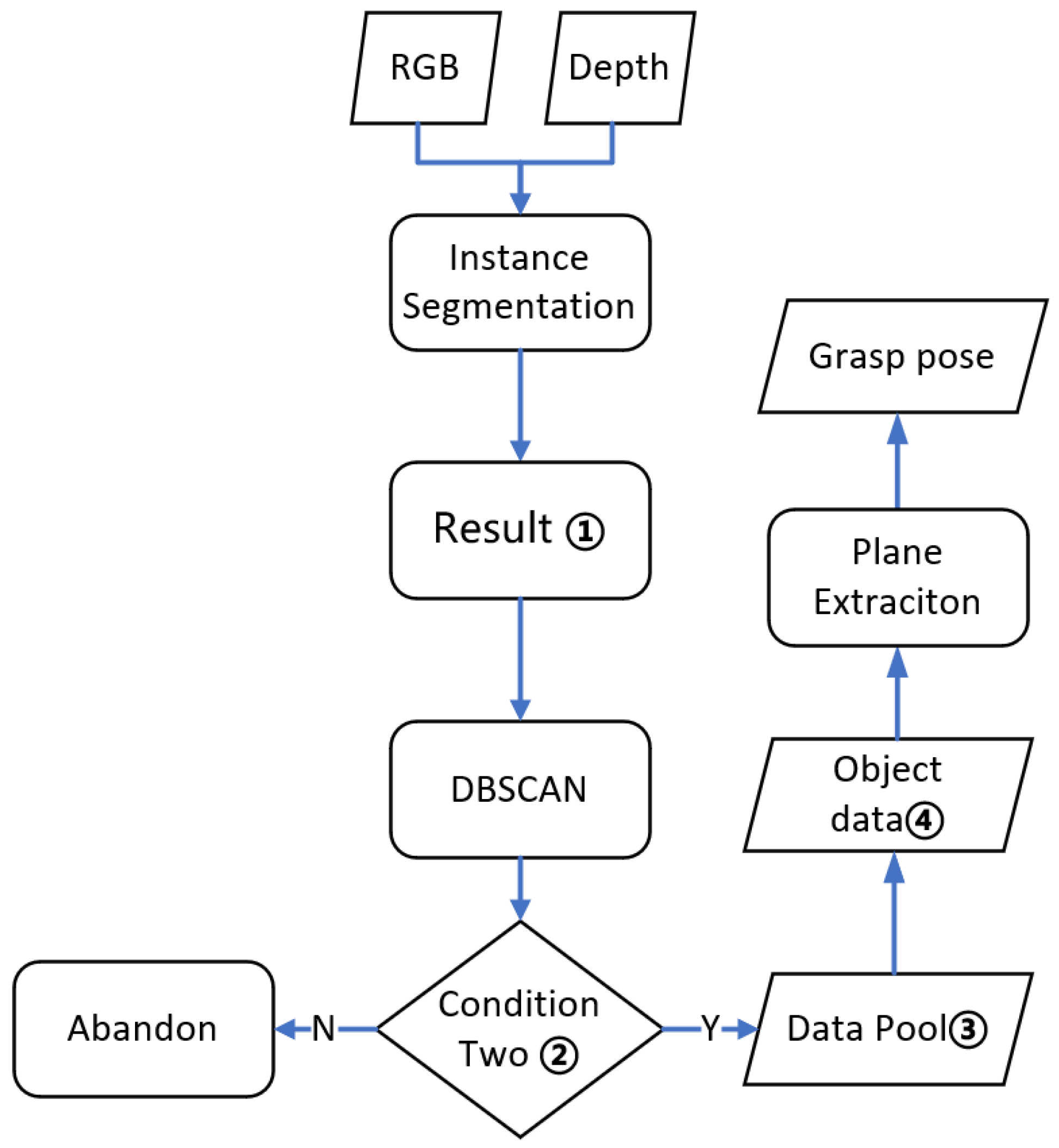

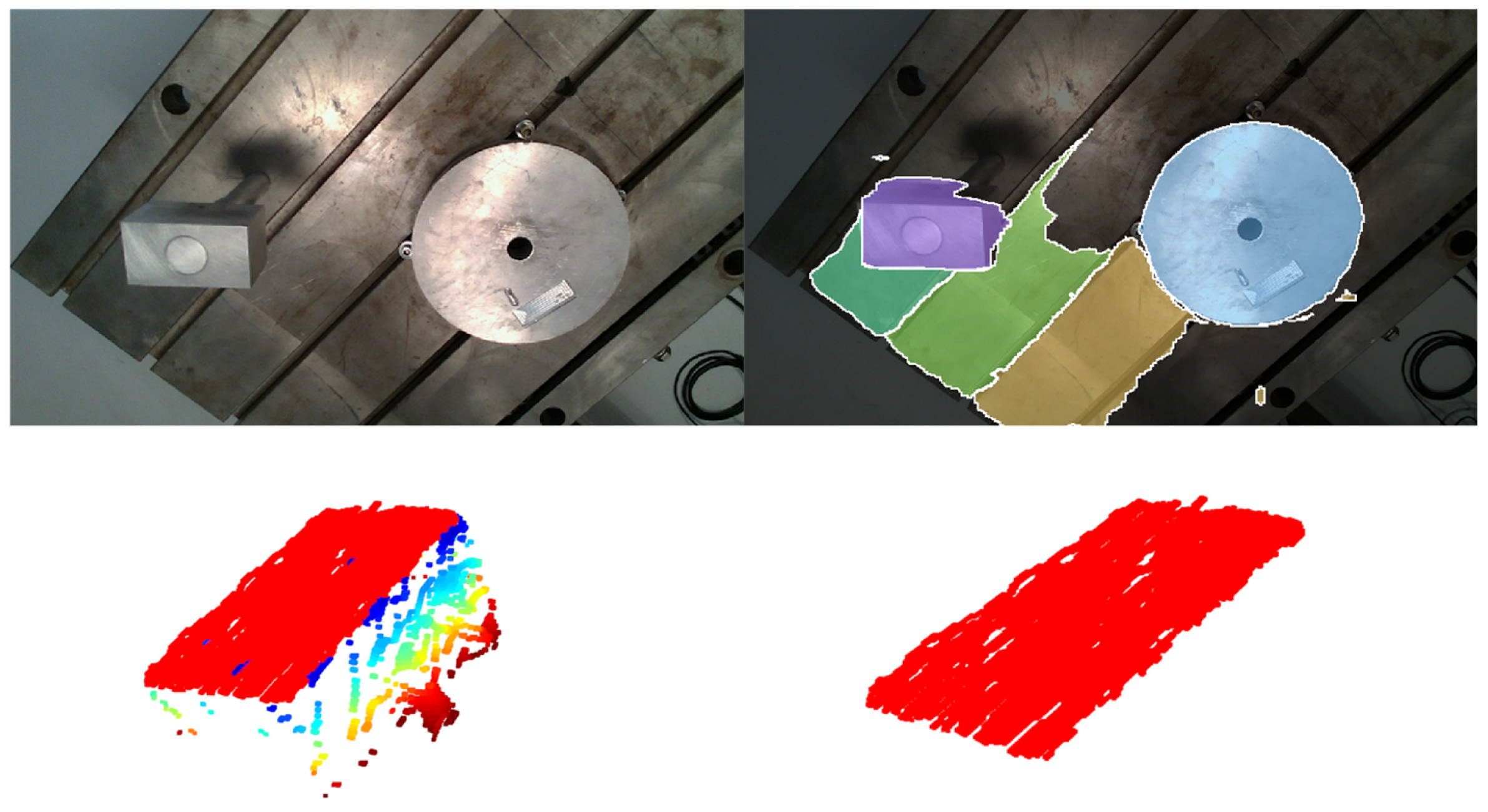

2.1. Instance Segmentation Network

2.2. Clustering Algorithm

Algorithm 1 DBSCAN

Input: dataset, algorithm parameters

Output: set of clusters
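The symbols of Algorithm 1 did not survive extraction, so the sketch below shows only the standard DBSCAN procedure (Ester et al., 1996) that the algorithm is named after. It is a minimal pure-Python version, assuming 3D points, Euclidean distance, and the two usual parameters `eps` (neighbourhood radius) and `min_pts` (minimum neighbourhood size for a core point). The names `region_query` and `dbscan` are hypothetical and this is not the authors' implementation.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
NOISE = -1  # label for points that belong to no cluster


def region_query(points: Sequence[Point], i: int, eps: float) -> List[int]:
    """Indices of all points within Euclidean distance eps of points[i], including i."""
    return [j for j, p in enumerate(points) if math.dist(points[i], p) <= eps]


def dbscan(points: Sequence[Point], eps: float, min_pts: int) -> List[int]:
    """Assign each point a cluster id (0, 1, ...) or NOISE, following standard DBSCAN."""
    labels: List[Optional[int]] = [None] * len(points)  # None = not yet visited
    cluster_id = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE  # may later be absorbed as a border point
            continue
        # i is a core point: start a new cluster and grow it breadth-first
        labels[i] = cluster_id
        seeds = list(neighbors)
        k = 0
        while k < len(seeds):
            j = seeds[k]
            k += 1
            if labels[j] == NOISE:
                labels[j] = cluster_id  # border point: joins the cluster, not expanded
                continue
            if labels[j] is not None:
                continue  # already assigned to this cluster
            labels[j] = cluster_id
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                seeds.extend(j_neighbors)  # j is also a core point: expand through it
        cluster_id += 1
    # every point has been visited, so no None labels remain
    return [lab if lab is not None else NOISE for lab in labels]


if __name__ == "__main__":
    pts = [(0.0, 0.0, 0.0), (0.01, 0.0, 0.0), (0.0, 0.01, 0.0),
           (1.0, 1.0, 1.0), (1.01, 1.0, 1.0), (1.0, 1.01, 1.0),
           (5.0, 5.0, 5.0)]
    print(dbscan(pts, eps=0.05, min_pts=3))  # [0, 0, 0, 1, 1, 1, -1]
```

The neighbour search here is brute force, O(n²) in the number of points; for dense RGB-D point clouds a spatial index such as a k-d tree would normally replace `region_query`.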

2.3. Plane Extraction, Grasping Pose Calculation and Contact Point Selection

Algorithm 2 RANSAC plane extraction

Input: point cloud, initial parameters, number of iterations, and distance threshold

Output:, , 
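Algorithm 2 takes a point cloud, an iteration count and a distance threshold, but its output symbols were lost in extraction. The sketch below is a generic RANSAC plane fit (Fischler and Bolles, 1981) in plain Python, assuming the plane model ax + by + cz + d = 0 with a unit normal and a fixed number of iterations. The function names and the returned pair (plane coefficients, inlier indices) are assumptions for illustration and may not correspond to the paper's three outputs.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
Plane = Tuple[float, float, float, float]  # (a, b, c, d) with a*x + b*y + c*z + d = 0


def plane_from_points(p1: Point, p2: Point, p3: Point) -> Optional[Plane]:
    """Plane through three points with unit normal, or None if the points are collinear."""
    u = [p2[k] - p1[k] for k in range(3)]
    v = [p3[k] - p1[k] for k in range(3)]
    n = (u[1] * v[2] - u[2] * v[1],  # normal = u x v
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    norm = math.sqrt(sum(x * x for x in n))
    if norm < 1e-12:
        return None
    a, b, c = (x / norm for x in n)
    d = -(a * p1[0] + b * p1[1] + c * p1[2])
    return a, b, c, d


def ransac_plane(points: Sequence[Point], iterations: int, threshold: float,
                 seed: Optional[int] = None) -> Tuple[Optional[Plane], List[int]]:
    """Fit the dominant plane; return its coefficients and the indices of its inliers."""
    if len(points) < 3:
        raise ValueError("need at least three points")
    pts = list(points)
    rng = random.Random(seed)
    best_plane: Optional[Plane] = None
    best_inliers: List[int] = []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(pts, 3))  # minimal sample: 3 points
        if plane is None:
            continue  # degenerate (collinear) sample
        a, b, c, d = plane
        # with a unit normal, |a*x + b*y + c*z + d| is the point-to-plane distance
        inliers = [i for i, p in enumerate(pts)
                   if abs(a * p[0] + b * p[1] + c * p[2] + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers
```

For a 5 × 5 grid of points on z = 0 plus two points above it, `ransac_plane(pts, iterations=200, threshold=0.01, seed=0)` recovers the normal (0, 0, ±1) with the 25 grid points as inliers. Keeping the hypothesis with the most inliers is the classic consensus criterion; a refinement step (for example a least-squares fit over the final inliers) is a common addition that this sketch omits.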

3. Results

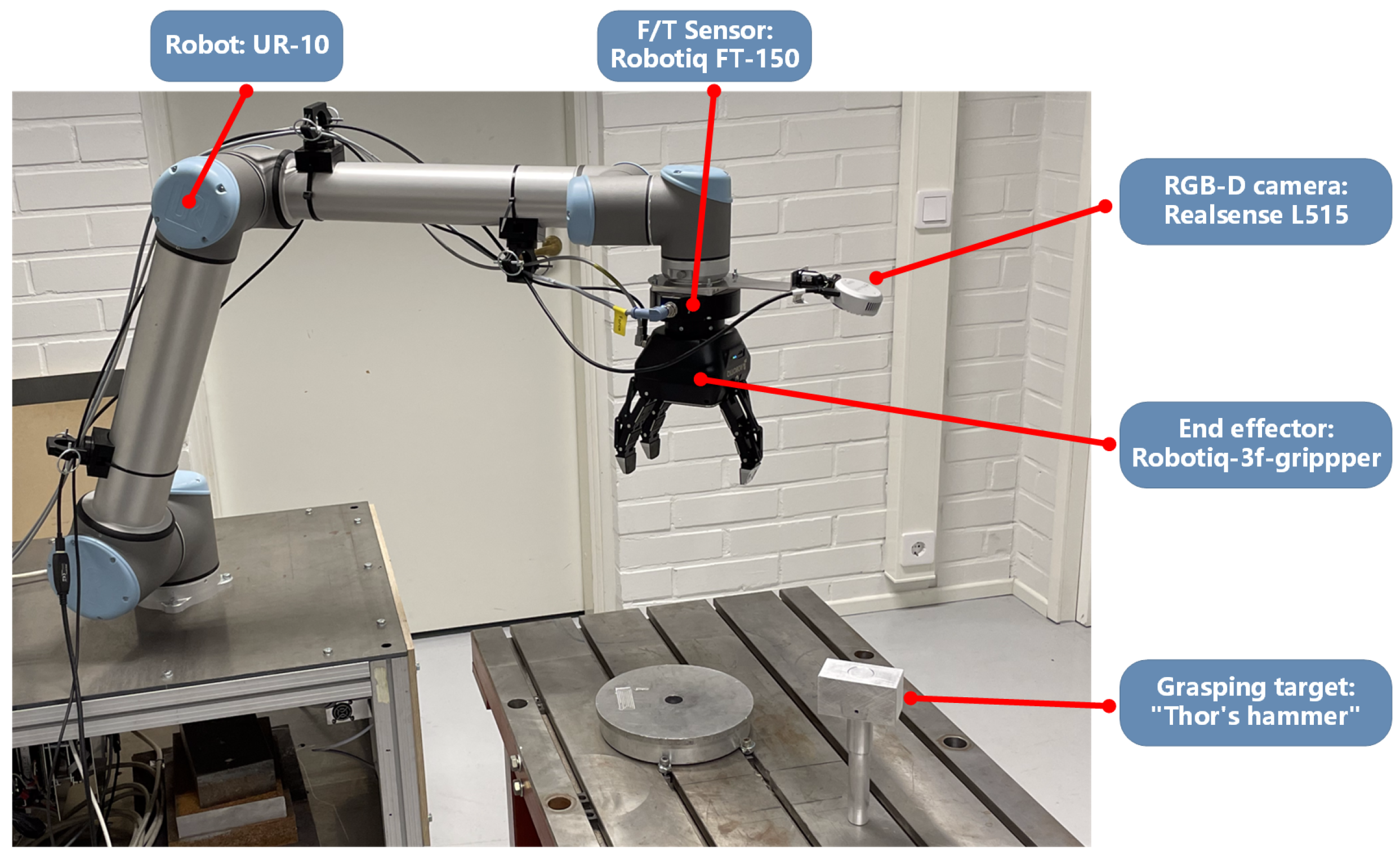

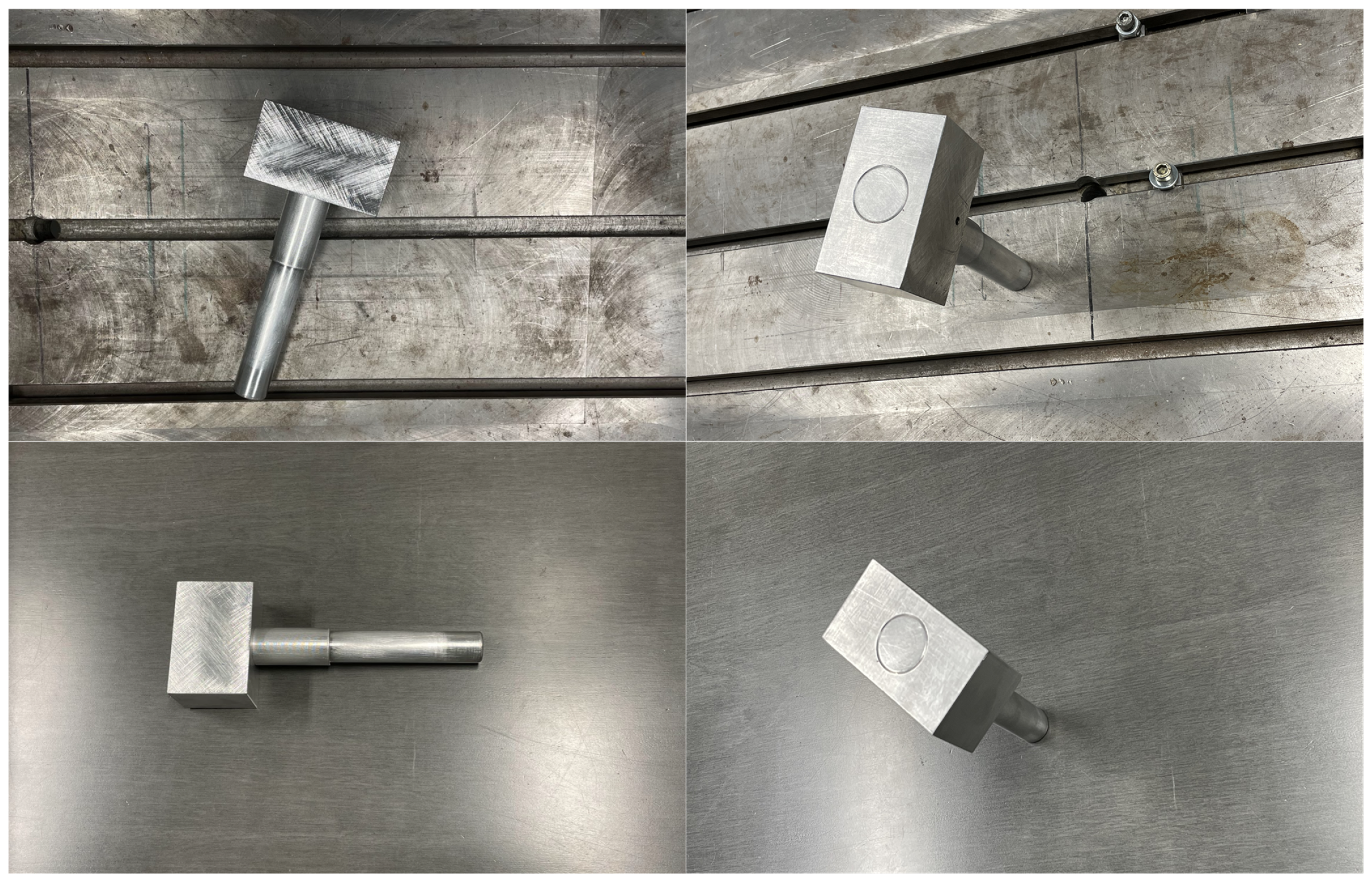

3.1. Experimental Platform

3.2. Visually Intuitive Evaluation

3.3. Quantitative Evaluation

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Scenario | GR-ConvNet | UOIS | HUDR |
|---|---|---|---|
| wooden table + lying pose | 78% | 86% | 93% |
| wooden table + standing pose | 53% | 92% | 100% |
| metal table + lying pose | 49% | 44% | 82% |
| metal table + standing pose | 26% | 71% | 97% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yin, R.; Wu, H.; Li, M.; Cheng, Y.; Song, Y.; Handroos, H. RGB-D-Based Robotic Grasping in Fusion Application Environments. Appl. Sci. 2022, 12, 7573. https://doi.org/10.3390/app12157573
