Learning More in Vehicle Re-Identification: Joint Local Blur Transformation and Adversarial Network Optimization

Abstract

1. Introduction

- A data augmentation method that combines traditional data augmentation and deep learning technology;

- A deep learning framework that combines region location selection, local blur transformation, and an adversarial framework;

- Rather than adding more samples, the method augments the dataset by making the existing samples harder, so the original structure of the dataset is retained;

- The proposed framework jointly optimizes the data augmentation and the recognition model without any fine-tuning; the augmented samples are generated dynamically during learning.

2. Related Work

2.1. Vehicle Re-Identification (ReID)

2.2. Generative Adversarial Networks (GAN)

2.3. Data Augmentation

3. Methodology

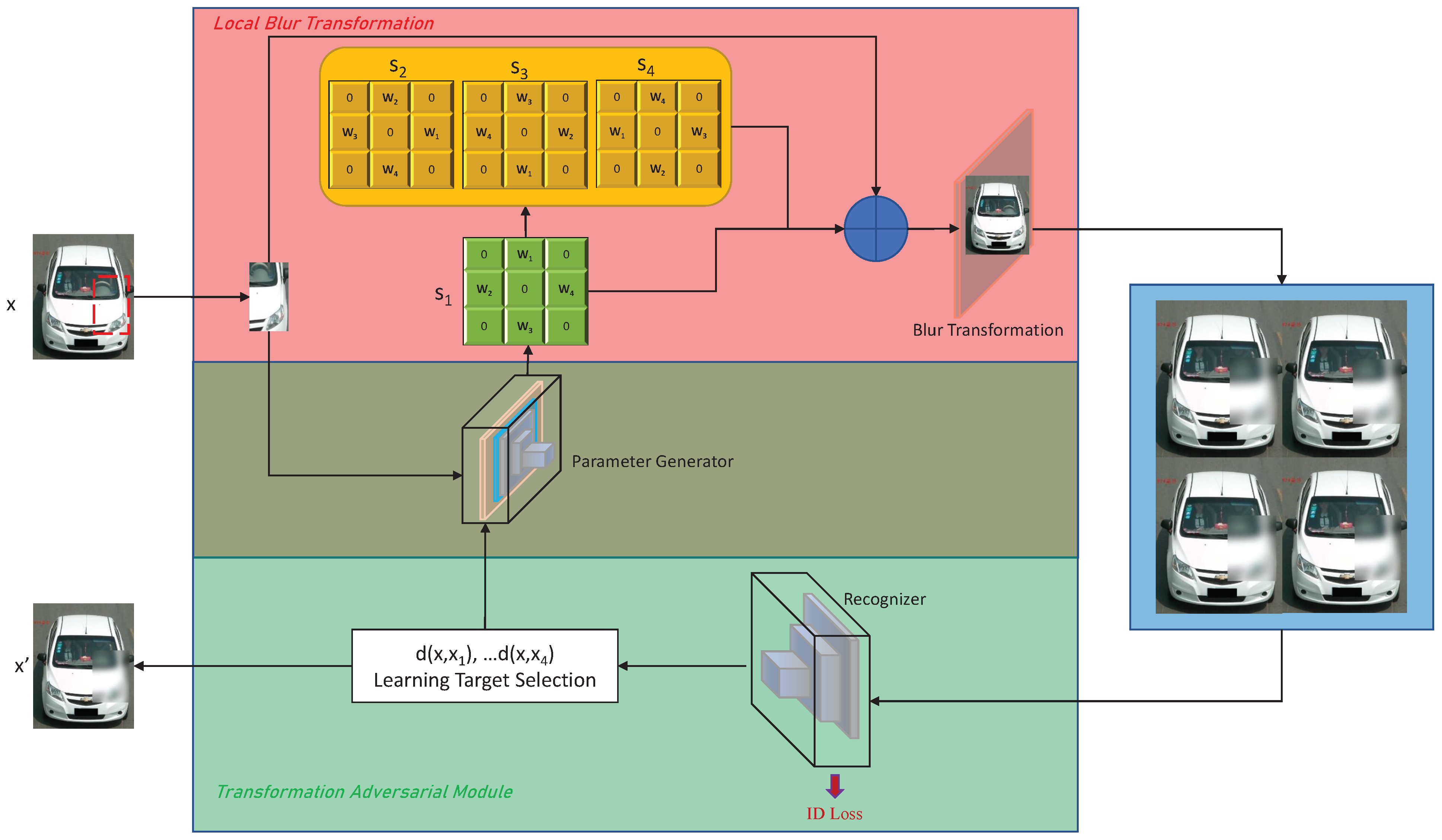

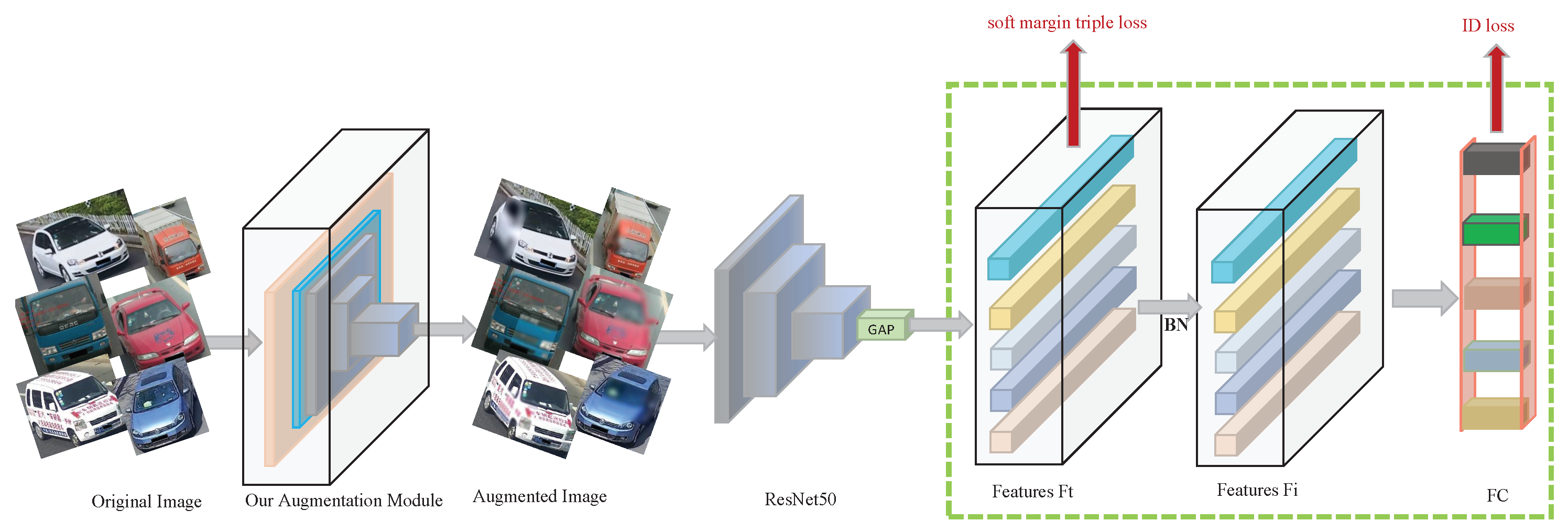

3.1. Overall Framework

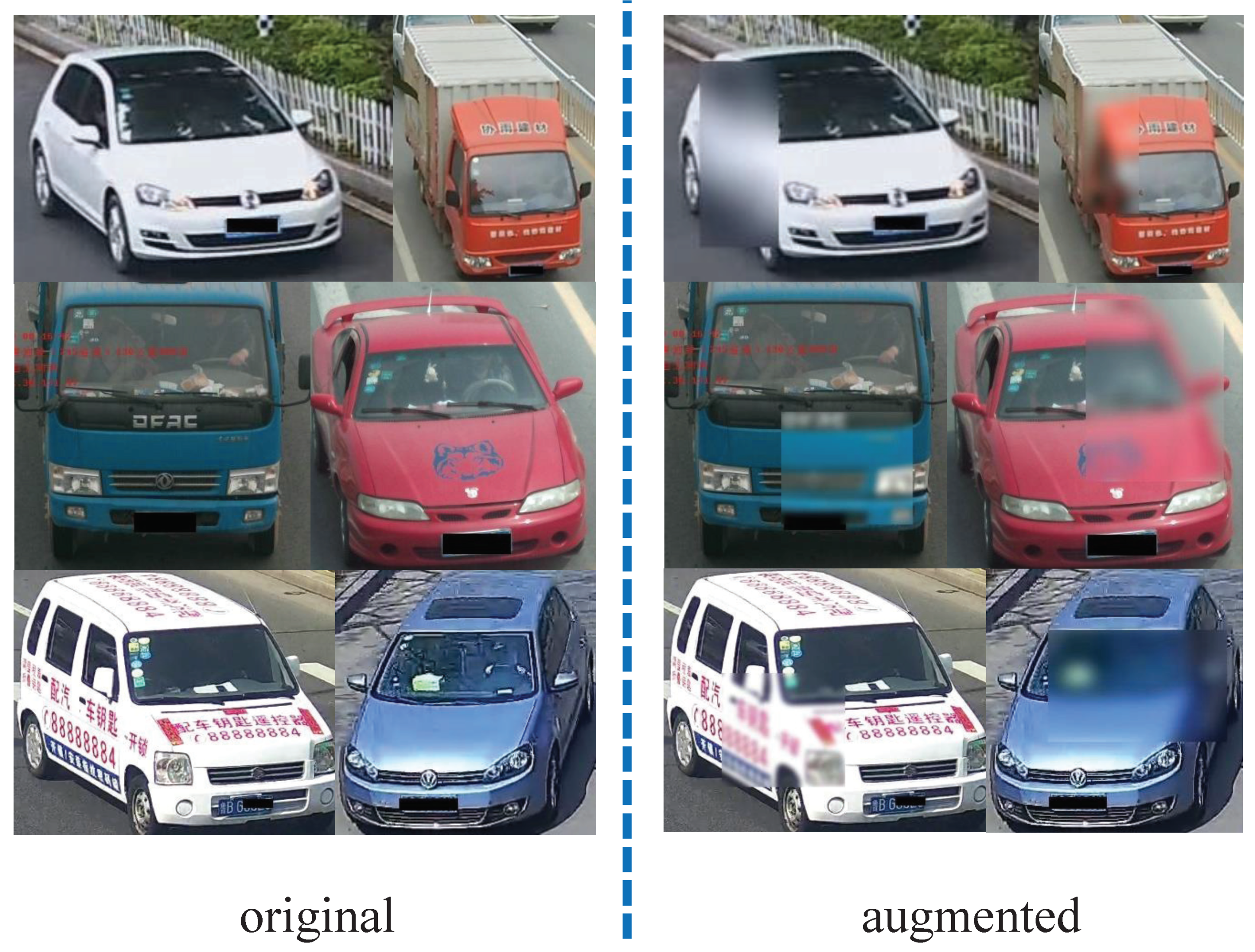

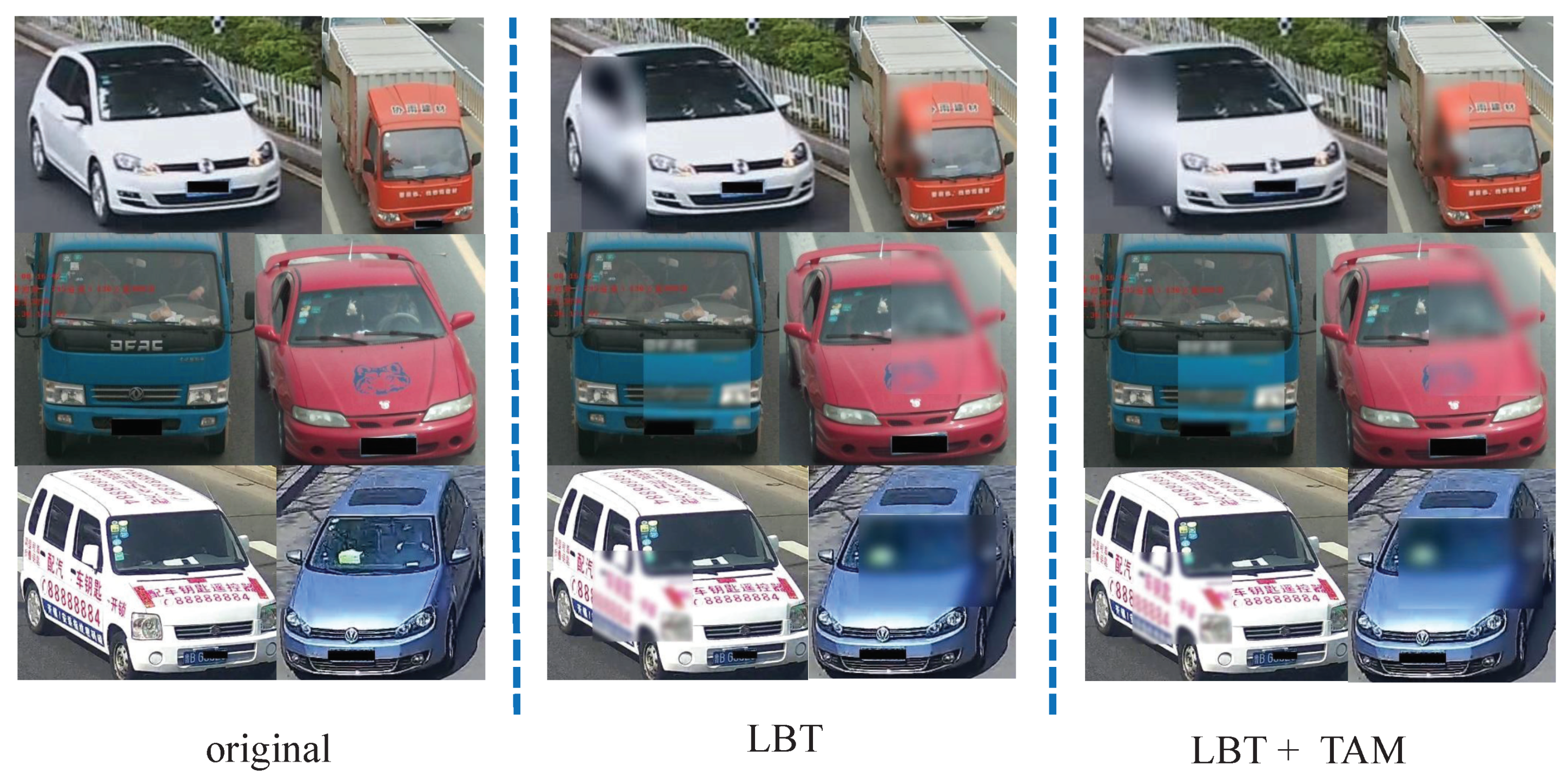

3.2. Local Blur Transformation (LBT)

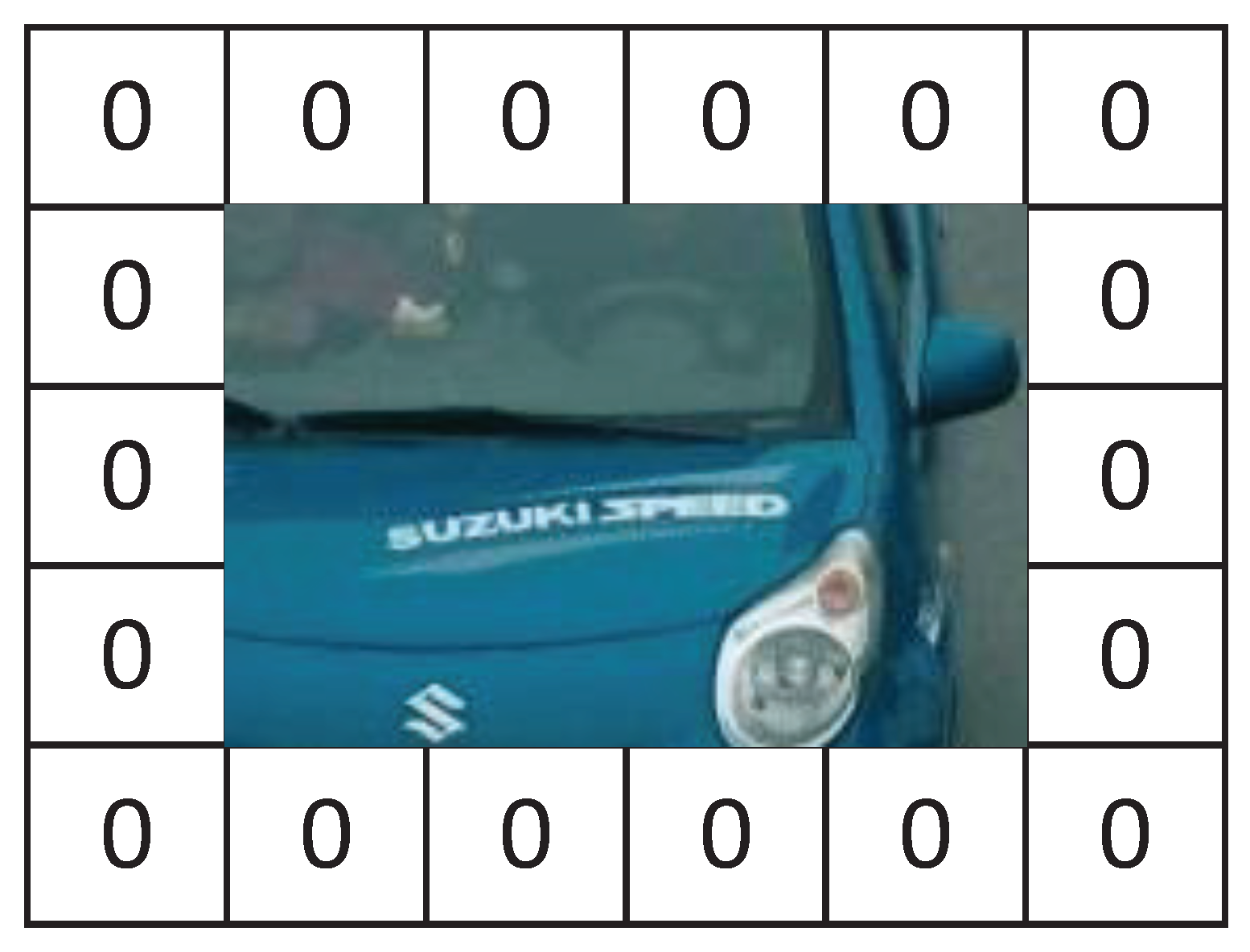

3.2.1. Local-Region Selection (LRS)

| Algorithm 1 Process of Local-Region Selection |
|---|
| Input: image width W; image height H; area of image A; ratio of width to height R; lower and upper bounds of the area ratio; lower and upper bounds of the aspect ratio. Output: selected rectangle region. |
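The inputs listed in Algorithm 1 (image size, an area-ratio range, and an aspect-ratio range) resemble the region sampling used by Random Erasing (Zhong et al.). The following is a minimal Python sketch of such a sampler under that assumption; it is not the authors' implementation. The function name `select_local_region`, the retry limit, and the default ranges are hypothetical placeholders, since the source does not state them.

```python
import math
import random


def select_local_region(width, height, area_range=(0.02, 0.4),
                        aspect_range=(0.3, 3.3), max_tries=100, rng=None):
    """Sample a rectangle (x, y, w, h) that lies fully inside the image.

    area_range and aspect_range are assumed placeholder values; the
    source does not give the bounds used by Algorithm 1.
    """
    rng = rng or random.Random()
    image_area = width * height
    for _ in range(max_tries):
        # Target region area as a fraction of the whole image area.
        target_area = rng.uniform(*area_range) * image_area
        # Aspect ratio R = region width / region height.
        aspect = rng.uniform(*aspect_range)
        w = int(round(math.sqrt(target_area * aspect)))
        h = int(round(math.sqrt(target_area / aspect)))
        # Accept only regions that fit inside the image.
        if 0 < w <= width and 0 < h <= height:
            x = rng.randint(0, width - w)   # left edge, inclusive bounds
            y = rng.randint(0, height - h)  # top edge, inclusive bounds
            return x, y, w, h
    return None  # no valid region found within max_tries


if __name__ == "__main__":
    print(select_local_region(256, 256, rng=random.Random(0)))
```

Rejection sampling keeps the sketch simple: a draw whose width or height exceeds the image is discarded and redrawn, and the caller must handle the `None` case.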

3.2.2. Parameter Generator Network (PGN)

3.2.3. Blur Transformation

3.3. Transformation Adversarial Module (TAM)

| Algorithm 2 Adversarial process of PGN and the recognizer |
|---|
| Input: selected rectangle region A, original image x. Output: updated |

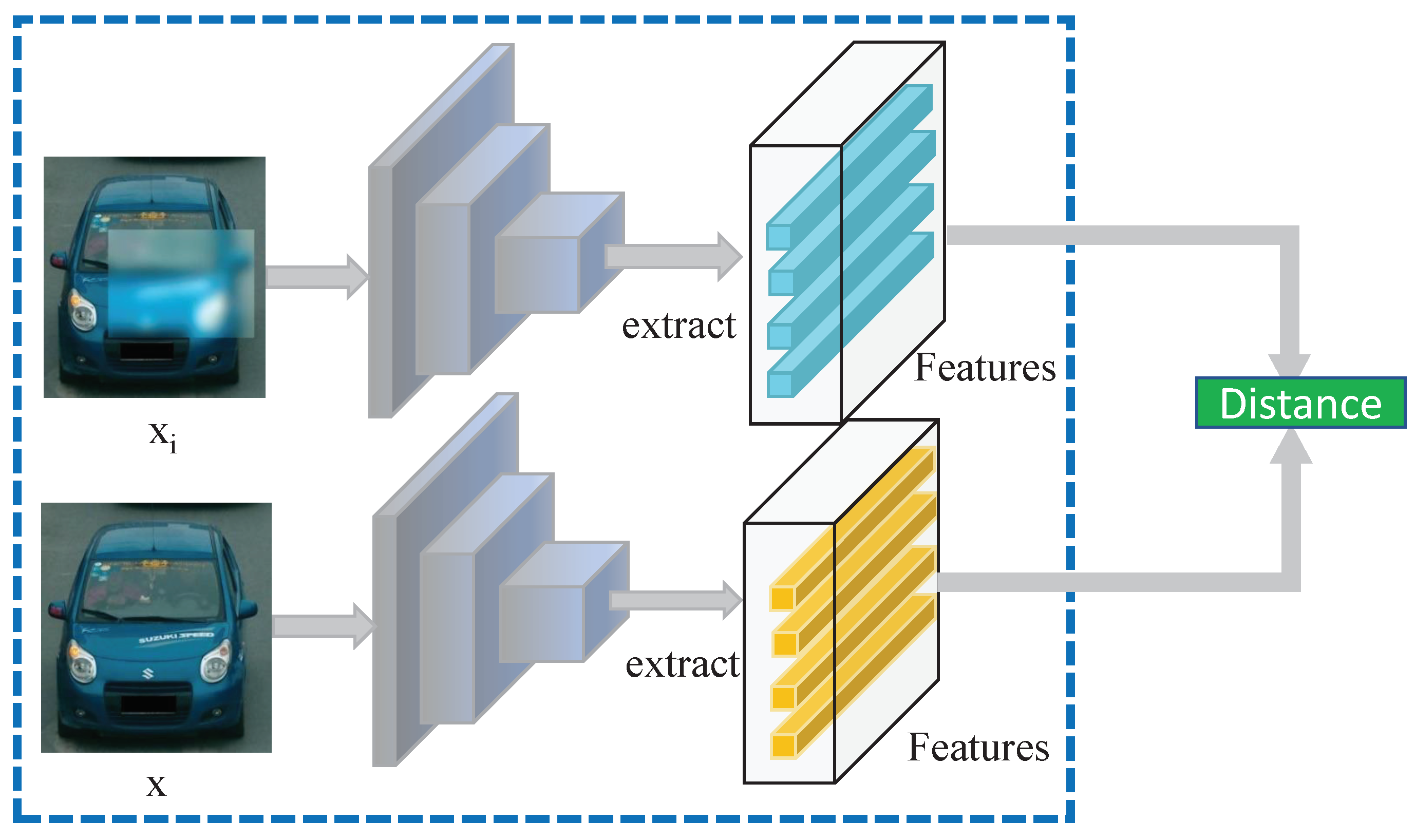

3.3.1. Recognizer

3.3.2. Learning Target Selection

4. Experiment and Explanation

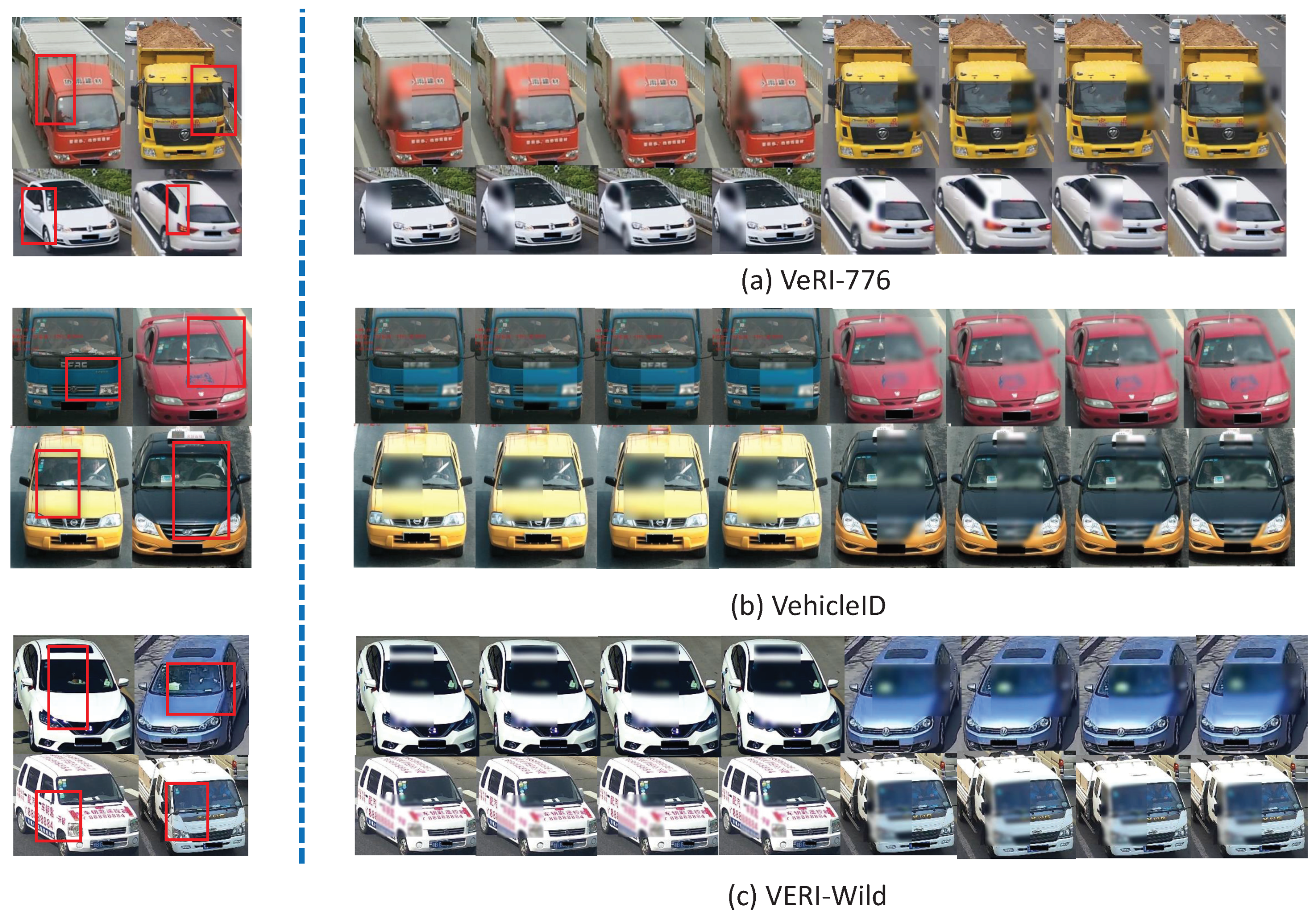

4.1. Datasets

4.1.1. VeRi-776

4.1.2. VehicleID

4.1.3. VERI-Wild

4.2. Implementation

4.3. Ablation Study

4.3.1. Our Model vs. Baseline

4.3.2. Internal Comparison of Our Model

4.4. Comparison with the State of the Art

4.5. Visualization of Our Method

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Li, J.; Cong, Y.; Zhou, L.; Tian, Z.; Qiu, J. Super-resolution-based part collaboration network for vehicle re-identification. World Wide Web 2022, 1–20.

2. Wang, L.; Dai, L.; Bian, H.; Ma, Y.; Zhang, J. Concrete cracking prediction under combined prestress and strand corrosion. Struct. Infrastruct. Eng. 2019, 15, 285–295.

3. Li, Y.; Hao, Z.; Lei, H. Survey of convolutional neural network. J. Comput. Appl. 2016, 36, 2508–2515.

4. Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 60.

5. Zhong, Z.; Zheng, L.; Kang, G.; Li, S.; Yang, Y. Random Erasing Data Augmentation. Proc. AAAI Conf. Artif. Intell. 2017, 34.

6. Tramèr, F.; Kurakin, A.; Papernot, N.; Goodfellow, I.; Boneh, D.; McDaniel, P. Ensemble adversarial training: Attacks and defenses. arXiv 2017, arXiv:1705.07204.

7. Jing, Y.; Yang, Y.; Feng, Z.; Ye, J.; Yu, Y.; Song, M. Neural style transfer: A review. IEEE Trans. Vis. Comput. Graph. 2019, 26, 3365–3385.

8. Xia, R.; Chen, Y.; Ren, B. Improved anti-occlusion object tracking algorithm using Unscented Rauch-Tung-Striebel smoother and kernel correlation filter. J. King Saud Univ.-Comput. Inf. Sci. 2022.

9. Chen, Y.; Ke, W.; Lin, H.; Lam, C.T.; Lv, K.; Sheng, H.; Xiong, Z. Local perspective based synthesis for vehicle re-identification: A transformation state adversarial method. J. Vis. Commun. Image Represent. 2022, 103432.

10. Liu, X.; Liu, W.; Ma, H.; Fu, H. Large-scale vehicle re-identification in urban surveillance videos. In Proceedings of the 2016 IEEE International Conference on Multimedia and Expo (ICME), Seattle, WA, USA, 11–15 July 2016; pp. 1–6.

11. Liu, H.; Tian, Y.; Yang, Y.; Pang, L.; Huang, T. Deep relative distance learning: Tell the difference between similar vehicles. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2167–2175.

12. Lou, Y.; Bai, Y.; Liu, J.; Wang, S.; Duan, L. Veri-wild: A large dataset and a new method for vehicle re-identification in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3235–3243.

13. Hu, Z.; Xu, Y.; Raj, R.S.P.; Cheng, X.; Sun, L.; Wu, L. Vehicle re-identification based on keypoint segmentation of original image. Appl. Intell. 2022, 1–17.

14. Ning, X.; Gong, K.; Li, W.; Zhang, L.; Bai, X.; Tian, S. Feature refinement and filter network for person re-identification. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 3391–3402.

15. Zhang, J.; Feng, W.; Yuan, T.; Wang, J.; Sangaiah, A.K. SCSTCF: Spatial-channel selection and temporal regularized correlation filters for visual tracking. Appl. Soft Comput. 2022, 118, 108485.

16. Li, Y.; Li, Y.; Yan, H.; Liu, J. Deep joint discriminative learning for vehicle re-identification and retrieval. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 395–399.

17. Wang, Z.; Tang, L.; Liu, X.; Yao, Z.; Yi, S.; Shao, J.; Yan, J.; Wang, S.; Li, H.; Wang, X. Orientation invariant feature embedding and spatial temporal regularization for vehicle re-identification. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 379–387.

18. Wei, X.S.; Zhang, C.L.; Liu, L.; Shen, C.; Wu, J. Coarse-to-fine: A RNN-based hierarchical attention model for vehicle re-identification. In Proceedings of the Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 575–591.

19. Bai, Y.; Lou, Y.; Gao, F.; Wang, S.; Wu, Y.; Duan, L.Y. Group-sensitive triplet embedding for vehicle reidentification. IEEE Trans. Multimed. 2018, 20, 2385–2399.

20. He, B.; Li, J.; Zhao, Y.; Tian, Y. Part-regularized near-duplicate vehicle re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3997–4005.

21. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680.

22. Zhou, T.; Tulsiani, S.; Sun, W.; Malik, J.; Efros, A.A. View synthesis by appearance flow. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 286–301.

23. Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

24. Johnson, J.; Alahi, A.; Fei-Fei, L. Perceptual losses for real-time style transfer and super-resolution. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 694–711.

25. Odena, A.; Olah, C.; Shlens, J. Conditional image synthesis with auxiliary classifier gans. In Proceedings of the International Conference on Machine Learning, Sydney, NSW, Australia, 6–11 August 2017; pp. 2642–2651.

26. Tatarchenko, M.; Dosovitskiy, A.; Brox, T. Multi-view 3d models from single images with a convolutional network. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 322–337.

27. Zhou, Y.; Shao, L. Cross-view GAN based vehicle generation for re-identification. In Proceedings of the British Machine Vision Conference, London, UK, 4–7 September 2017.

28. Bodnar, C. Text to image synthesis using generative adversarial networks. arXiv 2018, arXiv:1805.00676.

29. Bowles, C.; Chen, L.; Guerrero, R.; Bentley, P.; Gunn, R.; Hammers, A.; Dickie, D.A.; Hernández, M.V.; Wardlaw, J.; Rueckert, D. Gan augmentation: Augmenting training data using generative adversarial networks. arXiv 2018, arXiv:1810.10863.

30. Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a gaussian denoiser: Residual learning of deep cnn for image denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.

31. Zhang, K.; Zuo, W.; Gu, S.; Zhang, L. Learning deep CNN denoiser prior for image restoration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3929–3938.

32. Mirza, M.; Osindero, S. Conditional generative adversarial nets. arXiv 2014, arXiv:1411.1784.

33. Ruder, S. An overview of multi-task learning in deep neural networks. arXiv 2017, arXiv:1706.05098.

34. Peng, X.; Tang, Z.; Yang, F.; Feris, R.S.; Metaxas, D. Jointly optimize data augmentation and network training: Adversarial data augmentation in human pose estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2226–2234.

35. Ho, D.; Liang, E.; Chen, X.; Stoica, I.; Abbeel, P. Population based augmentation: Efficient learning of augmentation policy schedules. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 2731–2741.

36. Cubuk, E.D.; Zoph, B.; Mane, D.; Vasudevan, V.; Le, Q.V. Autoaugment: Learning augmentation strategies from data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 113–123.

37. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

38. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

39. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

40. Shi, B.; Bai, X.; Yao, C. An end-to-end trainable neural network for image-based sequence recognition and its application to scene text recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 2298–2304.

41. Graves, A.; Fernández, S.; Gomez, F.; Schmidhuber, J. Connectionist temporal classification: Labelling unsegmented sequence data with recurrent neural networks. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 369–376.

42. Luo, C.; Jin, L.; Sun, Z. A multi-object rectified attention network for scene text recognition. arXiv 2019, arXiv:1901.03003.

43. Shi, B.; Yang, M.; Wang, X.; Lyu, P.; Yao, C.; Bai, X. Aster: An attentional scene text recognizer with flexible rectification. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 2035–2048.

44. He, S.; Luo, H.; Chen, W.; Zhang, M.; Zhang, Y.; Wang, F.; Li, H.; Jiang, W. Multi-domain learning and identity mining for vehicle re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 13–19 June 2020; pp. 582–583.

45. Jung, H.; Choi, M.K.; Jung, J.; Lee, J.H.; Kwon, S.; Young Jung, W. ResNet-based vehicle classification and localization in traffic surveillance systems. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2017; pp. 61–67.

46. Yuan, Y.; Chen, W.; Yang, Y.; Wang, Z. In defense of the triplet loss again: Learning robust person re-identification with fast approximated triplet loss and label distillation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 13–19 June 2020; pp. 354–355.

47. Zhang, S.; Choromanska, A.; LeCun, Y. Deep learning with elastic averaging SGD. arXiv 2014, arXiv:1412.6651.

48. Luo, H.; Gu, Y.; Liao, X.; Lai, S.; Jiang, W. Bag of tricks and a strong baseline for deep person re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

49. Luo, H.; Jiang, W.; Gu, Y.; Liu, F.; Liao, X.; Lai, S.; Gu, J. A strong baseline and batch normalization neck for deep person re-identification. IEEE Trans. Multimed. 2019, 22, 2597–2609.

50. Shen, Y.; Xiao, T.; Li, H.; Yi, S.; Wang, X. Learning deep neural networks for vehicle re-id with visual-spatio-temporal path proposals. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1900–1909.

51. Liu, X.; Liu, W.; Mei, T.; Ma, H. Provid: Progressive and multimodal vehicle reidentification for large-scale urban surveillance. IEEE Trans. Multimed. 2017, 20, 645–658.

52. Khorramshahi, P.; Kumar, A.; Peri, N.; Rambhatla, S.S.; Chen, J.C.; Chellappa, R. A dual-path model with adaptive attention for vehicle re-identification. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 6132–6141.

53. Kumar, R.; Weill, E.; Aghdasi, F.; Sriram, P. Vehicle re-identification: An efficient baseline using triplet embedding. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–9.

54. Peng, J.; Jiang, G.; Chen, D.; Zhao, T.; Wang, H.; Fu, X. Eliminating cross-camera bias for vehicle re-identification. Multimed. Tools Appl. 2020, 1–17.

55. Zheng, A.; Lin, X.; Li, C.; He, R.; Tang, J. Attributes guided feature learning for vehicle re-identification. arXiv 2019, arXiv:1905.08997.

56. Tang, Z.; Naphade, M.; Birchfield, S.; Tremblay, J.; Hodge, W.; Kumar, R.; Wang, S.; Yang, X. Pamtri: Pose-aware multi-task learning for vehicle re-identification using highly randomized synthetic data. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 211–220.

57. Yao, Y.; Zheng, L.; Yang, X.; Naphade, M.; Gedeon, T. Simulating content consistent vehicle datasets with attribute descent. arXiv 2019, arXiv:1912.08855.

58. Zhou, Y.; Shao, L. Aware attentive multi-view inference for vehicle re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6489–6498.

59. Yang, L.; Luo, P.; Change Loy, C.; Tang, X. A large-scale car dataset for fine-grained categorization and verification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3973–3981.

60. Alfasly, S.; Hu, Y.; Li, H.; Liang, T.; Jin, X.; Liu, B.; Zhao, Q. Multi-Label-Based Similarity Learning for Vehicle Re-Identification. IEEE Access 2019, 7, 162605–162616.

61. Jin, X.; Lan, C.; Zeng, W.; Chen, Z. Uncertainty-aware multi-shot knowledge distillation for image-based object re-identification. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11165–11172.

| Description | Dimension |
|---|---|
| Original | |
| Con16, Relu, MP | |
| Con64, Relu, MP | |
| Con128, BN, Relu | |
| Con128, Relu, MP | |
| Con64, BN, Relu | |
| Con16, BN, Relu, MP | |
| FLayer | 4 |

| Model | VeRi-776 R1 | VeRi-776 MAP | VehicleID Small R1 | VehicleID Small MAP | VehicleID Medium R1 | VehicleID Medium MAP | VehicleID Large R1 | VehicleID Large MAP | VERI-Wild Small R1 | VERI-Wild Small MAP | VERI-Wild Medium R1 | VERI-Wild Medium MAP | VERI-Wild Large R1 | VERI-Wild Large MAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| baseline | 95.7 | 76.6 | 83.0 | 77.0 | 80.7 | 75.0 | 79.2 | 74.0 | 93.1 | 72.6 | 90.5 | 66.5 | 86.4 | 58.5 |
| LBT (Ours) | 96.7 | 80.5 | 84.3 | 77.8 | 83.4 | 77.9 | 79.9 | 74.4 | 91.8 | 72.7 | 90.3 | 66.7 | 87.6 | 58.8 |
| LBT + TAM (Ours) | 96.9 | 81.6 | 84.3 | 77.9 | 83.5 | 78.0 | 79.9 | 74.6 | 93.4 | 72.6 | 91.1 | 66.7 | 87.7 | 58.8 |
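The gain of the full model (LBT + TAM) over the baseline can be read off the ablation table by subtraction. The short Python sketch below does that arithmetic on the (R1, MAP) pairs copied from the table; the dictionary keys and the helper name `gains_over_baseline` are illustrative and do not come from the source.

```python
# (R1, MAP) pairs copied from the ablation table; key names are illustrative.
BASELINE = {
    "VeRi-776": (95.7, 76.6),
    "VehicleID Small": (83.0, 77.0),
    "VehicleID Medium": (80.7, 75.0),
    "VehicleID Large": (79.2, 74.0),
    "VERI-Wild Small": (93.1, 72.6),
    "VERI-Wild Medium": (90.5, 66.5),
    "VERI-Wild Large": (86.4, 58.5),
}
LBT_TAM = {
    "VeRi-776": (96.9, 81.6),
    "VehicleID Small": (84.3, 77.9),
    "VehicleID Medium": (83.5, 78.0),
    "VehicleID Large": (79.9, 74.6),
    "VERI-Wild Small": (93.4, 72.6),
    "VERI-Wild Medium": (91.1, 66.7),
    "VERI-Wild Large": (87.7, 58.8),
}


def gains_over_baseline(baseline, model):
    """Return {setting: (R1 gain, MAP gain)} in percentage points."""
    return {
        name: (round(model[name][0] - r1, 1), round(model[name][1] - m_ap, 1))
        for name, (r1, m_ap) in baseline.items()
    }


gains = gains_over_baseline(BASELINE, LBT_TAM)
for name, (d_r1, d_map) in gains.items():
    print(f"{name}: R1 {d_r1:+.1f}, MAP {d_map:+.1f}")
```

On these numbers the largest single gain is the VeRi-776 MAP (+5.0 points), while the VERI-Wild Small MAP is unchanged.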

| Methods | MAP | R1 | R5 |
|---|---|---|---|
| Siamese-CNN [50] | 54.2 | 79.3 | 88.9 |
| FDA-Net [12] | 55.5 | 84.3 | 92.4 |
| Siamese-CNN+ST [50] | 58.3 | 83.5 | 90.0 |
| PROVID [51] | 53.4 | 81.6 | 95.1 |
| AAVER [52] | 66.4 | 90.2 | 94.3 |
| BS [53] | 67.6 | 90.2 | 96.4 |
| CCA [54] | 68.0 | 91.7 | 94.3 |
| PRN [20] | 70.2 | 92.2 | 97.9 |
| AGNET [55] | 71.6 | 95.6 | 96.6 |
| PAMTRI [56] | 71.8 | 92.9 | 97.0 |
| VehicleX [57] | 73.3 | 95.0 | 98.0 |
| APAN [57] | 73.5 | 93.3 | - |
| MDL [44] | 79.4 | 90.7 | - |
| Ours | 81.6 | 96.9 | 99.0 |
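The tables report MAP, R1, and R5. The following is a minimal Python sketch of how such retrieval metrics are commonly computed, assuming the standard definitions of rank-k accuracy and mean average precision; the source does not describe its evaluation code, so the function names and input format here are hypothetical. Each query is represented by a list of booleans marking which gallery items, sorted by increasing distance, share the query's identity. Dataset-specific filtering (for example, dropping same-camera gallery images) is omitted.

```python
def average_precision(ranked_matches):
    """Average precision for one query.

    ranked_matches: list of bools, gallery sorted by ascending distance,
    True where the gallery item has the same identity as the query.
    """
    hits = 0
    precision_sum = 0.0
    for rank, is_match in enumerate(ranked_matches, start=1):
        if is_match:
            hits += 1
            precision_sum += hits / rank  # precision at this recall point
    return precision_sum / hits if hits else 0.0


def evaluate(queries, k=1):
    """Return (rank-k accuracy, mean average precision) over all queries."""
    # Rank-k: fraction of queries with at least one match in the top k.
    rank_k = sum(any(q[:k]) for q in queries) / len(queries)
    mean_ap = sum(average_precision(q) for q in queries) / len(queries)
    return rank_k, mean_ap


if __name__ == "__main__":
    toy_queries = [
        [True, False, True],   # first hit at rank 1
        [False, True, False],  # first hit at rank 2
    ]
    print(evaluate(toy_queries, k=1))
    print(evaluate(toy_queries, k=2))
```

In the toy example, only one of the two queries has a correct match at rank 1, so rank-1 accuracy is 0.5, while both have a match within the top 2.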

| Methods | Small R1 | Small R5 | Medium R1 | Medium R5 | Large R1 | Large R5 |
|---|---|---|---|---|---|---|
| VAMI [58] | 63.1 | 83.3 | 52.9 | 75.1 | 47.3 | 70.3 |
| FDA-Net [12] | - | - | 59.8 | 77.1 | 55.5 | 74.7 |
| AGNET [55] | 71.2 | 83.8 | 69.2 | 81.4 | 65.7 | 78.3 |
| AAVER [52] | 74.7 | 93.8 | 68.6 | 90.0 | 63.5 | 85.6 |
| OIFE [17] | - | - | - | - | 67.0 | 82.9 |
| CCA [54] | 75.5 | 91.1 | 73.6 | 86.5 | 70.1 | 83.2 |
| PRN [20] | 78.4 | 92.3 | 75.0 | 88.3 | 74.2 | 86.4 |
| BS [53] | 78.8 | 96.2 | 73.4 | 92.6 | 69.3 | 89.5 |
| VehicleX [57] | 79.8 | 93.2 | 76.7 | 90.3 | 73.9 | 88.2 |
| Ours | 84.3 | 93.5 | 83.5 | 90.6 | 79.9 | 86.5 |

| Methods | Small MAP | Small R1 | Medium MAP | Medium R1 | Large MAP | Large R1 |
|---|---|---|---|---|---|---|
| GoogleNet [59] | 24.3 | 57.2 | 24.2 | 53.2 | 21.5 | 44.6 |
| FDA-Net [12] | 35.1 | 64.0 | 29.8 | 57.8 | 22.8 | 49.4 |
| MLSL [60] | 46.3 | 86.0 | 42.4 | 83.0 | 36.6 | 77.5 |
| AAVER [52] | 62.2 | 75.8 | 53.7 | 68.2 | 41.7 | 58.7 |
| BS [53] | 70.0 | 84.2 | 62.8 | 78.2 | 51.6 | 70.0 |
| UMTS [61] | 72.7 | 84.5 | - | - | - | - |
| Ours | 72.9 | 93.4 | 66.7 | 91.1 | 58.8 | 87.7 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, Y.; Ke, W.; Sheng, H.; Xiong, Z. Learning More in Vehicle Re-Identification: Joint Local Blur Transformation and Adversarial Network Optimization. Appl. Sci. 2022, 12, 7467. https://doi.org/10.3390/app12157467
