Fusion Information Multi-View Classification Method for Remote Sensing Cloud Detection

Abstract

:1. Introduction

- 1.

- A feature extraction network at the super pixel level is constructed to extract the fast features of super pixels in cloud-containing remote sensing images at multi-scales. The cloud content in super pixels and the cloud-containing marker state information of adjacent super pixels are effectively utilized.

- 2.

- A multi-view support vector machine cloud detection classifier based on fusion information is constructed and the solving algorithm based on quadratic convex optimization is given.

- 3.

- We provide a multi-view classification dataset based on remote sensing cloud super pixels. The new model is used to classify the super pixels and synthesize the cloud mask bipartite graph. Experiments are carried out in images with different cloud contents to verify the effectiveness of the new method.

2. Related Work

2.1. Multi-View Learning

2.2. Coupling Privileged Kernel Method for Multi-View Learning

3. Proposed Method

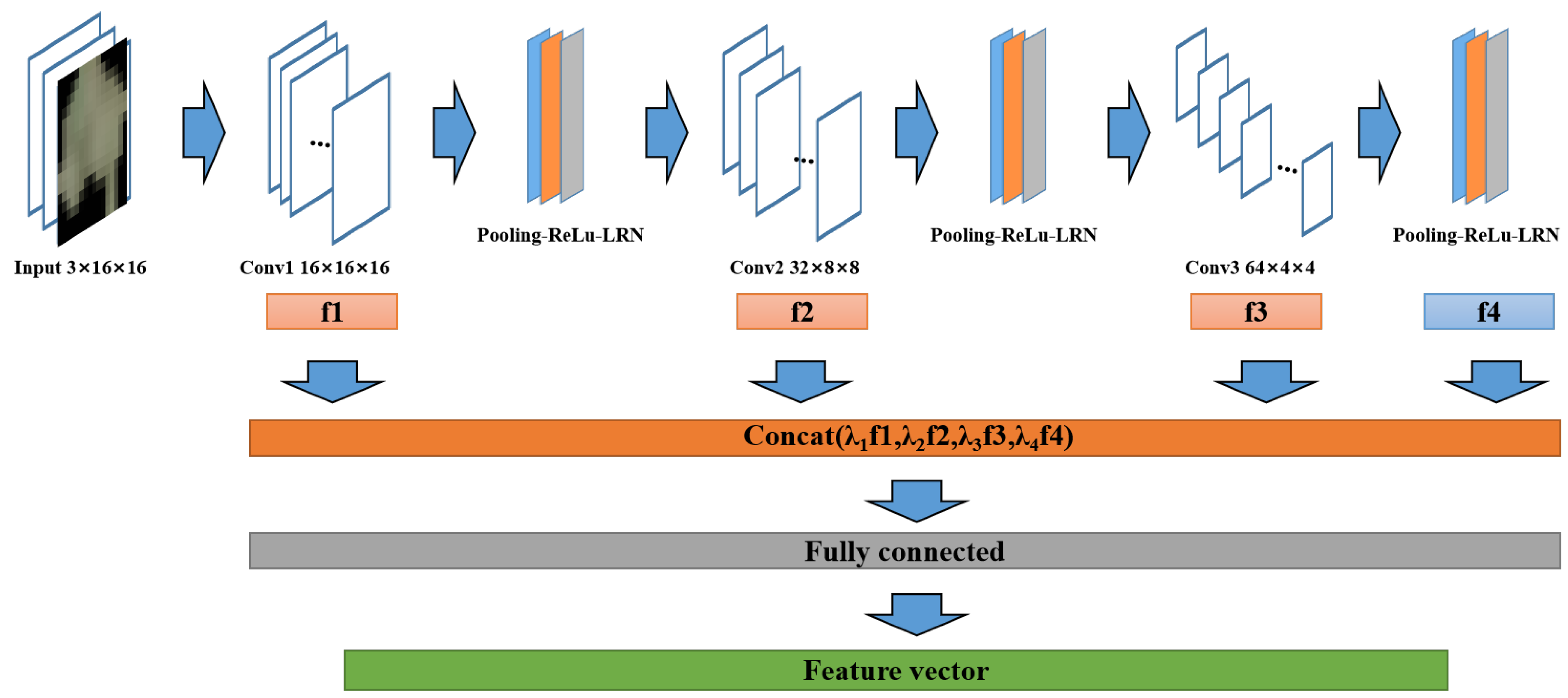

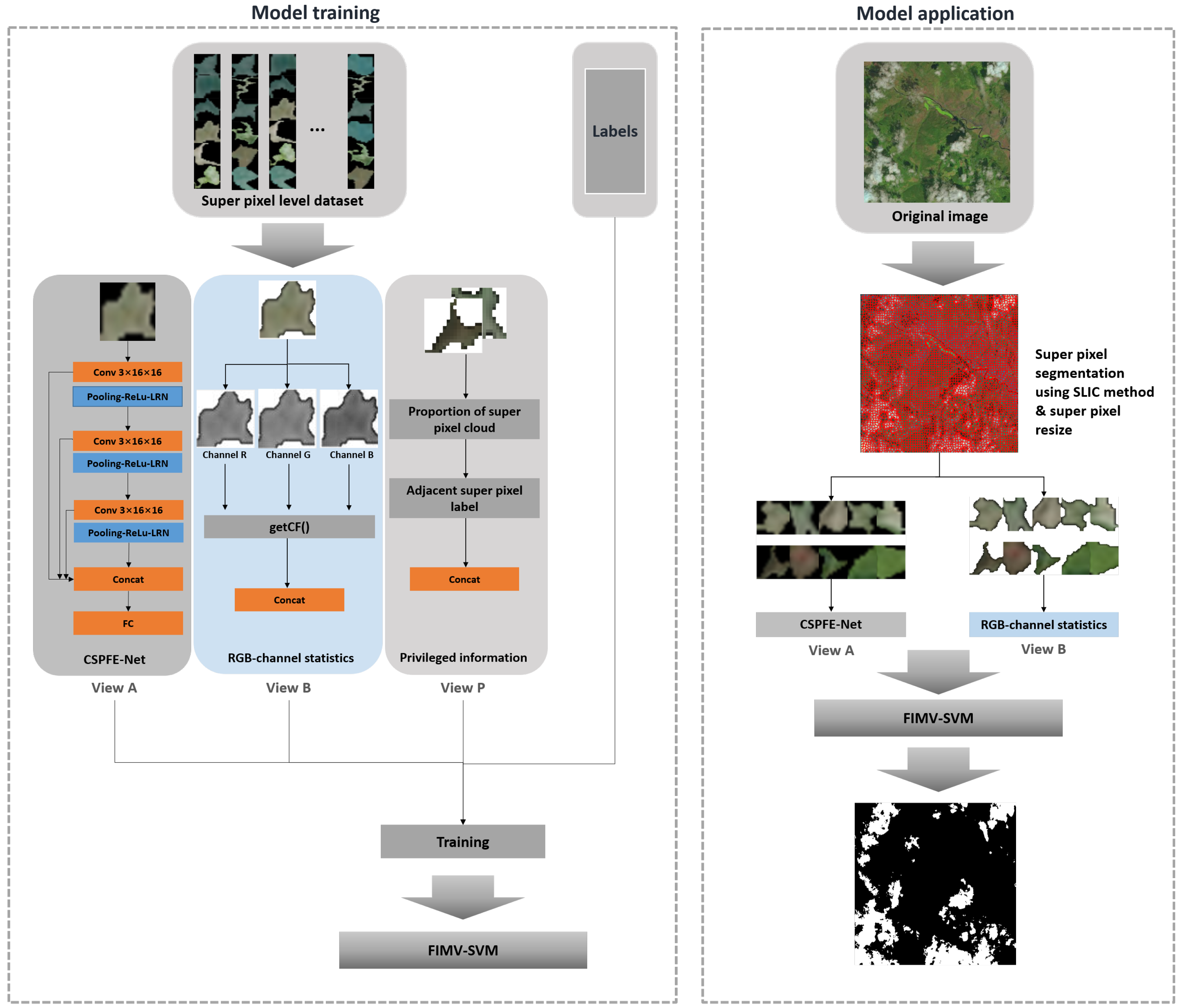

3.1. Multi-View Feature Extraction

3.2. Fusion Information Multi-View SVM Classification Method

3.3. Cloud Detection Model Training and Application Process

| Algorithm 1 QP Algorithm for FIMV-SVM |

| Require: Ensure: Decision functions:

|

4. Experiment

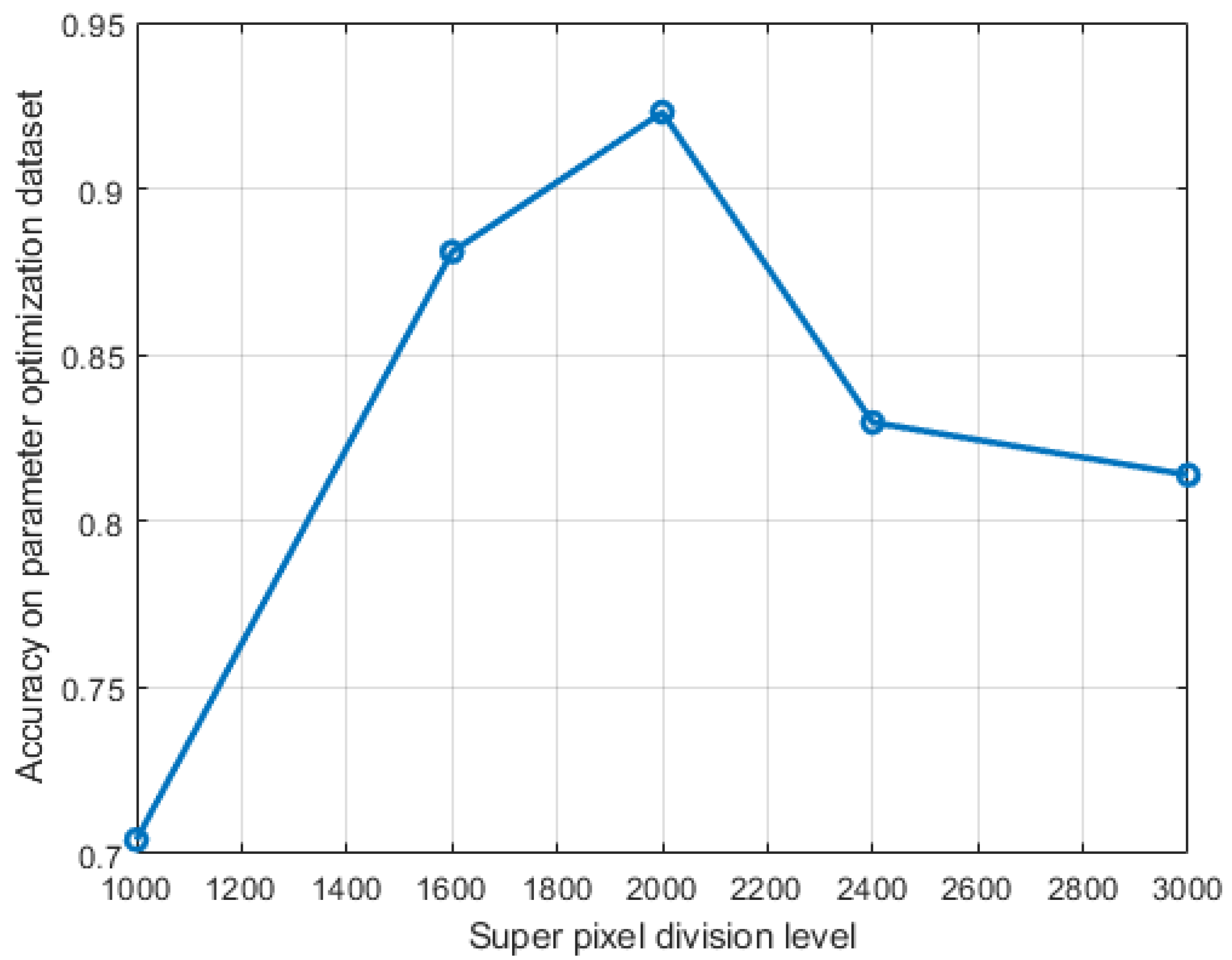

4.1. Parameter Setting

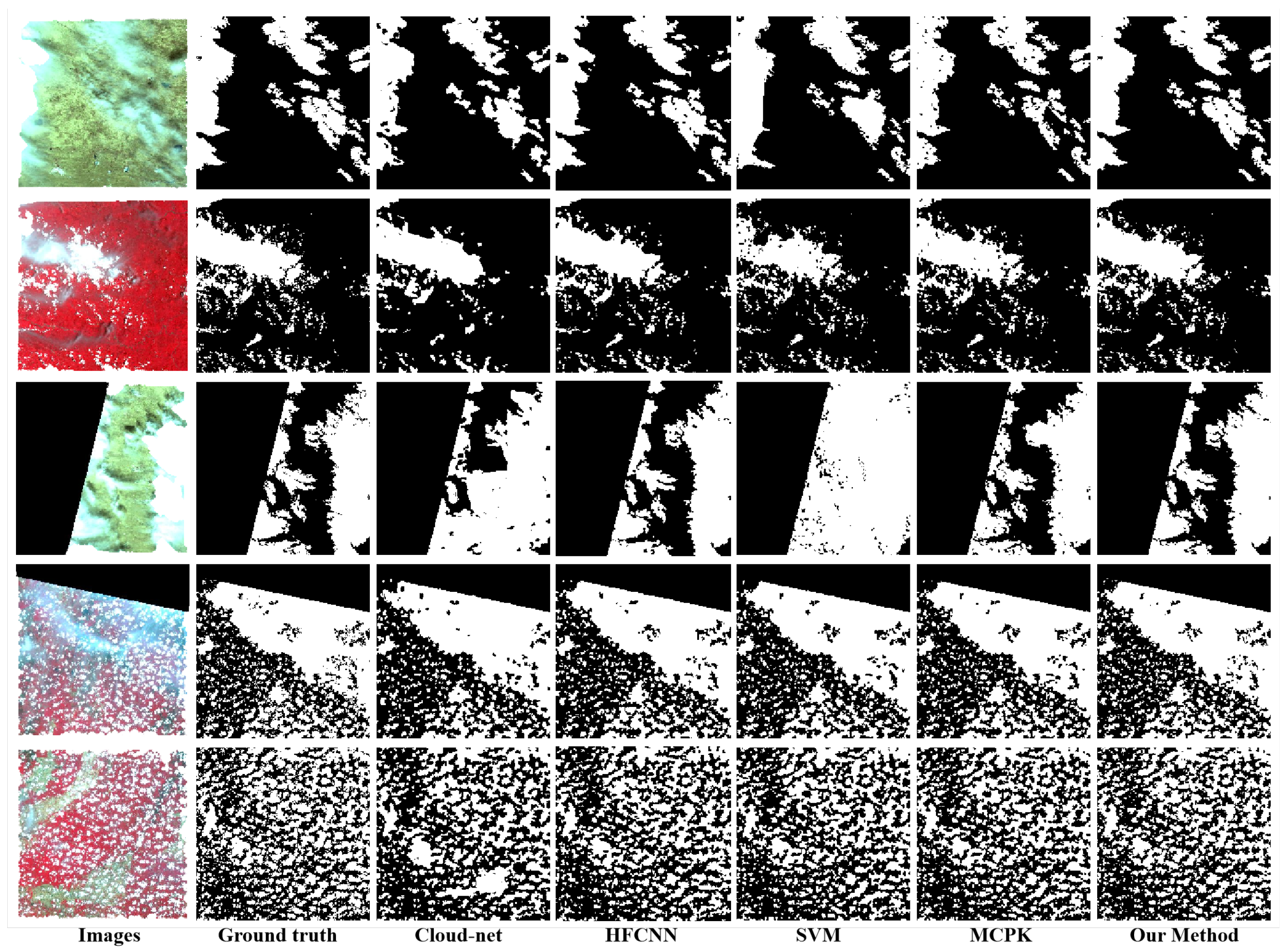

4.2. Visual Performance

4.3. Quantitative Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| SVM | Support vector machine |

| MKL | Multi-kernel learning |

| SMO | Sequential minimum optimization |

| MKNPSVM | Multi-kernel framework with nonparallel support vector machine |

| KCCA | Kernel canonical correlation analysis |

| FCN | Full convolution networks |

| MCPK | Multi-view learning coupling privileged kernel method |

| CNN | Convolutional neural network |

| CSPFE-Net | Cloud super pixel feature extraction network |

| FIMV-SVM | Fusion information multi-view SVM |

| KKT | Karush–Kuhn–Tucke |

| SLIC | Simple linear iterative cluster |

| HFCNN | Hierarchical fusion convolutional neural network |

| RBF | Radial basis function |

References

- Sola, I.; Álvarez-Mozos, J.; González-Audícana, M. Inter-Comparison of Atmospheric Correction Methods on Sentinel-2 Images Applied to Croplands. In Proceedings of the 2018 IEEE International Geoscience and Remote Sensing Symposium, IGARSS 2018, Valencia, Spain, 22–27 July 2018; IEEE: Piscataway Township, NJ, USA, 2018; pp. 5940–5943. [Google Scholar]

- Vermote, E.F.; Saleous, N.; Justice, C.O. Atmospheric correction of MODIS data in the visible to middle infrared: First results. Remote Sens. Environ. 2002, 83, 97–111. [Google Scholar] [CrossRef]

- Irish, R.R.; Barker, J.L.; Goward, S.N.; Arvidson, T. Characterization of the Landsat-7 ETM+ automated cloud-cover assessment (ACCA) algorithm. Photogramm. Eng. Remote Sens. 2006, 72, 1179–1188. [Google Scholar] [CrossRef] [Green Version]

- Zhu, Z.; Woodcock, C.E. Object-based cloud and cloud shadow detection in Landsat imagery. Remote Sens. Environ. 2012, 118, 83–94. [Google Scholar] [CrossRef]

- Bley, S.; Deneke, H. A threshold-based cloud mask for the high-resolution visible channel of Meteosat Second Generation SEVIRI. Atmos. Meas. Tech. 2013, 6, 2713–2723. [Google Scholar] [CrossRef] [Green Version]

- Jedlovec, G.J.; Haines, S.L.; LaFontaine, F.J. Spatial and temporal varying thresholds for cloud detection in GOES imagery. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1705–1717. [Google Scholar] [CrossRef]

- Zhang, Q.; Xiao, C. Cloud detection of RGB color aerial photographs by progressive refinement scheme. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7264–7275. [Google Scholar] [CrossRef] [Green Version]

- Li, P.; Dong, L.; Xiao, H.; Xu, M. A cloud image detection method based on SVM vector machine. Neurocomputing 2015, 169, 34–42. [Google Scholar] [CrossRef]

- Le Hégarat-Mascle, S.; André, C. Use of Markov random fields for automatic cloud/shadow detection on high resolution optical images. ISPRS J. Photogramm. Remote Sens. 2009, 64, 351–366. [Google Scholar] [CrossRef]

- Shao, Z.; Deng, J.; Wang, L.; Fan, Y.; Sumari, N.S.; Cheng, Q. Fuzzy autoencode based cloud detection for remote sensing imagery. Remote Sens. 2017, 9, 311. [Google Scholar] [CrossRef] [Green Version]

- Ishida, H.; Oishi, Y.; Morita, K.; Moriwaki, K.; Nakajima, T.Y. Development of a support vector machine based cloud detection method for MODIS with the adjustability to various conditions. Remote Sens. Environ. 2018, 205, 390–407. [Google Scholar] [CrossRef]

- Yuan, Y.; Hu, X. Bag-of-words and object-based classification for cloud extraction from satellite imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 4197–4205. [Google Scholar] [CrossRef]

- Mohajerani, S.; Krammer, T.A.; Saeedi, P. Cloud detection algorithm for remote sensing images using fully convolutional neural networks. arXiv 2018, arXiv:1810.05782. [Google Scholar]

- Manzo, M.; Pellino, S. Voting in transfer learning system for ground-based cloud classification. Mach. Learn. Knowl. Extr. 2021, 3, 28. [Google Scholar] [CrossRef]

- Mohajerani, S.; Saeedi, P. Cloud-Net: An end-to-end cloud detection algorithm for Landsat 8 imagery. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; IEEE: Piscataway Township, NJ, USA,, 2019; pp. 1029–1032. [Google Scholar]

- Yan, Z.; Yan, M.; Sun, H.; Fu, K.; Hong, J.; Sun, J.; Zhang, Y.; Sun, X. Cloud and cloud shadow detection using multilevel feature fused segmentation network. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1600–1604. [Google Scholar] [CrossRef]

- Tan, K.; Zhang, Y.; Tong, X. Cloud extraction from Chinese high resolution satellite imagery by probabilistic latent semantic analysis and object-based machine learning. Remote Sens. 2016, 8, 963. [Google Scholar] [CrossRef] [Green Version]

- Xie, F.; Shi, M.; Shi, Z.; Yin, J.; Zhao, D. Multilevel cloud detection in remote sensing images based on deep learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 3631–3640. [Google Scholar] [CrossRef]

- Liu, H.; Du, H.; Zeng, D.; Tian, Q. Cloud detection using super pixel classification and semantic segmentation. J. Comput. Sci. Technol. 2019, 34, 622–633. [Google Scholar] [CrossRef]

- Gao, X.; Fan, L.; Xu, H. Multiple rank multi-linear kernel support vector machine for matrix data classification. Int. J. Mach. Learn. Cybern. 2018, 9, 251–261. [Google Scholar] [CrossRef]

- Xu, C.; Tao, D.; Xu, C. A survey on multi-view learning. arXiv 2013, arXiv:1304.5634. [Google Scholar]

- Tang, J.; He, Y.; Tian, Y.; Liu, D.; Kou, G.; Alsaadi, F.E. Coupling loss and self-used privileged information guided multi-view transfer learning. Inf. Sci. 2021, 551, 245–269. [Google Scholar] [CrossRef]

- Appice, A.; Malerba, D. A co-training strategy for multiple view clustering in process mining. IEEE Trans. Serv. Comput. 2015, 9, 832–845. [Google Scholar] [CrossRef]

- Kim, D.; Seo, D.; Cho, S.; Kang, P. Multi-co-training for document classification using various document representations: TF–IDF, LDA, and Doc2Vec. Inf. Sci. 2019, 477, 15–29. [Google Scholar] [CrossRef]

- Cao, L.-L.; Huang, W.B.; Sun, F.-C. Optimization-based extreme learning machine with multi-kernel learning approach for classification. In Proceedings of the 2014 22nd International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; IEEE: Piscataway Township, NJ, USA, 2014; pp. 3564–3569. [Google Scholar]

- Tang, J.; Tian, Y. A multi-kernel framework with nonparallel support vector machine. Neurocomputing 2017, 266, 226–238. [Google Scholar] [CrossRef]

- Chao, G.; Sun, S. Consensus and complementarity based maximum entropy discrimination for multi-view classification. Inf. Sci. 2016, 367, 296–310. [Google Scholar] [CrossRef]

- Tang, J.; Tian, Y.; Liu, D.; Kou, G. Coupling privileged kernel method for multi-view learning. Inf. Sci. 2019, 481, 110–127. [Google Scholar] [CrossRef]

- Deng, N.; Tian, Y.; Zhang, C. Support Vector Machines: Optimization Based Theory, Algorithms, and Extensions; CRC Press: Boca Raton, FL, USA, 2012. [Google Scholar]

- Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC super pixels compared to state-of-the-art super pixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282. [Google Scholar] [CrossRef] [Green Version]

- Grant, M.; Boyd, S.; Ye, Y. CVX: Matlab Software for Disciplined Convex Programming. 2008. Available online: http://cvxr.com/cvx/ (accessed on 15 July 2022).

- Li, Z.; Shen, H.; Li, H.; Xia, G.; Gamba, P.; Zhang, L. Multi-feature combined cloud and cloud shadow detection in GaoFen-1 wide field of view imagery. Remote Sens. Environ. 2017, 191, 342–358. [Google Scholar] [CrossRef] [Green Version]

- Roy, D.P.; Wulder, M.A.; Loveland, T.R.; Woodcock, C.E.; Allen, R.G.; Anderson, M.C.; Helder, D.; Irons, J.R.; Johnson, D.M.; Kennedy, R.; et al. Landsat-8: Science and product vision for terrestrial global change research. Remote Sens. Environ. 2014, 145, 154–172. [Google Scholar] [CrossRef] [Green Version]

| Name | Number of Imgages | Resource Acquisition | Remarks |

|---|---|---|---|

| GF-1_WHU | 108 | http://sendimage.whu.edu.cn/en/mfc-validation-data/ (accessed on 15 July 2022) | GF-1_WHU includes 108 GF-1 wide field of view (WFV) level-2A scenes and its reference cloud and cloud shadow masks. |

| Cloud-38 | 38 | https://www.kaggle.com/datasets/sorour/38cloud-cloud-segmentation-in-satellite-images/download (accessed on 15 July 2022) | There are four spectral channels, namely, red (band 4), green (band 3), blue (band 2), and near-infrared (band 5). |

| Method | Jaccard Index | Pression | Recall | Specificity | Overall Accuracy | F1-Score |

|---|---|---|---|---|---|---|

| Cloud-Net [15] | 81.93 ± 7.22 | 87.03 ± 5.48 | 93.49 ± 4.36 | 82.86 ± 4.34 | 88.46 ± 3.68 | 91.21 ± 4.46 |

| HFCNN [19] | 87.94 ± 5.12 | 91.87 ± 4.05 | 96.02 ± 5.13 | 91.28 ± 3.74 | 92.79 ± 5.04 | 94.36 ± 4.76 |

| SVM | 67.12 ± 3.77 | 71.36 ± 5.14 | 92.13 ± 5.49 | 72.56 ± 4.87 | 78.85 ± 3.24 | 79.62 ± 3.64 |

| MCPK | 85.32 ± 4.19 | 87.93 ± 5.33 | 96.07 ± 3.96 | 85.77 ± 5.08 | 90.76 ± 4.39 | 92.43 ± 3.13 |

| Our method | 91.69 ± 1.34 | 94.67 ± 2.01 | 97.38 ± 1.65 | 93.04 ± 2.12 | 96.31 ± 1.46 | 96.03 ± 1.72 |

| Method | Jaccard Index | Pression | Recall | Specificity | Overall Accuracy | F1-Score |

|---|---|---|---|---|---|---|

| Cloud-Net [15] | 87.13 ± 5.14 | 91.22 ± 4.37 | 95.94 ± 3.89 | 89.03 ± 4.34 | 93.14 ± 5.36 | 92.87 ± 4.68 |

| HFCNN [19] | 92.04 ± 3.69 | 92.95 ± 3.98 | 98.25 ± 5.01 | 92.24 ± 4.36 | 95.86 ± 5.23 | 94.21 ± 3.67 |

| SVM | 79.02 ± 4.37 | 82.83 ± 5.04 | 92.87 ± 4.76 | 82.78 ± 3.85 | 86.74 ± 4.19 | 87.86 ± 3.92 |

| MCPK | 87.45 ± 3.94 | 92.32 ± 4.18 | 96.83 ± 5.03 | 88.34 ± 4.76 | 92.89 ± 5.14 | 94.03 ± 3.87 |

| Our method | 94.87 ± 1.24 | 95.28 ± 1.39 | 98.74 ± 2.07 | 93.48 ± 2.11 | 97.28 ± 1.47 | 97.37 ± 1.86 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hao, Q.; Zheng, W.; Xiao, Y. Fusion Information Multi-View Classification Method for Remote Sensing Cloud Detection. Appl. Sci. 2022, 12, 7295. https://doi.org/10.3390/app12147295

Hao Q, Zheng W, Xiao Y. Fusion Information Multi-View Classification Method for Remote Sensing Cloud Detection. Applied Sciences. 2022; 12(14):7295. https://doi.org/10.3390/app12147295

Chicago/Turabian StyleHao, Qi, Wenguang Zheng, and Yingyuan Xiao. 2022. "Fusion Information Multi-View Classification Method for Remote Sensing Cloud Detection" Applied Sciences 12, no. 14: 7295. https://doi.org/10.3390/app12147295

APA StyleHao, Q., Zheng, W., & Xiao, Y. (2022). Fusion Information Multi-View Classification Method for Remote Sensing Cloud Detection. Applied Sciences, 12(14), 7295. https://doi.org/10.3390/app12147295