An Experimental Demonstration of MIMO C-OOK Scheme Based on Deep Learning for Optical Camera Communication System

Abstract

1. Introduction

- Visible light waves do not harm the human body [3], whereas RF waves may have negative effects on human health and can also degrade system performance through electromagnetic interference (EMI).

- The visible light spectrum offers a much larger bandwidth than the RF spectrum (more than 1000 times wider).

- Visible light links are safer and more efficient when a line-of-sight path is available over the optical channel.

Because of these benefits, several organizations have proposed research funding to develop and investigate optical wireless communication (OWC) systems. OWC, together with its protocol, was introduced in the Institute of Electrical and Electronics Engineers (IEEE) 802.15.7-2011 standard [4]. The IEEE 802.15.7-2018 standard [5], published in 2018, added the following four modes to the previous standard:

- Visible light communication (VLC): The VLC modes specified in the IEEE 802.15.7-2011 standard [4].

- Optical camera communication (OCC): Image sensors are used as receivers to decode OCC data from a variety of LED sources.

- High-speed LiFi: High-rate photodiode modulation techniques increase the data rate above 1 Mbps.

- Photodiode identification: Photodiode-based techniques transmit data at a low rate (below 1 Mbps).

2. The Contribution of the Present Study

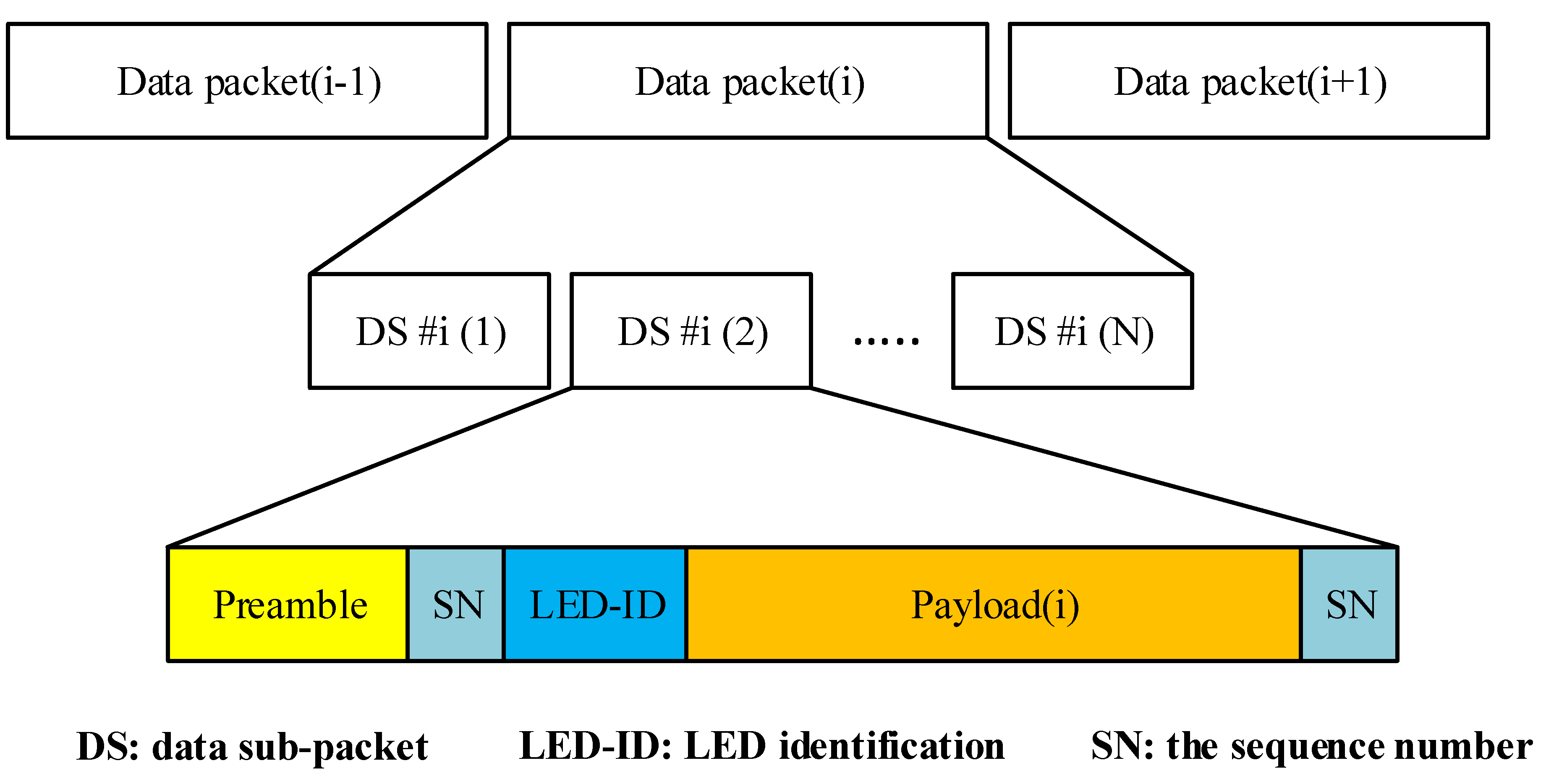

- Support for frame rate variations: Frame rate variation significantly affects the OCC system and causes packet losses at the receiver. It is commonly assumed that a camera's frame rate stays constant at its nominal specification (e.g., 30 or 1000 fps), but because technical parameters differ from camera to camera, synchronizing the transmitter and receiver can be difficult. A sequence number (SN) is therefore inserted into each packet; it improves system performance by letting the receiver identify whether the camera frame rate is higher than the transmitter's packet rate.
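The SN check described above can be sketched as follows. This is a minimal illustration, not the authors' receiver: the function name `classify_sampling` and its input format (one decoded SN per frame, `None` when no packet was decoded) are hypothetical, and SN wrap-around is ignored. With the 60 fps camera and the 30 packet/s transmitter listed in the parameter table, each packet would typically appear in about two consecutive frames, i.e., oversampling.

```python
from typing import List, Optional


def classify_sampling(frame_sns: List[Optional[int]]) -> str:
    """Classify the camera/transmitter rate relationship from per-frame SNs.

    frame_sns: SN decoded from each consecutive frame, or None if the frame
    held no decodable packet. Hypothetical format; SNs are assumed to grow by
    one per packet without wrap-around inside this short window.
    """
    sns = [sn for sn in frame_sns if sn is not None]
    pairs = list(zip(sns, sns[1:]))
    repeats = sum(1 for a, b in pairs if b == a)      # same packet seen twice
    gaps = sum(1 for a, b in pairs if b > a + 1)      # packets never captured
    if repeats and not gaps:
        return "oversampling"   # camera frame rate above packet rate
    if gaps and not repeats:
        return "undersampling"  # camera frame rate below packet rate
    if not repeats and not gaps:
        return "matched"
    return "mixed"              # frame rate drifting around the packet rate
```

For example, `classify_sampling([0, 0, 1, 1, 2, 2])` returns `"oversampling"`, while `classify_sampling([0, 2, 4])` returns `"undersampling"`.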

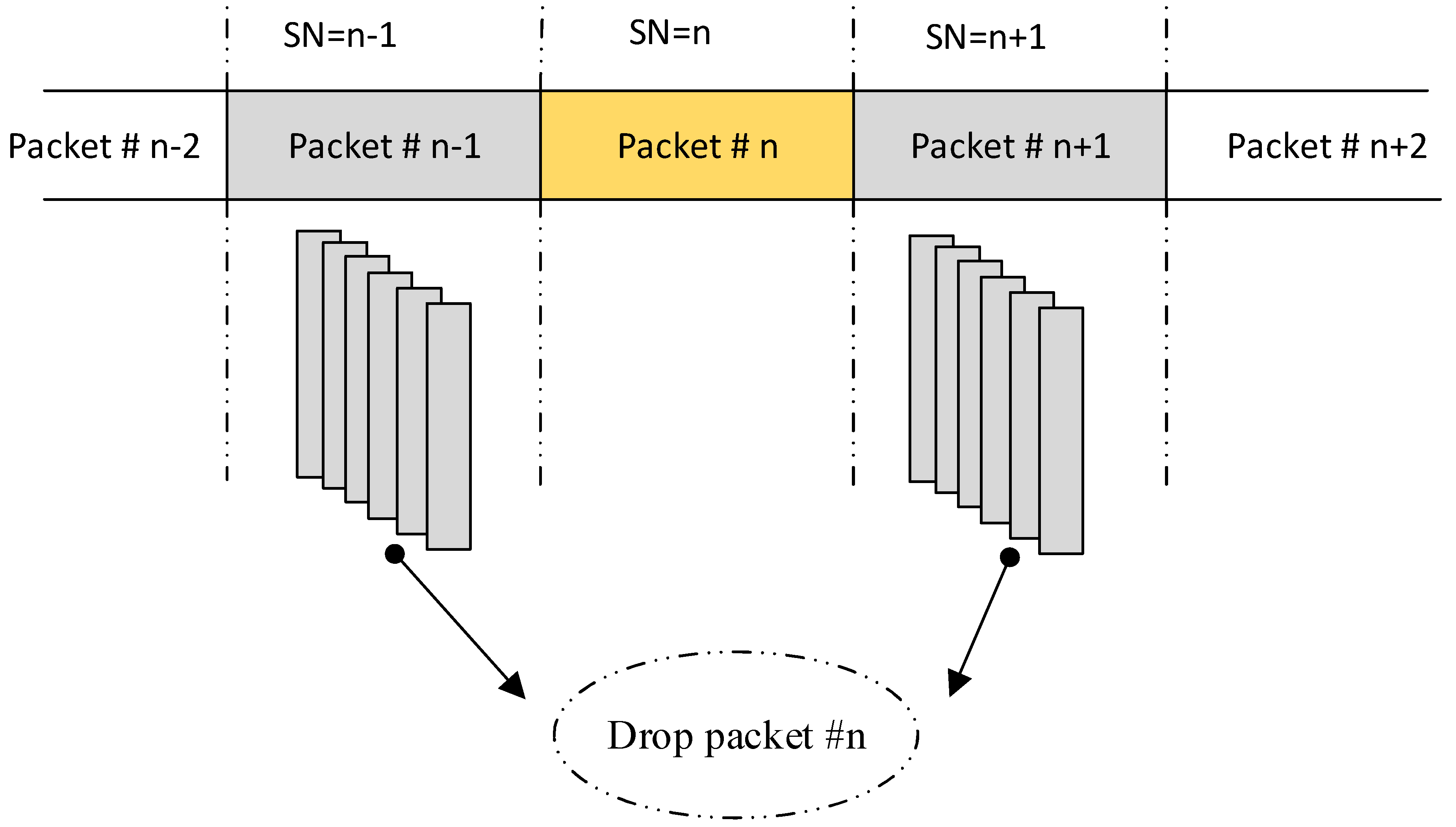

- Detection of lost packets: To detect missing packets, we compare the SNs decoded from two consecutive images; when the difference between them exceeds a specified value, the packets in between were not captured by the camera, so each missing packet can be identified.
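A minimal sketch of this gap test, assuming a hypothetical 4-bit SN field that wraps around modulo 16 (the SN length used in the experiment is not stated here); `missing_between` is an illustrative name.

```python
from typing import List

SN_BITS = 4              # hypothetical SN field width
SN_MOD = 1 << SN_BITS    # SNs wrap around modulo 2**SN_BITS = 16


def missing_between(prev_sn: int, curr_sn: int) -> List[int]:
    """Return the SNs of packets lost between two consecutively decoded SNs."""
    step = (curr_sn - prev_sn) % SN_MOD  # forward distance, wrap-aware
    if step <= 1:
        # 0: same packet captured again (oversampling); 1: next packet, none lost
        return []
    return [(prev_sn + k) % SN_MOD for k in range(1, step)]
```

For example, `missing_between(3, 6)` returns `[4, 5]` and `missing_between(14, 1)` returns `[15, 0]`. If more than 15 consecutive packets were lost, the modulo arithmetic could no longer distinguish them, so the SN width has to be chosen with the expected frame-rate variation in mind.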

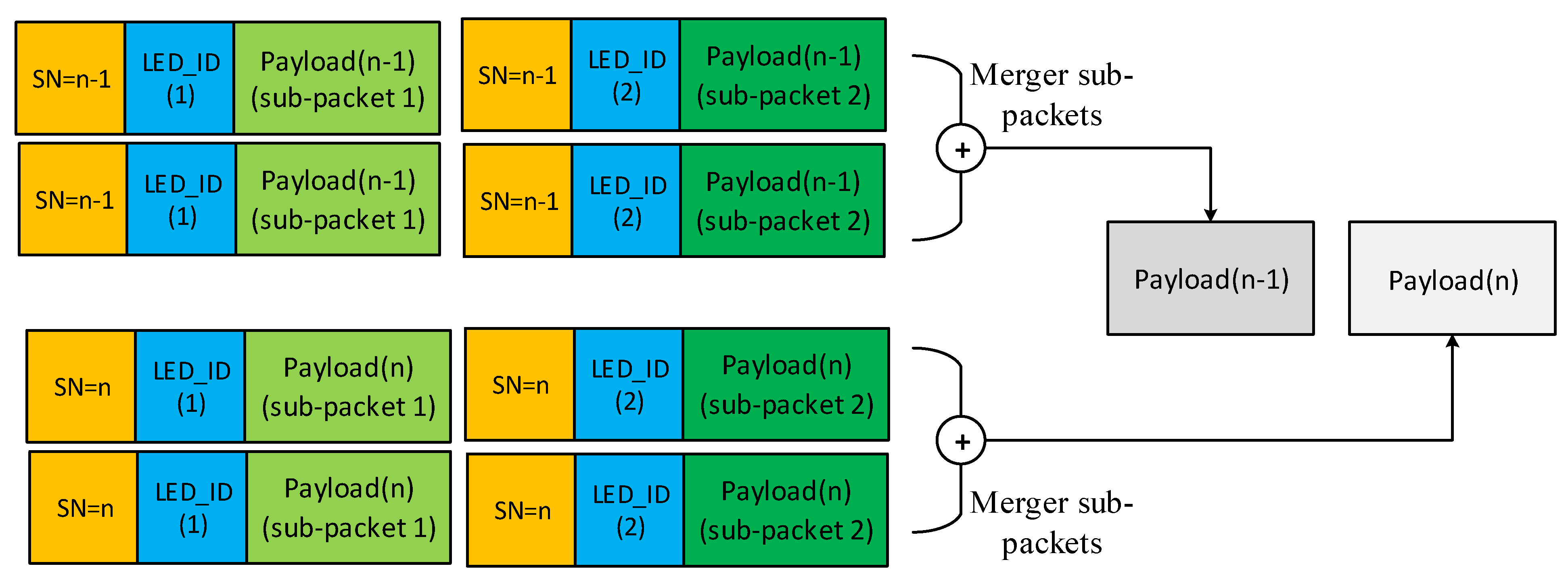

- Data-merging algorithm: In our experiment, the SN carried in each packet is used to determine the exact order of the packets, so the data of each sequence can be merged correctly.
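One way to realize the merge step is to key the captured packets by SN, drop the duplicates produced by oversampling, and output payloads in SN order. The sketch below assumes packets arrive as hypothetical `(sn, payload)` pairs and that SNs do not wrap within one batch; it is an illustration, not the authors' algorithm.

```python
from typing import Dict, List, Tuple


def merge_packets(captured: List[Tuple[int, bytes]]) -> List[bytes]:
    """Merge packets captured over several frames into one ordered list.

    captured: (sn, payload) pairs in capture order. Duplicate SNs from
    oversampling are dropped, keeping the first copy seen.
    """
    by_sn: Dict[int, bytes] = {}
    for sn, payload in captured:
        by_sn.setdefault(sn, payload)            # ignore later duplicates
    return [by_sn[sn] for sn in sorted(by_sn)]   # restore transmit order
```

Keeping the first copy is a simplification; a receiver could instead keep the copy that passes the Reed–Solomon check.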

- Improved data rate: With deep learning, many LEDs can be detected with higher accuracy than with RoI algorithms, including at long range and in mobile environments; detecting more LEDs allows more parallel data streams and therefore a higher data rate.
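Once a detector (for example, the CNN proposed here) has localized the LEDs, each LED can be read as a separate stream. A minimal sketch of that hand-off, assuming the detector returns one bounding box per LED in a hypothetical `(x, y, width, height)` pixel format; ordering the boxes left to right is an illustrative convention for assigning stream indices, not necessarily the authors' choice.

```python
from typing import List, Tuple

import numpy as np

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels, hypothetical


def led_regions(frame: np.ndarray, boxes: List[Box]) -> List[np.ndarray]:
    """Crop one region per detected LED, ordered left to right.

    Sorting by x gives each LED a stable index, so stream k is always read
    from the k-th LED from the left.
    """
    ordered = sorted(boxes, key=lambda b: b[0])
    return [frame[y:y + h, x:x + w] for x, y, w, h in ordered]
```

Each crop can then be passed to a per-LED decoder.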

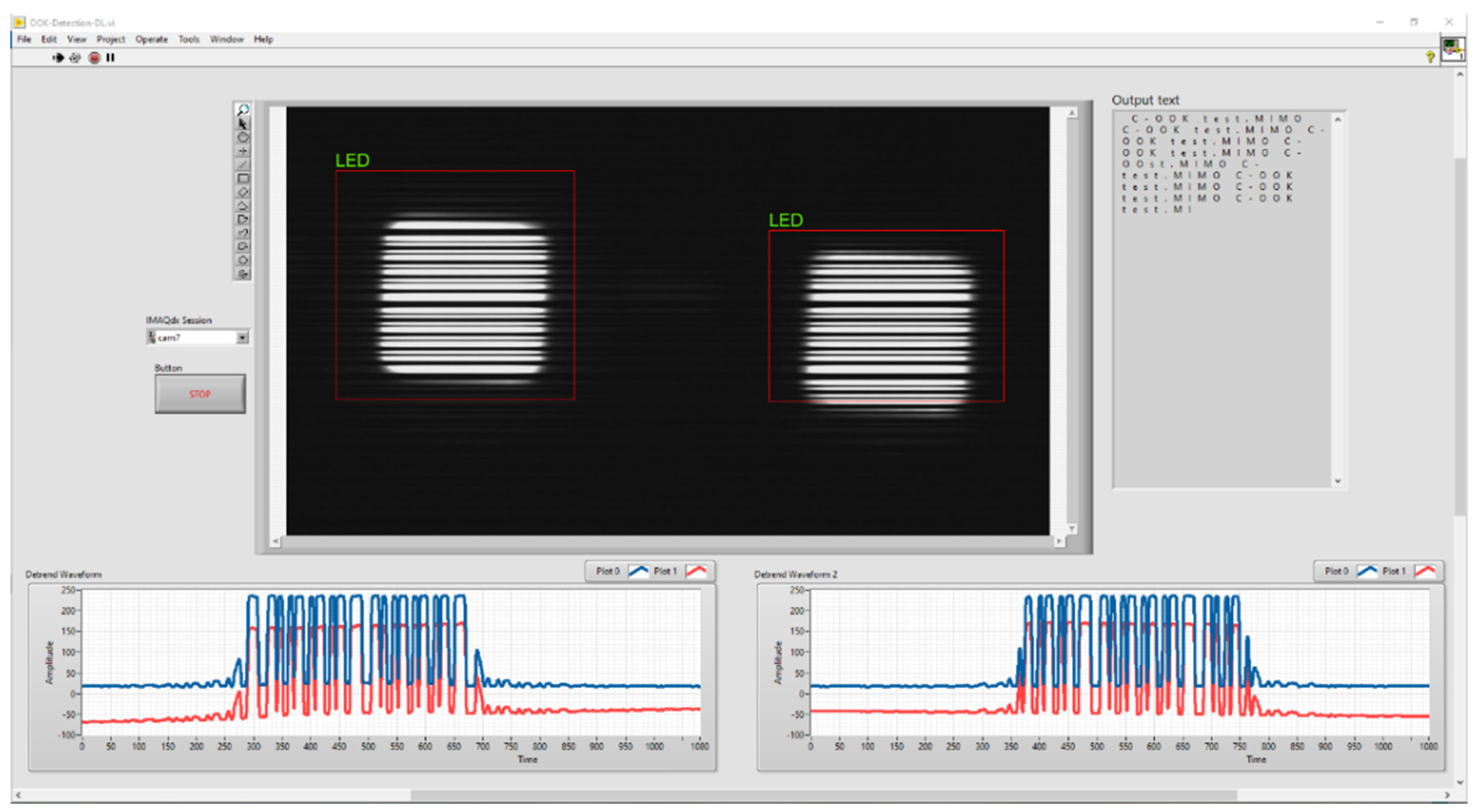

- Mobility support: The rolling-shutter-based C-OOK scheme is sensitive to mobility, and under motion RoI algorithms have more difficulty detecting multiple LEDs than a CNN does. With a rolling-shutter camera, each LED appears in the image as a pattern of black and white stripes, so RoI algorithms cannot clearly determine how many LEDs are present. We therefore proposed a neural network to improve the performance of the OCC system.
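To show how the stripes carry data, the sketch below decodes one LED region with a simple midpoint threshold. It is a conventional-style threshold decoder written for illustration, not the CNN decoder proposed here, and it ignores C-OOK framing, RLL decoding, and blooming. The name `stripes_to_bits` and the parameter `rows_per_bit` (image rows per OOK symbol, which depends on the row readout time and the optical clock rate) are assumptions.

```python
import numpy as np


def stripes_to_bits(roi: np.ndarray, rows_per_bit: int) -> np.ndarray:
    """Threshold-decode rolling-shutter stripes inside one LED region.

    roi: 2-D grayscale crop of a single LED (rows x columns).
    rows_per_bit: image rows spanned by one OOK symbol (assumed known).
    """
    profile = roi.mean(axis=1)                       # one intensity per image row
    threshold = (profile.max() + profile.min()) / 2  # midpoint of bright and dark
    n_bits = len(profile) // rows_per_bit
    # Sample each symbol at its centre row to stay away from stripe edges.
    centres = np.arange(n_bits) * rows_per_bit + rows_per_bit // 2
    return (profile[centres] > threshold).astype(np.uint8)
```

Because a single global threshold and fixed symbol positions are used, motion blur or partial occlusion of the stripes degrades this decoder quickly, which is the weakness the learned approach targets.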

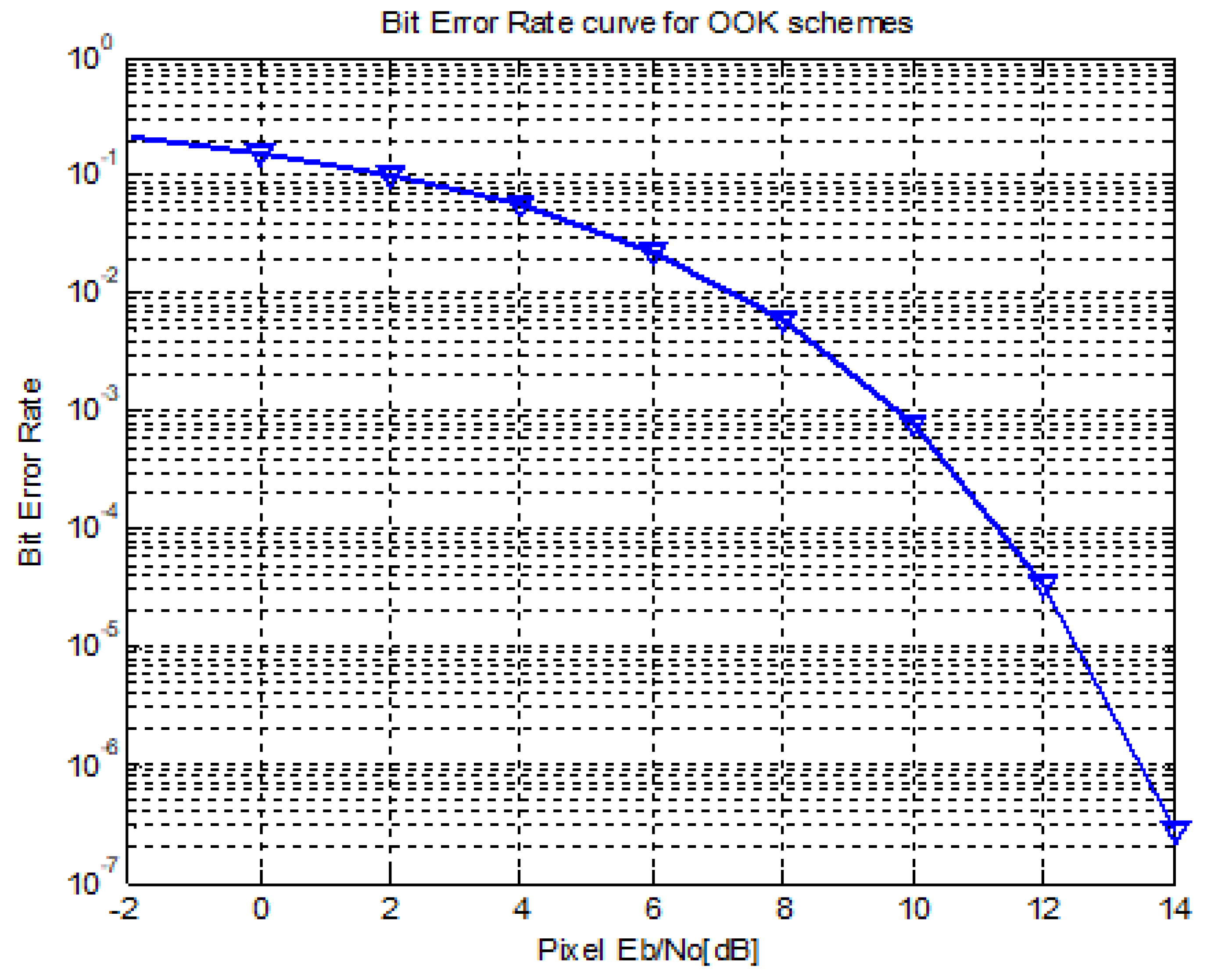

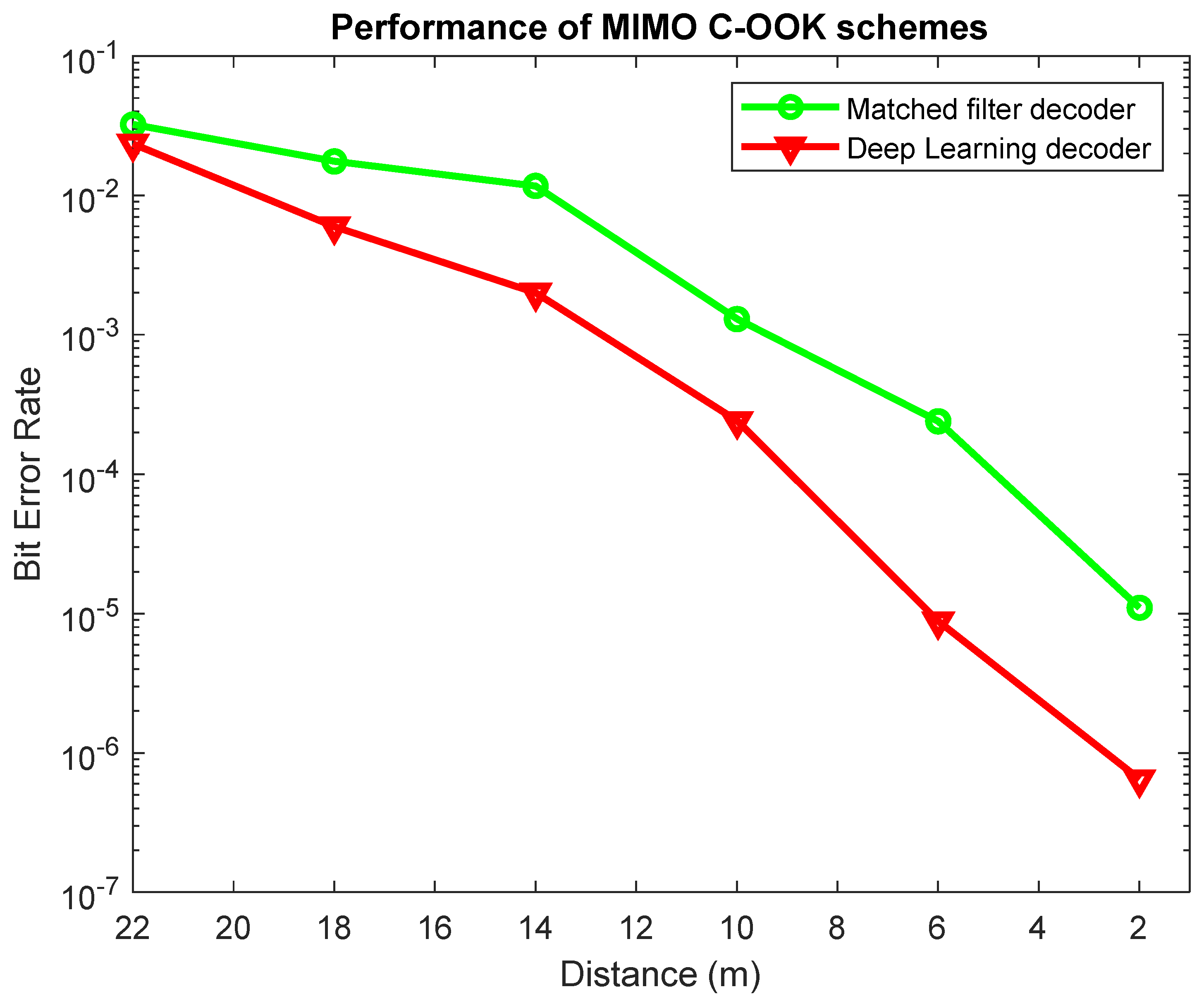

- Reduced BER: The proposed decoder achieves a lower BER than the conventional decoder. Because the training dataset was collected under several conditions (different distances, mobility, and several cameras), the deep-learning decoder outperforms the conventional decoding method. Section 4 compares the conventional decoder with our deep-learning-based technique.
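The BER comparison reduces to counting bit errors against a known transmitted sequence. A minimal sketch; the function name is illustrative, and both the conventional decoder and the deep-learning decoder would be evaluated against the same reference bits.

```python
import numpy as np
import numpy.typing as npt


def bit_error_rate(sent: npt.ArrayLike, received: npt.ArrayLike) -> float:
    """BER = number of bit errors / number of transmitted bits."""
    tx = np.asarray(sent, dtype=np.uint8)
    rx = np.asarray(received, dtype=np.uint8)
    if tx.shape != rx.shape:
        raise ValueError("sent and received must have the same length")
    return float(np.count_nonzero(tx != rx)) / tx.size
```

For example, `bit_error_rate([1, 0, 1, 1], [1, 1, 1, 0])` returns `0.5`.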

3. System Architecture

3.1. Deep Learning for Detecting and Tracking LEDs

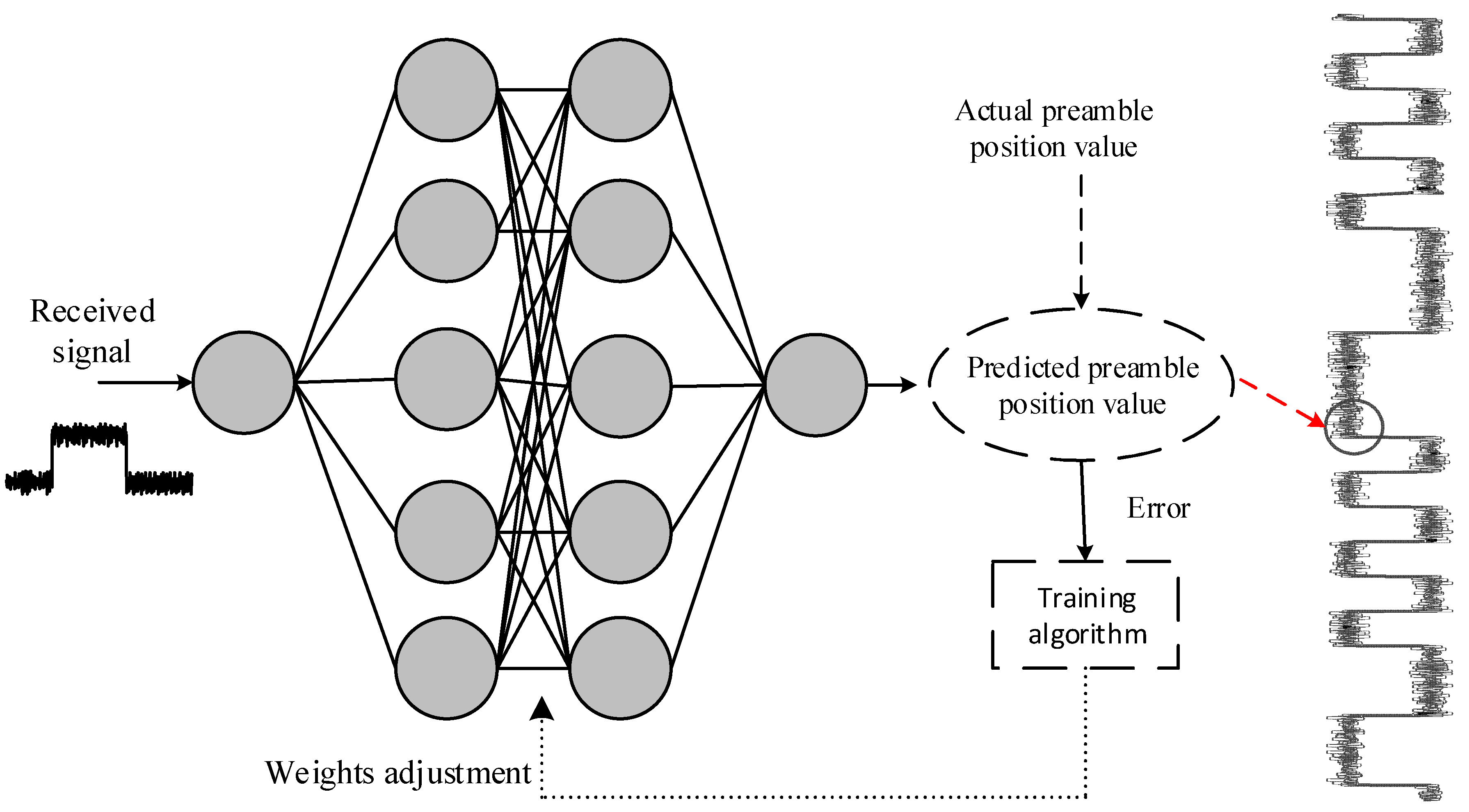

3.2. Decoding Based on Deep Learning

3.3. Channel Coding

4. Implementation

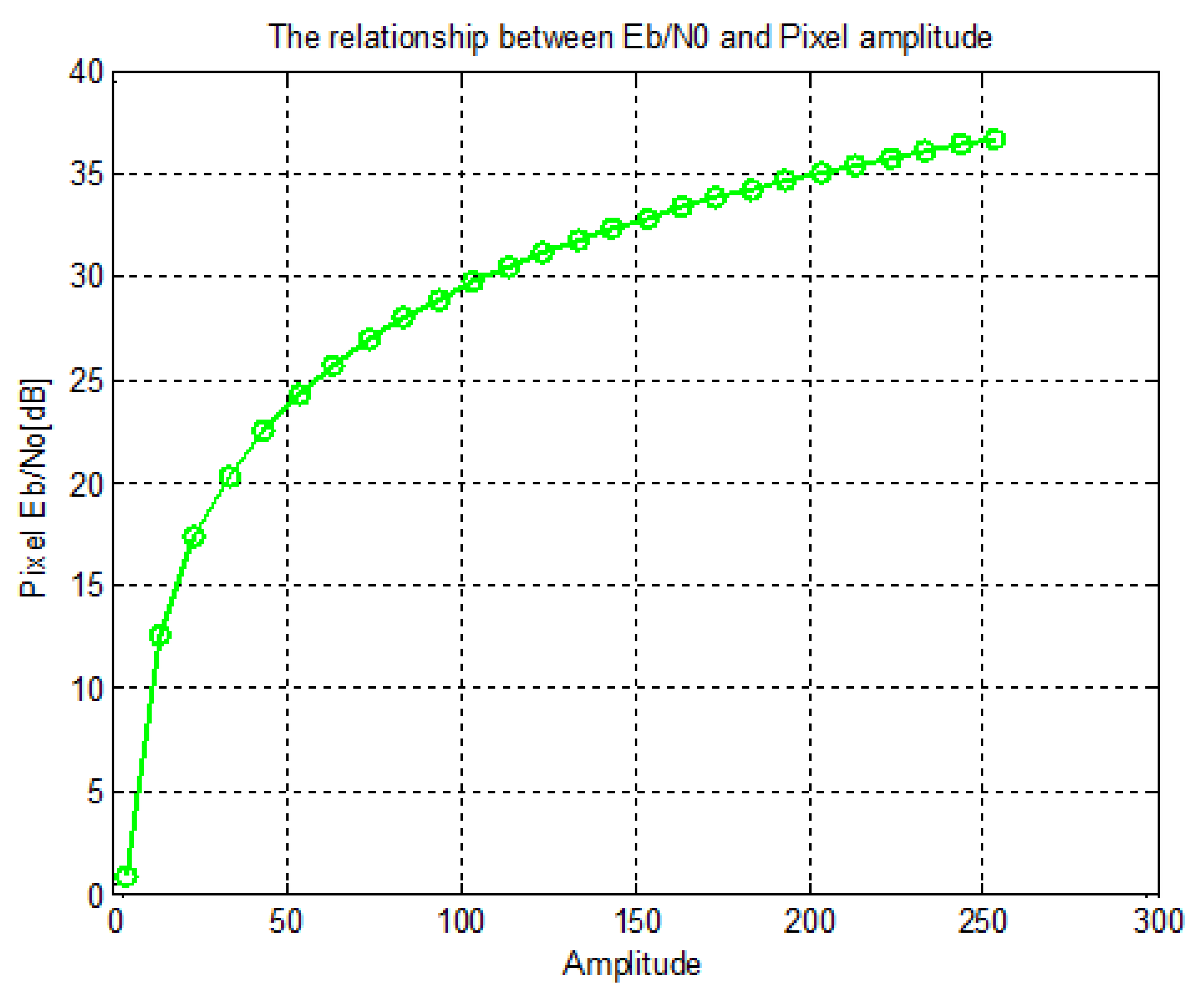

4.1. The Pixel Energy Per Bit to the Spectral Noise Density Ratio Computation and Noise Modeling

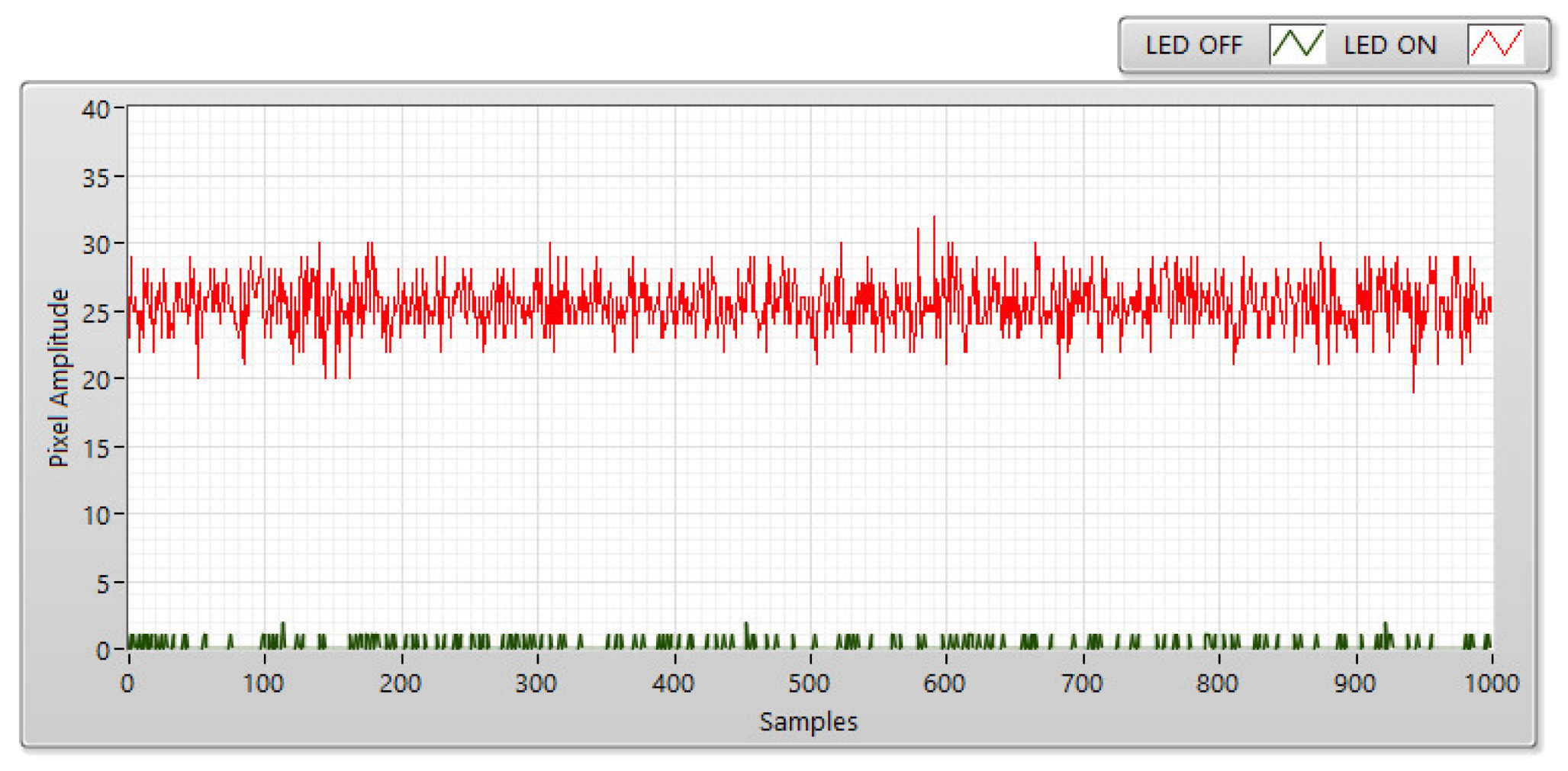

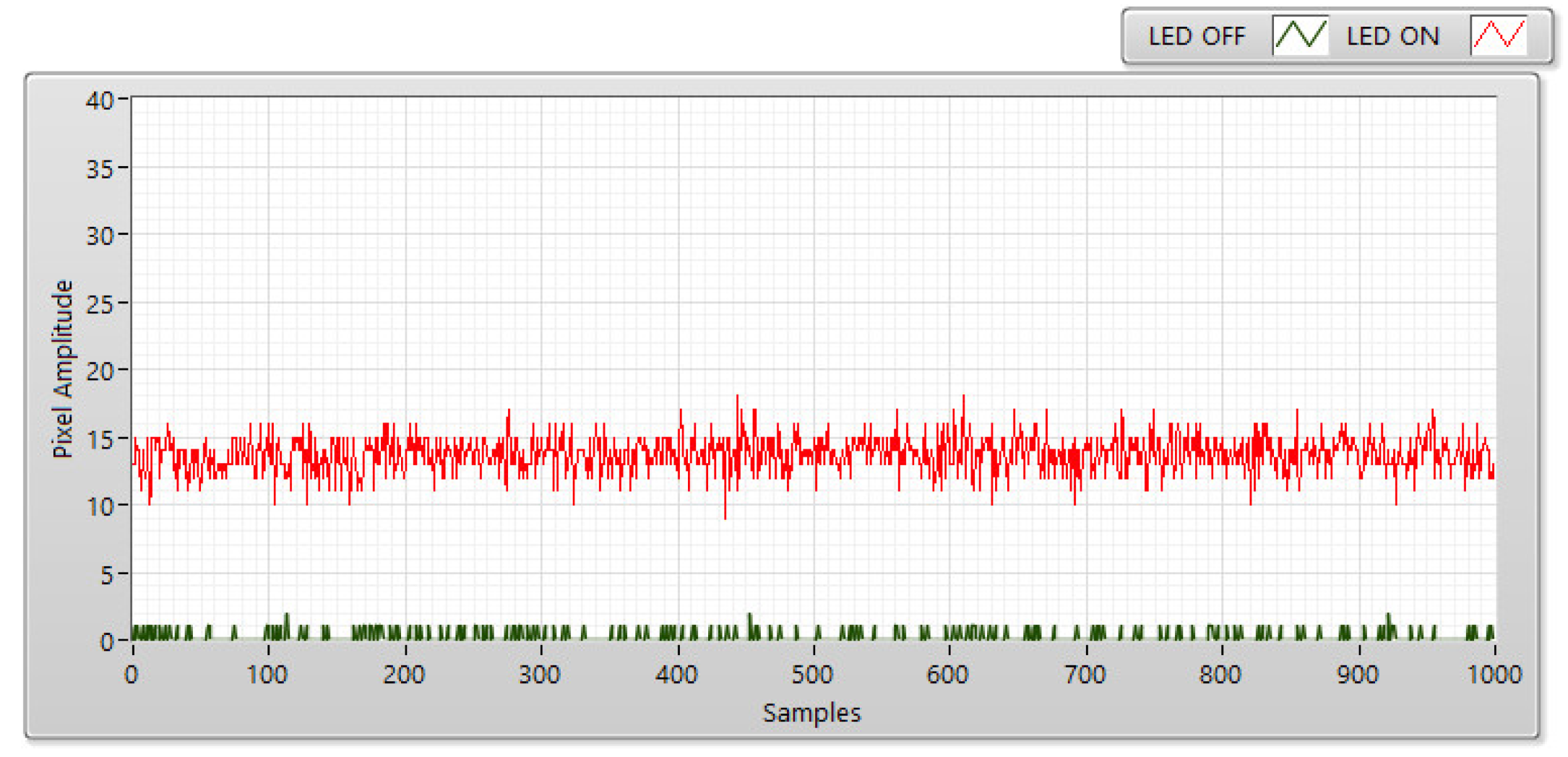

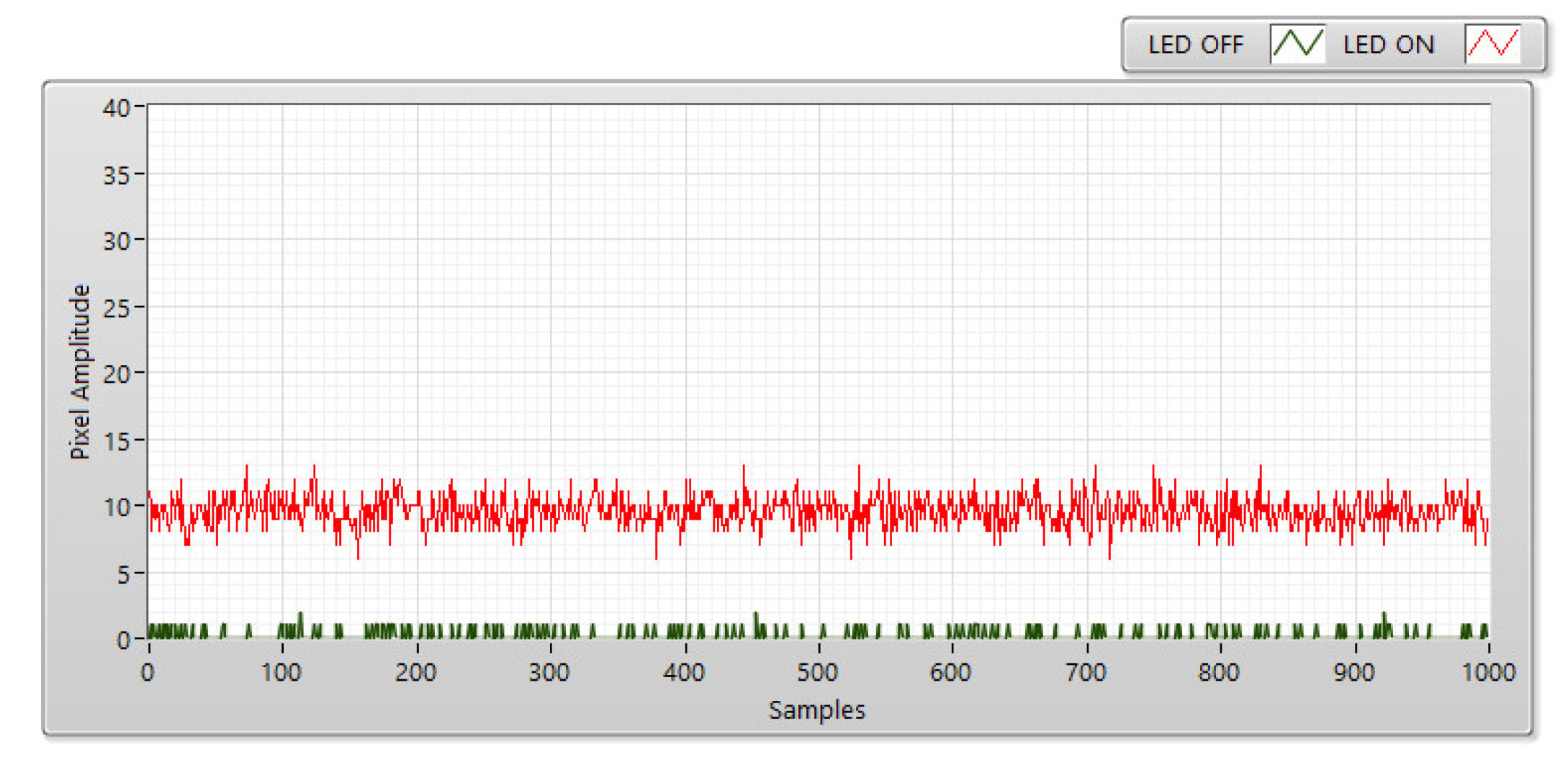

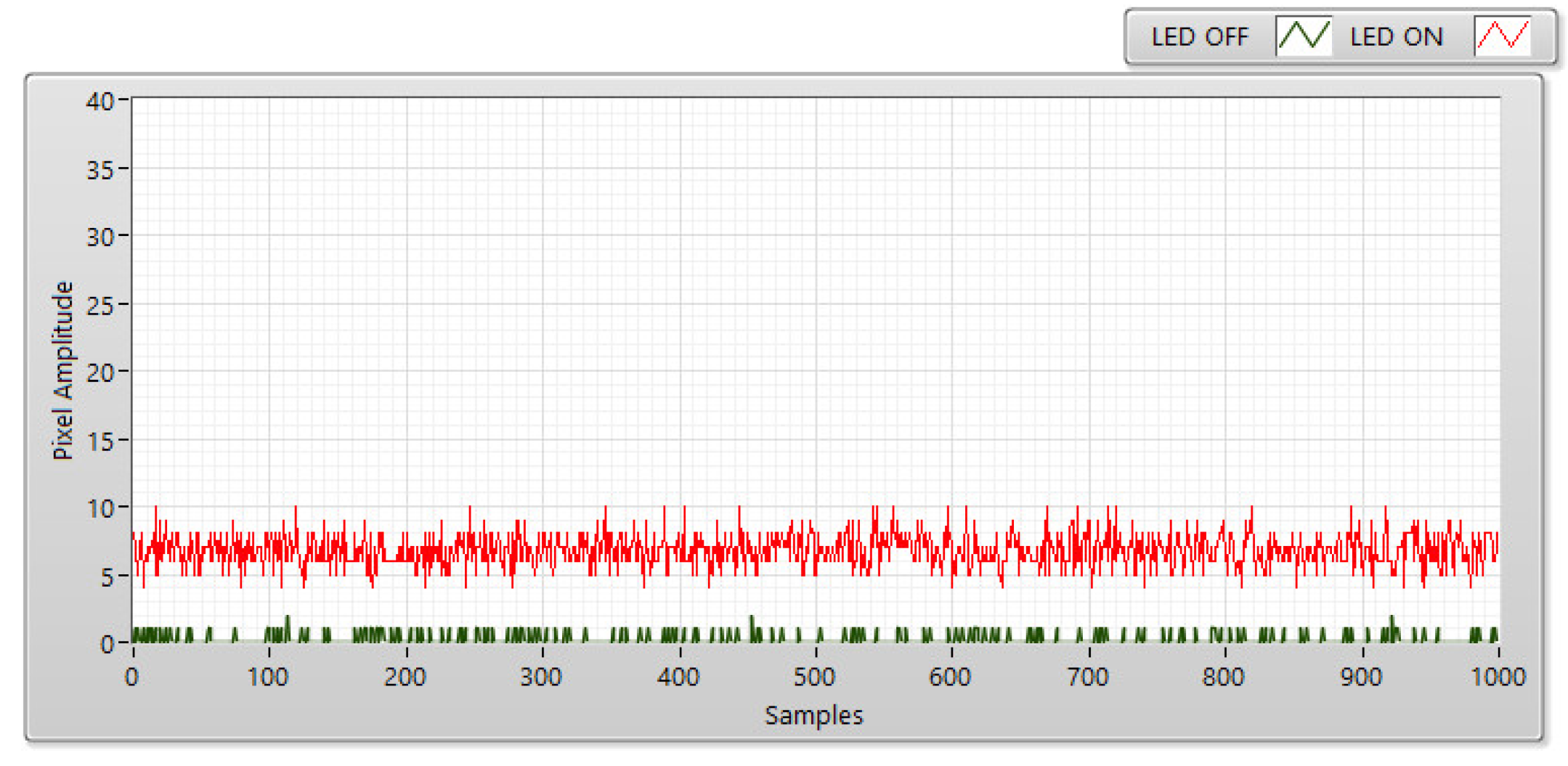

4.2. The Experimental SNR Measurement

4.3. BER Estimation for the Optical on–off Keying Modulation

4.4. Implementation Results

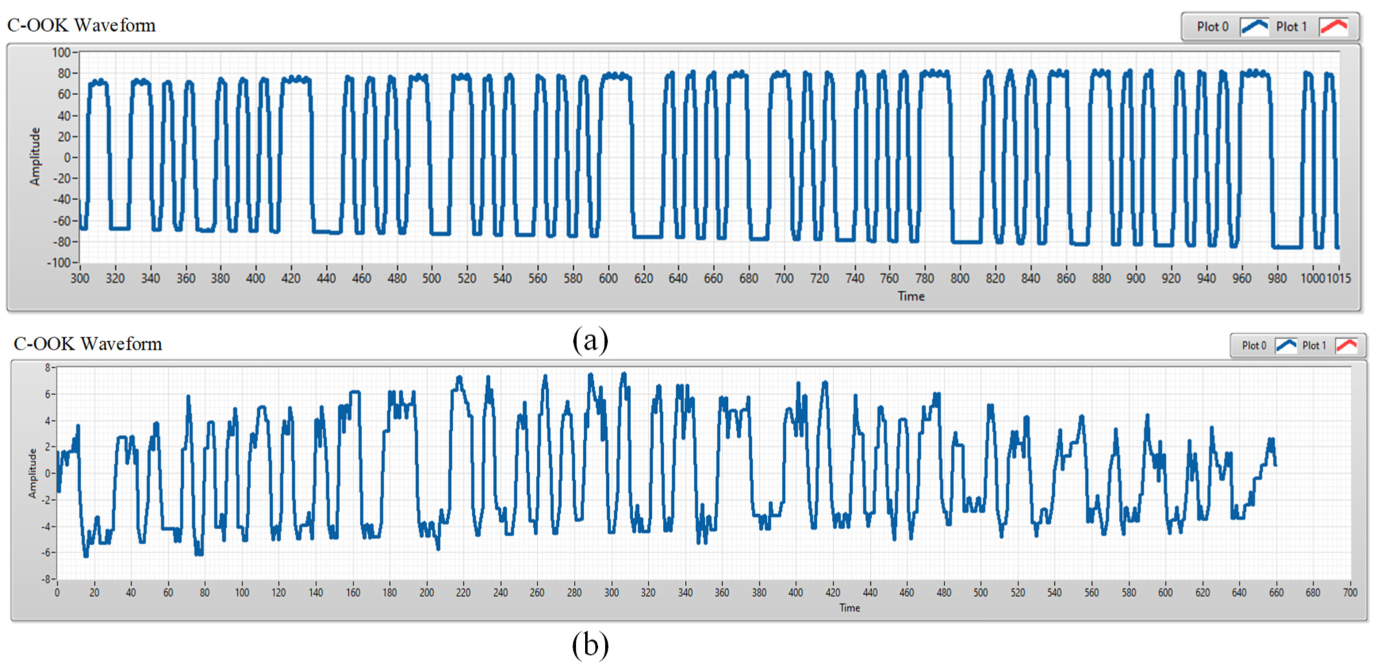

4.4.1. Oversampling

4.4.2. Undersampling

4.4.3. Implementation

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

- You, X.; Wang, C.X.; Huang, J.; Gao, X.; Zhang, Z.; Wang, M.; Huang, Y.; Zhang, C.; Jiang, Y.; Wang, J.; et al. Towards 6G wireless communication networks: Vision, enabling technologies, and new paradigm shifts. Sci. China Inf. Sci. 2020, 64, 110301.

- Kim, J.H.; Lee, J.K.; Kim, H.G.; Kim, K.B.; Kim, H.R. Possible effects of radiofrequency electromagnetic field exposure on central nerve system. Biomol. Ther. 2019, 27, 265–275.

- Sridhar, R.; Richard, D.; Kyu, L.S. IEEE 802.15.7 visible light communication: Modulation and dimming support. IEEE Commun. Mag. 2012, 17, 2047–2077.

- IEEE std 802.15.7-2011; IEEE Standard for Local and Metropolitan Area Networks—Part 15.7: Short-Range Wireless Optical Communication Using Visible Light. IEEE-SA: Piscataway, NJ, USA, 2011.

- IEEE std 802.15.7-2018; IEEE Standard for Local and Metropolitan Area Networks—Part 15.7: Short-Range Optical Wireless Communications. IEEE-SA: Piscataway, NJ, USA, 2018.

- Nikola, S.; Volker, J.; Yeong Min, J.; John, L.Q. An Overview on High-Speed Optical Wireless/Light Communications. Available online: https://mentor.ieee.org/802.11/dcn/17/11-17-0962-02-00lc-an-overviewon-high-speed-optical-wireless-light-communications.pdf (accessed on 2 February 2022).

- Nguyen, H.; Jang, Y.M. Design of MIMO C-OOK using Matched filter for Optical Camera Communication System. In Proceedings of the 2021 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Jeju, Korea, 13–16 April 2021.

- Nagura, T.; Yamazato, T.; Katayama, M.; Yendo, T.; Fujii, T.; Okada, H. Improved decoding methods of visible light communication system for its using LED array and high-speed camera. In Proceedings of the IEEE 71st Vehicular Technology Conference (VTC), Taipei, Taiwan, 16–19 May 2010; pp. 1–5.

- Tan, K.S.; Hinberg, I.; Wadhwani, J. Electromagnetic interference in medical devices: Health Canada’s past and current perspectives and activities. In Proceedings of the IEEE International Symposium on Electromagnetic Compatibility, Montreal, QC, Canada, 13–17 August 2001; pp. 1283–1284.

- Masao, T.; Soichi, W. Biological and health effects of exposure to electromagnetic field from mobile communications system. IATSS Res. J. 2001, 5, 40–50.

- Ong, Z.; Rachim, V.P.; Chung, W.Y. Novel electromagnetic-interference-free indoor environment monitoring system by mobile camera-image-sensor-based VLC. IEEE Photonics J. 2017, 9, 1–11.

- Haas, H.; Yin, L.; Wang, Y.; Chen, C. What is LiFi? J. Lightwave Technol. 2015, 34, 1533–1544.

- Ali, A.Y.; Zhang, Z.; Zong, B. Pulse position and shape modulation for visible light communication system. In Proceedings of the International Conference on Electromagnetics in Advanced Applications, Palm Beach, FL, USA, 3–8 August 2014; pp. 546–549.

- Videv, S.; Haas, H. Practical space shift keying VLC system. In Proceedings of the IEEE Wireless Communications and Networking Conference, Istanbul, Turkey, 6–9 April 2014; pp. 405–409.

- Deng, P.; Kavehrad, M. Real-time software-defined single-carrier QAM MIMO visible light communication system. In Proceedings of the Integrated Communications Navigation and Surveillance (ICNS), Herndon, VA, USA, 19–21 April 2016; pp. 5A3-1–5A3-11.

- Cai, H.B.; Zhang, J.; Zhu, Y.J.; Zhang, J.K.; Yang, X. Optimal constellation design for Indoor MIMO visible light communications. IEEE Commun. Lett. 2016, 20, 264–267.

- Thieu, M.D.; Pham, T.L.; Nguyen, T.; Jang, Y.M. Optical-RoI-signaling for vehicular communications. IEEE Access 2019, 7, 69873–69891.

- Nguyen, T.; Islam, A.; Hossan, T.; Jang, Y.M. Current status and performance analysis of optical camera communication technologies for 5G Networks. IEEE Access 2017, 5, 4574–4594.

- Ayyash, M.; Elgala, H.; Khreishah, A.; Jungnickel, V.; Little, T.; Shao, S.; Rahaim, M.; Schulz, D.; Hilt, J.; Freund, R. Coexistence of WiFi and LiFi toward 5G: Concepts, opportunities, and challenges. IEEE Commun. Mag. 2016, 54, 64–71.

- Nguyen, T.; Hossain, M.; Jang, Y. Design and implementation of a novel compatible encoding scheme in the time domain for image sensor communication. Sensors 2016, 16, 736.

- Nguyen, V.H.; Thieu, M.D.; Nguyen, H.; Jang, Y.M. Design and Implementation of the MIMO–COOK Scheme Using an Image Sensor for Long-Range Communication. Sensors 2019, 20, 2258.

- Nguyen, H.; Thieu, M.D.; Nguyen, T.; Jang, Y.M. Rolling OFDM for Image Sensor Based Optical Wireless Communication. IEEE Photonics J. 2019, 11, 1–17.

- Nguyen, T.; Thieu, M.D.; Jang, Y.M. 2D-OFDM for optical camera communication: Principle and implementation. IEEE Access 2019, 7, 29405–29424.

- Isa, I.S.; Rosli, M.S.; Yusof, U.K.; Maruzuki, M.I.; Sulaiman, S.N. Optimizing the Hyperparameter Tuning of YOLOv5 for Underwater Detection. IEEE Access 2022, 10, 52818–52831.

- Guan, W.; Li, J.; Wen, S.; Zhang, X.; Ye, Y.; Zheng, J. The detection and recognition of RGB-LED-ID based on visible light communication using convolutional neural network. Appl. Sci. 2019, 9, 1400.

- Nguyen, H.; Thieu, M.D.; Pham, T.L.; Nguyen, H.; Jang, Y.M. The Impact of Camera Parameters on Optical Camera Communication. In Proceedings of the 2019 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Okinawa, Japan, 11–13 February 2019.

| Transmitter | | | | |
|---|---|---|---|---|
| Optical clock rate | 8 kHz | 10 kHz | | |
| RLL code | Manchester code | 4B6B code | Manchester code | 4B6B code |
| Forward error correction | Reed–Solomon code (15,11) | | | |
| LED type | 9 V, 3 W | | | |
| Number of LEDs | 2 | 3 | | |
| Packet rate (packets/s) | 30 | | | |
| Receiver | | | | |
| Camera type | Rolling-shutter camera (FL3-U3-132C-CS) | | | |
| Camera frame rate (fps) | 60 | | | |
| Data rate of OCC system | | | | |
| Uncoded bit rate (kbps) | 1.8 | 2.7 | 3.375 | 5.06 |
| Coded bit rate (kbps) | 1.32 | 1.98 | 2.22 | 3.71 |
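For the 1.8, 2.7, and 5.06 kbps columns, the coded bit rates equal the uncoded rates scaled by the Reed–Solomon (15,11) code rate, 11/15 (about 0.733): each 15-symbol codeword carries 11 data symbols of 4 bits each and can correct up to (15 − 11)/2 = 2 symbol errors. A short check, assuming the coded rate is simply the uncoded rate multiplied by K/N; the helper name `coded_rate_kbps` is illustrative.

```python
from fractions import Fraction

N, K = 15, 11                  # Reed-Solomon (15,11) from the parameter table
CODE_RATE = Fraction(K, N)     # 11 data symbols per 15 transmitted symbols
T_CORRECTABLE = (N - K) // 2   # correctable symbol errors per codeword


def coded_rate_kbps(uncoded_kbps: float) -> float:
    """Net bit rate after removing the RS(15,11) parity overhead."""
    return uncoded_kbps * K / N


for uncoded in (1.8, 2.7, 5.06):
    print(f"{uncoded} kbps -> {coded_rate_kbps(uncoded):.2f} kbps")
```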

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nguyen, V.L.; Tran, D.H.; Nguyen, H.; Jang, Y.M. An Experimental Demonstration of MIMO C-OOK Scheme Based on Deep Learning for Optical Camera Communication System. Appl. Sci. 2022, 12, 6935. https://doi.org/10.3390/app12146935
