Augmented Reality in Surgery: A Scoping Review

Abstract

1. Introduction

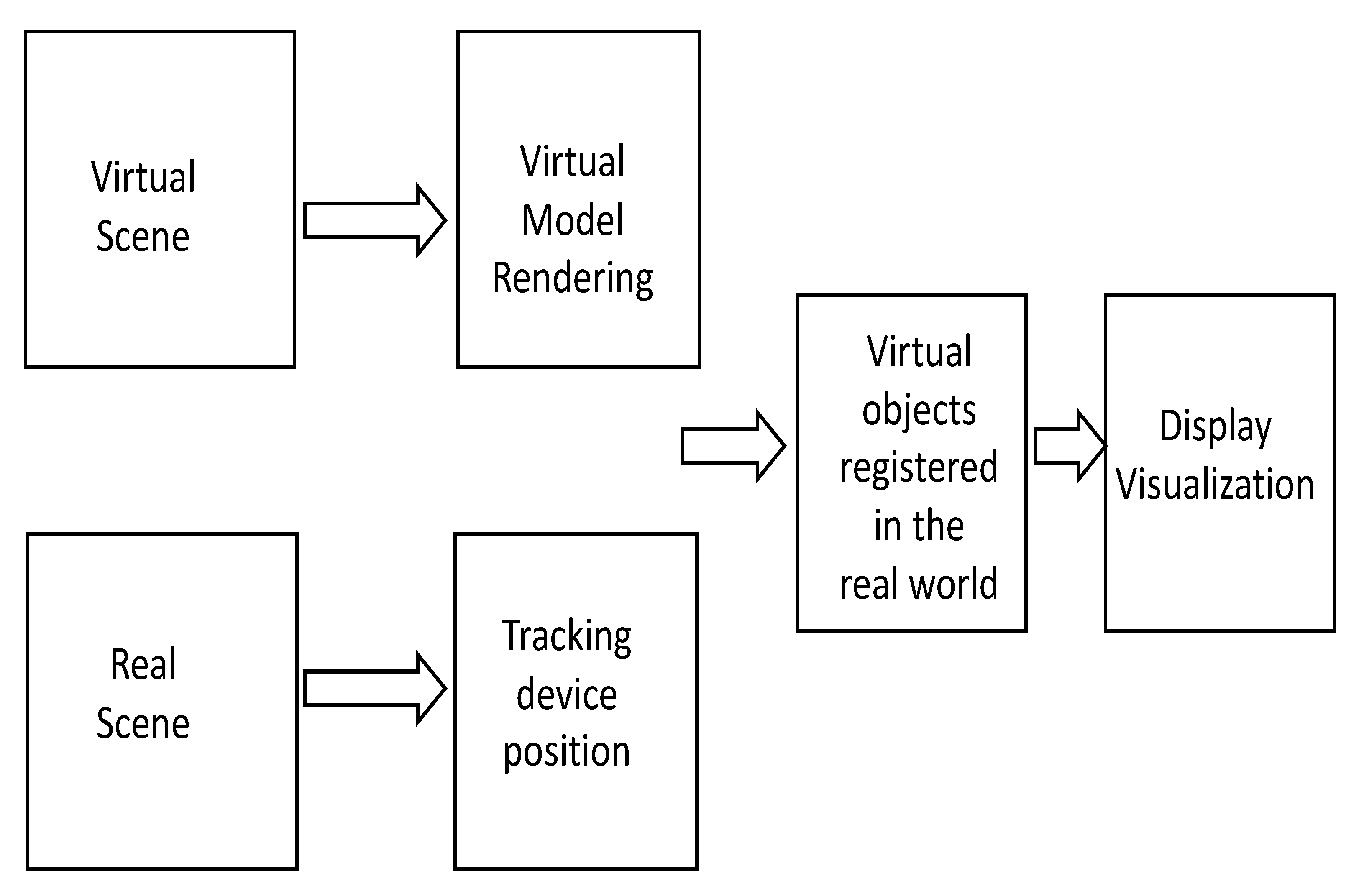

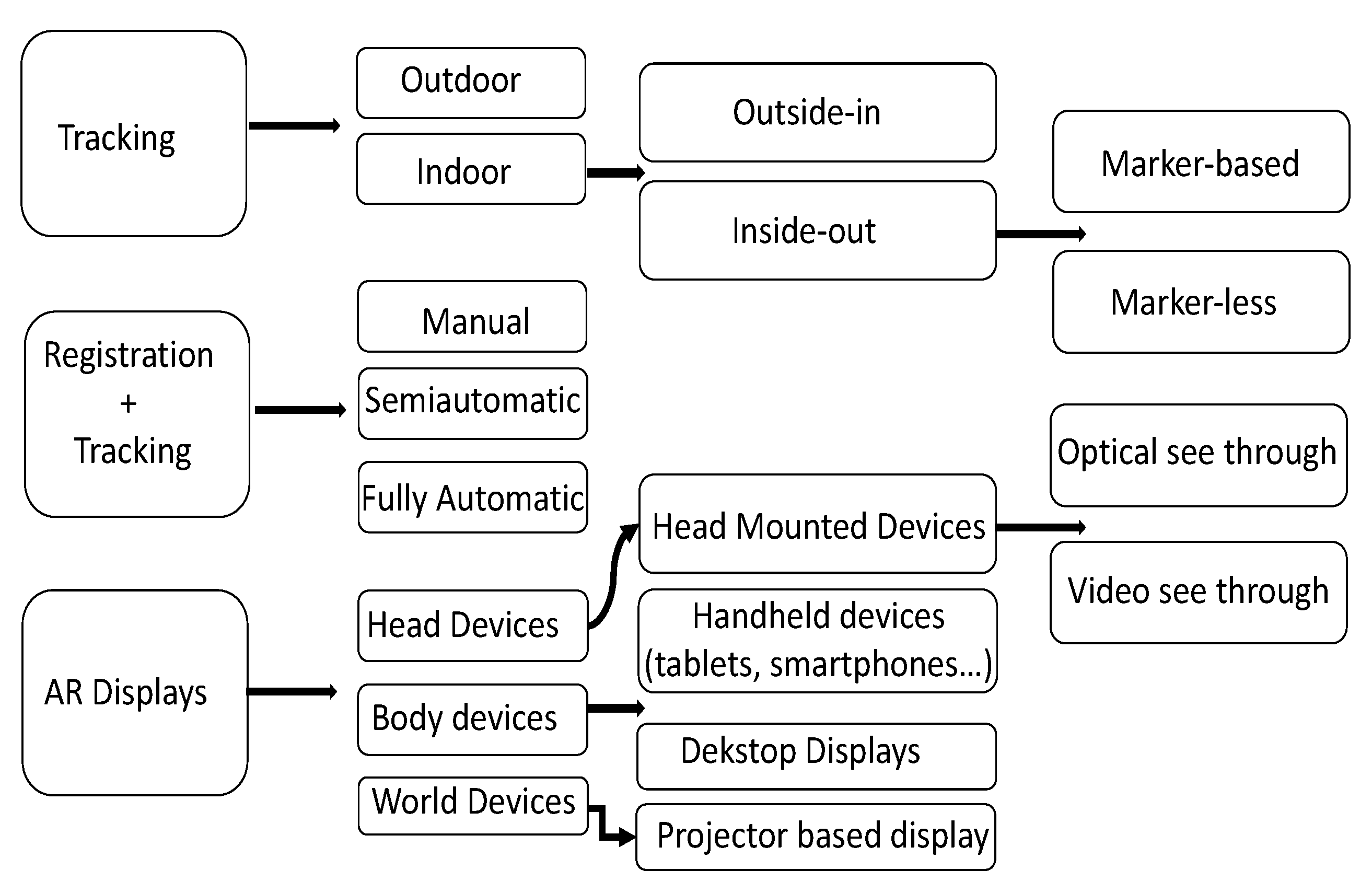

2. Theoretical Background

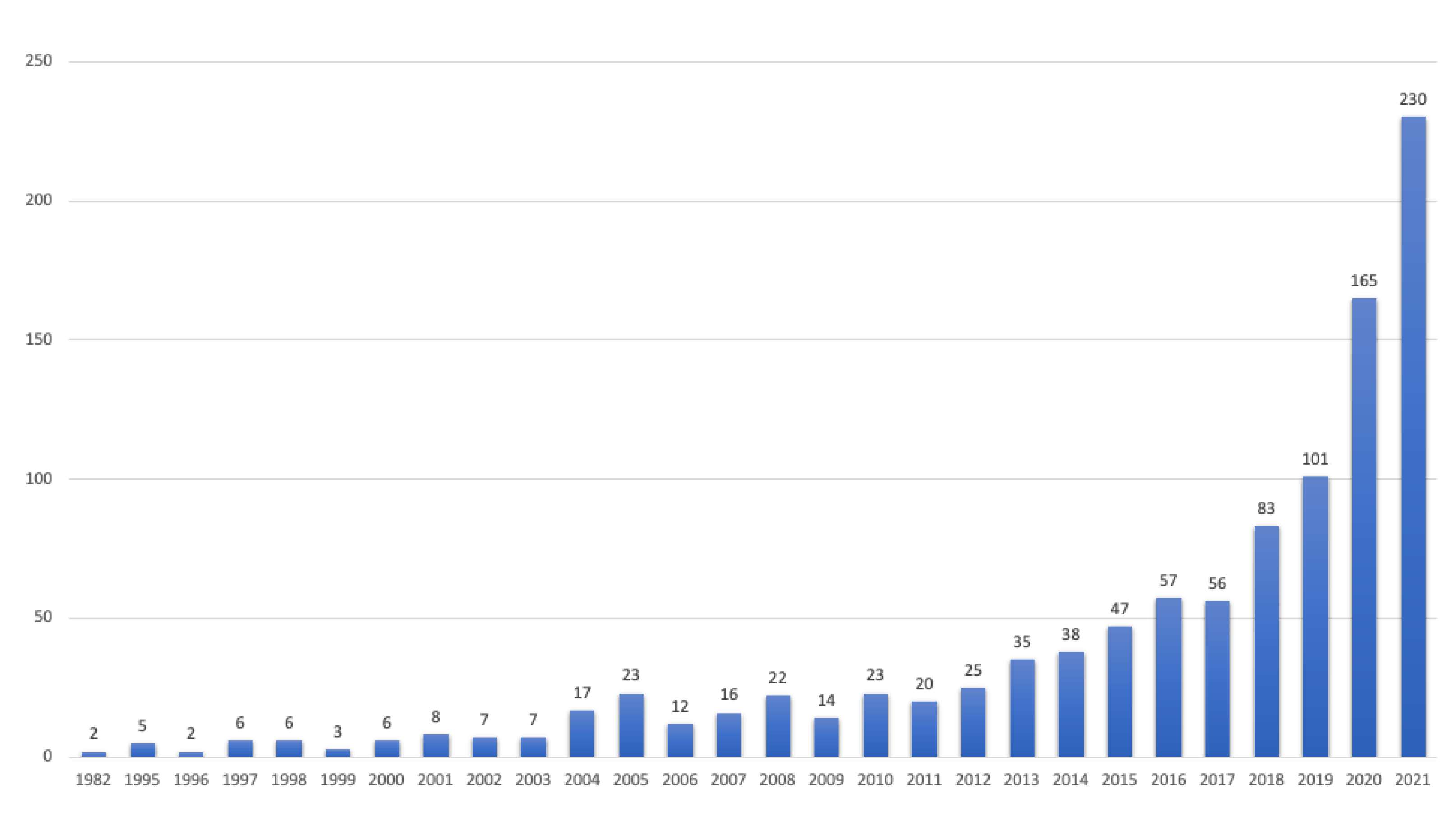

3. Materials and Methods

3.1. Inclusion Criteria

3.2. Selection of Sources Criteria

4. Results

4.1. Oncology

4.2. Orthopedics

4.3. Spinal Surgeries

4.4. Neurosurgery

4.5. Surgical Training and Medical Education

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Park, B.J.; Hunt, S.J.; Martin, C., III; Nadolski, G.J.; Wood, B.; Gade, T.P. Augmented and Mixed Reality: Technologies for Enhancing the Future of IR. J. Vasc. Interv. Radiol. 2020, 31, 1074–1082.

- Villarraga-Gómez, H.; Herazo, E.L.; Smith, S.T. X-ray computed tomography: From medical imaging to dimensional metrology. Precis. Eng. 2019, 60, 544–569.

- Cutolo, F. Augmented Reality in Image-Guided Surgery. In Encyclopedia of Computer Graphics and Games; Lee, N., Ed.; Springer International Publishing: Cham, Switzerland, 2017; pp. 1–11.

- Allison, B.; Ye, X.; Janan, F. MIXR: A Standard Architecture for Medical Image Analysis in Augmented and Mixed Reality; IEEE Computer Society: Washington, DC, USA, 2020; pp. 252–257.

- Marmulla, R.; Hoppe, H.; Mühling, J.; Eggers, G. An augmented reality system for image-guided surgery: This article is derived from a previous article published in the journal International Congress Series. Int. J. Oral Maxillofac. Surg. 2005, 34, 594–596.

- Peters, T.M. Image-guidance for surgical procedures. Phys. Med. Biol. 2006, 51, R505–R540.

- Eckert, M.; Volmerg, J.S.; Friedrich, C.M. Augmented Reality in Medicine: Systematic and Bibliographic Review. JMIR Publ. 2019, 7, e10967.

- Kim, Y.; Kim, H.; Kim, Y.O. Virtual Reality and Augmented Reality in Plastic Surgery: A Review. Arch. Plast. Surg. 2017, 44, 179–187.

- Desselle, M.R.; Brown, R.A.; James, A.R.; Midwinter, M.J.; Powell, S.K.; Woodruff, M.A. Augmented and Virtual Reality in Surgery. Comput. Sci. Eng. 2020, 22, 18–26.

- Pérez-Pachón, L.; Poyade, M.; Lowe, T.; Gröning, F. Image Overlay Surgery Based on Augmented Reality: A Systematic Review. In Biomedical Visualisation. Advances in Experimental Medicine and Biology; Springer International Publishing: Cham, Switzerland, 2020; Volume 1260, pp. 175–195.

- Zafari, F.; Gkelias, A.; Leung, K.K. A Survey of Indoor Localization Systems and Technologies. IEEE Commun. Surv. Tutor. 2019, 21, 2568–2599.

- Cheng, J.; Chen, K.; Chen, W. Comparison of marker-based AR and markerless AR: A case study on indoor decoration system. In Proceedings of the Lean and Computing in Construction Congress (LC3): Proceedings of the Joint Conference on Computing in Construction (JC3), Heraklion, Greece, 4–7 July 2017; pp. 483–490.

- Thangarajah, A.; Wu, J.; Madon, B.; Chowdhury, A.K. Vision-based registration for augmented reality-a short survey. In Proceedings of the 2015 IEEE International Conference on Signal and Image Processing Applications (ICSIPA), Kuala Lumpur, Malaysia, 19–21 October 2015; pp. 463–468.

- Quero, G.; Lapergola, A.; Soler, L.; Shahbaz, M.; Hostettler, A.; Collins, T.; Marescaux, J.; Mutter, D.; Diana, M.; Pessaux, P. Virtual and Augmented Reality in Oncologic Liver Surgery. Surg. Oncol. Clin. N. Am. 2019, 28, 31–44.

- Tuceryan, M.; Greer, D.S.; Whitaker, R.T.; Breen, D.E.; Crampton, C.; Rose, E.; Ahlers, H.K. Calibration requirements and procedures for a monitor-based augmented reality system. IEEE Trans. Vis. Comput. Graph. 1995, 1, 255–273.

- Maybody, M.; Stevenson, C.; Solomon, S.B. Overview of Navigation Systems in Image-Guided Interventions. Tech. Vasc. Interv. Radiol. 2013, 16, 136–143.

- Zhanat, M.; Vslor, H.A. Augmented Reality for Robotics: A Review. Robotics 2020, 9, 21.

- Mourtzis, D.; Angelopoulos, J.; Panopoulos, N. Intelligent Predictive Maintenance and Remote Monitoring Framework for Industrial Equipment Based on Mixed Reality. Front. Mech. Eng. 2020, 6, 578379.

- Bruce, T.H. A Survey of Visual, Mixed, and Augmented Reality Gaming. Assoc. Comput. Mach. 2012, 10, 1.

- Tricco, A.C.; Lillie, E.; Zarin, W.; O’Brien, K.K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M.D.; Horsley, T.; Weeks, L.; et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Ann. Intern. Med. 2018, 169, 467–473.

- Schwam, Z.G.; Kaul, V.F.; Bu, D.D.; Iloreta, A.M.C.; Bederson, J.B.; Perez, E.; Cosetti, M.K.; Wanna, G.B. The utility of augmented reality in lateral skull base surgery: A preliminary report. Am. J. Otolaryngol. 2021, 42, 102942.

- Coelho, G.; Trigo, L.; Faig, F.; Vieira, E.V.; da Silva, H.P.G.; Acácio, G.; Zagatto, G.; Teles, S.; Gasparetto, T.P.D.; Freitas, L.F.; et al. The Potential Applications of Augmented Reality in Fetoscopic Surgery for Antenatal Treatment of Myelomeningocele. World Neurosurg. 2022, 159, 27–32.

- Gouveia, P.F.; Costa, J.; Morgado, P.; Kates, R.; Pinto, D.; Mavioso, C.; Anacleto, J.; Martinho, M.; Lopes, D.S.; Ferreira, A.R.; et al. Breast cancer surgery with augmented reality. Breast 2021, 56, 14–17.

- Chen, F.; Cui, X.; Han, B.; Liu, J.; Zhang, X.; Liao, H. Augmented reality navigation for minimally invasive knee surgery using enhanced arthroscopy. Comput. Methods Programs Biomed. 2021, 201, 105952.

- Golse, N.; Petit, A.; Lewin, M.; Vibert, E.; Cotin, S. Augmented Reality during Open Liver Surgery Using a Markerless Non-rigid Registration System. J. Gastrointest. Surg. 2021, 25, 662–671.

- Gsaxner, C.; Pepe, A.; Li, J.; Ibrahimpasic, U.; Wallner, J.; Schmalstieg, D.; Egger, J. Augmented Reality for Head and Neck Carcinoma Imaging: Description and Feasibility of an Instant Calibration, Markerless Approach. Comput. Methods Programs Biomed. 2020, 200, 105854.

- Molina, C.; Sciubba, D.; Greenberg, J.; Khan, M.; Withamm, T. Clinical Accuracy, Technical Precision, and Workflow of the First in Human Use of an Augmented-Reality Head-Mounted Display Stereotactic Navigation System for Spine Surgery. Oper. Neurosurg. 2021, 20, 300–309.

- Ackermann, J.; Florentin, L.; Armando, H.; Jess, S.; Mazda, F.; Stefan, R.; Patrick, Z.; Furnstahl, P. Augmented Reality Based Surgical Navigation of Complex Pelvic Osteotomies—A Feasibility Study on Cadavers. Appl. Sci. 2021, 11, 1228.

- Peng, L.; Chenmeng, L.; Changlin, X.; Zeshu, Z.; Junqi, M.; Jian, G.; Pengfei, S.; Ian, V.; Pawlik, T.M.; Chengbiao, D.; et al. A Wearable Augmented Reality Navigation System for Surgical Telementoring Based on Microsoft HoloLens. Ann. Biomed. Eng. 2021, 49, 287–298.

- Collins, T.; Pizarro, D.; Gasparini, S.; Bourdel, N.; Chauvet, P.; Canis, M.; Calvet, L.; Bartoli, A. Augmented Reality Guided Laparoscopic Surgery of the Uterus. IEEE Trans. Med. Imaging 2021, 40, 371–380.

- Arpaia, P.; Benedetto, E.D.; Duraccio, L. Design, implementation, and metrological characterization of a wearable, integrated AR-BCI hands-free system for health 4.0 monitoring. Measurement 2021, 177, 109280.

- Shrestha, G.; Alsadoon, A.; Prasad, P.W.C.; Al-Dala’in, T.; Alrubaie, A. A novel enhanced energy function using augmented reality for a bowel: Modified region and weighted factor. Multimed. Tools Appl. 2021, 80, 17893–17922.

- Wei, W.; Ho, E.; McCay, K.; Damasevicius, R.; Maskeliunas, R.; Esposito, A. Assessing Facial Symmetry and Attractiveness using Augmented Reality. Pattern Anal. Appl. 2021, 1–17.

- Lee, D.; Yu, H.W.; Kim, S.; Yoon, J.; Lee, K.; Chai, Y.J.; Choi, J.Y.; Kong, H.J.; Lee, K.E.; Cho, H.S.; et al. Vision-based tracking system for augmented reality to localize recurrent laryngeal nerve during robotic thyroid surgery. Sci. Rep. 2020, 10, 8437.

- Hussain, R.; Lalande, A.; Marroquin, R.; Guigou, C.; Bozorg Grayeli, A. Video-based augmented reality combining CT-scan and instrument position data to microscope view in middle ear surgery. Sci. Rep. 2020, 10, 6767.

- Rüger, C.; Feufel, M.; Moosburner, S.; Özbek, C.; Pratschke, J.; Sauer, I. Ultrasound in augmented reality: A mixed-methods evaluation of head-mounted displays in image-guided interventions. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 1895–1905.

- Carl, B.; Bopp, M.H.A.; Benescu, A.; Saß, B.; Nimsky, C. Indocyanine green angiography visualized by augmented reality in aneurysm surgery. World Neurosurg. 2020, 142, e307–e315.

- Chan, J.Y.K.; Holsinger, F.C.; Liu, S.; Sorger, J.M.; Azizian, M.; Tsang, R.K.Y. Augmented reality for image guidance in transoral robotic surgery. J. Robot. Surg. 2019, 14, 579–583.

- Ferraguti, F.; Minelli, M.; Farsoni, S.; Bazzani, S.; Bonfè, M.; Vandanjon, A.; Puliatti, S.; Bianchi, G.; Secchi, C. Augmented Reality and Robotic-Assistance for Percutaneous Nephrolithotomy. IEEE Robot. Autom. Lett. 2020, 5, 4556–4563.

- Auloge, P.; Cazzato, R.; Ramamurthy, N.; De Marini, P.; Rousseau, C.; Garnon, J.; Charles, Y.; Steib, J.P.; Gangi, A. Augmented reality and artificial intelligence-based navigation during percutaneous vertebroplasty: A pilot randomised clinical trial. Eur. Spine J. 2020, 29, 1580–1589.

- Libaw, J.; Sinskey, J. Use of Augmented Reality During Inhaled Induction of General Anesthesia in 3 Pediatric Patients: A Case Report. A&A Pract. 2020, 14, e01219.

- Pietruski, P.; Majak, M.; Swiatek-Najwer, E.; Żuk, M.; Popek, M.; Jaworowski, J.; Mazurek, M. Supporting Fibula Free Flap Harvest With Augmented Reality: A Proof-of-Concept Study. Laryngoscope 2019, 130, 1173–1179.

- Jiang, T.; Yu, D.; Wang, Y.; Zan, T.; Wang, S.; Li, Q. HoloLens-Based Vascular Localization System: Precision Evaluation Study With a Three-Dimensional Printed Model. J. Med. Internet Res. 2020, 22, e16852.

- Samei, G.; Tsang, K.; Kesch, C.; Lobo, J.; Hor, S.; Mohareri, O.; Chang, S.; Goldenberg, S.L.; Black, P.C.; Salcudean, S. A partial augmented reality system with live ultrasound and registered preoperative MRI for guiding robot-assisted radical prostatectomy. Med. Image Anal. 2020, 60, 101588.

- Rose, A.; Kim, H.; Fuchs, H.; Frahm, J.M. Development of augmented-reality applications in otolaryngology-head and neck surgery: Augmented Reality Applications. Laryngoscope 2019, 129, S1–S11.

- Carl, B.; Bopp, M.; Voellger, B.; Saß, B.; Nimsky, C. Augmented reality in transsphenoidal surgery. World Neurosurg. 2019, 125, e873–e883.

- Sharma, A.; Alsadoon, A.; Prasad, P.W.C.; Al-Dala’in, T.; Haddad, S. A novel augmented reality visualization in jaw surgery: Enhanced ICP based modified rotation invariant and modified correntropy. Multimed. Tools Appl. 2021, 80, 1–25.

- Abdel Al, S.; Abou Chaar, M.K.; Mustafa, A.; Al-Hussaini, M.; Barakat, F.; Asha, W. Innovative Surgical Planning in Resecting Soft Tissue Sarcoma of the Foot Using Augmented Reality With a Smartphone. J. Foot Ankle Surg. 2020, 59, 1092–1097.

- Melero, M.; Hou, A.; Cheng, E.; Tayade, A.; Lee, S.C.; Unberath, M.; Navab, N. Upbeat: Augmented Reality-Guided Dancing for Prosthetic Rehabilitation of Upper Limb Amputees. J. Healthc. Eng. 2019, 2019, 1–9.

- Tu, P.; Yao, G.; Lungu, A.; Li, D.; Wang, H.; Chen, X. Augmented Reality Based Navigation for Distal Interlocking of Intramedullary Nails Utilizing Microsoft HoloLens 2. Comput. Biol. Med. 2021, 133, 104402.

- Cofano, F.; Di Perna, G.; Bozzaro, M.; Longo, A.; Marengo, N.; Zenga, F.; Zullo, N.; Cavalieri, M.; Damiani, L.; Boges, D.; et al. Augmented Reality in Medical Practice: From Spine Surgery to Remote Assistance. Front. Surg. 2021, 8, 657901.

- Heinrich, F.; Huettl, F.; Schmidt, G.; Paschold, M.; Kneist, W.; Huber, T.; Hansen, C. HoloPointer: A virtual augmented reality pointer for laparoscopic surgery training. Int. J. CARS 2021, 16, 161–168.

- Brookes, M.J.; Chan, C.D.; Baljer, B.; Wimalagunaratna, S.; Crowley, T.P.; Ragbir, M.; Irwin, A.; Gamie, Z.; Beckingsale, T.; Ghosh, K.M.; et al. Surgical Advances in Osteosarcoma. Cancers 2021, 13, 388.

- Kraeima, J.; Glas, H.; Merema, B.; Vissink, A.; Spijkervet, F.; Witjes, M. Three-dimensional virtual surgical planning in the oncologic treatment of the mandible. Oral Dis. 2021, 27, 14–20.

- Wake, N.; Nussbaum, J.E.; Elias, M.I.; Nikas, C.V.; Bjurlin, M.A. 3D Printing, Augmented Reality, and Virtual Reality for the Assessment and Management of Kidney and Prostate Cancer: A Systematic Review. Urology 2020, 143, 20–32.

- Alexandre, L.; Torstein, M.; Karl, S.; Marco, C. Augmented reality in intracranial meningioma surgery: A case report and systematic review. J. Neurosurg. Sci. 2020, 64, 369–376.

- Lee, C.; Wong, G.K.C. Virtual reality and augmented reality in the management of intracranial tumors: A review. J. Clin. Neurosci. 2019, 62, 14–20.

- Gerard, I.J.; Kersten-Oertel, M.; Petrecca, K.; Sirhan, D.; Hall, J.A.; Collins, D.L. Brain shift in neuronavigation of brain tumors: A review. Med. Image Anal. 2017, 35, 403–420.

- Inoue, D.; Cho, B.; Mori, M.; Kikkawa, Y.; Amano, T.; Nakamizo, A.; Yoshimoto, K.; Mizoguchi, M.; Tomikawa, M.; Hong, J.; et al. Preliminary study on the clinical application of augmented reality neuronavigation. J. Neurol. Surg. Part A Cent. Eur. Neurosurg. 2013, 74, 71–76.

- Besharati, T.L.; Mehran, M. Augmented reality-guided neurosurgery: Accuracy and intraoperative application of an image projection technique. J. Neurosurg. 2015, 123, 206–211.

- Cabrilo, I.; Sarrafzadeh, A.; Bijlenga, P.; Landis, B.N.; Schaller, K. Augmented reality-assisted skull base surgery. Neurochirurgie 2014, 60, 304–306.

- Contreras López, W.; Navarro, P.; Crispin, S. Intraoperative clinical application of augmented reality in neurosurgery: A systematic review. Clin. Neurol. Neurosurg. 2019, 177, 6–11.

- Mourtzis, D.; Angelopoulos, J.; Panopoulos, N.; Kardamakis, D. A Smart IoT Platform for Oncology Patient Diagnosis based on AI: Towards the Human Digital Twin. Procedia CIRP 2021, 104, 1686–1691.

- Casari, F.A.; Navab, N.; Hruby, L.A.; Philipp, K.; Ricardo, N.; Romero, T.; de Lourdes dos Santos Nunes, F.; Queiroz, M.C.; Furnstahl, P.; Mazda, F. Augmented Reality in Orthopedic Surgery Is Emerging from Proof of Concept Towards Clinical Studies: A Literature Review Explaining the Technology and Current State of the Art. Curr. Rev. Musculoskelet. Med. 2021, 14, 192–203.

- Bagwe, S.; Singh, K.; Kashyap, A.; Arora, S.; Maini, L. Evolution of augmented reality applications in Orthopaedics: A systematic review. J. Arthrosc. Jt. Surg. 2021, 8, 84–90.

- Negrillo-Cardenas, J.; Jimenez-Perez, J.R.; Feito, F.R. The role of virtual and augmented reality in orthopedic trauma surgery: From diagnosis to rehabilitation. Comput. Methods Programs Biomed. 2020, 191, 105407.

- Jud, L.; Fotouhi, J.; Andronic, O.; Aichmair, A.; Osgood, G.; Navab, N.; Farshad, M. Applicability of augmented reality in orthopedic surgery—A systematic review. BMC Musculoskelet. Disord. 2020, 21, 103.

- Molina, C.A.; Phillips, F.M.; Poelstra, K.A.; Colman, M.; Khoo, L.T. 151. A cadaveric precision and accuracy analysis of augmented reality mediated percutaneous pedicle implant insertion. Spine J. 2020, 20, S74.

- Burstrom, G.; Persson, O.; Edstrom, E.; Elmi-Terander, A. Augmented reality navigation in spine surgery: A systematic review. Acta Neurochir. 2021, 163, 843–852.

- Frank, Y.; Georgios, M.; Kosuke, S.; Jeremy, S. Current innovation in virtual and augmented reality in spine surgery. Ann. Transl. Med. 2021, 9, 94.

- Sakai, D.; Joyce, K.; Sugimoto, M.; Horikita, N.; Hiyama, A.; Sato, M.; Devitt, A.; Watanabe, M. Augmented, virtual and mixed reality in spinal surgery: A real-world experience. J. Vasc. Interventional Radiol. 2020, 3, 28.

- Vadalà, G.; Salvatore, S.D.; Ambrosio, L.; Russo, F.; Papalia, R.; Denaro, V. Robotic Spine Surgery and Augmented Reality Systems: A State of the Art. Neurospine 2020, 17, 88–100.

- Liu, T.; Yonghang, T.; Chengming, Z.; Lei, W.; Jun, Z.; Junjun, P.; Shi, J. Augmented reality in neurosurgical navigation: A survey. Int. J. Med Robot. Comput. Assist. Surg. MRCAS 2020, 16, e2160.

- Deng, W.; Li, F.; Wang, M.; Song, Z. Easy-to-Use Augmented Reality Neuronavigation Using a Wireless Tablet PC. Stereotact. Funct. Neurosurg. 2014, 92, 17–24.

- Gumprecht, H.K.; Widenka, D.C.; Lumenta, C.B. BrainLab VectorVision Neuronavigation System: Technology and clinical experiences in 131 cases. Neurosurgery 1999, 44, 97–104.

- Grunert, P.; Darabi, K.; Espinosa, J.; Filippi, R. Computer-aided navigation in neurosurgery. Neurosurg. Rev. 2003, 26, 73–99.

- Cleary, K.; Peters, T.M. Image-Guided Interventions: Technology Review and Clinical Applications. Annu. Rev. Biomed. Eng. 2010, 12, 119–142.

- Incekara, F.; Smits, M.; Dirven, C.; Vincent, A. Clinical Feasibility of a Wearable Mixed-Reality Device in Neurosurgery. World Neurosurg. 2018, 118, e422–e427.

- Moro, C.; Phelps, C.; Redmond, P.; Stromberga, Z. HoloLens and mobile augmented reality in medical and health science education: A randomised controlled trial. Br. J. Educ. Technol. 2020, 52, 680–694.

- Kumar, N.; Pandey, S.; Rahman, E. A Novel Three-Dimensional Interactive Virtual Face to Facilitate Facial Anatomy Teaching Using Microsoft HoloLens. Aesthetic Plast. Surg. 2021, 45, 1005–1011.

- Moro, C.; Phelps, C.; Jones, D.; Stromberga, Z. Using Holograms to Enhance Learning in Health Sciences and Medicine. Med. Sci. Educ. 2020, 30, 1351–1352.

- Parsons, D.; Mac Callum, K. Current Perspectives on Augmented Reality in Medical Education: Applications, Affordances and Limitations. Adv. Med Educ. Pract. 2021, 12, 77–91.

- Williams, M.A.; McVeigh, J.; Handa, A.I.; Regent, L. Augmented reality in surgical training: A systematic review. Postgrad. Med. J. 2020, 96, 537–542.

- Cao, C.; Cerfolio, R.J. Virtual or Augmented Reality to Enhance Surgical Education and Surgical Planning. Thorac. Surg. Clin. 2019, 29, 329–337.

- Yeung, A.W.K.; Tosevska, A.; Klager, E.; Eibensteiner, F.; Laxar, D.; Jivko, S.; Marija, G.; Sebastian, Z.; Stefan, K.; Rik, C.; et al. Virtual and Augmented Reality Applications in Medicine: Analysis of the Scientific Literature. J. Med Internet Res. 2021, 23, e25499.

- McKnight, R.R.; Pean, C.A.; Buck, J.S.; Hwang, J.S.; Hsu, J.R.; Pierrie, S.N. Virtual Reality and Augmented Reality—Translating Surgical Training into Surgical Technique. Curr. Rev. Musculoskelet. Med. 2020, 13, 663–674.

- Fazel, R.; Krumholz, H.M.; Wang, Y.; Ross, J.S.; Chen, J.; Ting, H.H.; Shah, N.D.; Nasir, K.; Einstein, A.J.; Nallamothu, B.K. Exposure to low-dose ionizing radiation from medical imaging procedures. N. Engl. J. Med. 2009, 361, 849–857.

- Hong, J.Y.; Han, K.; Jung, J.H.; Kim, J.S. Association of exposure to diagnostic low-dose ionizing radiation with risk of cancer among youths in South Korea. JAMA Netw. Open 2019, 2, e1910584.

- Reisz, J.; Bansal, N.; Qian, J.; Zhao, W.; Furdui, C. Effects of ionizing radiation on biological molecules–mechanisms of damage and emerging methods of detection. Antioxidants Redox Signal. 2014, 21, 260–292.

- Peng, H.; Ding, C. Minimum redundancy and maximum relevance feature selection and recent advances in cancer classification. Feature Sel. Data Min. 2005, 3, 185–205.

- Singh, V.K.; Ali, A.; Nair, P.S. A Report on Registration Problems in Augmented Reality. Int. J. Eng. Res. Technol. 2014, 3, 819–821.

- Chen, Y.; Wang, Q.; Chen, H.; Song, X.; Tang, H.; Tian, M. An overview of augmented reality technology. J. Phys. Conf. Ser. 2019, 1237, 022082.

- Lee, Y.H.; Zhan, T.; Wu, S.T. Prospects and challenges in augmented reality displays. Virtual Real. Intell. Hardw. 2019, 1, 10–20.

- Ren, D.; Goldschwendt, T.; Chang, Y.; Höllerer, T. Evaluating wide-field-of-view augmented reality with mixed reality simulation. In Proceedings of the 2016 IEEE Virtual Reality (VR), Greenville, SC, USA, 19–23 March 2016; pp. 93–102.

- Zhou, Y.; Zhang, J.; Fang, F. Vergence-accommodation conflict in optical see-through display: Review and prospect. Results Opt. 2021, 5, 100160.

- Erkelens, I.M.; MacKenzie, K.J. 19-2: Vergence-Accommodation Conflicts in Augmented Reality: Impacts on Perceived Image Quality. SID Symp. Dig. Tech. Pap. 2020, 51, 265–268.

- Kim, J.; Kane, D.; Banks, M.S. The rate of change of vergence–Accommodation conflict affects visual discomfort. Vis. Res. 2014, 105, 159–165.

| Author | Application | Technology | Display | Registration | Error | Data Set |
|---|---|---|---|---|---|---|
| Schwam [21] | Lateral skull base surgery | BrainLab Curve, Surgical Theater and Zeiss OPMI® PENTERO® 900 | Microscope-based HUD | Marker-less, rigid | Not reported | 40 patients |
| Coelho [22] | Antenatal treatment of myelomeningocele: preoperative and postoperative simulation | Unity engine, Google ARCore libraries, ray-casting rendering of the target object | Smartphone and tablet application | Object placed based on the rendering | Not reported | 1 pregnant woman at 27 weeks of gestation |
| Gouveia [23] | Left breast cancer: oncology | Contrast-enhanced MRI, Horos R software v2.4.0 | HoloLens AR headset | Marker-based, rigid | Not reported | 57 menopausal women |
| Chen [24] | Knee arthroscopy | CT scanner, optical tracking system | Glasses-free 3D display | Marker-based, rigid | Mean: 0.32 mm; reduced target errors of 2.10 mm and 2.70 mm | Preclinical experiments on a knee phantom and an in vitro swine knee |
| Golse [25] | Liver resection | CT, 3D segmentation | Standard mobile external monitor | Marker-less, non-rigid, real-time | Root mean square error for internal landmark registration: 7.9 mm | In vivo: 5 patients; ex vivo: native tumor-free |
| Gsaxner [26] | Head and neck carcinoma: training | CT, PET-CT and MRI scans; instant calibration | HoloLens AR headset | Marker-less, rigid | From a few mm up to 2 cm | 11 healthcare professionals |
| Molina [27] | Spinal navigation | iCT scans; Gertzbein-Robbins (GS) scale | AR-HMD Xvision (Augmedics) | Marker-based, rigid | Linear deviation: 2.07 mm; angular deviation: 2.41° | A 78-year-old female patient |
| Ackermann [28] | Navigation of osteotomy cuts and reorientation of the acetabular fragment | CT scan | Microsoft HoloLens | Marker-based, rigid | Osteotomy starting points: 10.8 mm; osteotomy directions: 5.4°; reorientation errors: x = 6.7°, y = 7.0°, z = 0.9°; postoperative LCE angle error: 4.5° | 2 fresh-frozen human cadaveric hips |
| Liu [29] | Medical training and telementored surgery | 2 color digital cameras | Microsoft HoloLens | Marker-based, rigid | Overall tracking error: < 2.5 mm; overall guidance error: < 2.75 mm | Ex vivo arm phantom, in vivo rabbit model |
| Collins [30] | Uterus: laparoscopy | MR or CT and monocular laparoscopes | Monitor | Marker-less, rigid | Error increases towards the cervix (from 2 mm with 15 views up to 8 mm with 2 views) | Phantom and videos recorded during laparoscopic surgery |
| Arpaia [31] | Neurosurgery | Brain-computer interface (BCI) equipment | Epson Moverio BT-350 glasses | Not reported | Not reported | 10 runs on the same patient |
| Shrestha [32] | Bowel | CT scans and intraoperative endoscope camera | Monitor | Marker-based, rigid | Overlay error: 0.24777 px; frame rate: 44 fps | Subjects in three age groups: 15–25, 26–35, 35–60 |
| Wei [33] | Plastic surgery | Google Face API | Android display | Marker-based, rigid | Not reported | 4 benchmark data sets |
| Lee [34] | Thyroid surgery | CT; semiautomatic registration | AR screen; master surgical robot screen | Marker-based, rigid | Mean ± SD: 1.9 ± 1.5 mm | 9 patients |
| Hussain [35] | Middle ear surgery | CT; real-time 2D microscope video; no tracking system | DDM; bronchoscopy monitor | Marker-less, rigid | Surgical instrument tip position error: 0.3 ± 0.22 mm | 6 artificial human temporal bone specimens |
| Rüger [36] | Ultrasound-guided needle placement | Reflective markers, ultrasound transducer | Microsoft HoloLens | Marker-based, rigid | Mean error: 7.4 mm | 20 participants |
| Carl [37] | Aneurysm surgery: indocyanine green (ICG) angiography | CT, 3D rotational angiography (DynaCT) or time-of-flight magnetic resonance angiography; automatic registration | Operating microscope HUD | Marker-based, rigid | Target registration error: 0.71 ± 0.21 mm | 20 patients with 22 aneurysms |
| Chan [38] | Transoral robotic surgery | CT | 3D surgeon’s console | Marker-based, rigid | Not reported | 2 cadavers |
| Ferraguti [39] | Percutaneous nephrolithotomy | CT or MRI, 3 electrodes; real-time registration | Microsoft HoloLens | Marker-based, rigid | Norms of the translation and orientation differences between 2 transformation matrices: 15.80 mm and 4.12 | 11 samples |
| Auloge [40] | Percutaneous vertebroplasty | Cone-beam CT | Monitor | Marker-based, rigid | Not reported | 2 groups of 10 patients |
| Libaw [41] | Inhaled induction of general anesthesia, pediatric | iPhone 7 | AR headset | Not reported | Not reported | 3 patients, 8 and 10 years old |
| Pietruski [42] | Fibula free flap harvest | 7 markers; actual and virtual registration; sagittal surgical saw (GB129R) with a tracking adapter | HMD: Epson Moverio BT-200 smart glasses | Marker-based, rigid | Not reported | 756 simulated osteotomies |
| Jiang [43] | Vascular localization system | CTA scan without ionic contrast agent; real-time registration | Microsoft HoloLens | Marker-based, rigid | Minimum: 1.35 mm; maximum: 3.18 mm | 7 operators |
| Samei [44] | Laparoscopic radical prostatectomy | MRI; 3 transformations | From da Vinci to PC | Marker-based, rigid | Not reported | Ex vivo: agar prostate phantom; in vivo: 12 patients |
| Rose [45] | Otolaryngology: head and neck surgery | CT, MeshLab and Unity | Microsoft HoloLens | Marker-based, rigid | Accuracy: 2.47 ± 0.46 mm (1.99, 3.30) | A phantom |
| Carl [46] | Transsphenoidal surgery | C-arm radiographic fluoroscopy; registration using iCT | Operating microscope HUD | Marker-based, rigid | Target registration error: 0.83 ± 0.44 mm | 288 cases of transsphenoidal surgery |
| Sharma [47] | Jaw surgery | CT scan; virtual scenes; stereo views | Monitor | Marker-less, rigid | Alignment error: 0.59 ± 0.62 mm | 20 different samples after jaw surgery |
| Abdel [48] | Foot sarcoma: oncology | NDI Polaris; smartphone AR application: FINO | Samsung Galaxy | Marker-based, rigid | Not reported | A 39-year-old male patient |
| Melero [49] | Rehabilitation: upper limbs | Myo armband (EMG data), Microsoft Kinect sensor | Monitor | Marker-less, rigid | Not reported | 3 subjects, 10 trials per subject |
| Tu [50] | Orthopedics | C++ application on PC; C# application in Unity; connection via TCP/IP | HoloLens 2 | Marker-based, rigid | Distance error: 1.61 ± 0.44 mm; 3D angle error: 1.46 ± 0.46° | Phantom and cadaver experiments |
| Cofano [51] | Spine surgery | CT, TeamViewer software and holosurgery | HoloLens 2 | Marker-less, rigid | Not reported | 2 patients |
| Heinrich [52] | Training | Not specified | HoloLens 1 | Marker-based, rigid | Error rates (p = 0.047) | 10 surgical trainees |
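
The Error column mixes several metrics: linear and angular deviation, root mean square error, target registration error, and norms of the difference between two transformation matrices. The Python sketch below shows one common way to compute two of them: the translation and rotation error between a planned and an achieved rigid pose, and the angle between two trajectory directions. It assumes 4 × 4 homogeneous transforms with translations in millimetres; the function names and poses are hypothetical, and the reviewed studies may define their metrics differently.

```python
import numpy as np


def make_transform(R: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector translation."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def pose_difference(T_plan: np.ndarray, T_actual: np.ndarray) -> tuple[float, float]:
    """Return (translation error, rotation error in degrees) between two rigid poses.

    Translation error: Euclidean distance between the two translation vectors.
    Rotation error: angle of the relative rotation R_plan^T @ R_actual.
    """
    trans_err = float(np.linalg.norm(T_actual[:3, 3] - T_plan[:3, 3]))
    R_rel = T_plan[:3, :3].T @ T_actual[:3, :3]
    # Rotation angle from the trace of a rotation matrix; clip guards against round-off
    cos_angle = np.clip((np.trace(R_rel) - 1.0) / 2.0, -1.0, 1.0)
    rot_err = float(np.degrees(np.arccos(cos_angle)))
    return trans_err, rot_err


def angular_deviation(u: np.ndarray, v: np.ndarray) -> float:
    """Angle in degrees between two direction vectors, e.g. planned vs. actual screw axis."""
    cos_angle = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))


def rotation_z(deg: float) -> np.ndarray:
    """Rotation matrix about the z axis (helper for the example below)."""
    a = np.radians(deg)
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


if __name__ == "__main__":
    # Hypothetical planned and achieved poses in mm; not data from any cited study
    planned = make_transform(np.eye(3), np.array([10.0, 20.0, 30.0]))
    achieved = make_transform(rotation_z(3.0), np.array([11.0, 22.0, 32.0]))
    print(pose_difference(planned, achieved))  # approximately (3.0, 3.0)
    print(angular_deviation(np.array([0.0, 0.0, 1.0]), np.array([0.0, 1.0, 1.0])))  # approximately 45.0
```

Reporting the translational and rotational parts separately, as Molina [27] and Ferraguti [39] do, avoids mixing millimetres and degrees in a single number.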

| Application | Percentage of Application |
|---|---|
| Telementoring | 4% |
| Maxillofacial | 23% |
| Liver Surgery | 4% |
| Pediatric | 4% |
| Orthopedics | 27% |
| Oncology | 19% |
| Training | 8% |
| Puncture Surgery | 7% |
| Bowel Surgery | 4% |

| Type of Display | Percentage of Application |
|---|---|
| Smartphone | 14% |
| Video See-Through Device | 14% |
| Generic Head-Mounted Display | 17% |
| Unspecified Display | 14% |
| Projected Directly onto the Patient | 3% |
| HoloLens 2 | 10% |
| HoloLens 1 | 28% |

| Tracking and Registration Methods | Percentage of Application |
|---|---|
| Marker-Based and Non-Rigid Registration | 4% |
| Markerless Rigid Registration | 20% |
| Markerless Non-Rigid Registration | 8% |
| Marker-Based and Rigid Registration | 68% |
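
Marker-based rigid registration is the most common approach (68%). Given fiducials localised both in the preoperative image and by the tracker, a standard way to compute such a registration is the SVD-based least-squares solution often credited to Arun et al. and to Kabsch. The Python sketch below illustrates it under stated assumptions and is not the method of any reviewed system: the fiducial positions, the simulated patient pose and the 0.5 mm localisation noise are all hypothetical. It reports the fiducial registration error (FRE) at the markers and the target registration error (TRE) at a point that was not used for the fit.

```python
import numpy as np


def rigid_register(src: np.ndarray, dst: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Least-squares rigid transform with dst ≈ src @ R.T + t (SVD method).

    src, dst: (N, 3) arrays of paired fiducial positions, N >= 3, not collinear.
    """
    src_mean, dst_mean = src.mean(axis=0), dst.mean(axis=0)
    # Cross-covariance matrix of the centred point sets
    H = (src - src_mean).T @ (dst - dst_mean)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1), not a reflection
    d = 1.0 if np.linalg.det(Vt.T @ U.T) > 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_mean - R @ src_mean
    return R, t


def transform(points: np.ndarray, R: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Apply the rigid transform to an (N, 3) array of points."""
    return points @ R.T + t


def rms_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Root mean square Euclidean distance between corresponding rows of a and b."""
    return float(np.sqrt(np.mean(np.sum((a - b) ** 2, axis=1))))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical fiducials in image (CT) space, in mm
    fiducials_img = np.array([[0.0, 0.0, 0.0], [60.0, 0.0, 0.0],
                              [0.0, 60.0, 0.0], [0.0, 0.0, 60.0]])
    target_img = np.array([[25.0, 25.0, 25.0]])  # point of surgical interest, not a fiducial
    # Simulated "true" patient pose: 30 degrees about z plus a translation
    a = np.radians(30.0)
    R_true = np.array([[np.cos(a), -np.sin(a), 0.0],
                       [np.sin(a), np.cos(a), 0.0],
                       [0.0, 0.0, 1.0]])
    t_true = np.array([100.0, -50.0, 20.0])
    # Tracker measurements of the fiducials with 0.5 mm localisation noise
    fiducials_trk = transform(fiducials_img, R_true, t_true) + rng.normal(0.0, 0.5, (4, 3))

    R, t = rigid_register(fiducials_img, fiducials_trk)
    fre = rms_distance(transform(fiducials_img, R, t), fiducials_trk)
    tre = rms_distance(transform(target_img, R, t), transform(target_img, R_true, t_true))
    print(f"FRE = {fre:.2f} mm, TRE = {tre:.2f} mm")
```

A small FRE does not by itself guarantee a small TRE: the error at the target also depends on the fiducial configuration and on the distance of the target from the fiducial centroid.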

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Barcali, E.; Iadanza, E.; Manetti, L.; Francia, P.; Nardi, C.; Bocchi, L. Augmented Reality in Surgery: A Scoping Review. Appl. Sci. 2022, 12, 6890. https://doi.org/10.3390/app12146890
