1. Introduction

Data centers are the critical infrastructure for cloud computing, in which large numbers of computing and storage servers are jointly managed and maintained. With the explosive growth of distributed cloud computing services, the east-west traffic generated by frequent interactions among servers has overtaken north-south traffic and now accounts for the largest share of traffic in data center networks (DCNs) [1]. In typical cluster computing frameworks, such as MapReduce and Spark, a cloud computing task is usually decomposed into multiple computing stages, and servers must exchange a considerable amount of intermediate data between adjacent stages [2]. At present, transmitting this intermediate data accounts for 33–50% of the time required to complete a cloud computing task [3]. As delay is a critical performance indicator for cloud computing services, blocking in DCNs directly affects server processing efficiency and, consequently, operators' profits [4].

The goal of network operators is to reduce network deployment cost while satisfying certain quality of service (QoS) indicators, such as blocking probability and delay. Operators can plan how many network resources to deploy according to the estimated traffic load and QoS requirements, or determine how much service load can be admitted given the available network bandwidth. For example, cellular network operators determine the locations and frequency bands of base stations on the basis of the statistical user distribution and acceptable blocking probability levels [5,6]. In the Infrastructure as a Service (IaaS) business model, traditional providers are decoupled into Infrastructure Providers (InPs) and Service Providers (SPs) [7]. InPs focus on deploying and maintaining network equipment, while SPs adopt virtualization technology, manage virtual machines, and flexibly configure virtual networks to meet the computing and network resource demands of cloud computing applications. To improve its revenue-cost ratio while guaranteeing the QoS of applications, the SP should determine the allowable traffic load level and optimize cloud computing task placement according to the network configuration provided by the InP; meanwhile, the InP should design network deployment and upgrade solutions on the basis of the traffic intensity provided by the SP. The relationship between blocking performance and traffic load therefore serves as an important basis for network planning and task placement in cloud data centers.
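The two planning directions described above (sizing resources for a given load, or admitting load for given resources) can be illustrated on a single link with the classical Erlang B formula. This is a minimal sketch, assuming one link with Poisson arrivals and exponentially distributed holding times; the function names are hypothetical, and it is not the network-wide analysis developed in this paper.

```python
def erlang_b(load: float, channels: int) -> float:
    """Erlang B blocking probability via the stable recursion
    B(0) = 1, B(n) = A*B(n-1) / (n + A*B(n-1)), with A the offered load in Erlangs."""
    b = 1.0
    for n in range(1, channels + 1):
        b = load * b / (n + load * b)
    return b


def min_channels(load: float, target: float, limit: int = 100_000) -> int:
    """Planning direction: smallest channel count whose blocking does not exceed target."""
    for n in range(limit + 1):
        if erlang_b(load, n) <= target:
            return n
    raise ValueError("target blocking not reachable within limit")


def max_load(channels: int, target: float, tol: float = 1e-9) -> float:
    """Admission direction: largest offered load whose blocking stays at or below target.
    Erlang B increases monotonically with load, so bisection applies."""
    if not 0.0 < target < 1.0:
        raise ValueError("target must lie strictly between 0 and 1")
    lo, hi = 0.0, 1.0
    while erlang_b(hi, channels) < target:  # grow the bracket until it contains the root
        hi *= 2.0
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if erlang_b(mid, channels) <= target:
            lo = mid
        else:
            hi = mid
    return lo


if __name__ == "__main__":
    print(min_channels(2.0, 0.05))  # 5
    print(round(max_load(5, 0.05), 3))
```

In a multi-link DCN the per-link loads are coupled, which is exactly why network-level methods such as EFPA are needed; the single-link case only shows the shape of the trade-off.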

Blocking probability was originally defined as the statistical probability that a telephone connection cannot be established due to insufficient transmission resources in the network [8]. It was later extended to end-to-end connections or flows, i.e., sequences of packets sharing the same five-tuple [9]. In circuit switching networks or connection-oriented packet switching networks, blocking means that a call or connection cannot be established; in general connectionless transmission, blocked packets may be temporarily stored in a buffer while waiting for idle bandwidth, or discarded if the buffer is full or a timeout expires [10]. Thus, even though a data flow as a whole may never be blocked outright, blocking probability is related to other QoS indicators such as delay and packet loss ratio and can still serve as an implicit network performance metric in packet switching networks.

Network blocking performance analysis can be traced back to the computation of blocking probabilities in circuit switching networks. C. Y. Lee of Bell Laboratories first analyzed the blocking rate of circuit switching networks with single-channel links [11]. Assuming that the loads on different links are independent, one can obtain the blocking rate of a multi-stage circuit switching network. Under this link load independence assumption, the theoretical blocking rate given by Lee's method is higher than the simulated one and can therefore serve as a conservative upper bound on the network blocking probability. N. Pippenger took into account the load correlation between the front and rear links in crossbar circuit switching networks and derived a theoretical lower bound on the blocking probability [12]. E. Valdimarsson extended Lee's and Pippenger's blocking analysis models to multi-rate networks, such as asynchronous transfer mode (ATM) circuit switching networks and packet-switched networks [13], where the rate of each link can be continuous or discrete.
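Lee's link-independence reasoning can be sketched as a series/parallel composition of link occupancy probabilities. This is a minimal sketch, assuming each link is independently busy with a given probability; the function names are hypothetical, and the three-stage example with m middle-stage switches is an illustrative case rather than a formula quoted from [11].

```python
from math import prod


def path_blocking(link_occupancy: list[float]) -> float:
    """Links in series: a path is usable only if every link is free.
    Under link load independence, P(path free) = product of (1 - p_l)."""
    return 1.0 - prod(1.0 - p for p in link_occupancy)


def lee_blocking(paths: list[list[float]]) -> float:
    """Alternative paths in parallel: the connection is blocked only if
    every candidate path is blocked (again assuming independence)."""
    return prod(path_blocking(p) for p in paths)


if __name__ == "__main__":
    # Three-stage network: m middle-stage switches, each path crosses two
    # inter-stage links with occupancy p, giving (1 - (1 - p)^2)^m.
    p, m = 0.5, 2
    print(lee_blocking([[p, p]] * m))  # 0.5625
```

Because independence ignores the fact that a busy first-stage link makes certain second-stage states more or less likely, estimates of this kind can deviate from simulation, which is the gap Pippenger's correlation-aware analysis addresses.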

Besides multi-stage circuit switching networks, the blocking performance of optical switching networks has also been widely studied. When full-wavelength converters are adopted, a transmission path may use different wavelengths on different links, so an optical switching network can be treated as an equivalent multi-rate circuit switching network. On the basis of the link load independence assumption, the authors in [14] adopted the Erlang Fixed Point Approximation (EFPA) method to evaluate the blocking performance of optical switching networks with full-wavelength converters and static routing. In the EFPA method, the traffic on different links is assumed to follow independent Poisson processes, the effective load on a link equals the accumulated effective load of all connections passing through it, and the blocking rates of links and connections are updated iteratively until convergence. In [14], theoretical and simulated blocking probabilities were compared for star, ring, and mesh-torus networks; the two matched well except in the ring network, where the high load correlation between adjacent links invalidates the link load independence assumption. The EFPA method has been widely used for blocking rate analysis in circuit switching and optical switching networks with general topologies [15,16,17,18,19]. In view of its high computational complexity, E. Abramov et al. proposed a simplified EFPA method suitable for networks with a large number of channels per link [20]. Besides theoretical analysis, the authors in [21] built a routing and spectrum assignment simulation framework to evaluate the blocking probabilities of existing spectrum assignment (SA) strategies under different network load conditions.
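The EFPA iteration described above can be sketched as follows. This is a minimal single-rate version, assuming every connection occupies one channel per link, each connection type has a fixed route, and all links are updated simultaneously by plain repeated substitution; the names `efpa` and `erlang_b` and the dictionary-based inputs are hypothetical and not taken from [14].

```python
from math import prod


def erlang_b(load: float, channels: int) -> float:
    """Erlang B blocking probability (stable recursion)."""
    b = 1.0
    for n in range(1, channels + 1):
        b = load * b / (n + load * b)
    return b


def efpa(capacity, routes, loads, tol=1e-10, max_iter=10_000):
    """Erlang fixed-point (reduced-load) approximation.

    capacity: dict mapping link id -> number of channels
    routes:   list of tuples of link ids, one route per connection type
    loads:    offered load of each connection type, in Erlangs
    Returns (dict of link blocking probabilities, list of route blocking probabilities).
    """
    blocking = {link: 0.0 for link in capacity}
    for _ in range(max_iter):
        updated = {}
        for link, channels in capacity.items():
            # Load offered to this link, thinned by blocking on the route's other links.
            reduced = sum(
                a * prod(1.0 - blocking[k] for k in route if k != link)
                for route, a in zip(routes, loads)
                if link in route
            )
            updated[link] = erlang_b(reduced, channels)
        delta = max(abs(updated[l] - blocking[l]) for l in capacity)
        blocking = updated
        if delta < tol:
            break
    # A route is blocked unless every link on it accepts (link independence).
    route_blocking = [1.0 - prod(1.0 - blocking[l] for l in route) for route in routes]
    return blocking, route_blocking
```

For example, with two single-channel links in series carrying one route of 1 Erlang, the fixed point satisfies B = (1 - B)/(2 - B), i.e., B = (3 - √5)/2 ≈ 0.382 per link and ≈ 0.618 for the route. Plain substitution converged in this case; for some topologies a damped update may be needed.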

In addition to the link load independence assumption, some works have proposed blocking rate analysis methods based on the object independence assumption [22,23]. In this model, the correlation between links belonging to the same multi-hop connection is considered, and individual free links and multi-hop connections are treated as independent objects. The object independence-based method was first proposed for the ring network, and R.C. Almeida later extended it to generic topologies, including linear, ring, tree, and mesh topologies [23]. Although it achieves higher approximation accuracy than methods based on the link load independence assumption, it is only applicable to single-channel networks.

Compared with other networks, DCNs are characterized by topological symmetry and traffic aggregation. The over-subscription ratio of a DCN is defined as the ratio of the aggregate bandwidth demand from end servers to the aggregate capacity of the core switch links [24]. The over-subscription ratio can be set above one to reduce the numbers of aggregation and core switches, saving capital investment. With a high over-subscription ratio, the core-layer links tend to become the bottleneck, and the aggregated traffic causes considerable load correlation among links in adjacent layers. The authors in [25,26] analyzed multicast blocking rates in fat-tree DCNs and proposed new multicast scheduling strategies to reduce blocking, based on the link load independence assumption. In [27], the blocking probabilities of different DCN architectures were compared through simulation and some congestion avoidance approaches were discussed, but no theoretical analysis was carried out. Rahul et al. discussed how to minimize the number of occupied wavelengths by solving an optimization problem while achieving a target blocking performance, with different wavelength converter distributions and buffer sizes [28]. The blocking performance analyses in [27,28] are both based on simulation rather than theoretical derivation. The authors in [10] derived the theoretical blocking performance of switches at different layers in optical data center networks using a Markov chain model. However, their analysis focused on blocking at each switch's ports rather than on the blocking of a flow along its whole path.

In summary, few works have focused on the theoretical blocking performance of DCNs, and the conventional EFPA method yields a relatively large approximation error because it does not take the characteristics of DCNs into account. In this paper, a modified EFPA method is proposed for blocking performance analysis in tree-type DCNs, based on the traffic load correlation between edge links and aggregation links. Its theoretical accuracy is verified by simulation. The proposed analysis method may serve as a guideline for DCN configuration and traffic engineering.

The remainder of this paper is organized as follows. Section 2 introduces the system model. Section 3 presents the proposed analysis method. The simulation results are presented in Section 4, and Section 5 concludes the paper.

2. System Model

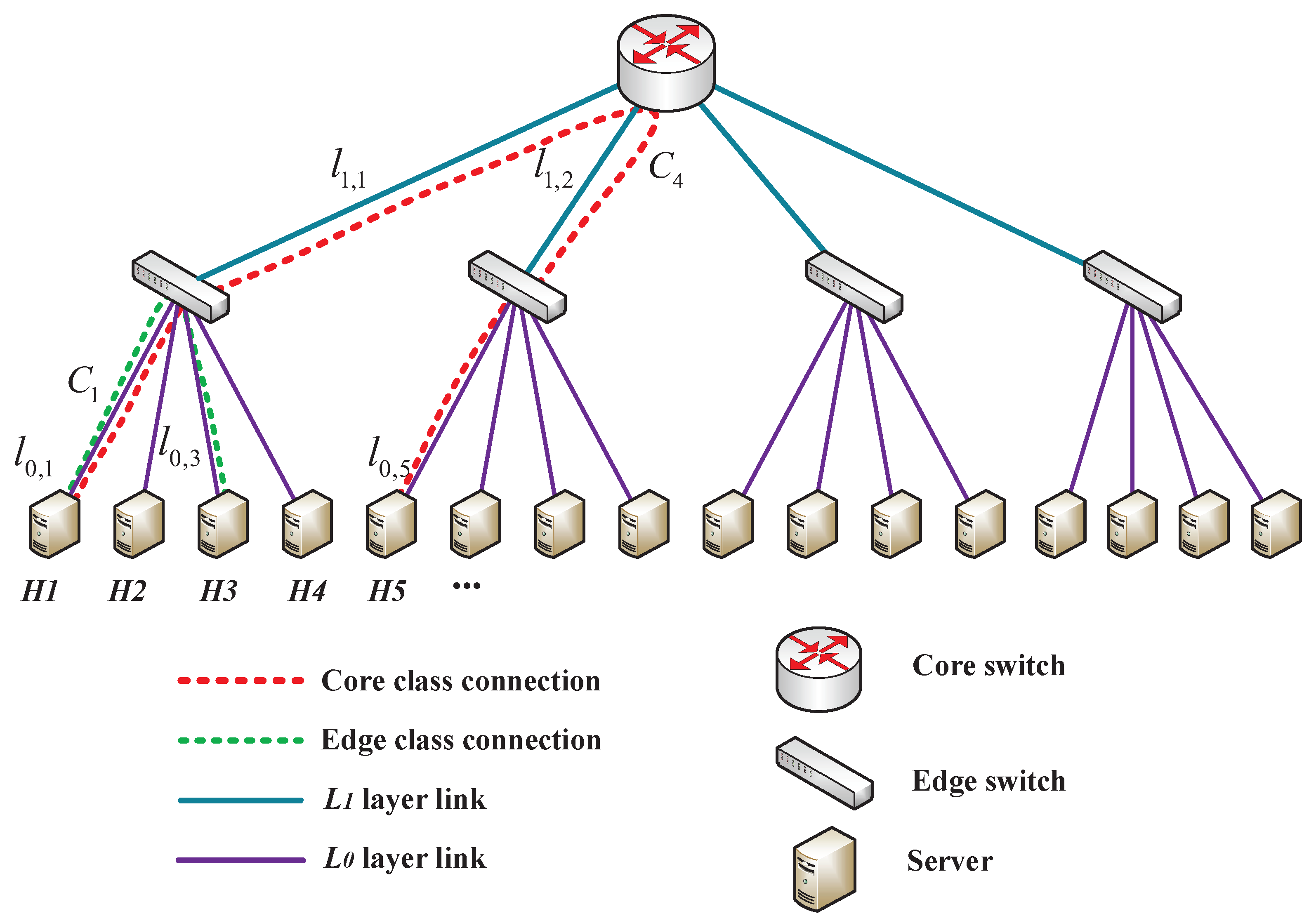

The switch-centric two-layer tree DCN architecture shown in Figure 1 is considered, where the tree factor is K: each core switch connects K edge switches, and each edge switch connects K physical servers. The total number of servers is $K^2$, and the servers are denoted by . For simplicity, the edge links between edge switches and servers are called the layer links, while the aggregation links between the core and edge switches are called the layer links. The j-th layer link is denoted by . We assume that the edge and aggregation links contain and sub-channels, respectively, and that the over-subscription ratio is , i.e., K times the number of sub-channels per edge link divided by the number of sub-channels per aggregation link. The logical sub-channels can be mapped to physical wavelengths/sub-carriers in optical networks [8] or time slots/cycles in time-deterministic IP networks [29].
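The topology and link usage described above can be captured in a few helper functions. This is a minimal sketch under stated assumptions: servers are numbered 0 to K^2 - 1 with K consecutive servers per edge switch, links are labelled ("edge", server) and ("agg", edge switch), and channel counts are passed as plain integers; all of these names are hypothetical and do not follow the paper's own notation.

```python
def num_servers(k: int) -> int:
    """Two-layer tree: K edge switches under the core, K servers per edge switch."""
    return k * k


def edge_switch(server: int, k: int) -> int:
    """Hypothetical numbering: K consecutive servers share one edge switch."""
    return server // k


def path_links(src: int, dst: int, k: int) -> list[tuple[str, int]]:
    """Links crossed by a connection between two distinct servers."""
    if src == dst:
        raise ValueError("a connection needs two distinct servers")
    a, b = edge_switch(src, k), edge_switch(dst, k)
    if a == b:
        # Same edge switch: only the two edge links are used.
        return [("edge", src), ("edge", dst)]
    # Different edge switches: edge -> aggregation -> core -> aggregation -> edge.
    return [("edge", src), ("agg", a), ("agg", b), ("edge", dst)]


def oversubscription(k: int, edge_channels: int, agg_channels: int) -> float:
    """Server-side capacity under one edge switch over its aggregation link capacity."""
    return k * edge_channels / agg_channels


if __name__ == "__main__":
    print(num_servers(4))              # 16
    print(path_links(0, 5, 4))         # [('edge', 0), ('agg', 0), ('agg', 1), ('edge', 5)]
    print(oversubscription(4, 3, 8))   # 1.5
```

Connections between servers under different edge switches cross two aggregation links, which is where the load correlation between adjacent layers arises when the aggregation links are over-subscribed.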

In recent years, the spine-leaf network has become one of the most popular commercial DCN architectures. It is assumed that the core and edge switches are aware of the link states and can select the optimal link for the next hop, so ideal routing and load balancing are achieved when an arriving connection selects its path. In this case, the multiple spine/core switches can be treated as one large equivalent core switch, and all of the links from a leaf/edge switch to the spine switches can be treated as one aggregated link from that leaf switch to the equivalent core switch. A spine-leaf DCN can thus be modeled as an equivalent tree DCN, whose blocking rate is a lower bound on that of the original spine-leaf DCN. Owing to the significant performance improvements of commercial switch products, a two-level spine-leaf DCN can connect tens of thousands of physical servers, which is sufficient for the networking requirements of most small- and medium-sized data centers.
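Why the equivalent tree gives a lower bound can be seen on a single inter-leaf connection request. This is a minimal sketch assuming S spine switches, c sub-channels per leaf-to-spine uplink, and known per-uplink occupancies; the function names are hypothetical. Every request that the spine-leaf network admits is also admitted by the aggregated link, but not conversely.

```python
from itertools import product


def spine_leaf_admits(src_up: list[int], dst_up: list[int], c: int) -> bool:
    """Spine-leaf: some spine must have a free sub-channel on both leaves' uplinks."""
    return any(s < c and d < c for s, d in zip(src_up, dst_up))


def aggregated_admits(src_up: list[int], dst_up: list[int], c: int) -> bool:
    """Equivalent tree: each leaf's uplinks merged into one link of S * c sub-channels."""
    total = len(src_up) * c
    return sum(src_up) < total and sum(dst_up) < total


def implication_holds(spines: int, c: int) -> bool:
    """Exhaustively confirm that spine-leaf admission implies aggregated admission."""
    states = list(product(range(c + 1), repeat=spines))
    return all(
        aggregated_admits(list(s), list(d), c)
        for s in states
        for d in states
        if spine_leaf_admits(list(s), list(d), c)
    )


if __name__ == "__main__":
    # Two spines, one sub-channel per uplink: spine 0 is full on the source side,
    # spine 1 is full on the destination side.
    print(spine_leaf_admits([1, 0], [0, 1], 1))   # False
    print(aggregated_admits([1, 0], [0, 1], 1))   # True
    print(implication_holds(3, 2))                # True
```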

In cloud data centers, each physical server can host multiple virtual machines (VMs) or containers simultaneously, and end-to-end traffic may flow between VM or container pairs located on different physical servers. Any two physical servers can generate multiple bidirectional end-to-end connections, and all connections between the same physical server pair are treated as the same connection type. The DCN traffic matrix is the aggregate of all end-to-end connections. Each connection occupies one sub-channel on every link of its path, and connections of different types arrive independently. Connections of the i-th type, denoted by , follow a Poisson arrival process with rate , and their durations are exponentially distributed with mean . Admission control is adopted: an arriving connection is accepted if every link on its path has at least one idle sub-channel; otherwise, it is blocked and discarded.
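The traffic model and admission rule above translate into a small discrete-event Monte Carlo simulation. This is a minimal sketch assuming uniform traffic (every server pair offers the same load), unit mean holding time, and no discarded warm-up period; the server numbering, link labels, and function names are hypothetical, and it is not the simulator used for the results in Section 4.

```python
import heapq
import random
from itertools import combinations


def path_links(src: int, dst: int, k: int) -> list[tuple[str, int]]:
    """Links of a src-dst connection in the two-layer tree (hypothetical labels)."""
    a, b = src // k, dst // k
    if a == b:
        return [("edge", src), ("edge", dst)]
    return [("edge", src), ("agg", a), ("agg", b), ("edge", dst)]


def simulate_blocking(k: int, edge_ch: int, agg_ch: int, load: float,
                      n_arrivals: int = 200_000, seed: int = 1) -> float:
    """Fraction of blocked arrivals when every server pair offers `load` Erlangs
    with mean holding time 1."""
    rng = random.Random(seed)
    pairs = list(combinations(range(k * k), 2))       # one connection type per server pair
    capacity = {("edge", s): edge_ch for s in range(k * k)}
    capacity.update({("agg", e): agg_ch for e in range(k)})
    busy = {link: 0 for link in capacity}
    total_rate = load * len(pairs)                    # superposition of the Poisson streams
    departures: list[tuple[float, int, list]] = []    # (end time, tie-breaker, links)
    t, blocked = 0.0, 0
    for i in range(n_arrivals):
        t += rng.expovariate(total_rate)
        # Release every connection that ended before this arrival.
        while departures and departures[0][0] <= t:
            _, _, links = heapq.heappop(departures)
            for link in links:
                busy[link] -= 1
        links = path_links(*rng.choice(pairs), k)
        # Admission control: accept only if every link on the path has an idle sub-channel.
        if all(busy[link] < capacity[link] for link in links):
            for link in links:
                busy[link] += 1
            heapq.heappush(departures, (t + rng.expovariate(1.0), i, links))
        else:
            blocked += 1
    return blocked / n_arrivals
```

Choosing the connection type uniformly from the merged arrival stream is equivalent to independent Poisson arrivals of equal rate per type; for non-uniform loads the choice would be weighted by each type's rate.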

4. Simulation Results

We conducted Monte Carlo simulations to validate the proposed LLC-based network blocking analysis method. The simulation scenario is a symmetric two-layer tree DCN with tree factor K and over-subscription ratio . A uniform traffic distribution was assumed, and the load of every connection type was . The blocking performance obtained with four methods was compared: Monte Carlo simulation and three analytical approaches, namely Lee's method [11], the classical EFPA method [14], and the proposed LLC-based method. Cases with different link capacities and over-subscription ratios were considered.

For cases with tree factor and over-subscription ratio 1, the simulated and analytical blocking rates under different sub-channel numbers are compared in Figure 2, Figure 3 and Figure 4, respectively. The curves of the EFPA and LLC-based methods both match the simulation curves well, while the curves of Lee's method always lie above them. When the over-subscription ratio is 1, the aggregated bandwidth of each edge switch's edge links equals the bandwidth of its aggregation link. Thus, if an aggregation link of some CC connection is blocked, at least one edge link of that CC connection must be blocked at the same time. In this case, only blocking on the edge links needs to be considered, and the load correlation between edge and core links can be ignored. Therefore, for an over-subscription ratio of 1, also called the fat-tree case, the EFPA and LLC-based methods both achieve satisfactory accuracy in blocking probability analysis.
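The inference that, at an over-subscription ratio of 1, a blocked aggregation link implies a blocked edge link can be written as a short counting argument. The notation here is introduced only for this sketch and is an assumption: N_E and N_C are the sub-channel counts of an edge link and an aggregation link, n_j is the number of busy sub-channels on the j-th edge link under a given edge switch, and m is the number of busy sub-channels on that switch's aggregation link. Each connection on the aggregation link also occupies one edge link below it, while edge links may additionally carry connections between servers under the same switch.

$$
\begin{aligned}
&K N_E = N_C \quad (\text{over-subscription ratio } 1), \qquad m \le \sum_{j=1}^{K} n_j, \qquad n_j \le N_E,\\
&m = N_C \;\Longrightarrow\; \sum_{j=1}^{K} n_j \ge K N_E \;\Longrightarrow\; n_j = N_E \quad \text{for all } j .
\end{aligned}
$$

Hence, whenever the aggregation link of an edge switch is full, every edge link under that switch is also full, so a connection through that switch is already blocked on its edge link.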

For cases with tree factor and over-subscription ratio , the simulated and analytical blocking rates under different sub-channel numbers are compared in Figure 5, Figure 6 and Figure 7, respectively. As these figures show, the LLC-based method curve is closer to the simulation curve than those of both Lee's method and the EFPA method, and it agrees well with the simulation curve, especially for blocking rates below 4%. When the blocking rate exceeds 4%, the approximation errors of both the LLC-based and EFPA methods gradually increase with the traffic load and channel number. From the perspective of network deployment, blocking probabilities above 5% are generally unacceptable to users, and network operators would then upgrade capacity or limit the number of subscribed users to reduce the network blocking rate. Therefore, the low-blocking-rate regime is the more important one in blocking performance evaluation.

For cases with tree factor and over-subscription ratio , the simulated and analytical blocking rates under different sub-channel numbers are compared in Figure 8, Figure 9 and Figure 10, respectively. As these figures show, the LLC-based method curve is closer to the simulation curve than the other two in the blocking rate range of 1% to 4%, whereas the EFPA method matches the simulation curve best for blocking rates below 1%. For higher blocking rates, the curves of the LLC-based and EFPA methods gradually converge.

For cases with tree factor , the simulated and analytical blocking rates under different over-subscription ratios and sub-channel numbers are compared in Figure 11 and Figure 12, respectively. Similar to the cases with , the blocking rate range in which the LLC-based method is most accurate differs slightly between and . With , the LLC-based method curve matches the simulation curve best, especially for blocking rates below 4%, while with the EFPA method still achieves the most accurate blocking rate below 1%. It can therefore be concluded that taking the load correlation between adjacent-layer links into account improves the accuracy of network blocking rate analysis in two-layer tree-type DCNs, especially with an over-subscription ratio of 1.5.