Application Research of Bridge Damage Detection Based on the Improved Lightweight Convolutional Neural Network Model

Abstract

1. Introduction

2. Methods

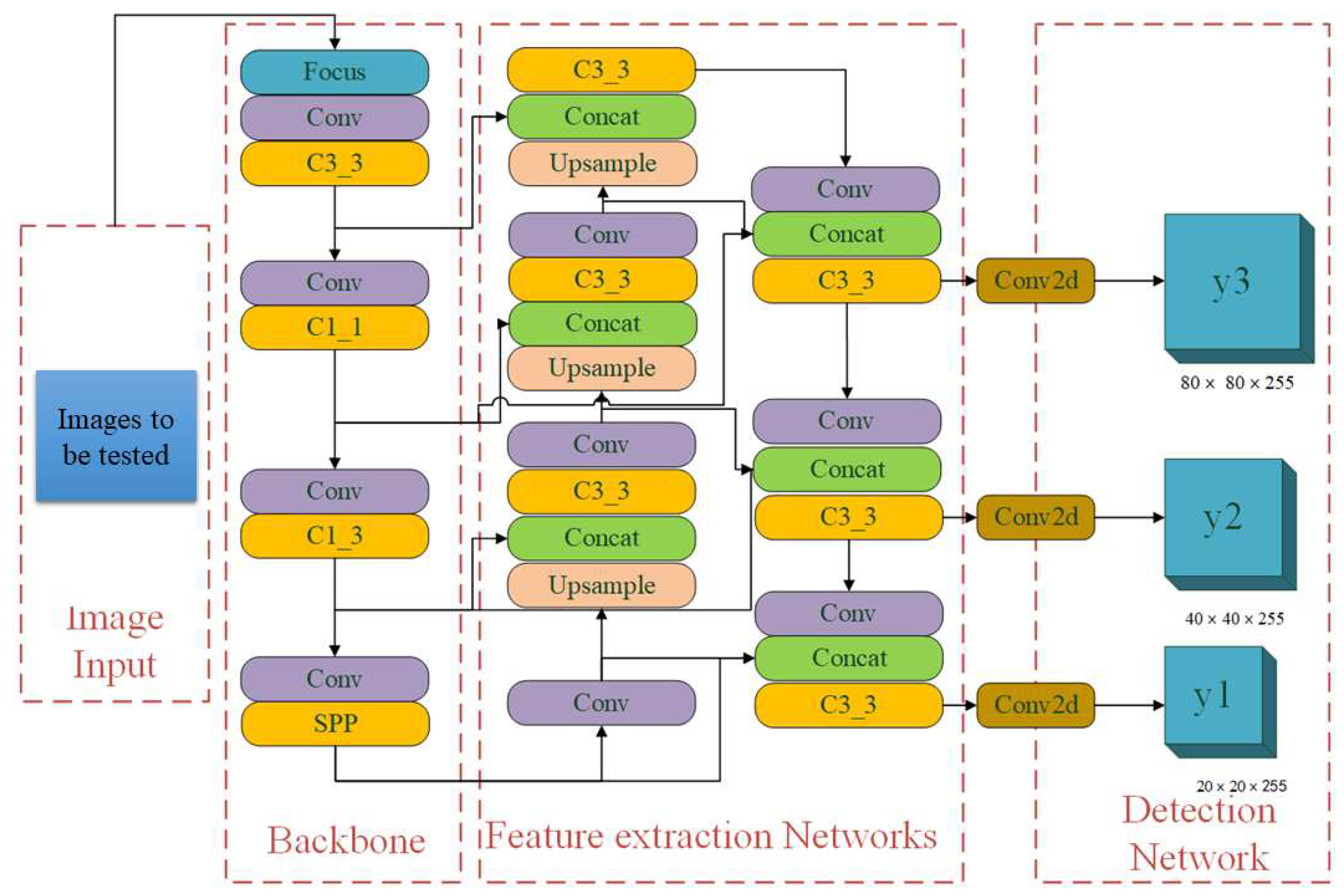

2.1. Algorithm Introduction and BE-YOLOv5S Structure

2.2. Feature Extraction Networks of the BE-YOLOv5S Model

2.3. Improved Sample Imbalance Handling Mechanism for BE-YOLOv5S Bridge Damage Detection Model

3. Experiment

3.1. Development Environment and Evaluation Metrics

3.1.1. Development Environment

3.1.2. Evaluation Metrics

The Confusion Matrix

Precision, Recall, F1-Score, and PR Curve

Mean Average Precision IoU = 0.5 (mAP@.5)

Frames Per Second (FPS)

3.2. Creation of the Dataset

3.3. Model Building Details

4. Results and Analysis

4.1. Evaluation Metrics Results and Discussion

4.2. Results and Discussion of Testing under Complex Conditions

4.3. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Split | Cracks | Efflorescence | Rust Staining | Total |
|---|---|---|---|---|
| Train | 308 | 247 | 284 | 839 |
| Test | 39 | 31 | 35 | 105 |
| Val | 39 | 31 | 35 | 105 |
| Total | 386 | 309 | 354 | 1049 |
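The split sizes in the dataset table can be cross-checked with a few lines of arithmetic. The following is a minimal sketch, not the authors' tooling: the counts are copied from the table, while the names `SPLITS`, `split_totals`, `class_totals`, and `split_fractions` are hypothetical helpers introduced here for illustration.

```python
# Image counts per damage class, copied from the dataset table.
# All names below are illustrative assumptions, not from the paper.
SPLITS = {
    "Train": {"Cracks": 308, "Efflorescence": 247, "Rust Staining": 284},
    "Test": {"Cracks": 39, "Efflorescence": 31, "Rust Staining": 35},
    "Val": {"Cracks": 39, "Efflorescence": 31, "Rust Staining": 35},
}


def split_totals(splits):
    """Sum the class counts within each split (row totals)."""
    return {name: sum(counts.values()) for name, counts in splits.items()}


def class_totals(splits):
    """Sum each class across all splits (column totals)."""
    totals = {}
    for counts in splits.values():
        for cls, n in counts.items():
            totals[cls] = totals.get(cls, 0) + n
    return totals


def split_fractions(splits):
    """Share of the whole dataset that falls into each split."""
    totals = split_totals(splits)
    grand_total = sum(totals.values())
    return {name: n / grand_total for name, n in totals.items()}


for name, fraction in split_fractions(SPLITS).items():
    print(f"{name}: {fraction:.1%} of all images")
```

With the tabulated counts, the row and column sums reproduce the table's totals, and the splits come out at roughly 80% training, 10% test, and 10% validation.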

| Model | mAP@.5 | Precision | Recall | F1-Score | FPS |
|---|---|---|---|---|---|
| YOLOv3-tiny | 0.376 | 0.293 | 0.701 | 0.413 | 244 |
| YOLOv5S | 0.807 | 0.867 | 0.817 | 0.841 | 189 |
| B-YOLOv5S | 0.811 | 0.841 | 0.803 | 0.822 | 156 |
| BV-YOLOv5S | 0.827 | 0.893 | 0.821 | 0.855 | 185 |
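The F1-Score column can be related to the Precision and Recall columns through the standard definition of F1 as their harmonic mean, F1 = 2PR / (P + R). The sketch below assumes that standard definition is the one used; the values are copied from the results table, and `RESULTS` and `f1_score` are hypothetical names chosen for this example.

```python
# (precision, recall, reported F1) per model, copied from the results table.
# The dictionary and function names are illustrative assumptions.
RESULTS = {
    "YOLOv3-tiny": (0.293, 0.701, 0.413),
    "YOLOv5S": (0.867, 0.817, 0.841),
    "B-YOLOv5S": (0.841, 0.803, 0.822),
    "BV-YOLOv5S": (0.893, 0.821, 0.855),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


for model, (precision, recall, reported) in RESULTS.items():
    computed = f1_score(precision, recall)
    print(f"{model}: computed F1 = {computed:.4f}, reported F1 = {reported:.3f}")
```

Under that assumption, the recomputed values agree with the reported F1-Scores to within the rounding of the three-decimal inputs.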

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Du, F.; Jiao, S.; Chu, K. Application Research of Bridge Damage Detection Based on the Improved Lightweight Convolutional Neural Network Model. Appl. Sci. 2022, 12, 6225. https://doi.org/10.3390/app12126225
