Extended Reality Application Framework for a Digital-Twin-Based Smart Crane

Abstract

1. Introduction

1.1. Industry 4.0 and Industry 5.0

1.2. Digital Twin

1.3. Industrial XR Applications

1.4. Research on XR Development Framework

1.5. Research Gap and Our Contribution

- The control unit of the simulated model was set up by the ROS MoveIt package, which was not compliant with the PLC-based machine.

- The WebSocket protocol was used to connect the simulated model to the applications in Unity in the local network, which was not consistent with the practical industrial scenario.

- The whole framework was established under the simulated and virtual environment, lacking an interface with the physical machine.

- Providing an XR application technological framework for the DT-based machine (see Figure 1).

- Presenting a software integration process for XR applications.

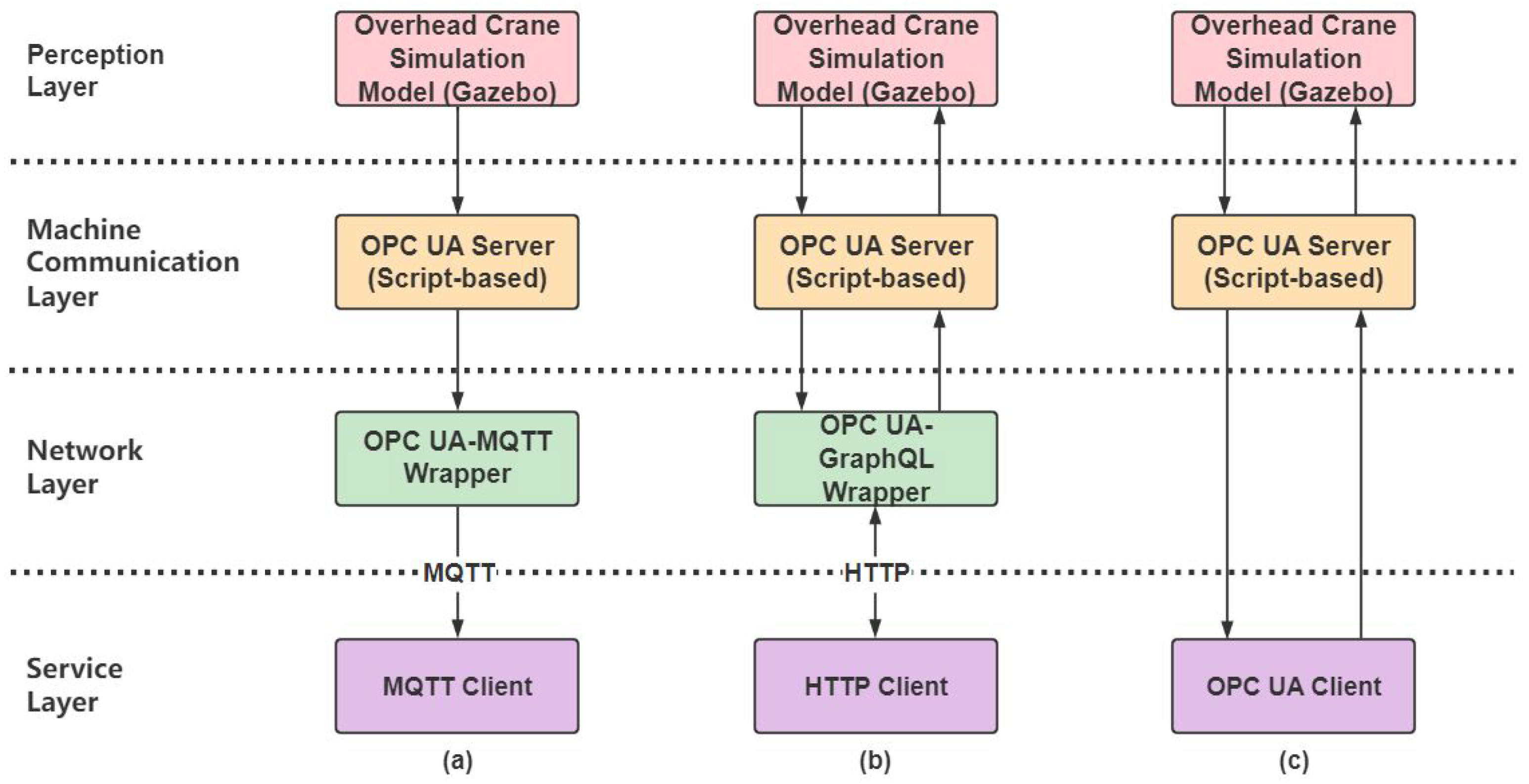

- Developing several XR applications based on the framework leveraging different communication middleware, including OPC UA-MQTT wrapper, OPC UA-GraphQL wrapper, and OPC UA-Unity client.

2. Materials and Methods

2.1. “Ilmatar” Crane

2.2. Simulation Model

2.3. Machine Communication Layer

2.4. Network Layer

2.4.1. OPC UA-MQTT Wrapper

2.4.2. OPC UA-GraphQL Wrapper

2.5. Hardware Setup

2.6. Software Setup

2.7. The XR Environment Development

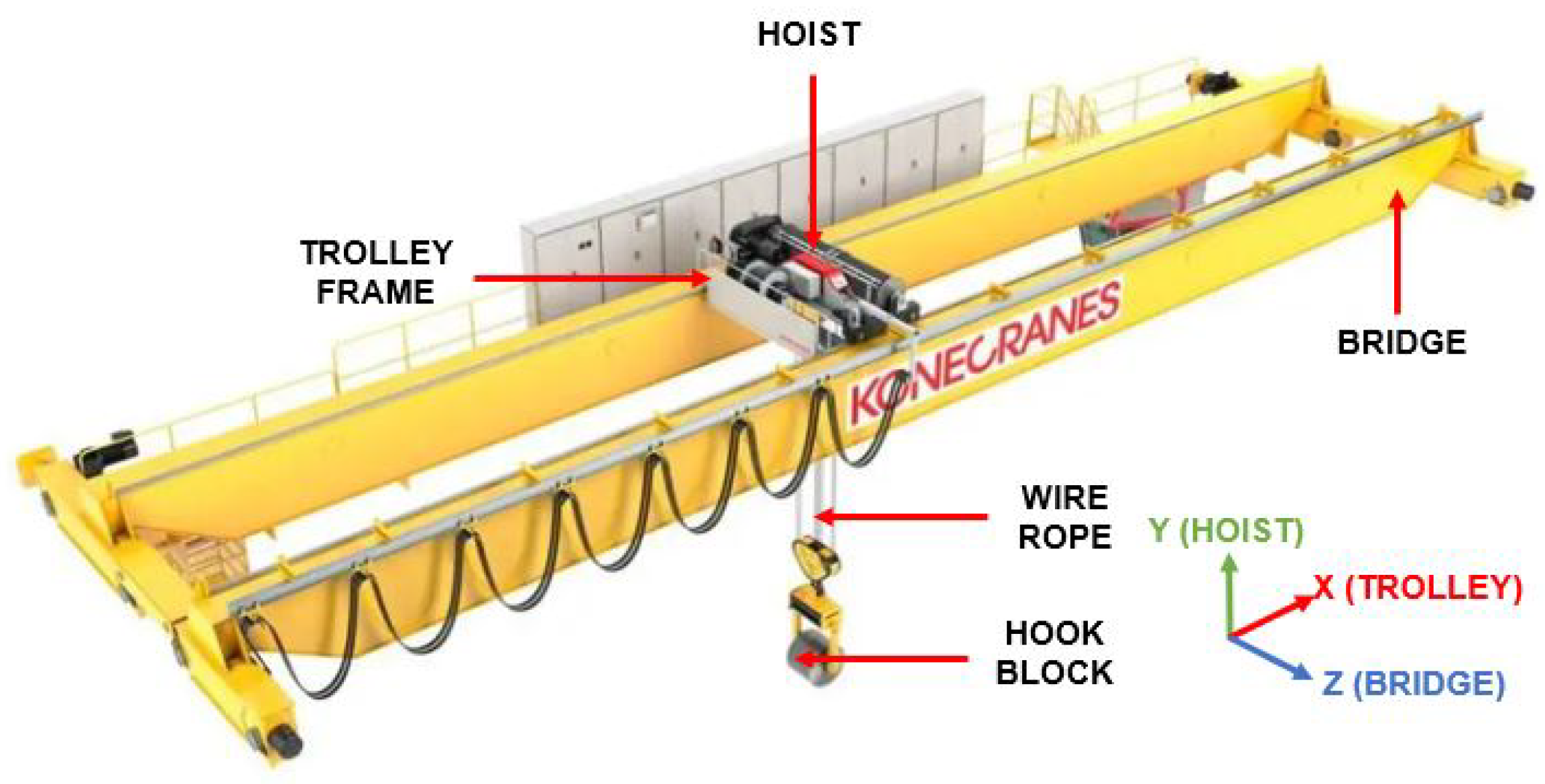

- Identify the key moving systems and separate the corresponding parts in the CAD or original software: The crane was divided into four main moving parts, namely the bridge, trolley, hoist, and hook block (see Figure 2). In Siemens NX, each moving part was saved as an isolated file.

- Convert to a Unity-compatible format: STP is the most general format and is compatible with most industrial CAD software, such as AutoCAD, Siemens NX, and SolidWorks. STP is also a 3D graphics file format that supports product model data exchange, so it is commonly used as the transition format between different software. There are two ways to import a model into Unity: one is to export the model in FBX or OBJ format through a dedicated plugin; the other is to export the model directly in its native file format, such as max or blend, which Unity converts automatically. The former method is suitable when the model originates from industrial 3D software. In our research, the original model file was exported in STP format and then converted to OBJ format through the plugin.

- Set up the coordinate system and establish the parent–child relation of the imported parts: The imported model carries no coordinate relation between its parts. The coordinate system should be re-established in Unity, and the components should be organized into a parent–child hierarchy according to their movement relations.
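
The parent–child organization described in the last step can be illustrated with a minimal sketch. It is not the Unity implementation used in this work: the `Node` class, the axis assignment, and the offsets are assumptions made for illustration, and only translation is modeled (no rotation or scale). It shows how moving the bridge carries the trolley, hoist, and hook block along with it.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Node:
    """A scene node whose position is stored relative to its parent."""
    name: str
    local_position: Vec3 = (0.0, 0.0, 0.0)
    parent: Optional["Node"] = field(default=None, repr=False)
    children: List["Node"] = field(default_factory=list, repr=False)

    def add_child(self, child: "Node") -> "Node":
        """Attach a child node and return it for chaining."""
        child.parent = self
        self.children.append(child)
        return child

    def world_position(self) -> Vec3:
        """Sum the local offsets from this node up to the root."""
        x, y, z = self.local_position
        if self.parent is None:
            return (x, y, z)
        px, py, pz = self.parent.world_position()
        return (px + x, py + y, pz + z)


# Hierarchy following the four moving parts: bridge > trolley > hoist > hook block.
bridge = Node("bridge")
trolley = bridge.add_child(Node("trolley"))
hoist = trolley.add_child(Node("hoist"))
hook_block = hoist.add_child(Node("hook_block"))

# Assumed axes (hypothetical): bridge travels along x, trolley along z, hook along y.
bridge.local_position = (5.0, 0.0, 0.0)
trolley.local_position = (0.0, 0.0, 2.0)
hook_block.local_position = (0.0, -1.5, 0.0)

print(hook_block.world_position())
```

Because each part stores only its offset from its parent, a status update for one axis needs to touch a single node, and the children follow automatically.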

2.8. Measurement Setup

3. Results

3.1. The Performance of the Framework

3.2. Use Case

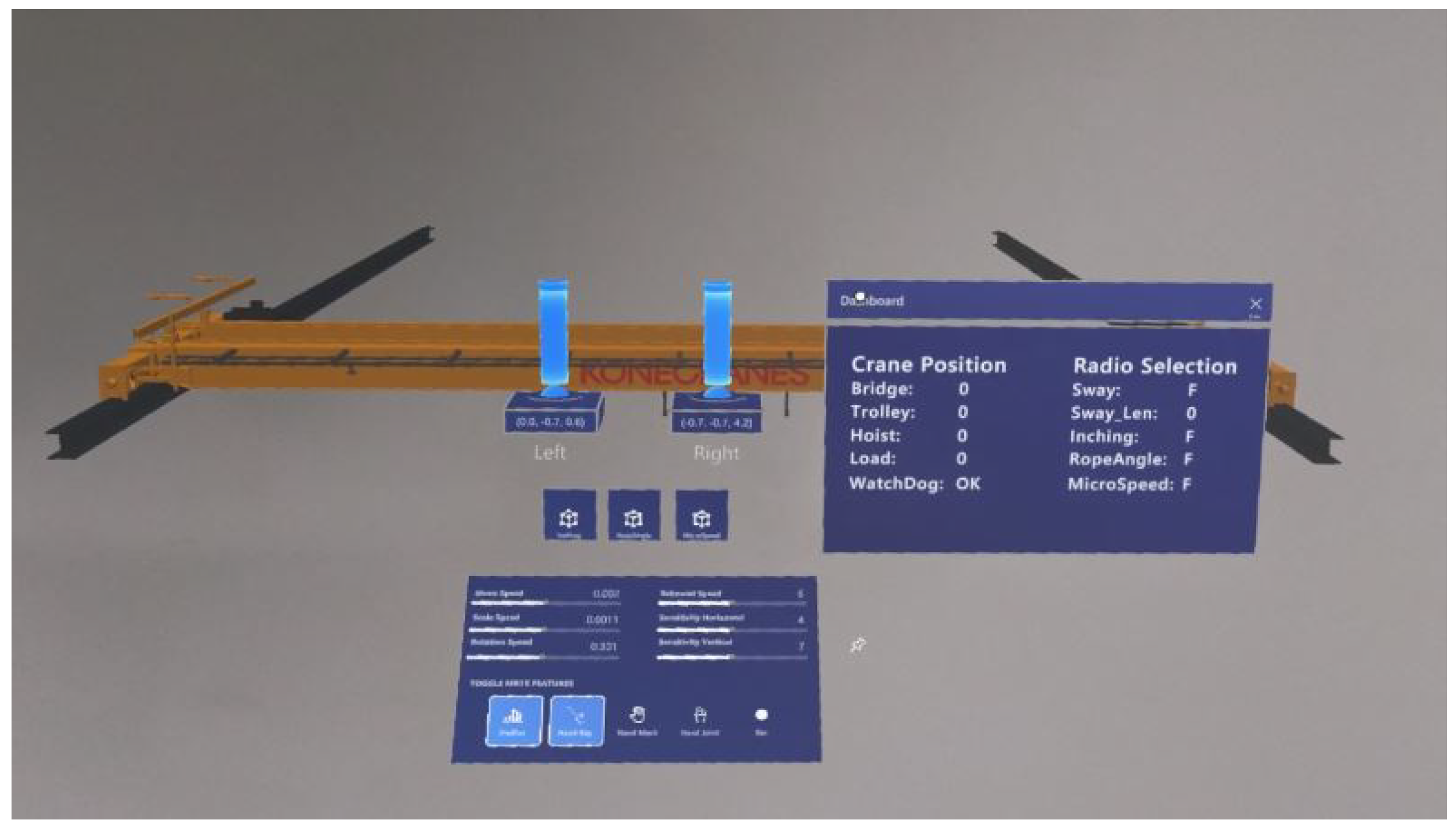

3.2.1. VR Training Application

- Physical VR hand: The two learning contents (the operation of the device controller, and the operation of the virtual remote controller of the crane) in the VR environment increased the operation complexity. The development of the physical VR hands eased the sense of unfamiliarity and improved the immersion.

- Multi-Interactor: Different Interactors were implemented to improve the user experience. Interactors are used for interacting with interactables. Three kinds of Interactors were included: the Ray Interactor, used for interacting with interactables at a distance; the Direct Interactor, used for directly interacting with interactables that are being touched; and the Socket Interactor, which holds an interactable and raises an event when an interactable is placed into, or removed from, the socket.

- Multi-modal interaction capabilities: Video and sound streams were imported. The video stream was used for the tutorial on the crane features in place of a text description, while audio functions were set up to give feedback on the operation.

- Multi-scene: Four scenes were organized to give a progressive learning process, including the introduction of the VR environment, getting familiar with the interaction features in the VR environment, learning about the functional features of the “Ilmatar” crane, and completing a virtual training (see Figure 9).

- Design parameter synchronization: The design parameters (see Table 3) were stored in an independent script-based component in Unity, enabling the request of updated data from the external resource (DT document) through HTTP. In other words, the VR training application is a dynamic data-driven XR environment.
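
The design parameter synchronization can be sketched as follows. This is a minimal illustration, not the script component used in the application: the JSON layout with a `design_parameters` key, the function names, and the default values are assumptions, since the structure of the DT document is not specified here. The sketch fetches a document over HTTP and overwrites only the parameters the application already knows.

```python
import json
import urllib.request
from typing import Dict

# Illustrative defaults. The names follow the design parameters listed in
# Table 3; the values are assumptions for this sketch.
DEFAULT_DESIGN_PARAMETERS: Dict[str, float] = {
    "Hoist_height_max": 3.0,
    "Hoist_speed_max": 8.0,
    "Trolley_speed_max": 20.0,
    "Bridge_speed_max": 32.0,
}


def fetch_dt_document(url: str, timeout: float = 5.0) -> str:
    """Download the DT document as text over HTTP (the URL is hypothetical)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


def apply_dt_document(current: Dict[str, float], document_text: str) -> Dict[str, float]:
    """Return a copy of `current` updated with numeric values from the document.

    Unknown names and non-numeric values are ignored, so a malformed entry
    cannot introduce an unexpected parameter.
    """
    document = json.loads(document_text)
    updated = dict(current)
    for name, value in document.get("design_parameters", {}).items():
        is_number = isinstance(value, (int, float)) and not isinstance(value, bool)
        if name in updated and is_number:
            updated[name] = float(value)
    return updated


# Usage with an inline document instead of a network request.
sample_document = '{"design_parameters": {"Hoist_speed_max": 6.0, "Unknown": 1}}'
parameters = apply_dt_document(DEFAULT_DESIGN_PARAMETERS, sample_document)
print(parameters["Hoist_speed_max"])
```

Returning a new dictionary rather than mutating the defaults keeps a fallback available when the external resource cannot be reached.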

3.2.2. Remote Monitor/Control Application

3.2.3. Mixed Reality Control Application

4. Discussion

4.1. Perception Layer

4.2. Network Layer

4.3. Service Layer

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AIIC | Aalto Industrial Internet Campus |
| API | Application Programming Interface |
| AR | Augmented Reality |
| CPS | Cyber–Physical Systems |
| DDS | Data Distribution Service |
| DT | Digital Twin |
| FOV | Field of View |
| GraphQL | Graph Query Language |
| HMI | Human–Machine Interaction |
| IDE | Integrated Development Environment |
| IIoT | Industrial Internet of Things |
| IMU | Inertial Measurement Unit |
| M2M | Machine-to-Machine |
| MQTT | Message Queuing Telemetry Transport |
| MR | Mixed Reality |
| MRTK | Microsoft Mixed Reality Toolkit |
| NASA | National Aeronautics and Space Administration |
| OPC UA | Open Platform Communications Unified Architecture |
| OSEMA | Open Sensor Manager |
| PLC | Programmable Logic Controller |
| PLM | Product Lifecycle Management |
| ROS | Robot Operating System |
| RPC | Remote Procedure Call |
| RTOS | Real-Time Operating System |
| SDF | Simulation Description Format |
| SDK | Software Development Kit |
| UI | User Interface |
| URDF | Unified Robot Description Format |
| VR | Virtual Reality |
| XML | Extensible Markup Language |
| XR | Extended Reality |
| XRTK | Extended Reality Toolkit |


| Subcomponent | Feature | Value |
|---|---|---|
| Hoist | Lifting height | 3.0 m |
| | Lifting speed | 8.0 m/min stepless |
| Trolley | Movement range | 9.0 m |
| | Movement speed | 20.0 m/min stepless |
| Bridge | Movement range | 19.8 m |
| | Movement speed | 32.0 m/min stepless |

| Content | Oculus Quest 2 | HoloLens 2 | Varjo XR-1 |
|---|---|---|---|
| Manufacturer | | Microsoft | Varjo Technology |
| Resolution | 1832 × 1920 | 2 K | 1920 × 1080; 1440 × 1600 |
| Field of View (°) | 120 | 43 × 29 | 87 |
| Refresh Rate | 90 Hz | 60 Hz | 90 Hz |
| Tracking Mode | inside-out | inside-out | outside-in |
| Hand Tracking | √ | √ | |
| Controller | √ | | √ |
| Virtual Reality | √ | | √ |
| Mixed Reality | | √ | √ |

| Class | Name | Format | Ref_Type | Data Source | Description |
|---|---|---|---|---|---|
| Design | Hoist_height_max | float | constant | Unity | Hoist maximum height |
| | Hoist_height_min | float | constant | Unity | Hoist minimum height |
| | Hoist_speed_max | float | constant | Unity | Hoist maximum speed |
| | Hoist_capacity | float | constant | Unity | Hoist maximum capacity |
| | Trolley_range_max | float | constant | Unity | Trolley maximum range |
| | Trolley_range_min | float | constant | Unity | Trolley minimum range |
| | Trolley_speed_max | float | constant | Unity | Trolley maximum speed |
| | Bridge_range_max | float | constant | Unity | Bridge maximum location |
| | Bridge_range_min | float | constant | Unity | Bridge minimum range |
| | Bridge_speed_max | float | constant | Unity | Bridge maximum speed |
| | Coefficient_microspeed | float | constant | Unity | Microspeed scale factor |
| | Coefficient_inching_speed | float | constant | Unity | Predefined speed for inching |
| | Coefficient_inching_distance | float | constant | Unity | Predefined distance for inching |
| Status | HoistPosition | float | variable | Middleware | The height of the hook block |
| | TrolleyPosition | float | variable | Middleware | The location of the trolley |
| | BridgePosition | float | variable | Middleware | The location of the bridge |
| Control | Inching | Boolean | variable | Unity/Middleware | Inching button status |
| | MicroSpeed | Boolean | variable | Unity/Middleware | Microspeed button status |
| | SwayControl | Boolean | variable | Unity/Middleware | Swaycontrol button status |
| | RopeAngleFeatureBypass | Boolean | variable | Unity/Middleware | Rope angle button status |
| | SwayControl_SlingLength_mm | int | variable | Unity/Middleware | The sling length |
| | Hoist.Up | Boolean | variable | Unity | Whether hoist moves up |
| | Hoist.Down | Boolean | variable | Unity | Whether hoist moves down |
| | Hoist.Speed | float | variable | Unity | The speed of the hoist |
| | Trolley.Forward | Boolean | variable | Unity | Whether trolley moves forward |
| | Trolley.Backward | Boolean | variable | Unity | Whether trolley moves backward |
| | Trolley.Speed | float | variable | Unity | The speed of trolley |
| | Bridge.Forward | Boolean | variable | Unity | Whether bridge moves forward |
| | Bridge.Backward | Boolean | variable | Unity | Whether bridge moves backward |
| | Bridge.Speed | float | variable | Unity | The speed of bridge |
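
How the design constants and control variables in the table above could interact is sketched below. This is an assumption-laden illustration rather than the logic of the application: the `HoistDesign` class, the `hoist_velocity` function, the stop-on-conflict rule, and the numeric values are hypothetical. Only the variable names (`Hoist.Up`, `Hoist.Down`, `Hoist.Speed`, `MicroSpeed`, `Hoist_speed_max`, `Coefficient_microspeed`) come from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HoistDesign:
    """Design-class constants for the hoist (values are supplied by the caller)."""
    speed_max: float               # corresponds to Hoist_speed_max
    coefficient_microspeed: float  # corresponds to Coefficient_microspeed


def hoist_velocity(up: bool, down: bool, speed: float,
                   micro_speed: bool, design: HoistDesign) -> float:
    """Return a signed hoist velocity: positive is up, negative is down.

    Assumption: pressing neither direction, or both at once, means stop.
    """
    if up == down:
        return 0.0
    # Clamp the requested speed into the range allowed by the design limit.
    magnitude = min(max(speed, 0.0), design.speed_max)
    # Assumption: microspeed scales the commanded speed by a constant factor.
    if micro_speed:
        magnitude *= design.coefficient_microspeed
    return magnitude if up else -magnitude


# Hypothetical values: 8.0 as the speed limit and 0.25 as the microspeed factor.
design = HoistDesign(speed_max=8.0, coefficient_microspeed=0.25)
print(hoist_velocity(up=True, down=False, speed=10.0, micro_speed=False, design=design))
print(hoist_velocity(up=False, down=True, speed=4.0, micro_speed=True, design=design))
```

Keeping the constants in a separate immutable object mirrors the split between the constant Design class and the variable Control class in the table.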

| Middleware | Min (s) | Max (s) | Mean (s) | Median (s) | SD (s) |
|---|---|---|---|---|---|
| OPC UA-GraphQL wrapper | 0.01 | 1.023 | 0.505 | 0.508 | 0.289 |
| OPC UA-MQTT wrapper | 0.004 | 0.545 | 0.273 | 0.283 | 0.149 |
| OPC UA Client in Unity | 0.001 | 1.001 | 0.531 | 0.552 | 0.278 |
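
The summary statistics in the table above can be computed from a list of measured response times as in the following sketch. The sample values are hypothetical and are not the measurements of this study, and the use of the sample standard deviation (rather than the population one) is an assumption.

```python
import statistics
from typing import Dict, Sequence


def summarize_response_times(samples: Sequence[float]) -> Dict[str, float]:
    """Return min, max, mean, median, and sample SD of response times in seconds."""
    if len(samples) < 2:
        raise ValueError("at least two samples are needed for a standard deviation")
    return {
        "min": min(samples),
        "max": max(samples),
        "mean": statistics.mean(samples),
        "median": statistics.median(samples),
        "sd": statistics.stdev(samples),  # sample SD (n - 1 in the denominator)
    }


# Hypothetical response times in seconds, for illustration only.
example_samples = [0.1, 0.2, 0.3, 0.4]
summary = summarize_response_times(example_samples)
for key, value in summary.items():
    print(f"{key}: {value:.3f}")
```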

| Network Layer | OPC UA-MQTT Wrapper | OPC UA-GraphQL Wrapper | OPC UA-Unity Client |
|---|---|---|---|
| Coding Language | Python and C# | C# | C# |
| Communication Protocol | MQTT | HTTP | OPC UA |
| Learning Cost | Medium | Low | High |
| Mean Response Time | 0.273 s | 0.505 s | 0.531 s |
| Applied Scenario | Continuous data flow remote monitor | Discrete data request/response interaction | Complex information model interaction |

| Section | Specification |
|---|---|
| App Info | Title: “Ilmatar” crane VR training application |
| | Device: Oculus Quest 2, Varjo XR-1 |
| Basics | The user will be able to grab: the normal size remote controller |
| | There will be sockets for: the crane hook block; the joysticks; the normal size remote controller |
| Events & Interactions | By default, the left hand will have a Direct Interactor, and the right hand will have a Direct & Ray Interactor |
| | Left hand can: implement continuous move; grab the normal size remote controller; interact with joysticks; interact with the switch button |
| | Right hand can: implement continuous turn; grab the normal size remote controller; interact with joysticks; interact with the switch button; interact with UI |
| | If the user is getting close to UI with the right virtual hand, the ray line will appear automatically |
| | If the user is pressing the left trigger button when raycasts show up, the UI function will be activated |
| | If the user is pressing the left joystick down, the teleport will be activated |
| | If the user is pressing the grip button, the virtual object can be grabbed |
| | If the user is pressing the trigger button, the grabbed object can be released |
| | If the user is pressing left button B when the hook gets close to the drum, the drum will be hoisted |
| | If the user is pressing left button B when the hook hoists the drum, the drum will be released |
| | If the user is interacting with the switch button by the virtual hand, the button will be rotated |
| | The main menu will be located in the front of the virtual environment |
| | There will be additional UI elements for Scene 1: Introduction of the VR environment |
| | Scene 2: Getting familiar with the interactive features in the VR environment |
| | Scene 3: Learning about the functional features of the “Ilmatar” crane |
| | Scene 4: Completing a virtual training |
| | Dashboard to show the info of the crane |
| Functional Features | Fetch information from the DT document |
| | Support Oculus Quest 2 and Varjo XR-1 |
| | Simulate the smart features, snag prevention, micro speed and inching |
| Other Features | Realistic looking virtual hand models will be developed |
| | When the real fingers interact with the controller, the corresponding virtual fingers bend |
| | Video stream tutorials about the features of the crane |
| | Sound plays while the crane moves |
| | Sound plays while interacting with UI |
| | Sound plays while releasing anything |
| | Two remote controllers: a large one for Scene 2 and the normal size one for Scene 4 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, C.; Tu, X.; Autiosalo, J.; Ala-Laurinaho, R.; Mattila, J.; Salminen, P.; Tammi, K. Extended Reality Application Framework for a Digital-Twin-Based Smart Crane. Appl. Sci. 2022, 12, 6030. https://doi.org/10.3390/app12126030
