Abstract

Low illumination, light reflection, scattering, absorption, and suspended particles inevitably degrade underwater image quality, which makes recognizing objects in underwater images very challenging. Existing underwater enhancement methods that aim to improve underwater visibility suffer from poor restoration performance and limited generalization ability. To reduce the difficulty of underwater image enhancement, we introduce the medium transmission map as guidance. Unlike existing frameworks, which also introduce the medium transmission map for better distribution modeling, we explicitly formulate the interaction between the underwater visual images and the transmission map to obtain better enhancement results. Moreover, our network requires the medium transmission map only as supervision during training; at test time it generates the predicted map itself, which simplifies subsequent tasks. Thanks to this formulation, the proposed method, with a very lightweight network configuration, achieves a PSNR of 22.6 dB on the challenging Test-R90 at 30.3 FPS, which is faster than most current algorithms. Comprehensive experimental results demonstrate its superiority for underwater perception.

1. Introduction

With the development of science and technology, underwater research activities are increasing, such as underwater object detection and tracking [1], underwater robots [2], and underwater monitoring [3]. However, light reflection, scattering, absorption, and suspended particles inevitably result in poor visibility and inhomogeneous illumination in the collected underwater images. In detail, light is absorbed and scattered by suspended particles in the underwater setting, which produces hazy effects in the images captured by cameras. Light is also attenuated underwater depending on the salinity of the water and the wavelength of the light; red light, which has the longest wavelength, is attenuated the most. In addition, the light intensity decreases as the water depth increases. These properties reduce underwater visibility and hamper the applicability of computer vision methods.

Early single-image underwater image restoration work used traditional physical methods, which depended on the image degradation model. Inspired by the dark channel prior, Drews Jr. et al. [2] argued that the prior information of an attenuated underwater image is provided by the blue and green channels. On this basis, they proposed the Underwater Dark Channel Prior (UDCP) estimation method, which performed well on underwater images with severe red channel attenuation. Li et al. [4] proposed an underwater enhancement method that contains two algorithms. The first is an effective underwater image dehazing algorithm based on the principle of minimum information loss, which restores the visibility, color, and natural appearance of underwater images. The second is a simple and effective contrast enhancement algorithm based on a histogram distribution prior, which improves the contrast and brightness of underwater images. In general, because they depend on complex physical models, these methods are not satisfactory in terms of complexity and running time. Additionally, it is difficult to obtain accurate physical models suitable for complex and changeable underwater environments.

Recently, with the development of artificial intelligence, methods based on deep learning have achieved remarkable results. Driven by the release of a series of paired training sets, including [5,6,7], deep learning methods have been proposed that learn the mapping between underwater images and their restored counterparts.

Most underwater image enhancement frameworks are based on the convolutional neural network (CNN) or the generative adversarial network (GAN). For example, Li et al. [5] proposed a simple CNN model named Water-Net using gated fusion, and the same team later proposed UWCNN [8], based on the underwater scene prior, and Ucolor [9], an underwater image enhancement network embedding a medium transmission-guided multicolor space. J. Li et al. [10] used GANs and image formation models for supervised learning. Furthermore, Fabbri et al. [11] proposed a method using generative adversarial networks (UGAN), and Islam et al. [6] presented a fully convolutional conditional GAN-based model (FUnIE-GAN). To avoid requiring paired training data, [12] proposed a weakly supervised underwater color correction network (UCycleGAN). A multiscale dense GAN for powerful underwater image enhancement was described in [13].

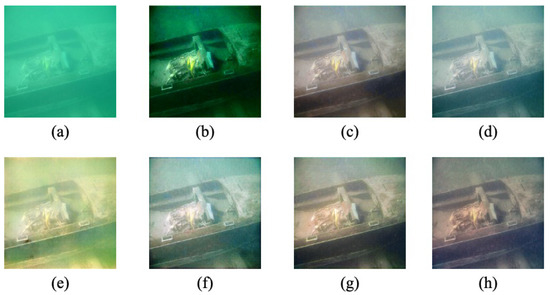

All these methods showed better results than the traditional physical designs, as shown in Figure 1. However, the quality improvement was limited because they ignore other factors, such as distance-dependent attenuation and scattering. Given the underwater imaging process, these factors can be accounted for by utilizing the semantics contained in the medium transmission map [9], as in the design proposed in this paper. The result in Figure 1g reflects the improvement brought by the medium transmission map: it is more visually pleasing in terms of color, contrast, and naturalness.

Figure 1.

Comparison of the results of different methods for processing a real underwater picture. Our method corrects the chromatic aberration and enhances the contrast. (a) Raw, (b) UDCP [2], (c) Water-Net [5], (d) Ucolor [9], (e) UGAN [11], (f) FUnIE-GAN [6], (g) Ours, (h) Ground Truth.

In this work, we eliminate the influence of light scattering and attenuation on underwater images in real time to support intelligent underwater perception systems. Inspired by the depth-guided deraining model by Hu et al. [14], we introduce the medium transmission map (MT) and formulate an MT-guided restoration framework. Specifically, a multitask learning network is designed to generate both the MT and restoration outputs jointly. A multilevel (including both feature level and output level) knowledge interaction mechanism is proposed for better mining the guidance from the MT learning space. Furthermore, to maximally reduce the computational burden caused by the MT learning branch, parameters in some specific stages are shared across these two related tasks, thus enabling real-time processing of the underwater images.

In summary, this work makes the following contributions:

- We improved the use of the medium transmission map. We can obtain good results by relying on the RGB map alone using various preprocessing and color embedding, proving that the MT map is of great significance for learning in a more powerful real-world underwater image restoration network.

- A multitask learning framework is formulated for leveraging the MT map, and a novel multilevel knowledge interaction mechanism is proposed for better mining the guidance from the MT learning space.

- A comparative study on two real-world benchmarks demonstrated the superiority of our MTUR-Net over the state-of-the-art in terms of both restoration quality and inference speed.

2. Materials and Methods

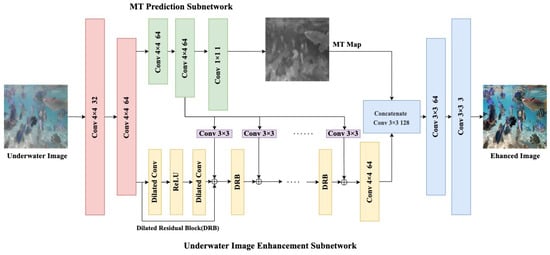

Figure 2 shows the overall architecture of our medium transmission map guided underwater image restoration network (MTUR-Net). The network takes an underwater image as input and predicts the corresponding MT map and the enhanced underwater image in an end-to-end manner. The network first uses a CNN with shared weights to extract semantics and generate feature maps. Two decoding branches follow: (i) the MT prediction subnet, an encoder–decoder network that regresses a medium transmission map from the input, and (ii) the underwater image enhancement subnet, which is guided by the predicted MT map and predicts the enhanced image from the input underwater image.

Figure 2.

Schematic diagram of MTUR-Net. It consists of an encoder–decoder network for predicting the MT map (green), a set of dilated residual blocks (yellow) to generate local features, a convolutional layer (purple) for processing MT features before fusion, and the convolutional layer (blue part) to upsample the feature map and generate the underwater enhanced images. ⊕ pixel-wise addition.

2.1. MT Prediction Subnet

We review the haze removal method based on dark channel prior [15], which is widely used in harsh visual scenarios such as fog, dust, and underwater [16,17,18]. The image formation model can be expressed as [19]:

This equation is defined on three RGB color channels. I represents the observed image, A is the airlight color vector, J is the surface brightness vector at the intersection of the scene and the real world light corresponding to the pixel x = (), and is the transmission along with the light. Peng, Y. T. et al. [20] proposed a new Dark Channel Prior (DCP) algorithm that can effectively estimate ambient light and is suitable for enhancing foggy, hazy, sandstorm, and underwater images. Inspired by DCP, as transferred has wide applicability, we use the medium transmission (MT) map () as our attention map. It is worth mentioning that effectiveness will be demonstrated in ablation experiments. From [20], the actual input underwater image does not have a corresponding ground truth medium transmission map. It is difficult to train a deep neural network to estimate the medium transmission map. So the medium transmission map can be estimated as:

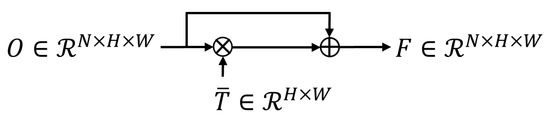

where is the estimated medium transmission map, is a local patch centered at x, and c is the RGB channel. We use the MT map as a feature selector to weigh the importance of different spatial locations of features, as shown in Figure 3. We assign more weight to high-quality pixels (pixels larger MT values), which can be expressed as:

Figure 3.

Medium transmission guidance module. The MT map is a feature selector. weighs the importance of the different spatial positions for F. ⊗ pixel-wise multiplication. ⊕ pixel-wise addition.

represent the characteristics of the output and input, respectively. In detail, the MT map prediction subnetwork consists of four blocks to extract features. Each block has a convolution operation, a group normalization [21], and a proportional exponent linear unit (SELU) [22]. Then, it uses lateral connections to influence the detailed information decoded in the underwater feature map. Finally, another convolution operation is used, plus a sigmoid function, to return to by adding a supervision (input MT map in the training data).
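The two steps of this subsection, estimating an MT map and using it to reweight features, can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the MT estimate takes the generalized dark channel form of [20], that is, the maximum over the color channels and a local patch of |A^c − I^c(y)| / max(A^c, 1 − A^c), and that the guidance of Figure 3 is the residual weighting "features plus features times MT map". The function names, the patch size, and the airlight value are hypothetical. In MTUR-Net the map is predicted by the subnet at test time rather than computed this way.

```python
import numpy as np


def estimate_mt(image, airlight, patch=5):
    """Estimate a medium transmission map from an RGB image in [0, 1].

    image:    float array of shape (H, W, 3)
    airlight: float array of shape (3,), assumed to be known here
    patch:    odd side length of the local window (illustrative value)
    """
    image = np.asarray(image, dtype=np.float64)
    airlight = np.asarray(airlight, dtype=np.float64)

    # Per-pixel, per-channel ratio |A^c - I^c(y)| / max(A^c, 1 - A^c).
    ratio = np.abs(airlight - image) / np.maximum(airlight, 1.0 - airlight)

    # Maximum over the color channels c.
    per_pixel = ratio.max(axis=2)

    # Maximum over the local patch centered at each pixel (edge-padded).
    r = patch // 2
    padded = np.pad(per_pixel, r, mode="edge")
    h, w = per_pixel.shape
    mt = np.zeros_like(per_pixel)
    for dy in range(patch):
        for dx in range(patch):
            mt = np.maximum(mt, padded[dy:dy + h, dx:dx + w])
    return mt


def mt_guidance(features, mt):
    """Reweight features of shape (C, H, W) with an MT map of shape (H, W).

    Implements F_o = F_i + F_i * MT, so pixels with larger MT values
    contribute more while the original features are always kept.
    """
    features = np.asarray(features, dtype=np.float64)
    return features + features * mt[np.newaxis, :, :]


# Illustrative usage with random data (all values are placeholders).
rng = np.random.default_rng(0)
img = rng.random((8, 8, 3))
mt = estimate_mt(img, airlight=np.array([0.2, 0.6, 0.7]))
feat = rng.random((4, 8, 8))
out = mt_guidance(feat, mt)
print(mt.shape, out.shape)
```

Because the MT map lies in [0, 1], the guided features are scaled by a factor between 1 and 2 at every location, which is why the module acts as a soft selector rather than a hard mask.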

2.2. Underwater Image Enhancement Subnet

In the underwater image enhancement subnet, we use a convolution to reduce the resolution of the feature map, followed by 11 dilated residual blocks (DRBs) [23] to enlarge the receptive field. Each DRB consists of a 3 × 3 dilated convolution [24], a ReLU nonlinearity, and another 3 × 3 dilated convolution, with a skip connection that adds the input feature map to the output feature map. To avoid gridding issues, we set the dilation rates of these 11 DRBs as 1, 1, 2, 2, 4, 8, 4, 2, 2, 1 following [25]. Moreover, we adopt a laterally connected convolution module to add the MT prediction features to the output feature map, use a convolution to resize the feature map to the size of the MT map, and concatenate the two. Finally, we scale the feature map to the size of the input image by convolution.
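The structure of a single DRB can be sketched in NumPy as follows. This is a single-channel illustration with hypothetical helper names and random placeholder kernels; the actual network operates on multi-channel feature maps with learned weights, so the sketch only shows how the dilated taps and the skip connection fit together.

```python
import numpy as np


def dilated_conv3x3(x, kernel, dilation):
    """Same-size 3x3 dilated convolution (cross-correlation, zero padding).

    x:        float array of shape (H, W)
    kernel:   float array of shape (3, 3)
    dilation: spacing between kernel taps (1 is an ordinary 3x3 convolution)
    """
    h, w = x.shape
    padded = np.pad(x, dilation, mode="constant")
    out = np.zeros((h, w), dtype=np.float64)
    for i in range(3):
        for j in range(3):
            dy, dx = i * dilation, j * dilation
            out += kernel[i, j] * padded[dy:dy + h, dx:dx + w]
    return out


def dilated_residual_block(x, kernel1, kernel2, dilation):
    """Dilated conv -> ReLU -> dilated conv, plus a skip connection."""
    y = dilated_conv3x3(x, kernel1, dilation)
    y = np.maximum(y, 0.0)  # ReLU
    y = dilated_conv3x3(y, kernel2, dilation)
    return x + y  # skip connection adds the block input to its output


# Illustrative usage: chain blocks with the dilation rates listed above.
rng = np.random.default_rng(0)
feature_map = rng.random((32, 32))
for rate in [1, 1, 2, 2, 4, 8, 4, 2, 2, 1]:
    k1 = 0.1 * rng.standard_normal((3, 3))  # placeholder weights
    k2 = 0.1 * rng.standard_normal((3, 3))  # placeholder weights
    feature_map = dilated_residual_block(feature_map, k1, k2, rate)
print(feature_map.shape)
```

Increasing and then decreasing the rate lets later blocks fill in the positions that widely spaced taps skip, which is the intuition behind the gridding remedy of [25].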

3. Results and Discussion

In this section, we first illustrate the details of the parameter design and then explain the settings of the entire experimental process. Then, we compare our model with several existing models that performed well and provide ablation experiments at the end of this section to study the effective parts of the MTUR-Net.

3.1. Parameter Settings

To train the network, we chose the real underwater image dataset used by Li et al. [9], which contains 890 pairs of images from [5] and 1250 pairs of images from [8]. We trained our network on a single NVIDIA 3090 Ti GPU with a batch size of eight; the initial learning rate was set to 1 × 10, and the network was optimized with Adam.

3.2. Experiment Setup

To test the proposed model, we used the remaining 90 pairs of real data in UIEB, denoted Test-R90. For a more comprehensive evaluation, we also tested on 60 challenging images in UIEB, denoted Test-C60.

To demonstrate the advantages of the proposed model, we compared our method with other state-of-the-art (SOTA) methods, including one physical-model-based method and several deep-learning-based methods. The physical-model-based method was the Underwater Dark Channel Prior (UDCP) [2], which extends the dark channel prior to underwater image restoration. Among the deep-learning-based methods, we chose Water-Net [5], a simple CNN model based on gated fusion; Ucolor [9], a network embedding a multicolor space guided by medium transmission; FUnIE-GAN [6], a fully convolutional conditional GAN-based model; and UGAN [11], a method using generative adversarial networks. To control the variables, we used the same training data and loss function as for MTUR-Net.

3.3. Comparative Study

In this experiment, we chose two evaluation methods, including visual evaluation and quantitative evaluation, to compare the specific effects of our model with other models.

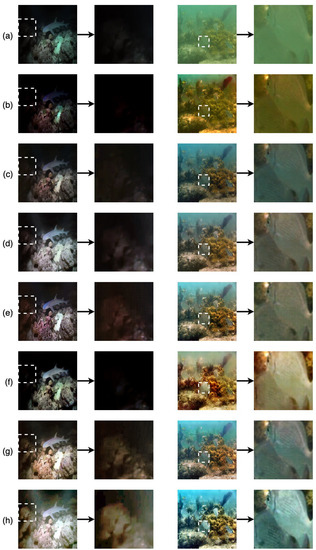

Visual Evaluation. In open water, red light has the longest wavelength and is absorbed more than light of other wavelengths. Therefore, underwater images appear blue or green. To show the effect of MTUR-Net clearly, we provide a visual comparison of the results obtained by the different methods. Figure 4 shows that the output of MTUR-Net had the best visual quality. Our solution corrected the chromatic aberration caused by different water types, and the details in dark water and the texture of fish in muddy water are visible in the restored images.

Figure 4.

Visual comparison of different images (from Test-R90) enhanced by state-of-the-art methods and our MTUR-Net. (a) Raw, (b) UDCP [2], (c) Water-Net [5], (d) Ucolor [9], (e) UGAN [11], (f) FUnIE-GAN [6], (g) Ours, (h) Ground Truth.

Quantitative Evaluation. We provide full-reference evaluation and non-reference evaluation to quantitatively analyze the performance of different methods.

We conducted a full-reference evaluation using the peak signal–to–noise ratio (PSNR) and structural similarity (SSIM), and we also report frames per second (FPS). PSNR is a widely used objective image quality index based on the differences between corresponding pixels; SSIM compares local patterns of pixel intensities that have been normalized for luminance and contrast [26]. Although the real-world scene may differ from the reference image, a full-reference evaluation still provides some feedback on the performance of different methods. A higher PSNR means that the result is less distorted, a higher SSIM implies that the result is structurally more similar to the reference image, and a higher FPS means that processing is more efficient. As Table 1 shows, our method achieved the best PSNR and SSIM, and its FPS was also competitive.
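As a concrete reference for the two full-reference metrics, the following NumPy sketch computes PSNR and a simplified SSIM. It assumes images scaled to [0, 1]. The function names are hypothetical, and `global_ssim` evaluates the SSIM formula once over the whole image with the commonly used constants, whereas the index of [26] averages it over local windows; the sketch therefore illustrates the formulas and is not the evaluation code behind Table 1.

```python
import numpy as np


def psnr(reference, result, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two same-sized images."""
    reference = np.asarray(reference, dtype=np.float64)
    result = np.asarray(result, dtype=np.float64)
    mse = np.mean((reference - result) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)


def global_ssim(reference, result, max_value=1.0):
    """SSIM formula evaluated once over the whole image (simplification)."""
    x = np.asarray(reference, dtype=np.float64)
    y = np.asarray(result, dtype=np.float64)
    c1 = (0.01 * max_value) ** 2  # stabilizes the luminance term
    c2 = (0.03 * max_value) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


# Illustrative usage: a uniform error of 0.1 gives an MSE of 0.01,
# which corresponds to a PSNR of about 20 dB.
ref = np.full((4, 4, 3), 0.5)
res = np.full((4, 4, 3), 0.6)
print(psnr(ref, res), global_ssim(ref, ref))
```

Since PSNR is logarithmic in the mean squared error, every gain of 10 dB corresponds to a tenfold reduction in MSE, which helps when reading differences of a few dB between methods.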

Table 1.

Comparison with the State-of-the-Art Methods Using PSNR and SSIM on the Test-R90 Dataset [5].

Then, we used UCIQE [27] and UIQM [28] for a non-reference evaluation. In principle, a higher UCIQE score indicates a better balance among the standard deviation of the chroma, the contrast of the brightness, and the saturation, and a higher UIQM score indicates a subjectively better visual result. As shown in Table 2, our proposed model obtained one of the best scores in UCIQE and UIQM. However, when we visually compared the images, we found many small squares in UGAN's images, such as those shown in Figure 5, even though its score was still very high, which indicates that these evaluation metrics still need to be improved. We also tested on the Subjectively-Annotated UIE benchmark Dataset (SAUD) [29], whose test data contain 100 unpaired images. As shown in Table 3, MTUR-Net still had one of the best results in UCIQE and UIQM. Here, Ucolor's UIQM was higher than that of MTUR-Net because Ucolor's setting differs from ours. Ucolor takes two inputs: the original image and an MT map computed from the physical formula. In contrast, MTUR-Net generates the predicted MT map with its own trained model at test time. Our method therefore still applies when testing on a dataset for which a ground-truth MT map is unavailable, which is one of its strengths.

Table 2.

UCIQE [27] Scores and UIQM [28] Scores of Different Methods on Test-C60.

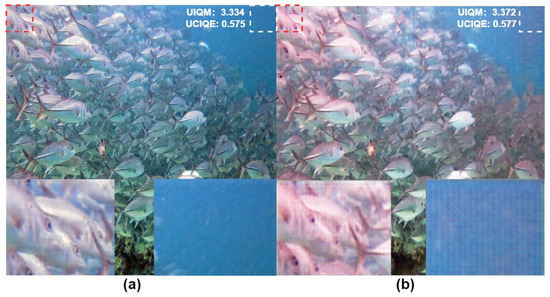

Figure 5.

Visual comparison on Test-C60, showing the difference between our result and the UGAN result. Our result has no visible blocky artifacts, and the contrast and color of the objects are better. (a) Ours, (b) UGAN [11].

Table 3.

UCIQE [27] Scores and UIQM [28] Scores of Different Methods on SAUD.

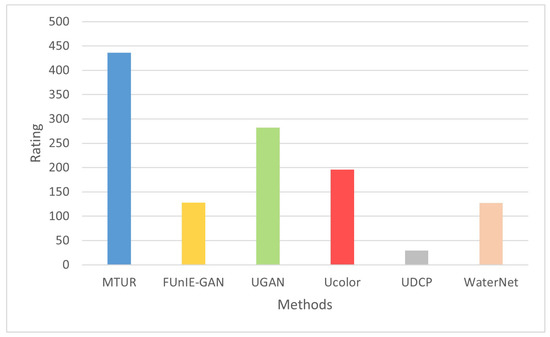

To further verify the effect of MTUR-Net and to reduce the influence of our own subjective judgment of the visual results, we conducted a user study. We prepared 420 pictures derived from the Test-C60 test set, with each test image appearing in seven versions (raw, MTUR, FUnIE-GAN, UGAN, Ucolor, UDCP, and Water-Net). We then invited 20 volunteers to evaluate the quality of the images in terms of chromatic aberration, visibility, clarity, etc., and to select the best version of each image without knowing which method produced it. The results are summarized in Figure 6, where the score of each method is the total number of times its output received the best rating. MTUR had the highest number of best ratings.

Figure 6.

Image quality evaluation results of different methods on Test-C60.

3.4. Ablation Study

We performed ablation experiments on Test-R90 to verify the effectiveness of each part of the network. The results are shown in Table 4. First, the basic network architecture removed the entire medium transmission map module, so the network predicted the enhanced image directly from the feature map generated by the dilated residual blocks (DRBs) in the underwater image enhancement subnet. Next, we removed the skip connection between the two subnetworks. Then, we performed a comparative test that removed the concatenation and retained only the skip connection. The experimental results show that without the final concatenation operation, performance dropped considerably. These three experiments show that the MT prediction subnetwork has a profound impact on image enhancement. We then reduced the convolution operations after concatenation and found that this also degraded performance. In the last two ablation experiments, we concatenated or added all DRBs together through skip connections to strengthen the connection between the shallow and deep layers of the network, but the results did not improve.

Table 4.

Component Analysis. The Basic Model is MTUR-Net without the MT-Guided Non-Local Module.

4. Conclusions

In this paper, to address the current difficulties of underwater image enhancement, we demonstrated the value of a physical prior, in particular the medium transmission map, for restoring real-world underwater images. By formulating a very simple network that learns the prior and the restoration results jointly, and by encapsulating the knowledge interaction between these two tasks at both the feature and output levels, the network learned much better restoration features, which led to better results. In addition to producing results among the best on two real-world benchmarks, our model processes underwater images at real-time speed, making it a promising framework for deployment in intelligent underwater systems.

In the future, we will explore the upper bound of the benefits of the medium transmission map and also continue the exploration for a suitable knowledge interaction design for better fusing the physical prior.

Author Contributions

Conceptualization, G.W., K.Y. and L.L.; methodology, K.Y. and L.L.; writing—original draft preparation, K.Y. and L.L.; writing—review and editing, G.W. and Z.Z.; supervision, Y.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under grants 62102069 and U20B2063, and by the Sichuan Science and Technology Program under grant 2022YFG0032.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Our experiment was conducted on the UIEB Dataset [5], the UWCNN Dataset [8], and SAUD [29].

Acknowledgments

Our code extends the GitHub repository https://github.com/xahidbuffon/FUnIE-GAN/tree/master/Evaluation (accessed on 7 April 2022), and we extend our thanks to the group.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| CNN | convolutional neural network |
| GAN | generative adversarial network |
| MT | medium transmission |
| DRB | dilated residual blocks |
| UIEB | underwater image enhancement benchmark |
| PSNR | peak signal–to–noise ratio |
| SSIM | structural similarity |
| FPS | frames per second |
| UCIQE | underwater color image quality evaluation |
| UIQM | underwater image quality measure |
| SAUD | subjectively-annotated UIE benchmark dataset |

References

- Lee, D.; Kim, G.; Kim, D.; Myung, H.; Choi, H.T. Vision-based object detection and tracking for autonomous navigation of underwater robots. Ocean Eng. 2012, 48, 59–68.

- Drews, P., Jr.; do Nascimento, E.; Moraes, F.; Botelho, S.; Campos, M. Transmission Estimation in Underwater Single Images. In Proceedings of the 2013 IEEE International Conference on Computer Vision Workshops, Sydney, NSW, Australia, 2–8 December 2013; pp. 825–830.

- Mohamed, N.; Jawhar, I.; Al-Jaroodi, J.; Zhang, L. Sensor Network Architectures for Monitoring Underwater Pipelines. Sensors 2011, 11, 10738–10764.

- Li, C.Y.; Guo, J.C.; Cong, R.M.; Pang, Y.W.; Wang, B. Underwater Image Enhancement by Dehazing With Minimum Information Loss and Histogram Distribution Prior. IEEE Trans. Image Process. 2016, 25, 5664–5677.

- Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An Underwater Image Enhancement Benchmark Dataset and Beyond. IEEE Trans. Image Process. 2020, 29, 4376–4389.

- Islam, M.J.; Xia, Y.; Sattar, J. Fast Underwater Image Enhancement for Improved Visual Perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234.

- Berman, D.; Levy, D.; Avidan, S.; Treibitz, T. Underwater Single Image Color Restoration Using Haze-Lines and a New Quantitative Dataset. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 2822–2837.

- Li, C.; Anwar, S.; Porikli, F. Underwater scene prior inspired deep underwater image and video enhancement. Pattern Recognit. 2020, 98, 107038.

- Li, C.; Anwar, S.; Hou, J.; Cong, R.; Guo, C.; Ren, W. Underwater Image Enhancement via Medium Transmission-Guided Multi-Color Space Embedding. IEEE Trans. Image Process. 2021, 30, 4985–5000.

- Li, J.; Skinner, K.A.; Eustice, R.M.; Johnson-Roberson, M. WaterGAN: Unsupervised Generative Network to Enable Real-Time Color Correction of Monocular Underwater Images. IEEE Robot. Autom. Lett. 2018, 3, 387–394.

- Fabbri, C.; Islam, M.J.; Sattar, J. Enhancing underwater imagery using generative adversarial networks. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 7159–7165.

- Li, C.; Guo, J.; Guo, C. Emerging From Water: Underwater Image Color Correction Based on Weakly Supervised Color Transfer. IEEE Signal Process. Lett. 2018, 25, 323–327.

- Guo, Y.; Li, H.; Zhuang, P. Underwater Image Enhancement Using a Multiscale Dense Generative Adversarial Network. IEEE J. Ocean. Eng. 2020, 45, 862–870.

- Hu, X.; Fu, C.W.; Zhu, L.; Heng, P.A. Depth-Attentional Features for Single-Image Rain Removal. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 8014–8023.

- He, K.; Sun, J.; Tang, X. Single Image Haze Removal Using Dark Channel Prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.

- Huang, S.C.; Chen, B.H.; Wang, W.J. Visibility Restoration of Single Hazy Images Captured in Real-World Weather Conditions. IEEE Trans. Circuits Syst. Video Technol. 2014, 24, 1814–1824.

- Yang, H.Y.; Chen, P.Y.; Huang, C.C.; Zhuang, Y.Z.; Shiau, Y.H. Low Complexity Underwater Image Enhancement Based on Dark Channel Prior. In Proceedings of the 2011 Second International Conference on Innovations in Bio-inspired Computing and Applications, Shenzhen, China, 16–18 December 2011; pp. 17–20.

- Zhao, X.; Jin, T.; Qu, S. Deriving inherent optical properties from background color and underwater image enhancement. Ocean Eng. 2015, 94, 163–172.

- Fattal, R. Single Image dehazing. ACM Trans. Graph. (TOG) 2008, 27, 1–9.

- Peng, Y.T.; Cao, K.; Cosman, P.C. Generalization of the Dark Channel Prior for Single Image Restoration. IEEE Trans. Image Process. 2018, 27, 2856–2868.

- Wu, Y.; He, K. Group Normalization. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Klambauer, G.; Unterthiner, T.; Mayr, A.; Hochreiter, S. Self-Normalizing Neural Networks; Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 972–981.

- Yu, F.; Koltun, V.; Funkhouser, T. Dilated Residual Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 636–644.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Wang, P.; Chen, P.; Yuan, Y.; Liu, D.; Huang, Z.; Hou, X.; Cottrell, G. Understanding Convolution for Semantic Segmentation. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 1451–1460.

- Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Yang, M.; Sowmya, A. An Underwater Color Image Quality Evaluation Metric. IEEE Trans. Image Process. 2015, 24, 6062–6071.

- Panetta, K.; Gao, C.; Agaian, S. Human-Visual-System-Inspired Underwater Image Quality Measures. IEEE J. Ocean. Eng. 2016, 41, 541–551.

- Jiang, Q.; Gu, Y.; Li, C.; Cong, R.; Shao, F. Underwater Image Enhancement Quality Evaluation: Benchmark Dataset and Objective Metric. IEEE Trans. Circuits Syst. Video Technol. 2022.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).