Language of Driving for Autonomous Vehicles

Abstract

1. Introduction

2. Related Studies

3. Language of Driving

4. Method

4.1. Experimental Setup

4.2. Focus Group Interview

5. Results

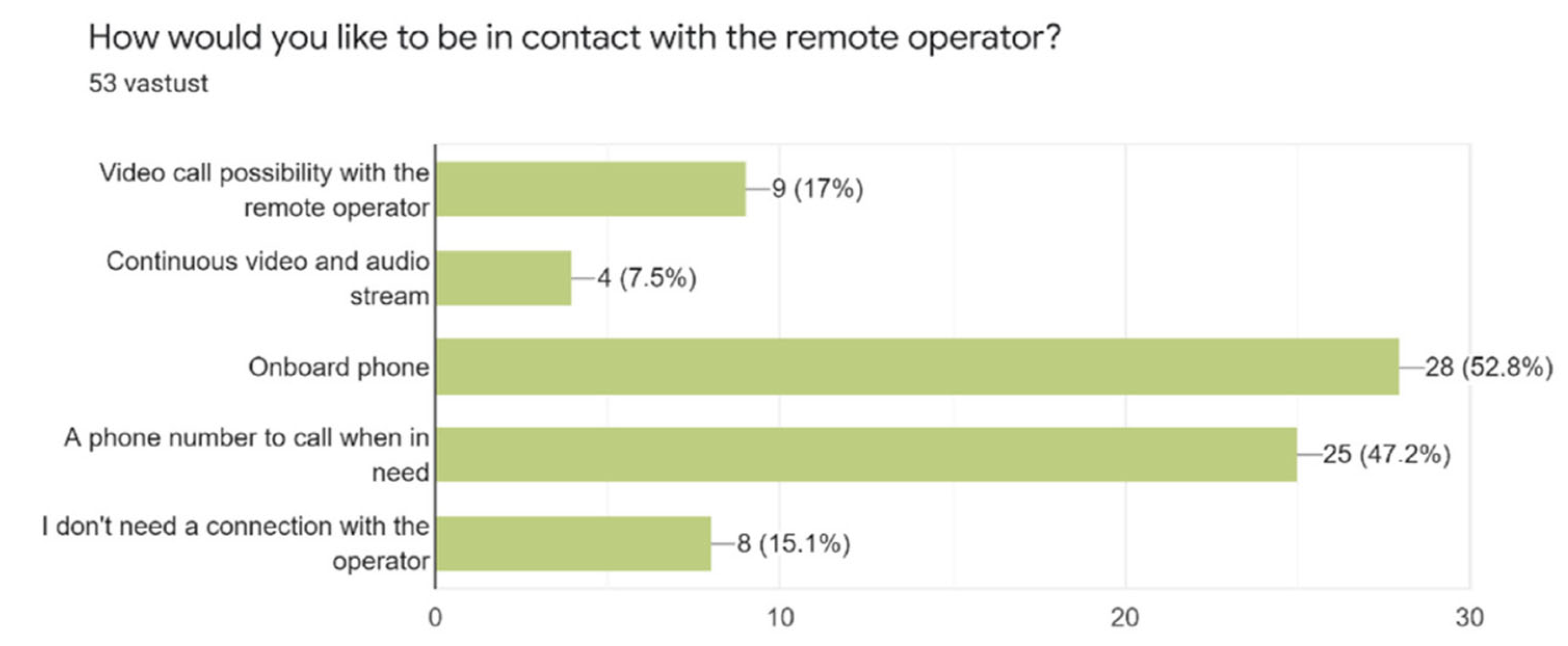

5.1. Survey among Passengers

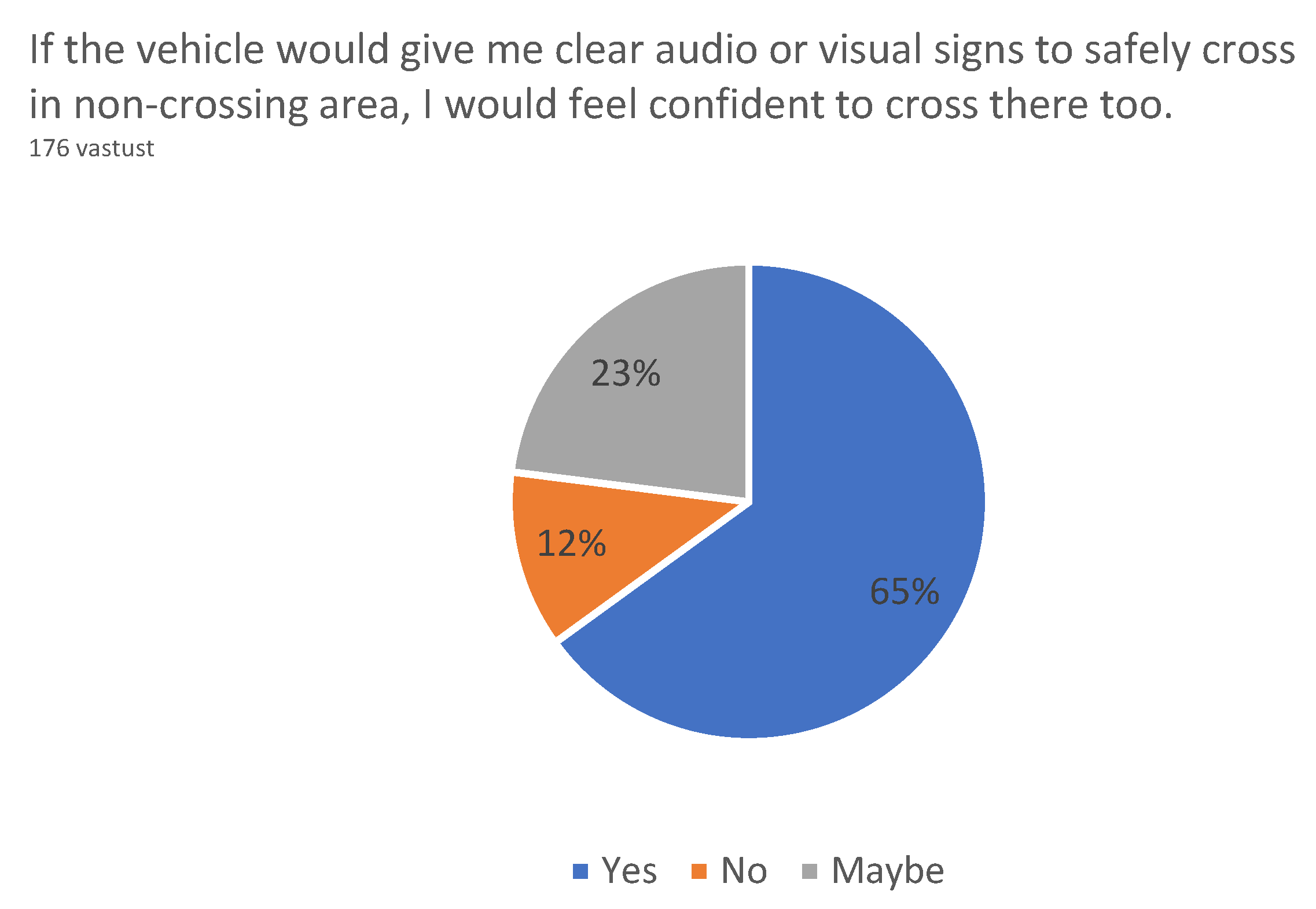

5.2. Survey among Other Road Users

5.3. Additional Suggestions from Participants

- Speed: participants want the buses to drive faster (this was the most common comment).

- Vehicle-to-passenger communication:
  - The bus should preferably deliver messages in several languages;
  - The bus should be more communicative and explain what it is doing and why (e.g., why the bus has braked suddenly);
  - There was a suggestion to play lounge music inside the bus.

- Language of driving:
  - More audio should be used to communicate with other road users, as the signs shown in Table 1 were not fully understood or were hard to see in direct sunlight. The audio message should also be shown as text on the screen(s), as audio can be hard to hear in traffic;
  - Some suggested using only text instead of the signs in Table 1.

- Design:
  - Both the interior and the exterior design should be more appealing;
  - The use of brighter colors was recommended to better differentiate AV buses from regular vehicles.

- Smart bus stops and the size of the bus:
  - The person who orders the bus should also be the one who boards it, as these shuttles are small and carry up to 6 people;
  - The bus should be bigger and accommodate at least 10 people.

- Passenger and traffic safety:
  - Participants expressed worry about the absence of a safety operator, as some passengers or even vandals might damage the bus;
  - Passengers were also concerned about the missing seatbelts, which can easily be added and which, according to the law, should be there if such buses are to be operated in open traffic.

5.4. Survey among Public Sector Experts

5.5. Survey Summary and Interview Conclusions

6. Avenues for Future Research

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

|  |  |  |  |
|---|---|---|---|
| Trigger | The vehicle is approaching a pedestrian crossing; pre-defined either by vector map or V2I communication | Objects detected by the sensors | Objects detected by the sensors |
| Situation | The vehicle is approaching a pedestrian crossing | The vehicle is approaching the pedestrian crossing and objects are detected on the zebra or nearby | The vehicle is driving on the road, and objects are detected close to waypoints or their moving trajectory is about to cross with the vehicle |
| Visualization |  |  |  |
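The trigger and situation rows of the table above can be read as a small decision rule. The following is a minimal sketch of that reading, not the authors' implementation: the names `Scenario`, `Perception`, and `select_scenario` are hypothetical. The order in which the conditions are checked is also an assumption, since the table does not state a priority.

```python
from dataclasses import dataclass
from enum import Enum


class Scenario(Enum):
    """Hypothetical labels for the three table columns (not the authors' names)."""
    NONE = "no message"
    APPROACHING_CROSSING = "approaching a pedestrian crossing"
    OBJECTS_AT_CROSSING = "objects on the zebra or nearby"
    OBJECTS_ON_ROUTE = "objects near waypoints or on a crossing trajectory"


@dataclass
class Perception:
    # True when the vector map or a V2I message marks a crossing ahead.
    crossing_ahead: bool
    # True when the sensors report relevant objects (on or near the zebra,
    # close to the waypoints, or on a trajectory that crosses the vehicle's).
    objects_detected: bool


def select_scenario(p: Perception) -> Scenario:
    """Map the two triggers from the table onto one of its three situations.

    Assumption: a crossing with detected objects takes priority over the
    plain "approaching a crossing" case.
    """
    if p.crossing_ahead and p.objects_detected:
        return Scenario.OBJECTS_AT_CROSSING
    if p.crossing_ahead:
        return Scenario.APPROACHING_CROSSING
    if p.objects_detected:
        return Scenario.OBJECTS_ON_ROUTE
    return Scenario.NONE
```

For example, `select_scenario(Perception(crossing_ahead=True, objects_detected=False))` selects the first column of the table. The sign shown for each situation would then be looked up separately, because the visualization row is empty in this copy of the table.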

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kalda, K.; Pizzagalli, S.-L.; Soe, R.-M.; Sell, R.; Bellone, M. Language of Driving for Autonomous Vehicles. Appl. Sci. 2022, 12, 5406. https://doi.org/10.3390/app12115406
