Damage Detection in Wind Turbine Blades Based on an Improved Broad Learning System Model

Abstract

1. Introduction

2. Mathematical Preliminaries

2.1. Non-Local Means

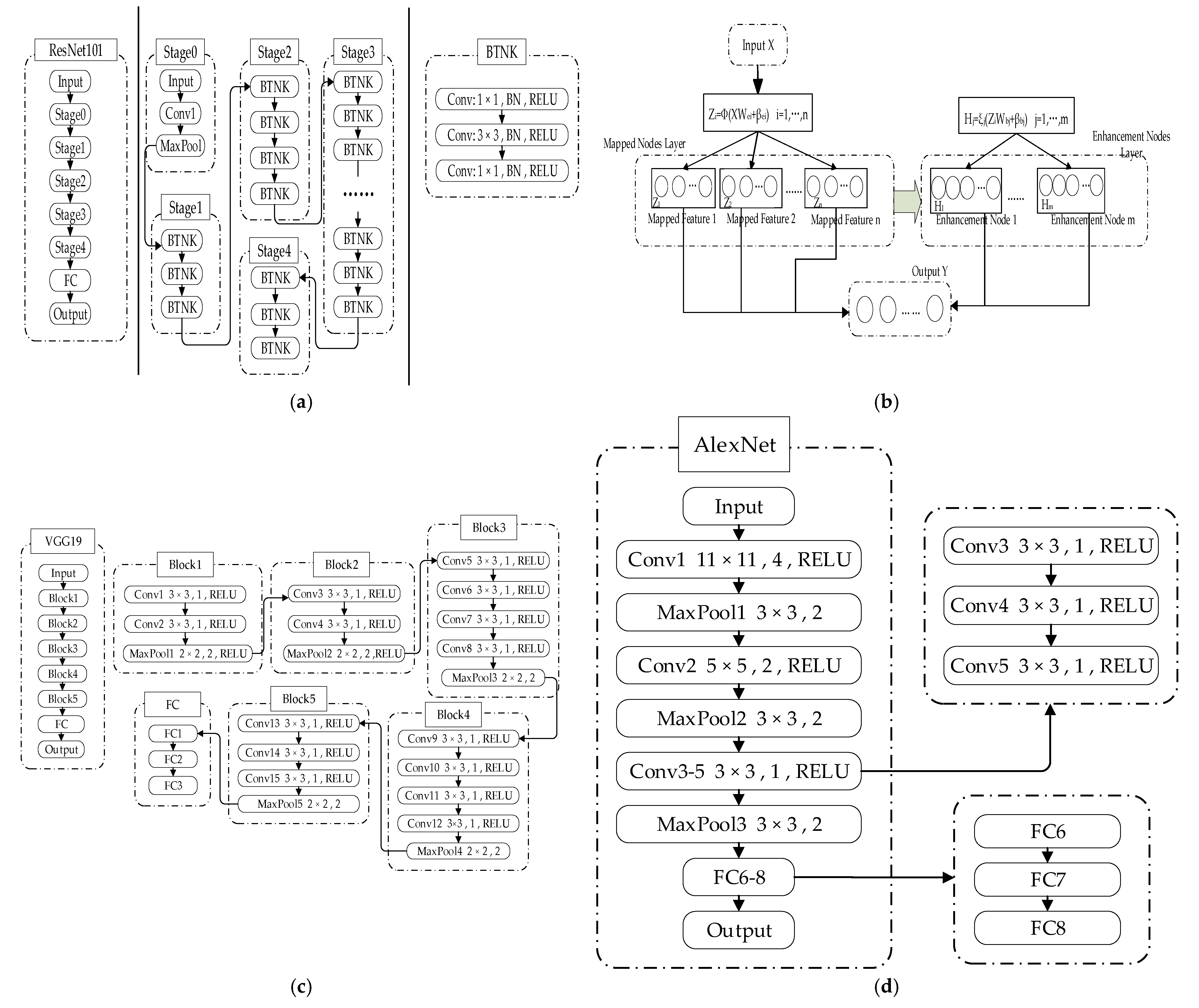
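Classic non-local means replaces each pixel with a weighted average of the pixels in a surrounding search window. Each candidate is weighted by how similar its neighbourhood patch is to the patch around the pixel being denoised. The NumPy sketch below shows that idea. The function name `nl_means`, the default radii, the filtering parameter `h`, and the unweighted mean-squared patch distance (the original method uses a Gaussian-weighted Euclidean distance) are illustrative assumptions. It is not the paper's CBNLM variant.

```python
import numpy as np


def nl_means(img, patch_radius=1, search_radius=3, h=0.1):
    """Naive non-local means for a 2-D grayscale float image.

    Each output pixel is a weighted average of the pixels in a
    (2*search_radius+1)^2 window. A candidate's weight decays with the
    mean squared difference between its patch and the reference patch.
    """
    img = np.asarray(img, dtype=float)
    pad = patch_radius + search_radius
    padded = np.pad(img, pad, mode="reflect")  # mirror borders so every patch is full-size
    rows, cols = img.shape
    out = np.empty_like(img)
    for i in range(rows):
        for j in range(cols):
            ci, cj = i + pad, j + pad  # pixel center in padded coordinates
            ref = padded[ci - patch_radius:ci + patch_radius + 1,
                         cj - patch_radius:cj + patch_radius + 1]
            weight_sum = 0.0
            value_sum = 0.0
            for di in range(-search_radius, search_radius + 1):
                for dj in range(-search_radius, search_radius + 1):
                    ni, nj = ci + di, cj + dj
                    cand = padded[ni - patch_radius:ni + patch_radius + 1,
                                  nj - patch_radius:nj + patch_radius + 1]
                    dist2 = np.mean((ref - cand) ** 2)  # patch dissimilarity
                    w = np.exp(-dist2 / (h * h))        # similar patches get weight near 1
                    weight_sum += w
                    value_sum += w * padded[ni, nj]
            out[i, j] = value_sum / weight_sum  # normalized weighted average
    return out
```

The four nested loops cost O(rows · cols · search² · patch²) operations, so this is only suitable for small examples. For real images, an optimized implementation such as scikit-image's `skimage.restoration.denoise_nl_means` is the practical choice.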

2.2. Broad Learning System
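For reference, the standard BLS of Chen and Liu can be summarized by the equations below. The symbols follow that original formulation rather than anything defined in this section, so the paper's own notation may differ. Here $X$ is the input matrix, $\phi$ and $\xi$ are the feature-mapping and enhancement activation functions, and the weights $W_{e_i}, W_{h_j}$ and biases $\beta_{e_i}, \beta_{h_j}$ are randomly generated. Only the output weights $W^m$ are learned.

$$
Z_i = \phi\left(X W_{e_i} + \beta_{e_i}\right), \quad i = 1, \ldots, n, \qquad Z^n \equiv [Z_1, \ldots, Z_n]
$$

$$
H_j = \xi\left(Z^n W_{h_j} + \beta_{h_j}\right), \quad j = 1, \ldots, m, \qquad H^m \equiv [H_1, \ldots, H_m]
$$

$$
Y = [Z^n \mid H^m]\, W^m, \qquad W^m = [Z^n \mid H^m]^{+}\, Y
$$

$$
A^{+} = \lim_{\lambda \to 0} \left(\lambda I + A^{\top} A\right)^{-1} A^{\top}, \qquad A = [Z^n \mid H^m]
$$

In practice, a small fixed $\lambda > 0$ is used, which turns the pseudo-inverse into a ridge-regression solution.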

3. Damage Detection in Wind Turbine Blades Based on the CBNLM-BLS

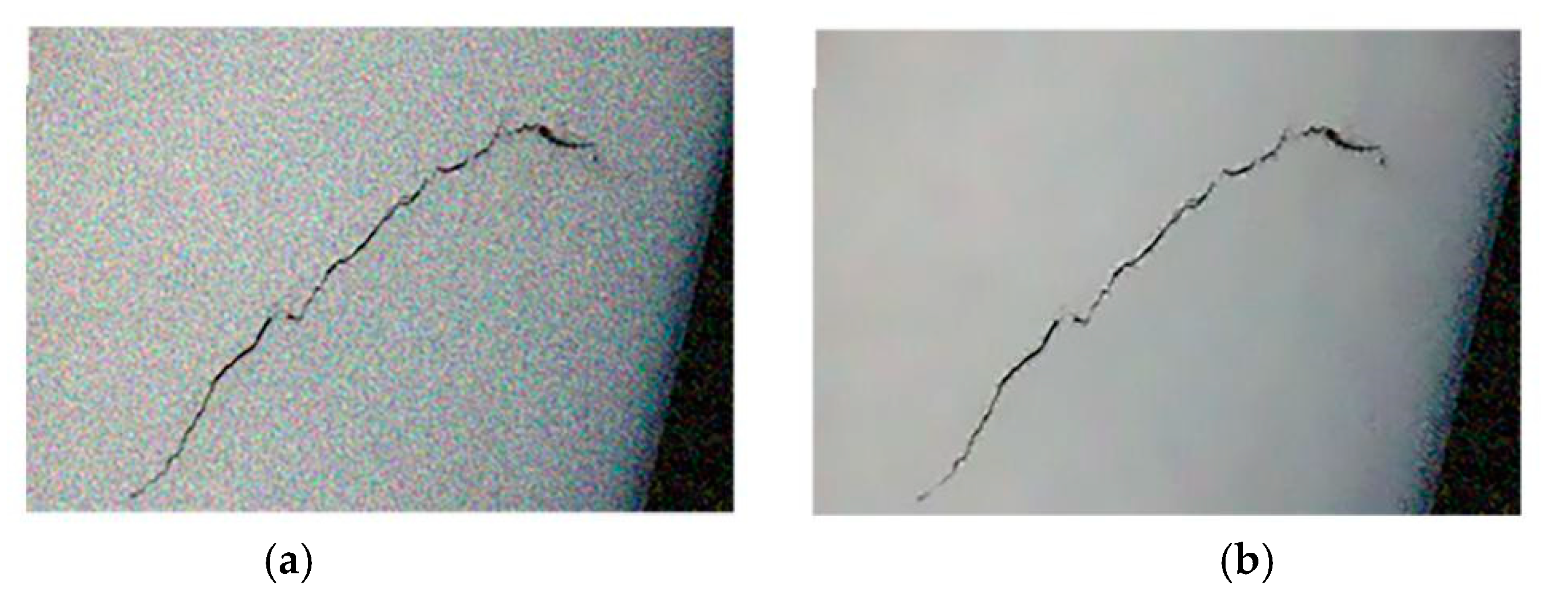

3.1. The Acquisition of Wind Turbine Blade Images

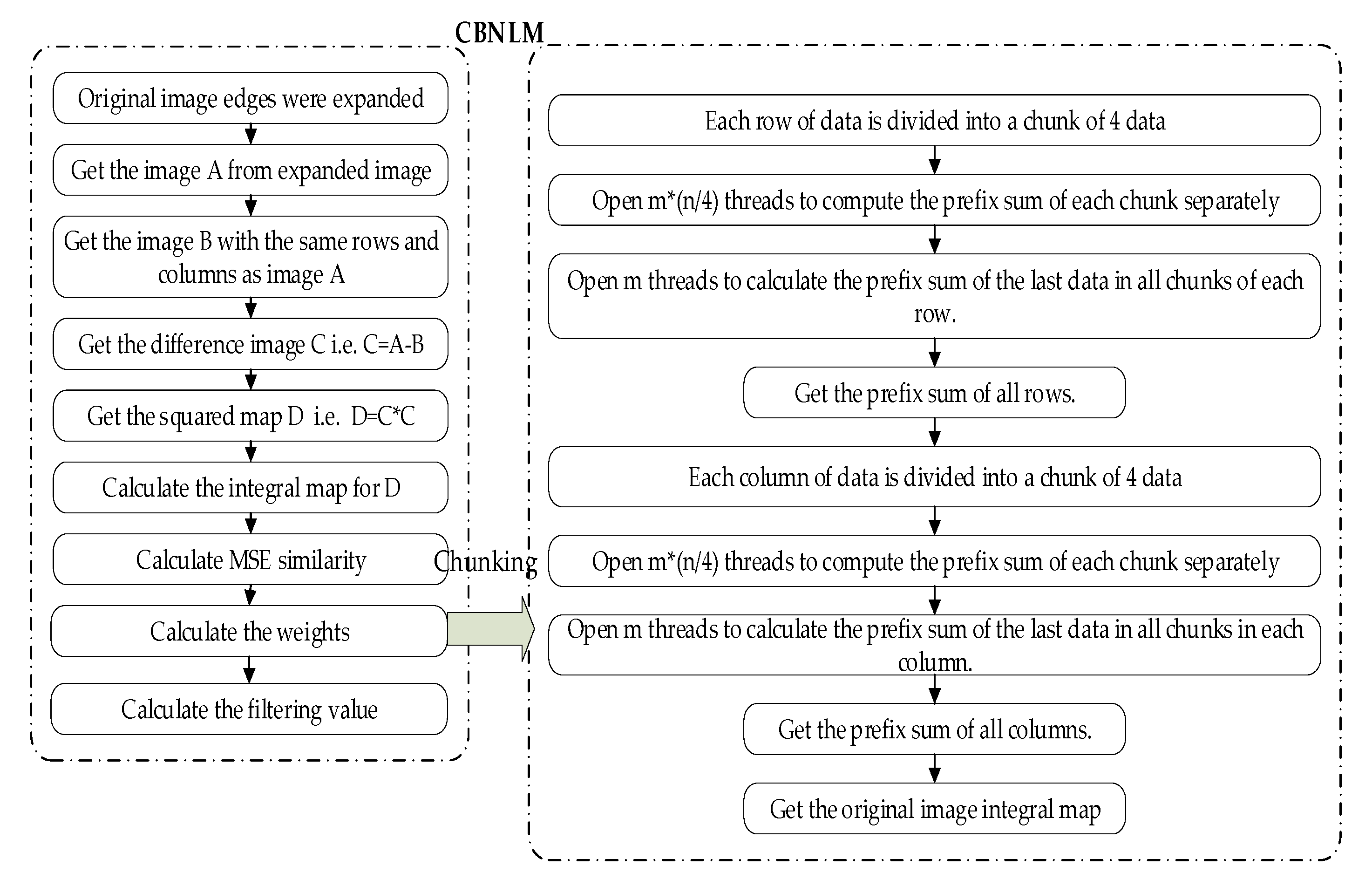

3.2. The CBNLM for Pre-Processing of Images

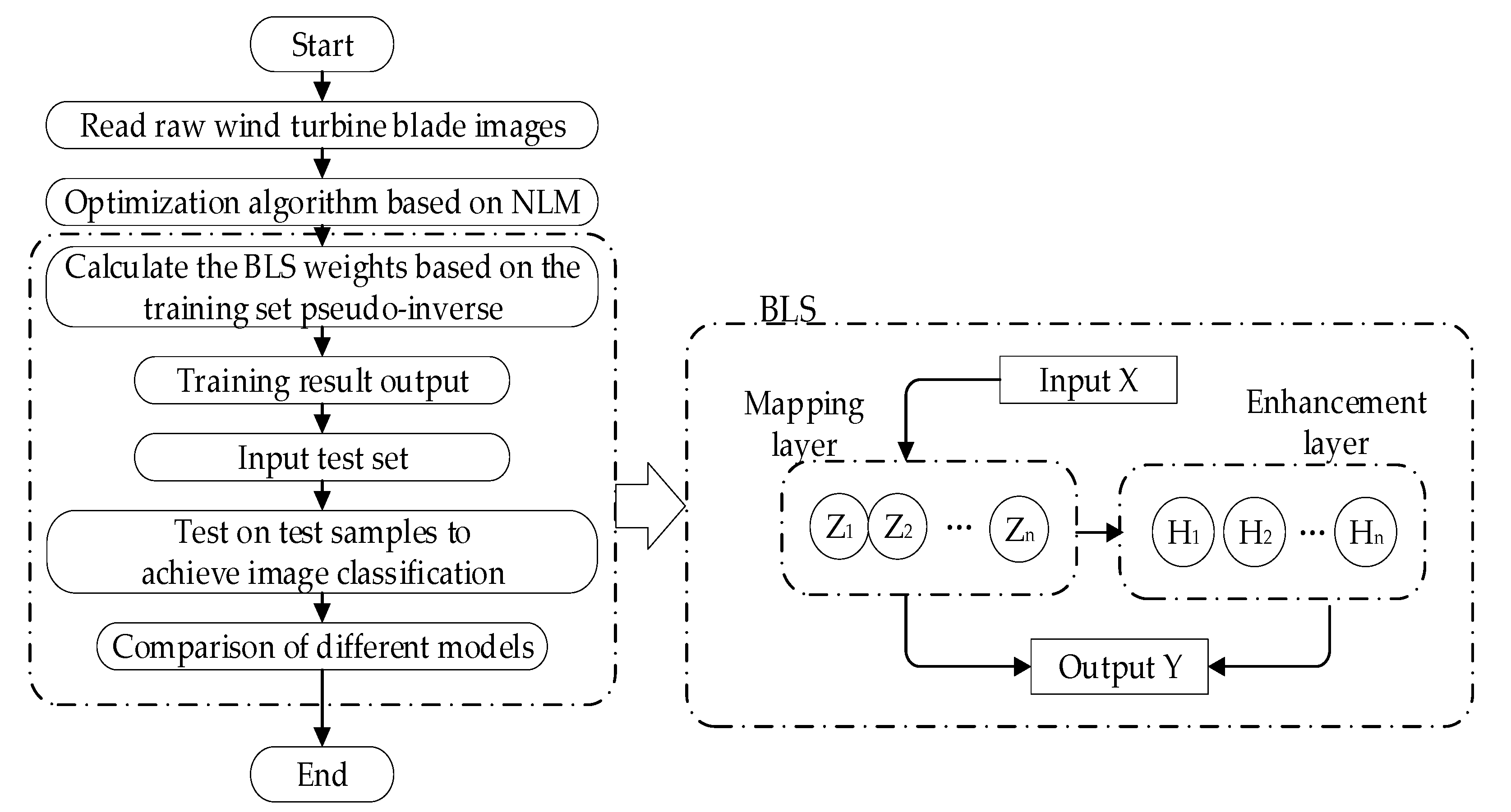

3.3. Construction of the CBNLM-BLS

1. Generate the feature nodes.
2. Generate the enhancement nodes.
3. Compute the pseudo-inverse to obtain the output weights.
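The three steps above can be sketched in NumPy as follows. This is a minimal sketch that rests on several assumptions: random linear feature mappings (the original BLS additionally fine-tunes them with a sparse autoencoder), `tanh` enhancement nodes without the original shrinkage scaling, and a ridge-regularized pseudo-inverse with a fixed `lam`. The names `train_bls`, `predict_bls`, `n_windows`, `nodes_per_window`, `n_enhance`, and `lam` are hypothetical, and the CBNLM pre-processing is not modeled.

```python
import numpy as np


def ridge_pinv(A, lam):
    """Regularized pseudo-inverse (lam*I + A^T A)^{-1} A^T."""
    return np.linalg.solve(lam * np.eye(A.shape[1]) + A.T @ A, A.T)


def _feature_nodes(X, feat_params):
    # One random linear projection per window of feature nodes, concatenated.
    return np.hstack([X @ W + b for W, b in feat_params])


def train_bls(X, Y, n_windows=10, nodes_per_window=10, n_enhance=100,
              lam=1e-3, seed=0):
    """Hypothetical minimal BLS trainer; X is (samples, inputs), Y is one-hot."""
    rng = np.random.default_rng(seed)
    # Step 1: random weights and biases for each window of feature nodes.
    feat_params = [(rng.standard_normal((X.shape[1], nodes_per_window)),
                    rng.standard_normal(nodes_per_window))
                   for _ in range(n_windows)]
    Z = _feature_nodes(X, feat_params)
    # Step 2: enhancement nodes, a nonlinear (tanh) expansion of all feature nodes.
    Wh = rng.standard_normal((Z.shape[1], n_enhance))
    bh = rng.standard_normal(n_enhance)
    H = np.tanh(Z @ Wh + bh)
    # Step 3: output weights from the regularized pseudo-inverse of A = [Z | H].
    A = np.hstack([Z, H])
    W_out = ridge_pinv(A, lam) @ Y
    return feat_params, (Wh, bh), W_out


def predict_bls(X, model):
    """Return class scores; the predicted label is the argmax over columns."""
    feat_params, (Wh, bh), W_out = model
    Z = _feature_nodes(X, feat_params)
    H = np.tanh(Z @ Wh + bh)
    return np.hstack([Z, H]) @ W_out
```

Only step 3 involves solving a linear system, and no iterative backpropagation is needed. For a binary damaged/undamaged task, `Y` would have two one-hot columns, and each image would be flattened or otherwise converted into a feature vector before being passed as a row of `X`.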

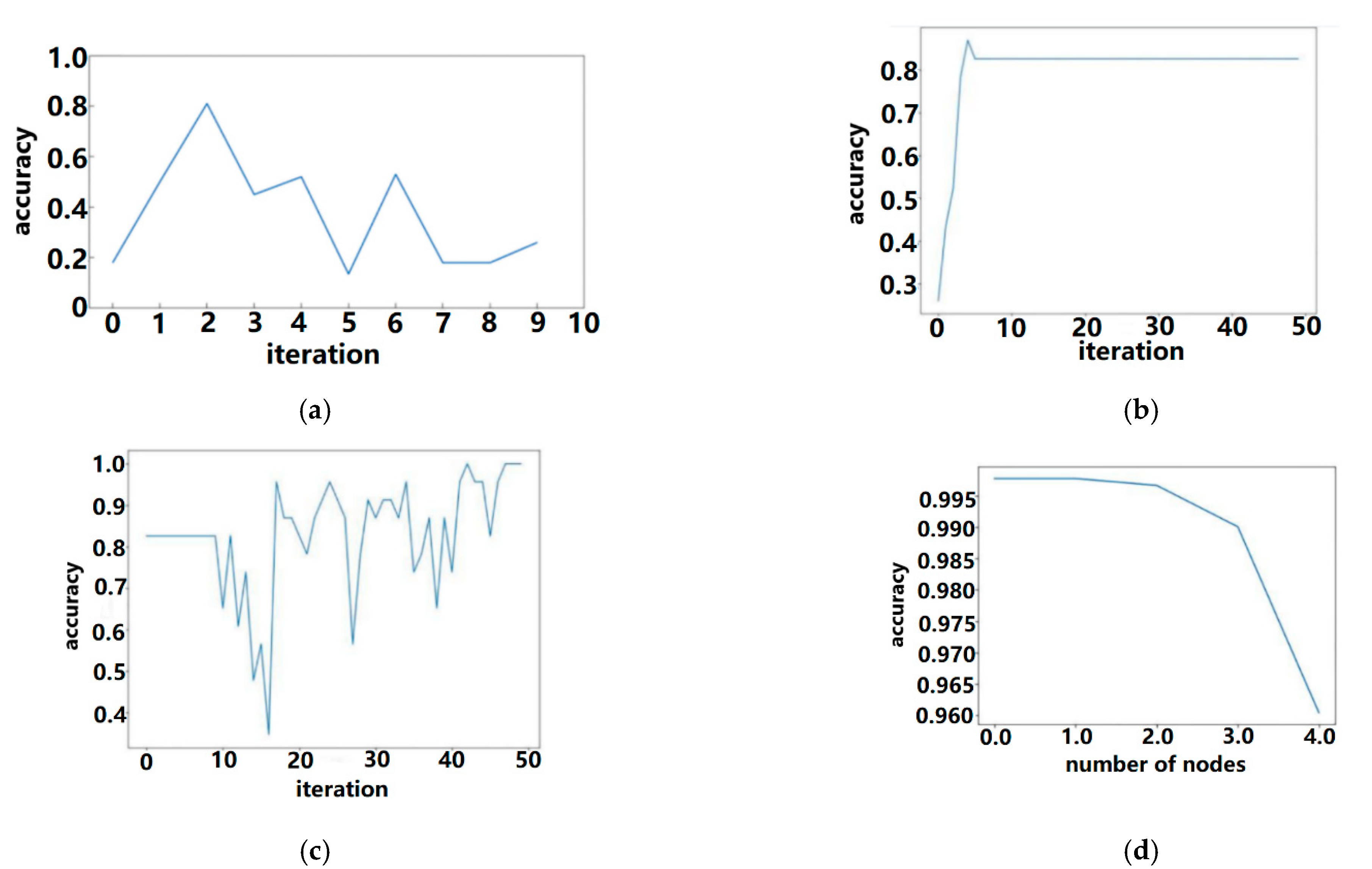

3.4. Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Subset | Number of Images | Images with Damage | Images without Damage |
|---|---|---|---|
| Training set | 556 | 266 | 290 |
| Testing set | 185 | 88 | 97 |
| Total | 741 | 354 | 387 |

| Actual Category | Predicted Positive | Predicted Negative |
|---|---|---|
| Positive | TP | FN |
| Negative | FP | TN |
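The four cells of the confusion matrix yield the standard metrics used to compare the models: accuracy $(TP+TN)/(TP+FN+FP+TN)$, precision $TP/(TP+FP)$, recall $TP/(TP+FN)$, and F1, which is the harmonic mean of precision and recall. The function below is a minimal sketch of these standard definitions. Its name and the example counts in the usage line are hypothetical.

```python
def classification_metrics(tp, fn, fp, tn):
    """Standard binary-classification metrics from confusion-matrix counts."""
    total = tp + fn + fp + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0  # guard against no positive predictions
    recall = tp / (tp + fn) if tp + fn else 0.0     # guard against no actual positives
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts: 8 damaged images found, 2 missed, 1 false alarm, 9 correct rejections.
metrics = classification_metrics(tp=8, fn=2, fp=1, tn=9)
```

Because accuracy also counts true negatives, a model can have high accuracy but low recall when damaged images are missed.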

| Algorithm | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) | Time (s) |
|---|---|---|---|---|---|
| ResNet | 81.035 | 94.444 | 37.778 | 53.968 | 35,939 |
| AlexNet | 95.700 | 81.818 | 100 | 90.000 | 11,898 |
| VGG19 | 82.609 | 82.609 | 100 | 90.476 | 13,063 |
| BLS | 99.107 | 100 | 98.276 | 99.131 | 51.126 |
| CBNLM-BLS | 99.716 | 98.630 | 100 | 99.310 | 28.662 |
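As a quick consistency check, the F1 column can be recomputed from the precision and recall columns of the comparison table. The values below are copied from that table. For all five models, the harmonic mean agrees with the reported F1 to within rounding at the third decimal place. This check says nothing about the accuracy or time columns, which cannot be derived from precision and recall alone.

```python
# (precision %, recall %, reported F1 %) copied from the comparison table.
REPORTED = {
    "ResNet": (94.444, 37.778, 53.968),
    "AlexNet": (81.818, 100.0, 90.000),
    "VGG19": (82.609, 100.0, 90.476),
    "BLS": (100.0, 98.276, 99.131),
    "CBNLM-BLS": (98.630, 100.0, 99.310),
}


def f1_from_pr(precision, recall):
    """Harmonic mean of precision and recall, in the same units as the inputs."""
    return 2 * precision * recall / (precision + recall)


recomputed = {name: f1_from_pr(p, r) for name, (p, r, _) in REPORTED.items()}

for name, (_, _, f1_reported) in REPORTED.items():
    print(f"{name:10s} reported {f1_reported:7.3f}  recomputed {recomputed[name]:7.3f}")
```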

| N1 | N2 | N3 | Accuracy (%) | Time (s) |
|---|---|---|---|---|
| 10 | 10 | 100 | 99.639 | 28.301 |
| 10 | 10 | 150 | 99.639 | 27.211 |
| 10 | 10 | 200 | 99.819 | 27.327 |
| 5 | 10 | 50 | 99.097 | 14.543 |
| 10 | 10 | 50 | 99.639 | 27.891 |
| 15 | 10 | 50 | 99.639 | 41.060 |
| 10 | 5 | 50 | 99.278 | 14.078 |
| 10 | 10 | 50 | 99.639 | 27.327 |
| 10 | 15 | 50 | 99.639 | 41.752 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zou, L.; Wang, Y.; Bi, J.; Sun, Y. Damage Detection in Wind Turbine Blades Based on an Improved Broad Learning System Model. Appl. Sci. 2022, 12, 5164. https://doi.org/10.3390/app12105164
