Abstract

Hamming space retrieval is an active research area in deep hashing because it is effective for large-scale image retrieval. Existing hashing algorithms do not fully exploit the absolute boundary to discriminate between data inside and outside the Hamming ball, and their performance remains unsatisfactory. In this paper, a boundary-aware contrastive loss is designed. It combines an exponential function with absolute boundary (i.e., Hamming radius) information for dissimilar pairs and a logarithmic function that encourages small distances for similar pairs. As a result, the push exceeds the pull inside the Hamming ball, and the pull exceeds the push outside it. Furthermore, a novel Boundary-Aware Hashing (BAH) architecture is proposed, which discriminatively penalizes dissimilar data inside and outside the Hamming ball. BAH reduces the influence of extremely imbalanced data without up-weighting similar pairs or other optimization strategies, because its exponential function rapidly converges outside the absolute boundary, creating a large contrast between the gradients of the logarithmic and exponential functions. Extensive experiments on four benchmark datasets show that BAH achieves higher performance across different code lengths and is advantageous in handling extremely imbalanced data.

1. Introduction

An image retrieval system extracts features from each image in a database and stores them. The same kind of feature vector is extracted from a query image and matched by distance against the feature vectors in the database, and the images with the closest feature vectors are returned as the search results. Image retrieval is widely used in pattern recognition, image search, duplicate detection, computer vision, e-commerce, and other fields. However, in the era of big data, large-scale, high-dimensional image data impose a heavy burden on image retrieval systems [1,2,3]. One popular and promising solution is deep supervised hashing, which offers lightweight storage and efficient computation [4,5,6].

Deep supervised hashing supports two retrieval modes: linear scan (Hamming ranking) and Hamming space retrieval. Linear scan outputs a list ranked by Hamming distance, and its time complexity on a dataset of size N is O(N) [7,8]. Hamming space retrieval performs the search through hash table lookups and returns the data points within a small Hamming ball; its time complexity is O(1) when the radius of the Hamming ball is at most 2 [9]. Therefore, it is better suited to large-scale databases than linear scan and has become popular in recent years.
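To make the complexity contrast concrete, the following Python sketch (ours, not taken from [7] or [9]) probes a hash table for every key inside a Hamming ball. It assumes that codes are packed into Python integers and that a plain `dict` stands in for the hash table; `probes_within_radius` and `hamming_ball_lookup` are hypothetical helper names. For radius 2 the number of probes is $1 + K + K(K-1)/2$, which depends only on the code length K and not on the database size N.

```python
from itertools import combinations


def probes_within_radius(code: int, k: int, radius: int = 2) -> list[int]:
    """Return every k-bit key whose Hamming distance to `code` is at most `radius`."""
    keys = [code]  # distance 0: the query's own bucket
    for r in range(1, radius + 1):
        for positions in combinations(range(k), r):
            flipped = code
            for p in positions:
                flipped ^= 1 << p  # flip bit p
            keys.append(flipped)
    return keys


def hamming_ball_lookup(table: dict[int, list[str]], query: int, k: int,
                        radius: int = 2) -> list[str]:
    """Collect the items stored under any key inside the Hamming ball around `query`."""
    hits: list[str] = []
    for key in probes_within_radius(query, k, radius):
        hits.extend(table.get(key, []))
    return hits


# Toy table with 4-bit codes; "img_c" is at distance 4 from the query and is pruned.
table = {0b1010: ["img_a"], 0b1011: ["img_b"], 0b0101: ["img_c"]}
print(hamming_ball_lookup(table, 0b1010, k=4))
print(len(probes_within_radius(0, k=64)))  # probes per query for 64-bit codes
```

For K = 64 this amounts to 2081 probes per query regardless of N, whereas a linear scan compares the query with all N stored codes.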

Linear scan methods can also be used for Hamming space retrieval, and they obtain satisfactory retrieval performance when the code length is small, such as 8 or 16 bits. However, as the code length increases, the Hamming space becomes highly sparse, which makes it difficult to pull similar pairs into the Hamming ball and degrades performance [10,11,12,13]. To address this issue, deep Cauchy hashing (DCH) [7] and maximum-margin Hamming hashing (MMHH) [9] were proposed specifically for Hamming space retrieval. DCH [7] designs a pairwise cross-entropy loss based on the Cauchy distribution to push dissimilar pairs out of the Hamming ball. Nevertheless, it does not treat the Hamming ball as an absolute boundary and may prune out some similar pairs, which decreases recall, especially for longer codes. MMHH [9] introduces the radius of the Hamming ball as an absolute boundary, which allows it to pull similar pairs into the ball. However, its loss is constant for both similar and dissimilar pairs inside the Hamming ball, so it is difficult to push dissimilar pairs out of the ball, which results in poor precision. Hamming space retrieval could therefore be improved by a model that focuses on pushing dissimilar pairs out of the Hamming ball and pulling similar pairs from outside into it.

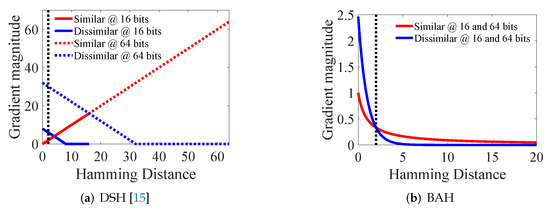

Contrastive loss pulls similar samples together and pushes dissimilar samples apart, so it can effectively cluster similar samples. It is commonly used in dimensionality reduction [14,15], metric learning [16,17], and related tasks. Deep supervised hashing (DSH) [15] is a hashing method based on contrastive loss, but it does not consider the absolute boundary, and both its gradient intersection point and its margin threshold grow as the code length increases, as shown in Figure 1a. As a result, it is difficult to concentrate similar pairs inside a Hamming ball with an absolute boundary.

Figure 1.

(a) Gradient magnitude of DSH [15] at 16 and 64 bits and (b) gradient magnitude of BAH at 16 and 64 bits. Red and blue represent the gradient magnitudes of similar and dissimilar pairs, respectively. Solid and dashed lines represent 16 bits and 64 bits, respectively. The black dashed line marks a Hamming distance of 2.

To solve this problem, we introduce an exponential function for dissimilar pairs whose convergence boundary corresponds to the margin threshold, which does not vary with code length, and a logarithmic function that encourages small distances for similar pairs. Furthermore, absolute boundary (i.e., Hamming radius) information is introduced to place the gradient intersection of the contrastive loss near the boundary. This ensures that the push exceeds the pull inside the Hamming ball and the pull exceeds the push outside it, as shown in Figure 1b, which improves both recall and precision, especially for longer codes.

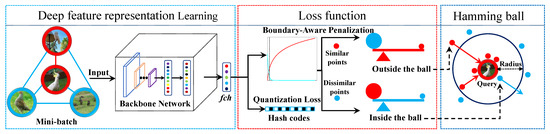

Based on this loss, we propose a Boundary-Aware Hashing (BAH) architecture for Hamming space retrieval. Specifically, a convolutional neural network (CNN) serves as the backbone to learn image representations, followed by a fully connected hash layer (fch) that maps the image representations into K-dimensional continuous codes. A boundary-aware penalization loss (logarithmic and exponential functions) and a quantization error loss are used to learn hash codes during training. At test time, the sign function is applied directly to the output of the hash layer to obtain hash codes, and the Hamming distance is used to measure the similarity (or dissimilarity) of image pairs.

The main contributions of this work are as follows:

- Based on the idea of contrastive loss with the boundary information, a novel loss function is designed by combining a logarithmic function for similar pairs and exponential functions with boundary information for dissimilar pairs. It can push the dissimilar pairs out of the Hamming ball and pull the similar pairs inside.

- An image retrieval framework based on the designed loss function is proposed to handle extremely imbalanced data. Its gradient for similar pairs outside the Hamming ball is much larger than that for dissimilar pairs, so it effectively pulls similar pairs into the ball without up-weighting similar pairs or other optimization strategies.

- Comprehensive experiments on four widely used image retrieval datasets, namely MS-COCO, NUS-WIDE, CIFAR-10, and ImageNet, show that: (i) the proposed method achieves a stable improvement in recall and accuracy as the code length increases; (ii) it returns a lower ratio of zero images (i.e., the ratio of queries that return no images) than seven state-of-the-art deep hashing methods and, in particular, can efficiently return retrieval results for every query on the CIFAR-10 dataset; and (iii) it is also competitive when handling extremely imbalanced data.

2. Related Work

This section focuses on reviewing deep supervised hashing [18,19,20,21], including linear scan and Hamming space retrieval. For a detailed survey on hashing, please refer to [22].

2.1. Linear Scan

Deep supervised hashing methods use supervised information to effectively reduce the semantic gap between feature representations and hash codes, achieving satisfactory retrieval performance. DSH [15] used a contrastive loss to measure the similarity between pairs of data and effectively controlled the quantization error with a regularization constraint. Deep hashing network (DHN) [23] and deep pairwise-supervised hashing (DPSH) [24] leveraged a generalized sigmoid function as the probability function to design a pairwise loss, learned pairwise similarity through the equivalence between the inner product and the Hamming distance of hash codes, and effectively reduced the relaxation error by controlling a quantization error term. In addition, HashNet [25] introduced weighted training pairs on top of DHN [23] to address data imbalance and achieved a lower quantization error by using the continuation method. To better learn the Hamming ranking of hash codes, central similarity quantization (CSQ) [26] proposed the concept of hash centers to learn central similarity and used deep neural networks to aggregate hash codes around their corresponding hash centers. However, all of the above methods are designed to maximize Hamming ranking performance, so they cannot pull similar data into a small Hamming ball well, resulting in poorly aggregated hash codes.

2.2. Hamming Space Retrieval

Hamming space retrieval is an efficient method for fast approximate nearest neighbor search in Hamming space. Cross-modal Hamming hashing (CMHH) [27] proposed a pairwise focal loss based on the exponential distribution to address the poor hash table lookup performance of cross-modal hashing methods. DCH [7] proposed a pairwise cross-entropy loss based on the Cauchy distribution and up-weighted similar training pairs. However, it still returns zero images for a large number of queries at longer code lengths. MMHH [9] proposed a loss based on the max-margin t-distribution to explicitly characterize the Hamming ball, making the model focus on data outside the Hamming ball. Nevertheless, it ignores the distribution of similar and dissimilar data inside the Hamming ball. Unlike these methods, this work focuses on similar data outside the boundary and dissimilar data inside it through boundary-aware penalization.

3. Boundary-Aware Hashing

We begin this section by presenting the problem definition. Then, the backbone network and the hash layer are introduced, followed by our design of boundary-aware penalization. Finally, we detail the overall objective function.

3.1. Problem Definition

In supervised hashing, the supervised information takes the form of pointwise labels [28,29,30], pairwise labels [13,15,24], or triplet labels [31,32]. This paper focuses on the popular pairwise-label setting of deep supervised hashing to validate our method. The techniques described here can also be adapted to other supervised label settings, which we leave for future work.

In supervised image retrieval systems, suppose that $\mathcal{X} = \{x_i\}_{i=1}^{n}$ denotes a training set of n points (images), whose supervised labels construct a pairwise similarity matrix $S = \{s_{ij}\}$ with $s_{ij} \in \{0, 1\}$: $s_{ij} = 1$ if data points $x_i$ and $x_j$ are semantically similar; otherwise, they are semantically dissimilar, i.e., $s_{ij} = 0$.

The goal of BAH is to learn a nonlinear hash function $f: x \mapsto h \in \{-1, 1\}^K$, where K denotes the length of the hash codes. The hash function encodes each data point into a K-bit hash code so as to learn a Hamming ball of small radius that concentrates similar data points inside the ball and distributes dissimilar ones outside it.

3.2. Deep Neural Network Architecture

BAH is an end-to-end deep supervised hashing method that learns deep features and hash codes in the same network architecture using supervised information. Figure 2 gives a high-level overview of the BAH architecture, which consists of two major components: deep feature learning and a loss function. Deep feature learning learns the feature representation for hashing, and the loss function learns hash codes from supervised information.

Figure 2.

The architecture of the proposed Boundary-Aware Hashing (BAH) comprises two key components: (1) a backbone network that learns a representation of each image and an fch (hash layer) that learns the feature representation for hashing; and (2) a boundary-aware penalization that explicitly characterizes the Hamming ball with boundary information to preserve similarity relationships in Hamming space, together with a quantization loss that controls the quantization error and hash code quality. The boundary-aware penalization discriminatively penalizes dissimilar data inside and outside the Hamming ball. The larger blue/red balls represent dissimilar/similar data of a large sample, and the smaller blue/red balls represent dissimilar/similar data of a small sample. The fulcrum of the triangle represents the boundary of the ball.

Deep feature learning includes a backbone network and a fully connected hash layer (fch). Following previous works [7,9], we replace the softmax layer of the backbone network with an fch of K neurons, which maps the high-dimensional feature representation of each image $x_i$ into a K-dimensional continuous code $z_i \in \mathbb{R}^K$. Compact binary hash codes are then obtained with the sign function, $h_i = \mathrm{sgn}(z_i)$. The BAH architecture is designed to learn concentrated and compact binary hash codes. Note that we do not add the tanh activation function [33,34] to the hash layer because of its saturation; the ablation study measures the impact of adding it.
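As a quick, hedged illustration of the saturation argument (our sketch, not part of BAH), the derivative of tanh, $1 - \tanh^2(x)$, vanishes as the pre-activation moves away from zero, so a tanh placed after fch passes back almost no gradient for outputs that already have a confident sign. The sample inputs below are arbitrary.

```python
import math


def tanh_grad(x: float) -> float:
    """Derivative of tanh at x, i.e. 1 - tanh(x)**2."""
    t = math.tanh(x)
    return 1.0 - t * t


# Without tanh, the hash layer output is linear in its input, so this factor is 1.
for x in (0.0, 1.0, 3.0, 6.0):
    print(f"x = {x:3.1f}   tanh'(x) = {tanh_grad(x):.6f}")
```

At x = 3 the tanh gradient is already below 1% of its value at 0, which is the regime in which a saturated output stops responding to the loss.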

For the loss function, to obtain compact and concentrated hash codes for hash table lookups, this paper proposes a novel loss based on boundary-aware penalization. In addition, it adopts a widely used norm-based loss to control the quantization error from continuous values to binary codes.

3.3. Boundary-Aware Penalization

In many previous methods [7,23], the hashing model was instantiated within a Bayesian learning framework, which admits any valid probability function.

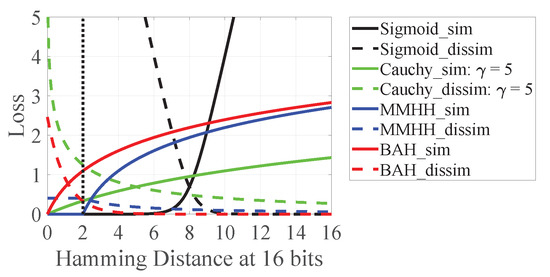

Sigmoid function. As Figure 3 shows, with the sigmoid function it is difficult for similar points to be concentrated within the Hamming ball of radius 2, and dissimilar points are also hard to push out of the ball. These problems may degrade Hamming space retrieval.

Figure 3.

Loss functions at 16 bits. The x-axis denotes the Hamming distance, and the y-axis denotes the corresponding loss.

Cauchy distribution. As shown in Figure 3, the loss for dissimilar pairs with a Hamming distance smaller than 2 grows toward infinity, which brings only limited benefit to pruning effectiveness. Furthermore, similar pairs may be pruned out, which decreases recall. Following DCH [7], we use its parameter setting to plot the losses for similar/dissimilar pairs.

Max-margin t-distribution. MMHH [9] focuses on the data distribution outside the Hamming ball and overlooks the distribution of dissimilar pairs inside the ball, which makes it difficult to push dissimilar pairs inside the ball out of it, as shown in Figure 3.

A critical challenge for supervised hashing is that there are far fewer similar pairs than dissimilar pairs. Most previous methods mitigate this imbalance by up-weighting similar pairs. However, this operation may severely affect retrieval effectiveness: as training proceeds, a large number of similar points accumulate in the Hamming ball while dissimilar pairs become fewer, which biases the model for Hamming space retrieval. Clearly, up-weighting cannot treat sample points inside and outside the Hamming ball differently.

One natural way to alleviate this problem is to adjust the degree of penalization of different sample points using boundary information. Motivated by contrastive loss [14], which effectively handles pairwise data relationships, we design a boundary-aware penalization L based on boundary information, given in Equation (1).

In Equation (1), the Hamming ball radius is pre-specified for most search scenarios in Hamming space retrieval, and an additional parameter makes the gradients of similar and dissimilar pairs equal at the given Hamming radius. The other quantities are the Hamming distance between a pair of hash codes $h_i$ and $h_j$, and the cosine similarity of the labels $y_i$ and $y_j$ of images $x_i$ and $x_j$, i.e., $\frac{y_i^\top y_j}{\|y_i\|\,\|y_j\|}$. This cosine similarity measures the degree of similarity between two multi-label annotations, enabling the model to learn the correlation between images.
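The label cosine similarity can be computed directly from multi-hot label vectors. The sketch below is a minimal, dependency-free version; representing labels as 0/1 lists and returning 0 for an all-zero label vector are our assumptions, and `label_cosine` is a hypothetical helper name.

```python
import math


def label_cosine(y_i: list[int], y_j: list[int]) -> float:
    """Cosine similarity between two multi-hot label vectors."""
    dot = sum(a * b for a, b in zip(y_i, y_j))
    norm_i = math.sqrt(sum(a * a for a in y_i))
    norm_j = math.sqrt(sum(b * b for b in y_j))
    if norm_i == 0 or norm_j == 0:
        return 0.0  # an unlabeled image shares no label with anything
    return dot / (norm_i * norm_j)


print(label_cosine([1, 1, 0, 0], [1, 0, 0, 0]))  # partial overlap of two multi-label images
print(label_cosine([0, 1, 0], [0, 1, 0]))        # one-hot, same class
print(label_cosine([0, 1, 0], [1, 0, 0]))        # one-hot, different classes
```

For single-label (one-hot) data the value is 1 for same-class pairs and 0 otherwise, so it reduces to the binary pairwise similarity; only multi-label data produce intermediate values such as $1/\sqrt{2}$.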

The boundary-aware penalization combines logarithmic and exponential functions. The exponential function with boundary information penalizes dissimilar pairs heavily inside the Hamming ball and lightly outside it, thereby discriminatively penalizing dissimilar data inside and outside the ball. The logarithmic function, in turn, encourages similar pairs to be close. Specifically, at Hamming distances of 16 and 64, the gradient for similar pairs is 0.0588 and 0.0154, respectively, whereas the gradient for dissimilar pairs is far smaller, so the ratio between the two is very large. This indicates that the pull is much greater than the push at large Hamming distances, reflecting the exponential decay of the dissimilar-pair gradient; therefore, similar samples can be pulled into the Hamming ball by the logarithmic function. At small Hamming distances, the push is much greater than the pull.
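The quoted similar-pair gradients are consistent with a pull term of the form $\log(1 + d)$, whose derivative is $1/(1 + d)$: $1/17 \approx 0.0588$ and $1/65 \approx 0.0154$. The sketch below reproduces these two numbers and contrasts them with a hypothetical exponential push $c\,\exp(-(d - r))$, where $r = 2$ is the Hamming radius and $c = 1/(1 + r)$ makes the two gradients equal at $d = r$. This push term is our illustrative assumption, not the exact dissimilar-pair term of Equation (1), so its values should not be read as the paper's.

```python
import math

RADIUS = 2.0  # Hamming radius used for pruning


def pull_grad(d: float) -> float:
    """Gradient magnitude of a logarithmic pull log(1 + d) on similar pairs."""
    return 1.0 / (1.0 + d)


def push_grad(d: float, radius: float = RADIUS) -> float:
    """Gradient magnitude of a HYPOTHETICAL exponential push c * exp(-(d - radius)).

    c = 1 / (1 + radius) equalizes push and pull gradients at d == radius.
    This form is an assumption for illustration, not Equation (1).
    """
    c = 1.0 / (1.0 + radius)
    return c * math.exp(-(d - radius))


for d in (1.0, 2.0, 16.0, 64.0):
    ratio = pull_grad(d) / push_grad(d)
    print(f"d={d:5.1f}  pull={pull_grad(d):.4f}  push={push_grad(d):.3e}  pull/push={ratio:.3e}")
```

Inside the ball (d = 1) the push gradient exceeds the pull gradient; at d = 16 and beyond, the pull dominates by several orders of magnitude under this assumed form, which is the qualitative behavior the paragraph describes.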

3.4. Objective Function

Optimizing the boundary-aware penalization of the proposed BAH model in Equation (1) over discrete hash codes is a standard NP-hard problem [35]. It can be solved approximately by continuous relaxation, i.e., by exploiting the relationship between a metric on continuous codes and the discrete Hamming distance. The cosine distance measures each pair of continuous codes on a unit sphere, whereas the discrete Hamming distance measures each pair of hash codes on a unit hypercube, and the cosine distance on continuous codes is always an upper bound of the discrete Hamming distance on hash codes [7,36]. When the continuous codes are exactly binary, the two distances coincide. This paper therefore adopts the cosine distance to approximate the Hamming distance:

$$\mathrm{dist}_H(h_i, h_j) \approx \frac{K}{2}\left(1 - \cos(z_i, z_j)\right), \qquad \cos(z_i, z_j) = \frac{z_i^\top z_j}{\|z_i\|\,\|z_j\|}, \tag{2}$$

where $z_i$ is the continuous code of image $x_i$, $h_i$ is the hash code corresponding to $z_i$, K is the code length, and $\|\cdot\|$ is the $\ell_2$ norm of a vector. To control the quantization error of the continuous relaxation and bridge the gap between the cosine distance and the Hamming distance, BAH adds a norm-based quantization regularizer Q (Equation (3)) that minimizes the difference between the continuous codes and their binary counterparts.
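The relaxation can be sanity-checked numerically. Assuming the commonly used form $\mathrm{dist}_H(h_i, h_j) \approx \frac{K}{2}(1 - \cos(z_i, z_j))$ (as in DCH [7]), the relation is exact for $\pm 1$ codes, because $\|h\| = \sqrt{K}$ and $h_i^\top h_j = K - 2\,\mathrm{dist}_H(h_i, h_j)$; for real-valued codes it is only an approximation of the Hamming distance between their signs. The helper names below are ours.

```python
import math
import random


def hamming(h_i: list[int], h_j: list[int]) -> int:
    """Number of positions where two {-1,+1} codes differ."""
    return sum(a != b for a, b in zip(h_i, h_j))


def cosine(z_i: list[float], z_j: list[float]) -> float:
    """Cosine similarity using the Euclidean (l2) norm."""
    dot = sum(a * b for a, b in zip(z_i, z_j))
    return dot / (math.sqrt(sum(a * a for a in z_i)) * math.sqrt(sum(b * b for b in z_j)))


def relaxed_hamming(z_i: list[float], z_j: list[float]) -> float:
    """Cosine-based surrogate K/2 * (1 - cos(z_i, z_j)) for the Hamming distance."""
    k = len(z_i)
    return k / 2 * (1.0 - cosine(z_i, z_j))


def sign(z: list[float]) -> list[int]:
    """Binarize a continuous code: +1 where z_k > 0, otherwise -1."""
    return [1 if v > 0 else -1 for v in z]


rng = random.Random(0)
K = 64
h_i = [rng.choice((-1, 1)) for _ in range(K)]
h_j = [rng.choice((-1, 1)) for _ in range(K)]
print(hamming(h_i, h_j), relaxed_hamming(h_i, h_j))  # identical for binary codes

# Continuous codes: the surrogate only approximates the Hamming distance of their signs.
z_i = [rng.gauss(0.0, 1.0) for _ in range(K)]
z_j = [rng.gauss(0.0, 1.0) for _ in range(K)]
print(hamming(sign(z_i), sign(z_j)), round(relaxed_hamming(z_i, z_j), 2))
```

The gap in the second line is exactly what a quantization regularizer is meant to shrink: the closer each $z_i$ is to a $\pm 1$ vector, the closer the surrogate gets to the true Hamming distance.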

We jointly optimize the boundary-aware penalization L in Equation (1) and the quantization regularizer Q in Equation (3), forming the objective function of the proposed BAH:

$$\min_{\Theta} \; L + \lambda Q, \tag{4}$$

where $\Theta$ denotes the set of network parameters optimized by back-propagation, and $\lambda$ is a hyper-parameter that trades off L and Q.

During testing, given a query $x_q$, the BAH model obtains its continuous code $z_q$ directly by forward propagation and then obtains the hash code through the sign function:

$$h_q = \mathrm{sgn}(z_q), \tag{5}$$

where $\mathrm{sgn}(\cdot)$ is the element-wise sign function, i.e., $\mathrm{sgn}(z) = 1$ if $z > 0$, and $\mathrm{sgn}(z) = -1$ otherwise.
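The encoding step can be sketched in a few lines, assuming the convention that the sign function maps positive entries to +1 and everything else, including 0, to −1. Packing the resulting code into an integer key, as a hash table lookup would need, is our illustrative assumption; `sgn`, `to_key`, and the sample output `z_q` are hypothetical.

```python
def sgn(z: list[float]) -> list[int]:
    """Element-wise sign: +1 where z_k > 0, otherwise -1 (so sgn(0) = -1)."""
    return [1 if v > 0 else -1 for v in z]


def to_key(h: list[int]) -> int:
    """Pack a {-1,+1} code into an int: bit k is set when h[k] == +1."""
    key = 0
    for k, bit in enumerate(h):
        if bit == 1:
            key |= 1 << k
    return key


z_q = [0.8, -0.3, 0.0, 1.2]  # hypothetical continuous output of fch for a query
h_q = sgn(z_q)               # [1, -1, -1, 1]
print(h_q, to_key(h_q))      # bits 0 and 3 set, so the key is 9
```

With integer keys, the Hamming distance between two codes is the number of set bits in their XOR, which is what keeps probing a radius-2 ball cheap.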

4. Experiments

We evaluate BAH and other comparison methods on four widely used image retrieval datasets, including MS-COCO [37], NUS-WIDE [38], CIFAR-10 [39], and ImageNet [40].

4.1. Datasets and Settings

Table 1 reports the details and experimental settings of the four datasets. They include both multi-label and single-label datasets, and their degree of data imbalance ranges from balanced to highly imbalanced, which covers most application scenarios. The training sets in Table 1 are randomly sampled from the retrieval databases, following settings similar to those in [7,9].

Table 1.

Information and experimental settings of the four publicly available image retrieval datasets. Ratio denotes the ratio between the numbers of similar and dissimilar pairs.

In supervised hashing, two images are considered similar if they share at least one category label [18,41]; in that case they are ground-truth neighbors, and otherwise they are not. For example, suppose there are three images (a), (b), and (c) in the NUS-WIDE dataset, where image (a) shares one label with image (b) and another label with image (c). The image pairs (a, b) and (a, c) are therefore similar, whereas the pair (b, c) is dissimilar because the two images have no label in common. Under this definition of semantic similarity, supervised hashing methods must cope with diverse and complex application scenarios, so the datasets in Table 1 can effectively evaluate the retrieval performance of various methods under different scenarios.
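The shared-label rule translates directly into code. The sketch below builds the pairwise similarity matrix from label sets and counts similar versus dissimilar pairs, the quantity summarized as Ratio in Table 1. The label names are hypothetical placeholders mirroring the (a), (b), (c) example, and counting unordered pairs of distinct images is our assumption about how such a ratio is computed.

```python
def pairwise_similarity(labels: list[set[str]]) -> list[list[int]]:
    """s[i][j] = 1 if images i and j share at least one label, else 0."""
    n = len(labels)
    return [[1 if labels[i] & labels[j] else 0 for j in range(n)] for i in range(n)]


def similar_to_dissimilar_ratio(labels: list[set[str]]) -> float:
    """Ratio of similar to dissimilar unordered pairs of distinct images."""
    similar = dissimilar = 0
    for i in range(len(labels)):
        for j in range(i + 1, len(labels)):
            if labels[i] & labels[j]:
                similar += 1
            else:
                dissimilar += 1
    return similar / dissimilar if dissimilar else float("inf")


# Hypothetical labels: (a) shares "label_A" with (b) and "label_B" with (c).
images = [{"label_A", "label_B"}, {"label_A"}, {"label_B"}]
print(pairwise_similarity(images))          # [[1, 1, 1], [1, 1, 0], [1, 0, 1]]
print(similar_to_dissimilar_ratio(images))  # 2.0
```

On real datasets with many classes, most pairs share no label, so this ratio is typically far below 1, which is the imbalance that the boundary-aware penalization targets.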

Previous research has shown that deep hashing outperforms traditional hashing methods based on hand-crafted features because it learns deep features and hash codes end to end. We therefore compare BAH with seven state-of-the-art deep supervised hashing methods: DHN [23], DSH [15], HashNet [25], GreedyHash [42], DCH [7], MMHH [9], and CSQ [26]. These methods are briefly described as follows:

- Deep Hashing Network (DHN) [23]. DHN learns hash coding in a Bayesian architecture by using both pairwise cross-entropy loss and pairwise quantization loss.

- Deep Supervised Hashing (DSH) [15]. DSH simultaneously employs contrastive loss and a regularizer to maximize the distinguishability between hash codes.

- HashNet: Deep Learning to Hash by Continuation (HashNet) [25]. HashNet improves DHN by up-weighting similar training pairs to solve the data imbalance problem while using a continuation technique to achieve low quantization error.

- GreedyHash: Towards Fast Optimization for Accurate Hash Coding in CNN (GreedyHash) [42]. GreedyHash uses the greedy principle to solve the binary-code discrete optimization problem, allowing the network model to converge quickly during the training process.

- Deep Cauchy Hashing for Hamming Space Retrieval (DCH) [7]. DCH minimizes the pairwise cross-entropy loss and pairwise quantization loss based on the Cauchy distribution in a Bayesian architecture.

- Maximum-Margin Hamming Hashing (MMHH) [9]. MMHH minimizes the Kullback–Leibler (KL) divergence loss based on the max-margin t-distribution to learn hash coding.

- Central Similarity Quantization (CSQ) [26]. CSQ uses Hadamard matrices and Bernoulli distributions to construct hash centers and aggregates hash codes around their corresponding hash centers.

Following the standard evaluation protocol, Hamming space retrieval consists of two phases: (1) pruning, which returns all data points within Hamming radius 2 of each query through hash table lookups; and (2) re-ranking, which re-ranks the returned data points in ascending order of the distances between their continuous codes and the query. We evaluate pruning by precision and recall within Hamming radius 2, and re-ranking by the mean average precision (MAP) of re-ranking within Hamming radius 2. In addition, we use the F1-score [43], the harmonic mean of precision and recall. The ratio of queries that return zero images is used to evaluate the efficiency of each method's hash table lookups.
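A minimal sketch of the pruning-phase metrics follows. It is not the authors' evaluation code: codes are packed into integers, relevance uses the shared-label rule, a query whose Hamming ball is empty is counted with precision 0, and the F1-score is computed from the averaged precision and recall. Each of these conventions is an assumption, and published protocols differ on some of them; `evaluate_pruning` is a hypothetical name.

```python
def hamming(a: int, b: int) -> int:
    """Hamming distance between two codes packed into integers."""
    return bin(a ^ b).count("1")


def evaluate_pruning(queries, database, radius=2):
    """Pruning metrics within a Hamming ball.

    queries, database: lists of (code, label_set) pairs.
    Returns (mean precision, mean recall, F1 of the means, zero-image ratio).
    """
    precisions, recalls, empty = [], [], 0
    for q_code, q_labels in queries:
        # Items returned by the hash table lookup (inside the Hamming ball).
        returned = [labels for code, labels in database
                    if hamming(q_code, code) <= radius]
        # Ground-truth neighbors: database items sharing at least one label.
        n_relevant = sum(1 for _, labels in database if labels & q_labels)
        hits = sum(1 for labels in returned if labels & q_labels)
        if not returned:
            empty += 1  # this query returns zero images
        precisions.append(hits / len(returned) if returned else 0.0)
        recalls.append(hits / n_relevant if n_relevant else 0.0)
    p = sum(precisions) / len(queries)
    r = sum(recalls) / len(queries)
    f1 = 2 * p * r / (p + r) if p + r > 0 else 0.0
    return p, r, f1, empty / len(queries)


# Toy 8-bit example: the second query's ball is empty.
database = [(0b00000000, {"A"}), (0b00000001, {"A"}),
            (0b00000011, {"B"}), (0b11111111, {"A"})]
queries = [(0b00000000, {"A"}), (0b11110000, {"A"})]
print(evaluate_pruning(queries, database))
```

Re-ranking would then sort each non-empty returned set by the continuous-code distance to the query before computing MAP; that step is omitted here.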

We implement BAH in PyTorch and adopt AlexNet [44] as the backbone for all compared methods. All experiments are run on an RTX 3090 GPU server. We use back-propagation to train the hash layer fch and fine-tune the backbone pre-trained on ImageNet. Because the hash layer fch is trained from scratch, its learning rate is set 10 times higher than that of the other layers. We use a mini-batch size of 128 images and the Root Mean Square propagation (RMSprop) optimizer with a weight decay of 0.00001. Furthermore, we tune the learning rate with a 5-fold cross-validation strategy, which also yields the hyper-parameter; it is set to 0.01, 0.01, 0.05, and 0.01 for the MS-COCO, NUS-WIDE, CIFAR-10, and ImageNet datasets, respectively.

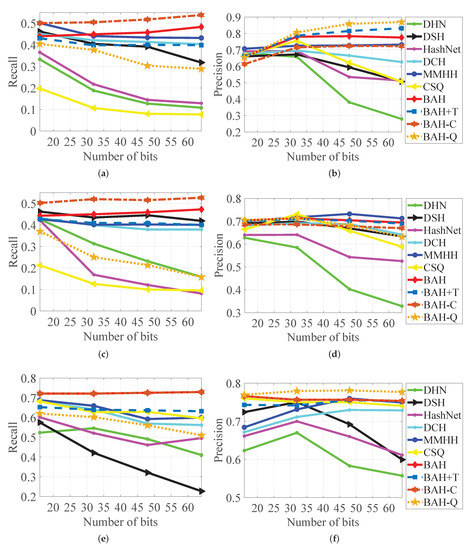

Three variants of BAH, namely BAH+T, BAH-C, and BAH-Q, are investigated in Figure 4, Figure 5 and Figure 6, Table 2 and Table 3. BAH+T adds a tanh activation function after the hash layer and learns hash codes with BAH. BAH-C is a variant that does not use the label cosine similarity in Equation (1). BAH-Q is a variant that does not use the quantization loss in Equation (3). The ablation study in Section 4.4 analyzes the impact of these variants on the hash model in detail.

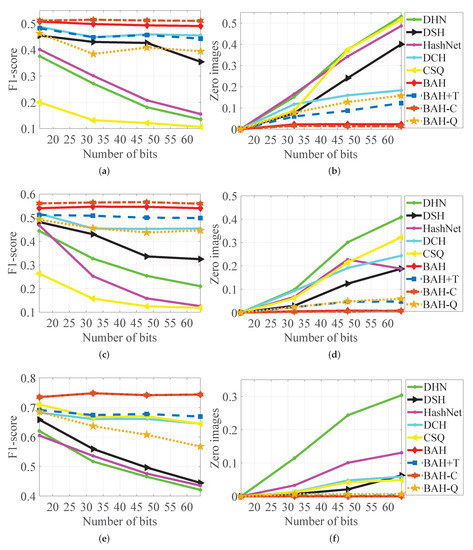

Figure 4.

Precision and recall within Hamming radius 2 for different code lengths on MS-COCO, NUS-WIDE, and CIFAR-10. (a, b) MS-COCO. (c, d) NUS-WIDE. (e, f) CIFAR-10.

Figure 5.

F1-score and ratios of returned zero images for different code lengths on MS-COCO, NUS-WIDE, and CIFAR-10. (a) F1-score on MS-COCO. (b) Zero images on MS-COCO. (c) F1-score on NUS-WIDE. (d) Zero images on NUS-WIDE. (e) F1-score on CIFAR-10. (f) Zero images on CIFAR-10.

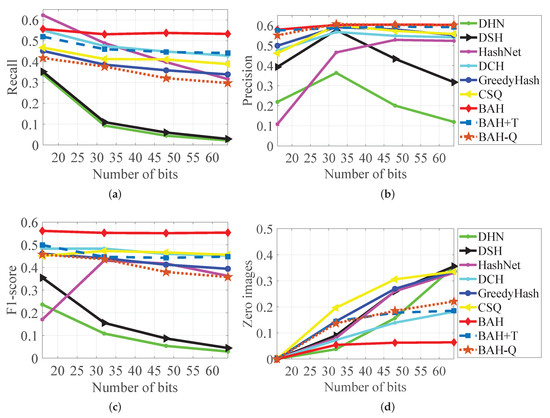

Figure 6.

Comparison of precision and recall within Hamming radius 2, F1-score, and ratio of returned zero images for BAH and other methods on the ImageNet dataset. (a, b) Precision and recall within Hamming radius 2 on ImageNet. (c) F1-score on ImageNet. (d) Zero images on ImageNet.

Table 2.

MAP of re-ranking within Hamming radius 2 for different code lengths on three benchmark datasets.

Table 3.

MAP of re-ranking within Hamming radius 2 for different code lengths on the ImageNet dataset.

4.2. Hamming Space Retrieval Results

Pruning. As the Hamming space becomes sparse with longer hash codes, it is difficult to pull similar data points into the Hamming ball and push dissimilar ones out of it; as a result, relevant data points are pruned, which may severely affect effectiveness. We therefore report the precision and recall within Hamming radius 2 of BAH and its competitors in Figure 4. As shown in Figure 4, once the code length exceeds 32 bits, the precision and recall of DHN and HashNet, which rely on the sigmoid function, drop quickly. This demonstrates that they cannot aggregate similar data in the Hamming ball effectively and that the sigmoid function is not suitable for Hamming space retrieval. In comparison, DCH and MMHH perform much better than DHN and HashNet. However, compared with the proposed BAH, their performance is unsatisfactory, especially on the CIFAR-10 dataset, because DCH and MMHH cannot properly handle the different sample points inside and outside the Hamming ball. BAH performs significantly better than all competitors, demonstrating that boundary-aware penalization improves the aggregation of similar data. Moreover, the precision and recall of BAH are higher than or equal to those of the other methods on both multi-label and single-label datasets. This is because the logarithmic function encourages small distances for similar pairs, while the exponential function with boundary information makes the push on dissimilar pairs larger than the pull on similar pairs inside the Hamming ball and smaller outside it. Therefore, discriminatively penalizing dissimilar data inside and outside the Hamming ball effectively improves Hamming space retrieval. Note that the precision and recall curves of BAH and BAH-C on the CIFAR-10 dataset coincide, since CIFAR-10 is single-label and the label cosine similarity then reduces to the binary similarity.

F1-score and ratio of returned zero images. As shown in Figure 5a,c,e, we use the F1-score at varying code lengths to evaluate the effectiveness of each algorithm in hash table lookups, accounting for both precision and recall. Unlike the other methods, BAH maintains a stable F1-score across the three datasets, indicating that it can aggregate similar data within a Hamming ball of small radius even as the hash codes become longer. As a result, BAH performs hash table lookups more efficiently than the other deep hashing methods. It is worth noting that BAH-C has a slightly higher F1-score than BAH, but BAH has higher precision than BAH-C, indicating that the label cosine similarity acts as a trade-off between precision and recall on multi-label datasets.

We use the ratio of returned zero images to evaluate how well each algorithm aggregates hash codes, and thus to verify the retrieval capability of each deep hashing method with hash table lookups, as shown in Figure 5b,d,f. The results show that deep hashing methods based on linear scan struggle to aggregate relevant data within a small Hamming radius, which leads to hash table lookup failures with longer hash codes. In contrast, the ratio of returned zero images for BAH does not increase substantially as the code length grows, particularly on the CIFAR-10 and NUS-WIDE datasets, indicating that BAH clusters more relevant points into a Hamming ball of small radius. This is primarily due to boundary-aware penalization, which treats data inside and outside the ball differently.

Re-ranking. To evaluate the retrieval quality of the hashing algorithms after pruning, we use the MAP of re-ranking within Hamming radius 2 to compare the methods, and Table 2 reports the retrieval performance of each algorithm in detail. Table 2 also reveals an intriguing phenomenon: on the multi-label MS-COCO dataset, CSQ outperforms the other methods in MAP at longer code lengths, i.e., it re-ranks the returned data best. However, its pruning performance, F1-score, and ratio of returned zero images are worse than those of the other methods, indicating that this MAP reflects only the retrieval performance on the returned data and that the effectiveness of hash table lookups must be evaluated holistically. This observation calls for careful consideration when developing new hashing methods for more efficient hash table lookups.

4.3. Robustness on Imbalanced and Noisy Data

Next, we validate the effectiveness of BAH in handling imbalanced data on the ImageNet dataset, as shown in Figure 6. The results reveal some intriguing insights: (1) HashNet achieves excellent recall at 16 bits but poor precision; (2) GreedyHash and CSQ have very consistent F1-scores. The main reasons are as follows. HashNet relies on up-weighting similar pairs to address data imbalance, but this focuses too much on similar pairs and ignores dissimilar pairs inside the Hamming ball, so it is difficult to push dissimilar pairs out of the ball, resulting in poor precision. GreedyHash and CSQ are designed with pointwise labels, so for them the ImageNet dataset is effectively sample-balanced. Compared with these methods, BAH exhibits excellent pruning performance, particularly for longer hash codes, with a ratio of returned zero images below 7%. This is primarily due to the discriminative penalization of data inside and outside the Hamming ball, which allows the loss function to handle imbalanced data efficiently.

Table 3 shows that BAH substantially outperforms all compared methods on this imbalanced dataset in terms of MAP of re-ranking within Hamming radius 2, indicating that BAH obtains high-quality retrieval through efficient re-ranking. Compared with CSQ and GreedyHash, which are classification-based hashing methods, BAH achieves average improvements of 9.18% and 6.83% on ImageNet, respectively. Compared with DCH, which up-weights similar pairs, BAH achieves an average improvement of 9.88%. This indicates that up-weighting similar pairs does not allow DCH to handle Hamming space retrieval effectively in imbalanced scenarios, and it further verifies that BAH effectively uses boundary information to penalize different sample points discriminatively in imbalanced scenarios without up-weighting similar pairs or other optimization strategies.

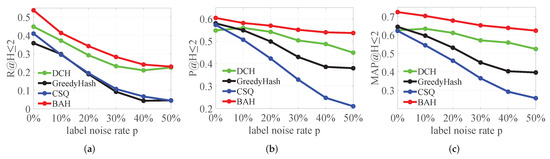

We further evaluate the robustness of BAH to noisy labels, using 64-bit codes trained on the ImageNet dataset, and compare it with CSQ, GreedyHash, and DCH. Following the setting in [45], we obtain a noisy training set by changing each image label in the original training set to another label with probability p, where p denotes the label noise rate. The query set and retrieval database remain unchanged.
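The label-corruption step can be sketched as follows. The text specifies only that each training label is changed to another label with probability p; drawing the replacement uniformly from the remaining classes (symmetric noise), the fixed random seed, and the name `inject_label_noise` are assumptions made for illustration.

```python
import random
from typing import List


def inject_label_noise(labels: List[int], num_classes: int, p: float,
                       seed: int = 0) -> List[int]:
    """Flip each label to a different class with probability p.

    Assumption: the replacement class is drawn uniformly from the
    num_classes - 1 other classes (symmetric label noise).
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    if num_classes < 2:
        raise ValueError("need at least two classes to flip a label")
    rng = random.Random(seed)
    noisy: List[int] = []
    for y in labels:
        if rng.random() < p:
            # Sample from all classes except y without rejection:
            # draw one of num_classes - 1 slots and skip over y.
            z = rng.randrange(num_classes - 1)
            noisy.append(z if z < y else z + 1)
        else:
            noisy.append(y)
    return noisy
```

Only the training labels would be passed through this function; the query set and retrieval database keep their original labels.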

Figure 7 shows the results of the four deep hashing methods on the ImageNet dataset as the label noise rate increases from 0% (the clean training set) to 50%. In both pruning and re-ranking, the hashing methods based on pairwise labels are more robust to label noise than those based on classification. The results in Figure 7 also indicate that BAH is more robust to noisy data than DCH.

Figure 7.

Comparison of BAH and competitors trained on the ImageNet dataset with noisy labels, panels (a), (b), and (c).

4.4. Ablation Study

We investigate three variants of BAH: (1) BAH+T follows a common practice in deep hashing methods by adding a tanh activation function after the hash layer and learning the hash codes with BAH; (2) BAH-C is the BAH variant without Equation (1); note that this term is only valid for multi-label datasets; (3) BAH-Q is the BAH variant without the loss in Equation (4).

As Figures 4–6 and Tables 2 and 3 show, BAH outperforms BAH+T by 5.08%, 4.45%, 8.42%, and 7.29% on average on the MS-COCO, NUS-WIDE, CIFAR-10, and ImageNet datasets, respectively. As previously stated, the tanh function has a saturated region, which is why BAH+T is far weaker than BAH at aggregating similar data. Note, however, that the re-ranking performance of BAH on MS-COCO is lower than that of BAH+T. Even so, BAH+T limits search efficiency, whereas BAH balances efficiency and accuracy, so BAH is more suitable for Hamming space retrieval across different application scenarios. Taking everything into account, BAH outperforms BAH-C on the MS-COCO and NUS-WIDE datasets. This shows that the term in Equation (1) leverages multi-label information and can effectively trade off accuracy and efficiency on multi-label datasets. BAH outperforms BAH-Q by large margins of 11.43%, 20.81%, 15.11%, and 10.42% on average on the MS-COCO, NUS-WIDE, CIFAR-10, and ImageNet datasets, respectively. This result validates that the loss in Equation (4) helps aggregate relevant data into the Hamming ball, which improves the pruning efficiency of BAH.
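The saturation argument can be checked numerically with the standard identity that the derivative of tanh(x) is 1 - tanh(x)^2. The sketch below illustrates this generic property of tanh rather than reproducing the BAH+T experiment; `tanh_grad` is a hypothetical helper name.

```python
import math


def tanh_grad(x: float) -> float:
    """Derivative of tanh at x, i.e. 1 - tanh(x)^2."""
    t = math.tanh(x)
    return 1.0 - t * t


if __name__ == "__main__":
    # As |x| grows, tanh(x) approaches +/-1 and its gradient collapses toward 0,
    # so pre-activations already in the saturated region receive little update.
    for x in (0.0, 1.0, 2.0, 3.0, 5.0):
        print(f"x={x:.1f}  tanh(x)={math.tanh(x):.4f}  grad={tanh_grad(x):.6f}")
```

Once pre-activations reach |x| of about 3, the gradient is below 1% of its value at the origin, which is consistent with the weaker aggregation of similar data observed for BAH+T.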

4.5. Hyper-Parameter Sensitivity

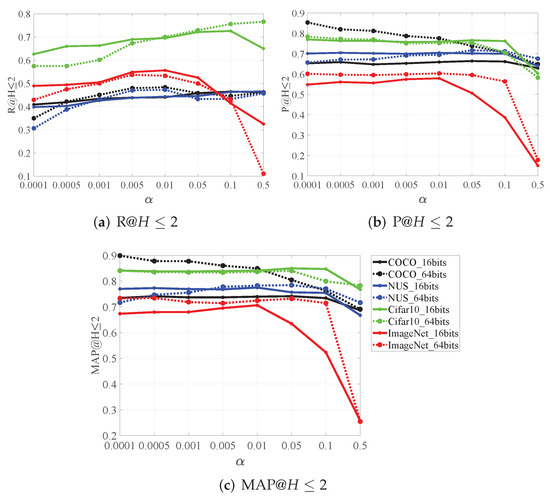

We conduct experiments to test the sensitivity of search performance to the hyper-parameter. As shown in Figure 8, the performance of BAH first increases rapidly as the hyper-parameter grows and then decreases. As the hyper-parameter increases, the real-valued outputs move continuously closer to the hash codes, which reduces the quantization error and improves pruning. To achieve both satisfactory precision and recall, we set the hyper-parameter to 0.01, 0.01, 0.05, and 0.01 for the four datasets, respectively.

Figure 8.

Sensitivity study for BAH using 16-bit and 64-bit codes on the four datasets: (a), (b), and (c) show the curves with respect to three different hyper-parameters for each dataset.
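The effect described in the sensitivity study, where a larger weight drives the real-valued outputs toward the binary codes, can be illustrated with a toy quantization term. Because the hyper-parameter's symbol is missing from this text, the sketch assumes it weights a penalty of the form lam * sum_i (u_i - sign(u_i))^2; `lam`, `descend`, and this penalty form are hypothetical stand-ins, not the paper's Equation (4).

```python
from typing import List


def sign(x: float) -> float:
    """Binarize a real value to +1 / -1 (zero maps to +1)."""
    return 1.0 if x >= 0 else -1.0


def quantization_error(u: List[float]) -> float:
    """Mean squared gap between real-valued outputs and their binary codes."""
    return sum((x - sign(x)) ** 2 for x in u) / len(u)


def descend(u: List[float], lam: float, lr: float = 0.1,
            steps: int = 10) -> List[float]:
    """Run plain gradient descent on lam * sum_i (u_i - sign(u_i))^2.

    sign(u_i) is treated as a constant target, so each step shrinks the
    gap u_i - sign(u_i) by the factor (1 - 2 * lr * lam); this stays in
    (0, 1) and never flips the sign while 0 < lr * lam < 0.5.
    """
    out = list(u)
    for _ in range(steps):
        out = [x - lr * lam * 2.0 * (x - sign(x)) for x in out]
    return out
```

The toy isolates the quantization term only, so it shows why the quantization error falls as `lam` grows but does not model why retrieval performance eventually declines at larger values.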

5. Conclusions

We propose Boundary-Aware Hashing (BAH) for efficient Hamming space retrieval; it consists of a boundary-aware contrastive loss and a quantization loss. The core idea of the boundary-aware contrastive loss is to penalize data inside and outside the Hamming ball discriminatively by introducing boundary information (i.e., the Hamming radius). It effectively pushes dissimilar pairs out of the Hamming ball and pulls similar pairs into it. Moreover, it mitigates the imbalanced data problem without up-weighting similar pairs or other optimization strategies. Extensive experiments on four benchmark datasets show that the proposed BAH outperforms state-of-the-art baselines.

In future work, we will study a boundary-aware probabilistic loss to perform Hamming space retrieval more effectively. By comparing and analyzing how metric-based and probability-based losses exploit boundary information, we aim to design more efficient hashing methods for Hamming space retrieval. In addition, we plan to apply the boundary-aware contrastive loss to cross-modal Hamming space retrieval to further verify the effectiveness of BAH.

Author Contributions

Conceptualization, W.H. and G.S.; Data curation, W.H. and Y.C.; Formal analysis, W.H., Y.C. and G.S.; Funding acquisition, L.W., G.S. and M.J.; Investigation, G.S. and M.J.; Methodology, W.H.; Project administration, G.S. and M.J.; Software, W.H. and Y.C.; Supervision, W.H., L.W., G.S. and M.J.; Validation, Y.C.; Visualization, W.H. and Y.C.; Writing – original draft, W.H.; Writing – review & editing, L.W., G.S. and M.J. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Beijing Postdoctoral Research Foundation: Q6042001202101.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this paper as no new data were created or analyzed in this study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhang, H.; Zhao, N.; Shang, X.; Luan, H.; Chua, T.S. Discrete image hashing using large weakly annotated photo collections. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016.

- Yan, X.; Ye, Y.; Mao, Y.; Yu, H. Shared-private information bottleneck method for cross-modal clustering. IEEE Access 2019, 7, 36045–36056.

- Gu, G.; Liu, J.; Li, Z.; Huo, W.; Zhao, Y. Joint learning based deep supervised hashing for large-scale image retrieval. Neurocomputing 2020, 385, 348–357.

- Jin, S.; Zhou, S.; Liu, Y.; Chen, C.; Sun, X.; Yao, H.; Hua, X.S. SSAH: Semi-supervised adversarial deep hashing with self-paced hard sample generation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11157–11164.

- Weng, Z.; Zhu, Y. Online Hashing with Efficient Updating of Binary Codes. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12354–12361.

- Ma, L.; Li, H.; Meng, F.; Wu, Q.; Ngan, K.N. Discriminative deep metric learning for asymmetric discrete hashing. Neurocomputing 2020, 380, 115–124.

- Cao, Y.; Long, M.; Liu, B.; Wang, J. Deep Cauchy hashing for Hamming space retrieval. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1229–1237.

- Norouzi, M.; Punjani, A.; Fleet, D.J. Fast search in Hamming space with multi-index hashing. In Proceedings of the CVPR, Providence, RI, USA, 16–21 June 2012; pp. 3108–3115.

- Kang, R.; Cao, Y.; Long, M.; Wang, J.; Yu, P.S. Maximum-margin Hamming hashing. In Proceedings of the ICCV, Seoul, Korea, 27 October–2 November 2019; pp. 8252–8261.

- Li, Q.; Sun, Z.; He, R.; Tan, T. Deep supervised discrete hashing. In Proceedings of the NeurIPS, Long Beach, CA, USA, 4–9 December 2017; pp. 2482–2491.

- Jiang, Q.Y.; Cui, X.; Li, W.J. Deep discrete supervised hashing. IEEE Trans. Image Process. 2018, 27, 5996–6009.

- Yan, C.; Pang, G.; Bai, X.; Shen, C.; Zhou, J.; Hancock, E. Deep Hashing by Discriminating Hard Examples. In Proceedings of the ACM MM, Nice, France, 21–25 October 2019; pp. 1535–1542.

- Xie, Y.; Liu, Y.; Wang, Y.; Gao, L.; Wang, P.; Zhou, K. Label-Attended Hashing for Multi-Label Image Retrieval. In Proceedings of the IJCAI, Yokohama, Japan, 11–17 July 2020; pp. 955–962.

- Hadsell, R.; Chopra, S.; LeCun, Y. Dimensionality reduction by learning an invariant mapping. In Proceedings of the CVPR, New York, NY, USA, 17–22 June 2006; Volume 2, pp. 1735–1742.

- Liu, H.; Wang, R.; Shan, S.; Chen, X. Deep supervised hashing for fast image retrieval. In Proceedings of the CVPR, Las Vegas, NV, USA, 27–30 June 2016; pp. 2064–2072.

- Lian, Z.; Li, Y.; Tao, J.; Huang, J. Speech emotion recognition via contrastive loss under Siamese networks. In Proceedings of the ASMMC-MMAC, Seoul, Korea, 26 October 2018; pp. 21–26.

- Choi, H.; Som, A.; Turaga, P. AMC-loss: Angular margin contrastive loss for improved explainability in image classification. In Proceedings of the CVPR, Virtual, 14–19 June 2020; pp. 838–839.

- Chen, Z.; Yuan, X.; Lu, J.; Tian, Q.; Zhou, J. Deep hashing via discrepancy minimization. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6838–6847.

- Zhang, Z.; Zou, Q.; Lin, Y.; Chen, L.; Wang, S. Improved deep hashing with soft pairwise similarity for multi-label image retrieval. IEEE Trans. Multimed. 2019, 22, 540–553.

- Fan, L.; Ng, K.W.; Ju, C.; Zhang, T.; Chan, C.S. Deep polarized network for supervised learning of accurate binary hashing codes. In Proceedings of the IJCAI, Yokohama, Japan, 11–17 July 2020; pp. 825–831.

- Wang, Z.; Zheng, Q.; Lu, J.; Zhou, J. Deep Hashing with Active Pairwise Supervision. In Proceedings of the ECCV, Glasgow, UK, 23–28 August 2020; pp. 522–538.

- Wang, J.; Zhang, T.; Sebe, N.; Shen, H.T. A survey on learning to hash. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 769–790.

- Zhu, H.; Long, M.; Wang, J.; Cao, Y. Deep hashing network for efficient similarity retrieval. In Proceedings of the AAAI, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

- Li, W.J.; Wang, S.; Kang, W.C. Feature learning based deep supervised hashing with pairwise labels. In Proceedings of the IJCAI, New York, NY, USA, 9–15 July 2016; pp. 1711–1717.

- Cao, Z.; Long, M.; Wang, J.; Yu, P.S. HashNet: Deep learning to hash by continuation. In Proceedings of the ICCV, Venice, Italy, 22–29 October 2017; pp. 5608–5617.

- Yuan, L.; Wang, T.; Zhang, X.; Tay, F.E.; Jie, Z.; Liu, W.; Feng, J. Central Similarity Quantization for Efficient Image and Video Retrieval. In Proceedings of the CVPR, Virtual, 14–19 June 2020; pp. 3083–3092.

- Cao, Y.; Liu, B.; Long, M.; Wang, J. Cross-modal Hamming hashing. In Proceedings of the ECCV, Munich, Germany, 8–14 September 2018; pp. 202–218.

- Shen, F.; Shen, C.; Liu, W.; Shen, H.T. Supervised discrete hashing. In Proceedings of the CVPR, Boston, MA, USA, 7–12 June 2015; pp. 37–45.

- Liong, V.E.; Lu, J.; Duan, L.Y.; Tan, Y.P. Deep Variational and Structural Hashing. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 580–595.

- Luo, X.; Zhang, P.F.; Huang, Z.; Nie, L.; Xu, X.S. Discrete hashing with multiple supervision. IEEE Trans. Image Process. 2019, 28, 2962–2975.

- Zhang, R.; Lin, L.; Zhang, R.; Zuo, W.; Zhang, L. Bit-scalable deep hashing with regularized similarity learning for image retrieval and person re-identification. IEEE Trans. Image Process. 2015, 24, 4766–4779.

- Lai, H.; Pan, Y.; Liu, Y.; Yan, S. Simultaneous feature learning and hash coding with deep neural networks. In Proceedings of the CVPR, Boston, MA, USA, 7–12 June 2015; pp. 3270–3278.

- Jiang, Q.Y.; Li, W.J. Asymmetric deep supervised hashing. In Proceedings of the AAAI, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Liu, H.; Wang, R.; Shan, S.; Chen, X. Learning multifunctional binary codes for both category and attribute oriented retrieval tasks. In Proceedings of the CVPR, Honolulu, HI, USA, 21–26 July 2017; pp. 3901–3910.

- Lin, M.; Ji, R.; Liu, H.; Sun, X.; Wu, Y.; Wu, Y. Towards optimal discrete online hashing with balanced similarity. In Proceedings of the AAAI, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8722–8729.

- Hu, W.; Wu, L.; Jian, M.; Chen, Y.; Yu, H. Cosine metric supervised deep hashing with balanced similarity. Neurocomputing 2021, 448, 94–105.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the ECCV, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Chua, T.S.; Tang, J.; Hong, R.; Li, H.; Luo, Z.; Zheng, Y. NUS-WIDE: A real-world web image database from National University of Singapore. In Proceedings of the ACM International Conference on Image and Video Retrieval (CIVR), Santa Barbara, CA, USA, 8–10 July 2009; pp. 1–9.

- Torralba, A.; Fergus, R.; Freeman, W.T. 80 million tiny images: A large data set for nonparametric object and scene recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 1958–1970.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet large scale visual recognition challenge. IJCV 2015, 115, 211–252.

- Yuan, X.; Ren, L.; Lu, J.; Zhou, J. Relaxation-free deep hashing via policy gradient. In Proceedings of the ECCV, Munich, Germany, 8–14 September 2018; pp. 134–150.

- Su, S.; Zhang, C.; Han, K.; Tian, Y. Greedy Hash: Towards fast optimization for accurate hash coding in CNN. In Proceedings of the NeurIPS, Montréal, QC, Canada, 3–8 December 2018; pp. 798–807.

- Zhang, T.; Whitehead, S.; Zhang, H.; Li, H.; Ellis, J.; Huang, L.; Liu, W.; Ji, H.; Chang, S.F. Improving event extraction via multimodal integration. In Proceedings of the ACM MM, Mountain View, CA, USA, 23–27 October 2017; pp. 270–278.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the NeurIPS, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- Goldberger, J.; Ben-Reuven, E. Training deep neural-networks using a noise adaptation layer. In Proceedings of the ICLR, Toulon, France, 24–26 April 2017.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).