1. Introduction

The influence of visual, proprioceptive, and vestibular systems on sound localization has been investigated in various studies [1,2,3,4,5,6,7,8], including a comprehensive review of the topic [2]. For instance, Wallach [7] reported that sound-source localization requires an interaction of auditory-spatial and head-position cues. His study revealed that the construct of auditory space is dependent upon self-motion; despite movement of the head, listeners can perceive a stable auditory environment and use it to accurately localize sounds [7,9].

These findings [1,2,3,4,5,6,7,8] demonstrate that sound localization is a multisensory integration process involving self-motion [10,11]. In fact, previous studies have demonstrated that a listener’s head/body movement facilitates sound localization by leveraging dynamic changes in the information input into the ears [10,11,12,13,14,15,16,17,18]. However, recent reports have shown that sound localization accuracy deteriorates during rotation of the listener’s head/body [19,20,21,22,23]. Thus, the effects of self-motion on sound localization accuracy remain a topic of debate.

In this context, it has been confirmed that the deterioration of sound localization accuracy is independent of the listener’s rotation velocity [21,22,23]. For instance, Honda et al. [23] reported that sound-localization resolution degrades with passive rotation irrespective of the rotation speed, even at speeds as low as 0.625°/s. Their results suggest that the deterioration of sound localization accuracy is almost independent of bottom-up effects, such as ear-input blurring. A more appropriate explanation for the deterioration is in terms of the listeners’ perception of self-motion.

The visual system plays a vital role in self-motion perception. For instance, visually induced self-motion perception can be generated by presenting large optic flow patterns to physically stationary observers [24]. This perceptual phenomenon is often referred to as vection. Several studies have investigated the effects of visually induced self-motion on sound localization. The results of Wallach’s classical study [7] suggest that vection leads to the same performance in sound-source localization for moving and stationary sounds as actual self-motion. Recently, Teramoto et al. [25] reported that the auditory space is distorted during visually induced self-motion in depth. They presented large-field visual motion to induce self-motion perception in participants and investigated whether this linear self-motion information can alter auditory space perception. While the participants experienced self-motion, a short noise burst was delivered from one of the spatially distributed loudspeakers on the coronal plane. The participants were asked to indicate the position of the sound (forward or backward) relative to their coronal plane. The results showed that the sound position aligned with the subjective coronal plane was significantly displaced in the direction of self-motion. These findings [7,25] indicate that self-motion information, irrespective of its origin, is crucial for auditory space perception.

If the perception of self-motion itself, induced by any information, is crucial for auditory space perception [7,25], sound localization accuracy would deteriorate not only during actual self-motion [19,20,21,22,23] but also in the event of self-motion perception. In visually induced self-motion, the input difference between the two ears remains constant because the listener does not perform any head/body movement. Therefore, if the deterioration is also observed during self-motion perception, the findings of Wallach [7] and Teramoto et al. [25] would support the idea that self-motion information is crucial for auditory space perception.

In this study, our main aim was to investigate in detail how self-motion information affects sound localization when listeners perceive rotational self-motion. Results of previous studies, including that by Wallach [18], have revealed that head movement can influence sound localization performance, and various studies have revealed a facilitation effect based on the listeners’ movement. Even if the self-motion is virtually presented in a similar manner to the experiment described by Wallach [7], the effect seems to be identical to the results under actual self-motion conditions. When the relative position of the sound source is changed, listeners have to process the dynamic change in the sound input to their ears, and we believe that this might result in increased cognitive load. Nevertheless, as mentioned above, the facilitation effect based on the listeners’ movement has been observed in various previous studies [10,11,12,13,14,15,16,17,18].

Why self-motion information facilitates sound localization performance is an interesting albeit unanswered question, considering that processing of dynamic multisensory information might increase the cognitive load. To answer this, it is important to investigate the effect of self-motion in further detail. To do this, we used a very short (30 ms) stimulus and investigated the effect of self-motion. Notably, our former studies [21,22,23] with 30 ms long stimuli suggest that when listeners perceive that they are moving, a kind of top-down process could degrade sound localization performance. In contrast, the stimuli used in the studies in which the facilitation effect was observed were much longer; for example, in the study by Wallach [7], music was used as the stimulus.

To better understand the mechanism of sound localization, further studies are required to evaluate the effects of listeners’ perception of self-motion on sound localization accuracy. However, only a few studies have examined the effects of visually induced self-motion on sound localization accuracy with short sound stimuli. Hence, we investigated the effects of visually induced self-motion on the ability of listeners to identify their subjective midline orientation. This study may be helpful in designing efficient dynamic binaural displays for virtual reality (VR) applications.

2. Materials and Methods

Eleven young adults (eight males and three females; average age 22.5 years) with normal hearing were recruited for the experiments, which were performed in a dark anechoic room. Audiograms confirmed that no participant had a hearing loss exceeding 20 dB from 125 Hz to 8 kHz. This study was approved by the Ethics Committee of the Research Institute of Electrical Communication at Tohoku University.

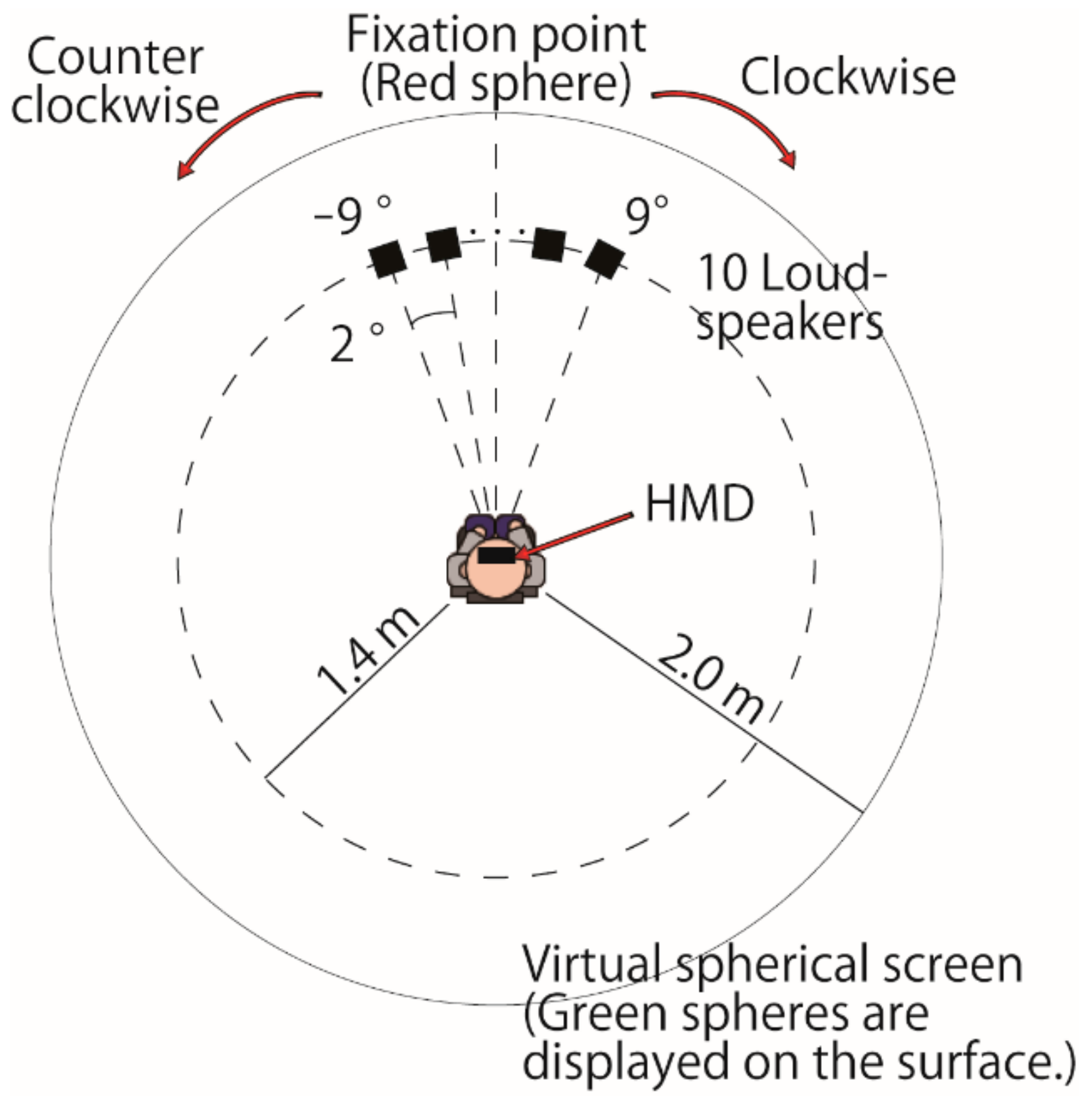

Figure 1 shows a diagram of the experimental setup. All auditory stimuli were generated using a circular array (radius = 1.4 m; range = ±9.0°) of ten 30 mm loudspeakers (AuraSound NSW1-205-8A) separated by an angular spacing of 2.0°. The loudspeakers were placed horizontally at a height almost level with the participant’s eyes. The participants were seated on chairs facing the 0° direction in the horizontal plane and were asked not to move their heads during the experiment.
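The array geometry described above can be sketched as follows. This is an illustrative calculation rather than the authors' code. It assumes the ten loudspeakers sit at −9°, −7°, …, +9° (the placement consistent with ten units, 2.0° spacing, and a ±9.0° range) and that positive azimuths lie to the participant's right, a sign convention the text does not state.

```python
import math

RADIUS_M = 1.4      # array radius, from the text
N_SPEAKERS = 10     # number of loudspeakers, from the text
SPACING_DEG = 2.0   # angular spacing, from the text


def speaker_azimuths(n=N_SPEAKERS, spacing=SPACING_DEG):
    """Azimuths in degrees of n speakers centred on 0 deg (straight ahead)."""
    half_span = spacing * (n - 1) / 2.0
    return [-half_span + i * spacing for i in range(n)]


def speaker_xy(azimuth_deg, radius=RADIUS_M):
    """Cartesian position in metres: x to the right (assumed), y straight ahead."""
    a = math.radians(azimuth_deg)
    return (radius * math.sin(a), radius * math.cos(a))


azimuths = speaker_azimuths()
positions = [speaker_xy(a) for a in azimuths]
# Arc length between neighbouring loudspeakers, in metres.
arc_spacing_m = RADIUS_M * math.radians(SPACING_DEG)
```

Under these assumptions, neighbouring loudspeakers are about 4.9 cm apart along the arc, which leaves room for the 30 mm drivers.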

Two conditions were considered during the experiments: one with a visually induced perception of self-motion (vection condition) and the other without vection (control condition). Under both conditions, the participants were asked to judge the azimuthal direction (left or right) of the sound image relative to their subjective midline orientation, i.e., the two-alternative forced-choice (2 AFC) approach. The subjective midline was measured as its intersection, in front of the participant, with the horizontal plane in which the loudspeaker array was installed. The acoustic stimulus was a short pink noise burst. Each stimulus lasted 30 ms, including rise and decay times of 5 ms each. The A-weighted sound pressure level of the pink noise when presented steadily was set to 65 dB. The location of the acoustic stimulus was selected using the randomized maximum-likelihood adaptation method [26].
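A burst with the timing described above could be synthesized roughly as follows. This is a minimal sketch under stated assumptions, not the authors' procedure. The sampling rate (48 kHz), the linear ramp shape, and the Voss-McCartney approximation of pink noise are all choices made here for illustration, since the text specifies only the 30 ms duration and the 5 ms rise and decay times. Calibration to 65 dB (A-weighted) depends on the playback chain and is not modelled.

```python
import random

FS = 48_000          # sampling rate in Hz (assumed; not given in the text)
DURATION_S = 0.030   # total burst duration, from the text
RAMP_S = 0.005       # rise time and decay time, from the text


def pink_noise(n_samples, n_rows=12, rng=None):
    """Approximate 1/f noise with the Voss-McCartney algorithm (assumed method)."""
    rng = rng or random.Random()
    rows = [rng.uniform(-1.0, 1.0) for _ in range(n_rows)]
    out = []
    for n in range(1, n_samples + 1):
        # Row k is refreshed whenever n has exactly k trailing zero bits,
        # so higher rows change more slowly and add low-frequency energy.
        k = (n & -n).bit_length() - 1
        if k < n_rows:
            rows[k] = rng.uniform(-1.0, 1.0)
        out.append(sum(rows) / n_rows)
    return out


def linear_envelope(n_samples, n_ramp):
    """Gain rising linearly from 0 over n_ramp samples and falling symmetrically."""
    env = [1.0] * n_samples
    for i in range(n_ramp):
        gain = i / n_ramp
        env[i] = gain
        env[n_samples - 1 - i] = gain
    return env


def make_burst(fs=FS, duration=DURATION_S, ramp=RAMP_S, seed=0):
    """Return one enveloped noise burst as a list of samples in [-1, 1]."""
    n = round(fs * duration)
    n_ramp = round(fs * ramp)
    noise = pink_noise(n, rng=random.Random(seed))
    env = linear_envelope(n, n_ramp)
    return [x * g for x, g in zip(noise, env)]


burst = make_burst()
```

A raised-cosine ramp would be an equally plausible choice; only `linear_envelope` would need to change.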

The participants wore a VR head-mounted display (HMD) (Oculus Rift CV1) and held an Oculus Rift controller and a switch button (FS3-P USB Triple Foot Switch Pedal) with their hands. Head motion was sensed via the HMD during the experiment. All visual stimuli were presented via the HMD. Even if the participants moved their heads slightly during the experiment, the visual stimuli were corrected to present a stable virtual environment according to the sensed head motion.

A black spherical space (radius, 2 m) was simulated around the participants in the virtual environment. At the beginning of the experiment, a red sphere with a radius of 5 cm was presented in the spherical space (visual angle of the sphere, 1.43°). The red sphere was presented in front of the participant, i.e., 0° in the horizontal and vertical planes. The participants were instructed to keep their eyes and head pointed in the sphere’s direction and remain still until each trial was complete. After 3 s, the red sphere disappeared, and 100,000 green spheres were presented to the participants by populating the virtual environment. The radius of each sphere was 5 cm (visual angle of the sphere, 1.43°). The positions of the green spheres were randomized in each trial.

In the vection condition, the green spheres revolved horizontally around the center of the participant’s head at a speed of 5°/s. Either counterclockwise or clockwise motion was used in any given trial, and the direction of motion was randomly selected with equal probability. The participants were instructed to press the switch button if they perceived self-motion. Immediately after the participants pressed the button, the acoustic stimulus was deployed from one loudspeaker located within ±9.0° of the participants’ subjective midline orientation. The participants were asked to determine the direction of the sound image. Four sessions were conducted for each participant, resulting in 200 trials (2 motion directions × 100 trials).
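The revolving optic flow in the vection condition amounts to rotating every sphere position about the vertical axis through the head center by a small angle on each frame. The sketch below illustrates this. It is not the authors' rendering code, and it assumes a 90 Hz frame rate, a y-up coordinate system, and a sign convention for the direction of rotation, none of which are given in the text.

```python
import math
import random

SPEED_DEG_S = 5.0      # rotation speed, from the text
FRAME_RATE_HZ = 90.0   # display frame rate (assumed)


def rotate_about_vertical(point, angle_deg):
    """Rotate an (x, y, z) point about the vertical y axis by angle_deg."""
    x, y, z = point
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return (c * x + s * z, y, -s * x + c * z)


def step(points, direction, dt=1.0 / FRAME_RATE_HZ):
    """Advance all sphere centers by one frame; direction is +1 or -1."""
    angle = direction * SPEED_DEG_S * dt
    return [rotate_about_vertical(p, angle) for p in points]


# The direction is chosen with equal probability on each trial, as in the text.
rng = random.Random(0)
direction = rng.choice((-1, +1))
```

Because the rotation is about the head center, each sphere keeps its distance from the observer, so only its azimuth changes over time.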

In the control condition, the green spheres did not move. The participants were instructed to press the switch button if they perceived the spheres. Immediately after the participants pressed the button, the acoustic stimulus was deployed from one loudspeaker located within ±9.0° of the participants’ subjective midline orientation. The participants were asked to identify the direction of the sound image. Two sessions were conducted for each participant, resulting in 100 trials.

3. Results

The total numbers of left (0) and right (1) responses under each condition were recorded. First, to calculate the angle of the subjective midline and the just noticeable difference (JND), we plotted the correct-answer rate as a function of the angular distance between the loudspeaker position and the physical straight-ahead orientation of the participant. The normal cumulative distribution function was then fitted to the plots as a psychometric model function, using maximum-likelihood estimation [27].
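A maximum-likelihood fit of a cumulative normal to left/right responses can be sketched as follows. This is a simplified illustration, not the procedure of [27]. It models the proportion of "right" responses at each loudspeaker angle as binomial, and it uses a coarse-to-fine grid search in place of a dedicated optimizer. The function names and the synthetic data are hypothetical.

```python
import math
from statistics import NormalDist


def neg_log_likelihood(mu, sigma, angles, n_right, n_total):
    """Binomial negative log-likelihood of a cumulative-normal psychometric function."""
    dist = NormalDist(mu, sigma)
    nll = 0.0
    for x, k, n in zip(angles, n_right, n_total):
        # Clip probabilities so that log() stays finite at the extremes.
        p = min(max(dist.cdf(x), 1e-9), 1.0 - 1e-9)
        nll -= k * math.log(p) + (n - k) * math.log(1.0 - p)
    return nll


def fit_psychometric(angles, n_right, n_total, passes=6, steps=40):
    """Coarse-to-fine grid search for the ML estimates of (mu, sigma)."""
    mu_lo, mu_hi = min(angles), max(angles)
    sg_lo, sg_hi = 0.1, 2.0 * (max(angles) - min(angles))
    best = None
    for _ in range(passes):
        grid_mu = [mu_lo + i * (mu_hi - mu_lo) / steps for i in range(steps + 1)]
        grid_sg = [sg_lo + i * (sg_hi - sg_lo) / steps for i in range(steps + 1)]
        best = min(
            (neg_log_likelihood(m, s, angles, n_right, n_total), m, s)
            for m in grid_mu
            for s in grid_sg
        )
        _, m, s = best
        # Shrink the search window around the current best grid point.
        dm, ds = (mu_hi - mu_lo) / 10.0, (sg_hi - sg_lo) / 10.0
        mu_lo, mu_hi = m - dm, m + dm
        sg_lo, sg_hi = max(1e-3, s - ds), s + ds
    return best[1], best[2]


# Synthetic illustration only (not data from the study).
angles = [-9 + 2 * i for i in range(10)]
n_total = [1000] * len(angles)
truth = NormalDist(-2.0, 2.5)
n_right = [round(n * truth.cdf(x)) for x, n in zip(angles, n_total)]
mu_hat, sigma_hat = fit_psychometric(angles, n_right, n_total)
```

In this formulation the fitted mean `mu_hat` plays the role of the subjective midline, and the fitted standard deviation `sigma_hat` determines the JND.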

The point of subjective equality was calculated as the 50% point of the psychometric function, i.e., the estimated mean represents the subjective midline. Moreover, the JND of the subjective midline, defined as the detection threshold yielding 75% correct 2 AFC responses, was estimated as follows. Because the normal cumulative distribution function was used in the estimation, the fitted function reaches a cumulative probability of 0.75 at a distance of +0.6745 times the standard deviation (σ) from the mean. Thus, 0.6745σ is an approximate representation of the JND of the angle of the subjective midline. One participant was excluded from the analysis because reasonable psychometric functions could not be estimated.
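The factor 0.6745 follows from the inverse of the standard normal cumulative distribution function. As a short worked derivation, write the fitted psychometric function as $P(x)$ with mean $\mu$ and standard deviation $\sigma$, where $\Phi$ denotes the standard normal cumulative distribution function (these symbols are introduced here for illustration):

```latex
\begin{align}
P(x) &= \Phi\!\left(\frac{x-\mu}{\sigma}\right), \qquad P(\mu) = 0.5, \\
P(\mu + \mathrm{JND}) &= 0.75
\;\Longrightarrow\;
\mathrm{JND} = \sigma\,\Phi^{-1}(0.75) \approx 0.6745\,\sigma .
\end{align}
```

For example, a JND of 1.06° corresponds to a fitted standard deviation of about 1.57°.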

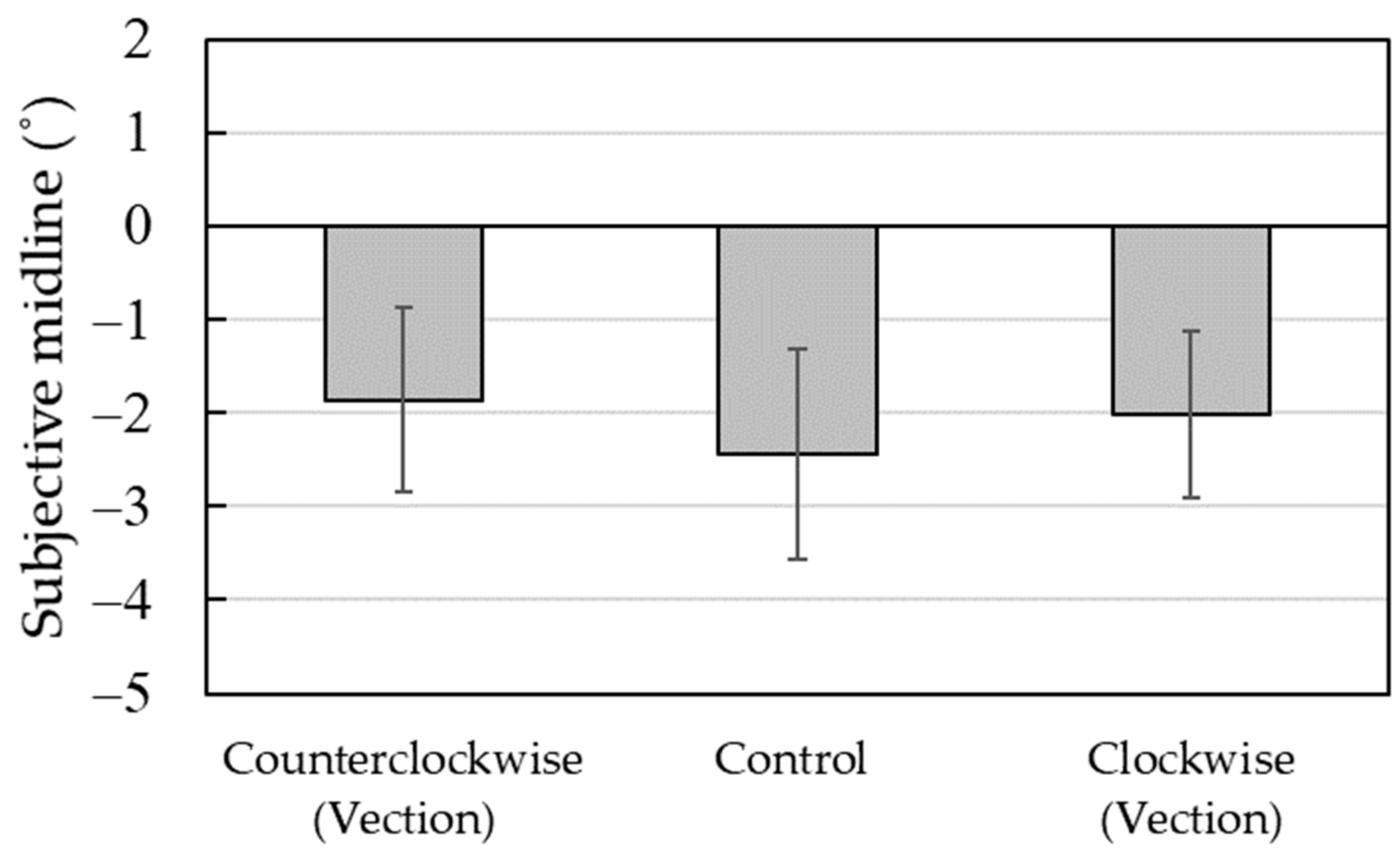

Figure 2 shows the average of the subjective midline and standard errors. A one-way repeated-measures analysis of variance (ANOVA) was performed on the subjective midline with the experimental condition as a factor. Three experimental conditions were considered: counterclockwise, control, and clockwise. The main effect of the experimental condition (counterclockwise, M = −1.86; control, M = −2.44; clockwise, M = −2.03) was not significant (F(2, 18) = 0.51, n.s., η² = 0.05).

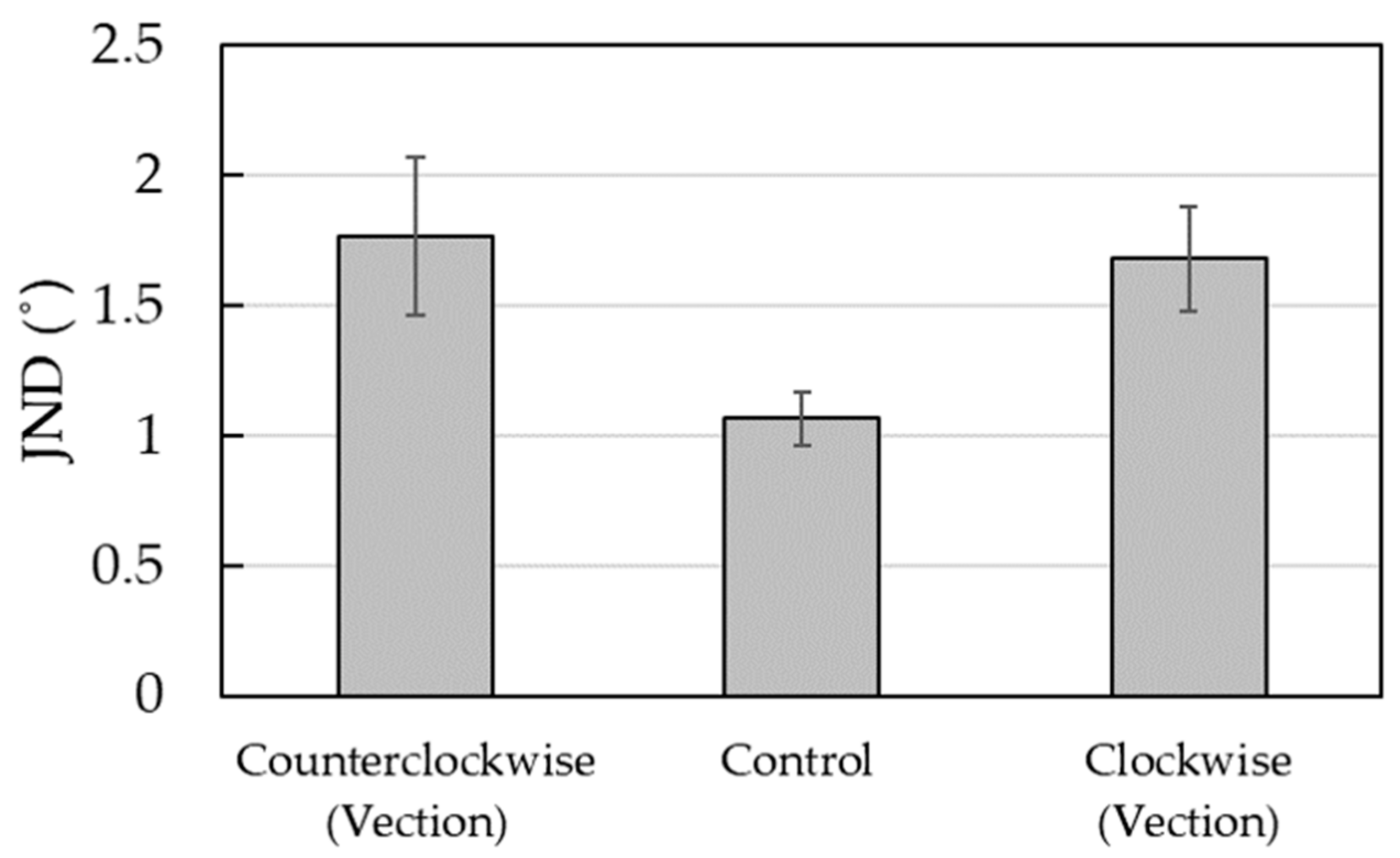

Figure 3 shows the average JNDs and standard errors. A one-way repeated-measures ANOVA was performed on the JNDs with the experimental condition as a factor. As in the case of the subjective midline, three experimental conditions were considered. The results showed that the main effect of the experimental condition was significant (F(2, 18) = 5.50, p < 0.05, η² = 0.38), and multiple comparison analysis (Bonferroni’s method, p < 0.05) [28] revealed that the JND was smaller for the control condition (M = 1.06) than for the vection conditions (counterclockwise, M = 1.77; clockwise, M = 1.68). No significant difference was noted between the counterclockwise and clockwise conditions.
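The reported degrees of freedom and effect sizes can be cross-checked with a few lines of arithmetic. The sketch below assumes ten participants entered the analysis (eleven recruited, one excluded) and that the reported η² is partial eta squared, which the text does not state explicitly. The function names are hypothetical.

```python
def rm_anova_df(n_participants, n_conditions):
    """Degrees of freedom for a one-way repeated-measures ANOVA."""
    df_effect = n_conditions - 1
    df_error = (n_conditions - 1) * (n_participants - 1)
    return df_effect, df_error


def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its df."""
    return f_value * df_effect / (f_value * df_effect + df_error)


df_effect, df_error = rm_anova_df(n_participants=10, n_conditions=3)
eta_midline = partial_eta_squared(0.51, df_effect, df_error)
eta_jnd = partial_eta_squared(5.50, df_effect, df_error)
```

Under these assumptions the degrees of freedom come out as (2, 18), and the two effect sizes round to 0.05 and 0.38, matching the values reported for the subjective midline and the JND, respectively.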

4. Discussion

We examined the effects of visually induced self-motion on the ability of listeners to determine their subjective midline orientation accurately. If self-motion information is crucial for auditory space perception [7,25], the deterioration of sound localization accuracy can be observed in the case of self-motion perception, even if the motion is not physically induced. Our results showed that, in terms of detection thresholds of the subjective midline, the sound localization accuracy was lower under the vection condition than under the control condition. Thus, the results indicate that sound localization accuracy deteriorates under visually induced self-motion perception. These findings support the idea that self-motion information is crucial for auditory space perception [7,25].

As mentioned before, under the visually induced self-motion, no change was observed in the auditory input difference between the two ears because the listener did not perform any head/body movement. Therefore, our results did not support the assumption that the deterioration of sound localization accuracy is attributable to bottom-up effects, such as ear-input blurring. Previous studies have provided evidence of shared neurophysiological substrates for visual and vestibular processing on self-motion perception [29,30,31]. Accordingly, a more appropriate explanation could be that the deterioration of sound localization accuracy depends on self-motion information, irrespective of the origin of this information.

Curiously, we also observed a slight leftward bias in the subjective midline. Specifically, there seemed to be a bias toward the left by approximately two degrees. It is difficult to ascertain the reason underlying the leftward bias. Notably, however, Abekawa et al. [32] obtained similar results. In their study [32], participants were asked to judge whether visual stimuli were located toward the left or right of their body’s midline. Artificial vestibular stimulation was applied to stimulate vestibular organs. They found that artificial stimulation of the vestibular system biased the perception of the body’s midline. Furthermore, they also reported a slight but not significant leftward bias in body-midline localization without vestibular stimulation [32].

We found that the counterclockwise and clockwise conditions did not bias the auditory detection in opposite directions. Our results did not correspond with those of Abekawa et al. [32] and are not consistent with the findings of previous studies that investigated the effects of visually induced self-motion on sound localization accuracy [7,25]. In the vection condition of the present study, the participants were instructed to press the switch button if they perceived self-motion. Immediately after the participants pressed the button, the acoustic stimulus was deployed from one loudspeaker and the participants were asked to determine the direction of the sound image. Therefore, it is possible that the self-motion information presented to the listeners was shorter and more limited than that in previous studies [7,25] because the participants had not experienced the vection for a certain period.

Our results showed that in terms of detection thresholds based on the subjective midline, the sound localization accuracy was lower under the vection condition than under the control condition. The statistical differences between the vection and non-vection conditions were significant, even though they may seem small. Mills [33] reported that azimuthal sound localization in humans is most accurate in the straight-ahead orientation. The small difference may be due to the fact that we measured the sound localization accuracy of the listener’s midline. However, we believe that this difference is worth noting. It is well known that self-motion facilitates sound localization performance. Nevertheless, our results indicate that although the difference was small, the accuracy of sound localization did not improve, but rather degraded.

This study shows that sound localization is compromised by self-motion perception under vection and that self-motion perception is induced only by visual, i.e., crossmodal information. This could mean that the perception “I am moving” affects sound localization, based on the sensory modality of the information input. Such a top-down effect is related to crossmodal effects or simultaneous multimodal stimulation [34,35,36,37]. Such phenomena have been reported previously for visual–auditory [34,35], visual–tactile [36], and visual–vestibular [37] interactions. For instance, it has been reported that adaptation to optic flow leads to subsequent nonvisual self-motion perception [37]. Our present study will help further the understanding regarding the influence of the visual, proprioceptive, and vestibular systems on sound localization.

This study has several limitations. Our sample size was small, and the differences in JNDs between the three conditions were not large. We did not manipulate the strength of the self-motion and did not examine the relationship between sound localization accuracy and vection strength. Future studies should consider addressing these questions. Nevertheless, our findings demonstrate that sound localization accuracy deteriorates while listeners perceive visually induced self-motion without physical movement. This observation may be helpful in designing efficient VR applications, including dynamic binaural displays, as fewer computational resources can be assigned while listeners are in motion or perceive self-motion.