Information, Thermodynamics and Life: A Narrative Review

Abstract

Featured Application

1. Introduction

1.1. The Basic Principles of Information

1.2. Shannon’s Information

Entropy in Shannon’s Information

1.3. Quantum Information

1.4. Information and Thermodynamics

2. Information and Life

2.1. The Old Problem of Maxwell’s “Demon”

2.2. Information in the Cellular Context

2.3. The Information Flow

2.4. Information and Genome

3. Applications “after” Information: To the Process of Learning

3.1. Learning and Its Application in Information-Driven, Complex Artificial Life

3.2. Information Theoretical Concepts in Artificial Life

3.2.1. Predictive Information (PI)

3.2.2. Predictive Information and Dynamical Systems

3.3. Neuronal Systems as Forms of Artificial Life

3.3.1. Neuro-Robotic Systems

3.3.2. A Closed-Loop Brain/Machine Interface (BMI) for Estimating Neural Dynamics

3.3.3. Recurrent Dynamics in a Neuro-Artificial System

3.4. Challenges in Neuro-Artificial Systems

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

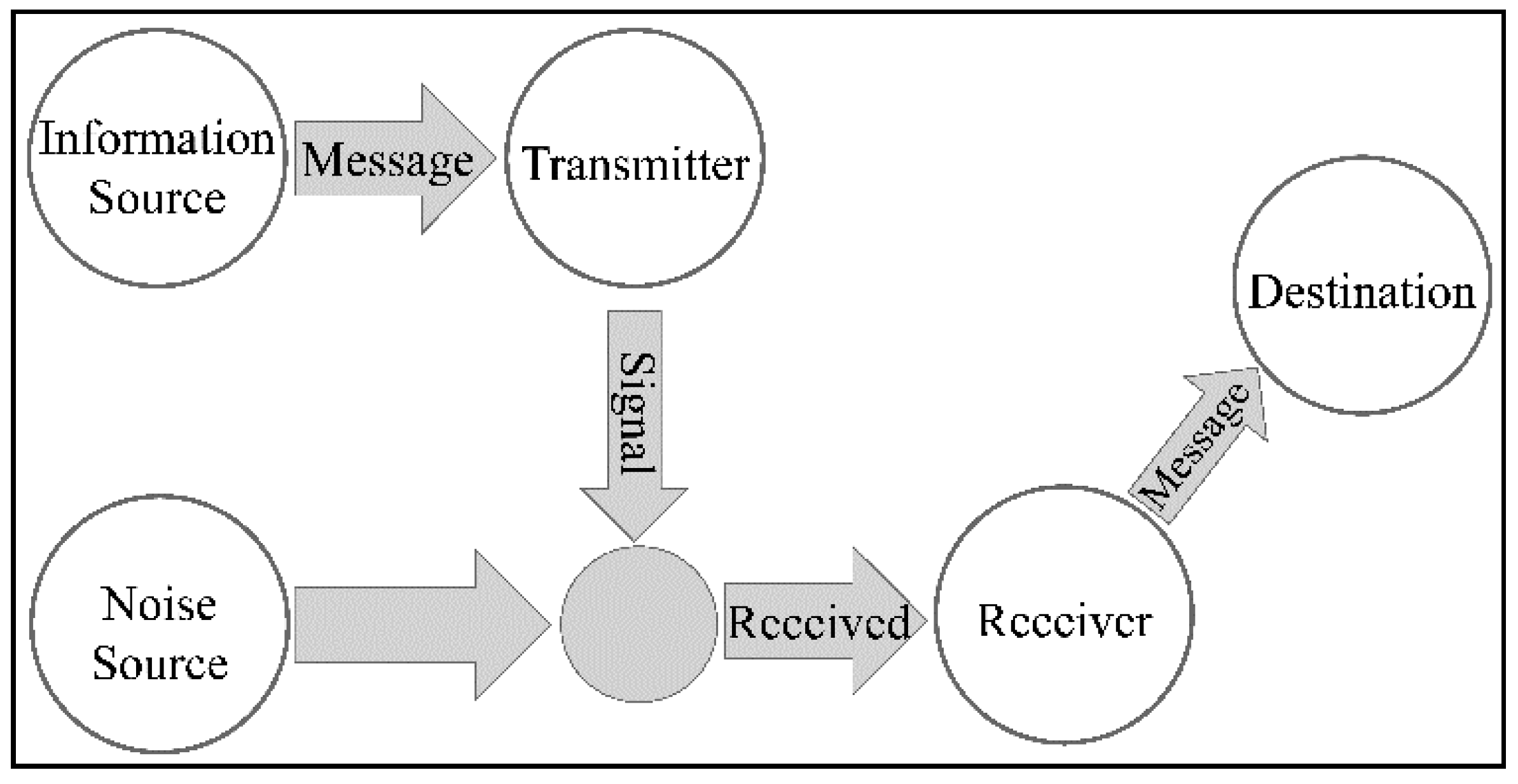

| Stage | Component | Description |
|---|---|---|
| 1 | The message | Contains the information itself |
| 2 | The transmitter (source) | The sender of the message |
| 3 | The encoder | Represents the message as a sequence of bits (or other symbols) according to a rule |
| 4 | The channel | The conduit for the information |
| 5 | The decoder | Reverses the encoding process and presents the message in a format understandable to the recipient |
| 6 | The receiver (destination) | The recipient and the signifier of the message |
| 7 | The noise source | The environment of the system, a factor that is beyond our control. It is a cause of information degradation and is believed to interfere with the information as it spreads through the transmission channel. |
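The stages in the table can be illustrated with a small simulation. The following is a minimal sketch, not the authors' method: it assumes a binary symmetric channel as the noise model and a three-fold repetition code as the encoding rule, and the function names (`encode`, `channel`, `decode`, `transmit`) and the `flip_prob`, `repeat`, and `seed` parameters are hypothetical choices made for illustration.

```python
import random


def encode(message: str, repeat: int = 3) -> list[int]:
    """Encoder (stage 3): UTF-8 bytes to bits, MSB first, each bit repeated."""
    bits = []
    for byte in message.encode("utf-8"):
        for shift in range(7, -1, -1):
            bits.extend([(byte >> shift) & 1] * repeat)
    return bits


def channel(bits: list[int], flip_prob: float, rng: random.Random) -> list[int]:
    """Channel and noise source (stages 4 and 7).

    Assumed noise model: each bit is flipped independently with
    probability flip_prob (a binary symmetric channel).
    """
    return [b ^ 1 if rng.random() < flip_prob else b for b in bits]


def decode(bits: list[int], repeat: int = 3) -> str:
    """Decoder (stage 5): majority vote per group of bits, then bits to text."""
    voted = [
        1 if sum(bits[i:i + repeat]) * 2 > repeat else 0
        for i in range(0, len(bits), repeat)
    ]
    data = bytearray()
    for i in range(0, len(voted), 8):
        byte = 0
        for bit in voted[i:i + 8]:
            byte = (byte << 1) | bit
        data.append(byte)
    # Undecodable byte sequences are replaced rather than raising an error.
    return data.decode("utf-8", errors="replace")


def transmit(message: str, flip_prob: float, seed: int = 0, repeat: int = 3) -> str:
    """Source to destination (stages 1, 2 and 6): send a message end to end."""
    rng = random.Random(seed)
    received_bits = channel(encode(message, repeat), flip_prob, rng)
    return decode(received_bits, repeat)


if __name__ == "__main__":
    for p in (0.0, 0.05, 0.3):
        print(p, repr(transmit("information and life", p)))
```

With `flip_prob` set to zero the receiver recovers the message exactly. As the flip probability grows, the majority vote corrects fewer errors and the received text degrades, which is the information degradation the table attributes to the noise source.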

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lambrou, G.I.; Zaravinos, A.; Ioannidou, P.; Koutsouris, D. Information, Thermodynamics and Life: A Narrative Review. Appl. Sci. 2021, 11, 3897. https://doi.org/10.3390/app11093897