1. Introduction

The use of commercial unmanned aerial vehicles (UAVs) has been increasing in recent years [1]. The identification and detection of UAVs are important for the security of airports, military zones, and private areas, and for the protection of human life and privacy [2]. UAVs are classified as either fixed-wing unmanned aerial vehicles (FW-UAVs) or rotary-wing unmanned aerial vehicles (RW-UAVs). FW-UAVs are both harder to pilot and more costly than RW-UAVs. In addition, FW-UAVs require a runway for take-off and landing, whereas RW-UAVs can take off and land in an area of 1 m². As a result of these advantages, RW-UAVs are more commonly used and outnumber FW-UAVs [1]. For these reasons, it is important to detect RW-UAVs with easily accessible sensors, such as cameras, for privacy and security.

Several RW-UAV detection and identification studies can be found in the literature. Radar, voice recognition, and image recognition-based methods or hybrid systems [

3] have been used for detection and identification purposes. The above-mentioned radar, voice, and image-based UAV detection methods utilize antenna, microphone array, and camera hardware, respectively. Machine learning (ML) methods are among the most frequently used methods to classify UAVs with sensor fusion data. Image classification methods with artificial intelligence (AI) are used in UAV classification studies [

3]. Image classification methods with AI consist of a series of processes such as data collection, data preparation and labeling, training, and testing [

4]. The data type for training ML models in image classification problems is image. Data collection and data preparation are accomplished by taking photos of the relevant class or by collecting and screening from the Internet; later, they are labeled individually by hand [

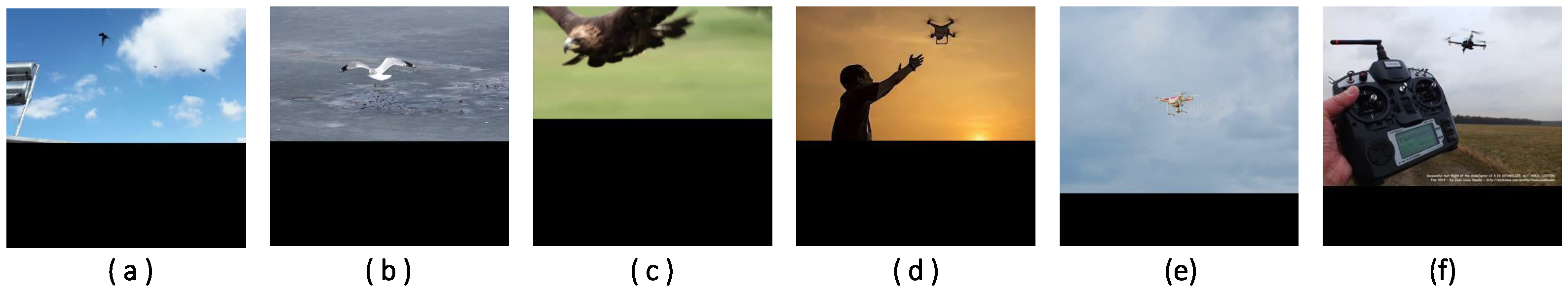

5]. The process of collecting the data, such as images, radar fingerprints, or voice fingerprints, that are required for ML model training is labor-intensive and time-consuming. In the RW-UAV detection process, it is important to identify birds that are likely to be in the sky, as well to avoid any confusion in application. Therefore, a labeled dataset should consist of the image classes of birds and RW-UAVs to develop a successful ML identification model that can classify birds and RW-UAVs. The methods used to create a dataset include ready-made datasets such as IMAGENET [

6] and CIFAR10 [

7], or photographing objects at different angles for classes without datasets.

Gathering data and arranging them into the form required by the ML model is called data collection and preparation, a step that precedes training. The model is then trained on the dataset of the specified classes, and its accuracy is measured during testing. Data collection and preparation are labor-intensive and create a significant workload that must be repeated for each new class the ML model is trained on [8]. More recently, image classification has become more successful with the development of deep learning (DL)-based ML models. Classical ML techniques include operations such as feature extraction and selection, which are performed manually; in DL-based ML models, these steps are automated [9]. This automation can be considered an advantage of DL networks; however, it comes at the cost that DL networks require more training data [10]. The most widely used method in DL-based image classification studies is the convolutional neural network (CNN). CNNs are a rapidly developing technology and are becoming an increasingly widespread method for classifying image data [11]. The best-known image classification competition in recent years is the IMAGENET Large Scale Visual Recognition Challenge (ILSVRC), in which the highest-ranked participants used CNN-based methods [6]. CNNs consist of two core structures, the convolution and pooling layers. A convolution layer produces feature maps by applying sets of learned weights (filters) to its input, and its output is passed through a nonlinear activation function such as the rectified linear unit (ReLU); the pooling layers then reduce the spatial size of these feature maps.
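The convolution, activation, and pooling steps described above can be sketched in a few lines of NumPy. This is a minimal, hypothetical illustration rather than the architecture used in this study: the 2 × 2 filter values, the 2 × 2 max-pooling window, and the function names (`conv2d_valid`, `relu`, `max_pool2d`, `conv_block`) are assumptions, and in a real CNN the filter weights are learned during training and computed with optimized library kernels.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray, bias: float = 0.0) -> np.ndarray:
    """Slide one filter over a 2D image ('valid' padding, stride 1).

    As in most DL libraries, this is cross-correlation (the kernel is not flipped).
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w), dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the local patch covered by the filter.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel) + bias
    return out


def relu(x: np.ndarray) -> np.ndarray:
    """Rectified linear unit: negative responses are set to zero."""
    return np.maximum(x, 0.0)


def max_pool2d(x: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling; trailing rows/columns that do not fill a window are dropped."""
    h = x.shape[0] // size * size
    w = x.shape[1] // size * size
    blocks = x[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))


def conv_block(image: np.ndarray, kernels) -> np.ndarray:
    """One convolution + ReLU + pooling stage, producing one feature map per filter."""
    return np.stack([max_pool2d(relu(conv2d_valid(image, k))) for k in kernels])


if __name__ == "__main__":
    # Toy 6x6 image with a vertical edge between columns 2 and 3.
    image = np.zeros((6, 6))
    image[:, 3:] = 1.0
    vertical_edge = np.array([[-1.0, 1.0], [-1.0, 1.0]])  # hypothetical filter
    print(conv_block(image, [vertical_edge]))
```

With this vertical-edge filter, the edge in the toy image appears as a column of strong responses that survives ReLU and pooling; negating the filter gives negative responses, which ReLU sets to zero.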

Networks frequently used in CNN-based image classification studies include the AlexNet, Visual Geometry Group Network (VGGNet), and SqueezeNet architectures. Some studies have achieved RW-UAV and bird image classification using DL-based architectures [12]. The image data used for training and testing in these studies consist of real photographs taken manually. In the general approach to AI model training, real photographs of objects belonging to each class must be collected. The disadvantage of AI studies that use real photographs is that collecting real data is a difficult and labor-intensive task. In addition, photographing real objects can be difficult owing to outdoor conditions such as altitude, hazardous areas, and adverse weather. Considering the importance of the diversity and amount of data in AI training and the difficulty of creating a dataset manually, the following research questions need to be addressed: “Can the artificial intelligence training-image dataset be synthetically produced as needed?” and “Can an artificial intelligence model trained with synthetic data be used to classify a real-image dataset?”

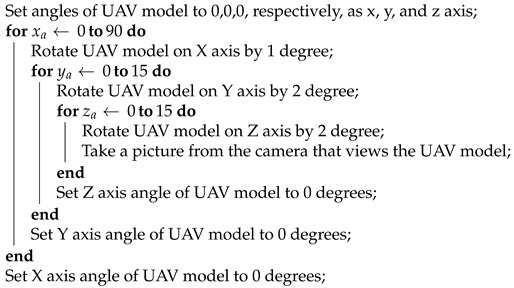

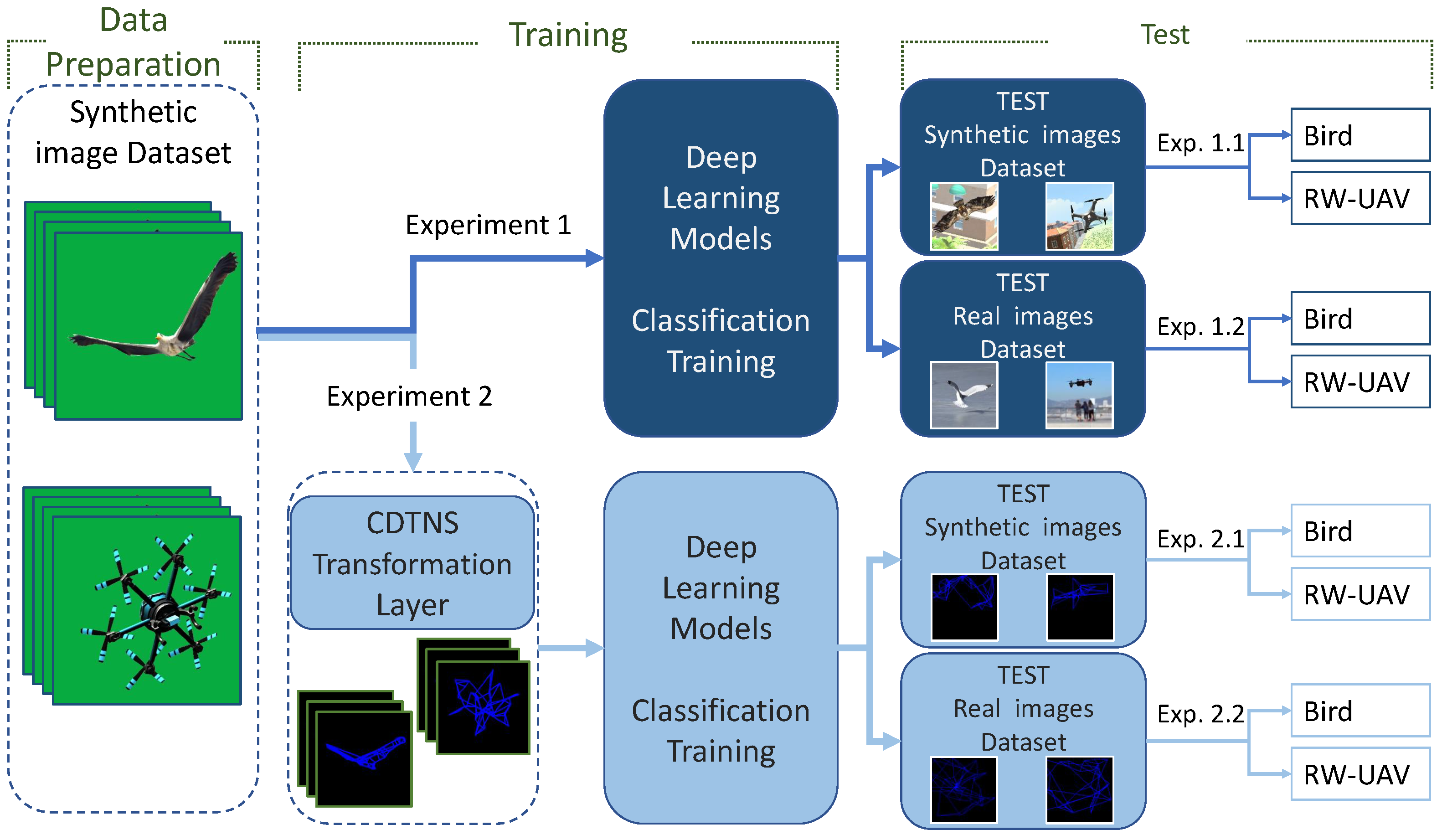

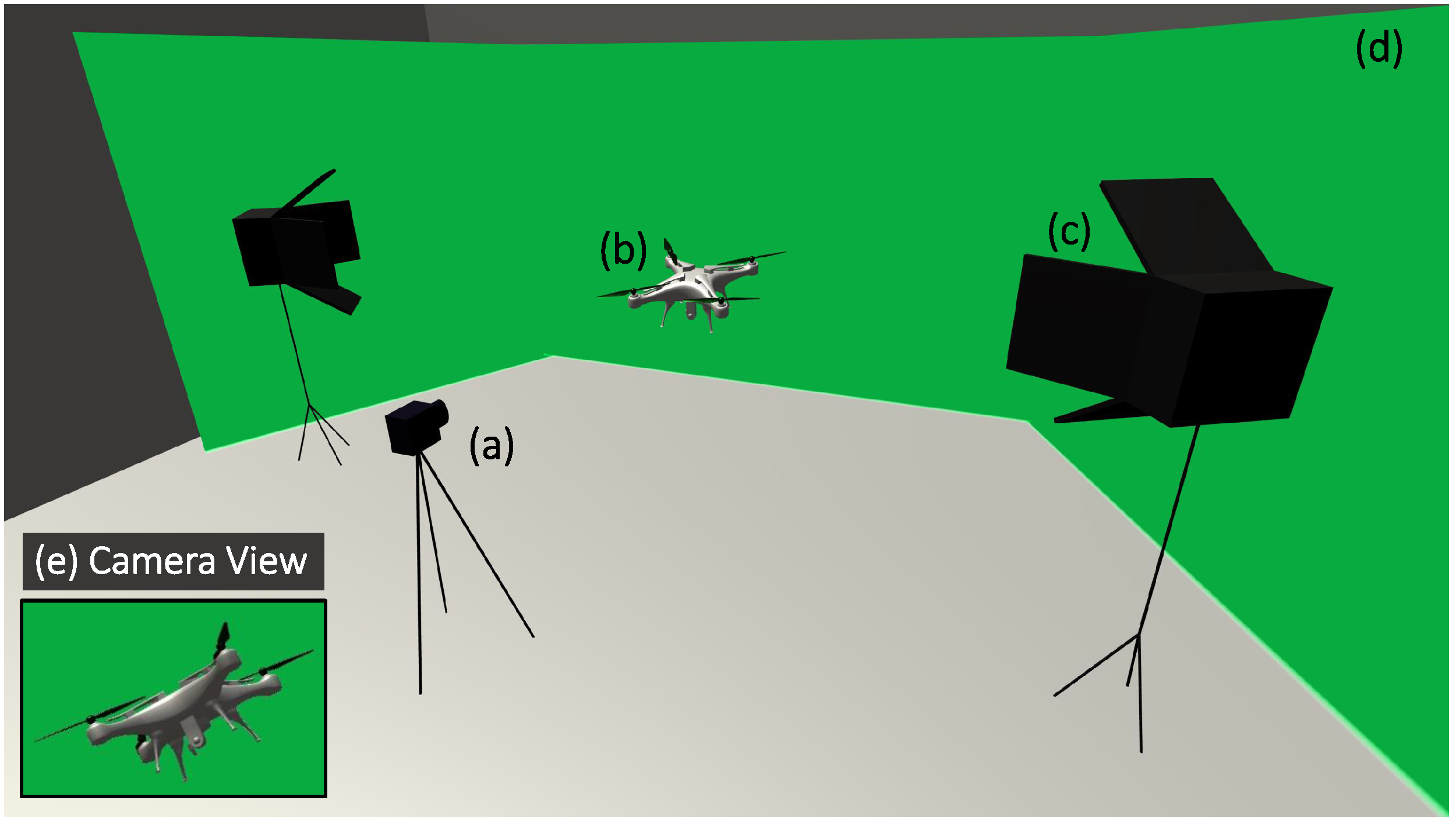

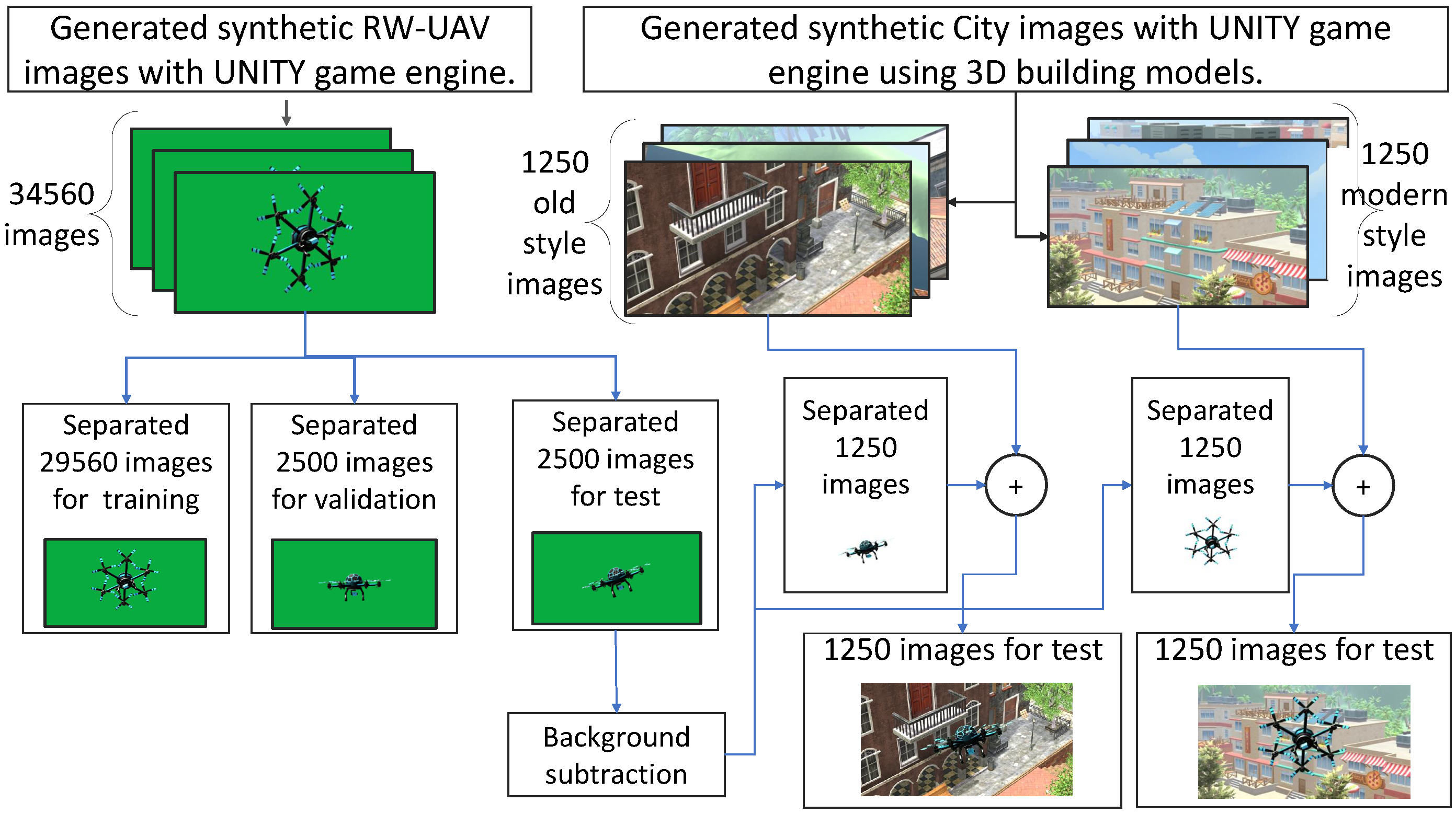

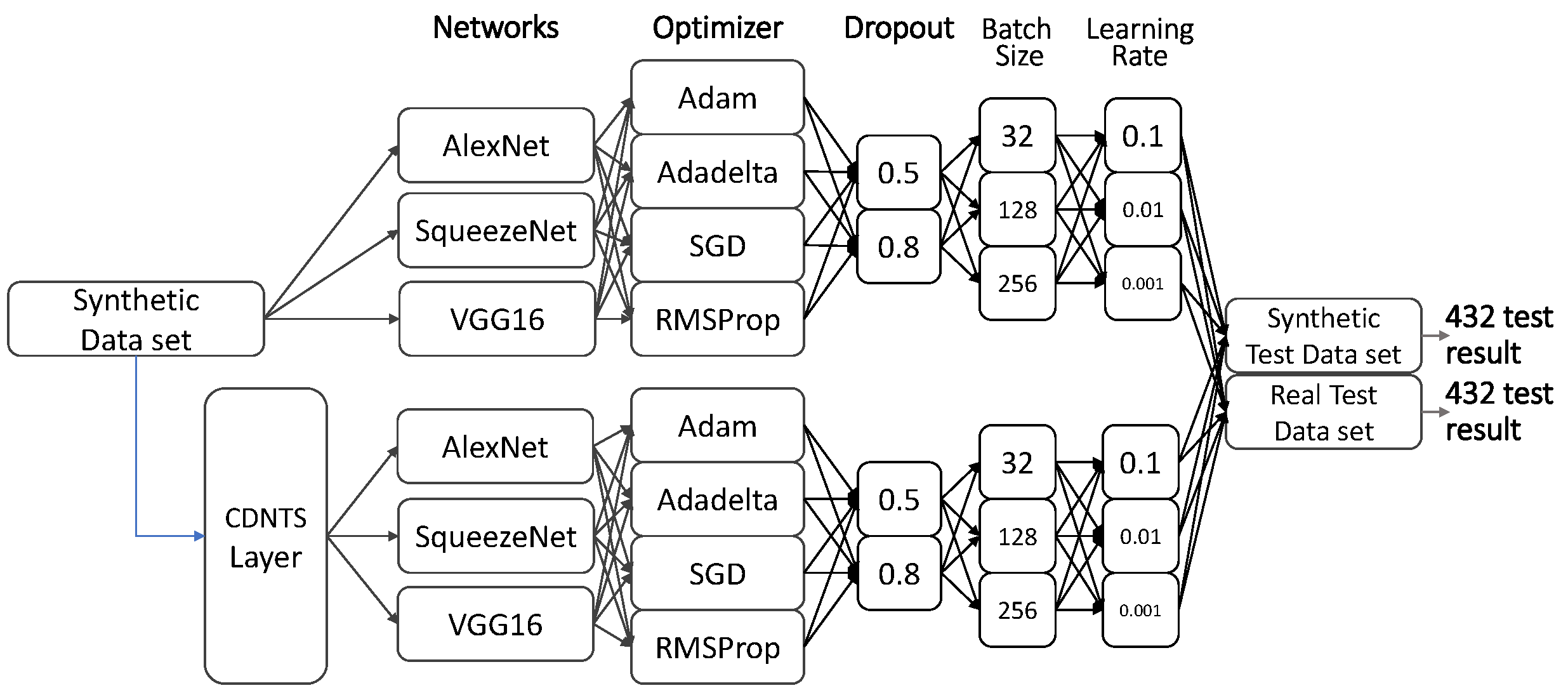

In this study, we focused on increasing the accuracy of DL-based ML models that are trained with a fully synthetic-image dataset and tested with a real-image dataset. To create the synthetic dataset, a virtual studio was built using the Unity game engine, and 69,120 synthetic images were produced from three-dimensional (3D) models of birds and RW-UAVs: 34,560 synthetic RW-UAV images and 34,560 synthetic bird images. In total, 216 ML models were trained with these synthetic images. The models were tested separately with 3385 real images and 5000 synthetic images.

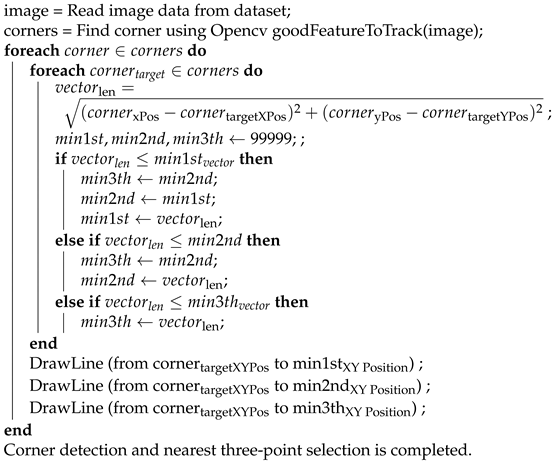

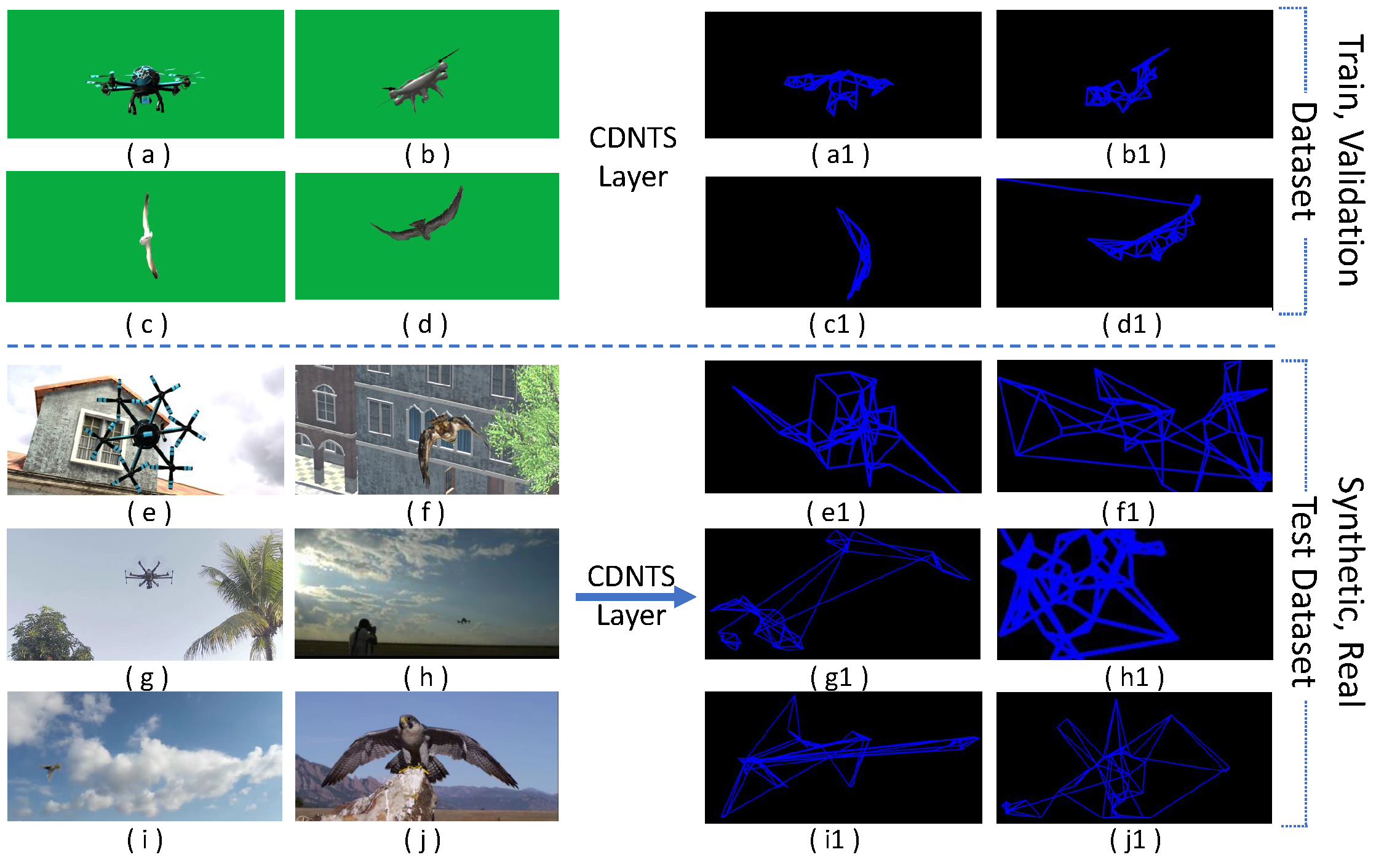

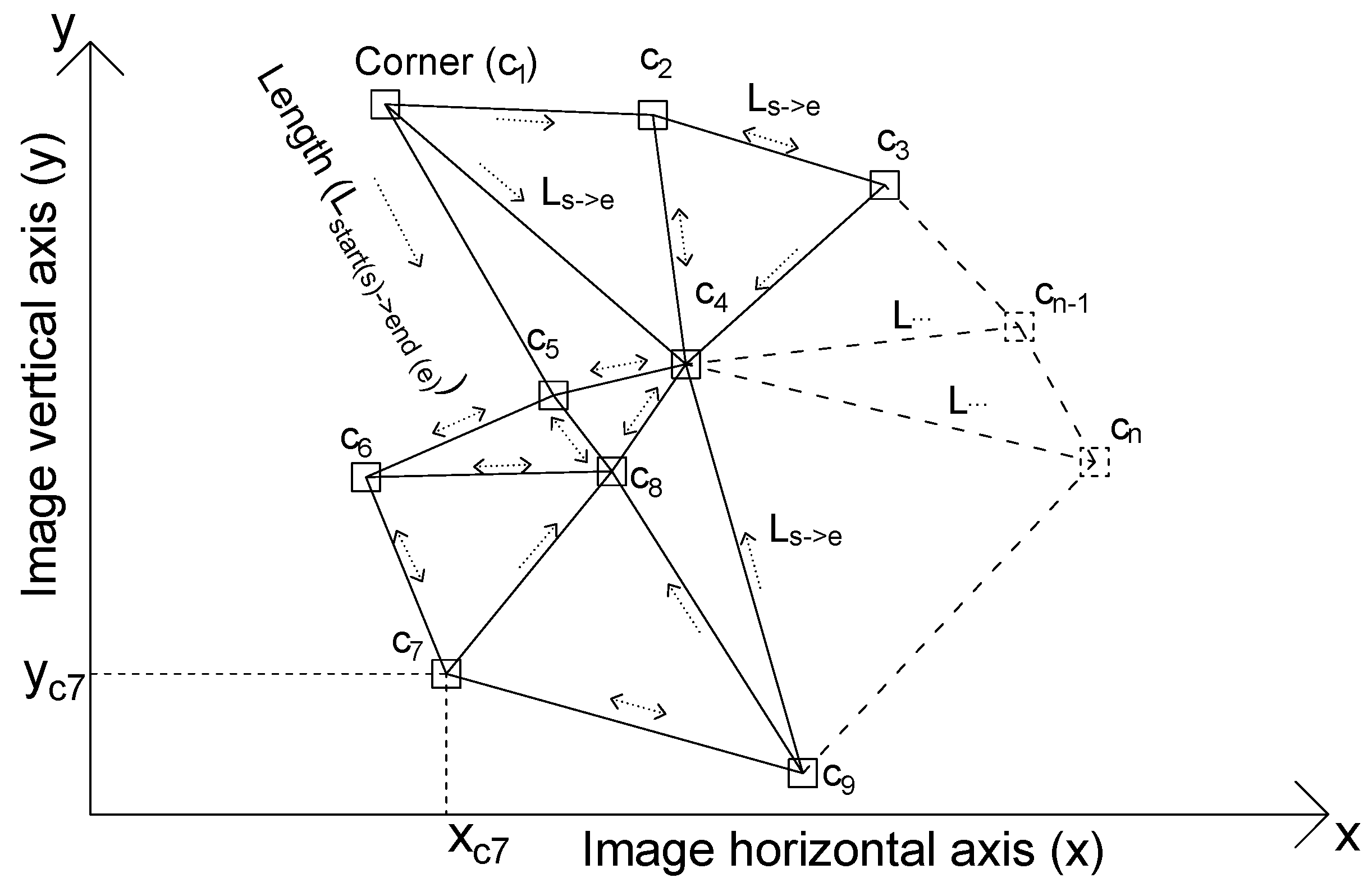

We propose a new approach to improve the accuracy of DL networks trained with synthetic images and tested with real images. The proposed approach, corner detection and nearest three-point selection (CDNTS), is a hybrid feature extraction method that combines corner detection with nearest-point search and can be used with DL networks. Corner detection-based methods, which detect corners in images, are commonly used in visual tracking [13], image matching and photography [14], robotic path finding [15], and other image-based applications. The “nearest three-point selection” part, which forms the other half of the hybrid CDNTS layer, is a function that searches for the closest points. Nearest-point search problems, which aim to determine the distances between two or more points, are a common subject in the literature. In [16], this approach is used to group the pixels of two-dimensional (2D) images and the vertices of 3D point-cloud data and to determine the closest pixels and vertices. It is also used in decision-tree algorithms that build the tree by finding the distances between the weights of classes [17]. The CDNTS layer proposed in our study can thus be considered a feature extraction technique that combines “corner detection” and “nearest point search”. It consists of three main operations: detecting the coordinates of corner points, calculating the distances between corners, and drawing lines between the three closest corners. As a result of these operations, the original image is transformed into an image consisting of corner points and lines. The corner detection-based CDNTS is a hybrid layer that improves the real-image classification accuracy of DL networks trained using synthetic images.
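The three CDNTS operations can be illustrated with a short NumPy sketch. The text does not specify the corner detector, the distance metric, or exactly which lines are drawn, so this sketch assumes a basic Harris detector, Euclidean distances, and a line from each corner to each of its three nearest corners. The function names and parameters (`harris_corners`, `k=0.04`, `rel_threshold=0.1`, `nearest_three`, `draw_line`, `cdnts`) are hypothetical, and the code should not be read as the authors' implementation.

```python
import numpy as np


def _window_sum3(a: np.ndarray) -> np.ndarray:
    """Sum over each pixel's 3x3 neighbourhood (edge-padded)."""
    p = np.pad(a, 1, mode="edge")
    h, w = a.shape
    return sum(p[dr:dr + h, dc:dc + w] for dr in range(3) for dc in range(3))


def harris_corners(gray, k=0.04, rel_threshold=0.1):
    """Step 1 (assumed detector): (row, col) coordinates of Harris corners."""
    gray = np.asarray(gray, dtype=float)
    iy, ix = np.gradient(gray)  # derivatives along rows, then along columns
    sxx = _window_sum3(ix * ix)
    syy = _window_sum3(iy * iy)
    sxy = _window_sum3(ix * iy)
    # Harris response: det(M) - k * trace(M)^2 of the windowed structure tensor M.
    response = sxx * syy - sxy ** 2 - k * (sxx + syy) ** 2
    if response.max() <= 0:
        return np.empty((0, 2), dtype=int)
    # Keep 3x3 local maxima whose response exceeds a fraction of the strongest one.
    h, w = response.shape
    p = np.pad(response, 1, mode="constant", constant_values=-np.inf)
    local_max = np.max([p[dr:dr + h, dc:dc + w] for dr in range(3) for dc in range(3)], axis=0)
    mask = (response == local_max) & (response > rel_threshold * response.max())
    return np.argwhere(mask)


def nearest_three(points):
    """Step 2: indices of the (up to) three nearest other points for each point."""
    pts = np.asarray(points, dtype=float).reshape(-1, 2)
    n = len(pts)
    if n < 2:
        return np.empty((n, 0), dtype=int)
    dist = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)  # a point is not its own neighbour
    return np.argsort(dist, axis=1, kind="stable")[:, :min(3, n - 1)]


def draw_line(canvas, p0, p1, value=1.0):
    """Rasterize a straight segment by sampling one point per pixel step."""
    steps = int(np.max(np.abs(np.subtract(p1, p0)))) + 1
    rows = np.rint(np.linspace(p0[0], p1[0], steps)).astype(int)
    cols = np.rint(np.linspace(p0[1], p1[1], steps)).astype(int)
    canvas[rows, cols] = value


def cdnts(gray):
    """Step 3: replace the image with its corner points and nearest-corner lines."""
    corners = harris_corners(gray)
    out = np.zeros(np.shape(gray), dtype=float)
    if len(corners) == 0:
        return out
    for i, neighbours in enumerate(nearest_three(corners)):
        for j in neighbours:
            draw_line(out, corners[i], corners[j])
    out[corners[:, 0], corners[:, 1]] = 1.0  # mark the corners themselves
    return out


if __name__ == "__main__":
    img = np.zeros((20, 20))
    img[5:15, 5:15] = 1.0  # a bright square with four corners
    print(harris_corners(img).tolist())
    print(cdnts(img).astype(int))
```

For the toy square, each corner's three nearest corners are all the others, so the output contains the four edges and both diagonals. On real photographs, the number of detected corners and therefore the density of lines would depend strongly on the assumed detector and threshold.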

The performance, computation times, validation accuracies, and test accuracies of the methods were measured. The results obtained from both experimental setups were validated with the F-score [18] and the area under the curve (AUC). AUC and test accuracy were computed to analyze the performance and success of the network. The F-score combines precision and recall into a single measure of test accuracy.
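For reference, the F-score and AUC can be computed from a model's outputs as in the following plain-Python sketch. It assumes a binary task with label 1 for RW-UAV and 0 for bird (a hypothetical encoding), computes the F1 score (the harmonic mean of precision and recall), and computes AUC in its rank form: the probability that a randomly chosen positive image receives a higher score than a randomly chosen negative one, with ties counted as one half. The quadratic pairwise loop is chosen for clarity; libraries such as scikit-learn provide optimized equivalents.

```python
from typing import Sequence, Tuple


def precision_recall_f1(y_true: Sequence[int], y_pred: Sequence[int],
                        positive: int = 1) -> Tuple[float, float, float]:
    """Precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def roc_auc(y_true: Sequence[int], scores: Sequence[float], positive: int = 1) -> float:
    """AUC as P(score of random positive > score of random negative); ties count 1/2."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    labels = [1, 1, 1, 0, 0, 0]              # 1 = RW-UAV, 0 = bird (assumed encoding)
    scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.1]  # hypothetical model outputs
    preds = [1 if s >= 0.5 else 0 for s in scores]
    print(precision_recall_f1(labels, preds))
    print(roc_auc(labels, scores))
```

In this toy example, thresholding at 0.5 gives two true positives, one false positive, and one false negative, so precision, recall, and F1 are all 2/3, while the AUC, which uses the raw scores rather than a threshold, is 8/9.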

2. Related Work

Image classification has become an active research topic in computer vision, and CNN-based methods play an important role in it. With the development of graphics processing unit (GPU)-based hardware and open-source libraries such as TensorFlow [19] and Keras [20], CNN-based image classification algorithms have become prominent [11]. CNN architectures used for image classification include AlexNet [21], SqueezeNet [22], and VGG16 [23].

The accuracy of these DL algorithms may differ. To train a DL network for image classification, a dataset with a large number of images must be presented to the network [9]. Libraries such as IMAGENET [6] and CIFAR10 [7] provide image datasets for training. They consist of labeled images obtained directly by photographing real objects, and they have been used in several studies to solve image classification problems [6,24]. Because these datasets cover a limited number of classes, they may not solve every problem with the desired accuracy. To train a class that is not available in these libraries, a new dataset must be created. In such cases, depending on the problem, a dataset can be created by taking many photographs of the object with a camera or by generating synthetic images with a game engine [25,26]. CNN-based algorithms are frequently used to train DL networks for image classification [27,28,29], and some studies in the literature have applied CNN-based image classification to pictures of real or synthetic objects [30].

Several studies have used synthetic object images to identify computer-aided design (CAD) models provided by a CAD model library [31]. Other studies use video and image datasets created with the rendering tools of game engines [32,33,34] to integrate AI into games. The common purpose of ML studies that use synthetic data in addition to real data is to increase the number of samples in datasets where samples are scarce or limited [32]. When the number of samples is limited, CNN-based ML algorithms perform poorly, and many samples are needed to improve their performance [35]. To increase the number of samples, synthetic images can be generated from 3D models with game engines. Using a game engine’s rendering tools, a 3D model can be photographed from different angles and distances. This flexibility allows many samples to be created and removes the constraints on dataset size. In the literature, this method is used to diversify real datasets and increase the amount of data; in this study, in contrast, we aimed to create a dataset entirely from synthetic data.
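Photographing a 3D model from different angles and distances can be sketched as a grid of camera positions on spheres centred on the model. This is a hypothetical illustration, not the virtual-studio configuration used in this study: the azimuth, elevation, and distance values are arbitrary examples, the model is assumed to sit at the origin, and a y-up axis convention (as in Unity) is assumed. In an engine, each position would also be given a rotation that points the camera at the model before rendering.

```python
import itertools
import math
from typing import Iterator, Sequence, Tuple

Pose = Tuple[float, float, float, Tuple[float, float, float]]


def camera_positions(azimuths_deg: Sequence[float],
                     elevations_deg: Sequence[float],
                     distances: Sequence[float]) -> Iterator[Pose]:
    """Yield (azimuth, elevation, distance, (x, y, z)) for every grid combination.

    The model is assumed to sit at the origin, and y is the vertical axis.
    """
    for az, el, r in itertools.product(azimuths_deg, elevations_deg, distances):
        a, e = math.radians(az), math.radians(el)
        x = r * math.cos(e) * math.sin(a)
        y = r * math.sin(e)  # height above (or below) the model
        z = r * math.cos(e) * math.cos(a)
        yield az, el, r, (x, y, z)


if __name__ == "__main__":
    # Hypothetical grid: every 30 degrees around, 5 elevations, 3 distances.
    azimuths = range(0, 360, 30)
    elevations = [-30, -10, 10, 30, 50]
    distances = [2.0, 4.0, 8.0]
    poses = list(camera_positions(azimuths, elevations, distances))
    print(len(poses))  # 12 * 5 * 3 = 180 viewpoints per model
    print(poses[0])
```

Combining such a viewpoint grid with further variations, for example backgrounds or lighting, can yield thousands of labeled images per 3D model without manual photography; every rendered image inherits its class label from the model it shows.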

5. Conclusions

This study has shown that classification problems with a limited dataset, or with no training dataset at all, can be solved by generating synthetic data with a game engine. In addition, we have demonstrated that a dataset consisting entirely of synthetic images can be used for training and that the resulting models can be tested on real-image classification.

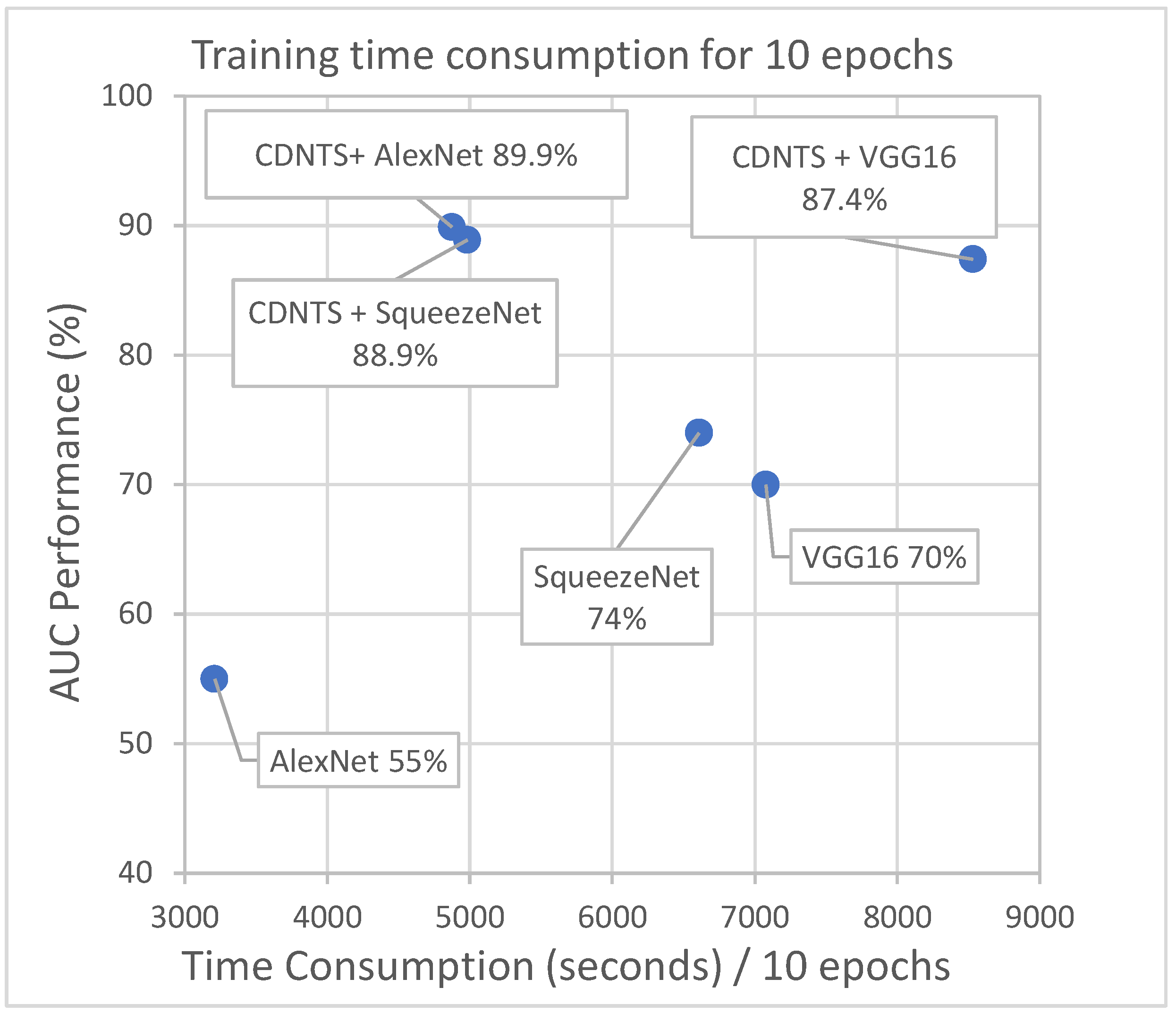

In experiments 1 and 2, segmented synthetic data were used to train models with two different methods: DL networks alone and DL networks with a CDNTS layer. The models from both experiments were tested separately on synthetic-image and real-image classification. Including the CDNTS layer considerably improved the classification performance of the models. In experiment 1, the AUC of the best synthetic-image test result was 74%, and that of the best real-image test result was 72%. In experiment 2, using the CDNTS network, the AUC of the best synthetic-image test result was 89.9%, and that of the best real-image test result was 88.9%.

The proposed method cannot be applied to images smaller than 20 × 20 pixels. In addition, the average prediction time of the CDNTS network is 75.9 ms per image. The method is therefore suitable for applications in which a time cost of 75.9 ms per image is acceptable and the images are larger than 20 × 20 pixels. Despite these limitations, the present study has shown that networks can be trained with synthetic images produced by game engines from 3D models to solve real-image classification problems. Thanks to the CDNTS layer, networks trained with synthetic data achieved higher success in real-image classification. This study has also shown that synthetic images can accelerate the solution of image classification problems for which there are insufficient or no training images, and can improve test performance. In future work, we aim to develop a more robust classification model for night-vision and thermal cameras, and to train artificial intelligence with synthetic data generated from just a few 2D images.
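As a rough illustration of what the reported time cost implies, assuming the 75.9 ms average applies to every image and images are processed one after another on the same hardware, the corresponding throughput is

$$\frac{1000\ \text{ms/s}}{75.9\ \text{ms/image}} \approx 13.2\ \text{images/s},$$

which is below a typical video rate of 30 frames/s, so classifying every frame of such a stream with the CDNTS network would require skipping frames or faster hardware.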