Abnormal Behavior Detection in Uncrowded Videos with Two-Stream 3D Convolutional Neural Networks

Abstract

1. Introduction

- The study reviews recent developments in order to redefine the problem of abnormal human behavior detection and classification as distinct from both human action classification and general anomaly detection. For each abnormal behavior, its distinct representative patterns were identified.

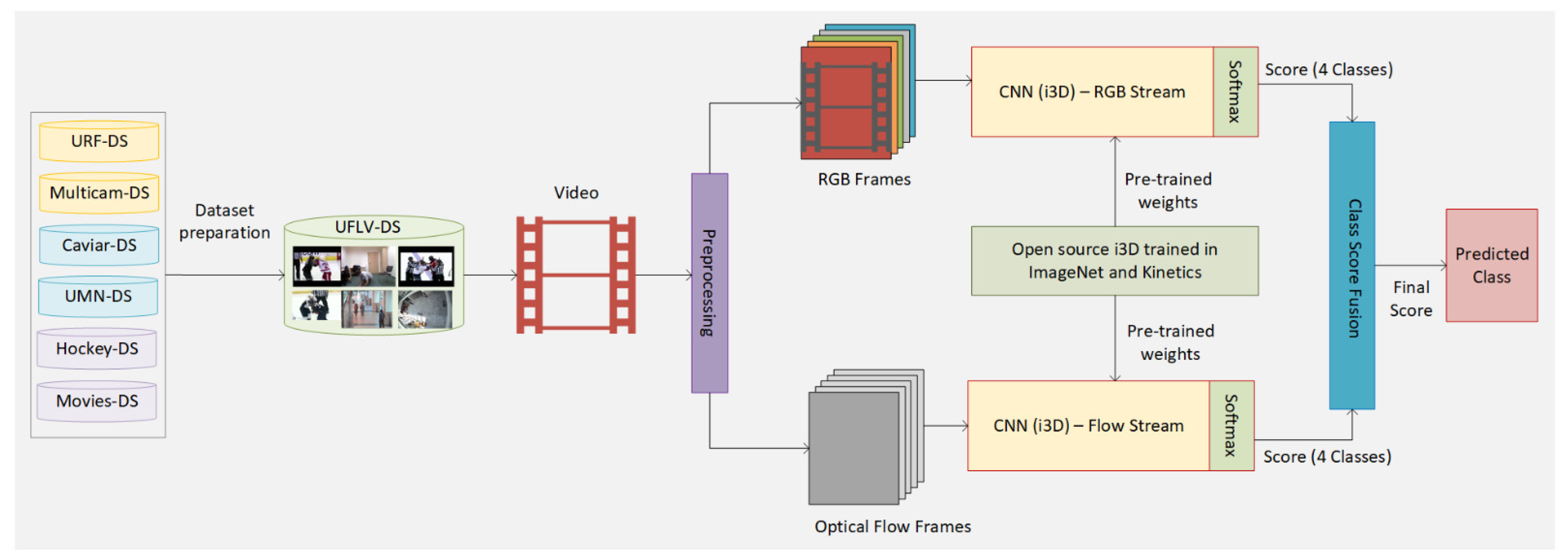

- Unlike previous studies, which focused on a single behavior, this study proposes a model that jointly detects the three abnormal behaviors commonly found in uncrowded scenes: falling, loitering, and violence. To the best of our knowledge, it is also the first study to apply transfer learning from the general action recognition domain to the detection of these behaviors.

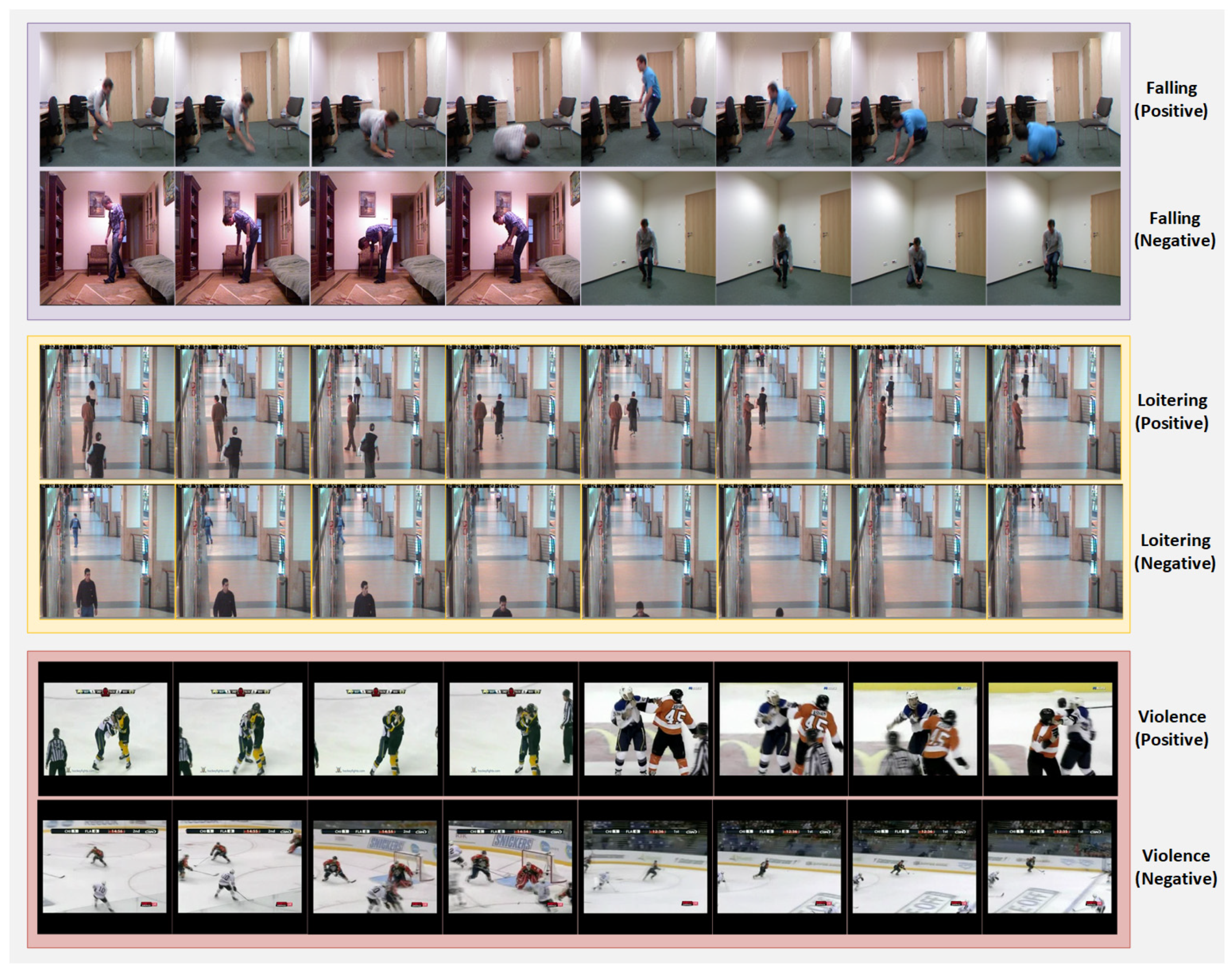

- A specialized dataset was prepared from publicly available video datasets by selecting clips that exhibit the common act patterns of the behaviors included in the study.

- The study implements a model that uses a two-stream 3D convolutional neural network architecture to incorporate RGB and optical flow information simultaneously. Moreover, unlike previous work on abnormal behavior detection, which operated on 2D RGB images extracted from videos, this model takes a 3D video stream (a sequence of consecutive frames) as input.
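
The contributions above describe a model that combines an RGB stream with an optical flow stream. How the outputs of the two streams are merged is not described in this part of the text; one common option, used for example in the two-stream I3D model [31], is late fusion by averaging the class probabilities of the two streams. The sketch below shows that option only as an assumption: `softmax`, `late_fuse`, the weight `w_rgb`, and the example logits are hypothetical, and the class order is taken from the result tables.

```python
import numpy as np

# Class order as used in the result tables.
CLASSES = ["Falling", "Loitering", "Violence", "None"]


def softmax(z: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def late_fuse(rgb_logits: np.ndarray, flow_logits: np.ndarray, w_rgb: float = 0.5) -> np.ndarray:
    """Hypothetical late fusion: weighted average of the two streams' class probabilities."""
    return w_rgb * softmax(rgb_logits) + (1.0 - w_rgb) * softmax(flow_logits)


# Made-up logits: appearance alone slightly favors Falling over Violence,
# while the motion stream clearly favors Violence.
rgb = np.array([2.0, 0.1, 1.9, 0.0])
flow = np.array([0.5, 0.0, 2.5, 0.2])
fused = late_fuse(rgb, flow)
print(CLASSES[int(np.argmax(fused))])  # Violence
```

Averaging probabilities keeps each stream's output calibrated on the same scale; a learned fusion layer is an alternative that this sketch does not cover.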

2. Related Work

2.1. Traditional Methods

2.2. Deep Learning-Based Methods

3. Background: Challenges in Detecting Three Behaviors

3.1. Falling

- A person is found walking in a scene, then loses balance in a backward, forward, or sideways direction, and is later found on the floor.

- A person, initially static in an upright position, exhibits a slow backward, forward, or sideways fall that eventually ends in a resting position on the floor.

- A person, initially in an upright position, exhibits an event such as slipping, tripping, stumbling, or losing balance, and eventually falls with fast movement.

- A person exhibiting any of the above patterns combined with attempts to regain balance, such as lifting the arms or hands or holding on to nearby objects or walls.
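
The timing distinction between the slow and fast falls described above can be illustrated with a simple rule-based sketch. It is not the paper's method, which learns these patterns with a two-stream 3D CNN. It assumes a hypothetical per-frame bounding-box aspect ratio (height / width) of the tracked person, and all thresholds (`upright`, `lying`, `fast_s`, `rest_frames`) are illustrative assumptions. Balancing attempts such as raised arms would need pose information and are not modeled.

```python
from typing import Optional, Sequence


def classify_fall(aspect: Sequence[float], fps: float, fast_s: float = 1.0,
                  upright: float = 1.5, lying: float = 0.8,
                  rest_frames: int = 5) -> Optional[str]:
    """Label an aspect-ratio track (height / width per frame) as a 'fast' or
    'slow' fall, or None if no upright-to-lying transition ends at rest.

    All thresholds are illustrative assumptions, not values from the paper.
    """
    n = len(aspect)
    # The sequence must end with the person resting on the floor.
    if n < rest_frames or any(a > lying for a in aspect[-rest_frames:]):
        return None
    # Walk back to the first frame of the trailing "lying" run.
    start = n - 1
    while start > 0 and aspect[start - 1] <= lying:
        start -= 1
    # Last frame before that run in which the person was clearly upright.
    upright_frames = [i for i in range(start) if aspect[i] >= upright]
    if not upright_frames:
        return None
    duration_s = (start - upright_frames[-1]) / fps
    return "fast" if duration_s <= fast_s else "slow"


print(classify_fall([2.0] * 10 + [1.2, 0.9] + [0.5] * 10, fps=10))  # fast
print(classify_fall([2.0] * 10 + [1.2] * 20 + [0.5] * 10, fps=10))  # slow
```

Such hand-set thresholds are brittle under viewpoint changes and occlusion, which is one motivation for learning the patterns from data instead.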

3.2. Loitering

- A person is detected in a scene and stays in the scene for longer than a specified duration.

- A person is found in a scene moving at a slow speed for a specified duration.

- A person is detected in a scene and moves within an activity area that forms a regular shape, such as a rectangle or an ellipse.

- A person repeatedly moves around a point of interest, resulting in an activity area centered on that point.
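
The loitering patterns above can be expressed as checks on a single person's trajectory. The sketch below is an illustration, not the paper's method. It assumes a hypothetical track of ground-plane positions in meters, summarizes the activity area only by its farthest extent from the centroid (so the "regular shape" pattern is approximated by compactness), and uses an assumed combination rule and assumed thresholds.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # assumed ground-plane position of the person, in meters


def loitering_cues(track: List[Point], fps: float, min_dwell_s: float = 30.0,
                   max_speed: float = 0.5, max_radius: float = 3.0) -> Dict[str, bool]:
    """Check one trajectory against the loitering patterns.

    All thresholds are illustrative assumptions, not values from the paper.
    """
    if not track:
        raise ValueError("empty track")
    n = len(track)
    dwell_s = n / fps  # approximate time spent in the scene
    steps = [math.dist(track[i], track[i + 1]) for i in range(n - 1)]
    mean_speed = sum(steps) * fps / len(steps) if steps else 0.0  # meters per second
    # Activity area summarized by its farthest extent from the track centroid.
    cx = sum(x for x, _ in track) / n
    cy = sum(y for _, y in track) / n
    radius = max(math.dist(p, (cx, cy)) for p in track)
    cues = {
        "long_dwell": dwell_s >= min_dwell_s,
        "slow": mean_speed <= max_speed,
        "compact_area": radius <= max_radius,
    }
    # Assumed combination rule: a long stay plus either slow motion or a compact area.
    cues["loitering"] = cues["long_dwell"] and (cues["slow"] or cues["compact_area"])
    return cues


# A person circling a point (radius 1 m) for 60 s vs. one walking straight through in 10 s.
circling = [(math.cos(2 * math.pi * i / 200), math.sin(2 * math.pi * i / 200)) for i in range(600)]
passing = [(0.2 * i, 0.0) for i in range(100)]
print(loitering_cues(circling, fps=10)["loitering"])  # True
print(loitering_cues(passing, fps=10)["loitering"])   # False
```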

3.3. Violence

3.4. Joint Detection of Falling, Loitering, and Violence

- Loitering and falling: Loitering without intent may involve lifting the arms, touching nearby objects or walls, crouching, bending down, and lying on the floor. These same acts are also typical of the visuals of a person falling.

- Violence and falling: Crouching, bending down, a destabilized posture, raised arms in an attempt to keep balance, and arms lifted overhead are often found in scenes containing either behavior.

- Violence and loitering: Holding an object, lifting the arms, touching nearby objects or walls, crouching, bending down, and lying on the floor are common to both behaviors.

4. The Proposed Approach

4.1. Dataset Preparation

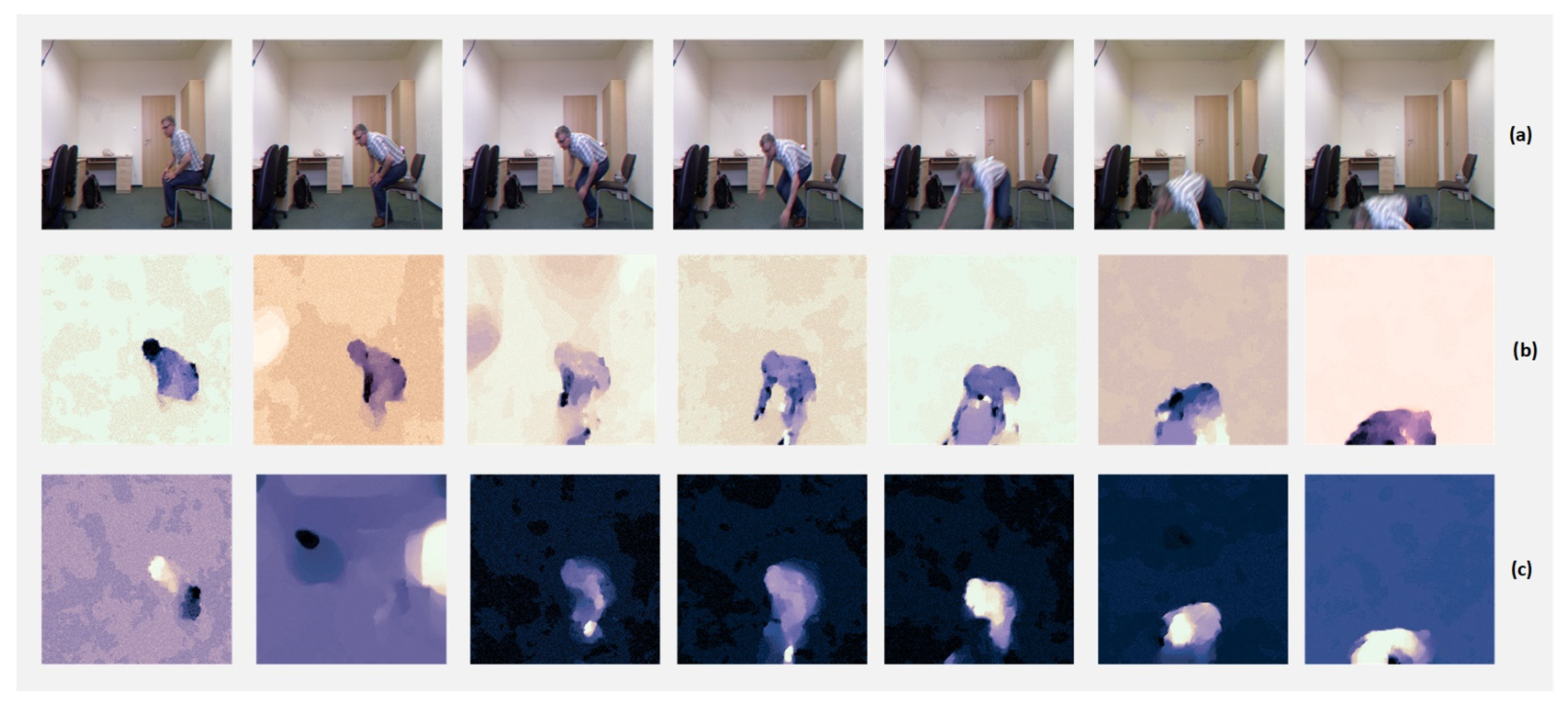

4.2. Optical Stream Construction

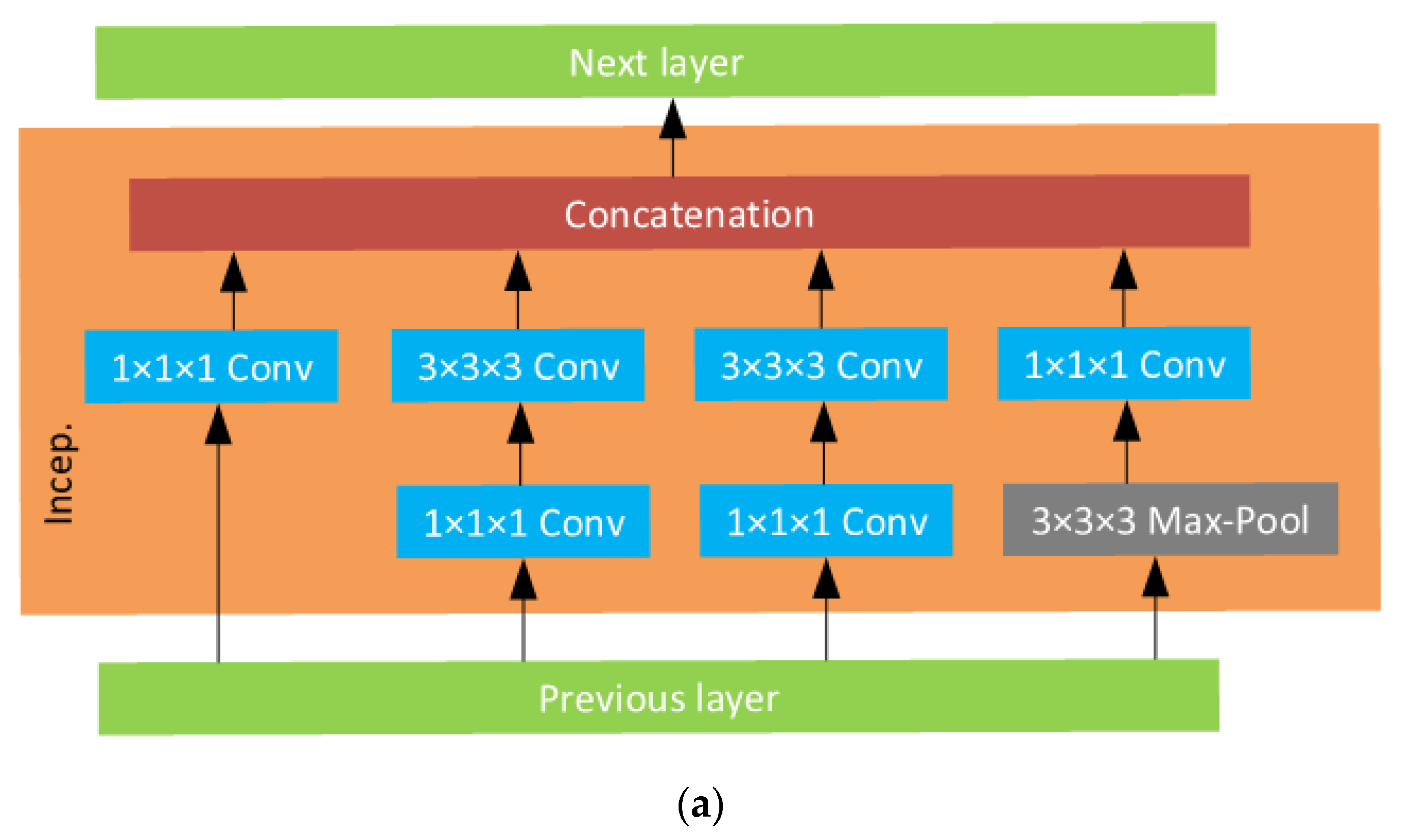

4.3. System Architecture and Flow

4.4. Model Training and Testing

4.4.1. Weights Initialization

4.4.2. Finetuning on UFLV-DS Dataset

5. Experimental Works

5.1. Evaluation Methodology

5.2. Determining the Best Network Configuration and Setup

5.3. Analysis of Detection Errors

5.3.1. False Alarms

5.3.2. Missed Detections

5.3.3. Misclassifications

5.3.4. Discussion of Detection Errors

5.4. Performance Evaluation

5.5. Comparison with the State-of-the-Art

6. Conclusions and Future Work

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ben Mabrouk, A.; Zagrouba, E. Abnormal behavior recognition for intelligent video surveillance systems: A review. Expert Syst. Appl. 2018, 91, 480–491.

- Ullah, H.; Islam, I.U.; Ullah, M.; Afaq, M.; Khan, S.D.; Iqbal, J. Multi-feature-based crowd video modeling for visual event detection. Multimed. Syst. 2020, 1–9.

- Rossetto, L.; Gasser, R.; Lokoc, J.; Bailer, W.; Schoeffmann, K.; Muenzer, B.; Soucek, T.; Nguyen, P.A.; Bolettieri, P.; Leibetseder, A.; et al. Interactive Video Retrieval in the Age of Deep Learning—Detailed Evaluation of VBS 2019. IEEE Trans. Multimed. 2021, 23, 243–256.

- Tsakanikas, V.; Dagiuklas, T. Video surveillance systems-current status and future trends. Comput. Electr. Eng. 2018, 70, 736–753.

- Wang, T.; Miao, Z.; Chen, Y.; Zhou, Y.; Shan, G.; Snoussi, H. AED-Net: An Abnormal Event Detection Network. Engineering 2019, 5, 930–939.

- Ullah, W.; Ullah, A.; Haq, I.U.; Muhammad, K.; Sajjad, M.; Baik, S.W. CNN features with bi-directional LSTM for real-time anomaly detection in surveillance networks. Multimed. Tools Appl. 2020, 1–17.

- Afiq, A.; Zakariya, M.; Saad, M.; Nurfarzana, A.; Khir, M.; Fadzil, A.; Jale, A.; Gunawan, W.; Izuddin, Z.; Faizari, M. A review on classifying abnormal behavior in crowd scene. J. Vis. Commun. Image Represent. 2019, 58, 285–303.

- Núñez-Marcos, A.; Azkune, G.; Arganda-Carreras, I. Vision-Based Fall Detection with Convolutional Neural Networks. Wirel. Commun. Mob. Comput. 2017, 2017, 1–16.

- Rezaee, K.; Haddadnia, J.; Delbari, A. Modeling abnormal walking of the elderly to predict risk of the falls using Kalman filter and motion estimation approach. Comput. Electr. Eng. 2015, 46, 471–486.

- Nguyen, V.D.; Le, M.T.; Do, A.D.; Duong, H.H.; Thai, T.D.; Tran, D.H. An efficient camera-based surveillance for fall detection of elderly people. In Proceedings of the 9th IEEE Conference on Industrial Electronics and Applications, Hangzhou, China, 9–11 June 2014; pp. 994–997.

- Aslan, M.; Sengur, A.; Xiao, Y.; Wang, H.; Ince, M.C.; Ma, X. Shape feature encoding via Fisher Vector for efficient fall detection in depth-videos. Appl. Soft Comput. 2015, 37, 1023–1028.

- Yao, C.; Hu, J.; Min, W.; Deng, Z.; Zou, S.; Min, W. A novel real-time fall detection method based on head segmentation and convolutional neural network. J. Real-Time Image Process. 2020, 17, 1–11.

- Khraief, C.; Benzarti, F.; Amiri, H. Elderly fall detection based on multi-stream deep convolutional networks. Multimed. Tools Appl. 2020, 79, 19537–19560.

- Huang, T.; Han, Q.; Min, W.; Li, X.; Yu, Y.; Zhang, Y. Loitering Detection Based on Pedestrian Activity Area Classification. Appl. Sci. 2019, 9, 1866.

- Tomás, R.M.; Tapia, S.A.; Caballero, A.F.; Ratté, S.; Eras, A.G.; González, P.L. Identification of Loitering Human Behaviour in Video Surveillance Environments; Springer: Berlin/Heidelberg, Germany, 2015.

- Lim, M.K.; Tang, S.; Chan, C.S. iSurveillance: Intelligent framework for multiple events detection in surveillance videos. Expert Syst. Appl. 2014, 41, 4704–4715.

- Ding, C.; Fan, S.; Zhu, M.; Feng, W.; Jia, B. Violence detection in video by using 3D convolutional neural networks. In International Symposium on Visual Computing; Springer: Berlin/Heidelberg, Germany, 2014; pp. 551–558.

- Nievas, E.B.; Suarez, O.D.; García, G.B.; Sukthankar, R. Violence detection in video using computer vision techniques. In International Conference on Computer Analysis of Images and Patterns; Springer: Berlin/Heidelberg, Germany, 2011; pp. 332–339.

- Song, W.; Zhang, D.; Zhao, X.; Yu, J.; Zheng, R.; Wang, A. A Novel Violent Video Detection Scheme Based on Modified 3D Convolutional Neural Networks. IEEE Access 2019, 7, 39172–39179.

- Asad, M.; Yang, J.; He, J.; Shamsolmoali, P.; He, X. Multi-frame feature-fusion-based model for violence detection. Vis. Comput. 2020, 1–17.

- Kim, J.-H.; Won, C.S. Action Recognition in Videos Using Pre-Trained 2D Convolutional Neural Networks. IEEE Access 2020, 8, 60179–60188.

- Lu, Z.; Qin, S.; Li, X.; Li, L.; Zhang, D. One-shot learning hand gesture recognition based on modified 3d convolutional neural networks. Mach. Vis. Appl. 2019, 30, 1157–1180.

- Hara, K.; Kataoka, H.; Satoh, Y. Can Spatiotemporal 3D CNNs Retrace the History of 2D CNNs and ImageNet? In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6546–6555.

- Kataoka, H.; Wakamiya, T.; Hara, K.; Satoh, Y. Would mega-scale datasets further enhance spatiotemporal 3d cnns? arXiv 2020, arXiv:2004.04968.

- Tripathi, G.; Singh, K.; Vishwakarma, D.K. Convolutional neural networks for crowd behaviour analysis: A survey. Vis. Comput. 2018, 35, 753–776.

- Feichtenhofer, C.; Pinz, A.; Zisserman, A. Convolutional Two-Stream Network Fusion for Video Action Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1933–1941.

- Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. arXiv 2014, arXiv:1406.2199.

- Hara, K.; Kataoka, H.; Satoh, Y. Learning Spatio-Temporal Features with 3D Residual Networks for Action Recognition. In Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017; pp. 3154–3160.

- Varol, G.; Laptev, I.; Schmid, C. Long-Term Temporal Convolutions for Action Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 1510–1517.

- Sha, L.; Zhiwen, Y.; Kan, X.; Jinli, Z.; Honggang, D. An improved two-stream CNN method for abnormal behavior detection. J. Phys. Conf. Ser. 2020, 1617, 012064.

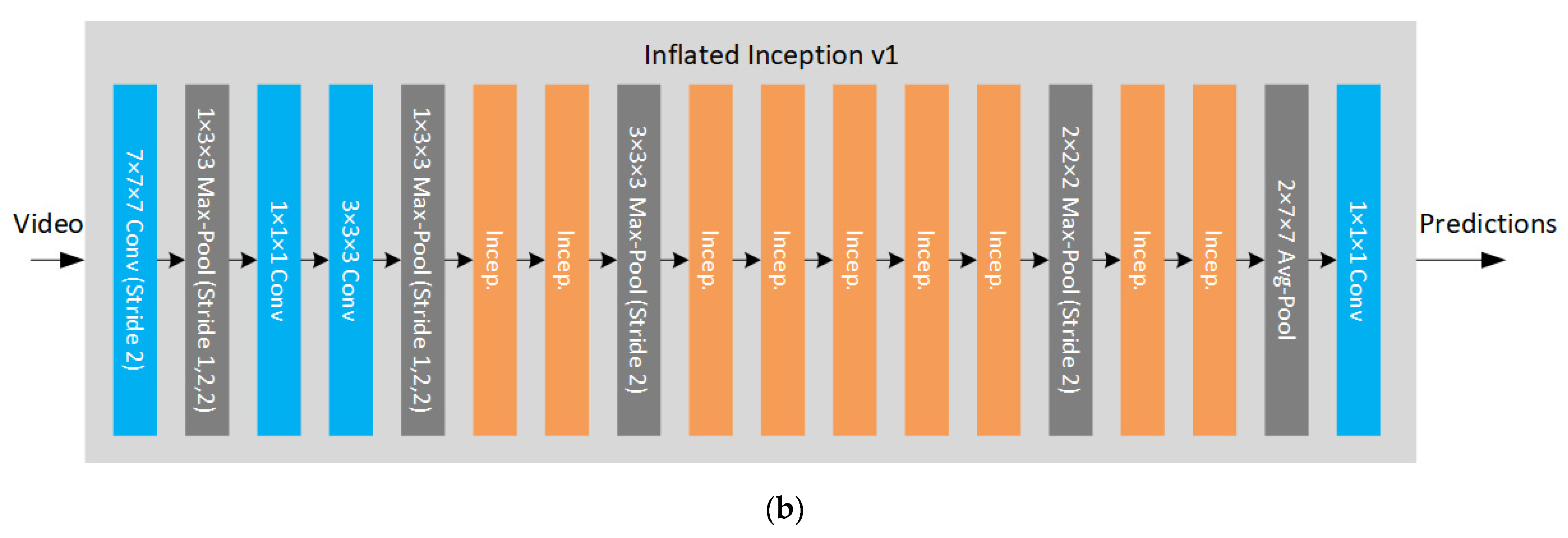

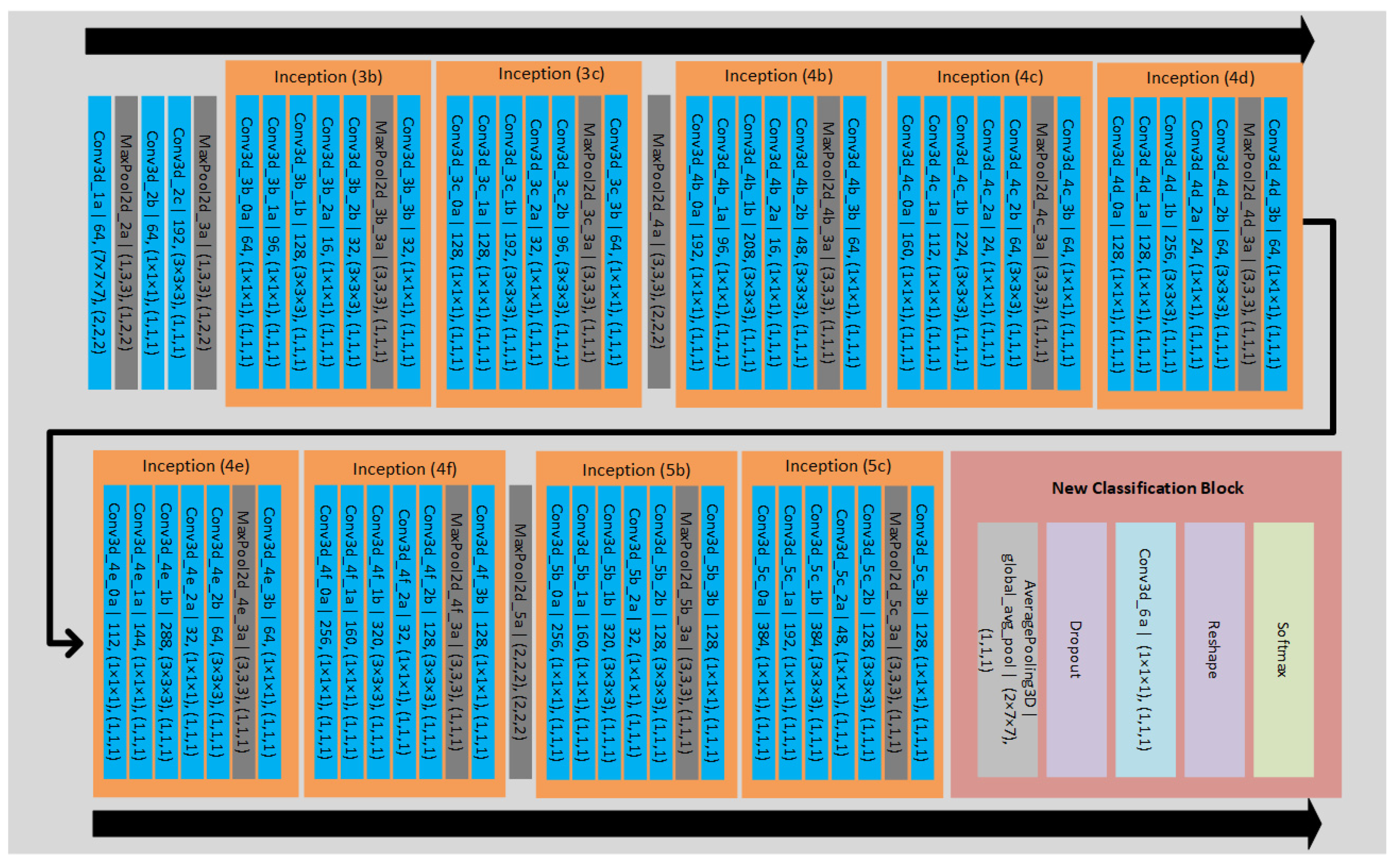

- Carreira, J.; Zisserman, A. Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4724–4733.

- Xie, S.; Sun, C.; Huang, J.; Tu, Z.; Murphy, K. Rethinking Spatiotemporal Feature Learning: Speed-Accuracy Trade-offs in Video Classification. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 318–335.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

- Chen, M.Y.; Hauptmann, A. MoSIFT: Recognizing Human Actions in Surveillance Videos; Carnegie Mellon University: Pittsburgh, PA, USA, 2009.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. arXiv 2015, arXiv:1512.03385.

- Dai, W.; Chen, Y.; Huang, C.; Gao, M.-K.; Zhang, X. Two-Stream Convolution Neural Network with Video-stream for Action Recognition. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–8.

- Ramya, P.; Rajeswari, R. Human action recognition using distance transform and entropy based features. Multimed. Tools Appl. 2021, 80, 8147–8173.

- Chriki, A.; Touati, H.; Snoussi, H.; Kamoun, F. Deep learning and handcrafted features for one-class anomaly detection in UAV video. Multimed. Tools Appl. 2021, 80, 2599–2620.

- Castellano, G.; Castiello, C.; Cianciotta, M.; Mencar, C.; Vessio, G. Multi-view Convolutional Network for Crowd Counting in Drone-Captured Images. In HCI International 2020—Late Breaking Papers: Cognition, Learning and Games; Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 2020; pp. 588–603.

- Castellano, G.; Castiello, C.; Mencar, C.; Vessio, G. Crowd Detection in Aerial Images Using Spatial Graphs and Fully-Convolutional Neural Networks. IEEE Access 2020, 8, 64534–64544.

- Ullah, A.; Muhammad, K.; Haydarov, K.; Haq, I.U.; Lee, M.; Baik, S.W. One-Shot Learning for Surveillance Anomaly Recognition using Siamese 3D CNN. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8.

- Sahoo, S.R.; Dash, R.; Mahapatra, R.K.; Sahu, B. Unusual Event Detection in Surveillance Video Using Transfer Learning. In Proceedings of the 2019 International Conference on Information Technology (ICIT), Bhubaneswar, India, 19–21 December 2019; pp. 319–324.

- Sultani, W.; Chen, C.; Shah, M. Real-World Anomaly Detection in Surveillance Videos. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6479–6488.

- Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A dataset of 101 human actions classes from videos in the wild. arXiv 2012, arXiv:1212.0402.

- Wang, S.; Chen, L.; Zhou, Z.; Sun, X.; Dong, J. Human fall detection in surveillance video based on PCANet. Multimed. Tools Appl. 2016, 75, 11603–11613.

- Chan, T.-H.; Jia, K.; Gao, S.; Lu, J.; Zeng, Z.; Ma, Y. PCANet: A Simple Deep Learning Baseline for Image Classification? IEEE Trans. Image Process. 2015, 24, 5017–5032.

- Wang, K.; Cao, G.; Meng, D.; Chen, W.; Cao, W. Automatic fall detection of human in video using combination of features. In Proceedings of the 2016 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Shenzhen, China, 15–18 December 2016; pp. 1228–1233.

- Jia, Y.; Shelhamer, E.; Donahue, J.; Karayev, S.; Long, J.; Girshick, R.; Darrell, T. Caffe: Convolutional architecture for fast feature embedding. In Proceedings of the 22nd ACM International Conference on Multimedia, Orlando, FL, USA, 3–7 November 2014; pp. 675–678.

- Stone, E.E.; Skubic, M. Fall Detection in Homes of Older Adults Using the Microsoft Kinect. IEEE J. Biomed. Health Inform. 2015, 19, 290–301.

- Sevilla-Lara, L.; Liao, Y.; Güney, F.; Jampani, V.; Geiger, A.; Black, M.J. On the Integration of Optical Flow and Action Recognition. In Pattern Recognition (GCPR 2018); Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 2019; pp. 281–297.

- Zach, C.; Pock, T.; Bischof, H. A Duality Based Approach for Realtime TV-L1 Optical Flow. In Pattern Recognition (DAGM 2007); Springer: Berlin/Heidelberg, Germany, 2007; pp. 214–223.

- Kuehne, H.; Jhuang, H.; Garrote, E.; Poggio, T.; Serre, T. HMDB: A large Video Database for Human Motion Recognition. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2556–2563.

- Kay, W.; Carreira, J.; Simonyan, K.; Zhang, B.; Hillier, C.; Vijayanarasimhan, S.; Zisserman, A. The kinetics human action video dataset. arXiv 2017, arXiv:1705.06950.

- Karpathy, A.; Toderici, G.; Shetty, S.; Leung, T.; Sukthankar, R.; Fei-Fei, L. Large-Scale Video Classification with Convolutional Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

- Laptev, I.; Marszalek, M.; Schmid, C.; Rozenfeld, B. Learning Realistic Human Actions from Movies. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Niebles, J.C.; Wang, H.; Fei-Fei, L. Unsupervised Learning of Human Action Categories Using Spatial-Temporal Words. Int. J. Comput. Vis. 2008, 79, 299–318.

- Wang, H.; Schmid, C. Action Recognition with Improved Trajectories. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 3551–3558.

- Ji, S.; Xu, W.; Yang, M.; Yu, K. 3D Convolutional Neural Networks for Human Action Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 221–231.

- Taylor, G.W.; Fergus, R.; LeCun, Y.; Bregler, C. Convolutional learning of spatio-temporal features. In Computer Vision–ECCV 2010; Springer: Berlin/Heidelberg, Germany, 2010.

- Kwon, H.; Kim, Y.; Lee, J.S.; Cho, M. First Person Action Recognition via Two-stream ConvNet with Long-term Fusion Pooling. Pattern Recognit. Lett. 2018, 112, 161–167.

- Crasto, N.; Weinzaepfel, P.; Alahari, K.; Schmid, C. MARS: Motion-Augmented RGB Stream for Action Recognition. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–21 June 2019; pp. 7874–7883.

- Li, J.; Liu, X.; Zhang, M.; Wang, D. Spatio-temporal deformable 3D ConvNets with attention for action recognition. Pattern Recognit. 2020, 98, 107037.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

- Zerrouki, N.; Houacine, A. Combined curvelets and hidden Markov models for human fall detection. Multimed. Tools Appl. 2017, 77, 6405–6424.

| Model | Representative Works | Pros | Cons |
|---|---|---|---|
| 2D CNN / CNN + LSTM | [55,56,57,58] | Reuse image classification networks, low number of parameters | Tend to ignore temporal structure, large training temporal footprint (expensive), may not capture low-level variation |
| 3D CNNs | [28,29,59,60] | Directly represent spatiotemporal data | Very high number of parameters |
| Original Two-Stream Nets | [27,61] | Use of optical flow along with RGB frames gives high performance, low number of parameters | Optical flow frames add computational load, high memory requirement |
| 3D-Fused Two-Stream Nets | [26,37,62,63] | Less test-time augmentation due to snapshot sampling | High number of parameters |
| Two-Stream Inflated 3D Nets | [31] | Enhanced use of both RGB and flow inputs, relatively low number of parameters | Computational load, high memory requirement |
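
The table lists Two-Stream Inflated 3D Nets [31]. In that approach, a 3D filter is bootstrapped from a pretrained 2D (for example, ImageNet) filter by repeating it T times along the time axis and dividing by T, so that a video consisting of one repeated frame produces the same response as the 2D filter applied to that frame. The numpy sketch below checks this property with naive valid-mode cross-correlations (no padding, no stride). The function names are hypothetical, the random weights stand in for pretrained ones, and the sketch illustrates the technique rather than the paper's implementation.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def inflate_kernel(w2d: np.ndarray, t: int) -> np.ndarray:
    """(Cout, Cin, kH, kW) -> (Cout, Cin, t, kH, kW): repeat along time, rescale by 1/t."""
    return np.repeat(w2d[:, :, None, :, :], t, axis=2) / t


def conv2d(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Valid 2D cross-correlation. x: (Cin, H, W), w: (Cout, Cin, kH, kW)."""
    win = sliding_window_view(x, w.shape[2:], axis=(1, 2))  # (Cin, H', W', kH, kW)
    return np.einsum("chwij,ocij->ohw", win, w)


def conv3d(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Valid 3D cross-correlation. x: (Cin, T, H, W), w: (Cout, Cin, kT, kH, kW)."""
    win = sliding_window_view(x, w.shape[2:], axis=(1, 2, 3))  # (Cin, T', H', W', kT, kH, kW)
    return np.einsum("cthwkij,ockij->othw", win, w)


rng = np.random.default_rng(0)
frame = rng.standard_normal((3, 8, 8))        # one RGB frame
w2d = rng.standard_normal((4, 3, 3, 3))       # stand-in for a pretrained 2D filter bank
video = np.repeat(frame[:, None], 5, axis=1)  # "boring" video: the same frame 5 times
w3d = inflate_kernel(w2d, t=3)

out2d = conv2d(frame, w2d)  # (4, 6, 6)
out3d = conv3d(video, w3d)  # (4, 3, 6, 6)
print(all(np.allclose(out3d[:, k], out2d) for k in range(out3d.shape[1])))  # True
print(w3d.size // w2d.size)  # 3
```

The last line also makes the parameter cost noted in the table concrete: an inflated kernel with temporal depth T holds T times as many weights as the 2D kernel it was built from.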

| False Alarm Cases | % Cases Relative to Total False Alarms |
|---|---|
| None predicted as Fall | 57.40 |
| nf1 | 23.40 |
| nf2 | 16.00 |
| nf3 | 11.40 |
| nf4 | 6.60 |
| None predicted as Violence | 22.90 |
| nv1 | 10.90 |
| nv2 | 6.40 |
| nv3 | 5.60 |
| None predicted as Loitering | 17.80 |
| nl1 | 7.50 |
| nl2 | 5.60 |
| nl3 | 4.70 |
| Misc. cases | 1.90 |

| Missed Detection Cases | % Cases Relative to Total Missed Detections |
|---|---|
| Fall predicted as None | 14.71 |
| fn1 | 7.14 |
| fn2 | 4.62 |
| fn3 | 2.94 |
| Loitering predicted as None | 62.18 |
| ln1 | 31.09 |
| ln2 | 21.43 |
| ln3 | 9.66 |
| Violence predicted as None | 19.33 |
| vn1 | 8.82 |
| vn2 | 5.88 |
| vn3 | 4.62 |
| Misc. cases | 3.78 |

| Misclassification Cases | % Cases Relative to Total Misclassifications |
|---|---|
| Fall predicted as Loitering | 2.24 |
| fl1 | 1.40 |
| fl2 | 0.84 |
| Fall predicted as Violence | 19.33 |
| fv1 | 9.80 |
| fv2 | 6.16 |
| fv3 | 3.36 |
| Loitering predicted as Fall | 6.72 |
| lf1 | 4.20 |
| lf2 | 2.52 |
| Loitering predicted as Violence | 36.69 |
| lv1 | 18.21 |
| lv2 | 12.04 |
| lv3 | 6.44 |
| Violence predicted as Fall | 20.45 |
| vf1 | 8.68 |
| vf2 | 7.28 |
| vf3 | 4.48 |
| Violence predicted as Loitering | 12.89 |
| vl1 | 8.12 |
| vl2 | 4.76 |
| Misc. cases | 1.68 |

| Actual / Predicted (%) | Falling | Loitering | Violence | None |
|---|---|---|---|---|
| Falling | 98.03 | 0.05 | 1.36 | 0.56 |
| Loitering | 0.01 | 93.34 | 0.11 | 6.54 |
| Violence | 1.04 | 2.00 | 96.91 | 0.05 |
| None | 0.92 | 4.61 | 1.62 | 92.85 |

| Metric | Falling | Loitering | Violence | None |
|---|---|---|---|---|
| Recall | 0.92 | 0.73 | 0.88 | 0.87 |
| FP Rate | 0.04 | 0.02 | 0.02 | 0.11 |
| Precision | 0.88 | 0.91 | 0.92 | 0.72 |
| Accuracy | 0.95 | 0.91 | 0.95 | 0.88 |
| F1 | 0.90 | 0.81 | 0.90 | 0.79 |
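
The per-class metrics in these tables presumably follow the standard one-vs-rest definitions below, in which each class is treated as positive and the other three as negative (TP, FP, TN, FN are the true/false positives/negatives for that class). As a worked check, the Loitering column of the table above gives the reported F1 value.

$$
\mathrm{Recall} = \frac{TP}{TP + FN}, \qquad
\mathrm{FP\ Rate} = \frac{FP}{FP + TN}, \qquad
\mathrm{Precision} = \frac{TP}{TP + FP}
$$

$$
\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad
F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}
$$

$$
F_1^{\mathrm{Loitering}} = \frac{2 \times 0.91 \times 0.73}{0.91 + 0.73} = \frac{1.3286}{1.64} \approx 0.81
$$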

| Metric | Falling | Loitering | Violence | None |
|---|---|---|---|---|
| Recall | 0.98 | 0.93 | 0.97 | 0.93 |
| FP Rate | 0.01 | 0.02 | 0.01 | 0.02 |
| Precision | 0.98 | 0.93 | 0.97 | 0.93 |
| Accuracy | 0.99 | 0.97 | 0.98 | 0.96 |
| F1 | 0.98 | 0.93 | 0.97 | 0.93 |
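
The row-normalized confusion matrix (in %) can be turned into one-vs-rest metrics if one assumes that every actual class contributes the same number of test clips; the text does not state this, so it is an assumption. Under it, each row can be read as counts for 100 clips. The numpy sketch below does this. Rounded to two decimals, its output reproduces the values in the table above, which is consistent with, but does not prove, the equal-support assumption. `one_vs_rest_metrics` is a hypothetical helper name.

```python
import numpy as np

CLASSES = ["Falling", "Loitering", "Violence", "None"]

# Row-normalized confusion matrix (%) from the results:
# rows = actual class, columns = predicted class.
CM = np.array([
    [98.03, 0.05, 1.36, 0.56],
    [0.01, 93.34, 0.11, 6.54],
    [1.04, 2.00, 96.91, 0.05],
    [0.92, 4.61, 1.62, 92.85],
])


def one_vs_rest_metrics(cm: np.ndarray) -> dict:
    """Per-class one-vs-rest metrics, treating each entry of cm as a count.

    Using row percentages directly as counts is only valid under the
    assumption that every actual class has the same number of clips.
    """
    total = cm.sum()
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp  # actually class c, predicted as another class
    fp = cm.sum(axis=0) - tp  # predicted as class c, actually another class
    tn = total - tp - fn - fp
    recall = tp / (tp + fn)
    precision = tp / (tp + fp)
    return {
        "Recall": recall,
        "FP Rate": fp / (fp + tn),
        "Precision": precision,
        "Accuracy": (tp + tn) / total,
        "F1": 2 * precision * recall / (precision + recall),
    }


if __name__ == "__main__":
    for name, values in one_vs_rest_metrics(CM).items():
        print(f"{name:<10}", "  ".join(f"{v:.2f}" for v in values))
```

Because each column of this matrix also sums to 100, precision equals recall for every class under the equal-support assumption, which explains why the two rows in the table above coincide.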

| Paper | Pretraining | Dataset | Data Used | Method/Classification Approach | Precision | Recall | Accuracy |
|---|---|---|---|---|---|---|---|
| Zerrouki and Houacine [65] | - | URF-DS | RGB | Curvelet transforms and area ratio features + SVM-HMM | - | - | 0.97 |
| Wang et al. [48] | - | Multicam-DS | RGB | Histograms of Oriented Gradients (HOG), LBP, Caffe features + SVM | - | 0.93 | - |
| Yao et al. [12] | - | Self-collected | RGB | GMM + 2D-CNN | - | - | 0.90 |
| Núñez-Marcos et al. [8] | ImageNet + UCF-101 | URF-DS | RGB, OF | 2D-CNN | - | 1.00 | 0.95 |
| | | Multicam-DS | | | - | 0.99 | - |
| | | URF-DS + Multicam-DS + FDD | | | | 0.94 | - |
| Proposed Method | ImageNet + Kinetics | UFLV-DS | RGB, OF | 2-Stream 3D-CNN | 0.98 | 0.98 | 0.99 |

| Paper | Pretraining | Dataset | Data Used | Method/Classification Approach | Precision | Recall | Accuracy |
|---|---|---|---|---|---|---|---|
| Gomez et al. [15] | - | Caviar-DS | RGB | Sequential micro-patterns + Generalized Sequential Patterns (GSP) algorithm | 0.87 | 0.53 | - |
| Huang et al. [14] | - | PETS2007 + Self-collected | RGB | GMMs fusion, MeanShift + activity area-based trajectory detection algorithm | 0.96 | 1.00 | 0.97 |
| Proposed Method | ImageNet + Kinetics | UFLV-DS | RGB, OF | 2-Stream 3D-CNN | 0.93 | 0.93 | 0.97 |

| Paper | Pretraining | Dataset | Data Used | Method/Classification Approach | Precision | Recall | Accuracy |
|---|---|---|---|---|---|---|---|
| Ding et al. [17] | - | Hockey-DS | RGB | 3D-CNN | - | - | 0.89 |
| Nievas et al. [18] | - | Hockey-DS | RGB | STIP + MoSIFT + HIK | - | - | 0.91 |
| | | Movies-DS | | | - | - | 0.89 |
| Asad et al. [20] | - | Hockey-DS | RGB | Feature fusion + 2D-CNN + LSTM | - | - | 0.98 |
| | | Movies-DS | | | | | 0.99 |
| | | Crowd Violence | | | | | 0.97 |
| | | BEHAVE | | | | | 0.95 |
| Proposed Method | ImageNet + Kinetics | UFLV-DS | RGB, OF | 2-Stream 3D-CNN | 0.97 | 0.97 | 0.98 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mehmood, A. Abnormal Behavior Detection in Uncrowded Videos with Two-Stream 3D Convolutional Neural Networks. Appl. Sci. 2021, 11, 3523. https://doi.org/10.3390/app11083523
