Evaluation of the Coherence of Polish Texts Using Neural Network Models

Abstract

Featured Application

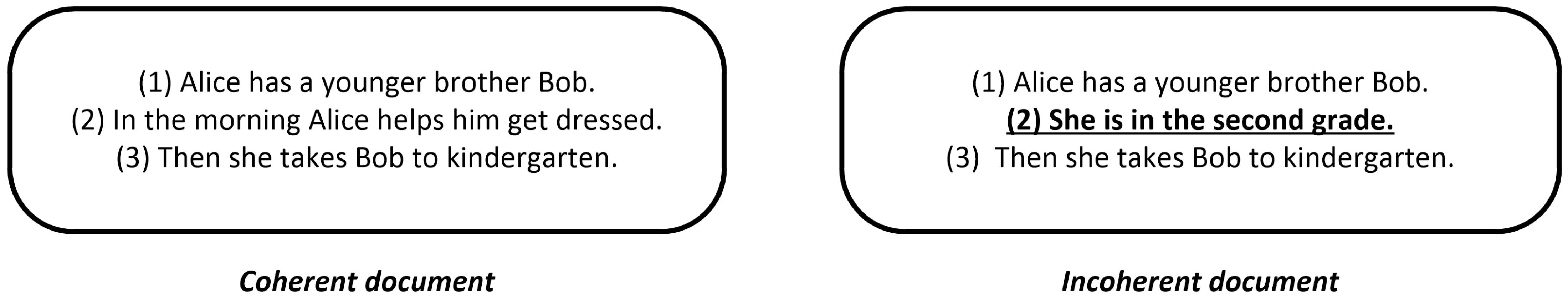

1. Introduction

- Text generation (e.g., creation of responses for voice assistants).

- Text summarization (for instance, formulation of abstracts).

- Detection of schizophrenia symptoms (deviation from the topic of conversation) [4].

- analysis of state-of-the-art methods for evaluating the coherence of English texts;

- investigation of the impact of lexical and semantic components on the coherence estimation of a Polish-language text;

- experimental verification of the effectiveness of different neural-network-based methods for solving typical coherence evaluation tasks on a Polish-language corpus.

2. Materials and Methods

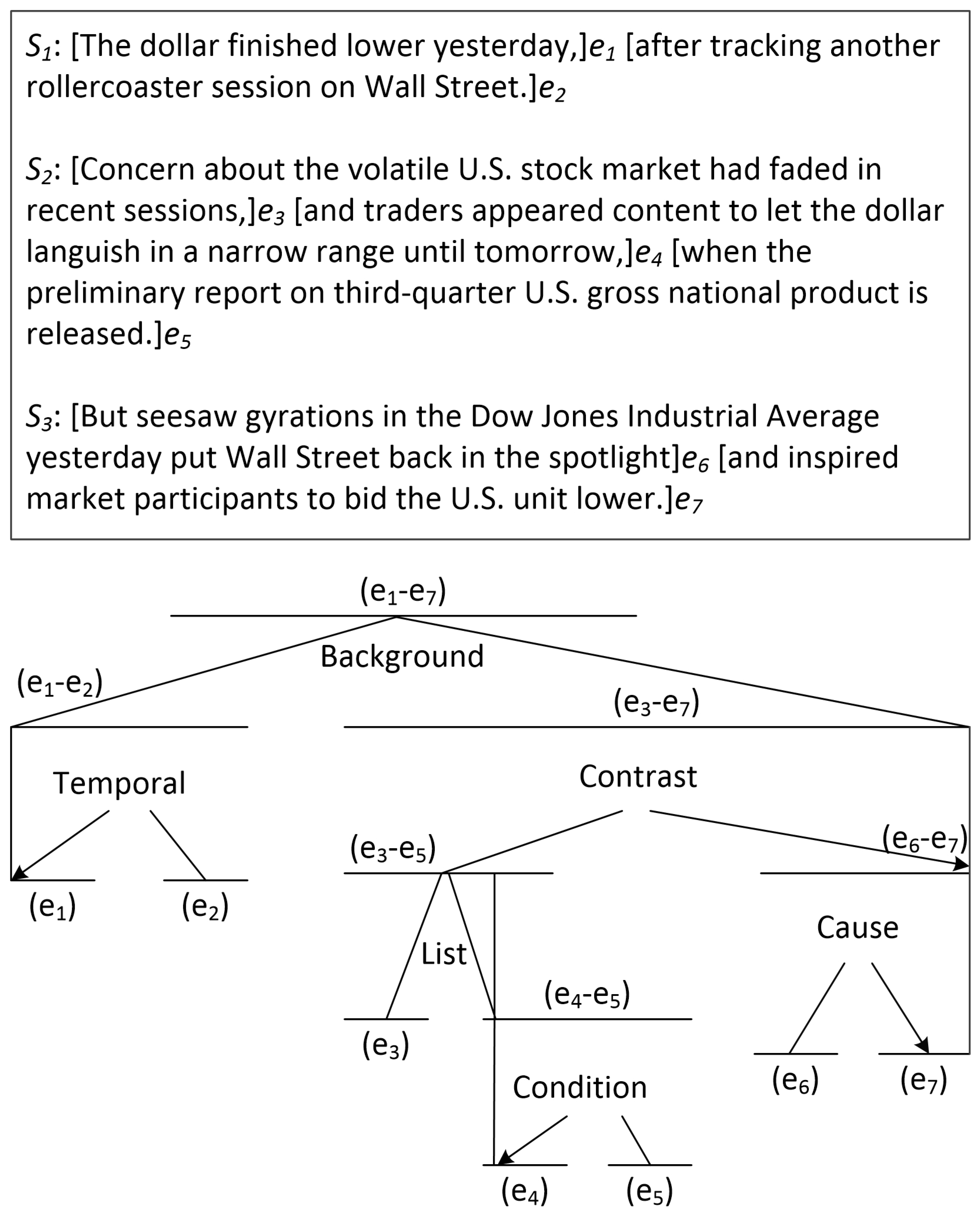

2.1. Related Work

- An entity detection mechanism is required. Moreover, additional coreference resolution is needed in order to represent mentions of the same referent as a single object (for instance, “Microsoft” and “Microsoft Corporation”).

- The detection of such roles cannot be applied to all languages. For instance, building a constituency tree was proposed for the English language. However, using this structure for synthetic languages (such as Polish) is complicated by the way sentences are built.

- Other text features, such as the cohesive component and semantic relatedness, are not taken into account. Moreover, all non-entity words are ignored despite the impact of such tokens on the semantic meaning of a text.
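The coreference limitation listed above can be illustrated with a toy entity grid. The sketch below is a simplified, presence-only grid (it omits the syntactic roles used by entity-based models); the function `entity_grid` and the `aliases` mapping are hypothetical names introduced here for illustration only.

```python
from typing import Dict, List, Optional


def entity_grid(
    sentences: List[List[str]],
    aliases: Optional[Dict[str, str]] = None,
) -> Dict[str, List[str]]:
    """Build a presence-only entity grid.

    `sentences` holds the entity mentions found in each sentence.
    `aliases` (hypothetical) maps a mention to its canonical referent,
    standing in for a real coreference resolution step.
    """
    aliases = aliases or {}
    # Replace every mention with its canonical name when one is known.
    rows = [[aliases.get(m, m) for m in mentions] for mentions in sentences]
    entities = sorted({e for row in rows for e in row})
    # One column per entity: "X" if it occurs in the sentence, "-" otherwise.
    return {e: ["X" if e in row else "-" for row in rows] for e in entities}


mentions = [["Microsoft"], ["Microsoft Corporation"], ["Microsoft"]]

# Without coreference resolution the referent is split into two columns.
grid_raw = entity_grid(mentions)

# With the alias mapping the same referent forms one unbroken column.
grid_resolved = entity_grid(mentions, {"Microsoft Corporation": "Microsoft"})
```

In the unresolved grid the entity appears to drop out of the second sentence, which a grid-based model would read as a break in continuity even though the text keeps talking about the same company.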

2.2. Coherence Estimation Models for the Polish Language

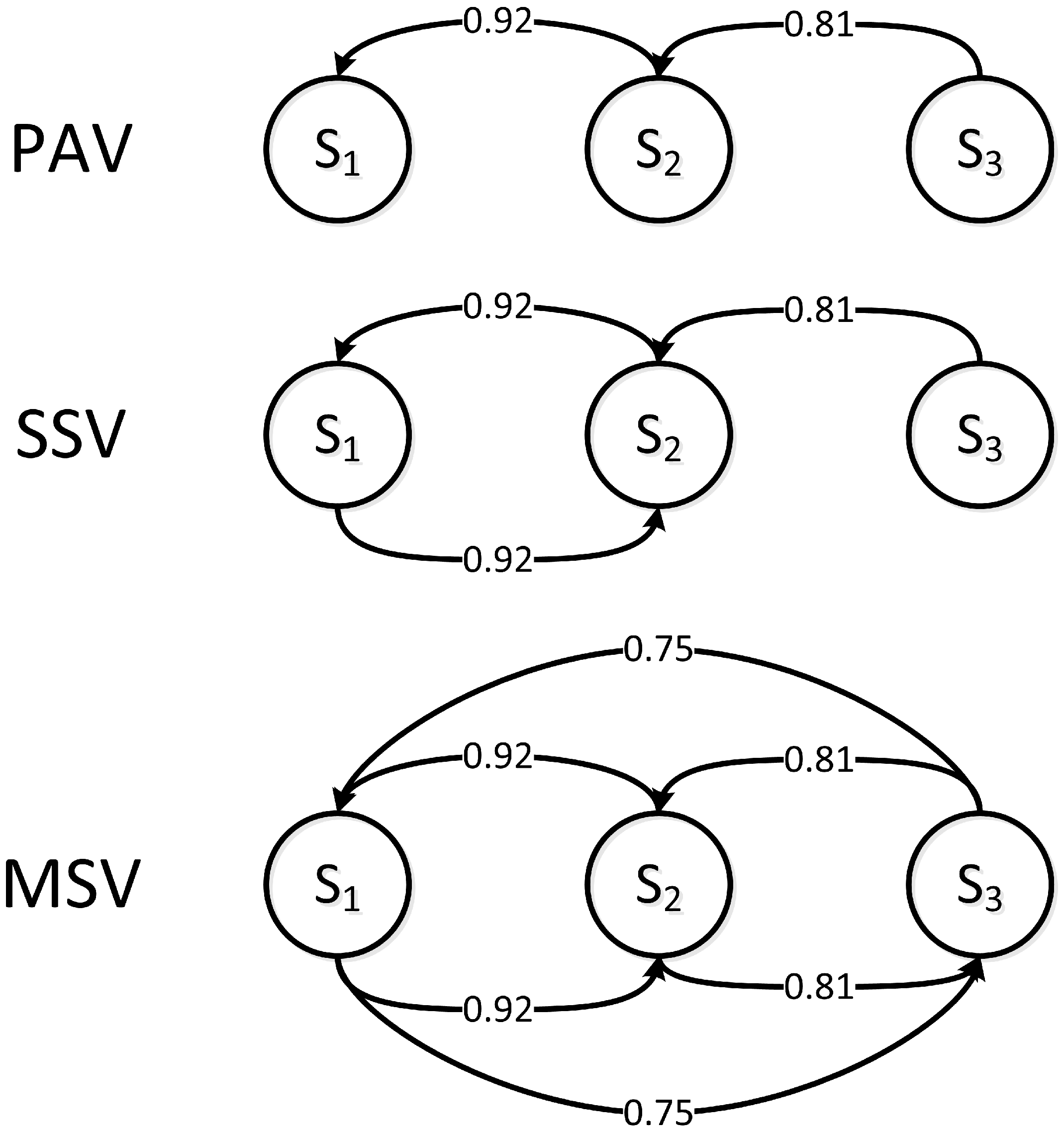

2.2.1. Semantic Similarity Graph

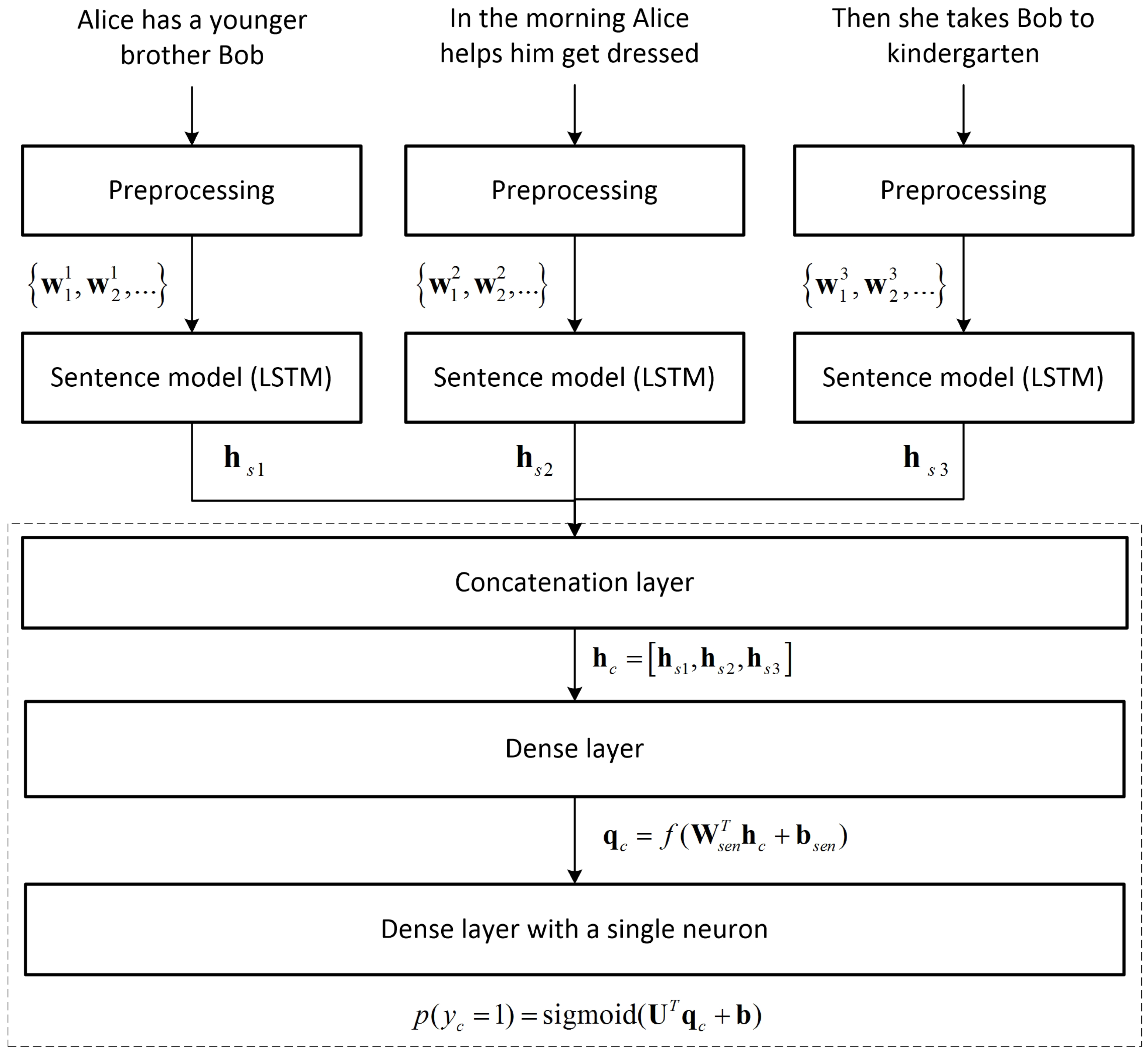
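The PAV variant of the semantic similarity graph, reported in the results with a parameter α, can be sketched roughly as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: it assumes that each sentence is linked to the sentence directly before it, that the edge weight mixes a lexical term and a semantic term as `alpha * lexical + (1 - alpha) * semantic`, that the lexical term is a Jaccard overlap of tokens, and that the text score is the mean edge weight. The names `jaccard`, `cosine` and `pav_coherence` are hypothetical.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two sentence vectors (0.0 for a zero vector)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def jaccard(a: Sequence[str], b: Sequence[str]) -> float:
    """Share of unique tokens common to both sentences (assumed lexical term)."""
    set_a, set_b = set(a), set(b)
    union = set_a | set_b
    return len(set_a & set_b) / len(union) if union else 0.0


def pav_coherence(
    tokens: List[List[str]],
    vectors: List[List[float]],
    alpha: float,
) -> float:
    """Mean weight of the edges from each sentence to the preceding one."""
    if len(tokens) < 2:
        return 0.0
    weights = []
    for i in range(1, len(tokens)):
        lexical = jaccard(tokens[i], tokens[i - 1])
        semantic = cosine(vectors[i], vectors[i - 1])
        # Assumed mixing rule: alpha weights the lexical component.
        weights.append(alpha * lexical + (1.0 - alpha) * semantic)
    return sum(weights) / len(weights)


# Toy input: three tokenized sentences with two-dimensional sentence vectors.
example_tokens = [["a", "b"], ["b", "c"], ["c", "d"]]
example_vectors = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
score = pav_coherence(example_tokens, example_vectors, alpha=0.5)
```

In practice the sentence vectors would come from pre-trained Polish word embeddings and the tokens from a lemmatizer; both are left out here to keep the sketch self-contained.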

2.2.2. LSTM-Based Coherence Estimation Model

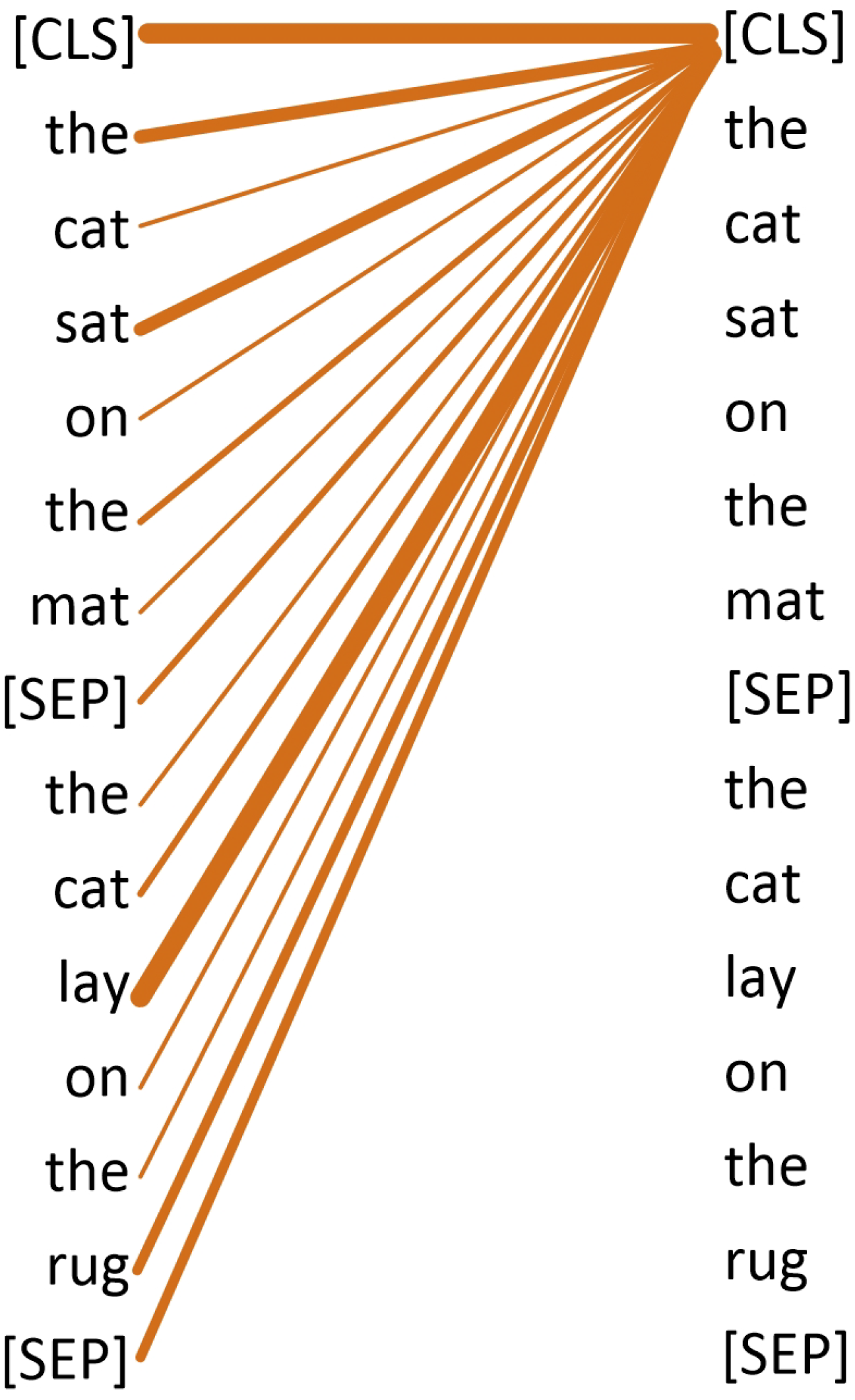

2.2.3. Using the BERT Model

- Embedding that converts the sequences of input token IDs and attention masks into a dense representation.

- Encoder that consists of 12 attention-based components.

- Pooler that produces the final vector representation of the input text.
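The three components listed above map onto the `BertModel` class of the Hugging Face `transformers` library. The following is a minimal sketch, assuming PyTorch and `transformers` are installed, of how a frozen BERT encoder could feed a small coherence classification head. The class name `FrozenBertCoherenceClassifier`, the head layout and the tiny randomly initialized configuration are assumptions made so that the example runs without downloading weights; they are not the architecture used in the paper.

```python
import torch
from torch import nn
from transformers import BertConfig, BertModel


class FrozenBertCoherenceClassifier(nn.Module):
    """Hypothetical head on top of a BERT encoder with fixed parameters."""

    def __init__(self, bert: BertModel, hidden: int = 64) -> None:
        super().__init__()
        self.bert = bert
        # Freeze embedding, encoder and pooler so only the head is trained.
        for param in self.bert.parameters():
            param.requires_grad = False
        self.head = nn.Sequential(
            nn.Linear(bert.config.hidden_size, hidden),
            nn.ReLU(),
            nn.Linear(hidden, 1),
        )

    def forward(self, input_ids: torch.Tensor, attention_mask: torch.Tensor) -> torch.Tensor:
        with torch.no_grad():
            outputs = self.bert(input_ids=input_ids, attention_mask=attention_mask)
        # pooler_output is the pooled vector for the whole input sequence.
        logits = self.head(outputs.pooler_output)
        return torch.sigmoid(logits).squeeze(-1)


# Tiny random configuration (assumption) so the sketch needs no download.
# The Polish checkpoint cited in the references would instead be loaded with
# BertModel.from_pretrained("dkleczek/bert-base-polish-uncased-v1").
config = BertConfig(
    vocab_size=100,
    hidden_size=32,
    num_hidden_layers=2,
    num_attention_heads=2,
    intermediate_size=64,
)
model = FrozenBertCoherenceClassifier(BertModel(config))

input_ids = torch.randint(0, 100, (3, 8))   # 3 dummy texts, 8 tokens each
attention_mask = torch.ones_like(input_ids)
probs = model(input_ids, attention_mask)    # one coherence probability per text
```

Only the parameters of `head` receive gradients here; making the BERT parameters trainable as well would correspond to full fine-tuning.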

3. Experiments and Results

3.1. Models Preparation and Training

3.2. Results

4. Conclusions

- It is advisable to combine LSTM layers with convolutional operations when designing a neural network for the coherence evaluation of texts. LSTM cells build vector representations of sentences or entire texts that reflect the position of each item. Convolutional operations, in turn, help extract the main semantic components of an input text, which may represent the topic of the input sequence.

- Among the semantic similarity graph approaches, the PAV approach achieved the highest metrics. Thus, for a Polish corpus, it is advisable to analyze a text sentence by sentence, following the order of the sentences within the text.

- The accuracy of the PAV approach on both typical tasks peaks at an intermediate value of the regulative parameter (α = 0.6 in the reported results). This may indicate that both lexical and semantic components of a text can be taken into account when estimating the coherence of Polish texts.

- The LSTM-based neural network achieved the highest metric values among all models. Thus, although Polish belongs to the category of synthetic languages, word order can also be taken into account during the coherence evaluation of a text corpus.

- The results obtained for the BERT-based model with fixed BERT parameters may indicate that such a Transformer-based architecture can be used to represent different parts of a Polish text for subsequent coherence classification layers. The accuracy of this model may be increased by fine-tuning all parameters while training the model to estimate the coherence of Polish texts.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Pishdad, L.; Fancellu, F.; Zhang, R.; Fazly, A. How coherent are neural models of coherence? In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 6126–6138.

- Xiong, H.; He, Z.; Wu, H.; Wang, H. Modeling coherence for discourse neural machine translation. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 7338–7345.

- Pogorilyy, S.; Kramov, A. Coreference Resolution Method Using a Convolutional Neural Network. In Proceedings of the 2019 IEEE International Conference on Advanced Trends in Information Theory (ATIT), Kyiv, Ukraine, 18–20 December 2019; pp. 397–401.

- Kramov, A. Evaluating text coherence based on the graph of the consistency of phrases to identify symptoms of schizophrenia. Data Rec. Storage Process. 2020, 22, 62–71.

- Barzilay, R.; Lapata, M. Modeling Local Coherence: An Entity-Based Approach. Comput. Linguist. 2008, 34, 1–34.

- Walker, M.A.; Joshi, A.K.; Prince, E.F. Centering Theory in Discourse; Clarendon: London, UK, 1998.

- Guinaudeau, C.; Strube, M. Graph-based Local Coherence Modeling. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Sofia, Bulgaria, 4–9 August 2013; Association for Computational Linguistics: Sofia, Bulgaria, 2013; pp. 93–103.

- Zhang, M.; Feng, V.W.; Qin, B.; Hirst, G.; Liu, T.; Huang, J. Encoding World Knowledge in the Evaluation of Local Coherence. In Proceedings of the 2015 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Denver, CO, USA, 31 May–5 June 2015; Association for Computational Linguistics: Denver, CO, USA, 2015; pp. 1087–1096.

- Feng, V.W.; Lin, Z.; Hirst, G. The Impact of Deep Hierarchical Discourse Structures in the Evaluation of Text Coherence. In Proceedings of the COLING 2014, the 25th International Conference on Computational Linguistics: Technical Papers, Dublin, Ireland, 23–29 August 2014; Dublin City University and Association for Computational Linguistics: Dublin, Ireland, 2014; pp. 940–949.

- Mann, W.C.; Thompson, S.A. Rhetorical Structure Theory: Toward a functional theory of text organization. Text Interdiscip. J. Study Discourse 1988, 8, 243–281.

- Le, Q.; Mikolov, T. Distributed Representations of Sentences and Documents. In Proceedings of the 31st International Conference on Machine Learning, Volume 32, JMLR.org, ICML’14, Beijing, China, 22–24 June 2014; pp. II-1188–II-1196.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; Association for Computational Linguistics: Minneapolis, MN, USA, 2019; pp. 4171–4186.

- Peters, M.; Neumann, M.; Iyyer, M.; Gardner, M.; Clark, C.; Lee, K.; Zettlemoyer, L. Deep Contextualized Word Representations. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers), New Orleans, LA, USA, 1–6 June 2018; Association for Computational Linguistics: New Orleans, LA, USA, 2018; pp. 2227–2237.

- Pennington, J.; Socher, R.; Manning, C. GloVe: Global Vectors for Word Representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; Association for Computational Linguistics: Doha, Qatar, 2014; pp. 1532–1543.

- Li, J.; Hovy, E. A Model of Coherence Based on Distributed Sentence Representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; Association for Computational Linguistics: Doha, Qatar, 2014; pp. 2039–2048.

- Lai, A.; Tetreault, J. Discourse Coherence in the Wild: A Dataset, Evaluation and Methods. In Proceedings of the 19th Annual SIGdial Meeting on Discourse and Dialogue, Melbourne, Australia, 12–14 June 2018; Association for Computational Linguistics: Melbourne, Australia, 2018; pp. 214–223.

- Mesgar, M.; Strube, M. A Neural Local Coherence Model for Text Quality Assessment. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; Association for Computational Linguistics: Brussels, Belgium, 2018; pp. 4328–4339.

- Moon, H.C.; Mohiuddin, T.; Joty, S.; Xu, C. A Unified Neural Coherence Model. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; Association for Computational Linguistics: Hong Kong, China, 2019; pp. 2262–2272.

- Pogorilyy, S.D.; Kramov, A.A. Assessment of Text Coherence by Constructing the Graph of Semantic, Lexical, and Grammatical Consistancy of Phrases of Sentences. Cybern. Syst. Anal. 2020, 56, 893–899.

- Cui, B.; Li, Y.; Zhang, Y.; Zhang, Z. Text Coherence Analysis Based on Deep Neural Network. In Proceedings of the 2017 ACM on Conference on Information and Knowledge Management, Singapore, 6–10 November 2017; Association for Computing Machinery: New York, NY, USA, 2017. CIKM ’17. pp. 2027–2030.

- How to Make Your Copy More Readable: Make Sentences Shorter—PRsay. Available online: http://comprehension.prsa.org/?p=217 (accessed on 11 March 2021).

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All You Need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017. NIPS’17. pp. 6000–6010.

- Pawlowski, A. ChronoPress: Chronological Corpus of Polish Press Texts (1945–1962); CLARIN2017 Book of Abstracts; CLARIN ERIC: Budapest, Hungary, 2017.

- Fares, M.; Kutuzov, A.; Oepen, S.; Velldal, E. Word vectors, reuse, and replicability: Towards a community repository of large-text resources. In Proceedings of the 21st Nordic Conference on Computational Linguistics, Gothenburg, Sweden, 22–24 May 2017; Association for Computational Linguistics: Gothenburg, Sweden, 2017; pp. 271–276.

- Woliński, M. Morfeusz Reloaded. In Proceedings of the Ninth International Conference on Language Resources and Evaluation (LREC’14), Reykjavik, Iceland, 26–31 May 2014; European Language Resources Association (ELRA): Reykjavik, Iceland, 2014; pp. 1106–1111.

- Polbert—Polish BERT. Available online: https://huggingface.co/dkleczek/bert-base-polish-uncased-v1 (accessed on 11 March 2021).

- Polish Coherence Models—Google Colab Notebook. Available online: https://colab.research.google.com/drive/1OMbYKmy9fYVtKMRTE-byQrivqXmooH8r?usp=sharing (accessed on 11 March 2021).

| Model | Parameter Value | Sentence Ordering | Insertion Task |
|---|---|---|---|
| SSG (PAV), parameter α | 0.0 | 0.786 | 0.105 |
| | 0.1 | 0.788 | 0.109 |
| | 0.2 | 0.794 | 0.107 |
| | 0.3 | 0.792 | 0.131 |
| | 0.4 | 0.790 | 0.133 |
| | 0.5 | 0.796 | 0.131 |
| | 0.6 | 0.807 | 0.138 |
| | 0.7 | 0.799 | 0.131 |
| | 0.8 | 0.786 | 0.116 |
| | 0.9 | 0.757 | 0.112 |
| | 1.0 | 0.740 | 0.098 |
| SSG (SSV) | - | 0.760 | 0.083 |
| SSG (MSV), parameter θ | 0.0 | 0.759 | 0.107 |
| | 0.1 | 0.718 | 0.092 |
| | 0.2 | 0.696 | 0.077 |
| | 0.3 | 0.716 | 0.072 |
| | 0.4 | 0.659 | 0.105 |
| | 0.5 | 0.731 | 0.105 |
| | 0.6 | 0.665 | 0.094 |
| | 0.7 | 0.584 | 0.077 |
| | 0.8 | 0.525 | 0.055 |
| | 0.9 | 0.055 | 0.002 |
| LSTM | - | 0.875 | 0.168 |
| BERT | - | 0.835 | 0.143 |
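The two task columns in the table above can be read with the usual definitions from the coherence literature in mind. The sketch below assumes those common definitions: in sentence ordering, a model succeeds when it scores the original text strictly higher than a shuffled copy; in insertion, it succeeds when reinserting a removed sentence at its original position gives the best score. The function names, the number of shuffles and the toy scorer are hypothetical, and the exact protocol behind the reported figures may differ.

```python
import random
from typing import Callable, List, Sequence

Scorer = Callable[[Sequence[str]], float]


def ordering_accuracy(
    texts: List[List[str]], scorer: Scorer, n_shuffles: int = 20, seed: int = 0
) -> float:
    """Share of (original, shuffled) pairs where the original scores higher."""
    rng = random.Random(seed)
    wins = total = 0
    for sentences in texts:
        original = list(sentences)
        for _ in range(n_shuffles):
            shuffled = original[:]
            rng.shuffle(shuffled)
            if shuffled == original:
                continue  # an identical order is not a valid negative example
            total += 1
            wins += scorer(original) > scorer(shuffled)
    return wins / total if total else 0.0


def insertion_accuracy(texts: List[List[str]], scorer: Scorer) -> float:
    """Share of sentences that are put back at their original position."""
    hits = total = 0
    for sentences in texts:
        original = list(sentences)
        for idx, sentence in enumerate(original):
            rest = original[:idx] + original[idx + 1:]
            # Try the removed sentence at every possible position.
            scores = [
                scorer(rest[:pos] + [sentence] + rest[pos:])
                for pos in range(len(original))
            ]
            best = max(range(len(scores)), key=scores.__getitem__)
            hits += best == idx
            total += 1
    return hits / total if total else 0.0


def toy_scorer(sentences: Sequence[str]) -> float:
    """Toy coherence score: counts adjacent pairs in ascending numeric order."""
    return float(sum(int(b) == int(a) + 1 for a, b in zip(sentences, sentences[1:])))


toy_texts = [["1", "2", "3", "4"]]
ordering = ordering_accuracy(toy_texts, toy_scorer)
insertion = insertion_accuracy(toy_texts, toy_scorer)
```

Any of the evaluated models could be plugged in as `scorer`. Insertion is the stricter task because a single best position must be found among all candidates, which is consistent with the much lower values in that column.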

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Telenyk, S.; Pogorilyy, S.; Kramov, A. Evaluation of the Coherence of Polish Texts Using Neural Network Models. Appl. Sci. 2021, 11, 3210. https://doi.org/10.3390/app11073210
