1. Introduction

The timely inspection of parts and components along the production line is critical to ensure that each part adheres to strict quality criteria necessary to guarantee the safety of the product’s end-user, particularly in sectors such as aerospace, naval and automotive. Regarding the latter, the adoption of lighter and robust materials has made it so that some parts cannot be welded, with structural adhesives playing an important role as an alternative that contributes to the reduction of noise, vibrations and infiltrations.

However, the inspection of parts bonded with this method typically involves destructive tests that require separating the bonded parts in order to analyze the continuity, spread and consistency of the adhesive. This makes such inspections both time-consuming and costly in terms of resources, materials and waste. Moreover, common defects like discontinuities and blobs are impossible to correct if not detected prior to the bonding process, since parts undergo considerable mechanical and structural changes.

While recent advances in Artificial Intelligence (AI), particularly in deep learning, and the Industry 4.0 paradigm have enabled significant progress towards the automation of in-line quality inspections across varied domains [1,2,3,4], the destructive nature and relatively low frequency of these tests mean that data availability remains a major challenge for the industrial viability and adoption of such solutions. Even in cases for which defect data can be made available, it is difficult to ensure that a balanced number of samples of each defect type is included.

Consequently, one of the main barriers in modern deep learning-based approaches concerning quality control is the vast amount of training data necessary to develop these solutions. Large datasets require considerable human effort to generate from scratch, whilst generally being costly, time-consuming and error-prone. To mitigate this issue, data augmentation with synthetic data is emerging as a possible solution to decrease the burden of data collection and annotation [5].

Recently, a systematic review [6] of the current Industrial AI landscape has shown that the usage of synthetic data with the purpose of artificially augmenting datasets in industrial applications was present in around 20% of publications included in the study. With the important role that realistic and high-resolution synthetic images can play in industrial computer vision tasks, Generative Adversarial Networks (GANs) [7] are becoming more and more attractive as a way to reliably generate additional samples in the manufacturing domain.

While generative methods have been prevalent in recent literature as a way to generate novel samples from high-dimensional data distributions (for instance in the case of images), previous methods such as those based on variational autoencoders [8] tended to produce blurry results due to restrictions in the model. GANs are generally capable of producing sharper images [9], with recent advances making it possible to generate synthetic samples of increasingly high quality [10,11].

Some examples of GANs in the manufacturing domain can be found in the literature, addressing applications in which the data are scarce and difficult to obtain [12,13,14]. Other relevant examples include efforts addressing cases in which labeling the entire dataset may be unfeasible, with semi-supervised learning methods showing promise in this direction [15,16,17].

While a large amount of real training data is still necessary in order to train GAN models to generate samples with sufficient quality, researchers at NVIDIA have recently proposed StyleGAN2-ADA [18], which makes considerable progress towards the successful training of GANs with limited data. This was made possible by employing an Adaptive Discriminator Augmentation (ADA) mechanism that stabilizes training in limited data regimes, thus avoiding typical issues of overfitting the discriminator.

Based on this, we propose a novel approach leveraging these recent advances in GAN training to generate synthetic data as a way to augment scarce training sets in manufacturing quality control tasks, specifically regarding structural adhesive applications. Additionally, we demonstrate that such an approach can improve the viability and performance of automated inspections based on deep learning. We carried out preliminary testing using a pilot application cell to generate real images of defective beads, creating a dataset to train this generative model for data augmentation. Using the augmented training set, we trained a state-of-the-art object detection model to automate the quality inspection task, then compared its performance with the same model trained only on a real training set of limited size.

This directly addresses the future research direction delineated in previous work [19], which performed data augmentation using simulation. Shifting to a GAN-based method facilitates the employment of synthetic data generation without requiring the specific modeling of a simulation that closely resembles the real application. This is especially important in cases for which modeling may be too complex or unfeasible.

The remainder of this article is organized as follows: Section 2 describes the materials and methods adopted in this work, providing sufficient detail and references for its replication. Section 3 summarizes the preliminary results along with a brief interpretation, followed by a more thorough discussion of their implications and directions for future work on this topic in Section 4. Finally, Section 5 summarizes the conclusions and provides some closing remarks.

2. Materials and Methods

This section briefly addresses the methods necessary for the replication of the proposed approach. Firstly, the generation of the real dataset and its characterization are discussed. Then, the generation of the synthetic adhesive images using StyleGAN2-ADA is presented. Finally, the implementation, training and validation steps for the YOLOv4-Tiny object detection model are discussed. The model weights, configurations and data encompassed in this study are made publicly available at https://github.com/RicardoSPeres/GAN_Synth_Adhesive (accessed on 30 March 2021).

2.1. Dataset Characterization

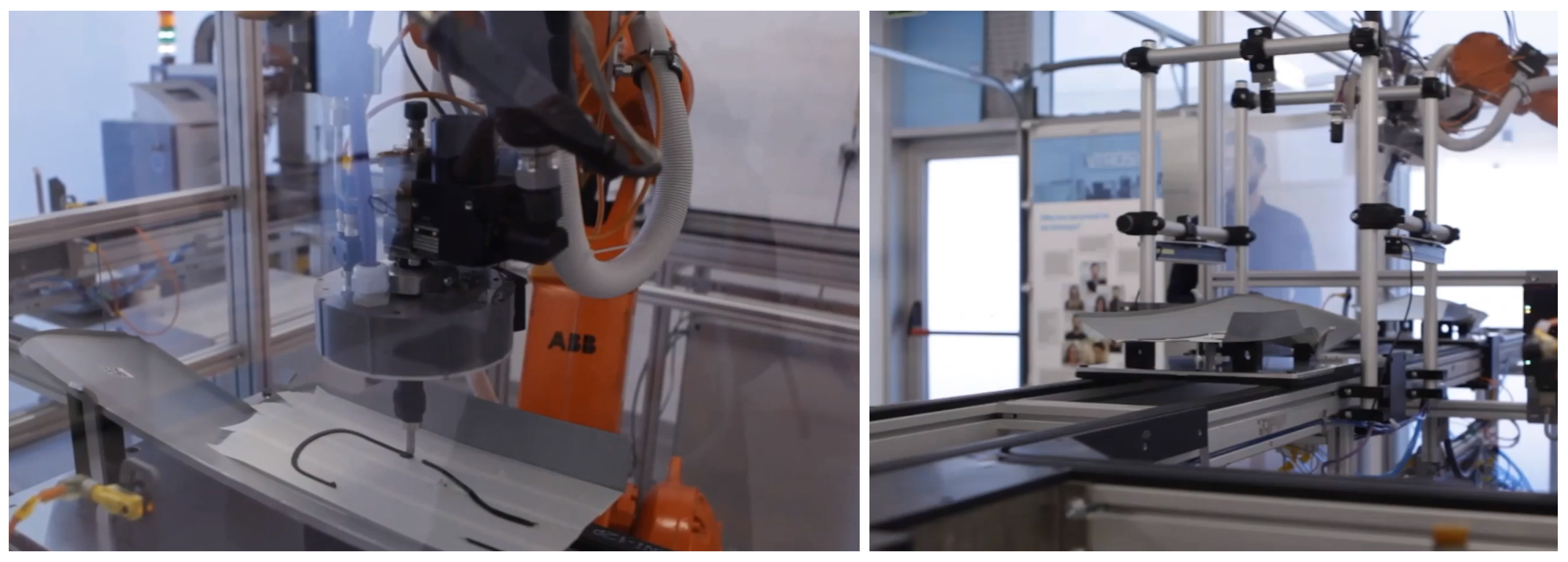

The dataset considered in this study consists of images collected from a structural adhesive application cell located at the facilities of Introsys S.A. in Castelo Branco, Portugal, a company specializing in industrial automation (particularly in the automotive sector) that has operated in the international market since 2004. The cell encompasses two stations, one for the adhesive application carried out by an ABB IRB 2400 robotic arm, and the other for the visual quality inspection with two Teledyne cameras, as depicted in Figure 1. Additional descriptions of the cell are available in [19,20]. For this study, two types of defects are considered based on the requirements of the use case at hand, namely discontinuities and blobs (excess of material). Still, the approach can easily accommodate additional defect types, so long as they are identified during the labeling process. To generate the original dataset, the controller was manually reconfigured by an operator for each product to produce different defects of these types at varying positions during the adhesive application.

For the training set of 143 real images used for the GAN model, the original images were cropped to 1024 × 1024 pixels, centered on the adhesive bead, to match the input format of StyleGAN2-ADA, which requires square images with power-of-two dimensions. The resulting model was then used to generate 536 synthetic images of defective beads to augment the original dataset.
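The cropping step can be illustrated with a minimal sketch. It assumes the bead center is already known in pixel coordinates (how it was located is not described here); the helper names and the frame size in the usage note are hypothetical and only serve to show the geometry.

```python
def centered_crop_box(img_w, img_h, cx, cy, size=1024):
    """Return (left, top, right, bottom) of a size x size crop centred on (cx, cy).

    The box is shifted where necessary so that it stays inside the image.
    """
    if size > img_w or size > img_h:
        raise ValueError("crop size exceeds image dimensions")
    # Clamp the top-left corner to the valid range [0, dimension - size].
    left = min(max(cx - size // 2, 0), img_w - size)
    top = min(max(cy - size // 2, 0), img_h - size)
    return left, top, left + size, top + size


def is_power_of_two(n):
    """True for 1, 2, 4, 8, ... (square power-of-two sides are required)."""
    return n > 0 and (n & (n - 1)) == 0
```

For a hypothetical 2448 × 2048 frame with the bead centered at (1224, 1024), `centered_crop_box(2448, 2048, 1224, 1024)` gives `(712, 512, 1736, 1536)`, a box that can be passed to any image library's crop routine.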

Regarding the object detection model to automate quality inspection, 116 real images from the original dataset were selected (removing those that had no defects of the contemplated types), with this set being split 50/50 into training and validation sets. The initial training set of 58 images was then augmented with the synthetic set, resulting in a training set of 594 images at a resolution of 1024 × 1024, which were manually annotated using LabelImg (https://github.com/tzutalin/labelImg, accessed on 9 March 2021). The real training set is imbalanced, consisting of 75 instances of discontinuity defects and 34 of excess defects, since the former occur more frequently in the present use case. The augmentation addresses the class imbalance, resulting in 582 instances of discontinuities and 580 of excess defects.
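The figures above can be checked with a few lines of arithmetic. The sketch below only restates the reported counts; the constant and function names are illustrative, and it assumes that the reported instance counts refer to the real training set of 58 images and to the augmented set of 594 images, respectively.

```python
REAL_TRAIN_IMAGES = 58
SYNTHETIC_IMAGES = 536

# Annotated defect instances per class, as reported in the text.
INSTANCES = {
    "real": {"discontinuity": 75, "excess": 34},
    "augmented": {"discontinuity": 582, "excess": 580},
}


def augmented_size(real_images, synthetic_images):
    """Total number of training images after augmentation."""
    return real_images + synthetic_images


def minority_share(counts):
    """Fraction of all instances that belong to the least frequent class."""
    return min(counts.values()) / sum(counts.values())


def synthetic_contribution(real, augmented):
    """Instances per class added by the synthetic images (under the assumption above)."""
    return {cls: augmented[cls] - real[cls] for cls in real}
```

Under these assumptions, the minority class rises from about 31% of the annotated instances in the real set to about 50% in the augmented set, with the synthetic images contributing 507 discontinuity and 546 excess instances.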

2.2. Generating Synthetic Adhesive Images

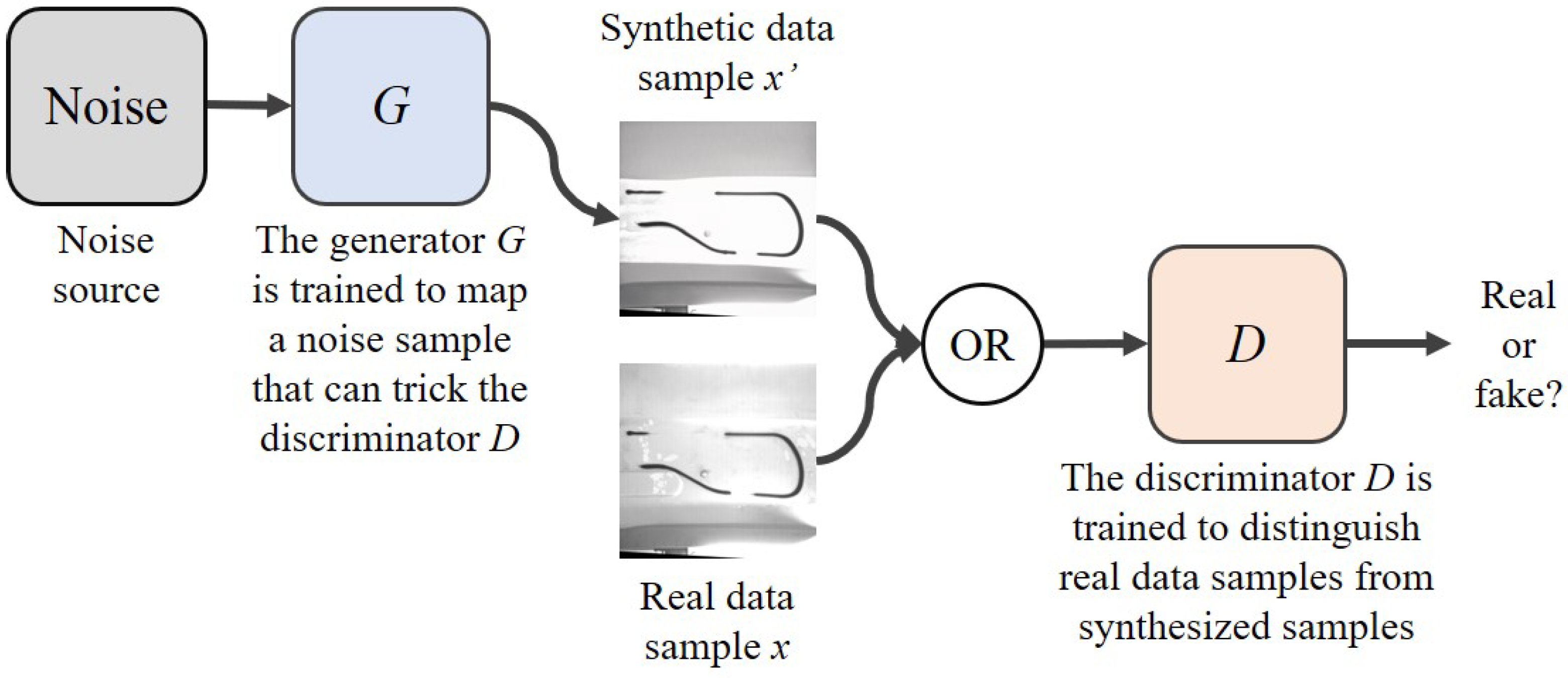

GANs are an emerging trend in synthetic image generation that is founded on the idea of training a pair of neural networks in competition with one another [21]. A general depiction of a basic GAN structure is provided in Figure 2.

During this simultaneous training process, the error signal to the discriminator is derived from the ground truth of whether a sample was real or synthetic. Since the generator has no direct access to real images, the same error signal is propagated through the discriminator to train the generator, enabling it to generate synthetic images with improved quality.
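For reference, this competition is commonly written as the minimax objective of the original GAN formulation. The notation is introduced here for illustration and does not appear elsewhere in this article: $G$ is the generator, $D$ the discriminator, $x$ a real image drawn from the data distribution $p_{\text{data}}$, and $z$ a latent vector drawn from a prior $p_z$. Practical implementations, possibly including the one adopted in this work, often use variants of this loss.

$$
\min_{G} \max_{D} \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The first term rewards the discriminator for recognizing real samples, while the second rewards it for rejecting generated ones; the generator only influences the second term, which is why its training signal arrives via the discriminator.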

However, it remains challenging to collect datasets that are large enough to meet the requirements to train modern high-resolution GANs. This is particularly true in domains such as manufacturing quality control, where manufacturers generally optimize against the occurrence of defects. In this light, the recent introduction of StyleGAN2-ADA has made it possible to use much smaller datasets than before for this purpose.

Hence, for the generation of synthetic images, the base implementation of StyleGAN2-ADA available at https://github.com/NVlabs/stylegan2-ada (accessed on 9 March 2021) was adopted. To speed up convergence and reduce data requirements, transfer learning was used to start from a model pre-trained on the FFHQ 1024 × 1024 dataset [10], as opposed to random initialization. As stated in [18], transfer learning provides significantly better results than from-scratch training, with its success apparently depending mainly on the diversity of the source dataset, rather than on the similarity between subjects.

Since datasets are stored as multi-resolution TFRecords, the first step was to convert the structural adhesive dataset to the correct format. Then, training was carried out on a single NVIDIA Tesla V100 GPU, taking approximately eight hours. For inference on the same setup, synthetic images could be generated at an average rate of 105 images per minute, using different seeds and varying truncation values.
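The role of the seed and the truncation value can be sketched as follows. This is a toy illustration and not the StyleGAN2-ADA code: the mapping and synthesis networks are omitted, the sampled vector merely stands in for an intermediate latent, and the function names and the dimension of 512 are assumptions made for this example. The truncation step itself follows the usual formulation, in which a latent is pulled towards the average latent by a factor psi.

```python
import random


def sample_latent(seed, dim=512):
    """Deterministically sample a Gaussian vector from a seed (stand-in latent)."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def truncate(w, w_avg, psi):
    """Truncation trick: interpolate between the average latent and w.

    psi = 1.0 leaves w unchanged (more variety); psi = 0.0 collapses every
    sample onto the average latent (less variety, typically fewer artifacts).
    """
    return [a + psi * (x - a) for x, a in zip(w, w_avg)]


# Different seeds give different samples; psi controls how far each one
# is allowed to stray from the average.
w_avg = [0.0] * 512  # placeholder for the average latent tracked during training
latents = {seed: truncate(sample_latent(seed), w_avg, psi=0.7) for seed in range(4)}
```

At the reported rate of 105 images per minute, generating the 536 synthetic images used in this work corresponds to roughly five minutes of inference.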

2.3. Object Detection with Synthetic Data

Concerning object detection models, Scaled-YOLOv4 [22] has recently achieved state-of-the-art performance on the Microsoft Common Objects in COntext (MS COCO) dataset [23]. However, to enable a quicker iteration of experiments, the YOLOv4-Tiny model [24] was chosen for the validation of this approach due to its shorter training times. Training was also carried out on a single NVIDIA Tesla V100 GPU using Darknet [25], an open source neural network framework written in C and CUDA supporting CPU and GPU computation. The base implementation is available at https://github.com/AlexeyAB/darknet (accessed on 9 March 2021).

To assess the impact of the proposed augmentation approach, two distinct models were trained over 6000 iterations each, one using only the real training set of 58 structural adhesive bead images, and the other using the full augmented set of 594 images. Afterwards, the mean Average Precision (mAP) of each model was calculated at different Intersection over Union (IoU) thresholds, first for the validation set of 58 real images, then for a second holdout set of 19 images generated in a separate experiment. This metric was chosen as it is common in most modern object detection tasks [23,26]. The holdout set included images with defects occurring in portions of the bead that were not common (or present at all) in the original dataset, representing a more difficult validation scenario.
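As a brief illustration of the quantities involved, the sketch below computes the IoU between two axis-aligned boxes, decides whether a detection counts as a true positive at a given threshold, and averages per-class AP values into an mAP. It is not the evaluation code used by Darknet; the function names and the `(x1, y1, x2, y2)` box convention are assumptions made for this example.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    # Width and height of the overlap are clamped at zero for disjoint boxes.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, gt_box, threshold=0.5):
    """A detection matches a ground-truth box if their IoU reaches the threshold."""
    return iou(pred_box, gt_box) >= threshold


def mean_average_precision(ap_per_class):
    """mAP is the unweighted mean of the per-class Average Precision values."""
    return sum(ap_per_class.values()) / len(ap_per_class)
```

Raising the IoU threshold makes the matching criterion stricter, which is why reporting mAP at several thresholds indicates how well the predicted boxes are localized and not only whether defects are found.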

3. Preliminary Results

This section provides a brief description of the preliminary experimental results, both for the synthetic data generation and the object detection, along with some interpretations of their implication in the context of this work.

3.1. Synthetic Image Generation

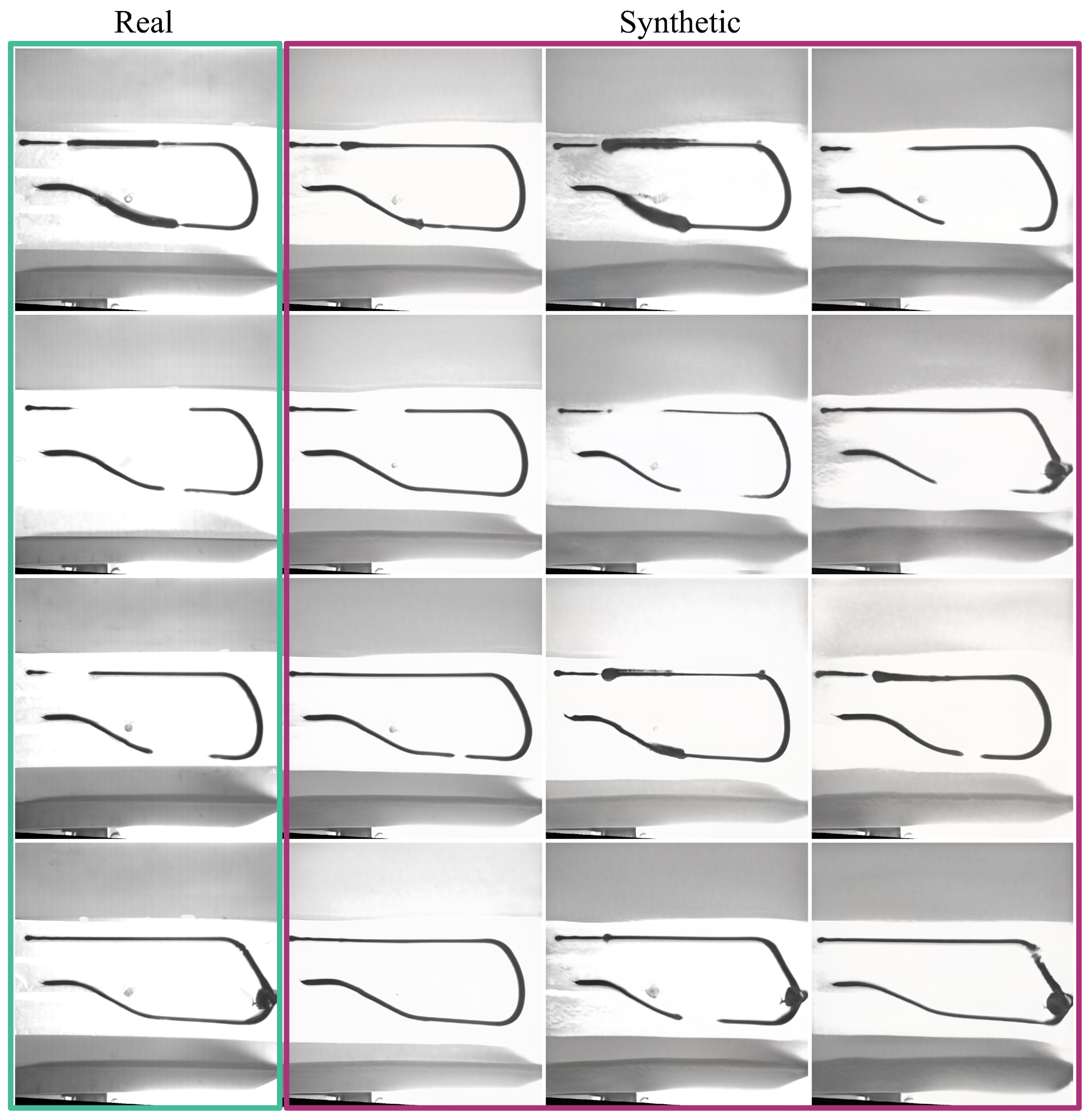

Regarding the generation of synthetic structural adhesive defects, different seed and truncation values were used to generate a variety of images, with some examples shown in Figure 3, where the first column presents real images, while all others are generated by the trained GAN.

It can be observed that not only is the model capable of generating realistic images of different beads, but also that varied defects can be generated along different segments of the bead, both for discontinuity and blob defects.

3.2. Automated Defect Detection Using Deep Learning

Following the method specified in Section 2.3, one of the YOLOv4-Tiny models was trained on the real training set and the other on the augmented one, as described in Section 2.1. To further facilitate the comparison, a third model was trained only on the synthetic dataset, with a fourth model being trained on a dataset augmented with a previous alternative approach based on simulation [19]. A summary of the results from this process is provided in Table 1.

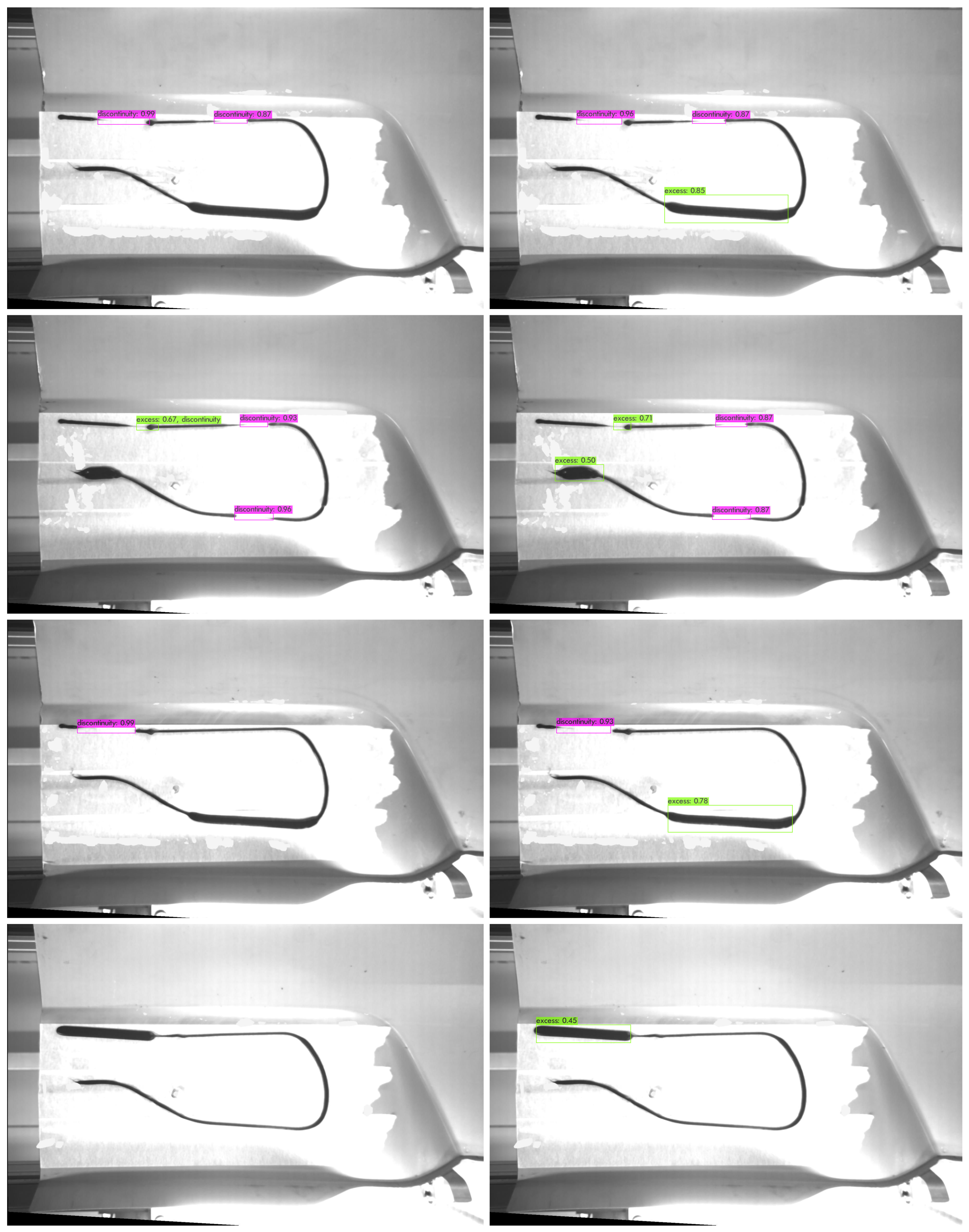

In both cases, the model trained on the training set augmented using the proposed approach attained superior results. Furthermore, the impact of the augmentation becomes even more evident when testing the model with the holdout set. While the validation set contains images with defects that are similar to those present in the real training set, the ones held out are considerably different, for instance with excess defects occurring in parts of the bead that were previously unseen (in both GAN and object detector training sets). Several examples are showcased in Figure 4, in which the first column (left) depicts results from the model trained only on real data, while the second column (right) shows the detections of the augmented model on the same image.

In addition to this, given the imbalanced nature of the original (real) dataset, it is interesting to take a closer look at the Average Precision (AP) for each class (discontinuity and excess) between the different training sets. These results are presented in Table 2.

From the analysis of Table 2, it becomes evident that there is a clear difference in AP between the classes for the model trained only on the real imbalanced dataset. More specifically, the difference amounts to ~10% in the validation set, and ~26% in the holdout set. The considerably larger gap in the latter can again be explained by the more pronounced difference between the defects observed in the holdout set and those of the initial dataset. Clear examples of this are provided in Figure 4, with excess defects occurring at varied parts of the bead.

In contrast, the performance of the model trained on the balanced augmented set is not only superior but also much more harmonized across classes, with a difference of only ~4.5% in the validation set and ~1.5% in the holdout set, clearly showcasing the impact of the augmentation in terms of model performance for this task. While the performance is marginally better for discontinuity defects using a previous approach based on simulated data (0.01 AP), the performance gain enabled by the novel approach for the minority class is much more significant (from 0.3001 to 0.5864 AP).

Lastly, the model trained only on synthetic data yielded the worst performance across all tests, which reveals how important a role the inclusion of real data still plays in this process, even with a limited number of samples.

4. Discussion

Recent advances in the field of GANs have made it possible to train these models with limited data while often achieving results close to previous state-of-the-art approaches. While generally a few thousand training images are still required for this purpose, we show that the usage of a much smaller dataset (under 200 images) still yielded results capable of greatly improving the performance of object detection models in the specific task of structural adhesive inspection, particularly in the case of imbalanced training data. Based on the original recommendation it can be hypothesized that results could be further improved by either increasing the size of the original dataset, or by employing for instance smaller partially overlapping crops.

These results suggest that the proposed approach has the potential to significantly mitigate the issues of data availability in such tasks, as it not only reduces the costs associated with the generation of images (energy, personnel and resources), but also the time required for this process. Naturally, it is generally unfeasible to dedicate a production line solely for the purpose of generating specific defects to create sufficiently large datasets. Even in cases where a pilot line can be used for this purpose, such as the case presented herein, considerable effort is still required to manually trigger and supervise the process, whilst adjusting the parameters for defects with sufficient variety to be generated. Hence, these results reveal the potential of the proposed approach to improve the viability and likelihood of adoption of such a solution at an industrial level.

Particularly regarding Table 1, the results from the holdout set suggest that the key contribution of the augmentation is not only the increased volume of data, but also the variety of the defects generated by the GAN. This increased variety enables the object detector to perform better in previously unseen settings. This conclusion is further corroborated by Table 2, which highlights the performance differences between training with the small imbalanced set of real images, and the larger balanced set of both real and synthetic images.

Nevertheless, some limitations remain to be tackled. On the one hand, the possibility to further increase the variety of the generated images with a finer degree of control on the type of defects generated would be tremendously useful. Besides increasing the size of the dataset, recent methods to explore the model’s latent space such as GANSpace [27] could be experimented with to achieve this, on top of fine-tuning or extending the base architecture. On the other hand, the effort required to annotate the synthetic dataset is still considerable, as in this case this process was performed manually. A possible direction to explore here could be the usage of semi-supervised approaches to automatically label newly generated images and include them in the training loop, hence reducing the annotation effort.
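One simple way such automatic labeling is often approached is confidence-based pseudo-labeling, sketched below. This was not implemented in the present work; the detection format (a dictionary with `label`, `confidence` and `box` keys), the function names and the threshold of 0.9 are all hypothetical and serve only to illustrate the idea.

```python
def select_pseudo_labels(detections, min_confidence=0.9):
    """Keep only detections confident enough to be reused as labels."""
    return [d for d in detections if d["confidence"] >= min_confidence]


def split_for_review(images, min_confidence=0.9):
    """Split generated images into auto-labeled ones and ones needing a human.

    `images` maps an image name to the detector's output for that image.
    An image is auto-labeled only if it has at least one detection and all
    of its detections pass the confidence threshold.
    """
    auto_labeled, needs_review = {}, {}
    for name, detections in images.items():
        kept = select_pseudo_labels(detections, min_confidence)
        if detections and len(kept) == len(detections):
            auto_labeled[name] = kept
        else:
            needs_review[name] = detections
    return auto_labeled, needs_review
```

In such a scheme, only the images routed to review would require manual annotation, while the auto-labeled ones could be fed back into the training set, although the threshold would need to be validated to avoid reinforcing the detector's own mistakes.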

In addition to this, future work will further explore the comparison of this approach with those based on simulated data (e.g., images generated in simulations such as those created in CoppeliaSim [28] or the Unity engine). Interesting points of focus can be the time to generate images and the degree of control. In this case, such approaches could prove to be a viable alternative if there are not sufficient real images to train the GAN at an initial stage, so long as the modeling effort required to generate synthetic images with sufficient quality is not too great. Thus, deriving a methodology or guidelines addressing which approach to adopt for different scenarios could prove useful.

5. Conclusions

In this article we propose a novel approach to address the challenge of data availability in the quality inspection of structural adhesive applications, leveraging recent advances regarding GAN training in limited data regimes.

We show that not only can realistic images of a variety of defects be generated quickly with this method, but also that the synthetic dataset can be used to augment scarce training sets for automated inspection solutions based on deep learning, greatly improving their performance on this task. We validate this in a real structural adhesive application line for automotive parts, with preliminary results showing considerable improvements in the mAP of state-of-the-art object detection models at different IoU thresholds when performing the automated defect detection.

Moreover, the proposed approach greatly reduces the costs of generating additional training data with sufficient samples of each defect. The process of generating datasets with sufficient quality and variety for training deep learning models is generally time-consuming and costly when using traditional methods, particularly in terms of energy consumption, material and personnel costs. By enabling this at a fraction of the cost and time, this approach greatly contributes to improving the viability of deep learning for such quality inspection tasks.