Providing Predictable Quality of Service in a Cloud-Based Web System

Abstract

1. Introduction

2. Related Work and Motivations

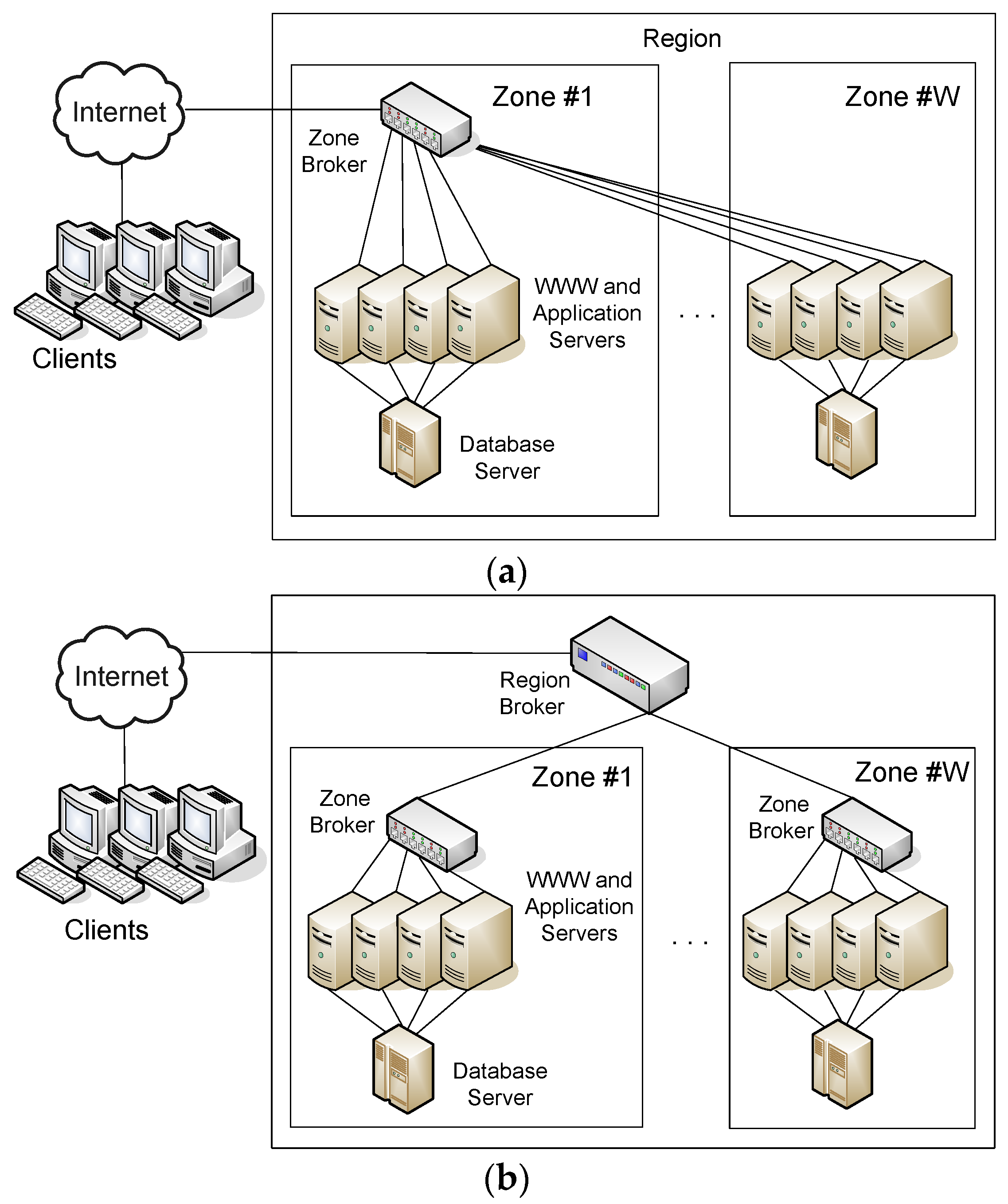

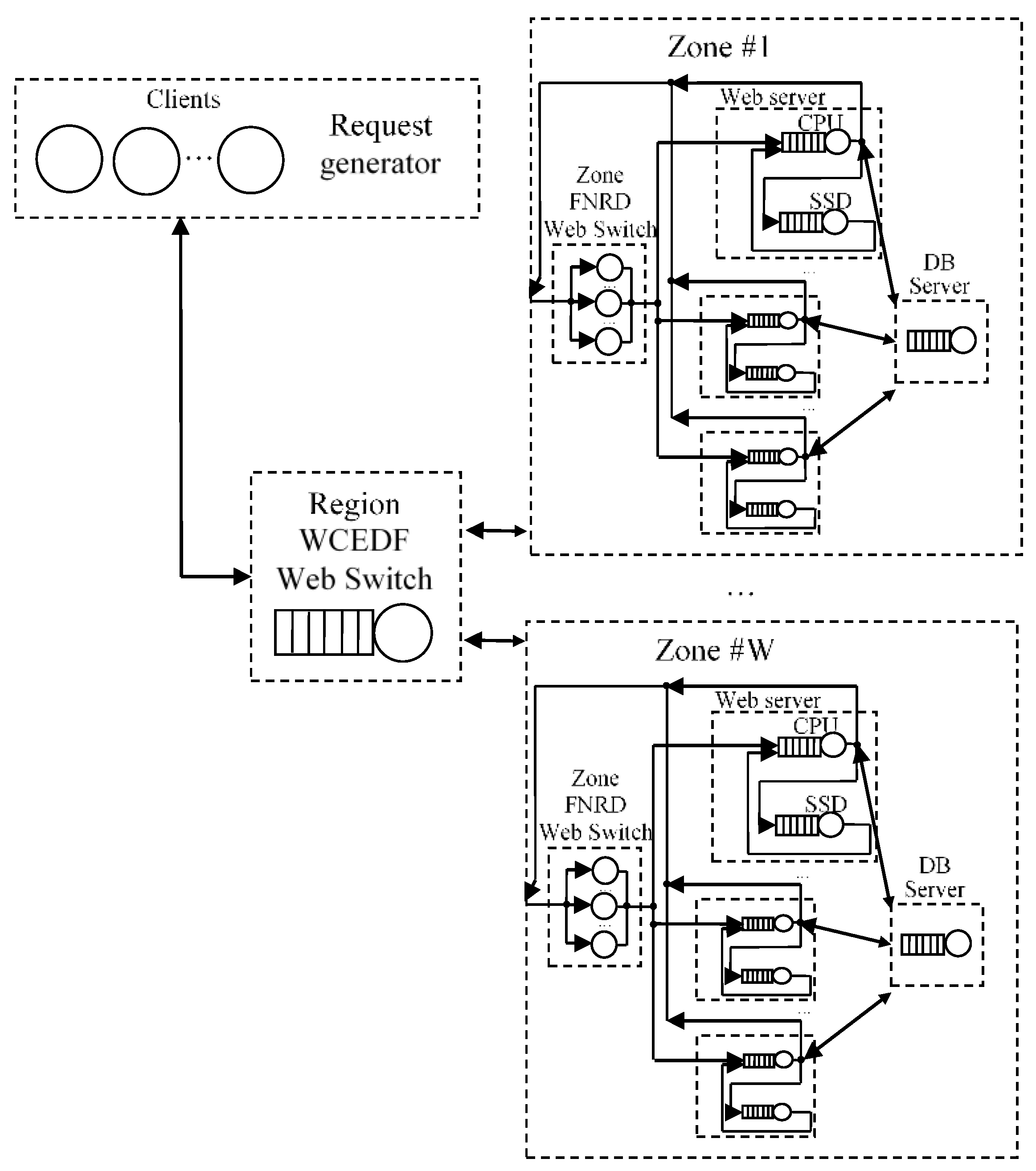

3. Web Cloud Earliest Deadline First Method and Web Switch

- WCEDF web switch distributing HTTP requests among availability zones in the region;

- FNRD web switches distributing HTTP requests among web servers in availability zones;

- Frontend WWW servers and backend database servers, together servicing HTTP requests.
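The WCEDF switch listed above takes its name from earliest-deadline-first (EDF) ordering. As a minimal sketch of generic EDF queueing only, and not the article's actual scheduling algorithm, the following Python class keeps requests ordered by deadline. The class name `EdfQueue`, its methods, and the rule that a deadline equals arrival time plus an allowed response time are illustrative assumptions, not details taken from the article.

```python
import heapq
import itertools


class EdfQueue:
    """Generic earliest-deadline-first queue (illustrative, hypothetical names)."""

    def __init__(self):
        self._heap = []
        # Tie-breaker so that requests with equal deadlines leave in FIFO order.
        self._counter = itertools.count()

    def push(self, request_id, arrival_time, max_response_time):
        # Assumption: deadline = arrival time + allowed response time.
        deadline = arrival_time + max_response_time
        heapq.heappush(self._heap, (deadline, next(self._counter), request_id))
        return deadline

    def pop(self):
        # Remove and return the request whose deadline is nearest.
        deadline, _, request_id = heapq.heappop(self._heap)
        return request_id, deadline

    def __len__(self):
        return len(self._heap)


queue = EdfQueue()
queue.push("a", arrival_time=0.0, max_response_time=2.0)
queue.push("b", arrival_time=0.5, max_response_time=0.5)
queue.push("c", arrival_time=1.0, max_response_time=3.0)
order = [queue.pop() for _ in range(3)]
```

A binary heap keeps both insertion and removal at logarithmic cost, which matters when the queue sits in front of the whole web system.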

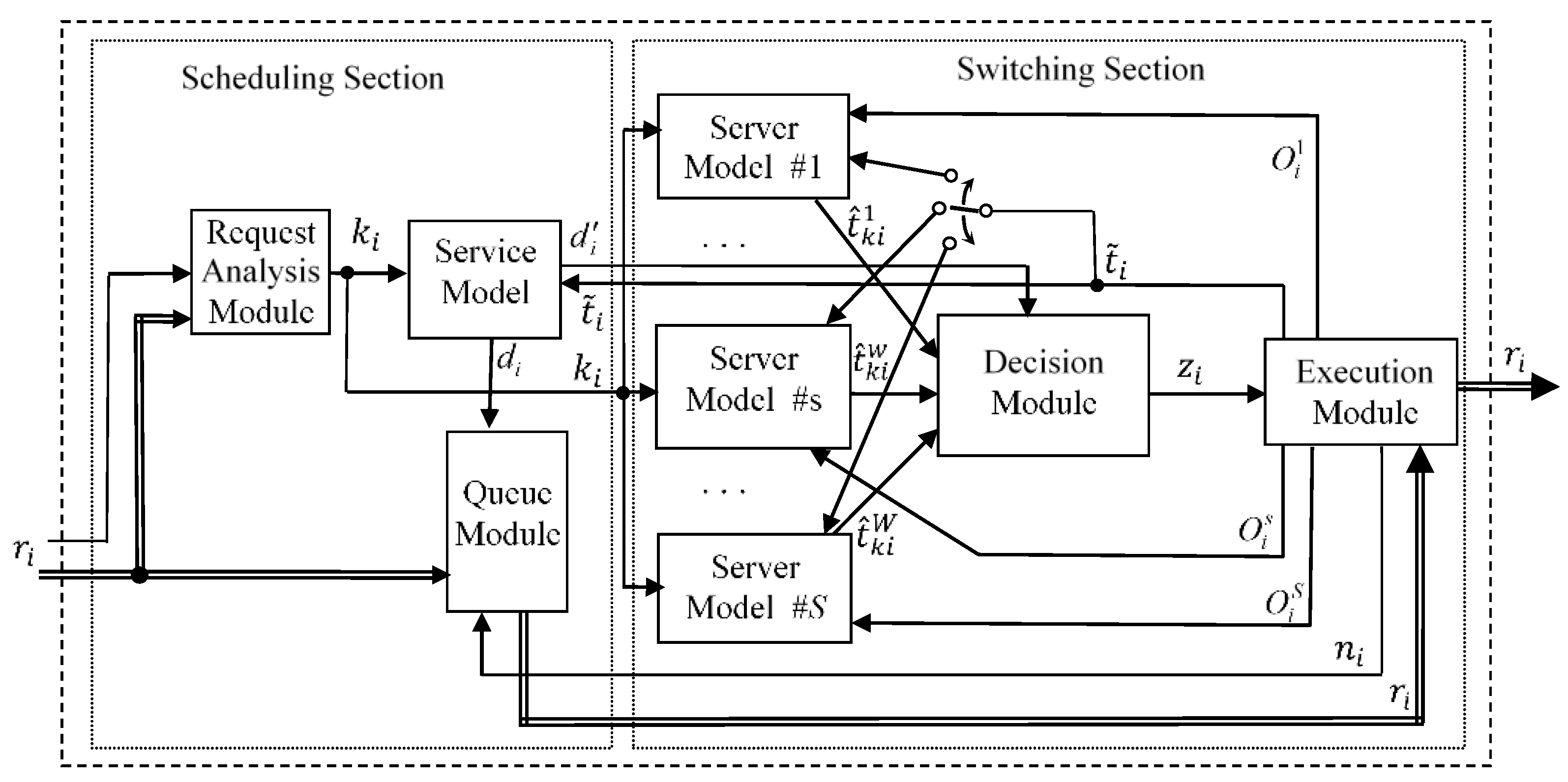

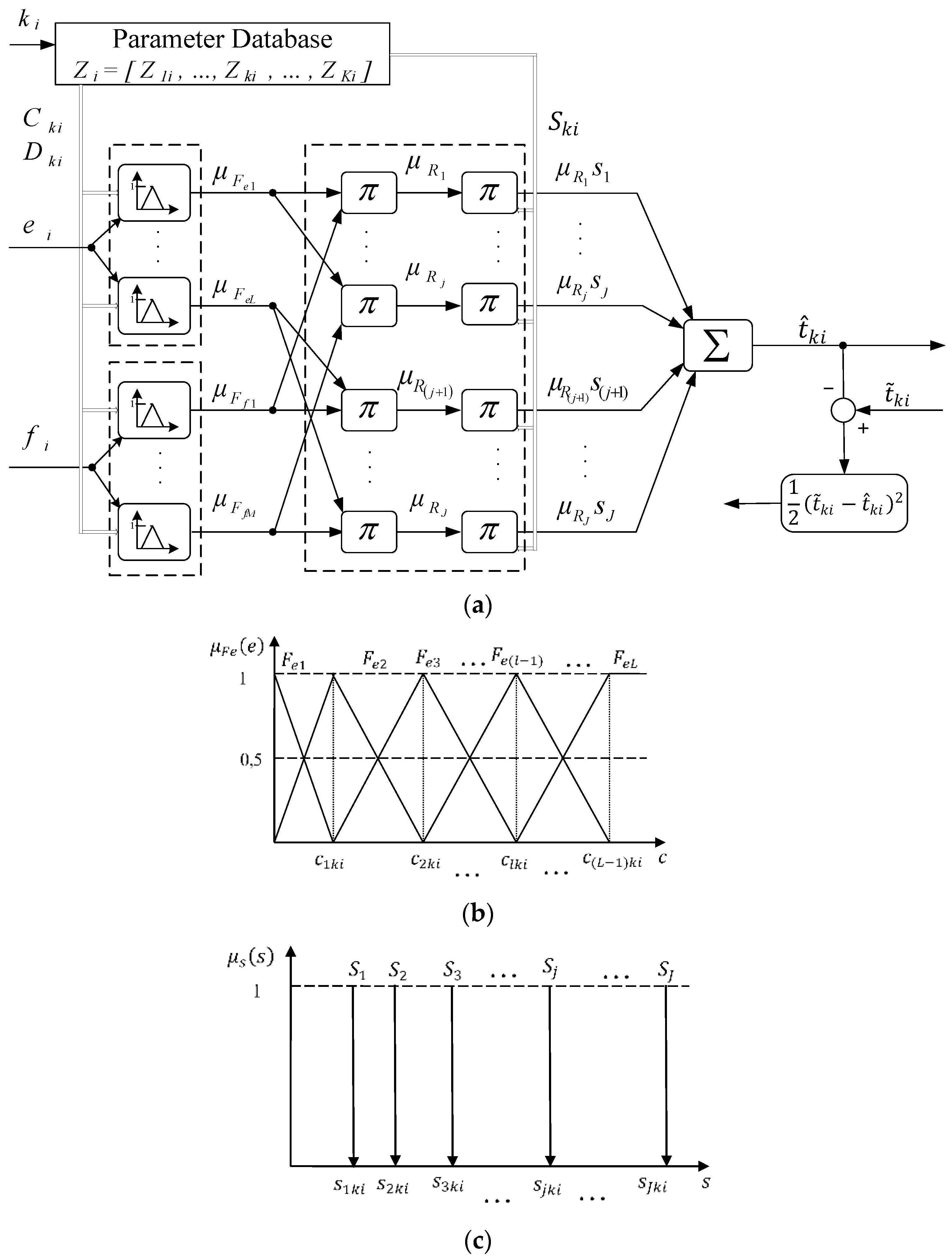

3.1. Scheduling Section

3.2. Switching Section

4. Experiments and Discussion

4.1. Testbed

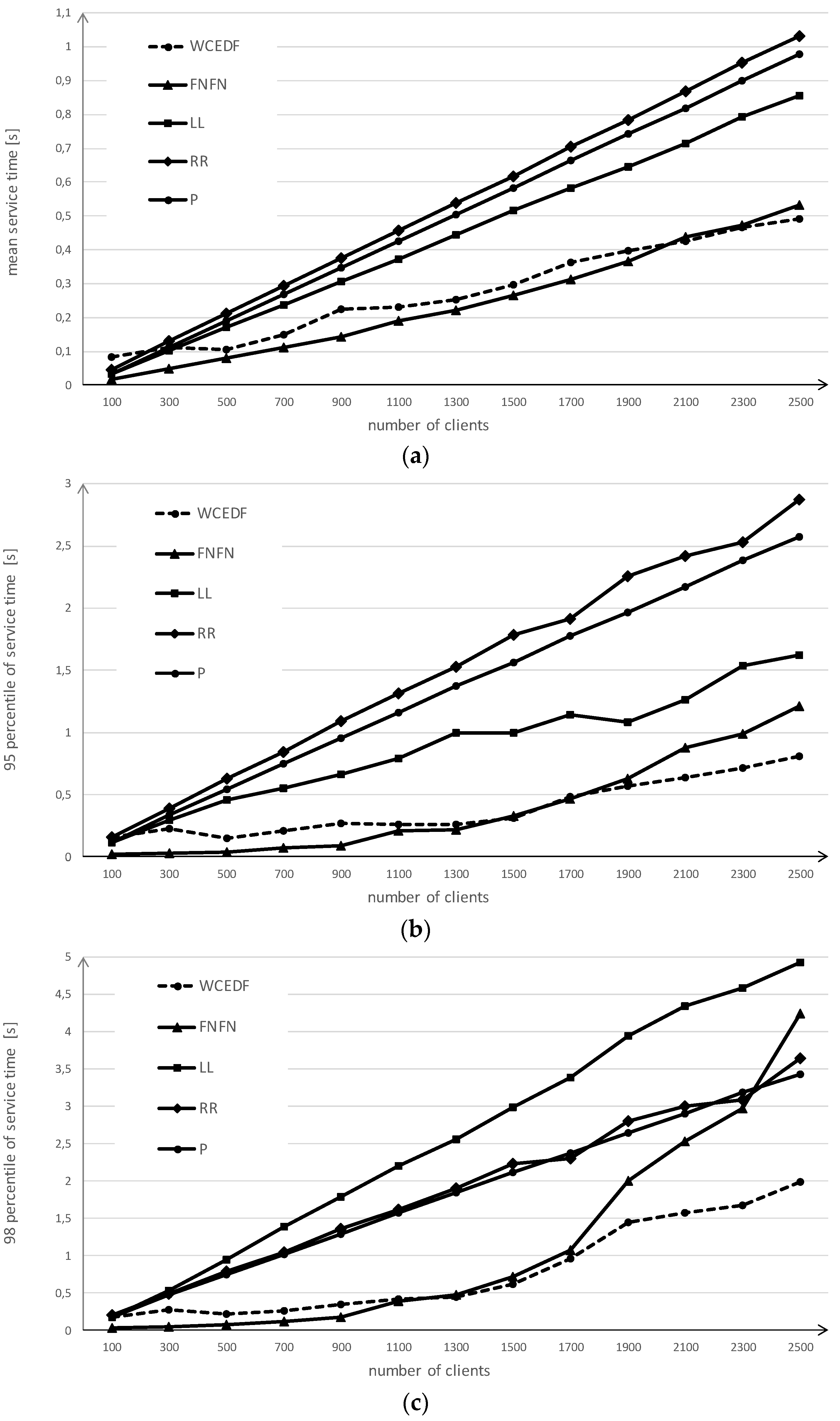

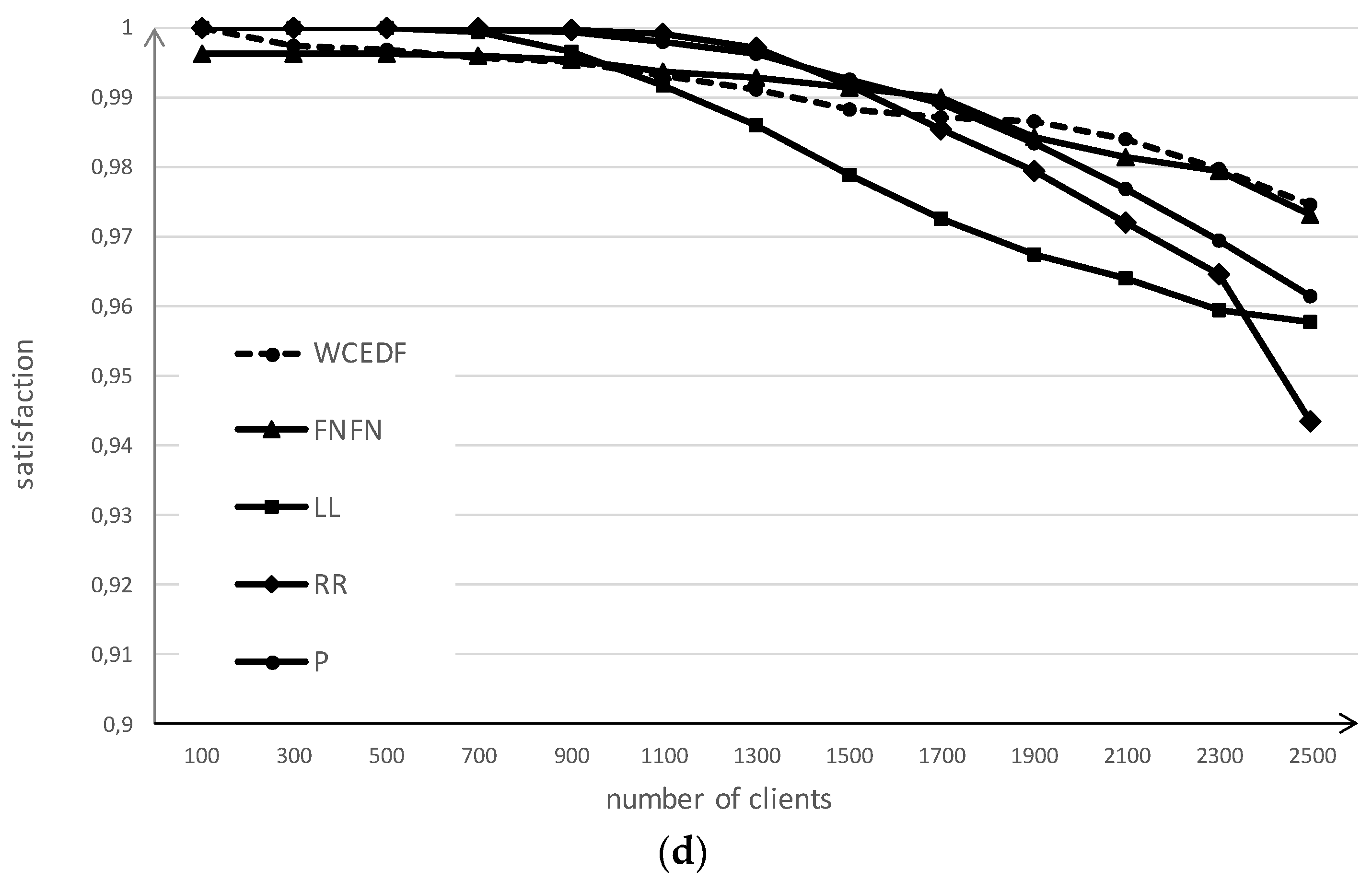

- Round Robin (RR)—assigns HTTP requests to subsequent zones;

- Least Load (LL)—assigns incoming HTTP requests to the zone with the lowest number of HTTP requests currently being serviced;

- Path-based routing (P)—requested resources are assigned to a designated zone or web server. The experiments used a modified version of the algorithm that adapts to changing load and behaves like the LARD algorithm [50]: when a server is overloaded, servicing of the given type of request is moved to the least-loaded server;

- Web Cloud Earliest Deadline First (WCEDF)—the strategy presented in this article;

- Fuzzy-Neural Request Distribution (FNRD)—similar to the WCEDF strategy in that it also uses neuro-fuzzy models to estimate service time. However, the FNRD strategy does not queue requests; its distribution algorithm chooses the web server offering the shortest service time.
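The three baseline strategies in the list above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the testbed's implementation: the class names, the `choose(request_path, load)` interface, the `load` dictionary (zone name mapped to the number of requests currently in service), and the fixed `overload_threshold` are all hypothetical.

```python
from itertools import cycle


class RoundRobin:
    """Assigns requests to subsequent zones in a fixed rotation."""

    def __init__(self, zones):
        self._zones = cycle(zones)

    def choose(self, request_path, load):
        return next(self._zones)


class LeastLoad:
    """Assigns each request to the zone with the fewest requests in service."""

    def choose(self, request_path, load):
        return min(load, key=load.get)


class PathBased:
    """Path-based routing with a LARD-like fallback when a zone is overloaded."""

    def __init__(self, overload_threshold):
        # Assumption: a zone counts as overloaded at this many active requests.
        self.overload_threshold = overload_threshold
        self.assignment = {}

    def choose(self, request_path, load):
        zone = self.assignment.get(request_path)
        if zone is None or load[zone] >= self.overload_threshold:
            # First request for this path, or its zone is overloaded:
            # (re)assign the path to the least-loaded zone.
            zone = min(load, key=load.get)
            self.assignment[request_path] = zone
        return zone


rr = RoundRobin(["a", "b", "c"])
rr_picks = [rr.choose("/x", {}) for _ in range(4)]

ll_pick = LeastLoad().choose("/x", {"a": 3, "b": 1, "c": 2})

pb = PathBased(overload_threshold=5)
pb_first = pb.choose("/img", {"a": 0, "b": 2})   # path not yet assigned
pb_moved = pb.choose("/img", {"a": 5, "b": 2})   # assigned zone is overloaded
pb_sticky = pb.choose("/img", {"a": 1, "b": 3})  # new zone is below threshold
```

WCEDF and FNRD are omitted from the sketch because both depend on neuro-fuzzy service-time estimates that the list does not specify.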

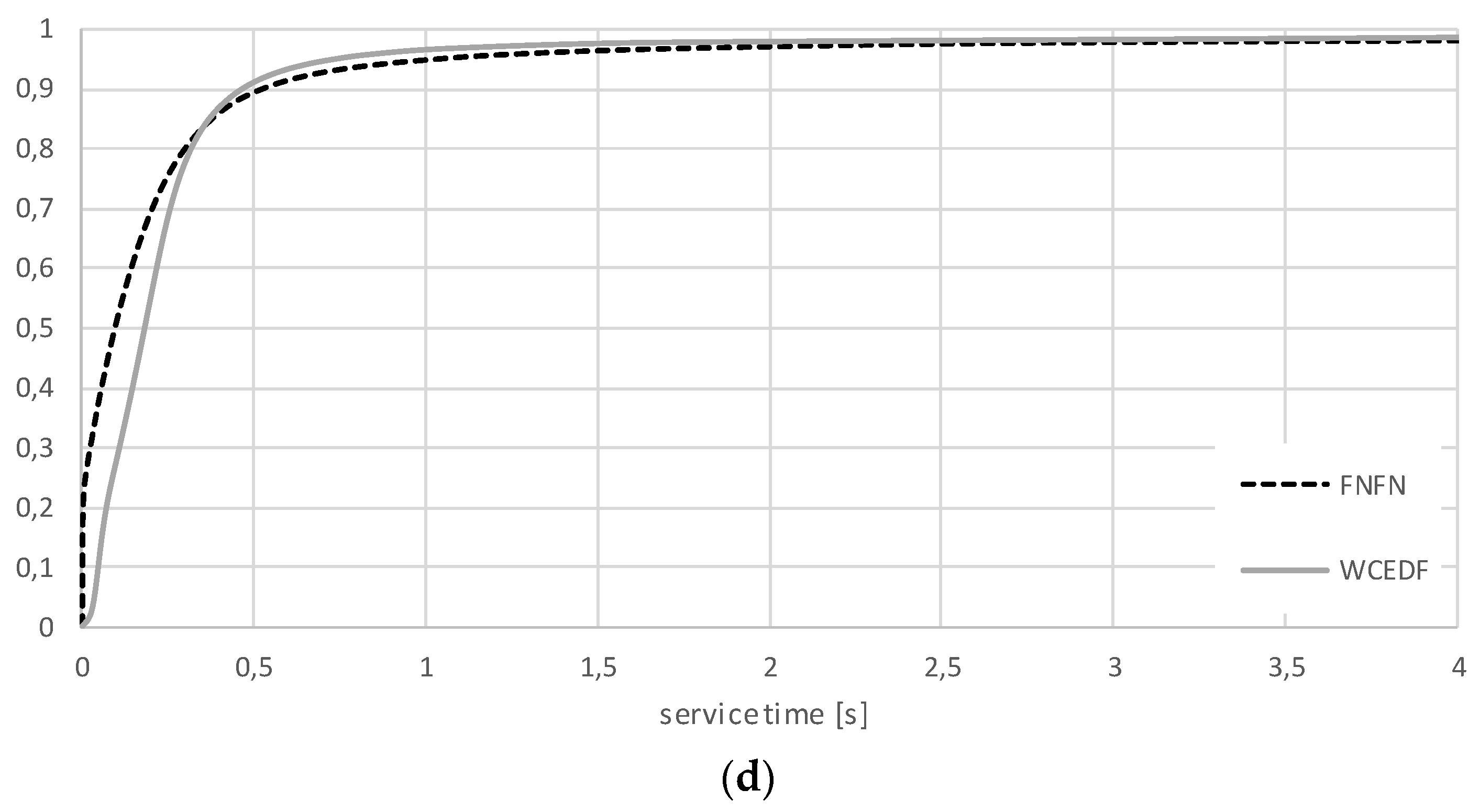

4.2. Experiments

5. Conclusions and Directions for Future Research

- placing a queue of requests at the front of the web system, together with a method for determining deadline service times;

- a distribution algorithm designed specifically for the proposed solution;

- application of FNRD web switches in the second layer of the decision system.

6. Summary

Funding

Conflicts of Interest

References

- Global Digital Population as of January 2021 (in Millions). Available online: https://www.statista.com/statistics/617136/digital-population-worldwide/ (accessed on 18 February 2021).

- Costello, K.; Rimol, M. Gartner Forecasts Worldwide Public Cloud End-User Spending to Grow 18% in 2021. Gartner. Available online: https://www.gartner.com/en/newsroom/press-releases/2020-11-17-gartner-forecasts-worldwide-public-cloud-end-user-spending-to-grow-18-percent-in-2021 (accessed on 18 February 2021).

- Research and Markets, Report, Global Forecast to 2025. Available online: https://www.researchandmarkets.com/reports/5136796/cloud-computing-market-by-service-model (accessed on 18 February 2021).

- Badger, L.; Grance, T.; Patt-Corner, R.; Voas, J. DRAFT Cloud Computing Synopsis and Recommendations. 2011. Available online: http://csrc.nist.gov/publications/nistpubs/800-146/sp800-146.pdf (accessed on 18 February 2021).

- Puthal, D. Cloud Computing Features, Issues, and Challenges: A Big Picture. In Proceedings of the 2015 International Conference on Computational Intelligence and Networks (CINE), Odisha, India, 12–13 January 2015; pp. 116–123.

- Patiniotakis, I.; Verginadis, Y.; Mentzas, G. PuLSaR: Preference-based cloud service selection for cloud service brokers. J. Internet Serv. Appl. 2015.

- Katyal, M.; Mishra, A. A Comparative Study of Load Balancing Algorithms in Cloud Computing Environment. Int. J. Distrib. Cloud Comput. 2013, 1, 806–810.

- Montes, J.; Sanchez, A.; Memishi, B.; Pérez, M.S.; Antoniou, G. GMonE: A complete approach to cloud monitoring. Future Gener. Comput. Syst. 2013, 29, 2026–2040.

- Montes, J.; Sánchez, A.; Pérez, M.S. Riding Out the Storm: How to Deal with the Complexity of Grid and Cloud Management. J. Grid Comput. 2012, 10, 349–366.

- AWS documentation, How Elastic Load Balancing Works. Available online: https://docs.aws.amazon.com/elasticloadbalancing/latest/userguide/how-elastic-load-balancing-works.html (accessed on 18 February 2021).

- McCabe, D.J. Network Analysis, Architecture, and Design; Morgan Kaufmann: Boston, MA, USA, 2007.

- Alakeel, A. A guide to dynamic load balancing in distributed computer systems. Int. J. Comput. Sci. Inf. Secur. 2010, 10, 153–160.

- Rimal, B.P. Architectural requirements for cloud computing systems: An enterprise cloud approach. J. Grid Comput. 2011, 9, 3–26.

- Ponce, L.M.; dos Santos, W.; Meira, W.; Guedes, D.; Lezzi, D.; Badia, R.M. Upgrading a high performance computing environment for massive data processing. J. Internet Serv. Appl. 2019, 10.

- Nuaimi, K.A. A Survey of Load Balancing in Cloud Computing: Challenges and Algorithms. In Proceedings of the 2012 Second Symposium on Network Cloud Computing and Applications (NCCA), London, UK, 3–4 December 2012.

- Zenon, C.; Venkatesh, M.; Shahrzad, A. Availability and Load Balancing in Cloud Computing. In Proceedings of the International Conference on Computer and Soft modeling IPCSI, Singapore, 23 February 2011.

- Campelo, R.A.; Casanova, M.A.; Guedes, D.O. A brief survey on replica consistency in cloud environments. J. Internet Serv. Appl. 2020, 11.

- Afzal, S.; Kavitha, G. Load balancing in cloud computing—A hierarchical taxonomical classification. J. Cloud Comp. 2019, 8.

- Rafique, A.; Van Landuyt, D.; Truyen, E. SCOPE: Self-adaptive and policy-based data management middleware for federated clouds. J. Internet Serv. Appl. 2019, 10, 1–19.

- Remesh, B.K.R.; Samuel, P. Enhanced Bee Colony Algorithm for Efficient Load Balancing and Scheduling in Cloud. Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2016; pp. 67–78.

- Crovella, M.; Bestavros, A. Self-similarity in World Wide Web traffic: Evidence and possible causes. IEEE/ACM Trans. Netw. 1997, 5, 835–846.

- Domańska, J.; Domański, A.; Czachórski, T. The Influence of Traffic Self-Similarity on QoS Mechanisms. In Proceedings of the SAINT 2005 Workshop, Trento, Italy, 20 February 2005.

- Suchacka, G.; Dembczak, A. Verification of Web Traffic Burstiness and Self-Similarity for Multiple Online Stores. Advances in Intelligent Systems and Computing; Springer International Publishing: Heidelberg, 2017; Volume 655, pp. 305–314.

- Suraj, P.; Wu, L.; Mayura Guru, S.; Buyya, R. A Particle Swarm Optimization-Based Heuristic for Scheduling Workflow Applications in Cloud Computing Environments. In Proceedings of the 24th IEEE International Conference on Advanced Information Networking and Applications, Perth, WA, Australia, 20–23 April 2010.

- Farid, M.; Latip, R.; Hussin, M.; Abdul Hamid, N.A.W. A Survey on QoS Requirements Based on Particle Swarm Optimization Scheduling Techniques for Workflow Scheduling in Cloud Computing. Symmetry 2020, 12, 551.

- Sharifian, S.; Akbari, M.K.; Motamedi, S.A. An Intelligence Layer-7 Switch for Web Server Clusters. In Proceedings of the 3rd International Conference: Sciences of Electronic, Technologies of Information and Telecommunications SETIT, Susa, Tunisia, 27–31 March 2005; pp. 5–20.

- Bryniarska, A. The n-Pythagorean Fuzzy Sets. Symmetry 2020, 12, 1772.

- Abdelzaher, T.F.; Shin, K.G.; Bhatti, N. Performance Guarantees for Web Server End-Systems. A Control-Theoretical Approach. IEEE Trans. Parallel Distrib. Syst. 2002, 13, 80–96.

- Blanquer, J.M.; Batchelli, A.; Schauser, K.; Wolski, R. Quorum: Flexible quality of service for internet services. In Proceedings of the 2nd Conference on Symposium on Networked Systems Design & Implementation; USENIX Association: Berkeley, CA, USA, 2005; Volume 2, pp. 159–174.

- Borzemski, L.; Zatwarnicki, K. CDNs with Global Adaptive Request Distribution. In Proceedings of the 12th International Conference on Knowledge-Based & Intelligent Information & Engineering Systems, Zagreb, Croatia, 3–5 September 2008; Springer: Heidelberg, Germany, 2008; pp. 117–124.

- Harchol-Balter, M.; Schroeder, B.; Bansal, N.; Agrawal, M. Size-based scheduling to improve web performance. ACM Trans. Comput. Syst. 2003, 21, 207–233.

- Kamra, A.; Misra, V.; Nahum, E. A Self Tuning Controller for Managing the Performance of 3-Tiered Websites. In Proceedings of the 12th International Workshop Quality of Service, Montreal, QC, Canada, 7–9 June 2004; pp. 47–56.

- Wei, J.; Xu, C.-Z. QoS: Provisioning of client-perceived end-to-end QoS guarantees in Web servers. IEEE Trans. Comput. 2006, 55, 1543–1556.

- Zatwarnicki, K. Adaptive Scheduling System Guaranteeing Web Page Response Times. Computational Collective Intelligence; Lecture Notes in Artificial Intelligence. In Proceedings of the 4th International Conference, ICCI, Ho Chi Minh, Vietnam, 28–30 November 2012; Springer: Berlin/Heidelberg, Germany, 2012.

- Zatwarnicki, K.; Płatek, M.; Zatwarnicka, A. A Cluster-Based Quality Aware Web System. In ISAT 2015–Part II. Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2015; Volume 430.

- Zatwarnicki, K. Operation of Cluster-Based Web System Guaranteeing Web Page Response Time. In Computational Collective Intelligence. Technologies and Applications; Lecture Notes in Computer Science (ICCCI 2013); Bǎdicǎ, C., Nguyen, N.T., Brezovan, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; Volume 8083.

- Serrano, D. Towards QoS-Oriented SLA Guarantees for Online Cloud Services. In Proceedings of the 13th IEEE/ACM International Symposium on Cluster, Cloud, and Grid Computing, Delft, The Netherlands, 13–16 May 2013; pp. 50–57.

- Chhetri, M.B.; Vo, Q.; Kowalczyk, R. Policy-Based Automation of SLA Establishment for Cloud Computing Services. In Proceedings of the 2012 12th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing, Ottawa, ON, Canada, 13–16 May 2012; pp. 164–171.

- Rao, J.; Wei, Y.; Gong, J.; Xu, C. QoS Guarantees and Service Differentiation for Dynamic Cloud Applications. IEEE Trans. Netw. Serv. Manag. 2013, 10, 43–55.

- Jing, W.; Zhao, C.; Miao, Q.; Song, H.; Chen, G. QoS-DPSO: QoS-aware Task Scheduling for Cloud Computing System. J. Netw. Syst. Manag. 2021, 29.

- Zatwarnicki, K. Adaptive control of cluster-based web systems using neuro-fuzzy models. Int. J. Appl. Math. Comput. Sci. 2012, 22, 365–377.

- Zatwarnicki, K.; Zatwarnicka, A. Application of an Intelligent Request Distribution Broker in Two-Layer Cloud-Based Web System. In Computational Collective Intelligence; Lecture Notes in Computer Science (ICCCI 2019); Springer: Cham, Switzerland, 2019; Volume 11684.

- Zatwarnicki, K.; Zatwarnicka, A. An Architecture of a Two-Layer Cloud-Based Web System Using a Fuzzy-Neural Request Distribution. Vietnam J. Comput. Sci. 2020, 7.

- Zatwarnicki, K. Two-level fuzzy-neural load distribution strategy in cloud-based web system. J. Cloud Comput. 2020, 9, 1–11.

- Mamdani, E.H. Application of fuzzy logic to approximate reasoning using linguistic synthesis. IEEE Trans. Comput. 1977, C-26, 1182–1191.

- Rumelhart, D.; Hinton, G.; Williams, R. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

- OMNeT++ Discrete Event Simulator. Available online: https://www.omnetpp.org/ (accessed on 18 February 2021).

- Cao, J.; Cleveland, S.W.; Gao, Y.; Jeffay, K.; Smith, F.D.; Weigle, M.C. Stochastic models for generating synthetic HTTP source traffic. In Proceedings of the Twenty-third Annual Joint Conference of the IEEE Computer and Communications Societies. INFOCOM, Hong Kong, China, 7–11 March 2004; pp. 1547–1558.

- Sony Music, Main Page. Available online: https://www.sonymusic.com/ (accessed on 9 March 2020).

- Pai, V.S.; Aron, M.; Banga, G.; Svendsen, M.; Druschel, P.; Zwaenepoel, W.; Nahum, E. Locality-aware request distribution in cluster-based network servers. In Proceedings of the 8th International Conference on Architectural Support for Programming Languages and Operating Systems, San Jose, CA, USA, 10 October 1998; pp. 205–216.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zatwarnicki, K. Providing Predictable Quality of Service in a Cloud-Based Web System. Appl. Sci. 2021, 11, 2896. https://doi.org/10.3390/app11072896