Optimization of Inventory Management to Prevent Drug Shortages in the Hospital Supply Chain

Abstract

1. Introduction

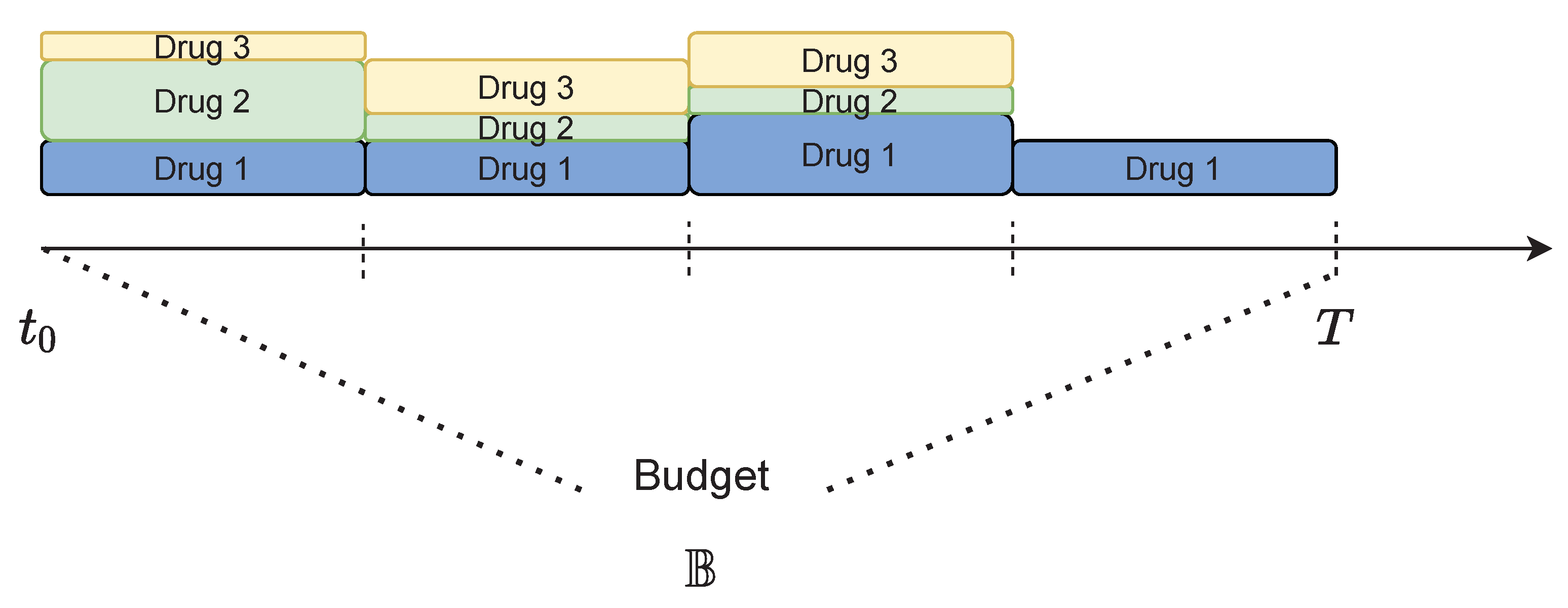

- First, we investigate the drug supply chain of a hospital and formulate a drug-shortage optimization problem, named Dynamic Refilling dRug Optimization (DR2O). Its objective function minimizes the total refilling cost, which comprises the cost of buying medicines, the cost of storing them, and a penalty cost for shortages. We also impose supply constraints on refilling, such as storage capacity and budget constraints.

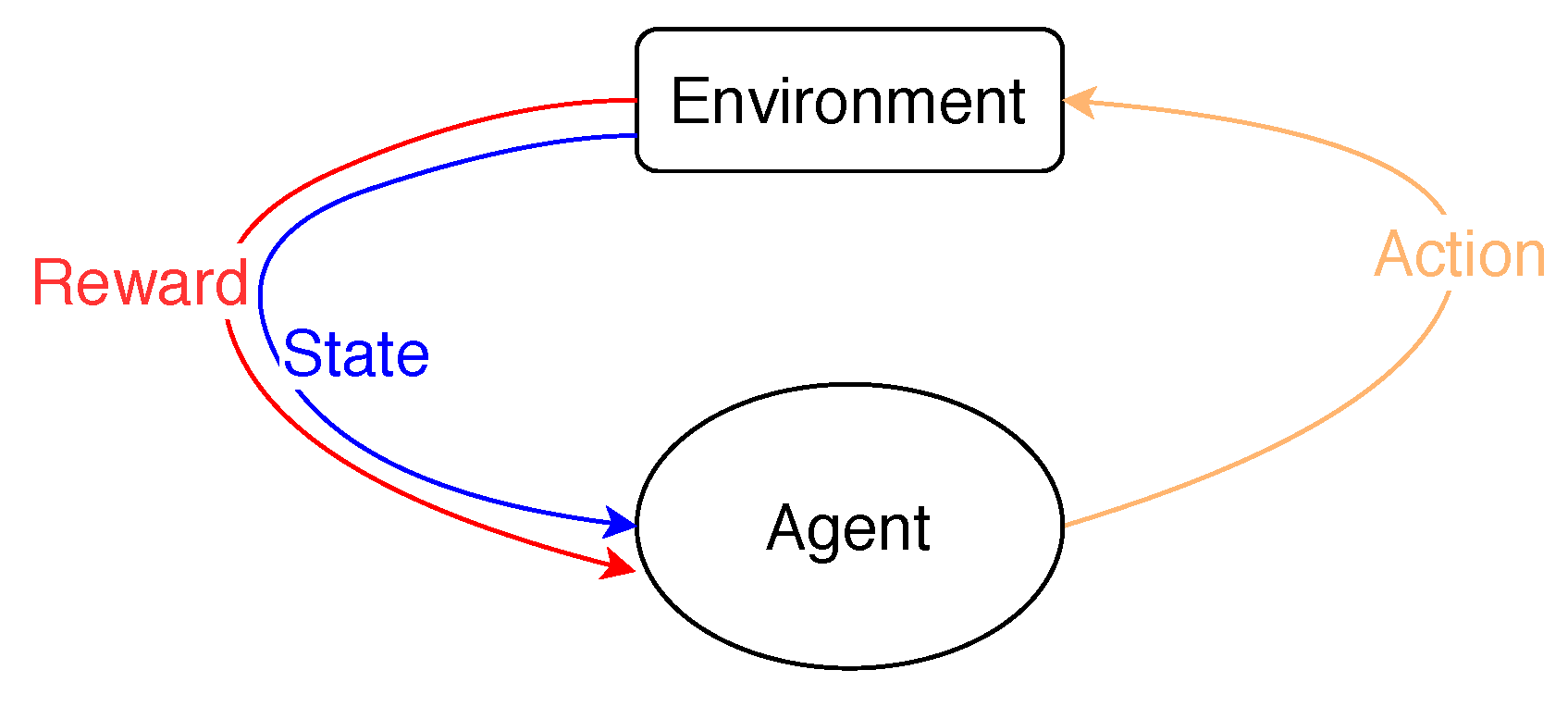

- Second, we propose a deep learning method that combines reinforcement learning (RL) with a deep neural network (DNN), named the Deep Reinforcement Learning model for Drug inventory (DRLD), in which the inventory status of the drugs is represented as a state in a Markov Decision Process (MDP) [9]. For each state, we look for a suitable action, i.e., a refilling decision, that minimizes the objective cost function. Based on the MDP model, we design an online method to control the system, in which a reward matrix and a Q-matrix are built to evaluate the action taken in each state. Because the state space is too large to search exhaustively with RL alone, we introduce a DNN that, once trained, approximates the Q-values and thereby learns the behavior of the system.

- Finally, we evaluate our approach through extensive simulations, comparing it with three baseline approaches: over-provisioning, ski-rental [11], and max-min [12]. Our method outperforms the baselines in most evaluations, especially in reducing the refilling cost and the occurrence of shortages.
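The state/action/Q-matrix idea behind DRLD can be illustrated with a minimal sketch of tabular Q-learning on a hypothetical single-drug inventory MDP: the state is the current stock level, the action is the refill amount, and the reward is the negative of a purchase + storage + shortage cost. Every parameter value, the uniform demand model, and all function names are assumptions made for illustration; they are not the DRLD settings, and this sketch keeps an explicit Q-matrix rather than the DNN approximator that DRLD uses.

```python
import random

# Hypothetical toy parameters, chosen for illustration only (not the paper's settings).
MAX_STOCK = 6             # storage capacity of the single drug, in units
MAX_REFILL = 4            # largest refill allowed per timeslot
MAX_DEMAND = 3            # demand per timeslot is uniform on 0..MAX_DEMAND
UNIT_PRICE = 2.0          # purchase cost per refilled unit
HOLD_COST = 1.0           # storage cost per unit left in stock after demand
SHORTAGE_PENALTY = 50.0   # penalty per unit of unmet demand


def step(stock, refill, rng):
    """Apply a refill and a random demand; return (next_stock, reward)."""
    available = min(stock + refill, MAX_STOCK)  # units above capacity are paid for but not stored
    demand = rng.randint(0, MAX_DEMAND)
    shortage = max(demand - available, 0)
    next_stock = max(available - demand, 0)
    cost = UNIT_PRICE * refill + HOLD_COST * next_stock + SHORTAGE_PENALTY * shortage
    return next_stock, -cost  # reward = negative cost, so maximizing reward minimizes cost


def q_learning(episodes=3000, horizon=20, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning with an epsilon-greedy behaviour policy."""
    rng = random.Random(seed)
    # Q-matrix: rows are states (stock levels), columns are actions (refill amounts).
    q = [[0.0] * (MAX_REFILL + 1) for _ in range(MAX_STOCK + 1)]
    for _ in range(episodes):
        stock = rng.randint(0, MAX_STOCK)  # random start so that every state is visited
        for _ in range(horizon):
            if rng.random() < epsilon:
                action = rng.randint(0, MAX_REFILL)   # explore
            else:
                row = q[stock]
                action = row.index(max(row))          # exploit; ties go to the smaller refill
            next_stock, reward = step(stock, action, rng)
            # Q-learning update towards the one-step bootstrapped target.
            target = reward + gamma * max(q[next_stock])
            q[stock][action] += alpha * (target - q[stock][action])
            stock = next_stock
    return q


def greedy_policy(q):
    """Best refill amount for each stock level according to the learned Q-matrix."""
    return [row.index(max(row)) for row in q]


if __name__ == "__main__":
    print(greedy_policy(q_learning()))
```

Because all rewards are non-positive and the Q-matrix starts at zero, untried actions initially look optimistic, which pushes the ε-greedy policy to try every refill amount early in training.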

2. Related Work

2.1. Defining Inventory Management and the Hospital Supply Chain

2.2. The Importance of Optimizing the Inventory Management to Prevent Drug Shortages of the Hospital Supply Chain

2.3. Minimization of Drug Shortages in Hospital Supply Chains

3. System Model

3.1. Problem Formulation

- Medicine cost: The first term is the medicine cost, which depends on the amount of drugs ordered: , where is the weight parameter of drug i and is the amount of money required to purchase the required amount of drug i. Depending on the drug's priority, is set to a high or low value.

- Storage cost: The second term is the storage cost incurred when the hospital stores drugs. Hospitals may incur a variety of costs to store medicine safely, and some must use third-party storage. In this work, we model the storage cost generically as a function of , the amount of drugs, and , the storage cost. The volume of drug i at time t can be obtained by: where is the remaining volume of drug i at timeslot , is the amount of the demand, and is the amount of the drug available before expiration at time t.

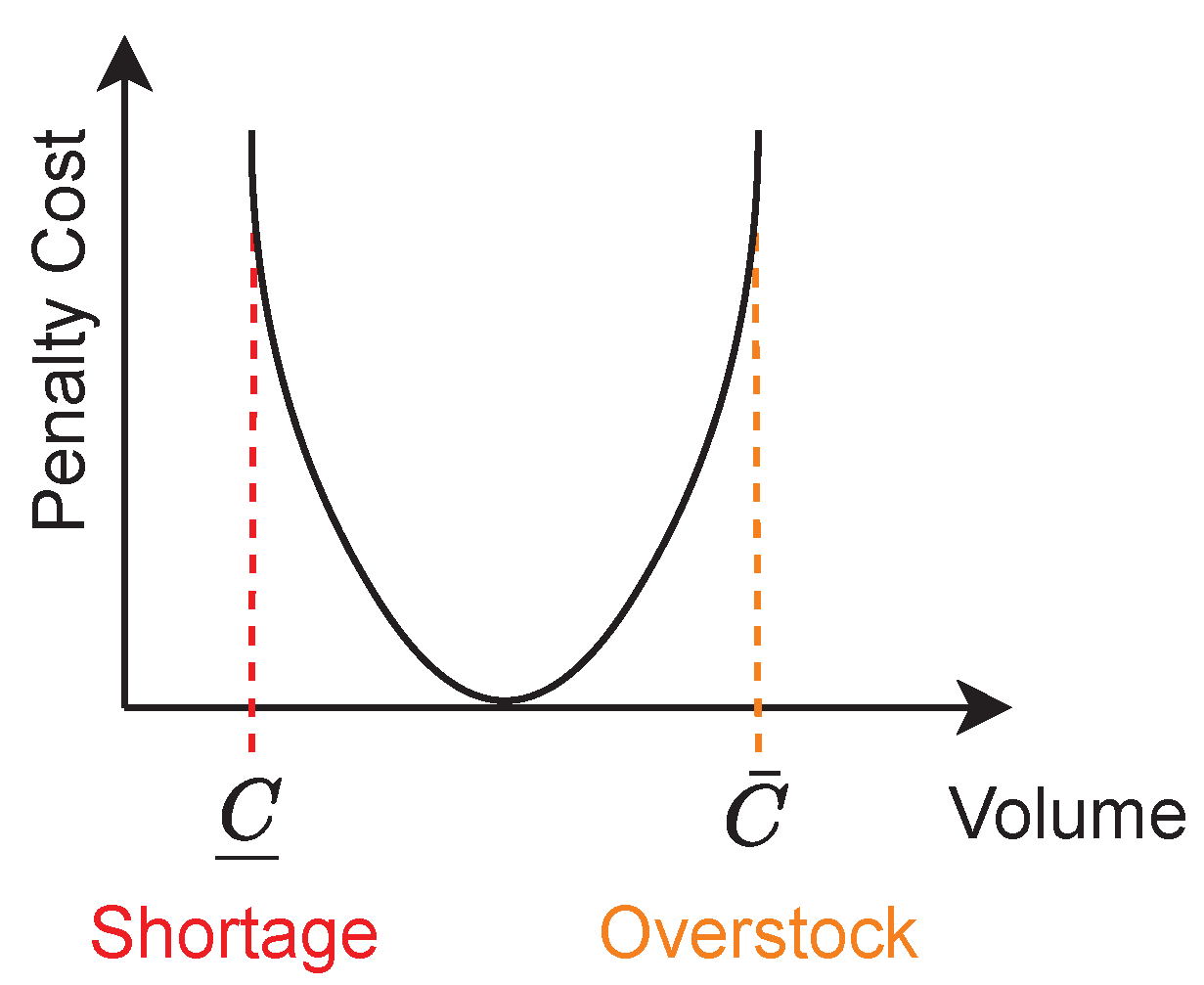

- Penalty cost: A penalty cost is imposed to prevent shortages in the system. This cost depends on the volume of the drugs and their minimum requirement at time t. Without loss of generality, we model this cost as a quadratic function. As shown in Figure 1, the quadratic penalty cost increases as the volume of drug i approaches the bounds and . In other words, the penalty cost function aims to prevent both shortage and overstock.
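The three cost terms can be illustrated for one drug in one timeslot with a minimal sketch. The functional forms are assumptions: linear purchase and storage costs, a conventional inventory balance (carry-over plus refill minus demand minus expired units), and a quadratic penalty centred between the lower and upper volume bounds, so that it grows as the volume approaches either bound, in the spirit of Figure 1. The class, function, and field names are hypothetical and do not reproduce the paper's notation.

```python
from dataclasses import dataclass


@dataclass
class DrugParams:
    """Hypothetical per-drug parameters; names are illustrative, not the paper's notation."""
    weight: float        # priority weight of the drug in the medicine-cost term
    unit_price: float    # purchase price per unit (USD/unit)
    storage_cost: float  # storage price per unit of space (e.g., USD/ft^3)
    unit_space: float    # space occupied by one unit (e.g., ft^3/unit)
    lower: float         # lower volume bound (shortage side)
    upper: float         # upper volume bound (overstock side)
    penalty_coef: float  # curvature of the quadratic penalty


def next_volume(prev_volume, refill, demand, expired):
    """Assumed inventory balance: carry-over + refill - demand - expired, floored at zero."""
    return max(prev_volume + refill - demand - expired, 0.0)


def penalty(volume, p):
    """Assumed quadratic penalty: zero midway between the bounds, growing toward either bound."""
    centre = (p.lower + p.upper) / 2.0
    return p.penalty_coef * (volume - centre) ** 2


def slot_cost(refill, volume, p):
    """Weighted medicine cost + storage cost + penalty for one drug in one timeslot."""
    medicine = p.weight * p.unit_price * refill
    storage = p.storage_cost * p.unit_space * volume
    return medicine + storage + penalty(volume, p)


if __name__ == "__main__":
    drug = DrugParams(weight=1.0, unit_price=20.0, storage_cost=3.0, unit_space=0.002,
                      lower=5.0, upper=25.0, penalty_coef=0.1)
    v = next_volume(prev_volume=10.0, refill=8.0, demand=6.0, expired=0.0)  # 12.0
    print(v, slot_cost(refill=8.0, volume=v, p=drug))
```

A symmetric quadratic is only one choice; an asymmetric penalty that punishes shortage more steeply than overstock would change only `penalty`.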

3.2. A Dynamic Refilling Drug Optimization Model

4. Reinforcement Learning

4.1. Markov Decision Process Model

4.2. Deep Q-Learning

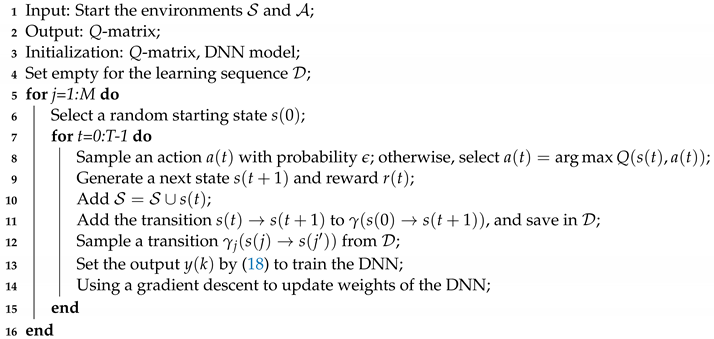

Algorithm 1: Reinforcement learning.

4.3. Training and Testing Phases

Algorithm 2: DRLD training algorithm.

4.4. Discussion

5. Experiment and Numerical Results

5.1. Experiment Configuration

- Optimal: We use the Ipopt solver [42] to solve the DR2O problem under the assumption that the demand during T is known in advance. Hence, the optimal result of DR2O in this case can be regarded as the offline optimal solution against which the other approaches are compared.

- Over-provisioning: This simple strategy is commonly used in inventory systems. A refilling decision is made based on the average utilization (gathered from historical log files), the expiration period, and the current remaining volume of drugs. To prevent shortages, an additional volume is usually added to the refill amount, which results in a higher operating cost.

- Ski-rental: As presented in the formulation, DR2O can be cast as an instance of the ski-rental problem. Therefore, to evaluate the performance of DRLD, we implement an online algorithm with the c-competitive value, where .

- Max-min [12]: This refilling strategy is one of the most widely used mechanisms in inventory management. When the stock falls to the min level, a refill is triggered that brings the inventory back up to the max target quantity. To prevent shortages, the min level is often set high (we use 45% in our work); as a result, the max-min baseline also incurs a high total cost.
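Two of these baseline refill rules can be sketched as follows, under stated assumptions. For max-min, the 45% min level is taken as a fraction of the drug's storage capacity and the max target as full capacity; for over-provisioning, the 20% safety margin and the handling of soon-to-expire units are hypothetical choices. Function and parameter names are illustrative, and neither function is the authors' implementation.

```python
def max_min_refill(stock, capacity, min_frac=0.45, max_frac=1.0):
    """Max-min rule: once stock falls to the min level, refill up to the max target."""
    min_level = min_frac * capacity   # assumed: min level as a fraction of capacity
    max_level = max_frac * capacity   # assumed: max target equals full capacity
    if stock <= min_level:
        return max_level - stock
    return 0.0


def over_provisioning_refill(stock, demand_history, horizon, margin=0.2, expiring=0.0):
    """Cover the average historical demand over the horizon plus a safety margin."""
    avg_demand = sum(demand_history) / len(demand_history)
    target = (1.0 + margin) * avg_demand * horizon  # extra volume guards against shortage
    usable = max(stock - expiring, 0.0)             # units expiring before use do not count
    return max(target - usable, 0.0)


if __name__ == "__main__":
    print(max_min_refill(stock=10.0, capacity=40.0))  # 30.0
    print(over_provisioning_refill(stock=5.0, demand_history=[10, 20, 15], horizon=2))
```

Raising `min_frac` or `margin` makes shortages rarer at the price of holding more stock, which is the trade-off behind the high total cost noted for these baselines.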

5.2. Results

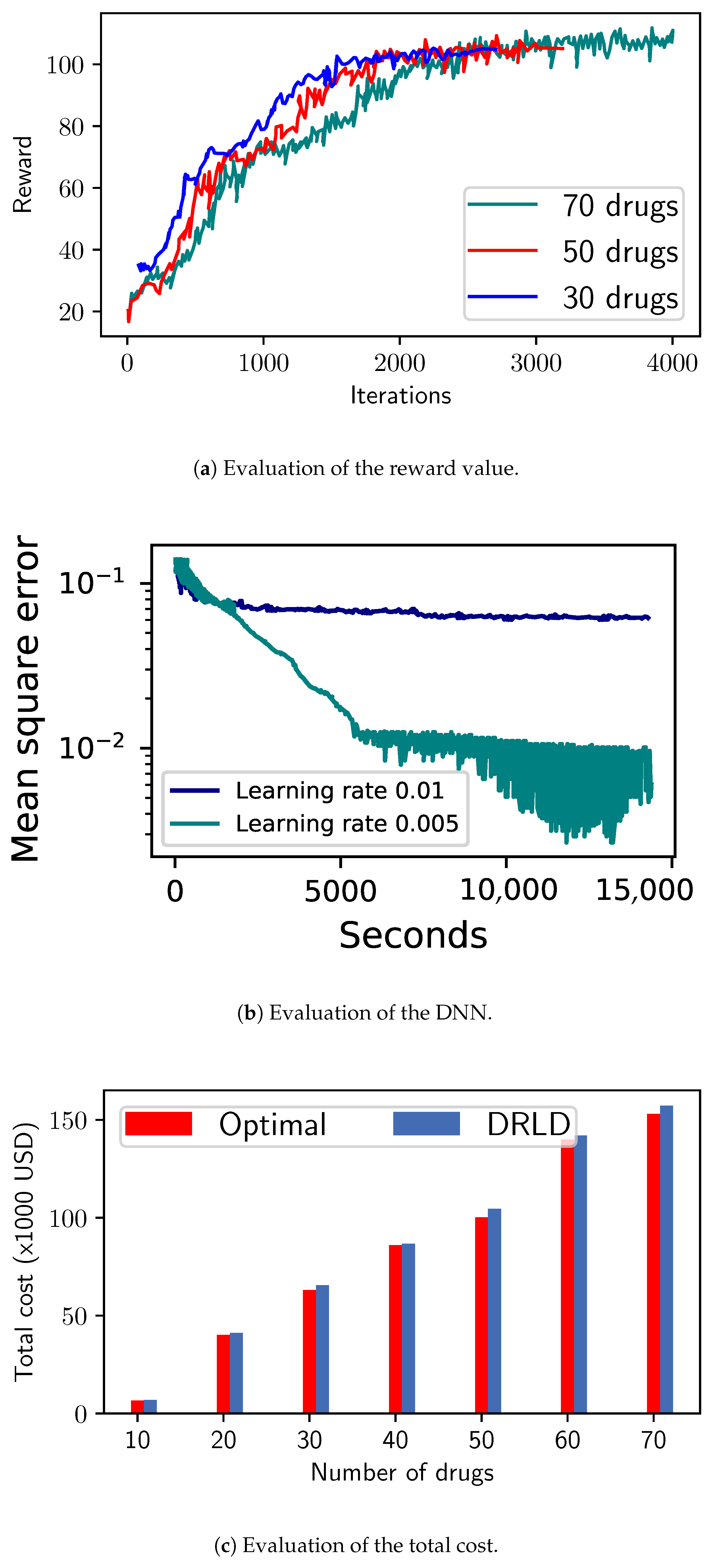

5.2.1. Convergence Evaluation

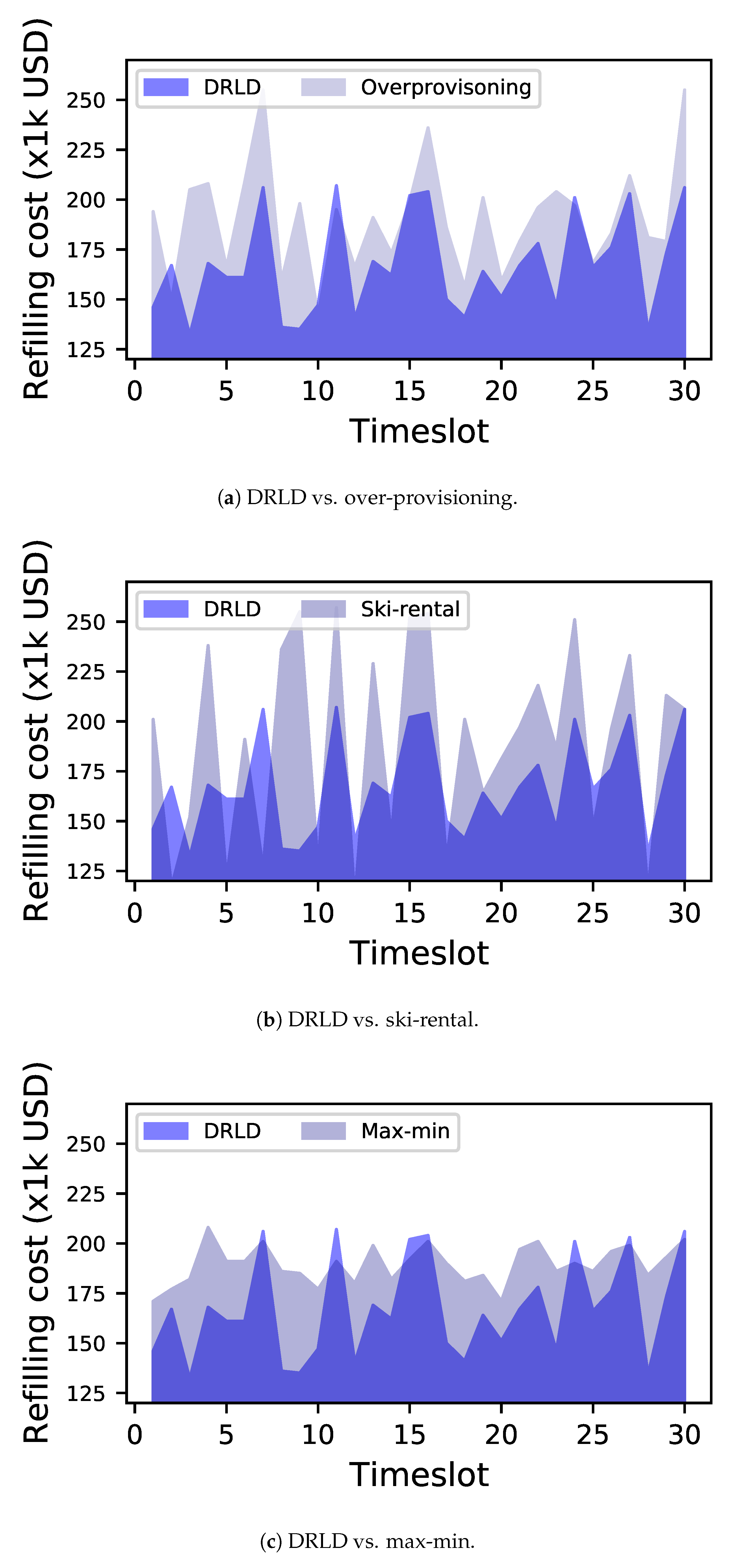

5.2.2. Evaluation of the Refilling Cost

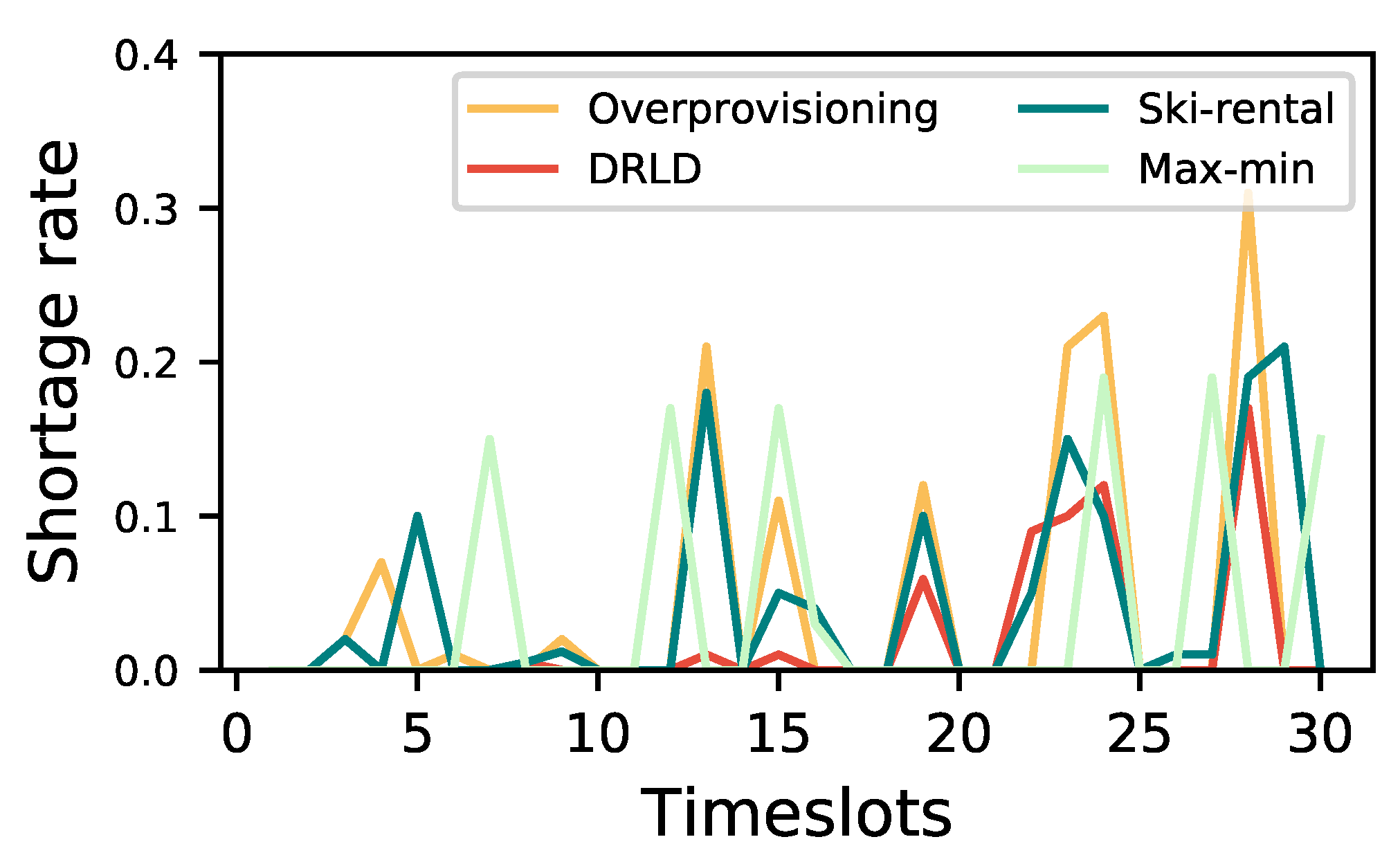

5.2.3. Evaluation of the Shortage Situation

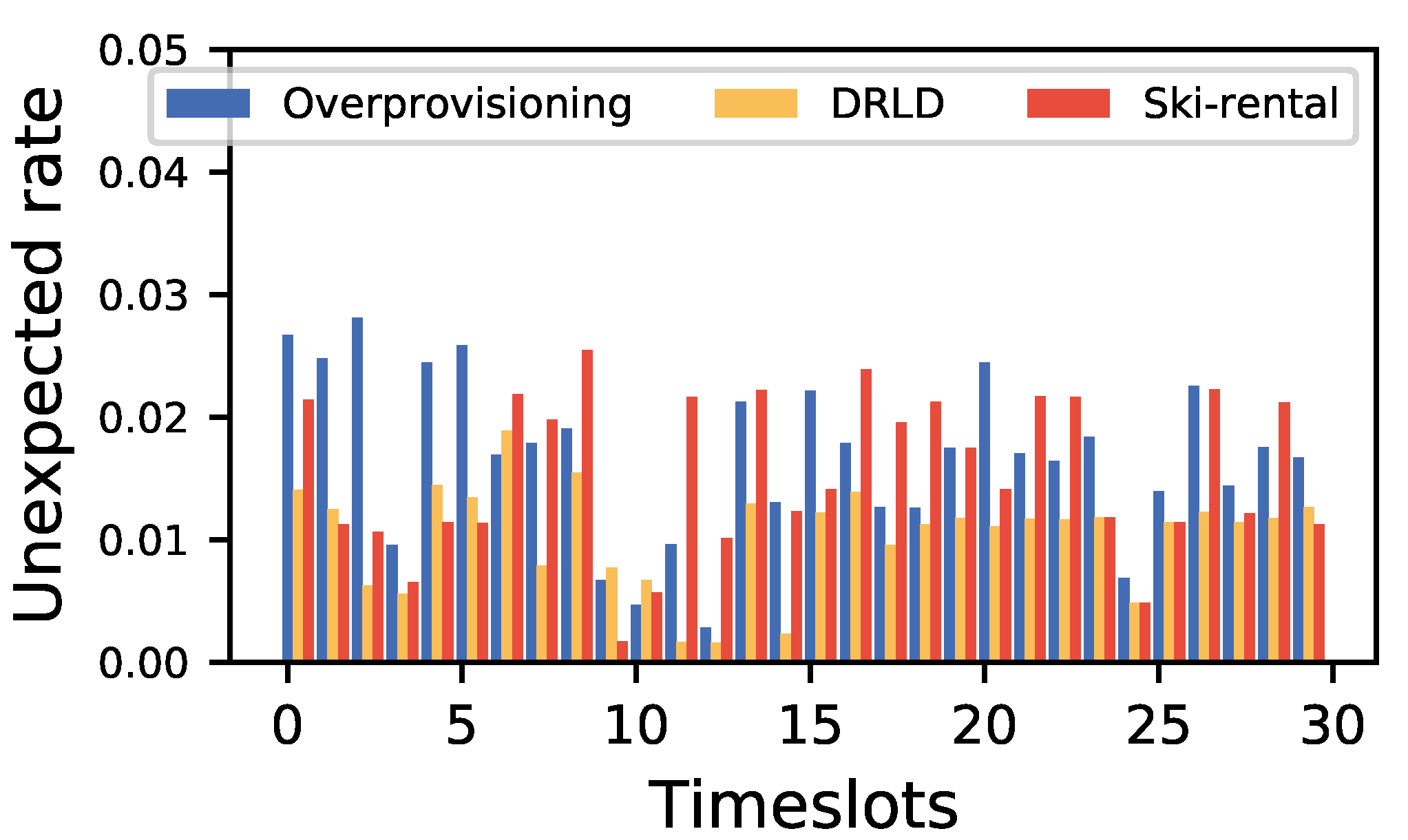

5.2.4. Evaluation of the Unexpected Rate

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Sample Availability

References

- Kaakeh, R.; Sweet, B.V.; Reilly, C.; Bush, C.; DeLoach, S.; Higgins, B.; Clark, A.M.; Stevenson, J. Impact of drug shortages on U.S. health systems. Am. J. Health Syst. Pharm. 2011, 68, 1811–1819.

- Fox, E.R.; Sweet, B.V.; Jensen, V. Drug shortages: A complex health care crisis. Mayo Clin. Proc. 2014, 89, 361–373.

- Stevenson, W.J.; Hojati, M.; Cao, J. Operations Management, 6th ed.; Thomson Reuters: Toronto, ON, Canada, 2018; Chapter 12, p. 455.

- Holm, M.R.; Rudis, M.I.; Wilson, J.W. Medication supply chain management through implementation of a hospital pharmacy computerized inventory program in Haiti. Glob. Health Action 2015, 8, 26546.

- Chabner, B.A. Early accelerated approval for highly targeted cancer drugs. N. Engl. J. Med. 2011, 364, 1087–1089.

- Mazer-Amirshahi, M.; Pourmand, A.; Singer, S.; Pines, J.M.; Van den Anker, J. Critical drug shortages: Implications for emergency medicine. Acad. Emerg. Med. 2014, 21, 704–711.

- Postacchini, M.; Centurioni, L.R.; Braasch, L.; Brocchini, M.; Vicinanza, D. Lagrangian Observations of Waves and Currents From the River Drifter. IEEE J. Ocean. Eng. 2015, 41, 94–104.

- De Weerdt, E.; Simoens, S.; Casteels, M.; Huys, I. Toward a European definition for a drug shortage: A qualitative study. Front. Pharmacol. 2015, 6, 253.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction, 2nd ed.; A Bradford Book; The MIT Press: Cambridge, MA, USA, 2018.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Karlin, A.R.; Manasse, M.S.; McGeoch, L.A.; Owicki, S. Competitive randomized algorithms for non-uniform problems. In Proceedings of the First Annual ACM-SIAM Symposium on Discrete Algorithms, San Francisco, CA, USA, 22–24 January 1990; pp. 301–309.

- Mjelde, K.M. Max-min resource allocation. BIT 1983, 23, 529–537.

- Qiu, Y.; Qiao, J.; Pardalos, P.M. Optimal production, replenishment, delivery, routing and inventory management policies for products with perishable inventory. Omega 2019, 82, 193–204.

- Pyke, D.; Peterson, R.; Silver, E. Inventory Management and Production Planning and Scheduling (Third Edition). J. Oper. Res. Soc. 2001, 52, 845.

- Muller, M. Essentials of Inventory Management; HarperCollins Leadership: Nashville, TN, USA, 2019.

- Ageron, B.; Benzidia, S.; Bourlakis, M. Healthcare logistics and supply chain—Issues and future challenges. Supply Chain. Forum Int. J. 2018, 19, 1–3.

- Moons, K.; Waeyenbergh, G.; Pintelon, L. Measuring the logistics performance of internal hospital supply chains—A literature study. Omega 2019, 82, 205–217.

- Wild, T. Best Practice in Inventory Management; Routledge: Oxfordshire, UK, 2017.

- Bradley, R.V.; Esper, T.L.; In, J.; Lee, K.B.; Bichescu, B.C.; Byrd, T.A. The joint use of RFID and EDI: Implications for hospital performance. Prod. Oper. Manag. 2018, 27, 2071–2090.

- Kritchanchai, D.; Hoeur, S.; Engelseth, P. Develop a strategy for improving healthcare logistics performance. Supply Chain. Forum Int. J. 2018, 19, 55–69.

- Tiwari, S.; Sana, S.S.; Sarkar, S. Joint economic lot sizing model with stochastic demand and controllable lead-time by reducing ordering cost and setup cost. Rev. Real Acad. Cienc. Exactas Fís. Nat. Ser. A Mat. 2018, 112, 1075–1099.

- Loftus, P. Shortages of simple drugs thwart treatments. Wall Str. J. 2017, 1.

- Kees, M.C.; Bandoni, J.A.; Moreno, M.S. An Optimization Model for Managing the Drug Logistics Process in a Public Hospital Supply Chain Integrating Physical and Economic Flows. Ind. Eng. Chem. Res. 2019, 58, 3767–3781.

- De Kok, T.; Grob, C.; Laumanns, M.; Minner, S.; Rambau, J.; Schade, K. A typology and literature review on stochastic multi-echelon inventory models. Eur. J. Oper. Res. 2018, 269, 955–983.

- Abdulsalam, Y.; Schneller, E. Hospital supply expenses: An important ingredient in health services research. Med. Care Res. Rev. 2019, 76, 240–252.

- Michigan State University. Changes and Challenges in the Healthcare Supply Chain. 2019. Available online: https://www.michiganstateuniversityonline.com/resources/healthcare-management/changes-and-challenges-in-the-healthcare-supply-chain/ (accessed on 1 December 2020).

- Canadian Pharmacists Association. Canadian Drug Shortages Survey: Final Report (PDF). 2010. Available online: https://www.pharmacists.ca/cpha-ca/assets/File/cpha-on-the-issues/DrugShortagesReport.pdf (accessed on 1 December 2020).

- Zwaida, T.A.; Beauregard, Y.; Elarroudi, K. Comprehensive Literature Review about Drug Shortages in the Canadian Hospital’s Pharmacy Supply Chain. In Proceedings of the International Conference on Engineering, Science, and Industrial Applications (ICESI), Tokyo, Japan, 22–24 August 2019; pp. 1–5.

- Shiau, J.-Y. A drug association based inventory control system for ambulatory care. J. Inf. Optim. Sci. 2019, 40, 1351–1365.

- Silver, E.A.; Pyke, D.F.; Thomas, D.J. Inventory and Production Management in Supply Chains, 4th ed.; CRC Press: Boca Raton, FL, USA, 2016.

- VanVactor, J.D. Healthcare logistics in disaster planning and emergency management: A perspective. J. Bus. Contin. Emerg. Plan. 2017, 10, 157–176.

- Feibert, D.C. Improving Healthcare Logistics Processes; DTU Management Engineering: Lyngby, Denmark, 2017.

- Dillon, M.; Oliveira, F.; Abbasi, B. A two-stage stochastic programming model for inventory management in the blood supply chain. Int. J. Prod. Econ. 2017, 187, 27–41.

- Cardinal Health. Survey Finds Hospital Staff Report Better Supply Chain Management Leads to Better Quality of Care and Supports Patient Safety. 2017. Available online: https://www.multivu.com/players/English/8041151-cardinal-health-hospital-supply-chain-management-survey/ (accessed on 1 December 2020).

- Paavola, A. Supply Expenses will Cost more than Labor & 4 Other Healthcare Forecasts for 2020. Becker’s Hospital Review. 2019. Available online: https://www.beckershospitalreview.com/supply-chain/supply-expenses-will-cost-more-than-labor-4-other-healthcare-forecasts-for-2020.html (accessed on 1 December 2020).

- Zhou, L.; Pan, S.; Wang, J.; Vasilakos, A.V. Machine learning on big data: Opportunities and challenges. Neurocomputing 2017, 237, 350–361.

- Brynjolfsson, E.; Mitchell, T. What can machine learning do? Workforce implications. Science 2017, 358, 1530–1534.

- WHO. Medicines shortages. WHO Drug Inf. 2016, 30, 180–185.

- Ventola, C. The Drug Shortage Crisis in the United States: Causes, Impact, and Management Strategies. Pharm. Ther. 2011, 36, 740–757.

- Korte, B.; Vygen, J. Combinatorial Optimization: Theory and Algorithms, 5th ed.; Springer Publishing Company: Berlin/Heidelberg, Germany, 2012.

- PyTorch. Available online: https://pytorch.org/ (accessed on 1 December 2020).

- Ipopt. Available online: https://coin-or.github.io/Ipopt/ (accessed on 1 December 2020).

- Kelle, P.; Woosley, J.; Schneider, H. Pharmaceutical supply chain specifics and inventory solutions for a hospital case. Oper. Res. Health Care 2012, 1, 54–63.

Not applicable.

| Symbols | Description |
|---|---|
| | Set of drugs. |
| i | Drug index. |
| t | Time index. |
| T | Spanning time. |
| | The amount of drug i that expires at time t. |
| | The refilling cost of drug i at time t. |
| | The penalty function of drug i. |
| | The base price of drug i at time t. |
| | The penalty price of drug i with an emergency demand. |
| | The budget of drug i. |
| | The total budget. |
| | The weight parameters of drug i. |
| | The storage cost function of drug i. |
| | The storage cost of drug i at time t. |
| | The amount of demand of drug i at time t. |
| | The amount expired at time t. |
| | The remaining volume of drug i at time t. |
| | The storage capacity of drug i. |
| | The lowest volume requirement of drug i. |
| and | The upper and lower bounds on refilling drug i at time t. |
| Variables | |
| | The decision refilling volume variable. |
| | The refilling volume of drug i. |

| Parameters | Settings |
|---|---|
| Storage demand of drugs | [0.001–0.005] ft³ |
| Total inventory capacity | 40 ft³ |
| Refilling cost of drugs | [5–100] USD/unit |
| Storing cost | [2–5] USD/ft³ |
| Drug demand | [5–20] units |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Abu Zwaida, T.; Pham, C.; Beauregard, Y. Optimization of Inventory Management to Prevent Drug Shortages in the Hospital Supply Chain. Appl. Sci. 2021, 11, 2726. https://doi.org/10.3390/app11062726

Abu Zwaida T, Pham C, Beauregard Y. Optimization of Inventory Management to Prevent Drug Shortages in the Hospital Supply Chain. Applied Sciences. 2021; 11(6):2726. https://doi.org/10.3390/app11062726

Chicago/Turabian Style: Abu Zwaida, Tarek, Chuan Pham, and Yvan Beauregard. 2021. "Optimization of Inventory Management to Prevent Drug Shortages in the Hospital Supply Chain" Applied Sciences 11, no. 6: 2726. https://doi.org/10.3390/app11062726

APA Style: Abu Zwaida, T., Pham, C., & Beauregard, Y. (2021). Optimization of Inventory Management to Prevent Drug Shortages in the Hospital Supply Chain. Applied Sciences, 11(6), 2726. https://doi.org/10.3390/app11062726