Abstract

This paper introduces a continuous-time fast motion estimation framework using high frame-rate cameras. To recover the trajectory of high-speed motion, we adapt bundle adjustment to different frame-rate strategies. Based on the optimized trajectory, a cubic B-spline representation is proposed to parameterize the continuous-time position, velocity and acceleration during this fast motion. We designed a high-speed visual system consisting of high frame-rate cameras and infrared cameras, which captures the fast scattered motion of explosion fragments and is used to evaluate our method. The experiments show that bundle adjustment can greatly improve the accuracy and stability of the trajectory estimation, and that the B-spline representation of the high frame-rate samples can estimate the velocity, acceleration, momentum and force of each fragment at any given time during its motion. The relative error of the estimated results is under 1%.

1. Introduction

Continuous-time motion analysis provides valuable engineering support for the damage assessment and cause analysis of an explosion [1], so reconstructing a continuous-time trajectory of the scattered fragments is of great value. In this paper, we present a method for fast motion estimation of explosion fragments. The difficulty of estimating the fragments’ motion is that they are scattered randomly. The fragments created by an explosion go through the following four stages:

- Radial expansion of the shell;

- A crack appears somewhere in the shell;

- Material leakage, resulting in pressure difference between the inside and outside of the shell;

- The shell is broken randomly, forming irregular fragments which are scattered with a relatively high initial velocity.

To capture this fast, diffuse motion, high frame-rate (HFR) cameras are deployed in this research. We used two HFR cameras to build a high-speed vision system that captures the high-speed motion.

HFR cameras have overcome the restrictions posed by conventional video signals. Many offline HFR cameras can operate at over 1000 frames per second (FPS) for a very short time [2,3,4]. HFR cameras help us recognize high-speed phenomena in the real world that cannot be captured by conventional cameras (e.g., at 30 FPS). Many phenomena have been analyzed with HFR cameras, such as crash testing, sports motion, industrial robot control and chemical reactions. For 3D measurement, 3D shape inspection using HFR cameras combined with projected coded patterns can reconstruct the dynamic changes of a shape [5,6,7,8]. Deformation and vibration can also be analyzed with only an HFR system or a similar camera array [9,10]. For motion estimation, unlike shape inspection and vibration analysis, the measured object is not a continuous plane or a continuous rigid body, so a high-frequency projector does not work well. Instead, pure vision-based systems are widely deployed for activity sensing. To capture the details of the motion, many HFR vision systems have been developed [11,12,13]. Vision-based motion capture systems either use passive markers [14,15] or are marker-less systems in which motion estimation employs kinematic models of the object. Image analysis methods, such as pixel-level temporal frequency analysis, have also been presented [16,17]. Our high-speed vision system additionally uses infrared cameras that sense thermal information, which is used to control the HFR cameras and to evaluate our method.

HFR cameras can only capture samples at discrete time stamps during the explosion, and triangulation by stereo vision suffers from problems such as accuracy drift. To avoid these problems, we adopt the bundle adjustment (BA) method from the 3D reconstruction of static objects. Bundle adjustment plays an essential role in 3D reconstruction: it estimates the six degrees of freedom (DOF) (three for position and three for orientation) of the camera’s moving trajectory and the three DOF of each point in the point cloud (e.g., a 3D map) [18,19,20,21,22].

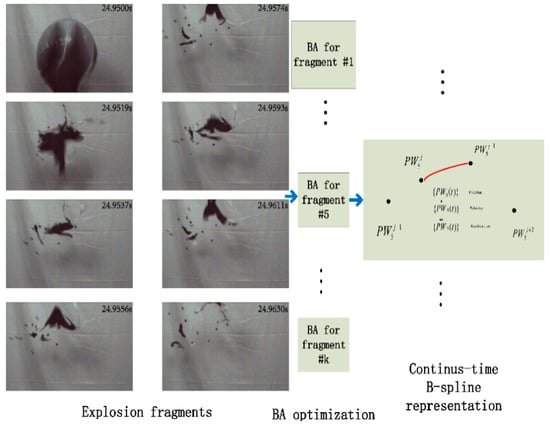

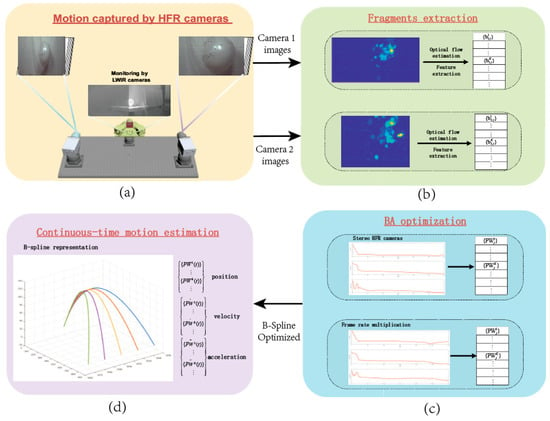

We extend bundle adjustment to reconstruct the motion along the time axis, i.e., the moving trajectory of each fragment created by the explosion is treated as a 3D structure along continuous time. Furthermore, the derivatives of these 3D positions have their own physical meaning, i.e., velocity and acceleration. We give the analytical result of the continuous-time position, velocity and acceleration estimation by using the cubic B-spline representation. The key idea of this study is shown in Figure 1.

Figure 1.

Continuous-time motion estimation using bundle adjustment (BA) and B-spline representation.

2. Bundle Adjustment for Motion Estimation Using HFR Cameras

2.1. Bundle Adjustment Reconstructs Motion’s Trajectory

The key idea of BA is that, at each moment, there exists a bundle of light rays between the camera center and the projected positions in the images, and all parameters are adjusted simultaneously and iteratively to obtain the optimal bundles over all times. The reprojection errors in bundle adjustment are the deviations between the observed position in an image and the reprojected position in the same image at each time. The motion and the trajectory are estimated along the image sequences, whereas the cameras are static with respect to the world coordinate. We define the reprojection error as the following equation:

where the two terms are the observed position and the reprojected position, i identifies the recording camera, and j is the time stamp.

The reprojected function can be expressed in short as:

where the arguments are: the position in the world coordinate at time stamp j; the rotation vector, expressed in the Lie algebra so(3), from the world coordinate to the camera coordinate; the translation vector from the world to camera i, expressed in the coordinate of camera i; and the intrinsic parameters of camera i, which consist of the focal length and the distortion parameters of the camera. Equation (2) follows the pinhole camera model with a distortion term. It can be detailed by the following equations:
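A minimal sketch of such a reprojection function is given here for illustration. It assumes a pinhole model with the principal point at the image origin and two radial distortion coefficients; the names `rodrigues`, `reproject`, `reprojection_error`, `P`, `phi`, `t`, `f`, `k1` and `k2` are choices of this example and not necessarily the paper's notation.

```python
import numpy as np

def rodrigues(phi):
    """Rotation matrix from a rotation vector phi (so(3) coordinates)."""
    theta = np.linalg.norm(phi)
    if theta < 1e-12:
        return np.eye(3)
    a = phi / theta
    a_hat = np.array([[0.0, -a[2], a[1]],
                      [a[2], 0.0, -a[0]],
                      [-a[1], a[0], 0.0]])
    return np.eye(3) + np.sin(theta) * a_hat + (1.0 - np.cos(theta)) * a_hat @ a_hat

def reproject(P, phi, t, f, k1, k2):
    """Project world point P into one camera (assumed: pinhole + 2 radial terms)."""
    Pc = rodrigues(phi) @ P + t              # world -> camera coordinate
    x, y = Pc[0] / Pc[2], Pc[1] / Pc[2]      # normalized image plane
    r2 = x * x + y * y
    d = 1.0 + k1 * r2 + k2 * r2 * r2         # radial distortion factor
    return f * d * np.array([x, y])          # offset from the principal point

def reprojection_error(z_obs, P, phi, t, f, k1, k2):
    """Observed image position minus reprojected position."""
    return np.asarray(z_obs, dtype=float) - reproject(P, phi, t, f, k1, k2)
```

With zero rotation, zero translation and no distortion, a point at (1, 0, 2) and a focal length of 100 pixels projects 50 pixels to the right of the principal point.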

With m recording cameras and n captured positions, the parameters to be optimized comprise the parameters of all m cameras and all n positions. All single reprojection errors are stacked into one reprojection error vector. The optimization process of the bundle adjustment finds the minimum of all reprojection errors. In this paper, we use the sum of squares of this large number of nonlinear functions as the cost function, which forms a nonlinear least-squares problem.

To solve the unconstrained optimization problem in Equation (4), we deploy the Levenberg–Marquardt (LM) algorithm, which is based on Taylor’s theorem and combines the line search and trust region methods. In each iteration of the LM algorithm, the increment of the optimized parameters is calculated by the following equation:

where the Jacobian matrix is calculated analytically from the partial derivatives of the reprojection error; the damping factor controls whether the LM search behaves like a line search or a trust region method; and D is the damping matrix. In the original LM algorithm, D is set as the identity matrix. In this paper, we set D as a diagonal matrix whose diagonal elements are the eigenvalues of the product of the transposed Jacobian with the Jacobian, which is also denoted as the Hessian matrix. The benefit of this design is that it balances the magnitude gap between the position, orientation and intrinsic parameters.
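For orientation, the damped normal equation that an LM iteration solves is commonly written as below. The symbols are assumptions of this sketch and may differ from the paper's notation: $J$ is the Jacobian of the stacked reprojection error $e$, $\mu$ is the damping factor, $D$ is the damping matrix and $\Delta x$ is the parameter increment.

```latex
\left( J^{\top} J + \mu D \right) \Delta x = - J^{\top} e
```

A small $\mu$ gives a step close to Gauss–Newton, while a large $\mu$ gives a short step along the scaled negative gradient.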

The key to solving the bundle adjustment is the Jacobian matrix. From Equation (3), we give the analytical partial derivatives of the reprojection error with respect to each optimized parameter by using the properties of the rotation Lie algebra so(3) and the chain rule. For the rotation vector, the partial derivative can be expressed as:

where the skew operator gives the skew-symmetric matrix of the related vector, and the other two partial derivatives can be easily calculated by using Equation (3). The remaining factor is the left Jacobian of the Baker–Campbell–Hausdorff (BCH) formula, with
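The skew-symmetric matrix and the left Jacobian of SO(3) can be sketched as follows. This uses the standard closed form of the left Jacobian from the Lie-group literature, which is assumed here to match the paper's definition; the function names are illustrative.

```python
import numpy as np

def skew(v):
    """Skew-symmetric matrix such that skew(v) @ w == np.cross(v, w)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])

def exp_so3(phi):
    """Exponential map from a rotation vector to a rotation matrix."""
    theta = np.linalg.norm(phi)
    if theta < 1e-12:
        return np.eye(3) + skew(phi)
    A = skew(phi / theta)
    return np.eye(3) + np.sin(theta) * A + (1.0 - np.cos(theta)) * A @ A

def left_jacobian(phi):
    """Left Jacobian J_l with exp(phi + d) ~= exp(J_l(phi) d) exp(phi) for small d."""
    theta = np.linalg.norm(phi)
    if theta < 1e-12:
        return np.eye(3) + 0.5 * skew(phi)
    a = phi / theta
    s = np.sin(theta) / theta
    return (s * np.eye(3)
            + (1.0 - s) * np.outer(a, a)
            + (1.0 - np.cos(theta)) / theta * skew(a))
```

The first-order BCH property in the docstring of `left_jacobian` can be checked numerically with a small perturbation of the rotation vector.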

For the translation vector, the partial derivative is expressed in Equation (7). Equation (8) is for the intrinsic parameters, and Equation (9) is for the position at time stamp j,

Equations (6)–(9) form the Jacobian matrix. The block formed by Equations (6)–(8) is the camera’s Jacobian for camera i at time stamp j, and the block formed by Equation (9) is the position’s Jacobian observed by camera i at time stamp j. The Jacobian matrix is detailed in the subsequent sections, which combine the HFR cameras’ properties and deployment strategies.

The optimization procedure of the bundle adjustment for motion estimation is summarized in the pseudocode of Algorithm 1.

| Algorithm 1: Bundle adjustment for motion estimation | |

| Input: Series of observed points from the image sequences of all cameras | |

| Output: Camera parameters and all captured positions | |

| 1: | V; |

| 2: | |

| 3: | =; g :=; |

| 4: | found := ; |

| 5: | while not found and do |

| 6: | k = k + 1; |

| 7: | Solve Equation (5) |

| 8: | |

| 9: | found := true; |

| 10: | else |

| 11: | ; |

| 12: | then |

| 13: | ; ; |

| 14: | else |

| 15: | using Equations (1)–(9) |

| 16: | ; g := |

| 17: | |

| 18: | |

| 19: | end if |

| 20: | end if |

| 21: | end while |
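As a rough illustration of the loop structure in Algorithm 1, the following is a generic Levenberg–Marquardt sketch in the widely used gain-ratio form. It is not the authors' implementation: the stopping thresholds `eps1` and `eps2`, the initial scale `tau`, and the use of the diagonal of the approximate Hessian as damping matrix (Marquardt-style scaling, swapped in here for simplicity) are all assumptions.

```python
import numpy as np

def levenberg_marquardt(residual, jacobian, x0, k_max=300,
                        eps1=1e-10, eps2=1e-10, tau=1e-3):
    """Minimize 0.5 * ||residual(x)||^2; returns (x, iterations used)."""
    x = np.asarray(x0, dtype=float)
    k, nu = 0, 2.0
    e, J = residual(x), jacobian(x)
    A, g = J.T @ J, J.T @ e                      # approximate Hessian, gradient
    mu = tau * np.max(np.diag(A))
    found = np.linalg.norm(g, np.inf) <= eps1
    while not found and k < k_max:
        k += 1
        D = np.diag(np.diag(A))                  # assumed damping matrix
        h = np.linalg.solve(A + mu * D, -g)      # damped normal equation
        if np.linalg.norm(h) <= eps2 * (np.linalg.norm(x) + eps2):
            found = True
        else:
            x_new = x + h
            e_new = residual(x_new)
            actual = 0.5 * (e @ e - e_new @ e_new)
            predicted = 0.5 * h @ (mu * D @ h - g)
            rho = actual / predicted             # gain ratio
            if rho > 0:                          # accept step, relax damping
                x, e = x_new, e_new
                J = jacobian(x)
                A, g = J.T @ J, J.T @ e
                found = np.linalg.norm(g, np.inf) <= eps1
                mu *= max(1.0 / 3.0, 1.0 - (2.0 * rho - 1.0) ** 3)
                nu = 2.0
            else:                                # reject step, increase damping
                mu *= nu
                nu *= 2.0
    return x, k
```

In a bundle adjustment setting, `residual` would stack all reprojection errors and `jacobian` would assemble the camera and position blocks described in this section.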

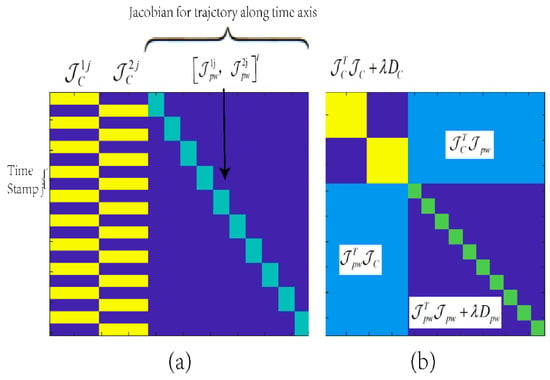

2.2. Jacobian Matrix for Stereo HFR Cameras

Stereo vision is widely used for non-contact measurement, which makes it suitable for the physical evaluation of an explosion. In this section, we use motion captured by synchronized stereo HFR cameras. For each fragment captured by these stereo HFR cameras during its movement, the structure of the Jacobian matrix is shown in Figure 2. Figure 2 presents the first 20 samples (i.e., the first 37 ms) of HFR cameras running at 540 FPS. In Figure 2, each 4 × 18 block in yellow and purple is the Jacobian of both cameras at time stamp j; the blocks in yellow are the camera Jacobians, and the blocks in purple are zeros. The block in light blue is the position Jacobian at time stamp j. Both the Jacobian matrix and the Hessian matrix are sparse. Therefore, we use the Schur complement to solve Equation (5). Equation (5) can be specified as:

Figure 2.

(a) Jacobian matrix for stereo high frame-rate (HFR) cameras; (b) related Hessian Matrix of these two HFR cameras.

Since the dimension of the camera parameters is much smaller than that of the positions, and P is a block diagonal matrix with full rank, we use the Schur complement as follows:

Equation (11) efficiently reduces the complexity of the calculation. From Figure 2b we can see that, since matrix P is a block diagonal matrix, its inversion is relatively simple. The original Equation (5) can be easily solved by Equations (11) and (12), especially when the number of captured images is large.
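The Schur complement elimination can be sketched as follows. The block names `C` (camera block), `E` (camera-position coupling), `v` and `w` (right-hand sides) are assumptions of this example; only the block diagonal position matrix P is named in the text. Each 3 × 3 position block is inverted on its own, which is what keeps the inversion cheap.

```python
import numpy as np

def solve_schur(C, E, P_blocks, v, w):
    """Solve [[C, E], [E.T, P]] [dc, dp] = [v, w] with P block diagonal (3x3 blocks)."""
    n = len(P_blocks)
    P_inv = np.zeros((3 * n, 3 * n))
    for j, Pj in enumerate(P_blocks):
        # invert each small position block independently
        P_inv[3 * j:3 * j + 3, 3 * j:3 * j + 3] = np.linalg.inv(Pj)
    S = C - E @ P_inv @ E.T                       # reduced camera system
    dc = np.linalg.solve(S, v - E @ P_inv @ w)    # camera increment
    dp = P_inv @ (w - E.T @ dc)                   # back-substituted positions
    return dc, dp
```

The dense solve is then only as large as the camera block (18 × 18 for two cameras with nine parameters each), independent of the number of captured positions.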

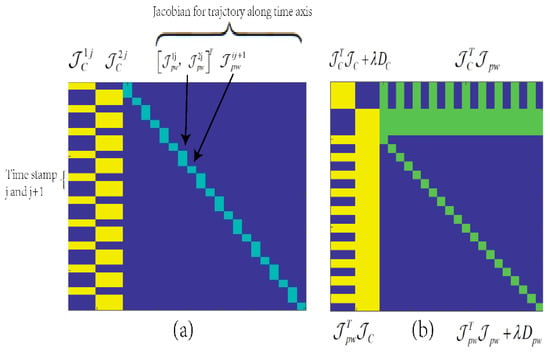

2.3. Jacobian Matrix for HFR Cameras with Frame-Rate Multiplication

For HFR cameras, the achievable frame-rate depends heavily on the resolution of the frame, the exposure time, the bandwidth of the hardware, etc. In general, the resolution affects the frame-rate the most, and the resolution also determines the monitored field of view (FOV). For fragments produced by the explosion, we want to use a high frame-rate (over 500 FPS) to capture the moment of the explosion, and we also want to record the scattered fragments for as long as possible.

To solve this problem, we use two HFR cameras with frame-rate multiplication, e.g., one HFR camera at 540 FPS and another at 1080 FPS, so that the frame-rate of the second camera is twice that of the first. Over the same first 37 ms as in Figure 2, this gives 20 frames for the first camera and 40 frames for the second camera. The Jacobian matrix of these two HFR cameras is presented in Figure 3. Each 6 × 18 block in yellow and purple is the Jacobian of both cameras at time stamps j and j + 1, with 4 × 18 for both cameras at time j and 2 × 18 for camera two at time j + 1. The blocks in yellow are the camera Jacobians. The blocks in light blue are the position Jacobians at time stamps j and j + 1. The Jacobian matrix and the Hessian matrix are again sparse, and with Equations (11) and (12) the iteration step can be solved efficiently.

Figure 3.

(a) Jacobian matrix for HFR cameras with frame-rate multiplication; (b) related Hessian matrix of these two HFR cameras.

The interval between captured frames and the synchronization of our HFR cameras are strictly controlled by hardware instruments. This gives us two benefits: (1) the Jacobian matrix is arranged strictly along the time axis with a constant interval; (2) the Jacobian matrix presented in this paper has a block diagonal structure, which reduces the complexity of the iteration. These are clearly demonstrated in the examples of Figure 2 and Figure 3.
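The block structure of both strategies can be reproduced with a small sparsity-pattern sketch. It assumes nine parameters per camera (three rotation, three translation and three intrinsic), which is inferred from the 18-column camera blocks and is not stated explicitly; the function name and layout are illustrative.

```python
import numpy as np

CAM_PARAMS = 9   # assumed: 3 rotation + 3 translation + 3 intrinsic parameters
N_CAMS = 2

def jacobian_pattern(frames_cam1, frames_cam2):
    """Boolean sparsity pattern; frames_camX lists the time stamps seen by camera X."""
    obs = [(0, j) for j in frames_cam1] + [(1, j) for j in frames_cam2]
    obs.sort(key=lambda ij: (ij[1], ij[0]))          # rows ordered along the time axis
    n_pos = max(j for _, j in obs) + 1
    n_cols = N_CAMS * CAM_PARAMS + 3 * n_pos
    pattern = np.zeros((2 * len(obs), n_cols), dtype=bool)
    for r, (i, j) in enumerate(obs):
        rows = slice(2 * r, 2 * r + 2)               # two image coordinates per observation
        pattern[rows, i * CAM_PARAMS:(i + 1) * CAM_PARAMS] = True   # camera block
        c0 = N_CAMS * CAM_PARAMS + 3 * j
        pattern[rows, c0:c0 + 3] = True              # position block at time stamp j
    return pattern

# First 37 ms: 20 frames at 540 FPS, or 40 frames at 1080 FPS.
stereo = jacobian_pattern(range(20), range(20))        # synchronized stereo
multi = jacobian_pattern(range(0, 40, 2), range(40))   # frame-rate multiplication
```

Under these assumptions the synchronized pattern has shape 80 × 78 and the multiplication pattern has shape 120 × 138, and in both cases the position part of the product of the transposed pattern with itself is block diagonal.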

To improve the accuracy and reduce the number of iteration steps, the initialization of the optimized parameters is important. For synchronized stereo HFR cameras, we can initialize the positions by a stereo calculation at each time stamp. With frame-rate multiplication, however, at certain time stamps only one camera observes the fragment. To solve this problem, we use pose averaging to initialize the position which is observed by only one camera, i.e.,

where k is the time interval to the last time stamp at which at least two cameras observed the fragment, and the second interval is the time to the following time stamp at which both cameras captured the fragment. Based on our initialization strategy, the number of iteration steps can be reduced to fewer than 300 for both the frame-synchronized stereo cameras and the frame-rate multiplication, where we set , and the norm of all reprojection errors is less than pixels.
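One plausible reading of this pose averaging is an interval-weighted linear interpolation between the two neighbouring stereo-triangulated positions. The sketch below shows that reading only; the exact averaging formula, the function name and the symbol `l` for the forward interval are assumptions of this example.

```python
import numpy as np

def init_single_view_position(P_prev, P_next, k, l):
    """Initial guess for a position seen by one camera only.

    P_prev: stereo position k intervals earlier; P_next: stereo position l intervals later.
    The nearer neighbour receives the larger weight.
    """
    P_prev = np.asarray(P_prev, dtype=float)
    P_next = np.asarray(P_next, dtype=float)
    return (l * P_prev + k * P_next) / (k + l)
```

With a 540 FPS and a 1080 FPS camera, every second time stamp is seen by one camera only, so k = l = 1 and the initial guess is the midpoint of the two neighbouring stereo positions.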

3. Continuous-Time Spline Representation for Fast Motion Estimation

With the bundle adjustment under HFR cameras, each fragment’s movement is optimized to a trajectory sampled at the high frame-rate. For continuous-time motion estimation, the velocity and the acceleration cannot be calculated precisely by directly differencing the bundle adjustment’s results. To solve this problem, we use a B-spline for continuous-time motion estimation, i.e., position, velocity and acceleration estimation based on the results optimized by bundle adjustment.

3.1. B-Spline Interpolation

Splines are an excellent choice for representing a continuous trajectory because their derivatives can be computed analytically. B-splines are chosen in this paper as they are a well-known representation for trajectories in ℝ³ [22,23,24]. B-splines use a series of control points (also denoted as knots) to define a linear combination of continuous functions constrained by the knots. The interpolation between a series of 3D positions (knots) presented in the B-spline is as follows:

where the basis function is given by the de Boor–Cox recursive formula and acts as the fractional coefficient of each given control point. The recursive formula is:
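The de Boor–Cox recursion can be written compactly as follows. This is the textbook form of the recursion; the function name, argument order and the convention that 0/0 terms are treated as zero are choices of this sketch.

```python
def bspline_basis(i, p, u, knots):
    """Value of the i-th B-spline basis function of degree p at parameter u."""
    if p == 0:
        return 1.0 if knots[i] <= u < knots[i + 1] else 0.0
    left = right = 0.0
    d1 = knots[i + p] - knots[i]
    if d1 > 0:  # skip terms with zero-length support
        left = (u - knots[i]) / d1 * bspline_basis(i, p - 1, u, knots)
    d2 = knots[i + p + 1] - knots[i + 1]
    if d2 > 0:
        right = (knots[i + p + 1] - u) / d2 * bspline_basis(i + 1, p - 1, u, knots)
    return left + right
```

For a uniform knot vector such as `[0, 1, ..., 7]` and degree 3, the four basis functions that are non-zero on the interval [3, 4) sum to one, which is the property that makes the spline a weighted average of its control points.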

3.2. Cubic B-Spline Representation for Continuous-Time Motion Estimation

Estimating velocity and acceleration requires C² continuity, a good approximation of minimal-torque trajectories and a parameterization of rigid-body motion that is free of singularities [25,26]. A cubic B-spline representation satisfies these conditions. We extend the 3D position to a pose in SE(3), i.e.,

where the rotation term is the rotation between the world coordinate and the fragment coordinate at time stamp j. When we treat the fragment as a single point, this rotation is set to the identity. For the cubic B-spline, Equation (14) can be updated by using Equation (16), i.e.,

We define the time fraction as u(t). By using the Lie algebra of SE(3) and its properties, we give the analytical results for the position (Equation (18)), the velocity (Equation (19)) and the acceleration (Equation (20)) at time fraction u(t) between two consecutive time stamps.

The parameters in these equations are defined by the following formulas:
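For a fragment treated as a single point, the position, velocity and acceleration of a uniform cubic B-spline segment can be evaluated in closed form. The sketch below uses the standard matrix form of the uniform cubic B-spline in ℝ³ rather than the SE(3) formulation of Equations (18)–(20); the names `M`, `ctrl`, `u` and `dt` are assumptions of this example.

```python
import numpy as np

# Uniform cubic B-spline basis matrix (rows: powers of u, columns: control points).
M = np.array([[1.0, 4.0, 1.0, 0.0],
              [-3.0, 0.0, 3.0, 0.0],
              [3.0, -6.0, 3.0, 0.0],
              [-1.0, 3.0, -3.0, 1.0]]) / 6.0

def cubic_bspline_state(ctrl, u, dt):
    """Position, velocity and acceleration on one segment.

    ctrl: four consecutive 3D control points, shape (4, 3)
    u:    time fraction in [0, 1) within the segment
    dt:   sampling interval between control points in seconds
    """
    ctrl = np.asarray(ctrl, dtype=float)
    pos = np.array([1.0, u, u ** 2, u ** 3]) @ M @ ctrl
    vel = np.array([0.0, 1.0, 2.0 * u, 3.0 * u ** 2]) @ M @ ctrl / dt
    acc = np.array([0.0, 0.0, 2.0, 6.0 * u]) @ M @ ctrl / dt ** 2
    return pos, vel, acc
```

Because the derivatives are taken analytically with respect to u and scaled by the sampling interval, the state can be queried at any time fraction, not only at the captured time stamps.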

3.3. Accuracy Evaluation of B-Spline Representation

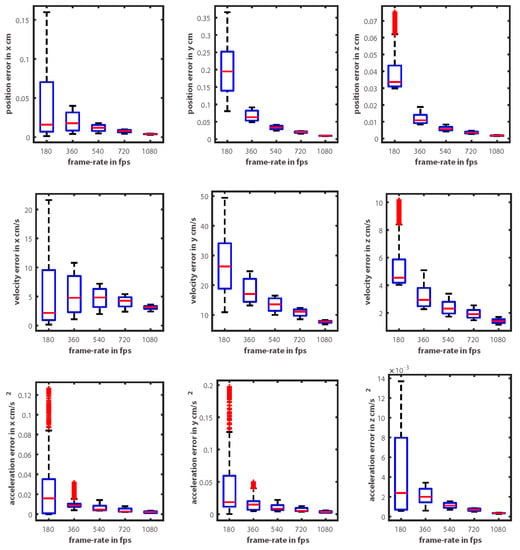

Using Equations (18)–(20), the continuous-time position, velocity and acceleration of a moving object can be estimated. The sampling frequency of the knots (i.e., the frame-rate of the cameras) influences the accuracy of the estimated result. We used HFR cameras at different frame-rates to capture the known motion of a moving object. Using cubic B-spline motion estimation, the distributions of the position error, velocity error and acceleration error along the three axes of the world coordinate are shown in Figure 4.

Figure 4.

Boxplot of position error, velocity error and acceleration error (cm/s²) in three axes of the world coordinate with a frame-rate from 180 to 1080 FPS.

With an initial velocity of around 30 m/s and a captured frame-rate of over 500 FPS, the velocity error of the B-spline estimation can be kept under 16 cm/s. The percentage errors of the position, velocity and acceleration are under 1%.
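As a quick check of the velocity figure, using only the two numbers quoted above, the relative velocity error is bounded by:

```latex
\frac{0.16\ \mathrm{m/s}}{30\ \mathrm{m/s}} \approx 0.0053 = 0.53\% < 1\%
```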

4. Experiments and Evaluation

4.1. Experimental Setup of Explosion Simulation

We used two balloons with coffee beans placed between them to simulate the explosion fragments. We placed coffee beans between the two balloons because the initial velocity of the broken shell alone is low. With around 0.1 g of coffee beans, a high-speed scattered motion can be created. Similar to the regular explosion described in the introduction, our simulated explosion follows four similar stages:

- The balloons are inflated in the inner shell and the inflation behavior is mainly radial;

- The elastic limit causes breakage of the balloons;

- Because of the elastic contraction of the balloon, a pressure difference between the inside and outside is established at the same time;

- The shells of the balloons are randomly broken, and the coffee beans are distributed randomly during the inflation. After the balloons are broken, the coffee beans between these two balloons are scattered with a high initial velocity due to the elasticity and pressure difference.

We used two Emergent Vision Technology (EVT) HFR cameras (10 GigE, HT-2000). With these two cameras, the frame-rate can be set from 1080 FPS down to 91 FPS, with the resolution ranging from 512 × 320 to 2048 × 1088, respectively. The HFR cameras captured the scattered motion of the explosion. We also deployed a monitoring module composed of two long-wavelength infrared (LWIR) cameras and one large FOV visible camera running at a regular frame-rate (60 FPS). The FOV covered by this module is 120 degrees in both the visible band and the LWIR band. The monitoring module helped us (1) monitor the explosion’s environment for safety concerns, (2) control the stop time of the HFR cameras to save storage space, and (3) capture the possible landing position of each fragment for further research. Our instruments and constructed balloons are shown in Figure 5.

Figure 5.

(a) Constructed balloons for explosion under long-wavelength infrared (LWIR) view, (b) measurement instruments with the monitoring module in the middle.

4.2. Evaluation

The procedure of the continuous-time fast motion estimation is shown in Figure 6 and detailed as follows:

Figure 6.

Procedure of our method. (a) Motion captured by HFR cameras; (b) Fragments extraction; (c) Bundle adjustment optimization; (d) Continuous-time motion estimation.

- (a) The motion of the explosion fragments is captured by the HFR cameras with the chosen frame-rate strategy (synchronization or multiplication), while the large FOV LWIR cameras monitor the explosion (shown in Figure 6a);

- (b) With the images recorded by both HFR cameras, we use dense optical flow (the Farnebäck method [27]) to extract the motion areas of the images at each time stamp j. The pixel motion of the different fragments is analyzed (shown in Figure 6b). Then we combine HOG feature extraction and connected-component analysis on every motion area extracted by the optical flow estimation. The image position (area) of the fragments in each captured frame is extracted, i.e., the dataset of each camera’s observed positions of fragment k is established;

- (c) The bundle adjustment is optimized based on the HFR cameras’ working strategy, which has been detailed in the previous section. Figure 6c demonstrates the variation of three key parameters (damping factor, norm of reprojection error , and , from top to bottom) of the BA along the iteration step. From the BA optimization, the 3D positions of fragment k at each captured time stamp j are established;

- (d) With the optimized 3D positions, the continuous-time motion (position, velocity, acceleration) of each fragment is estimated by the cubic B-spline representation.

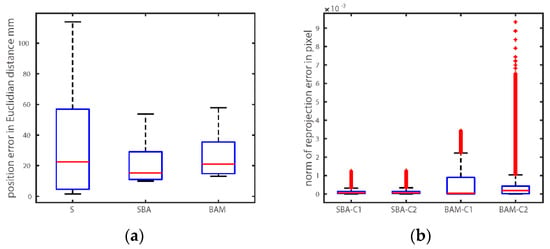

The accuracy of our BA optimization with the different frame-rate strategies is presented in this section. We compare synchronization frame-rate bundle adjustment (denoted as SBA) and bundle adjustment with frame-rate magnitude (denoted as BAM) with direct stereo calculation. The Euclidean distance is used to evaluate the position error, while the norm of the reprojection error from each camera evaluates the accuracy of the proposed methods. We use boxplots to show the distribution of the position error in Figure 7a and of the reprojection error in Figure 7b (where -Ci means reprojection in camera i).

Figure 7.

Boxplot of (a) position error, (b) reprojection error of each camera.

The mean error of position (MEP), mean square error of position (MSEP), mean error of reprojection error (MERE) and mean square error of reprojection error (MESRE) in pixels are shown in Table 1.

Table 1.

Accuracy evaluation of BA.
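The position metrics can be sketched as the mean and mean square of the per-sample Euclidean distances. The exact definitions behind MEP and MSEP are not spelled out here, so this reading and the function name are assumptions; the same function applies to 2D reprojection errors in pixels.

```python
import numpy as np

def mean_and_mean_square_error(estimated, reference):
    """Mean and mean square of per-sample Euclidean error norms."""
    diff = np.asarray(estimated, dtype=float) - np.asarray(reference, dtype=float)
    d = np.linalg.norm(diff, axis=1)   # one distance per time stamp
    return d.mean(), (d ** 2).mean()
```

For two samples with distances of 0 and 5 units, this returns a mean error of 2.5 and a mean square error of 12.5.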

Figure 7 and Table 1 demonstrate that bundle adjustment optimization greatly improves the accuracy and stability of the trajectory estimation, especially with the frame synchronization strategy (SBA). BAM can efficiently increase the recording time of the motion. Compared to SBA, the lack of observations from camera one at certain time stamps causes BAM to lose roughly 5 mm of position accuracy and 0.001 pixel in MESRE. This is why the outliers of BAM-C2 are significantly high. When the captured motion is around the center area of the camera image (around 45 pixels), the effect of distortion is small, and the position calculated directly by stereo can achieve relatively high accuracy. In these areas, bundle adjustment optimization updates the intrinsic parameters instead of the position. This is why the minimum position error of direct stereo calculation is lower than that of the BA optimization. This is the only drawback of trajectory estimation using bundle adjustment.

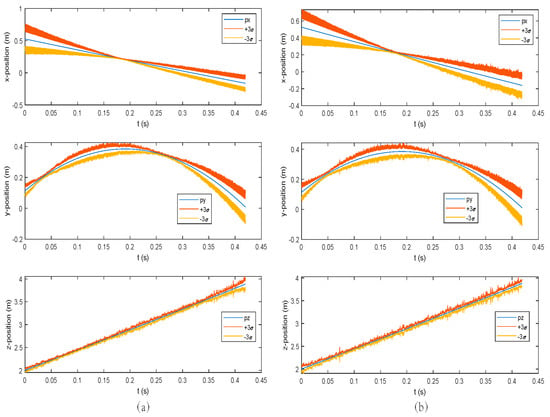

A representative example of one fragment’s motion estimated by SBA and BAM with uncertainty bounds (3σ) is shown in Figure 8. We plot the whole 419 ms from the explosion until the fragment is scattered out of the cameras’ FOV. For SBA, both HFR cameras run at 540 FPS with 1024 × 480 resolution. For BAM, one camera runs at 540 FPS with 1024 × 480 resolution and the other at 1080 FPS with 512 × 320 resolution.

Figure 8.

Estimated position (in blue) with 3σ bounds (red and orange) in three axes; (a) is estimated by SBA + B-spline, (b) is estimated by BAM + B-spline.

The B-spline representation is sampled at six times the higher frame-rate, i.e., 6480 FPS, which exceeds the limit of our HFR cameras. The uncertainty data of five fragments scattered in this explosion are shown in Table 2 and Table 3. All these supplementary data are calculated by the B-spline representation. Based on the estimated velocity and acceleration, the momentum and force of each fragment can also be provided. These physical parameters are very important for damage assessment.
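Momentum and force follow from the estimated velocity and acceleration through p = m v and F = m a. The sketch below uses a hypothetical fragment mass and hypothetical velocity and acceleration values purely for illustration; none of these numbers are measurements from the experiment.

```python
import numpy as np

def momentum_and_force(mass_kg, velocity, acceleration):
    """Momentum (kg m/s) and force (N) of a point mass from its velocity and acceleration."""
    v = np.asarray(velocity, dtype=float)
    a = np.asarray(acceleration, dtype=float)
    return mass_kg * v, mass_kg * a

# Dense sampling rate quoted in the text: six times the higher camera frame-rate.
dense_rate_fps = 6 * 1080

# Hypothetical example: a 0.1 g fragment moving at 30 m/s along x under gravity only.
p, F = momentum_and_force(1e-4, [30.0, 0.0, 0.0], [0.0, 0.0, -9.8])
```

Evaluating the spline state at each of the densely sampled time fractions and passing it through this function yields momentum and force curves at the same rate.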

Table 2.

The uncertainty data of five fragments using synchronization frame-rate bundle adjustment (SBA).

Table 3.

The uncertainty data of five fragments using bundle adjustment with frame-rate magnitude (BAM).

5. Discussion and Conclusions

In this paper, a continuous-time motion estimation method for explosion fragments is proposed. We balanced the FOV and the frame-rate of the HFR cameras, designed a high-speed vision system, and captured the moment of the explosion and the motion of the high-speed fragments. Based on our frame-rate strategies, two adapted bundle adjustments, i.e., SBA and BAM, are proposed for the trajectory estimation of high-speed motion. With the optimized trajectory, a cubic B-spline representation of the continuous-time position, velocity and acceleration is proposed for this fast motion. Furthermore, the momentum and force can also be estimated at any given time. With these quantitative parameters, the damage caused by the fragments can be assessed.

The experiments show that, with our adapted bundle adjustment combined with the B-spline representation, the position, velocity and acceleration can be estimated accurately and robustly at any given time, and that the relative error of the estimated motion can be kept under 1% by using high frame-rate cameras.

Author Contributions

Conceptualization, Y.N. and F.L.; methodology, Y.N.; validation, Y.W.; supervision, X.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No statement.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Mohr, L.; Benauer, R.; Leitl, P.; Fraundorfer, F. Damage estimation of explosions in urban environments by simulation. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. Arch. 2019, 42, 253–260.

- Gu, Q.-Y.; Ishii, I. Review of some advances and applications in real-time high-speed vision: Our views and experiences. Int. J. Autom. Comput. 2016, 13, 305–318.

- Li, J.; Liu, X.; Liu, F.; Xu, D.; Gu, Q.; Ishii, I. A hardware-oriented algorithm for ultra-high-speed object detection. IEEE Sens. J. 2019, 19, 3818–3831.

- Li, J.; Yin, Y.; Liu, X.; Xu, D.; Gu, Q. 12,000-fps multi-object detection using HOG descriptor and SVM classifier. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 14 December 2017; pp. 5928–5933.

- Gao, H.; Gu, Q.; Takaki, T.; Ishii, I. A self-projected light-section method for fast three-dimensional shape inspection. Int. J. Optomechatron. 2012, 6, 289–303.

- Van der Jeught, S.; Dirckx, J.J. Real-time structured light profilometry: A review. Opt. Lasers Eng. 2016, 87, 18–31.

- Sharma, A.; Raut, S.; Shimasaki, K.; Senoo, T.; Ishii, I. HFR projector camera based visible light communication system for real-time video streaming. Sensors 2020, 20, 5368.

- Landmann, M.; Heist, S.; Dietrich, P.; Lutzke, P.; Gebhart, I.; Templin, J.; Kühmstedt, P.; Tünnermann, A.; Notni, G. High-speed 3D thermography. Opt. Lasers Eng. 2019, 121, 448–455.

- Chen, R.; Li, Z.; Zhong, K.; Liu, X.; Chao, Y.J.; Shi, Y. Low-speed-camera-array-based high-speed three-dimensional deformation measurement method: Principle, validation, and application. Opt. Lasers Eng. 2018, 107, 21–27.

- Jiang, M.; Aoyama, T.; Takaki, T.; Ishii, I. Pixel-level and robust vibration source sensing in high-frame-rate video analysis. Sensors 2016, 16, 1842.

- Ishii, I.; Tatebe, T.; Gu, Q.; Moriue, Y.; Takaki, T.; Tajima, K. 2000 fps real-time vision system with high-frame-rate video recording. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 1536–1541.

- Raut, S.; Shimasaki, K.; Singh, S.; Takaki, T.; Ishii, I. Real-time high-resolution video stabilization using high-frame-rate jitter sensing. ROBOMECH J. 2019, 6, 16.

- Hu, S.; Matsumoto, Y.; Takaki, T.; Ishii, I. Monocular stereo measurement using high-speed catadioptric tracking. Sensors 2017, 17, 1839.

- Wang, S.; Xu, Y.; Zheng, Y.; Zhu, M.; Yao, H.; Xiao, Z. Tracking a golf ball with high-speed stereo vision system. IEEE Trans. Instrum. Meas. 2018, 68, 2742–2754.

- Hu, S.; Jiang, M.; Takaki, T.; Ishii, I. Real-time monocular three-dimensional motion tracking using a multithread active vision system. J. Robot. Mechatron. 2018, 30, 453–466.

- Shimasaki, K.; Jiang, M.; Takaki, T.; Ishii, I.; Yamamoto, K. HFR-video-based honeybee activity sensing. IEEE Sens. J. 2020, 20, 5575–5587.

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003.

- Bustos, Á.P.; Chin, T.-J.; Eriksson, A.; Reid, I. Visual SLAM: Why bundle adjust? In Proceedings of the IEEE 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 2385–2391.

- Agarwal, S.; Snavely, N.; Seitz, S.M.; Szeliski, R. Bundle adjustment in the large. In Proceedings of the European Conference on Computer Vision, Hersonissos, Greece, 5–11 September 2010; pp. 29–42.

- Chebrolu, N.; Läbe, T.; Vysotska, O.; Behley, J.; Stachniss, C. Adaptive robust kernels for non-linear least squares problems. arXiv 2020, arXiv:2004.14938.

- Gong, Y.; Meng, D.; Seibel, E.J. Bound constrained bundle adjustment for reliable 3D reconstruction. Opt. Express 2015, 23, 10771–10785.

- Qin, K. General matrix representations for B-splines. Vis. Comput. 2000, 16, 177–186.

- Mueggler, E.; Gallego, G.; Rebecq, H.; Scaramuzza, D. Continuous-time visual-inertial odometry for event cameras. IEEE Trans. Robot. 2018, 34, 1425–1440.

- Ovrén, H.; Forssén, P.-E. Trajectory representation and landmark projection for continuous-time structure from motion. Int. J. Robot. Res. 2019, 38, 686–701.

- Lovegrove, S.; Patron-Perez, A.; Sibley, G. Spline fusion: A continuous-time representation for visual-inertial fusion with application to rolling shutter cameras. BMVC 2013, 2, 8.

- Geneva, P.; Eckenhoff, K.; Lee, W.; Yang, Y.; Huang, G. OpenVINS: A research platform for visual-inertial estimation. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–30 June 2020; pp. 4666–4672.

- Farnebäck, G. Two-frame motion estimation based on polynomial expansion. In Proceedings of the Scandinavian Conference on Image Analysis, Halmstad, Sweden, 29 June–2 July 2003; pp. 363–370.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).