Quality Assessment of 3D Synthesized Images Based on Textural and Structural Distortion Estimation

Abstract

1. Introduction

2. Related Work

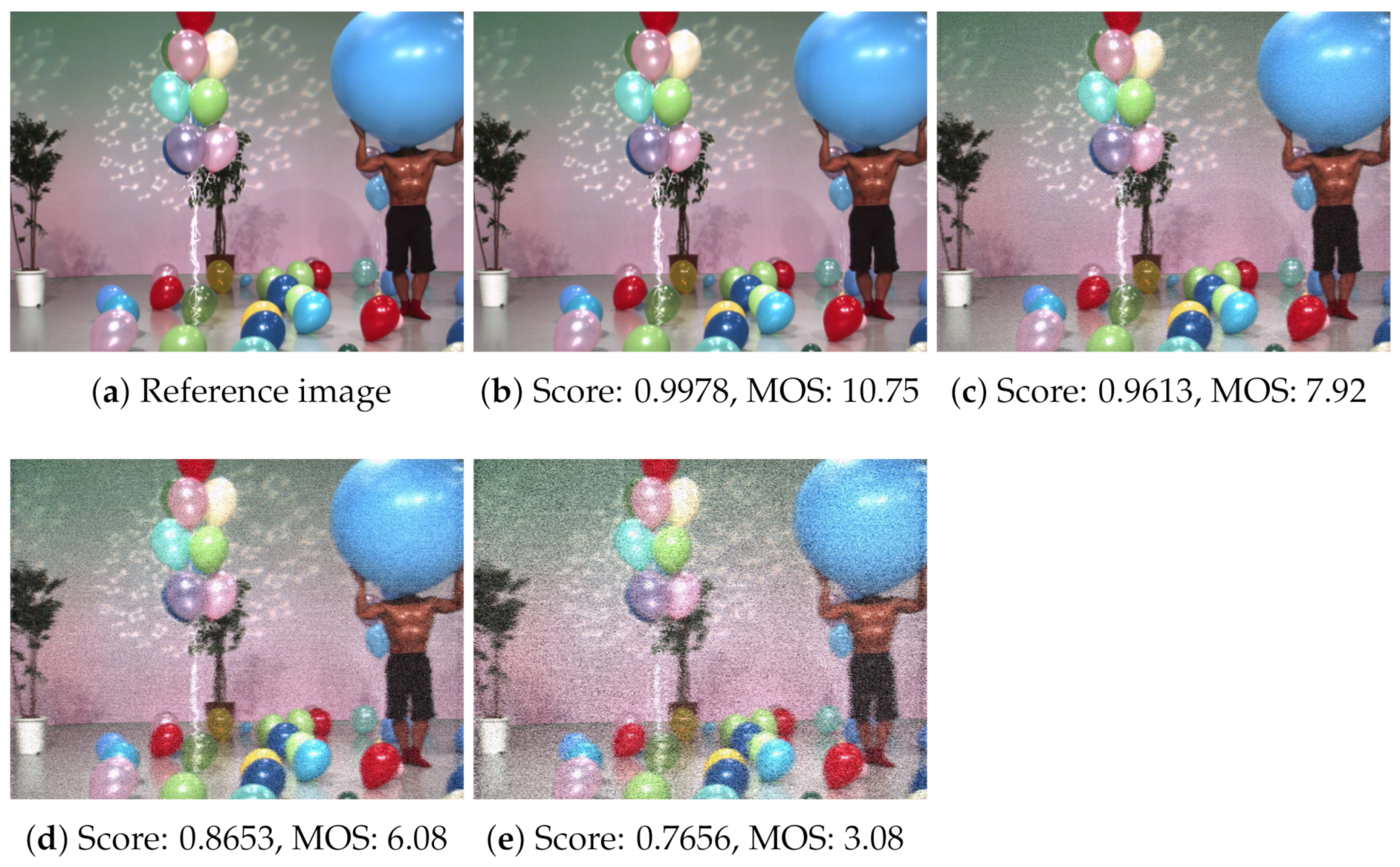

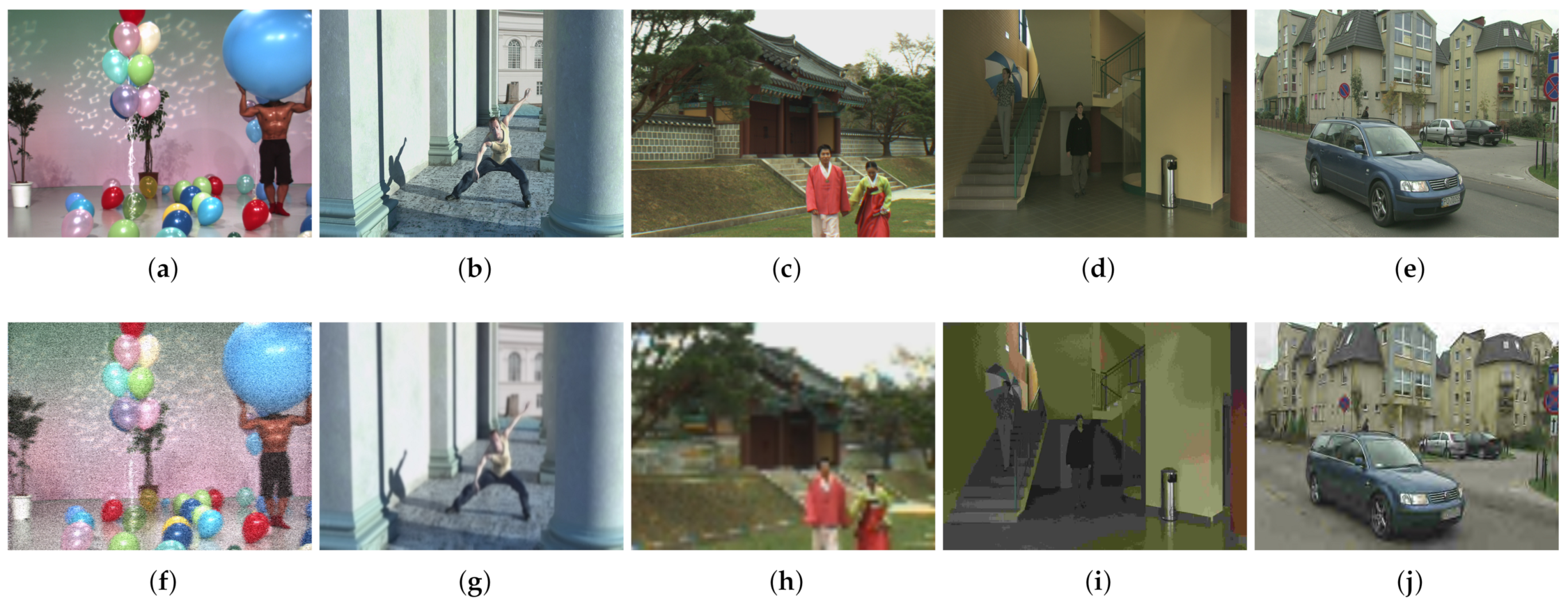

3. The Proposed Technique

3.1. Estimating the Textural Distortion

3.2. Estimating the Structural Distortion

3.3. Final Quality Score

4. Experiments and Results

4.1. Dataset

4.2. Performance Evaluation Parameters

4.3. Performance Comparison with 2D and 3D-IQA Metrics

4.4. Variance of the Residual Analysis

4.5. Statistical Significance Test

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Tanimoto, M.; Tehrani, M.P.; Fujii, T.; Yendo, T. Free-viewpoint TV. IEEE Signal Process. Mag. 2010, 28, 67–76.

- Fehn, C.; De La Barré, R.; Pastoor, S. Interactive 3-DTV-concepts and key technologies. Proc. IEEE 2006, 94, 524–538.

- Scharstein, D.; Szeliski, R. A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. Int. J. Comput. Vis. 2002, 47, 7–42.

- Merkle, P.; Morvan, Y.; Smolic, A.; Farin, D.; Mueller, K.; De With, P.; Wiegand, T. The effects of multiview depth video compression on multiview rendering. Signal Process.-Image Commun. 2009, 24, 73–88.

- Farid, M.S.; Lucenteforte, M.; Grangetto, M. Edges shape enforcement for visual enhancement of depth image based rendering. In Proceedings of the 2013 IEEE 15th International Workshop on Multimedia Signal Processing (MMSP), Pula, Italy, 30 September–2 October 2013; pp. 406–411.

- Bosc, E.; Le Callet, P.; Morin, L.; Pressigout, M. Visual Quality Assessment of Synthesized Views in the Context of 3D-TV. In 3D-TV System with Depth-Image-Based Rendering: Architectures, Techniques and Challenges; Zhu, C., Zhao, Y., Yu, L., Tanimoto, M., Eds.; Springer: New York, NY, USA, 2013; pp. 439–473.

- Farid, M.S.; Lucenteforte, M.; Grangetto, M. Edge enhancement of depth based rendered images. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 5452–5456.

- Huang, H.Y.; Huang, S.Y. Fast Hole Filling for View Synthesis in Free Viewpoint Video. Electronics 2020, 9, 906.

- Boev, A.; Hollosi, D.; Gotchev, A.; Egiazarian, K. Classification and simulation of stereoscopic artifacts in mobile 3DTV content. In Stereoscopic Displays and Applications XX; Woods, A.J., Holliman, N.S., Merritt, J.O., Eds.; International Society for Optics and Photonics, SPIE: San Jose, CA, USA, 2009; Volume 7237, pp. 462–473.

- Hewage, C.T.; Martini, M.G. Quality of experience for 3D video streaming. IEEE Commun. Mag. 2013, 51, 101–107.

- Niu, Y.; Lin, L.; Chen, Y.; Ke, L. Machine learning-based framework for saliency detection in distorted images. Multimed. Tools Appl. 2017, 76, 26329–26353.

- Fang, Y.; Yuan, Y.; Li, L.; Wu, J.; Lin, W.; Li, Z. Performance evaluation of visual tracking algorithms on video sequences with quality degradation. IEEE Access 2017, 5, 2430–2441.

- Korshunov, P.; Ooi, W.T. Video quality for face detection, recognition, and tracking. ACM Trans. Multimed. Comput. Commun. Appl. 2011, 7, 1–21.

- Wang, Z.; Bovik, A.C.; Lu, L. Why is image quality assessment so difficult? In Proceedings of the 2002 IEEE International Conference on Acoustics, Speech, and Signal Processing, Orlando, FL, USA, 13–17 May 2002; Volume 4, p. IV–3313.

- Wang, Z.; Lu, L.; Bovik, A.C. Video quality assessment based on structural distortion measurement. Signal Process.-Image Commun. 2004, 19, 121–132.

- Liu, X.; Zhang, Y.; Hu, S.; Kwong, S.; Kuo, C.C.J.; Peng, Q. Subjective and objective video quality assessment of 3D synthesized views with texture/depth compression distortion. IEEE Trans. Image Process. 2015, 24, 4847–4861.

- Varga, D. Multi-Pooled Inception Features for No-Reference Image Quality Assessment. Appl. Sci. 2020, 10, 2186.

- Bosc, E.; Le Callet, P.; Morin, L.; Pressigout, M. An edge-based structural distortion indicator for the quality assessment of 3D synthesized views. In Proceedings of the Picture Coding Symposium, Krakow, Poland, 7–9 May 2012; pp. 249–252.

- You, J.; Xing, L.; Perkis, A.; Wang, X. Perceptual quality assessment for stereoscopic images based on 2D image quality metrics and disparity analysis. In Proceedings of the International Workshop Video Process. Quality Metrics Consum. Electron, Scottsdale, AZ, USA, 13–15 January 2010; Volume 9, pp. 1–6.

- Seuntiens, P. Visual Experience of 3D TV. Ph.D. Thesis, Eindhoven University of Technology, Eindhoven, The Netherlands, 2006.

- Campisi, P.; Le Callet, P.; Marini, E. Stereoscopic images quality assessment. In Proceedings of the 15th European Signal Processing Conference, Poznan, Poland, 3–7 September 2007; pp. 2110–2114.

- Banitalebi-Dehkordi, A.; Nasiopoulos, P. Saliency inspired quality assessment of stereoscopic 3D video. Multimed. Tools Appl. 2018, 77, 26055–26082.

- Sandić-Stanković, D.; Kukolj, D.; Le Callet, P. DIBR-synthesized image quality assessment based on morphological multi-scale approach. EURASIP J. Image Video Process. 2016, 2017, 4.

- Voo, K.H.; Bong, D.B. Quality assessment of stereoscopic image by 3D structural similarity. Multimed. Tools Appl. 2018, 77, 2313–2332.

- Chen, M.J.; Su, C.C.; Kwon, D.K.; Cormack, L.K.; Bovik, A.C. Full-reference quality assessment of stereopairs accounting for rivalry. Signal Process.-Image Commun. 2013, 28, 1143–1155.

- Zhan, J.; Niu, Y.; Huang, Y. Learning from multi metrics for stereoscopic 3D image quality assessment. In Proceedings of the International Conference on 3D Imaging (IC3D), Liege, Belgium, 13–14 December 2016; pp. 1–8.

- Jung, Y.J.; Kim, H.G.; Ro, Y.M. Critical Binocular Asymmetry Measure for the Perceptual Quality Assessment of Synthesized Stereo 3D Images in View Synthesis. IEEE Trans. Circuits Syst. Video Technol. 2016, 26, 1201–1214.

- Niu, Y.; Zhong, Y.; Ke, X.; Shi, Y. Stereoscopic Image Quality Assessment Based on both Distortion and Disparity. In Proceedings of the 2018 IEEE Visual Communications and Image Processing (VCIP), Taichung, Taiwan, 9–12 December 2018; pp. 1–4.

- Wang, Z.; Simoncelli, E.P.; Bovik, A.C. Multiscale structural similarity for image quality assessment. In Proceedings of the Thirty-Seventh Asilomar Conference on Signals, Systems & Computers, Pacific Grove, CA, USA, 9–12 November 2003; Volume 2, pp. 1398–1402.

- Liu, C.; Yuen, J.; Torralba, A.; Sivic, J.; Freeman, W.T. SIFT Flow: Dense Correspondence across Different Scenes. In ECCV; Forsyth, D., Torr, P., Zisserman, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 28–42.

- Liang, H.; Chen, X.; Xu, H.; Ren, S.; Cai, H.; Wang, Y. Local Foreground Removal Disocclusion Filling Method for View Synthesis. IEEE Access 2020, 8, 201286–201299.

- Mahmoudpour, S.; Schelkens, P. Synthesized View Quality Assessment Using Feature Matching and Superpixel Difference. IEEE Signal Process. Lett. 2020, 27, 1650–1654.

- Wang, L.; Zhao, Y.; Ma, X.; Qi, S.; Yan, W.; Chen, H. Quality Assessment for DIBR-Synthesized Images With Local and Global Distortions. IEEE Access 2020, 8, 27938–27948.

- Wang, X.; Shao, F.; Jiang, Q.; Fu, R.; Ho, Y. Quality Assessment of 3D Synthesized Images via Measuring Local Feature Similarity and Global Sharpness. IEEE Access 2019, 7, 10242–10253.

- Chen, Z.; Zhou, W.; Li, W. Blind stereoscopic video quality assessment: From depth perception to overall experience. IEEE Trans. Image Process. 2017, 27, 721–734.

- Gorley, P.; Holliman, N. Stereoscopic image quality metrics and compression. In Stereoscopic Displays and Applications XIX; International Society for Optics and Photonics: San Jose, CA, USA, 29 February 2008; Volume 6803, p. 680305.

- Yang, J.; Jiang, B.; Song, H.; Yang, X.; Lu, W.; Liu, H. No-reference stereoimage quality assessment for multimedia analysis towards Internet-of-Things. IEEE Access 2018, 6, 7631–7640.

- Park, M.; Luo, J.; Gallagher, A.C. Toward assessing and improving the quality of stereo images. IEEE J. Sel. Top. Signal Process. 2012, 6, 460–470.

- Chen, L.; Zhao, J. Quality assessment of stereoscopic 3D images based on local and global visual characteristics. In Proceedings of the 2017 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Hong Kong, China, 10–14 July 2017; pp. 61–66.

- Huang, S.; Zhou, W. Learning to Measure Stereoscopic S3D Image Perceptual Quality on the Basis of Binocular Rivalry Response. Appl. Sci. 2019, 9, 906.

- Shao, F.; Lin, W.; Gu, S.; Jiang, G.; Srikanthan, T. Perceptual full-reference quality assessment of stereoscopic images by considering binocular visual characteristics. IEEE Trans. Image Process. 2013, 22, 1940–1953.

- Bensalma, R.; Larabi, M.C. A perceptual metric for stereoscopic image quality assessment based on the binocular energy. Multidimens. Syst. Signal Process. 2013, 24, 281–316.

- Shao, F.; Li, K.; Lin, W.; Jiang, G.; Yu, M.; Dai, Q. Full-reference quality assessment of stereoscopic images by learning binocular receptive field properties. IEEE Trans. Image Process. 2015, 24, 2971–2983.

- Shao, F.; Li, K.; Lin, W.; Jiang, G.; Yu, M. Using binocular feature combination for blind quality assessment of stereoscopic images. IEEE Signal Process. Lett. 2015, 22, 1548–1551.

- Lin, Y.H.; Wu, J.L. Quality assessment of stereoscopic 3D image compression by binocular integration behaviors. IEEE Trans. Image Process. 2014, 23, 1527–1542.

- Wang, X.; Kwong, S.; Zhang, Y. Considering binocular spatial sensitivity in stereoscopic image quality assessment. In Proceedings of the 2011 Visual Communications and Image Processing (VCIP), Tainan, Taiwan, 6–9 November 2011; pp. 1–4.

- Zhou, W.; Yu, L. Binocular responses for no-reference 3D image quality assessment. IEEE Trans. Multimed. 2016, 18, 1077–1084.

- Chen, M.J.; Su, C.C.; Kwon, D.K.; Cormack, L.K.; Bovik, A.C. Full-reference quality assessment of stereoscopic images by modeling binocular rivalry. In Proceedings of the 2012 Conference Record of the Forty Sixth Asilomar Conference on Signals, Systems and Computers (ASILOMAR), Pacific Grove, CA, USA, 4–7 November 2012; pp. 721–725.

- Wang, J.; Rehman, A.; Zeng, K.; Wang, S.; Wang, Z. Quality prediction of asymmetrically distorted stereoscopic 3D images. IEEE Trans. Image Process. 2015, 24, 3400–3414.

- Ryu, S.; Kim, D.H.; Sohn, K. Stereoscopic image quality metric based on binocular perception model. In Proceedings of the 2012 19th IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012; pp. 609–612.

- Shao, F.; Li, K.; Lin, W.; Jiang, G.; Dai, Q. Learning blind quality evaluator for stereoscopic images using joint sparse representation. IEEE Trans. Multimed. 2016, 18, 2104–2114.

- Liu, Y.; Yang, J.; Meng, Q.; Lv, Z.; Song, Z.; Gao, Z. Stereoscopic image quality assessment method based on binocular combination saliency model. Signal Process. 2016, 125, 237–248.

- Peddle, D.R.; Franklin, S.E. Image Texture Processing and Data Integration. Photogramm. Eng. Remote Sens. 1991, 57, 413–420.

- Livens, S. Image Analysis for Material Characterisation. Ph.D. Thesis, Universitaire Instelling Antwerpen, Antwerpen, Belgium, 1998.

- Battisti, F.; Bosc, E.; Carli, M.; Le Callet, P.; Perugia, S. Objective image quality assessment of 3D synthesized views. Signal Process.-Image Commun. 2015, 30, 78–88.

- Webster, M.A.; Miyahara, E. Contrast adaptation and the spatial structure of natural images. JOSA A 1997, 14, 2355–2366.

- Westen, S.J.; Lagendijk, R.L.; Biemond, J. Perceptual image quality based on a multiple channel HVS model. In Proceedings of the International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Detroit, MI, USA, 9–12 May 1995; Volume 4, pp. 2351–2354.

- Peli, E. Contrast in complex images. JOSA A 1990, 7, 2032–2040.

- Damera-Venkata, N.; Kite, T.D.; Geisler, W.S.; Evans, B.L.; Bovik, A.C. Image quality assessment based on a degradation model. IEEE Trans. Image Process. 2000, 9, 636–650.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Kang, K.; Liu, X.; Lu, K. 3D image quality assessment based on texture information. In Proceedings of the 2014 IEEE 17th International Conference on Computational Science and Engineering, Chengdu, China, 19–21 December 2014; pp. 1785–1788.

- Chen, G.H.; Yang, C.L.; Xie, S.L. Gradient-based structural similarity for image quality assessment. In Proceedings of the 2006 International Conference on Image Processing (ICIP), Atlanta, GA, USA, 8–11 October 2006; pp. 2929–2932.

- Chen, G.H.; Yang, C.L.; Po, L.M.; Xie, S.L. Edge-based structural similarity for image quality assessment. In Proceedings of the 2006 IEEE International Conference on Acoustics Speech and Signal Processing (ICASSP), Toulouse, France, 14–19 May 2006; Volume 2, p. II.

- Yang, C.L.; Gao, W.R.; Po, L.M. Discrete wavelet transform-based structural similarity for image quality assessment. In Proceedings of the 2008 15th IEEE International Conference on Image Processing (ICIP), San Diego, CA, USA, 12–15 October 2008; pp. 377–380.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R. Structural similarity based image quality assessment. In Digital Video Image Quality and Perceptual Coding; Marcel Dekker Series in Signal Processing and Communications; CRC Press: Boca Raton, FL, USA, 18 November 2005; pp. 225–241.

- Huttenlocher, D.P.; Klanderman, G.A.; Rucklidge, W.J. Comparing images using the Hausdorff distance. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 850–863.

- Tsai, C.T.; Hang, H.M. Quality assessment of 3D synthesized views with depth map distortion. In Proceedings of the 2013 Visual Communications and Image Processing (VCIP), Kuching, Malaysia, 17–20 November 2013; pp. 1–6.

- Vivek, E.P.; Sudha, N. Gray Hausdorff distance measure for comparing face images. IEEE Trans. Inf. Forensics Secur. 2006, 1, 342–349.

- Canny, J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698.

- Song, R.; Ko, H.; Kuo, C. MCL-3D: A database for stereoscopic image quality assessment using 2D-image-plus-depth source. arXiv 2014, arXiv:1405.1403.

- Tian, D.; Lai, P.L.; Lopez, P.; Gomila, C. View synthesis techniques for 3D video. In Proceedings of the Applications of Digital Image Processing XXXII, San Diego, CA, USA, 3–5 August 2009; International Society for Optics and Photonics: San Diego, CA, USA, 2009; Volume 7443, p. 74430T.

- Sheikh, H.R.; Sabir, M.F.; Bovik, A.C. A statistical evaluation of recent full reference image quality assessment algorithms. IEEE Trans. Image Process. 2006, 15, 3440–3451.

- Farid, M.S.; Lucenteforte, M.; Grangetto, M. Evaluating virtual image quality using the side-views information fusion and depth maps. Inf. Fusion 2018, 43, 47–56.

- Chandler, D.M.; Hemami, S.S. VSNR: A wavelet-based visual signal-to-noise ratio for natural images. IEEE Trans. Image Process. 2007, 16, 2284–2298.

- Sheikh, H.R.; Bovik, A.C.; De Veciana, G. An information fidelity criterion for image quality assessment using natural scene statistics. IEEE Trans. Image Process. 2005, 14, 2117–2128.

- Sheikh, H.R.; Bovik, A.C. Image information and visual quality. IEEE Trans. Image Process. 2006, 15, 430–444.

- Wang, Z.; Bovik, A.C. A universal image quality index. IEEE Signal Process. Lett. 2002, 9, 81–84.

- De Silva, V.; Arachchi, H.K.; Ekmekcioglu, E.; Kondoz, A. Toward an impairment metric for stereoscopic video: A full-reference video quality metric to assess compressed stereoscopic video. IEEE Trans. Image Process. 2013, 22, 3392–3404.

- Benoit, A.; Le Callet, P.; Campisi, P.; Cousseau, R. Quality assessment of stereoscopic images. EURASIP J. Image Video Process. 2008, 2008, 659024.

- Joveluro, P.; Malekmohamadi, H.; Fernando, W.C.; Kondoz, A. Perceptual video quality metric for 3D video quality assessment. In Proceedings of the 2010 3DTV-Conference: The True Vision—Capture, Transmission and Display of 3D Video (3DTV-CON), Tampere, Finland, 7–9 June 2010; pp. 1–4.

- Tian, S.; Zhang, L.; Morin, L.; Deforges, O. NIQSV: A no reference image quality assessment metric for 3D synthesized views. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 1248–1252.

- Ling, S.; Le Callet, P. Image quality assessment for free viewpoint video based on mid-level contours feature. In Proceedings of the 2017 IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017; pp. 79–84.

| Metric | PLCC | SROCC | KROCC | RMSE | MAE |
|---|---|---|---|---|---|
| PSNR | 0.8226 | 0.8400 | 0.6393 | 1.4794 | 1.1830 |
| SSIM | 0.7656 | 0.7777 | 0.5796 | 1.6737 | 1.2810 |
| VSNR | 0.8287 | 0.8368 | 0.6357 | 1.4560 | 1.1520 |
| IFC | 0.5104 | 0.7423 | 0.5495 | 2.2376 | 1.8331 |
| MSSIM | 0.8666 | 0.8762 | 0.6852 | 1.2983 | 1.0188 |
| VIF | 0.7983 | 0.7933 | 0.5999 | 1.5668 | 1.2240 |
| UQI | 0.7537 | 0.7564 | 0.5595 | 1.7099 | 1.3066 |
| Ours | 0.8909 | 0.8979 | 0.7095 | 1.1816 | 0.9245 |
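The tables report five standard agreement measures between objective scores and subjective ratings: PLCC, SROCC, KROCC, RMSE and MAE. The following is a minimal, dependency-free Python sketch of how these measures are commonly computed; it is not the authors' evaluation code. It assumes `pred` has already been mapped onto the subjective-score scale (IQA studies usually fit a nonlinear, e.g. logistic, function before computing PLCC, RMSE and MAE, and that step is omitted here), and it computes Kendall's tau-a, which differs from the tie-corrected tau-b when tied scores occur. All function names are illustrative.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """PLCC: Pearson linear correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def ranks(v: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(v)), key=lambda i: v[i])
    r = [0.0] * len(v)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and v[order[j + 1]] == v[order[i]]:
            j += 1
        for k in range(i, j + 1):
            r[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return r


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """SROCC: Pearson correlation computed on the ranks."""
    return pearson(ranks(x), ranks(y))


def kendall_tau_a(x: Sequence[float], y: Sequence[float]) -> float:
    """KROCC as tau-a: (concordant - discordant) / number of pairs; tied pairs count as neither."""
    n = len(x)
    s = 0
    for i in range(n):
        for j in range(i + 1, n):
            prod = (x[i] - x[j]) * (y[i] - y[j])
            s += (prod > 0) - (prod < 0)
    return s / (n * (n - 1) / 2)


def rmse(pred: Sequence[float], mos: Sequence[float]) -> float:
    """Root-mean-square error between (mapped) predictions and subjective scores."""
    return math.sqrt(sum((p - m) ** 2 for p, m in zip(pred, mos)) / len(mos))


def mae(pred: Sequence[float], mos: Sequence[float]) -> float:
    """Mean absolute error between (mapped) predictions and subjective scores."""
    return sum(abs(p - m) for p, m in zip(pred, mos)) / len(mos)


if __name__ == "__main__":
    mos = [1.2, 2.5, 3.1, 4.0, 4.8]   # hypothetical subjective scores
    pred = [1.0, 2.9, 2.8, 4.2, 4.6]  # hypothetical predictions already on the MOS scale
    for name, fn in [("PLCC", pearson), ("SROCC", spearman), ("KROCC", kendall_tau_a),
                     ("RMSE", rmse), ("MAE", mae)]:
        print(name, round(fn(pred, mos), 4))
```

SROCC and KROCC depend only on rank order, so any monotonic mapping of the objective scores leaves them unchanged, whereas PLCC, RMSE and MAE do depend on the mapping.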

| Metric | PLCC | SROCC | KROCC | RMSE | MAE |
|---|---|---|---|---|---|
| 3DSwIM | 0.6497 | 0.5683 | 0.4088 | 1.9777 | 1.4902 |
| StSD | 0.6995 | 0.7008 | 0.5084 | 1.8593 | 1.4563 |
| Chen | 0.8615 | 0.8708 | 0.6769 | 1.3212 | 1.0362 |
| Benoit | 0.7425 | 0.7518 | 0.5589 | 1.7429 | 1.3480 |
| Campisi | 0.7656 | 0.7777 | 0.5796 | 1.6737 | 1.2810 |
| PQM | 0.8612 | 0.8646 | 0.6729 | 1.3225 | 1.0029 |
| Gorley | 0.7099 | 0.7196 | 0.5270 | 1.8323 | 1.4749 |
| You | 0.7504 | 0.7567 | 0.5600 | 1.7196 | 1.3325 |
| SIQM | 0.7744 | 0.7756 | 0.5648 | 1.6461 | 1.2757 |
| You | 0.3650 | 0.6609 | 0.4938 | 2.4222 | 2.0123 |
| Ryu | 0.8752 | 0.8824 | 0.6923 | 1.2584 | 0.9859 |
| NIQSV | 0.6783 | 0.6208 | 0.4384 | 1.9118 | 1.6032 |
| ST-SIAQ | 0.7133 | 0.7034 | 0.5118 | 1.8233 | 1.4028 |
| Ours | 0.8909 | 0.8979 | 0.7095 | 1.1816 | 0.9245 |

| Metric | AWN | Gauss | Sample | Transloss | JPEG | JP2K |
|---|---|---|---|---|---|---|
| PSNR | 0.9125 | 0.9047 | 0.9051 | 0.8602 | 0.7491 | 0.8719 |
| SSIM | 0.8652 | 0.8594 | 0.8535 | 0.8542 | 0.7849 | 0.8052 |
| VSNR | 0.8943 | 0.8533 | 0.8449 | 0.7571 | 0.7352 | 0.6150 |
| IFC | 0.9114 | 0.7116 | 0.7733 | 0.6004 | 0.8394 | 0.5985 |
| MSSIM | 0.9103 | 0.9077 | 0.9106 | 0.9011 | 0.9097 | 0.8963 |
| VIF | 0.9555 | 0.9285 | 0.9128 | 0.8168 | 0.9417 | 0.9155 |
| UQI | 0.8501 | 0.8669 | 0.8608 | 0.8494 | 0.8896 | 0.7537 |
| Ours | 0.9081 | 0.9332 | 0.9310 | 0.8195 | 0.9502 | 0.9369 |

| Metric | AWN | Gauss | Sample | Transloss | JPEG | JP2K |
|---|---|---|---|---|---|---|
| 3DSwIM | 0.4640 | 0.8218 | 0.8127 | 0.7566 | 0.6431 | 0.6478 |
| StSD | 0.7472 | 0.8429 | 0.8392 | 0.6527 | 0.7372 | 0.7836 |
| Chen | 0.9058 | 0.8978 | 0.9088 | 0.8842 | 0.9078 | 0.8928 |
| Benoit | 0.9102 | 0.8600 | 0.8544 | 0.6796 | 0.8044 | 0.8064 |
| Campisi | 0.8652 | 0.8594 | 0.8535 | 0.8542 | 0.7849 | 0.8052 |
| Ryu | 0.9343 | 0.9409 | 0.9163 | 0.8685 | 0.8935 | 0.9284 |
| PQM | 0.8653 | 0.9346 | 0.9337 | 0.8052 | 0.8732 | 0.9027 |
| Gorley | 0.7735 | 0.8550 | 0.8544 | 0.6043 | 0.8326 | 0.9051 |
| You | 0.9278 | 0.8560 | 0.8564 | 0.7930 | 0.7934 | 0.8071 |
| SIQM | 0.7798 | 0.8738 | 0.8673 | 0.6851 | 0.8858 | 0.7057 |
| NIQSV | 0.8184 | 0.9156 | 0.8862 | 0.719 | 0.2798 | 0.7070 |
| ST-SIAQ | 0.7884 | 0.8215 | 0.8070 | 0.8264 | 0.742 | 0.7884 |
| You | 0.8856 | 0.8685 | 0.7138 | 0.5373 | 0.8599 | 0.9121 |
| Ours | 0.9081 | 0.9332 | 0.9370 | 0.8195 | 0.9502 | 0.9369 |

| 3D-IQA Metric | Variance | 2D-IQA Metric | Variance |
|---|---|---|---|
| 3DSwIM | 3.9174 | PSNR | 2.1919 |
| StSD | 3.4625 | SSIM | 2.8056 |
| Chen | 1.7483 | VSNR | 2.1232 |
| Benoit | 3.0423 | IFC | 5.0145 |
| Campisi | 2.8056 | MSSIM | 1.6881 |
| Ryu | 1.5860 | VIF | 2.4588 |
| PQM | 1.7518 | UQI | 2.9281 |
| Gorley | 3.3626 | | |
| You | 2.9615 | | |
| You | 5.8762 | | |
| SIQM | 2.7138 | | |
| ST-SIAQ | 3.3297 | | |
| NIQSV | 3.6606 | | |
| Ours | 1.3983 | | |
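The residual variance measures how widely the objective predictions scatter around the subjective scores once the objective scores have been mapped onto the subjective scale; lower is better. The sketch below is a minimal illustration, not the authors' procedure: it assumes a least-squares linear mapping as a simple stand-in for the nonlinear (typically logistic) regression usually used in IQA evaluations, and it uses the sample variance with an n - 1 denominator. Neither choice is confirmed by the table, and the function names are hypothetical.

```python
from statistics import mean, variance
from typing import Sequence, Tuple


def fit_linear(scores: Sequence[float], mos: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit mos ~ a * score + b (a simple stand-in for a logistic mapping)."""
    ms, mm = mean(scores), mean(mos)
    sxx = sum((s - ms) ** 2 for s in scores)
    sxy = sum((s - ms) * (m - mm) for s, m in zip(scores, mos))
    a = sxy / sxx
    return a, mm - a * ms


def residual_variance(scores: Sequence[float], mos: Sequence[float]) -> float:
    """Sample variance of the residuals mos - mapped(score)."""
    a, b = fit_linear(scores, mos)
    residuals = [m - (a * s + b) for s, m in zip(scores, mos)]
    return variance(residuals)  # n - 1 denominator


if __name__ == "__main__":
    scores = [0.61, 0.72, 0.80, 0.88, 0.93]  # hypothetical objective scores
    mos = [1.5, 2.4, 3.3, 3.9, 4.7]          # hypothetical subjective scores
    print(round(residual_variance(scores, mos), 4))
```

Because the fit includes an intercept, the residuals have zero mean, so this value is simply the residual sum of squares divided by n - 1.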

| Metric | 3DSwIM | Benoit | Campisi | Gorley | PQM | Ryu | SIQM | StSD | You | Chen | NIQSV | ST-SIAQ | You | Ours |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | AGJjST | AGJjST | AGJjST | AGJjST | AGJjST | AGJjST | AGJjST | AGJjST | AGJjST | AGJjST | AGJjST | AGJjST | AGJjST | AGJjST |
| 3DSwIM | ------ | 0-00-- | 0-00-0 | 0-00-1 | 00000- | 000000 | 0--0-- | 0-0--- | 0-00-- | 000000 | 00-10- | 0-0--- | 0-0011 | 00000- |
| Benoit | 1-11-- | ------ | 1----0 | 1-0--- | 100000 | -00000 | 1-10-- | 1----- | -----0 | --0000 | 1011-- | 1----0 | --0-1- | -00000 |
| Campisi | 1-11-1 | 0----1 | ------ | 1-0--1 | -0000- | 00000- | 1-10-1 | 1----1 | 0----- | 0-000- | -011-1 | 1----- | --0011 | 00000- |
| Gorley | 1-11-0 | 0-1--- | 0-1--0 | ------ | 00--00 | 00-000 | --10-- | --11-- | 0-1--0 | 00-000 | -011-- | --11-0 | 0---1- | 000000 |
| PQM | 11111- | 011111 | -1111- | 11--11 | ------ | 0-0--0 | 111-11 | 111111 | 01111- | 01-0-0 | --111- | 11111- | -1--11 | 0-00-- |
| Ryu | 111111 | -11111 | 11111- | 11-111 | 1-1--1 | ------ | 111-11 | 111111 | -11111 | 111--- | 1111-1 | 11111- | 11--11 | 1--0-- |
| SIQM | 1--1-- | 0-01-- | 0-01-0 | --01-- | 000-00 | 000-00 | ------ | ---1-- | 0-01-0 | 0-0-00 | -0-1-- | ---110 | 0-0-1- | 000000 |
| StSD | 1-1--- | 0----- | 0----0 | --00-- | 000000 | 000000 | ---0-- | ------ | 0----0 | 000000 | -0-10- | -----0 | 0-001- | 000000 |
| You | 1-11-- | -----1 | 1----- | 1-0--1 | 10000- | -00000 | 1-10-1 | 1----1 | ------ | -00000 | 1011-- | 1----- | 1-0011 | -0000- |
| Chen | 111111 | --1111 | 1-111- | 11-111 | 10-1-1 | 000--- | 1-1-11 | 111111 | -11111 | ------ | 1-11-1 | 111111 | ---111 | -000-1 |
| NIQSV | 11-01- | 0100-- | -100-0 | -100-- | --000- | 0000-0 | -1-0-- | -1-01- | 0100-- | 0-00-0 | ------ | -1-010 | 010011 | 0-0000 |
| ST-SIAQ | 1-1--- | 0----1 | 0----- | --00-1 | 00000- | 00000- | ---001 | -----1 | 0----- | 000000 | -0-101 | ------ | 0-0011 | 00000- |
| You | 1-1100 | --1-0- | --1100 | 1---0- | -0--00 | 00--00 | 1-1-0- | 1-110- | 0-1100 | ---000 | 101100 | 1-1100 | ------ | -0-000 |
| Ours | 11111- | -11111 | 11111- | 111111 | 1-11-- | 0--1-- | 111111 | 111111 | -1111- | -111-0 | 1-1111 | 11111- | -1-111 | ------ |
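Each cell of the significance table holds a six-character code, apparently one character per distortion type; AGJjST presumably abbreviates AWN, Gauss, JPEG, JP2K, Sample and Transloss, but that column order is an assumption. The matrix is antisymmetric and the proposed metric's row is dominated by 1s, which suggests that 1 means the row metric is significantly better than the column metric, 0 means significantly worse, and - means no significant difference. A common way to produce such entries is an F-test on the ratio of residual variances, as in the statistical evaluation by Sheikh et al. listed in the references. The stdlib-only sketch below implements that test under stated assumptions (Gaussian residuals, one-sided tests at level `alpha` in each direction); it is illustrative, not the authors' procedure, and all function names are hypothetical.

```python
import math


def _betacf(a: float, b: float, x: float, max_iter: int = 300, eps: float = 3e-14) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300  # guards against division by zero
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def reg_inc_beta(a: float, b: float, x: float) -> float:
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    # Evaluate the continued fraction where it converges fastest; otherwise use symmetry.
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def f_cdf(f: float, d1: int, d2: int) -> float:
    """CDF of the F distribution with (d1, d2) degrees of freedom."""
    if f <= 0.0:
        return 0.0
    return reg_inc_beta(d1 / 2.0, d2 / 2.0, d1 * f / (d1 * f + d2))


def compare_residual_variances(var_row: float, n_row: int,
                               var_col: float, n_col: int, alpha: float = 0.05) -> str:
    """'1' if the row metric's residual variance is significantly smaller (better),
    '0' if significantly larger (worse), '-' otherwise. Variances must be positive."""
    f = var_col / var_row  # F statistic with (n_col - 1, n_row - 1) degrees of freedom
    cdf = f_cdf(f, n_col - 1, n_row - 1)
    if 1.0 - cdf < alpha:
        return "1"
    if cdf < alpha:
        return "0"
    return "-"
```

Running `compare_residual_variances` once per distortion type, with each metric's per-distortion residual variance and sample count, would yield the six characters of one cell; swapping the row and column arguments swaps 1 and 0, which is consistent with the antisymmetry of the table.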

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Alvi, H.M.U.H.; Farid, M.S.; Khan, M.H.; Grzegorzek, M. Quality Assessment of 3D Synthesized Images Based on Textural and Structural Distortion Estimation. Appl. Sci. 2021, 11, 2666. https://doi.org/10.3390/app11062666


Chicago/Turabian Style: Alvi, Hafiz Muhammad Usama Hassan, Muhammad Shahid Farid, Muhammad Hassan Khan, and Marcin Grzegorzek. 2021. "Quality Assessment of 3D Synthesized Images Based on Textural and Structural Distortion Estimation" Applied Sciences 11, no. 6: 2666. https://doi.org/10.3390/app11062666
