2.2. Camera Pose Estimation and Target Detection

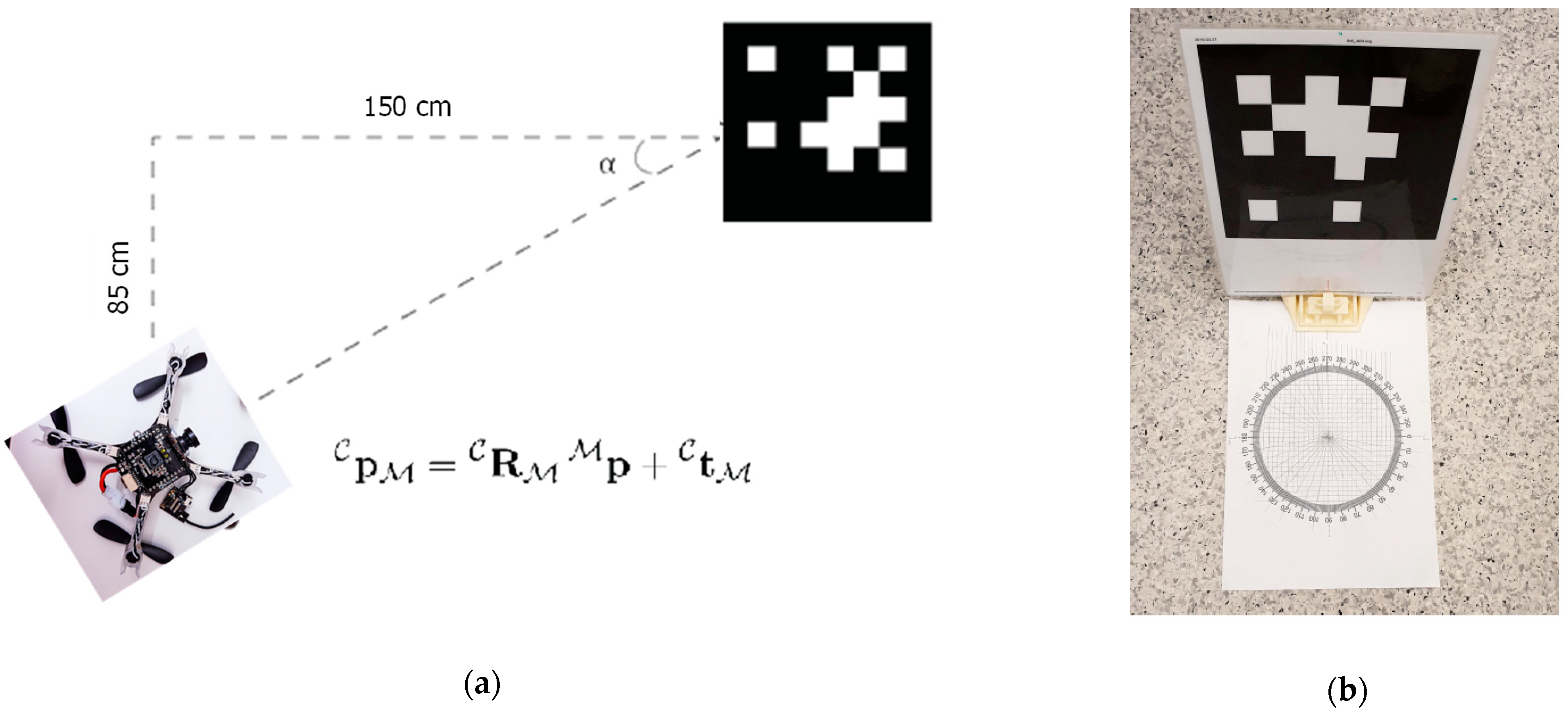

In this research, in order perform the camera pose estimation and target detection, we selected the ArUco library proposed Muñoz and Garrido [

11]. The library contains a set of ArUco markers which are represented by binary square fiducial markers with its own unique identifier that can be used for camera estimation (see

Section 3.2).

When the four corners of the ArUco marker are detected from the camera’s point of view, the camera pose can be estimated. As the marker is planar, the transformation can be carried out by means of a homography function. The function returns the transformation as two vectors: one for the translation and the other one for the relative rotation. After the calculation of those vectors, the rotation matrix, R(Ψ, Θ, Φ), can be computed as shown in Equation (1), which indicates the rotation between the two reference frames. Rotations may also occur about an arbitrary axis—i.e., an axis other than the unit vectors representing the reference frame. In such cases, we need a way of determining the final orientation and the unique axis about which the point vector is rotated (often referred to as orientation kinematics). A rotation of a generic vector

about an arbitrary axis

through an angle

can be written as Equation (2), where

is the skew-symmetric matrix representation of the vector

and is defined as Equation (3). Equation (2) is called Rodrigues’ rotation formula and has proved to be especially useful for our purpose, with the main reason being that by knowing the numerical values of the elements contained within the rotation matrix, it is possible to compute

and

using Equations (4) and (5).

where

is the sum of the scalar values in the main diagonal of the rotation matrix,

R:

.

In order to detect the ArUco markers, at first, in order to find the candidate corners of the markers, a local adaptive thresholding and extraction of contours is carried out. The adaptive thresholding is conducted by applying a window sliding (3 × 3, 5 × 5, 11 × 11, etc.) and finding optimum greyscale window. Values that are below the calculated value will be black and those above are white. After obtaining the thresholded image, if the result is not similar to a square, then the candidate corner is not selected. After selecting the corners, the white and black elements in the inner binary matrix are extracted in order to validate them. This process is conducted using the homography matrix and Otsu’s method [

11]. The resultant binary bits are then compared against all the available ArUco markers, so the respective marker is detected.

Through the ArUco library, camera pose estimation and marker detection can be achieved. However, due to the possibility of camera occlusion, data losses, etc., the relative pose of the Crazyflie 2.0 cannot be conducted. In order to overcome such issues, we have proposed to implement a Kalman filter [

12]. The Kalman filter is an optimal estimator based on a recursive algorithm for estimating the track of an object by means of the given measurements to keep the accuracy of the state estimation aiming to predict future states. In our case, we have proposed to use the linear Kalman filter, which minimizes the mean-square error of the state. If we assume a current state

(including both the position and velocity), as shown in Equation (6), the filtering algorithm will then assume a correlation between the elements contained in

, captured by a covariance matrix,

.

Based on this, the state estimation of

will be computed by means of the previously estimated state,

, the covariance matrix, and the current measurement from time step

k (by assuming that the error between the measured and estimated state are Gaussian distributed). The state is then updated based on the given measurements and the previously estimated state.

Equation (7) indicates the state transition matrix,

. If we assume an acceleration,

, and the estimation shown in Equation (8), we can compute the state estimation as shown in Equation (9), where

is the control matrix and

is the control vector used as an input to the system. By defining the covariance

, it is possible to take into account the noise in every prediction step, as shown in Equation (10).

The given new measurement data are then stored in the vector,

z. As the data may include noise, denoted as

, the vector of the logged data can be defined as Equation (11), where

is a general matrix that represents the measurement matrix. By defining the state estimation as Equation (12), where

is known as the Kalman gain, the final equation to update state can be defined as Equations (13) and (14), where

is determined as Equation (15).

In cases where the desired marker is not identified after some time, the velocity for each state will be exponentially decreased such that for each time step,

, where

denotes a time step with an undetected marker, the updated estimation has been computed as Equation (16), where

is the diminishing factor.

2.3. Control Architecture

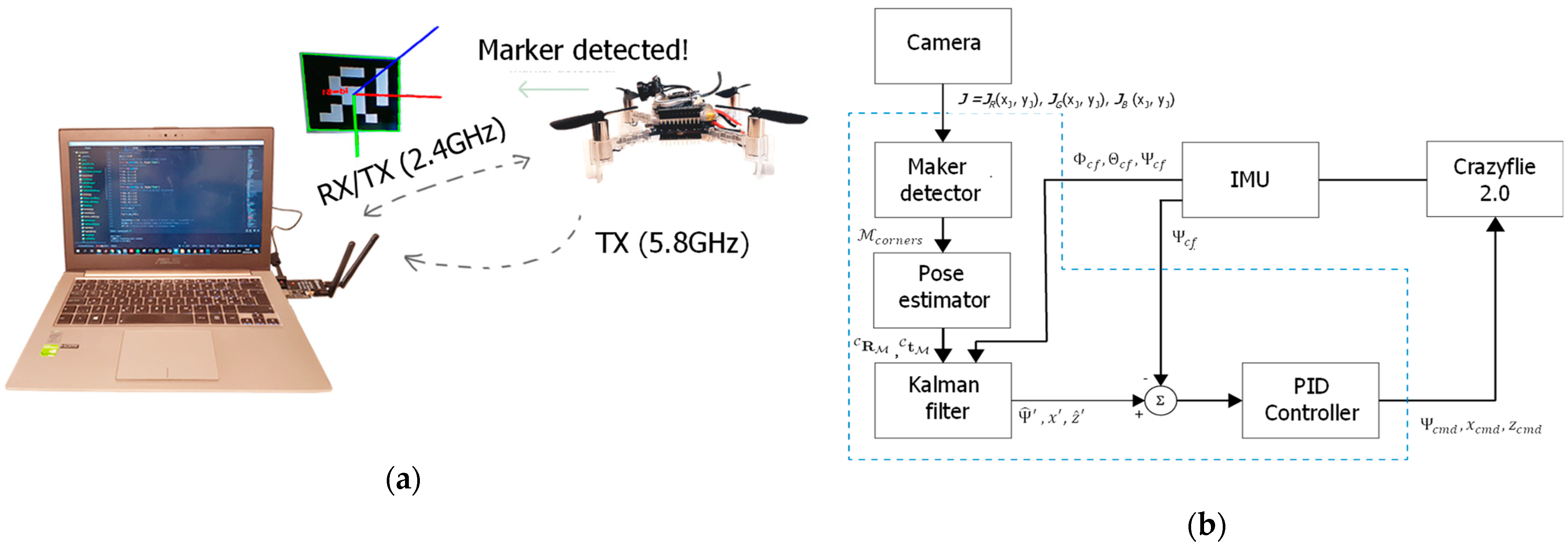

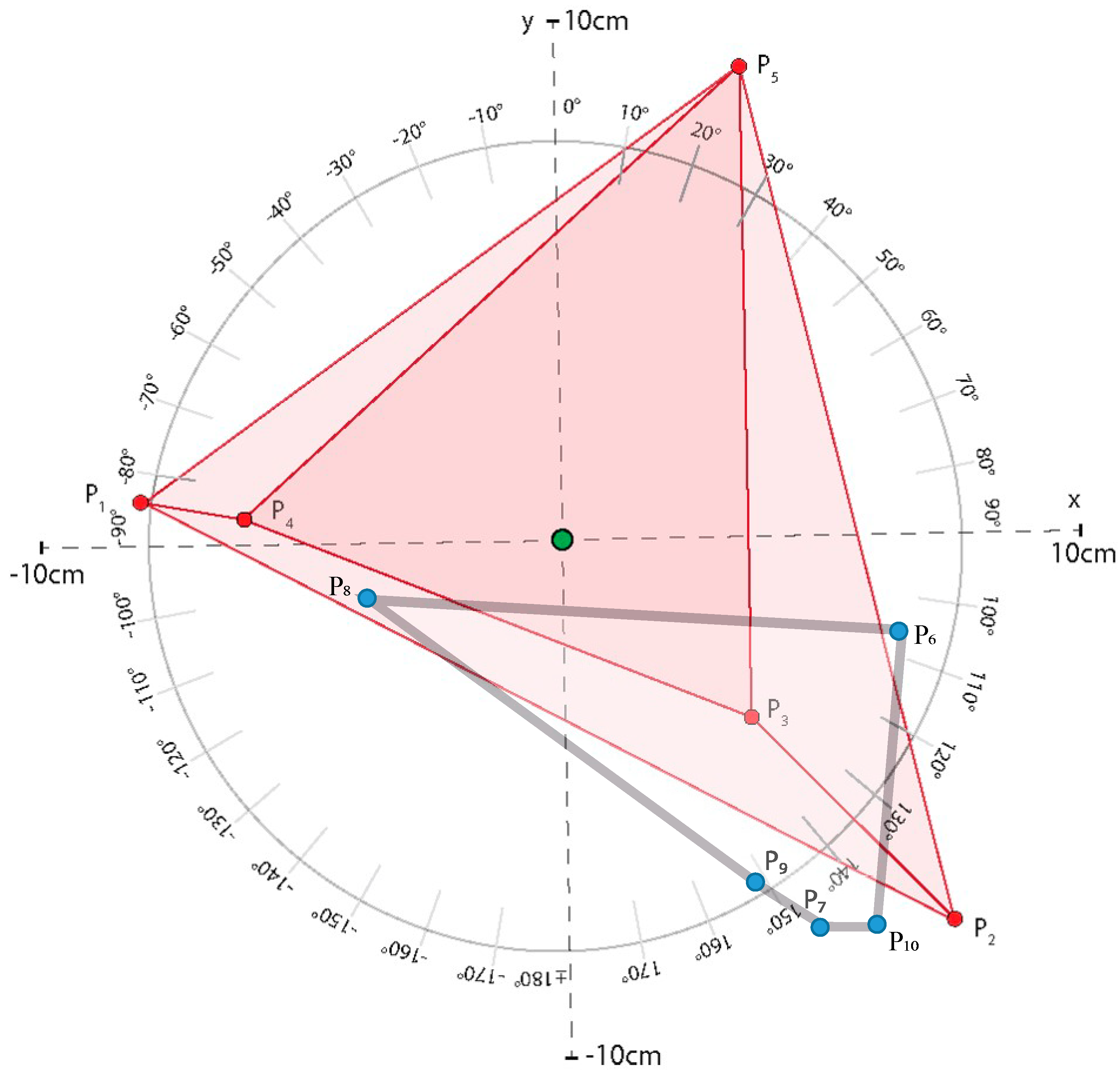

The physical representation of the proposed system is shown in

Figure 2a. As can be observed, a PC is used to process the grabbed images from the camera mounted on-board of the MAV though the video transmitter (Eachine TX801 5.8 GHz) and the command signals to the MAV through a bi-directional USB radio communication (Crazyradio PA 2.4 GHz).

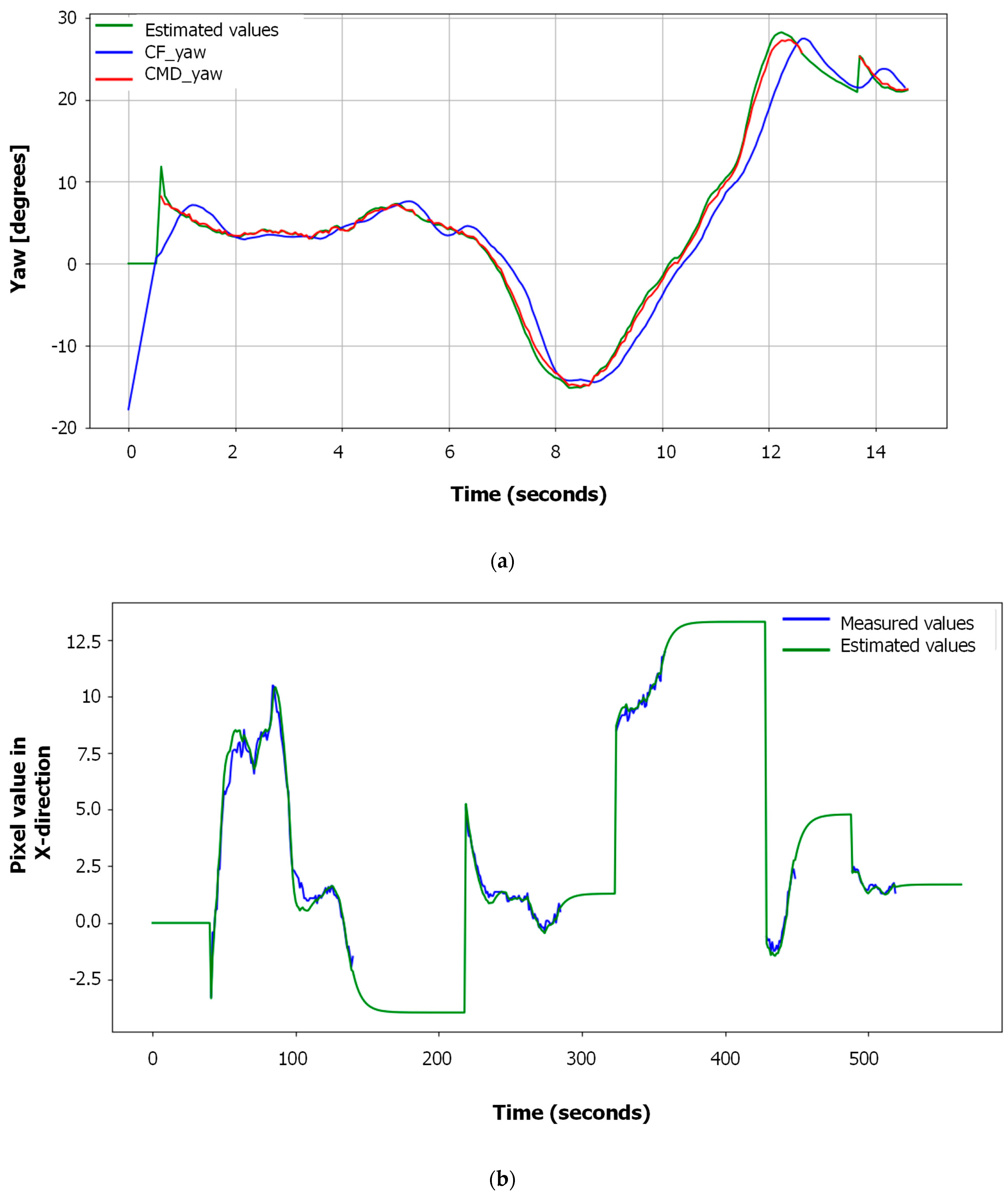

After computing in the PC the camera posture estimation and identifying the ArUco marker, the relative MAV posture is then given as new measurement data to the linear Kalman filter algorithm. As shown in

Figure 2, the estimated pose of the MAV is then used as a reference signal to the compute the error signal. In order to reduce the error, the PID controllers the compute the commanded control signal in order to align the MAV pose towards the target location. As a first approach, we only considered the yaw rotation (

-angle) and the

x and

z translations.

The on-board controller consists of two PID controllers in a cascade. A rule of thumb for cascaded PID controllers is that in order to have a stable and robust control scheme, the inner loop should operate at a higher frequency than the outer loop [

13]. If the outer loop runs at a lower frequency than the inner loop, synchronization problems may occur, which entails in that the steady state value of the outer loop is reached before the inner loop has computed its output response, causing instability [

13]. In this case, the on-board attitude and attitude rate controllers operate at 250 and 500 Hz, respectively, and the off-board controllers operate at about 10 Hz.

The set of off-board PID controllers has three different command variables: the relative yaw angle and translation in x- and z direction. In order to determine the best suitable experimental tuning method for the PID controllers, three different methods were tested: Ziegler–Nichols, Lambda, and Approximate M-constrained Integral Gain Optimisation (AMIGO).

The Ziegler–Nichols method was published in 1942 [

14] and was developed by performing a large number of simulations on pneumatic analogue machines [

15]. The parameters are determined from a step response analysis according to the

Table 1. The AMIGO method was introduced in 2004. It has similarities to the Ziegler–Nichols method, but is instead based on simulations performed with computers [

16]. The parameters are determined from a step response experiment according to

Table 2. The lambda method differs from the methods above by a parameter that the user can adjust themselves. The method is widely used in the paper industry and was developed in the 1960s. The original method only dealt with the PI controller, but it is possible to derive formulas for PID controllers as well. The lambda method does not provide parameters for integrating processes [

15]. For the PID controller in parallel, the parameters are taken from a step response according to

Table 3.

As is shown in

Table 1,

Table 2 and

Table 3, the times calculated are T

63%, T

100%, and

L.

L is the dead time of the system and this is calculated by determining the point of intersection of the previously calculated maximum derivative and the time axis. T

100% is obtained by calculating the intersection point of the maximum derivative with the line Ys, then obtained a time that is T

100% +

L. To get T

100%,

L is then subtracted. T

63% is obtained by examining when the first measurement data point exceeds the value of 0.63Ys. The time for that measurement data point is then time T

63% +

L and, the same as for T

100%, the dead time

L must be subtracted away. In the lambda method, the factor T

cl = T

63% will be used to obtain an aggressive regulation similar to the results of Ziegler–Nichols and AMIGO.

To test the step response of the Crazyflie 2.0 in indoor conditions, different additional weights (0, 4.7, 6.1, and 10.8 g) were considered. To calculate which parameters would be used for PID according to the Ziegler–Nichols, lambda, and AMIGO methods, the same program was used in Matlab. Four tests for each case were performed and they resulted in the mean values of the PID parameters according to

Table 4,

Table 5,

Table 6 and

Table 7.

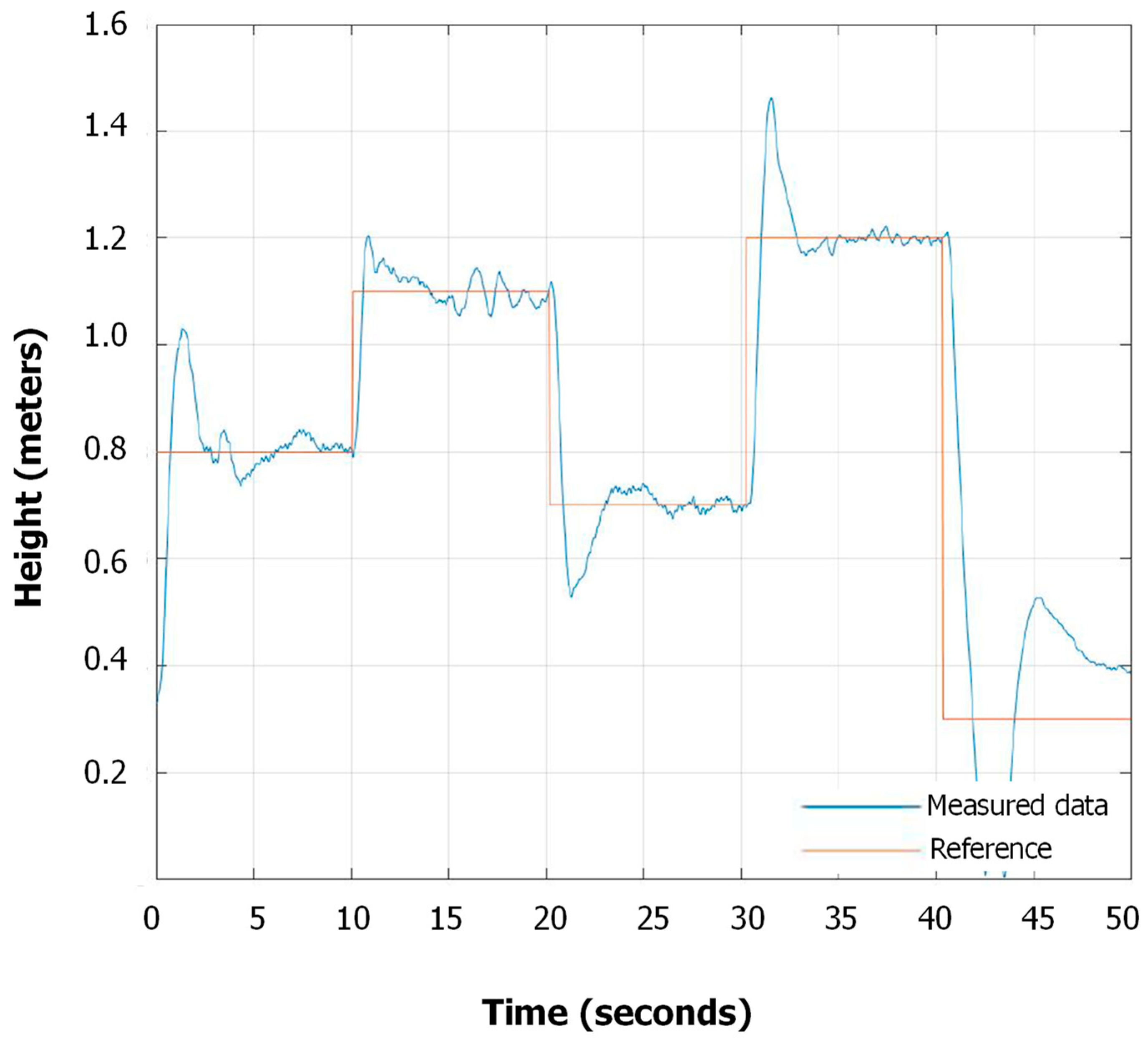

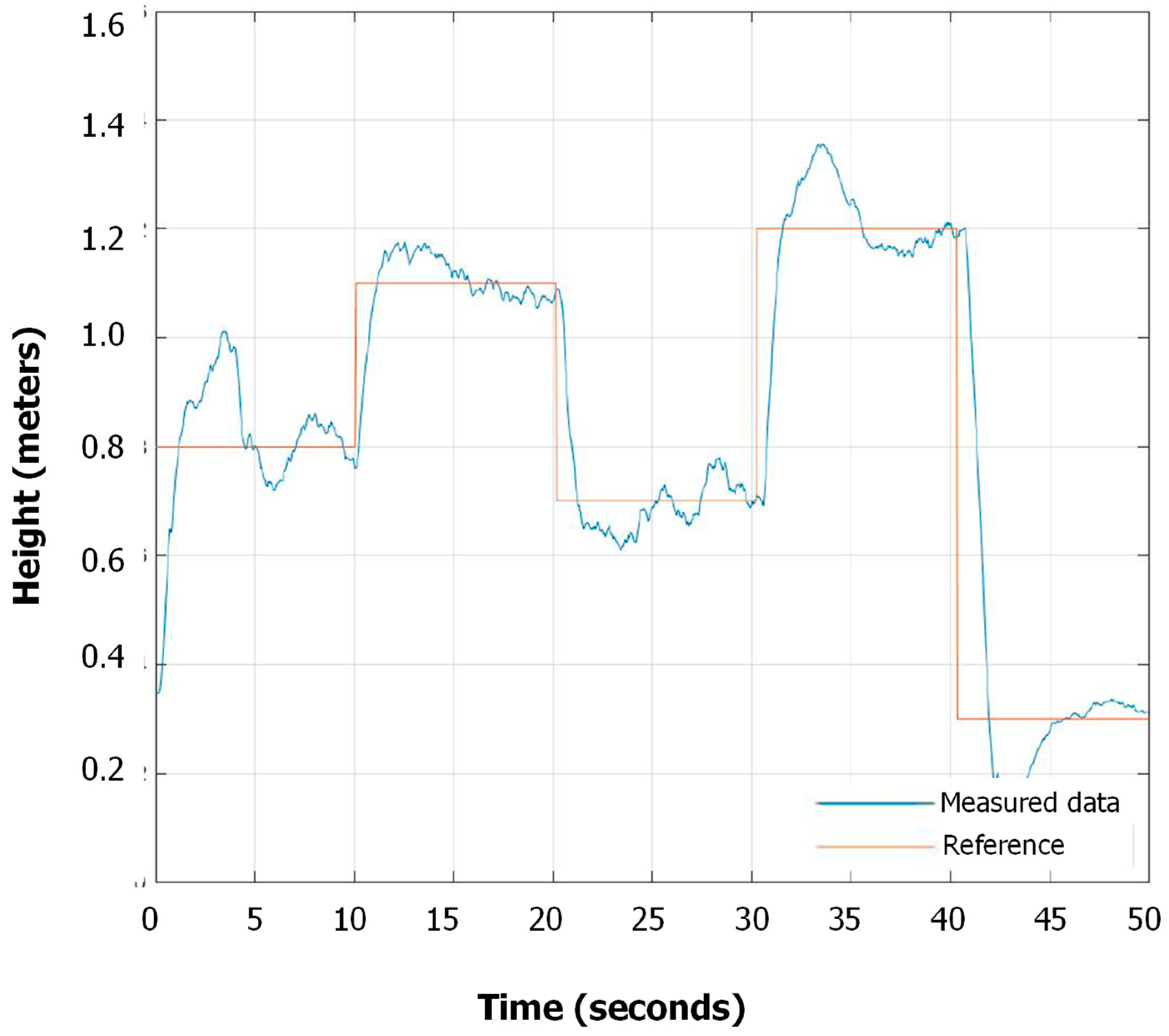

As is shown in the experimental results, with an additional load of 0, 4.7, and 6.1 g in

Table 8,

Table 9 and

Table 10, respectively, the AMIGO method in each case has a noticeably shorter settling time than the Ziegler–Nichols method (as is shown in

Table 8, the lambda method is much slower than the others and is therefore excluded when placing an additional load of 4.7 and 6.1 g). The AMIGO method is considered the better of them even though in some cases it has a slower rise time and higher overshoot than the Ziegler–Nichols method, but these two parameters do not differ much from the different methods. As an example, we can observe the experimental results in

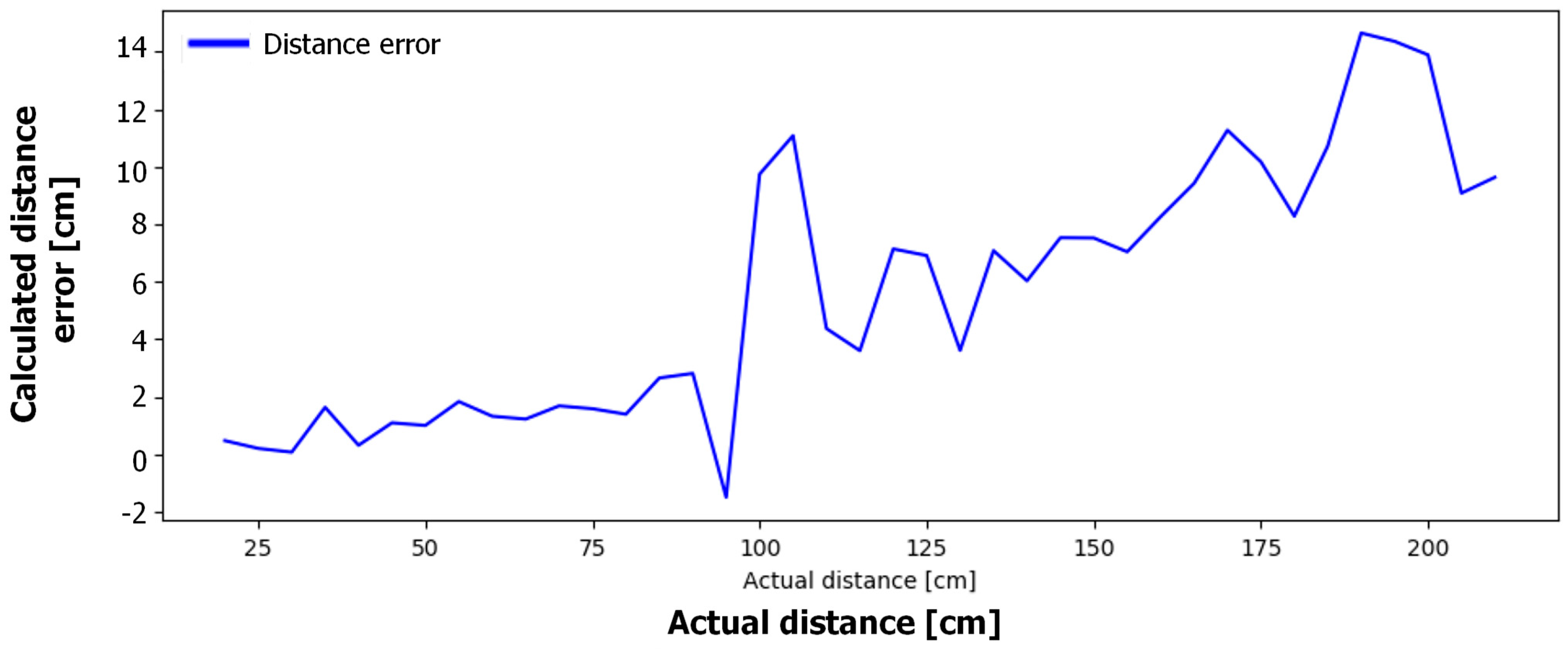

Figure 3 and

Figure 4 for both a case without any additional weight and a case with a load of 4.7 g, respectively.

Therefore, based on the preliminary experimental results presented above, the AMIGO method was chosen as the primary tuning method as it has been proven to be a reliable method for tuning the PID controllers of the Crazyflie 2.0.