MBSE Testbed for Rapid, Cost-Effective Prototyping and Evaluation of System Modeling Approaches

Abstract

Featured Application


1. Introduction

2. Materials and Methods

- Operating system(s): Linux (Ubuntu 16.04) and Windows

- Programming environment: Spyder, a cross-platform development environment for the Python 3.x programming language

- Systems Modeling Language (SysML) [6]

- Decision trees

- Hidden Markov Models (HMM) [7]

- Partially Observable Markov Decision Process (POMDP) model [8]

- Optimization using fitness functions

- N-step look-ahead decision-processing algorithm

- Traditional deterministic control algorithm (e.g., the proportional-integral-derivative (PID) control algorithm)

- Q-learning algorithm

- NumPy: a Python library for manipulating large, multidimensional arrays and matrices

- Pandas: a Python library for data manipulation and analysis, particularly of numerical tables and time series

- Scikit-learn: a machine-learning library for Python, built on top of NumPy, SciPy, and matplotlib

- OpenCV-Python: a library of Python bindings to OpenCV, designed to solve computer vision problems

- Hardware–Software Integration Infrastructure: Multiple simulation platforms are used for visualization, experimentation, and data collection from scenario simulations of both ground-based and airborne systems. These simulations (implemented in Python 3) are integrated with hardware platforms such as Donkey Car [4] and quadcopters.
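Hidden Markov Models [7] appear in the list above among the modeling formalisms. As a minimal sketch, assuming a toy two-state system-health model whose transition and emission probabilities are hypothetical (they are not taken from the testbed), the NumPy code below implements the standard scaled HMM forward (filtering) recursion, which updates a belief over hidden states each time a new observation arrives.

```python
import numpy as np

# Hypothetical two-state model: state 0 = "nominal", state 1 = "degraded".
# A[i, j] = P(state j at t+1 | state i at t)
A = np.array([[0.9, 0.1],
              [0.2, 0.8]])
# B[i, k] = P(observation k | state i); observation 0 = "ok", 1 = "alarm"
B = np.array([[0.8, 0.2],
              [0.3, 0.7]])
# Initial state distribution (hypothetical: no prior preference)
pi = np.array([0.5, 0.5])


def forward_filter(observations, A, B, pi):
    """Return filtered beliefs P(state_t | obs_1..t) for every t and the
    log-likelihood of the whole observation sequence (scaled forward algorithm)."""
    beliefs = []
    log_likelihood = 0.0
    alpha = pi
    for t, obs in enumerate(observations):
        # Predict: push the previous belief through the transition model (skipped at t = 0).
        predicted = pi if t == 0 else alpha @ A
        # Update: weight each state by the likelihood of the new observation.
        alpha = predicted * B[:, obs]
        norm = alpha.sum()
        log_likelihood += np.log(norm)  # sum of log scaling factors = log P(observations)
        alpha = alpha / norm            # normalize to avoid numerical underflow
        beliefs.append(alpha)
    return np.array(beliefs), log_likelihood


beliefs, log_lik = forward_filter([0, 1, 1], A, B, pi)
print(np.round(beliefs, 3))
```

With these illustrative numbers, the belief in the degraded state rises to about 0.79 after two consecutive alarms. The belief update in a POMDP [8] has the same predict-then-update structure, with the transition and observation probabilities additionally conditioned on the chosen action.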

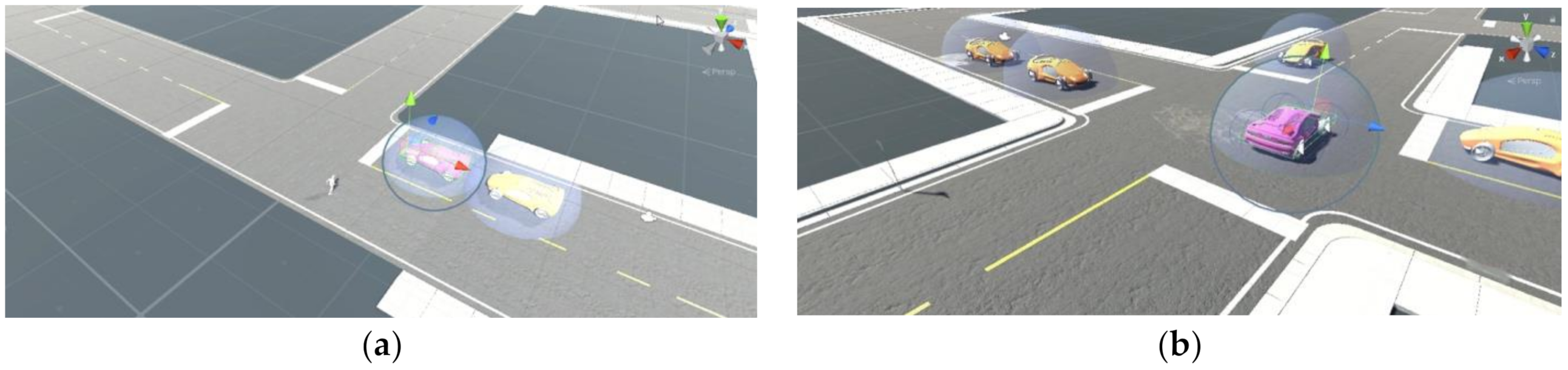

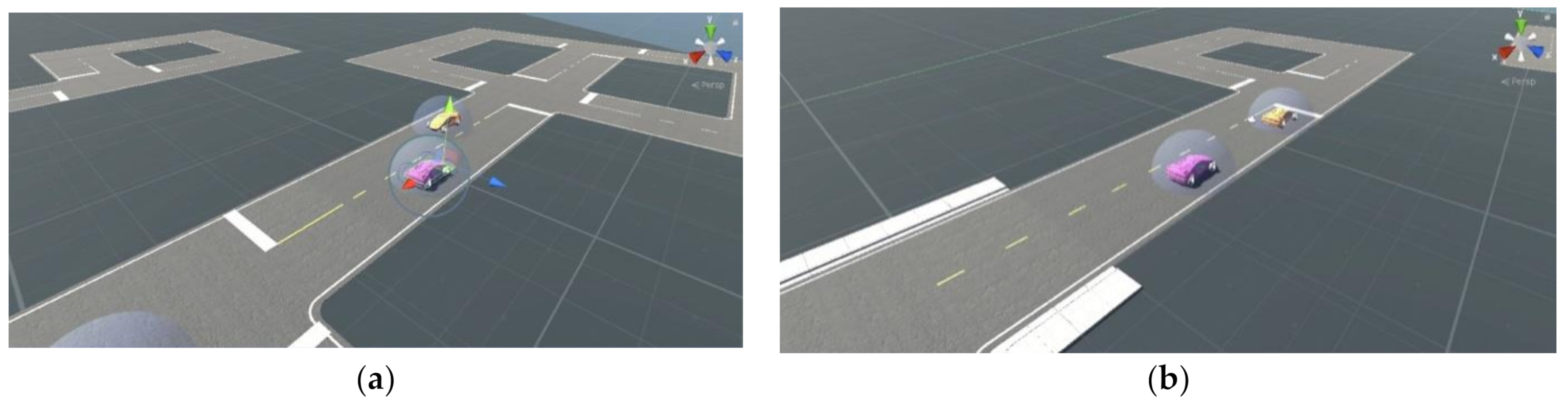

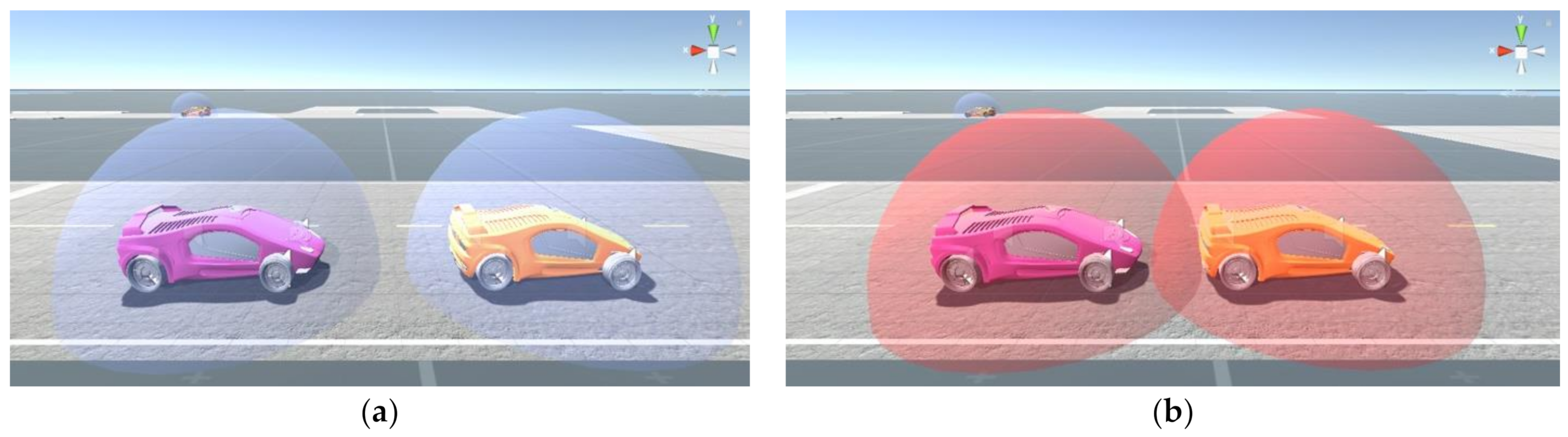

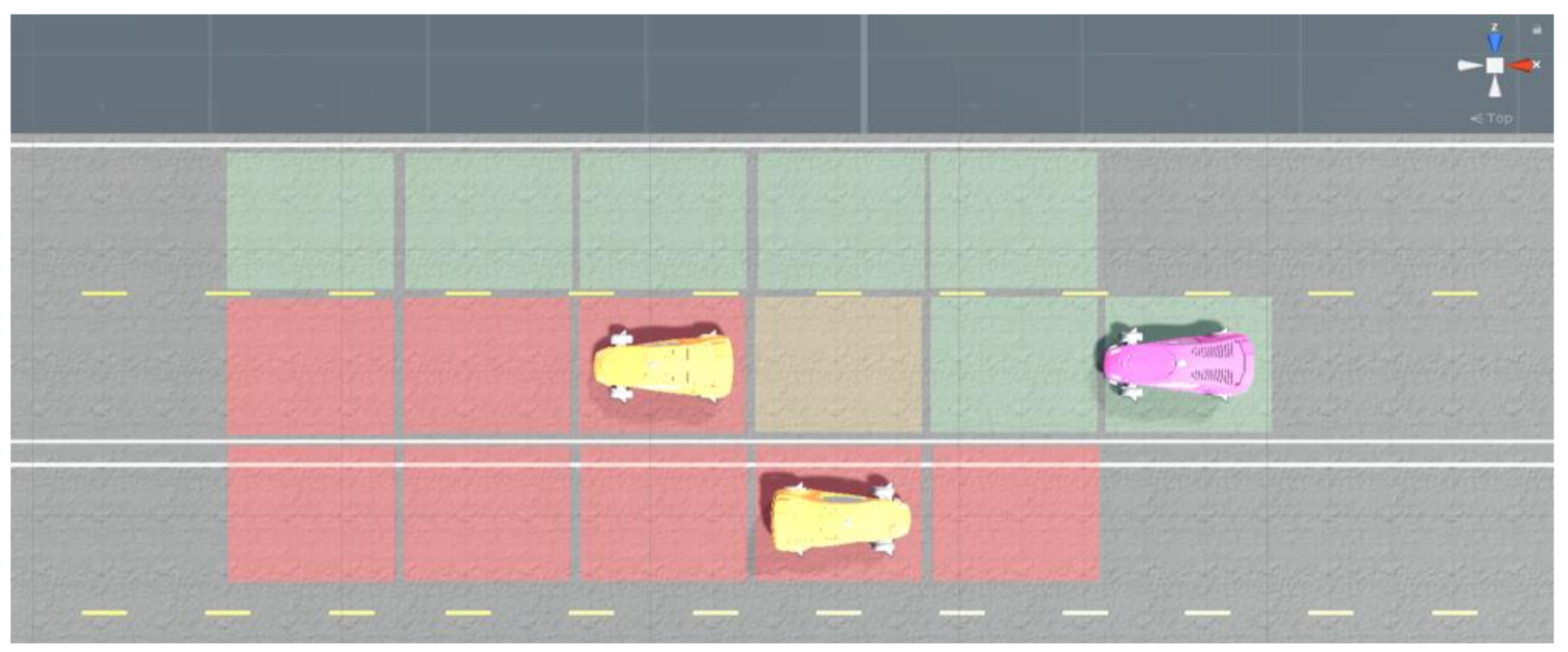

- CARLA Simulation Platform: CARLA offers an open-source high-fidelity simulator (including code and protocols) for autonomous driving research [5]. CARLA provides open digital assets, such as urban layouts, buildings, city maps, and vehicles. CARLA also supports flexible specification of sensor suites, environmental conditions, and dynamic actors. CARLA provides Python APIs to facilitate integration.

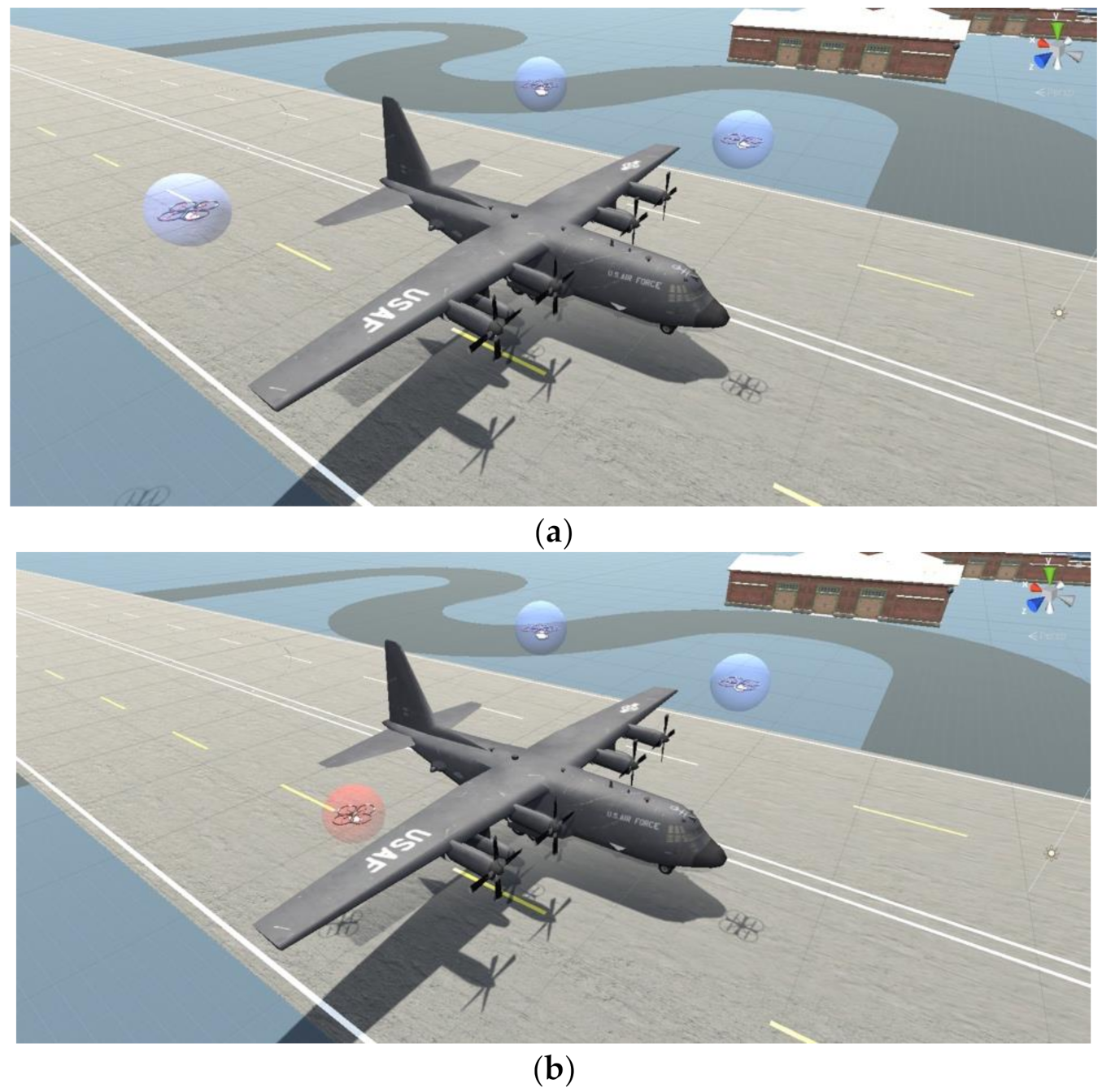

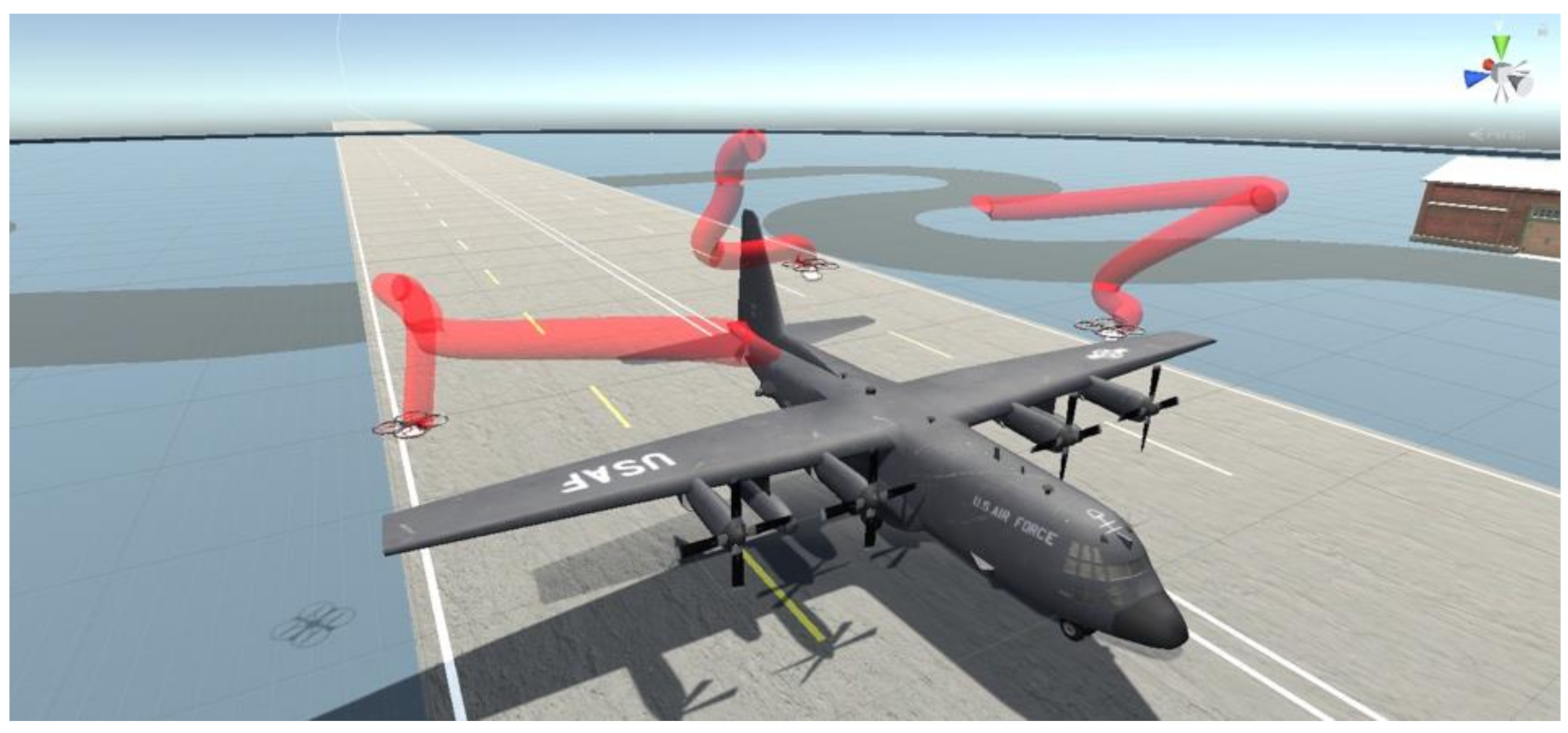

- DroneKit Platform: DroneKit, an open-source platform, is used to create apps, models, and algorithms that run on onboard computers installed on quadcopters. This platform provides various Python APIs that allow experimenting with simulated quadcopters and drones. The code can be accessed on GitHub [9].

- Donkey Car platform: an open-source platform for conducting autonomous vehicle research

- Raspberry Pi: the onboard computer on the Donkey Car

- Quadcopters (very small UAVs used in surveillance missions) and 1/16-scale robot cars

- Video Cameras: mounted on Donkey Car and quadcopters

- Socket communication: used to send commands from a computer to a Donkey Car or a quadcopter
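The list notes that socket communication carries commands from a computer to a Donkey Car or a quadcopter. The sketch below uses only Python's standard `socket` module and assumes a hypothetical newline-delimited JSON command format (fields such as `cmd`, `steering`, and `throttle`) with a hypothetical acknowledgment reply; the message format actually used on the testbed is not specified here, and this is not the Donkey Car or DroneKit API. A loopback server thread stands in for the onboard Raspberry Pi.

```python
import json
import socket
import threading


def send_command(host, port, command, timeout=2.0):
    """Send one JSON command terminated by a newline and return the decoded reply.
    The message format is a hypothetical example, not a vehicle vendor protocol."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall((json.dumps(command) + "\n").encode("utf-8"))
        with sock.makefile("r", encoding="utf-8") as reader:
            reply = reader.readline()
    return json.loads(reply)


def serve_once(server_sock):
    """Hypothetical vehicle-side stand-in: accept one connection, parse one command, acknowledge it."""
    conn, _ = server_sock.accept()
    with conn, conn.makefile("r", encoding="utf-8") as reader:
        command = json.loads(reader.readline())
        ack = {"status": "ok", "received": command["cmd"]}
        conn.sendall((json.dumps(ack) + "\n").encode("utf-8"))


if __name__ == "__main__":
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(1)
    worker = threading.Thread(target=serve_once, args=(server,))
    worker.start()
    reply = send_command("127.0.0.1", server.getsockname()[1],
                         {"cmd": "drive", "steering": 0.1, "throttle": 0.3})
    worker.join()
    server.close()
    print(reply)  # {'status': 'ok', 'received': 'drive'}
```

Newline framing is one simple way to delimit messages over a TCP stream, which otherwise has no message boundaries; a real deployment would also need to handle reconnection and malformed input.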

3. Results

3.1. Research Objectives

- Develop a structured framework for cost-effective and rapid prototyping and experimentation with different models, algorithms, and operational scenarios.

- Develop an integrated hardware–software environment to support on-demand demonstrations and facilitate technology transition to customer environments.

1. Defining the key modeling formalisms that enable flexible modeling based on operational environment characteristics and knowledge of the system state space.

2. Defining a flexible and customizable user interface that enables scenario building by nonprogrammers, visualization of simulation execution from multiple perspectives, and tailored report generation.

3. Defining operational scenarios encompassing both nominal and extreme cases (i.e., edge cases) that challenge the capabilities of the system of interest (SoI).

4. Identifying low-cost components and connectors for realizing the capabilities of the SoI.

5. Defining an ontology-enabled integration capability to assure correctness of the integrated system.

6. Testing the integrated capability using an illustrative scenario of interest to the systems engineering community.

3.2. MBSE Testbed Concept

- represent models at multiple scales and from different perspectives.

- integrate with digital twins of physical systems, which requires supporting both symbolic and high-fidelity, time-accurate simulations (the latter can be augmented by FPGA-based development boards to create complex, time-critical simulations) and maintaining synchronization between real-world hardware and virtual simulations.

- accommodate multiple views, multiple models, analysis tools, and learning algorithms.

- manage dynamically configurable systems through software agents employed in the simulation; in the future, these agents could invoke processes allocated to field-programmable gate arrays (FPGAs).

- collect and generate evidence for developing trust in systems.

- exploit feedback from the system and environment during adaptive system operation.

- address temporal constraints and related timing considerations.

- address change cascades (e.g., arising from failures) not addressed by existing tools.

- validate models, which implies flexibility in simulation interfaces as well as propagation of changes in modeled components as data are collected in physical tests.
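Addressing change cascades, and propagating changes in modeled components as physical test data arrive, requires at minimum finding every component downstream of a changed or failed one. A minimal sketch, assuming a hypothetical quadcopter component dependency graph (the component names are illustrative only), uses breadth-first search to list the affected components in the order the change reaches them, together with the number of hops.

```python
from collections import deque

# Hypothetical dependency graph: an edge u -> v means "a change or failure in u affects v".
AFFECTS = {
    "battery": ["motor_controller", "flight_computer"],
    "motor_controller": ["propulsion"],
    "flight_computer": ["navigation", "camera_gimbal"],
    "gps": ["navigation"],
    "navigation": ["mission_planner"],
    "propulsion": ["mission_planner"],
    "camera_gimbal": [],
    "mission_planner": [],
}


def change_cascade(graph, changed):
    """Return (component, hop_count) pairs reachable from `changed`, in breadth-first order."""
    affected = []
    seen = {changed}
    queue = deque([(changed, 0)])
    while queue:
        node, hops = queue.popleft()
        for succ in graph.get(node, []):
            if succ not in seen:  # record each component once, even if reached by several paths
                seen.add(succ)
                affected.append((succ, hops + 1))
                queue.append((succ, hops + 1))
    return affected


print(change_cascade(AFFECTS, "gps"))  # [('navigation', 1), ('mission_planner', 2)]
```

The sketch captures reachability only; annotating edges with delays or failure probabilities would be one way to extend it toward the temporal and failure-driven cascades described above.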

- Inheritance evaluation, in which legacy and third-party components are subjected to the usage and environmental conditions of the new system.

- Probabilistic learning models, which begin with incomplete representations of the system and progressively fill in details using incoming data from collection assets, thereby closing gaps in knowledge of the system and environment states.

- Networked control, which requires reliable execution and communication to satisfy hard time deadlines [5,17] across a network. Because networked control is susceptible to cyber vulnerabilities at multiple points, the testbed infrastructure should incorporate cybersecurity and cyber-resilience.

- Enforceable properties, which define core attributes of a system that must remain immutable in dynamic and potentially unpredictable environments. The testbed must support verifying that these properties continue to hold regardless of external conditions and changes.

- Support for safety-critical systems in the form of, for example, executable, real-time system models that detect safety problems and then shut down the simulation, after which the testbed can be queried to determine what happened.
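The last two items call for properties that must always hold and for executable models that stop the simulation when a safety problem is detected while keeping a record that can be queried afterward. A minimal sketch, assuming a hypothetical toy altitude simulation and two illustrative invariants whose thresholds are made up, checks every property at each step, halts on the first violation, and retains the step history for post hoc queries.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

State = Dict[str, float]


@dataclass
class SafetyMonitor:
    """Checks named invariants at every simulation step and records the run for later queries."""
    invariants: Dict[str, Callable[[State], bool]]  # name -> predicate that must stay True
    history: List[Tuple[int, State]] = field(default_factory=list)
    violation: Optional[Tuple[int, str]] = None  # (step, invariant name) once halted

    def check(self, step: int, state: State) -> bool:
        self.history.append((step, dict(state)))  # snapshot so later steps do not overwrite it
        for name, holds in self.invariants.items():
            if not holds(state):
                self.violation = (step, name)
                return False
        return True

    def query(self, last_n: int = 3) -> List[Tuple[int, State]]:
        """Return the last `last_n` recorded states, e.g., to see what led up to a shutdown."""
        return self.history[-last_n:]


def run_simulation(monitor: SafetyMonitor, steps: int = 50) -> Optional[int]:
    """Hypothetical toy dynamics: climb 15 m and drain 8% battery per step.
    Returns the step at which the monitor halted the run, or None if it completed."""
    state: State = {"altitude_m": 0.0, "battery_pct": 100.0}
    for step in range(steps):
        state["altitude_m"] += 15.0
        state["battery_pct"] -= 8.0
        if not monitor.check(step, state):
            return step  # shut down the simulation at the first violation
    return None


monitor = SafetyMonitor(invariants={
    "altitude_ceiling": lambda s: s["altitude_m"] <= 120.0,  # illustrative limit
    "battery_reserve": lambda s: s["battery_pct"] >= 20.0,   # illustrative limit
})
halted_at = run_simulation(monitor)
print(halted_at, monitor.violation)  # 8 (8, 'altitude_ceiling')
print(monitor.query(2))
```

Keeping the invariants as named predicates, separate from the simulation loop, lets the same checks be reused across scenarios and reported by name when a shutdown occurs.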

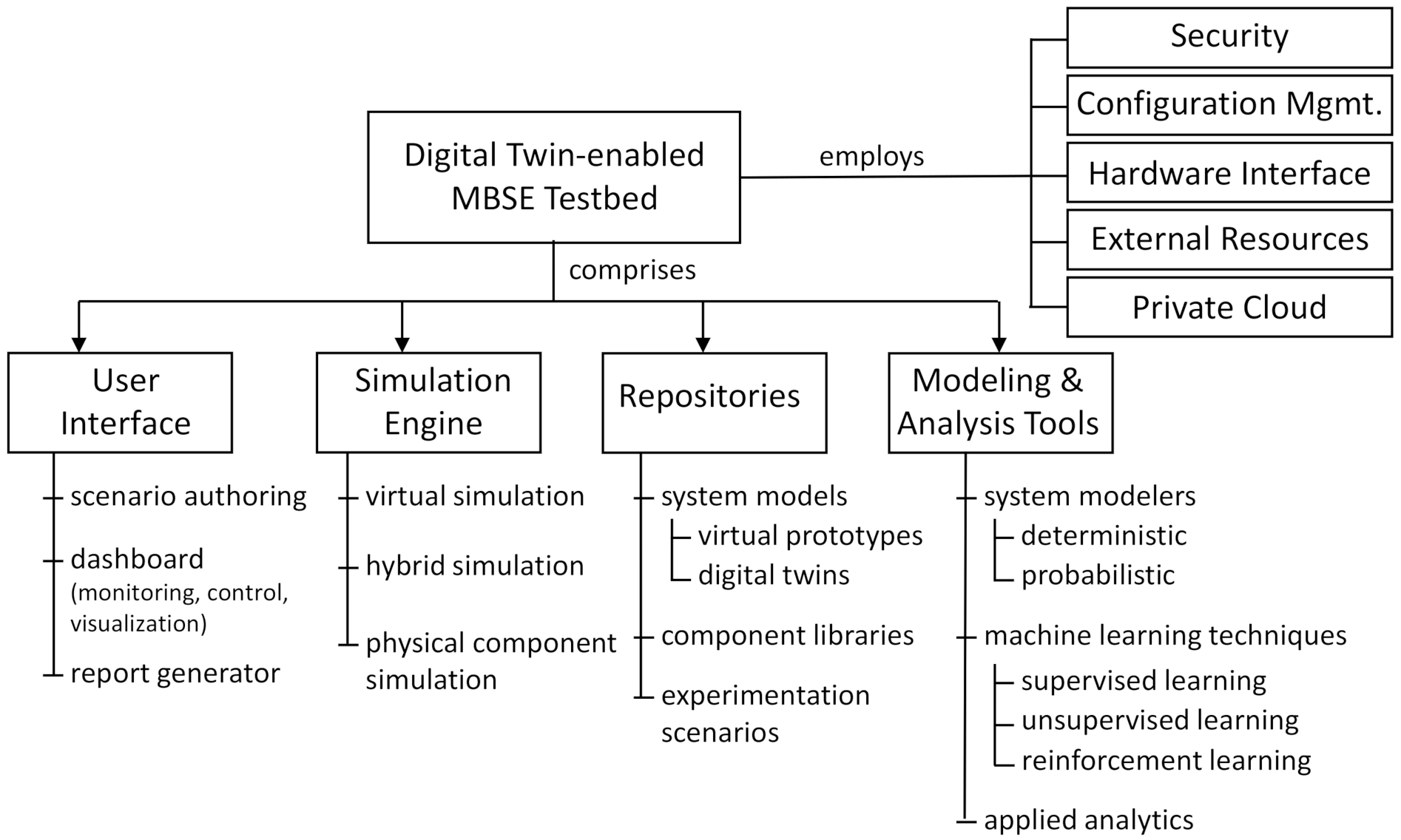

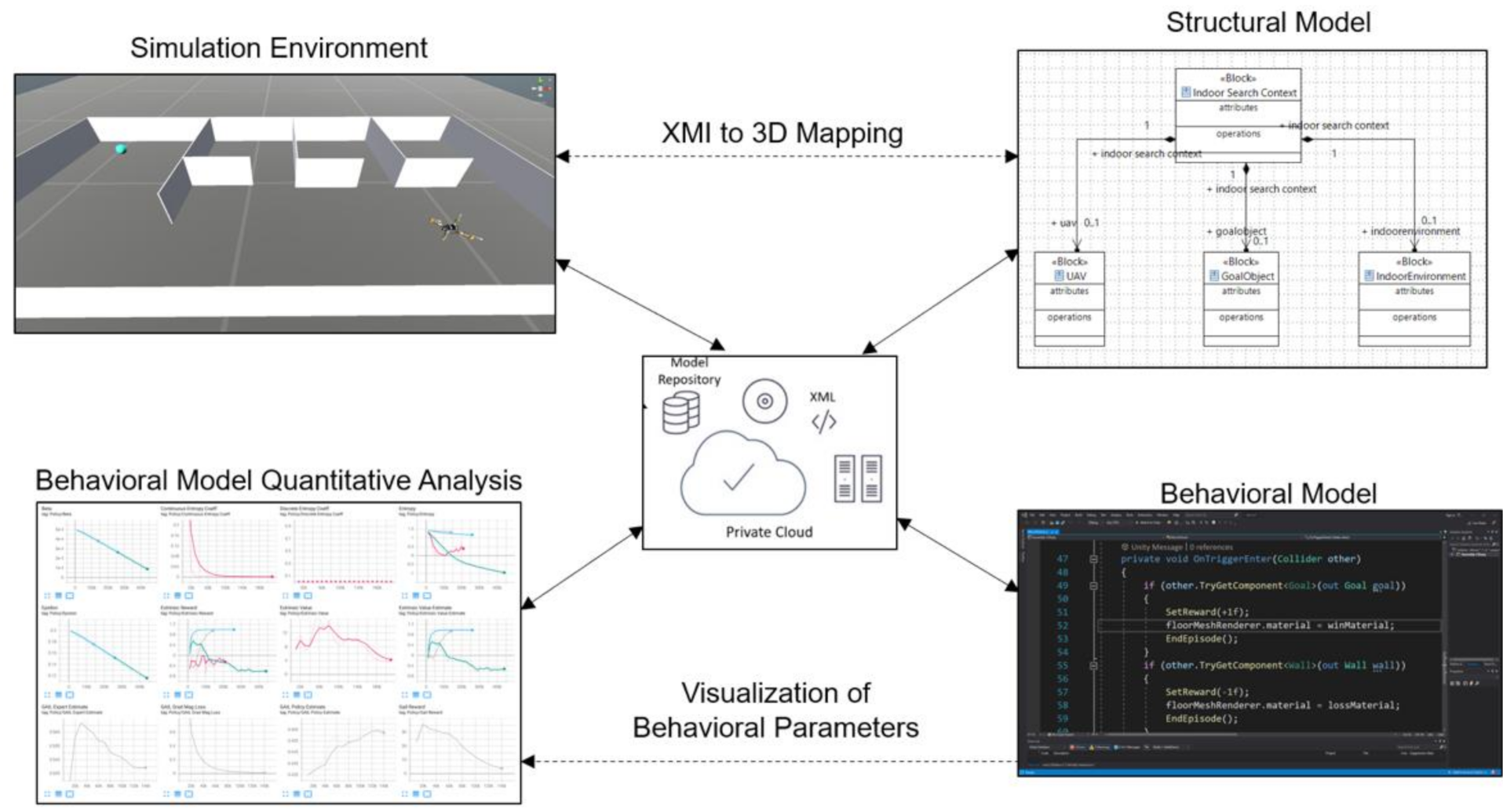

3.3. Logical Architecture of MBSE Testbed

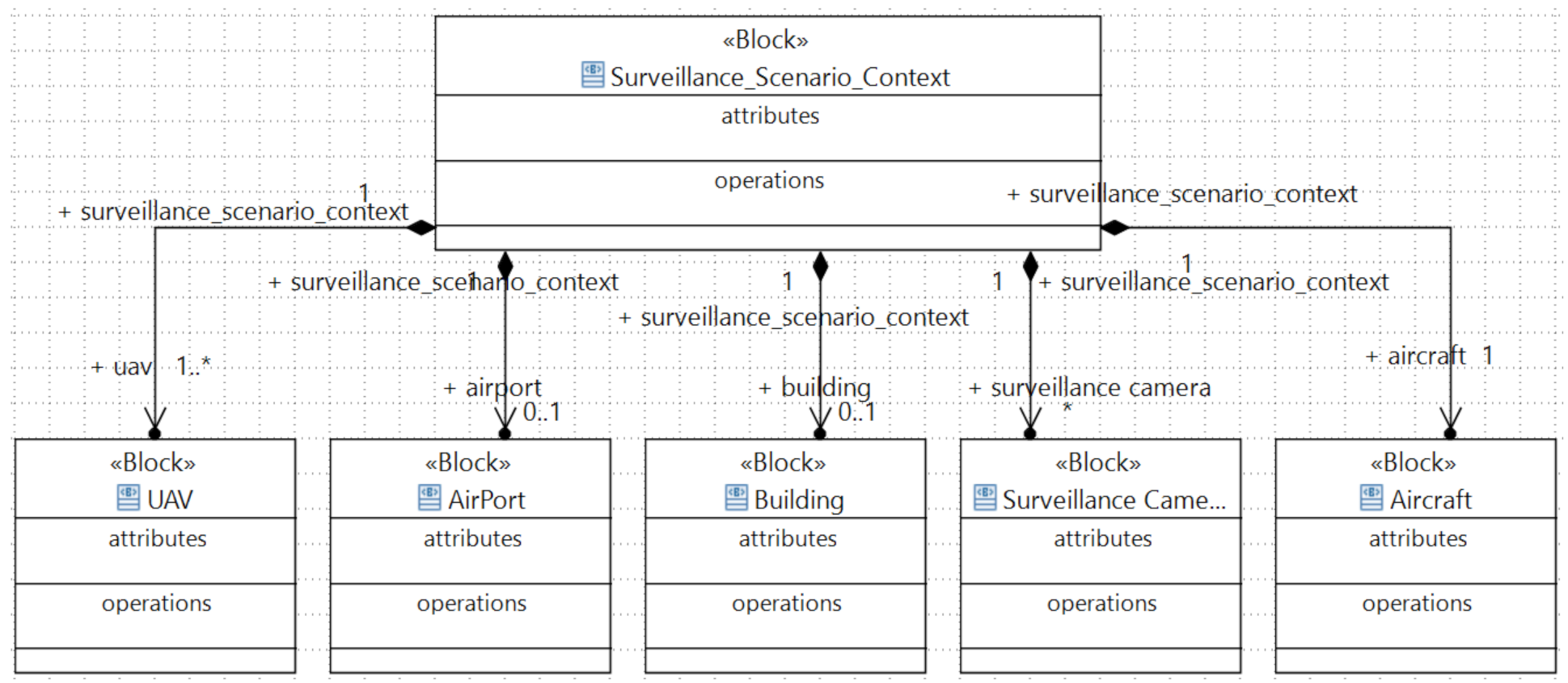

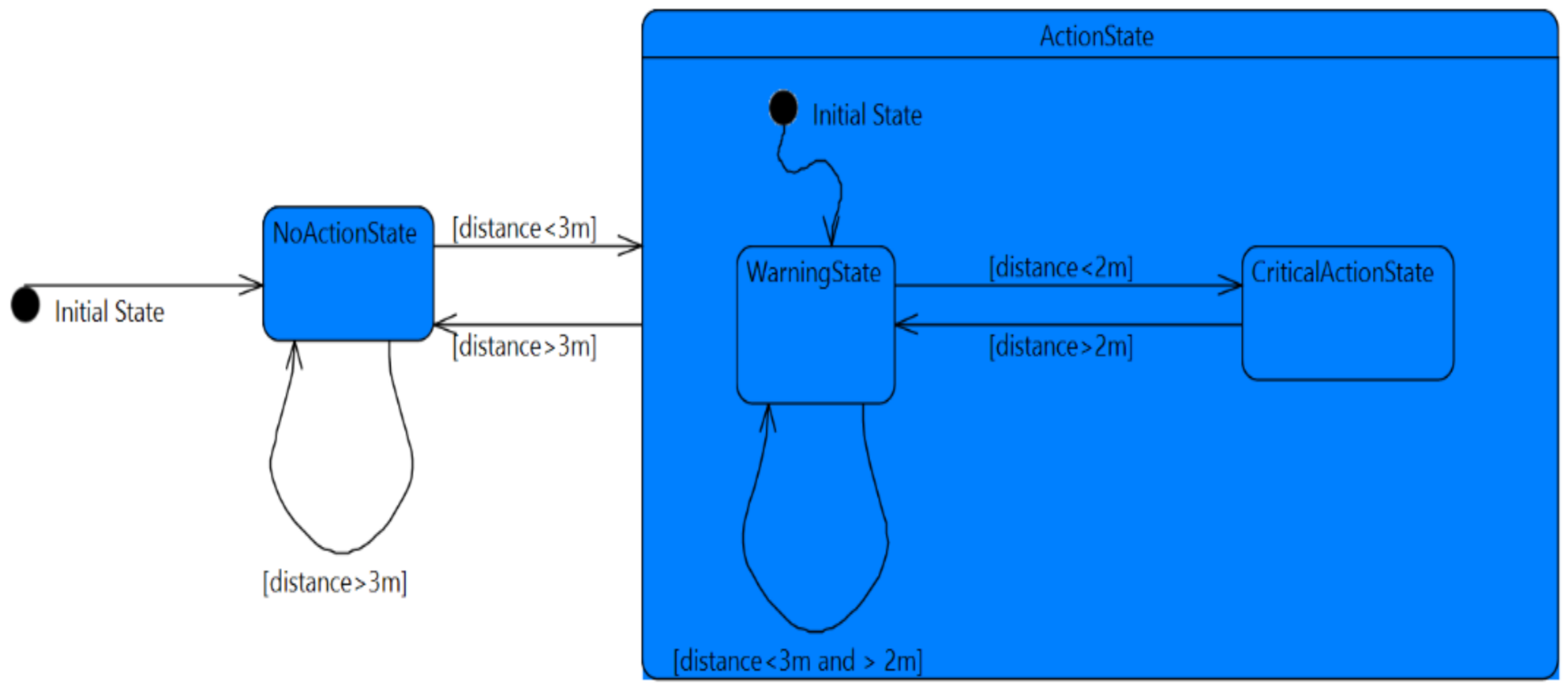

3.3.1. System Modeling and Verification

3.3.2. Rapid Scenario Authoring

3.3.3. Model and Scenario Refinement

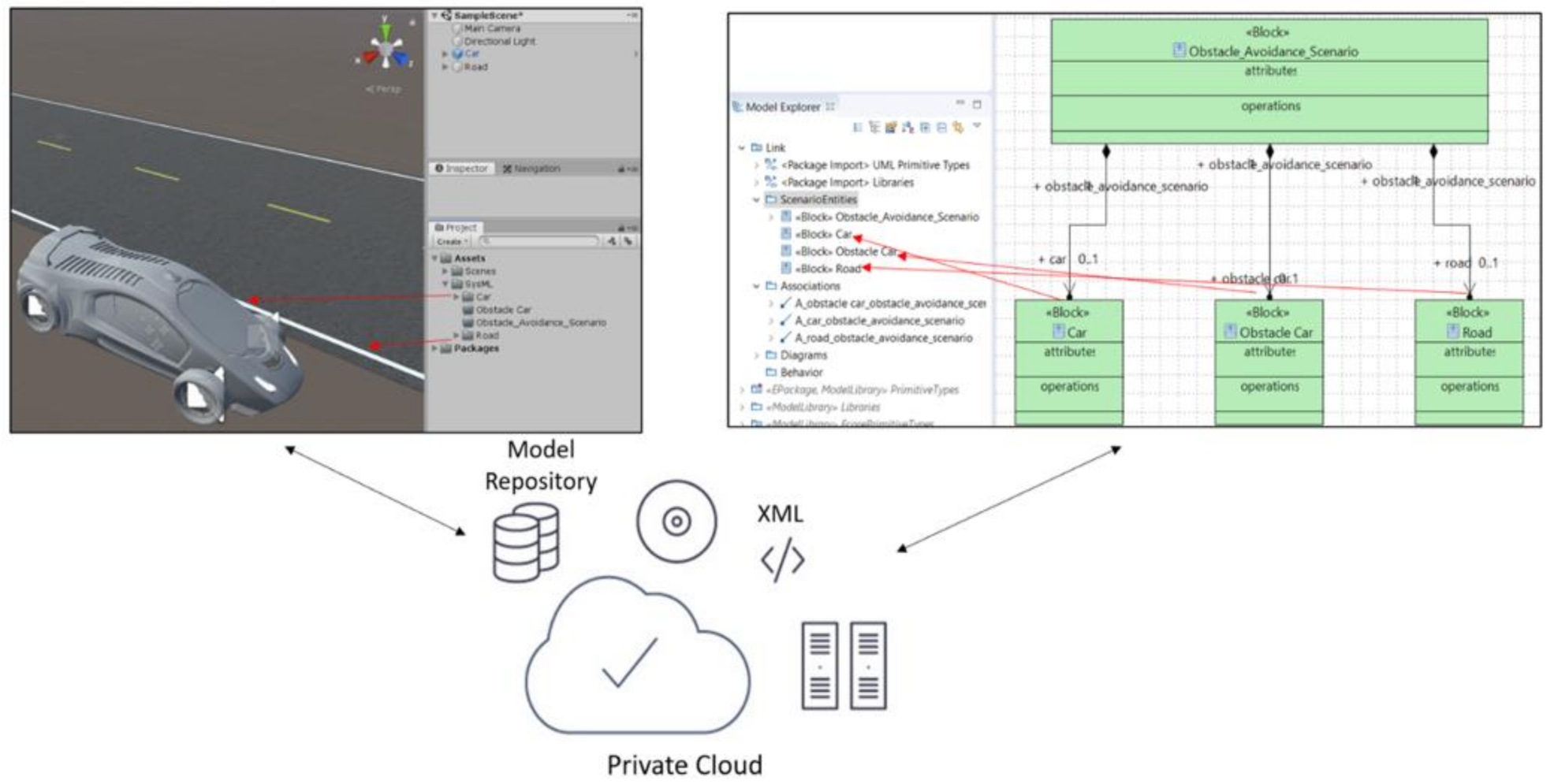

3.3.4. MBSE Repository

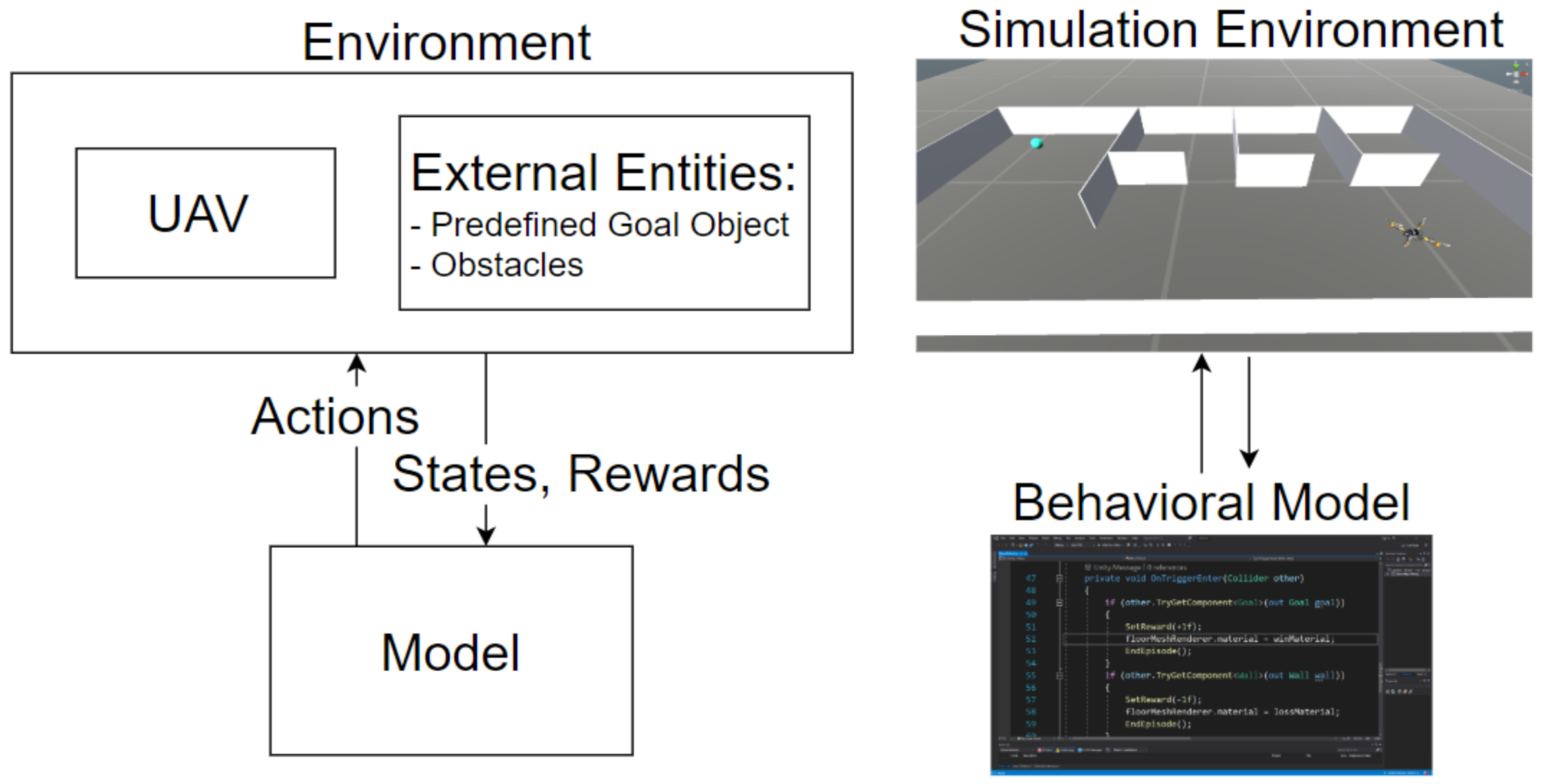

3.3.5. Experimentation Support

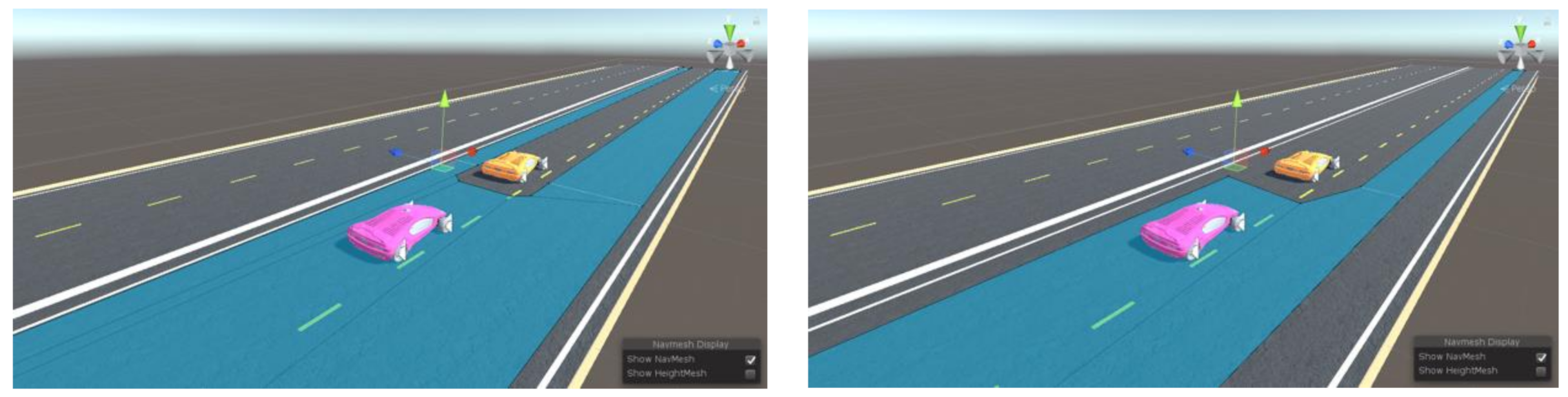

3.3.6. Multiperspective Visualization

3.4. Implementation Architecture

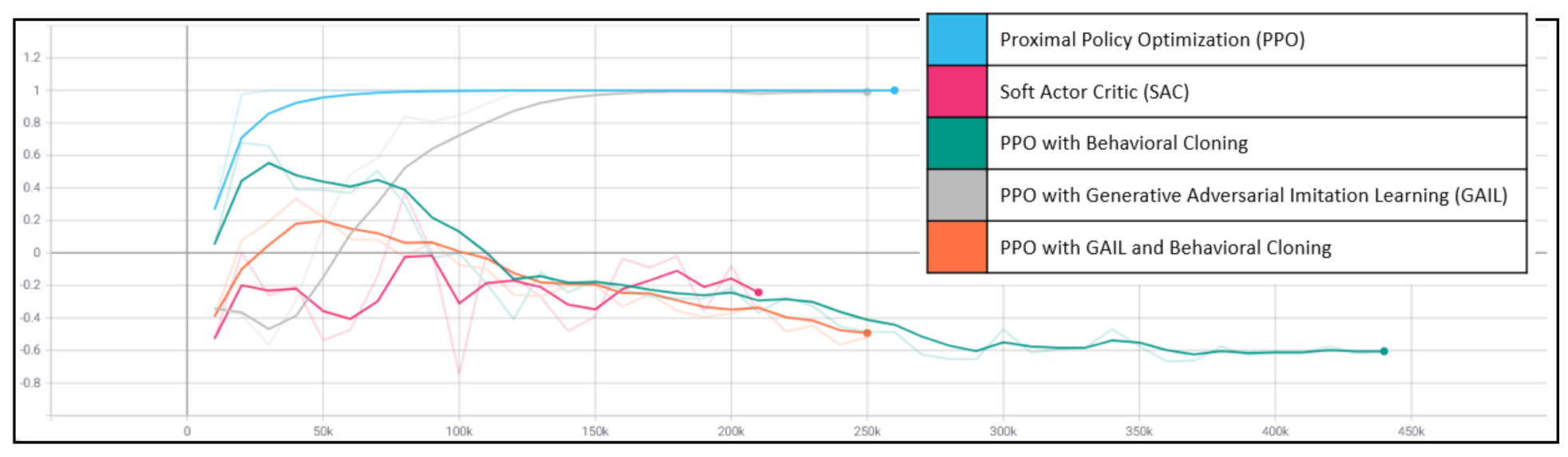

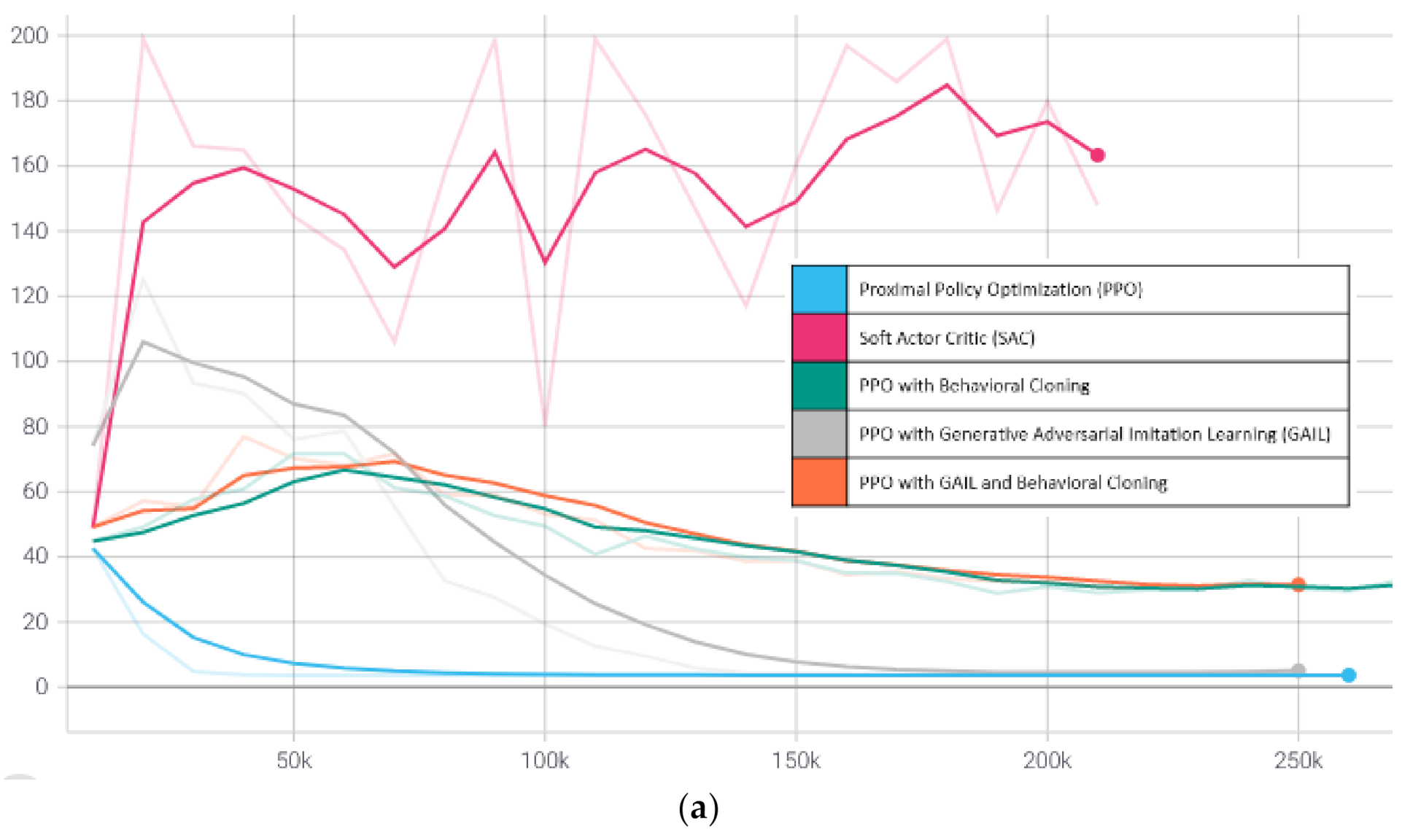

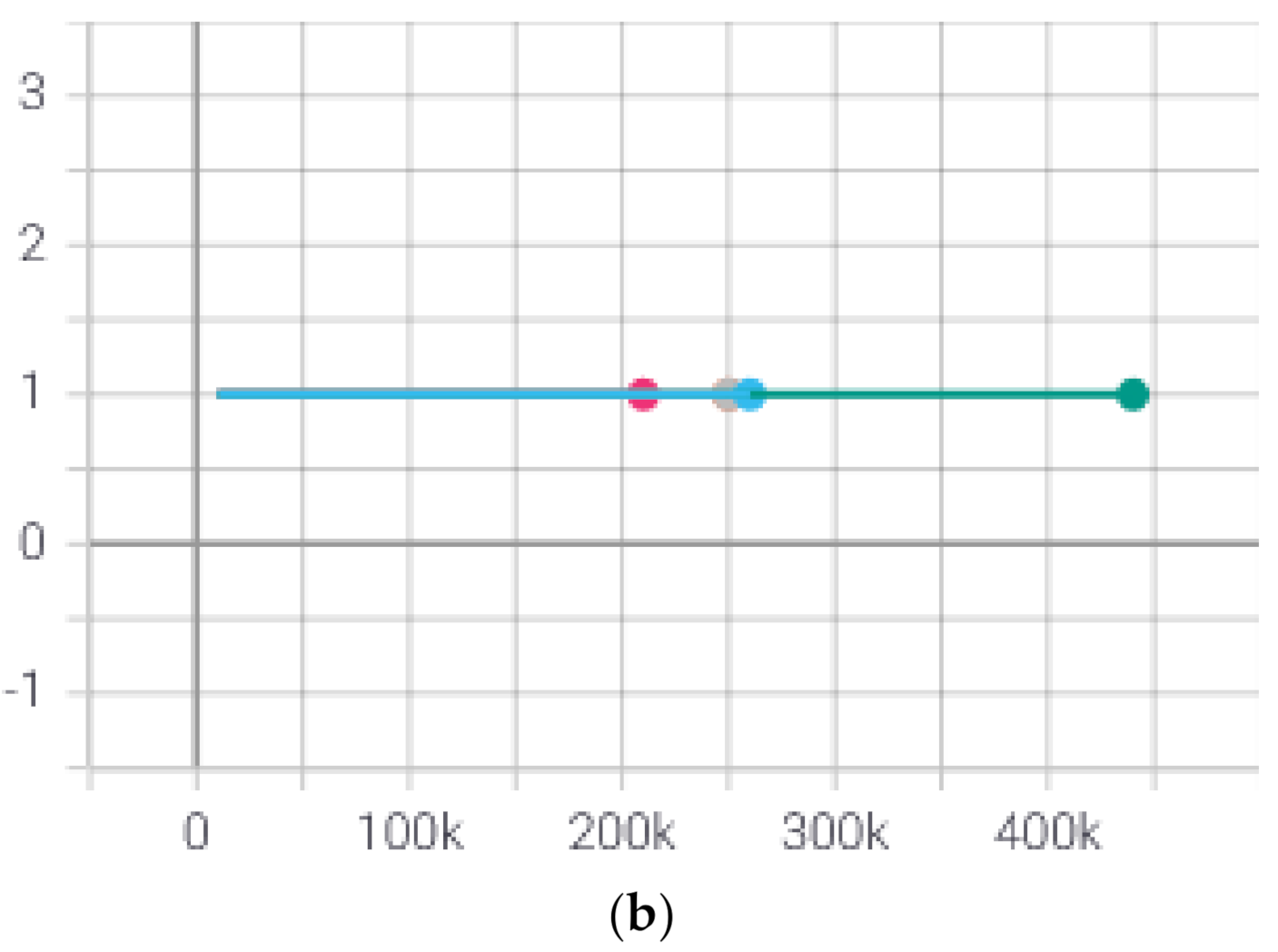

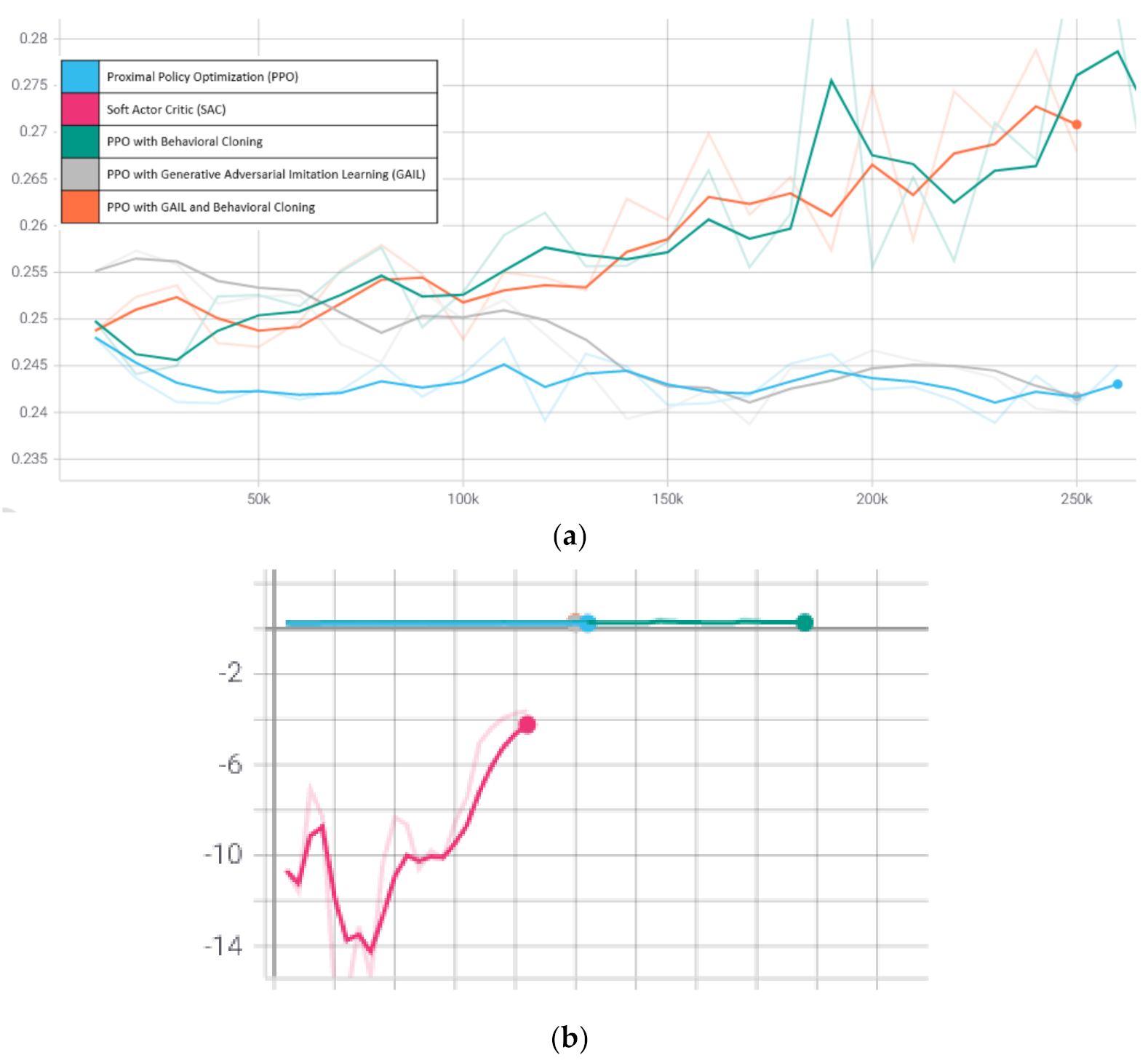

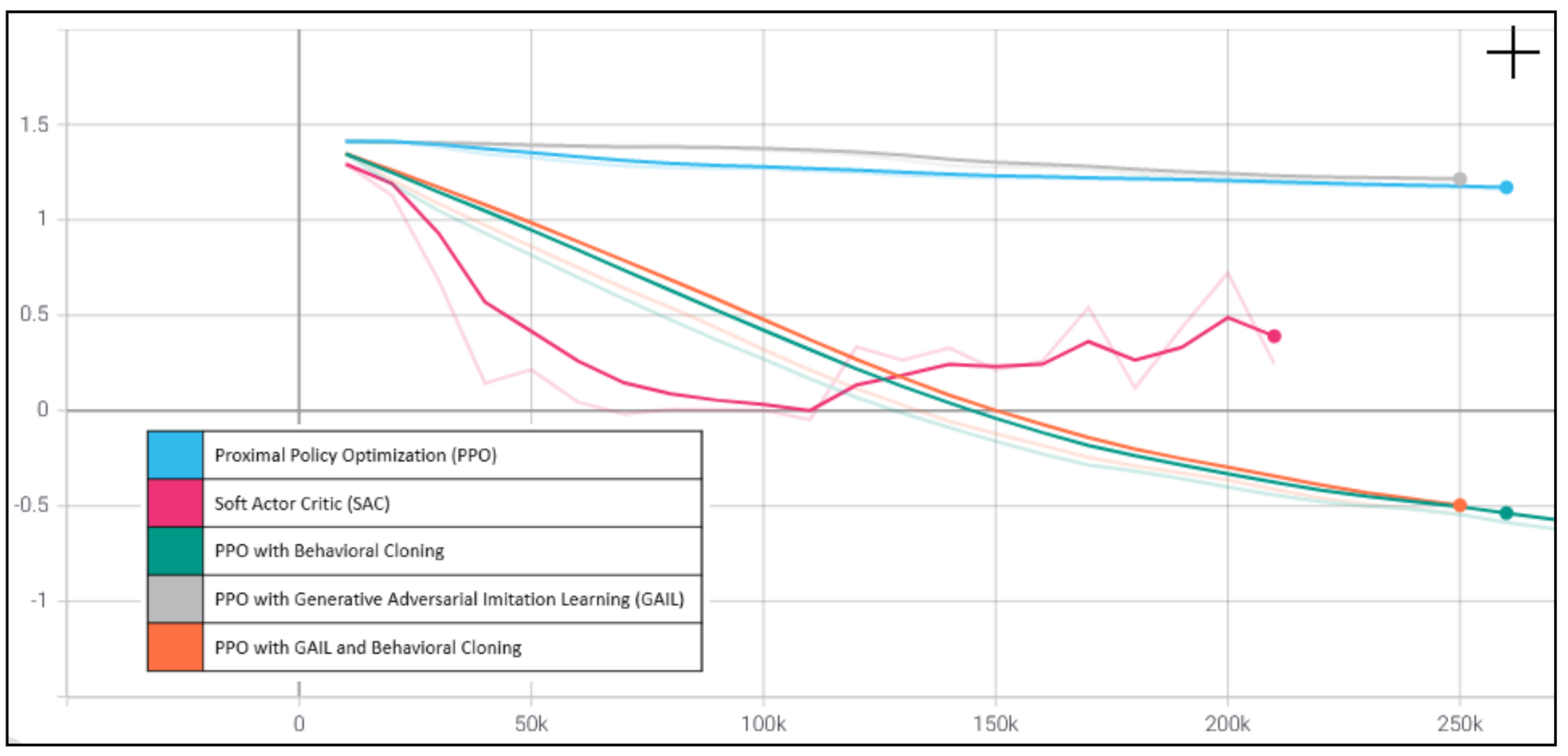

4. Quantitative Analysis

5. Discussion

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Madni, A.M.; Sievers, M. Model-Based Systems Engineering: Motivation, Current Status, and Research Opportunities. Syst. Eng. 2018, 21, 172–190.

- Madni, A.M.; Purohit, S. Economic Analysis of Model Based Systems Engineering. Systems 2019, 7, 12.

- Purohit, S.; Madni, A.M. Towards Making the Business Case for MBSE. In Proceedings of the 2020 Conference on Systems Engineering Research, Redondo Beach, CA, USA, 8–10 October 2020.

- Schluse, M.; Atorf, L.; Rossmann, J. Experimental Digital Twins for Model-Based Systems Engineering and Simulation-Based Development. In Proceedings of the 2017 Annual IEEE International Systems Conference, Montreal, QC, Canada, 24–27 April 2017; pp. 1–8.

- Madni, A.M.; Madni, C.C.; Lucero, D.S. Leveraging Digital Twin Technology in Model-Based Systems Engineering. Systems 2019, 7, 7.

- Friedenthal, S.; Moore, A.; Steiner, R. A Practical Guide to SysML: The Systems Modeling Language, 3rd ed.; Morgan Kaufmann/The OMG Press: Burlington, MA, USA; Elsevier Science & Technology: Amsterdam, The Netherlands, 2014; ISBN 978-0-12-800202-5.

- Rabiner, L.R. A tutorial on Hidden Markov Models and selected applications in speech recognition. Proc. IEEE 1989, 77, 257–286.

- Hansen, E. Solving POMDPs by searching in policy space. In Proceedings of the Fourteenth International Conference on Uncertainty in Artificial Intelligence (UAI-98), Madison, WI, USA, 24–26 July 1998.

- Siaterlis, C.; Genge, B. Cyber-Physical Testbeds. Commun. ACM 2014, 57, 64–73.

- Madni, A.M.; Sievers, M. SoS Integration: Key Considerations and Challenges. Syst. Eng. 2014, 17, 330–347.

- Bernijazoo, R.; Hillebrand, M.; Bremer, C.; Kaiser, L.; Dumitrescu, R. Specification Technique for Virtual Testbeds in Space Robotics. Procedia Manuf. 2018, 24, 271–277.

- Denno, P.; Thurman, T.; Mettenburg, J.; Hardy, D. On enabling a model-based systems engineering discipline. INCOSE Int. Symp. 2008, 18, 827–845.

- Russmeier, N.; Lamin, A.; Hann, A. A Generic Testbed for Simulation and Physical Based Testing of Maritime Cyber-Physical System of Systems. J. Phys. Conf. Ser. 2019, 1357, 012025.

- Brinkman, M.; Hahn, A. Physical Testbed for Highly Automated and Autonomous Vessels. In Proceedings of the 16th International Conference on Computer and IT Applications in the Maritime Industries, Cardiff, UK, 15–17 May 2017.

- Fortier, P.J.; Michel, H.E. Computer Systems Performance Evaluation and Prediction, Chapter 10. In Hardware Testbeds, Instrumentation, Measurement, Data Extraction and Analysis; Digital Press; Elsevier Science & Technology: Amsterdam, The Netherlands, 2003; pp. 305–330.

- Bestavros, A. Towards safe and scalable cyber-physical systems. In Proceedings of the NSF Workshop on CPS, Austin, TX, USA, 16 October 2006.

- Madni, A.M.; Erwin, D.; Sievers, M. Architecting for Systems Resilience: Challenges, Concepts, Formal Methods, and Illustrative Examples. Systems (MDPI), to be published in 2021.

- Kellerman, C. Cyber-Physical Systems Testbed Design Concepts; NIST: Gaithersburg, MD, USA, 2016.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

- Haarnoja, T.; Zhou, A.; Hartikainen, K.; Tucker, G.; Ha, S.; Tan, J.; Kumar, V.; Zhu, H.; Gupta, A.; Abbeel, P. Soft actor-critic algorithms and applications. arXiv 2018, arXiv:1812.05905.

- Torabi, F.; Warnell, G.; Stone, P. Behavioral cloning from observation. arXiv 2018, arXiv:1805.01954.

- Ho, J.; Ermon, S. Generative adversarial imitation learning. arXiv 2016, arXiv:1606.03476.

- Juliani, A.; Berges, V.; Teng, E.; Cohen, A.; Harper, J.; Elion, C.; Goy, C.; Gao, Y.; Henry, H.; Mattar, M.; et al. Unity: A General Platform for Intelligent Agents. arXiv 2020, arXiv:1809.02627. Available online: https://arxiv.org/abs/1809.02627 (accessed on 4 March 2021).

- Madni, A.M.; Lin, W.; Madni, C.C. IDEON™: An Extensible Ontology for Designing, Integrating, and Managing Collaborative Distributed Enterprises in Systems Engineering. Syst. Eng. 2001, 4, 35–48.

- Madni, A.M. Exploiting Augmented Intelligence in Systems Engineering and Engineered Systems. Syst. Eng. 2020, 23, 31–36.

- Madni, A.M.; Sage, A.; Madni, C.C. Infusion of Cognitive Engineering into Systems Engineering Processes and Practices. In Proceedings of the 2005 IEEE International Conference on Systems, Man, and Cybernetics, Hawaii, HI, USA, 10–12 October 2005.

- Madni, A.M. HUMANE: A Designer’s Assistant for Modeling and Evaluating Function Allocation Options. In Proceedings of the Advanced Manufacturing and Automated Systems Conference, Louisville, KY, USA, 16–18 August 1988; pp. 291–302.

- Trujillo, A.; Madni, A.M. Exploration of MBSE Methods for Inheritance and Design Reuse in Space Missions. In Proceedings of the 2020 Conference on Systems Engineering Research, Redondo Beach, CA, USA, 19–21 March 2020.

- Madni, A.M. Integrating Humans with Software and Systems: Technical Challenges and a Research Agenda. Syst. Eng. 2010, 13, 232–245.

- Shahbakhti, M.; Li, J.; Hedrick, J.K. Early model-based verification of automotive control system implementation. In Proceedings of the 2012 American Control Conference (ACC), Montreal, QC, Canada, 27–29 June 2012; pp. 3587–3592.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Madni, A.M. MBSE Testbed for Rapid, Cost-Effective Prototyping and Evaluation of System Modeling Approaches. Appl. Sci. 2021, 11, 2321. https://doi.org/10.3390/app11052321
